-

Another cautionary tale... from Apple

06/04/2023 at 00:35 • 0 comments

https://thechipletter.substack.com/p/the-first-apple-silicon-the-aquarius-7cb

It appears that, between 1986 and 1989, Apple had a project so secret that I am only hearing about it now. The description of the architecture sounds very familiar :

Scorpius is a tightly-coupled multiprocessor CPU with efficient support for fine-grained parallelism; the architecture was developed to take advantage of the inter-connectivity of single-chip VLSI implementations. Scorpius is intended to be the processing element of a high-performance personal computer system constructed with a minimal number of components. A Scorpius CPU comprises four independent processing units (IPUs) which share access to separate instruction and data caches, a Memory Management Unit, and a Memory/Bus Interface.

IPUs have a small register-oriented instruction set in which all data access to memory is done by register load and store instructions. (Register and word size is 32 bits.) Each PU has 16 general-purpose registers, a total of 64 for the CPU, and 7 local registers. Local registers include product, remainder, prefix, and various state saving registers. In addition, the four PUs share 8 global registers, including interrupt, event counter, and global status registers.

SIMD (Single Instruction stream, Multiple Data streams). This mode corresponds to the usual view of parallel processing: each PU executes the same operation on different data streams.

...

I thought FC1 was original, hahaha.

OTOH it's interesting to see some sort of architectural convergence.

The Scorpius never delivered, due to complexity and compiler issues.

In the end, I know well that memory latency is what kills general-purpose performance and OOO of some kind becomes necessary. It's interesting though to see how close one can get to OOO-like performance without all the implied overhead.

...

Update :

-

Tagged registers

06/16/2021 at 23:26 • 0 comments

BEWARE !

This log might make you roll your eyeballs so much that you'll see yourself cringing but it might also "solve" an old problem, in the sense that "solving" in engineerspeak means "moving the problem somewhere else". Hopefully it doesn't come back later to haunt you even more from its new residence.

Tagged registers were not present as such in FC0 but it contained "shadow" values, such as the zero flag. The LSB and MSB values are directly available in the register set (or a shadow copy somewhere else), so they could be used for conditional instructions. The Zero flag can be recomputed any time the register is written, either after a computation or context switch. This shadow mechanism is simple, cheap, non intrusive... Another register attribute bit would have contained the carry flag, but it was not considered "good" and FC0 preferred 2R2W instructions instead. It was still "fair game" though.

Now I'm thinking of something potentially much weirder than that, and I can already hear some of your sarcasm, of the kind "Why don't you go full iAPX 432 while you're at it ?". I'll simply answer : "Because that's not the purpose", despite some temptations that are quickly swatted by the teachings of Computer Development History.

So let's consider our "Tagged Registers". To be honest, FC1 already has "shadow flags" on some registers to avoid checking bounds all the time. For example, copying a valid address register to a common register also propagates its TLB status, which prevents excessive TLB lookups and saves some energy and time. This helps speed up jumps or passing parameters through function calls. Again, this is "non intrusive" and hidden from the ISA, a mere convenience. If the shadow flag is invalid, you lose a few cycles and that's all.

But the ISA faces a growth crisis. The 2R1W instruction format already saves a little room by dropping 2 bits for one source register address, but that's still 6+4+6=16 bits and the opcode can only have 16 bits, of which 3 are eaten by the "size field". The basic decoding table for FC0 was:

- 000 scalar byte

- 001 scalar half-word

- 010 scalar word

- 011 scalar double word

- 100 SIMD byte

- 101 SIMD half-word

- 110 SIMD word

- 111 SIMD double word
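As a minimal sketch, the size field listed above can be decoded with one bit test and a 2-bit lookup. The function name `decode_size_field` is hypothetical, and the element widths (half-word = 16 bits, word = 32 bits, double word = 64 bits) are assumptions that this log does not spell out.

```python
# Hypothetical decoder for the 3-bit FC0 size field.
# Assumption: half-word = 16 bits, word = 32 bits, double word = 64 bits.
ELEMENT_BITS = {0b00: 8, 0b01: 16, 0b10: 32, 0b11: 64}


def decode_size_field(field: int) -> tuple[bool, int]:
    """Return (is_simd, element_width_in_bits) for a 3-bit size field."""
    if not 0 <= field <= 0b111:
        raise ValueError("the size field is 3 bits wide")
    is_simd = bool(field & 0b100)            # the MSB selects scalar or SIMD
    return is_simd, ELEMENT_BITS[field & 0b011]
```

The orthogonality is visible here: there is no free code point left for a 128-bit or fixed-point type without widening the field.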

Soon it appeared that this approach was still too limiting because you always need to handle something that won't fit these orthogonal yet arbitrary types. For example, some people will desire 128-bit integers, or 256 bits. DSP people love fixed point formats. Float formats abound and some "AI" (neural network) algos want small numbers, while some crypto nerds wish for 512 bits. Whenever we define a size, it will be too large for some and too small for others.

Furthermore, most of the time, you will use only 2 or 3 types, which would reduce the use(fulness) of one bit. Wasting one opcode bit is baaaaaad.... One solution that was considered was to use a lookup table, which meant that you needed opcodes to read and set it, one by one... And since you can't count on people to agree, many of your function calls and returns would have to save that damned "Size LUT". What sounded simple and cheap seemed to implant its madness all over the code "just to be sure". And you would have to count on the LUT format to evolve !

Now, the "solution" I propose here might not be better, but it looks like a more serious alternative. You will still need dedicated opcodes, you will still need some hidden state that must be saved and restored upon all traps, but you will save a few opcode bits and the save-restore madness is gone ! So what is this so-called solution ? Add tags to each register to store the individual data type. This type could even be NULL and trigger a trap when this register is read as an operand.

The tag can be in machine-dependent format and is used by computational opcodes, that will obey the common-sense rules, such as: if you add two operands with a different type tag, trap, else store the result as the same type. Some opcodes could even change the type or inquire it.

The type will contain:

- the scalar/SIMD/vector attribute,

- integer/fixed point/floating point/whatever attribute,

- the granularity (how many bits per element)

- the overall size of the data (?)

- if the register contains a valid value

They are set by :

- load constant opcodes (explicit)

- load from memory (explicit)

- Type conversion opcodes (explicit, modify the register's content, for example float to integer)

- Type casting opcodes (explicit, doesn't touch the register's contents)

- computations/operations (implicit)

They are read by :

- computations/operations

- type read opcodes (introspection)

- trap register backup mechanism
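The rules above can be sketched as a toy register file. This is only an illustration under assumptions: the names `Tag`, `TaggedRegs` and `TagTrap` are hypothetical, `None` stands for the NULL type, a Python exception stands for a trap, and `add` only models scalar integers.

```python
from dataclasses import dataclass
from typing import Optional


class TagTrap(Exception):
    """Stands in for a hardware trap raised by a tag check."""


@dataclass(frozen=True)
class Tag:
    layout: str     # "scalar", "simd" or "vector"
    kind: str       # "int", "fixed", "float", ...
    elem_bits: int  # granularity: bits per element


@dataclass
class Reg:
    value: int = 0
    tag: Optional[Tag] = None   # None models the NULL type


class TaggedRegs:
    def __init__(self, count: int = 16):
        self.regs = [Reg() for _ in range(count)]

    def load_const(self, r: int, value: int, tag: Tag) -> None:
        self.regs[r] = Reg(value, tag)      # explicit: sets value and tag

    def cast(self, r: int, tag: Tag) -> None:
        self.regs[r].tag = tag              # explicit: the content is untouched

    def read_tag(self, r: int) -> Optional[Tag]:
        return self.regs[r].tag             # introspection

    def _operand(self, r: int) -> Reg:
        reg = self.regs[r]
        if reg.tag is None:
            raise TagTrap(f"register {r} read while NULL")
        return reg

    def add(self, dst: int, a: int, b: int) -> None:
        ra, rb = self._operand(a), self._operand(b)
        if ra.tag != rb.tag:
            raise TagTrap("operand tags differ")
        mask = (1 << ra.tag.elem_bits) - 1  # this sketch wraps scalar integers only
        self.regs[dst] = Reg((ra.value + rb.value) & mask, ra.tag)  # implicit tag
```

The single `add` method plays the role of the one ADD opcode: the operation that actually runs is selected by the operand tags, not by bits in the instruction.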

The good :

- The code will be more flexible, more scalable

- vectoring code would be easy, and the scalar version would still work/execute.

- You don't need a separate FP or vector register set (though this could be done in practice, and you might limit the allowed types for certain registers, such as addresses)

- One computation opcode for MANY cases ! Change the register's size and the code remains the same.

- Not too intrusive when dealing with casts and conversions, if you have proper coding hygiene, unlike the LUT idea.

- Better type safety, maybe better security (even though each new feature adds complexity, bugs, misunderstandings and potential flaws).

The bad :

- Now the opcode table must specify which types it accepts, when/why it traps

- Should the opcodes trap on any type mismatch or autopromote/autocast types à la JavaScript ?

- Yes, that's more trap sources/reasons. Who wants more traps ? not me.

- That's also more opcodes to explicitly handle.

- You have to handle a type NULL ! and never mind the "whatever" needed for storing in the tagged format.

- This adds LATENCY ! This is the part that bugs me the most. You don't know at instruction fetch time if the operation is integer or FP, you need to read the register set and check the respective operand types before you can reserve or allocate an execution unit (though the instruction words could be "shadow tagged" in the cache to help loops). This wouldn't be a huge drawback with OOO though.

The overall :

It shouldn't be such a crazy undertaking for mundane code, because in C for example, the source code explicitly declares all types in advance and it would be redundant (hence space-wasting) to repeat the size all the time in the opcodes. The compiler already tracks the register widths and handles explicit type conversions. With hardware size tags, things will be a bit more funky but this will also help save other opcodes for SIMD or vector operations, because you don't need to create a separate opcode that adds a scalar to each element of a vector (for example).

Of course, overall, the architecture moves further away from its squarely RISC roots but FC1 is also meant to be a good general-purpose number cruncher that could handle a wide variety of workload types (that are not well handled already by the #YASEP and the #YGREC8). Keeping the type separate from the opcode leaves many doors open without the risk of saturating the opcode table. You could add LNS ("Logarithmic Number System"), complex or quaternions, matrices or whatever, and the same old ADD opcode would still work. The work would be directed to the appropriate execution unit.

Another interesting effect of saving opcodes (both from the 3 bits saved and the deduplication for each type) is that there are not only more opcodes to enjoy but also more bits for the register addresses ! If 3 bits are saved then I can bump the register set's size to 4×32 ! This would use "only" 7+5+7=19 bits :-) And there would still be 13 bits available for 2R1W operations.
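The bit budget can be checked with a few lines of arithmetic. This is just a sketch: `operand_budget` is a hypothetical helper, and the 32-bit instruction width is taken from the FC1 summary in the vector log.

```python
INSTRUCTION_BITS = 32   # FC1 instructions are 32 bits wide


def operand_budget(dest_bits: int, short_src_bits: int, full_src_bits: int):
    """Return (bits used by register addresses, bits left for the opcode)."""
    used = dest_bits + short_src_bits + full_src_bits
    return used, INSTRUCTION_BITS - used


current = operand_budget(6, 4, 6)   # 4 x 16 registers -> (16, 16)
wider = operand_budget(7, 5, 7)     # 4 x 32 registers -> (19, 13)

# Without tags, 3 of the 16 opcode bits go to the size field, which leaves 13:
# the same opcode space as the wider register set gets with tags.
opcode_bits_without_tags = current[1] - 3
```

In other words, the 3 bits freed by the tags pay exactly for doubling the register set, with no loss of opcode space.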

.

.

.

.

PS: This thought process was rekindled by http://lists.libre-soc.org/pipermail/libre-soc-dev/2021-June/003142.html ...

-

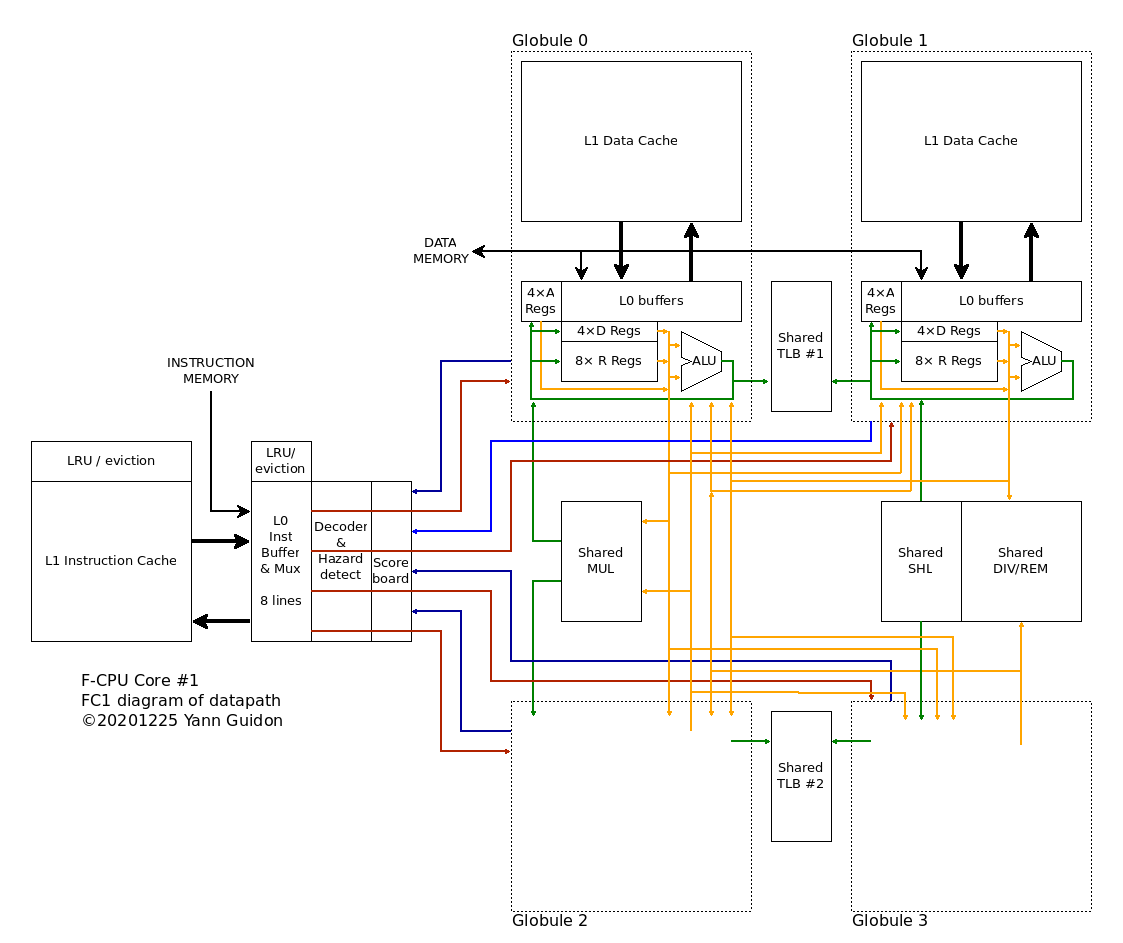

FC1 : the memo

12/26/2020 at 00:46 • 0 comments

Here is a summary of the design so far.

FC1 or F-CPU core #1 is the successor of FC0 which was designed more than 20 years ago. You can have a look at the original F-CPU manual for an overview of the original concept and history. FC1 is a more mature version that drops the ideas that failed and introduces new ones. The FC1 instruction set is inspired by FC0 but incompatible with it.

So many features have changed/evolved but the founding spirit remains: make a decent application processor with a fresh RISC architecture, avoid complex out-of-order circuits and instead redesign the instruction set around the problems that OOO tries to solve in HW.

FC1 is a 4-ways superscalar processor from the ground up. FC0 would have required re-engineering to go superscalar and instead counted on its superpipeline (very short pipeline stages, or "the carpaccio approach") to reach high speed and throughput. The cost was more complexity, a longer pipeline and maybe lower single-thread performance (reminiscent of the "plague of the P4"). The low FO4 can quickly hit a logic wall and the intended granularity might have been overly optimistic.

Instead, the FC1 is designed as superscalar with far fewer pipeline stages, which is easier to convert to 2-ways or 1-way issue than the reverse. Code that is correctly compiled and scheduled will run equally well on the 3 possible implementations, though 4-ways is the most natural choice. 2 and 1 way would be interesting for gate-limited versions, such as FPGA.

Just like FC0, FC1 is an in-order processor that uses a scoreboard to stall the instructions at the decode stage if hazards are detected. To some, this is ugly and unthinkable in 2020 but the "lean philosophy" attempts to avoid feature creep that will add a considerable burden later.

Instead, the instruction set and architecture are designed to reduce the effects and causes of decode stalls. Precise exceptions, mostly from memory reference faults, are possible by splitting the classic "LOAD" or "STORE" instructions in 2:

- compute the address, TLB lookup and tag the corresponding address register

- access the data and use it as operand for another operation

The access instructions are the ones that trap, once the address is known, but only if the address is actually referenced. This is possible by flagging the corresponding register as "invalid access" for example. This also enables prefetch, to shadow some of the latency from memory.
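Here is a minimal sketch of that two-step access for one Address/Data register pair. Everything in it is an assumption made for illustration: the class name `AddressPort`, the set of mapped pages standing in for the TLB, the dictionary standing in for memory, and the exception standing in for the trap.

```python
class AccessTrap(Exception):
    """Stands in for the precise memory exception."""


class AddressPort:
    """One A/D register pair: A holds the pointer, D is the data window."""

    def __init__(self, memory: dict, mapped_pages: set, page_size: int = 4096):
        self.memory = memory              # toy memory: address -> value
        self.mapped_pages = mapped_pages  # toy TLB: set of valid page numbers
        self.page_size = page_size
        self.address = 0
        self.valid = False                # the "invalid access" shadow flag

    def set_address(self, address: int) -> None:
        # Step 1: compute the address, look it up, tag the register. No trap here.
        self.address = address
        self.valid = (address // self.page_size) in self.mapped_pages

    def read_data(self) -> int:
        # Step 2: the access traps, and only if the bad pointer is really used.
        if not self.valid:
            raise AccessTrap(hex(self.address))
        return self.memory.get(self.address, 0)
```

A pointer that is computed speculatively and never dereferenced costs nothing, which is what makes early address computation (and prefetch) safe.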

By the way, FC1 uses explicitly dedicated Address registers and Data registers. This reduces the complexity and overhead caused by FC0's more flexible and general approach, since now only 16 register addresses have to be flagged "invalid/ready" instead of 63.

Just like FC0, FC1 uses 64 registers though as explained above, the register set is not homogeneous, but split into 3 main functions. Just like the #YASEP and the #YGREC8, FC1 uses register-mapped memory:

- 32 "normal" registers (R0 to R31, and R0 is not hardwired to 0)

- A0 to A15 hold data addresses

- D0 to D15 are "windows" to the memory pointed by the respective address register (they can be thought of as a port to the L0 memory buffers)

This is a LOT of ports to memory and the question of the relevance is legitimate (particularly since it creates a LOT of aliasing problems) but we'll see later that it also creates interesting opportunities.

If Data/Address pairs can be paired two by two, that makes 8 blocks of dual-port L1 cache memory. A particularly high bandwidth is expected and it should be matched by the eventual L2 cache and main memory bandwidth, but this is not directly inside the scope of the design. Let's just say that it's less constrained than most existing designs.

An even more radical aspect of FC1 is that the pipelines are "loosely coupled", and in fact quite decoupled. Each of the 4 pipelines has its own 2R1W register set with 16 addressable registers (8×R, 4×D, 4×A) to keep speed as high as possible. Gone is the humongous register set with 64 registers, 2 write ports and 3 read ports that was a major timing problem. Selecting only 2 operands among 16 is faster and smaller.

Each pipeline has dedicated registers and the only way to communicate between pipelines is to write the result of an operation to another target pipeline. This of course creates a hazard (and one stall cycle) but it keeps the decoder & Xbar complexity low.

The diagram above also shows that each "Globule", or pipeline+cache block, has 1 output port (wrongly tied to the source register selector) and selects input data from one of four sources : either the shared execution unit(s) or one of the 3 other globules. Two shared TLBs manage the aliases and check the data that go to Address registers, while keeping cache eviction reasonable (if you manage your pointers well).

Look ma', no OOO !

The "loosely coupled" approach helps when dealing with code that would benefit from OOO but can be detected at compile time, with "sub-threads" that can be allocated to given pipelines to complete a sequence while the other pipeline(s) start a new sequence. A virtual FIFO (through the L0 instruction cache's multiple instruction pointers) lets a pipeline, or two, or three, stall during L1 cache misses, while the remaining pipeline(s) still proceed in the program's logic. While loads are big headaches (and can be managed through the A registers by early address computations), stores don't slow the program logic as long as no aliasing occurs.

Another breakthrough that is possible with a split register set is that all the pesky instructions that need more than 3 register addresses and don't fit in the clean 2R1W scenario are now handled by "paired instructions".

A pair of pipelines can now handle addition with carry, full-precision multiplies, or long-shifting with almost no effort. The same instruction is duplicated BUT the 2nd instruction specifies a destination in another pipeline (which will stall to accept the new result). This is both trap-safe (the pair of instructions can be split into 2 and be functionally equivalent despite the break of the pair at decode time) and a good use of the available resources.

The example below shows such a case of paired instructions:

    ; here is a pair of instructions that will be decoded and executed in parallel
    IMULL R01, R2, R03 ; feed back the result in the pipeline
    IMULH R01, R2, R13 ; send extra result in another pipeline

Note that the register names are ... in octal. This is not to emulate Cray's philosophy but to ease coding: the first digit will encode the pipeline's number.
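To make the octal naming and the split result concrete, here is a small sketch. `parse_reg` and `imul_pair` are hypothetical helpers; the unsigned multiply and the 64-bit register width are assumptions, since this log does not state the signedness of IMULL/IMULH.

```python
def parse_reg(name: str) -> tuple[int, int]:
    """Split an octal register name such as "R13" into (pipeline, local index)."""
    number = int(name[1:], 8)           # the digits after "R" are octal
    return number >> 3, number & 0o7    # high digit: pipeline, low digit: register


def imul_pair(a: int, b: int, bits: int = 64) -> tuple[int, int]:
    """Return the (low, high) halves of the unsigned full-precision product."""
    product = a * b
    mask = (1 << bits) - 1
    return product & mask, (product >> bits) & mask


# R03 stays in pipeline 0 while R13 lands in pipeline 1.
low_dest = parse_reg("R03")     # (0, 3)
high_dest = parse_reg("R13")    # (1, 3)
low, high = imul_pair(2**63, 4)  # (0, 2)
```

Each half can be computed by an independent instruction, which is why the pair can be broken at decode time without changing the result.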

Another note on instruction encoding: both operands of an instruction are located in the same pipeline. The result can be sent to another pipeline though. As a result, one needs to encode 6+6+4=16 bits, 2 bits fewer than FC0 due to the explicit partitioning of the register sets. Decoding is also greatly simplified/smaller.
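A possible packing of these 16 operand bits is sketched below. The bit positions are purely hypothetical (the log does not give a layout); the only property taken from the text is that the second source carries 4 bits and inherits the globule of the first source.

```python
# Hypothetical layout: [15:10] destination, [9:4] source 1, [3:0] source 2 (local).
def pack_operands(dst: int, src1: int, src2_local: int) -> int:
    if not (0 <= dst < 64 and 0 <= src1 < 64 and 0 <= src2_local < 16):
        raise ValueError("operand field out of range")
    return (dst << 10) | (src1 << 4) | src2_local


def unpack_operands(word: int) -> tuple[int, int, int]:
    dst = (word >> 10) & 0x3F
    src1 = (word >> 4) & 0x3F
    globule = src1 >> 4                      # top 2 bits of the full source address
    src2 = (globule << 4) | (word & 0xF)     # the short source inherits that globule
    return dst, src1, src2
```

For example, `unpack_operands(pack_operands(27, 0x25, 9))` gives `(27, 0x25, 0x29)`: both sources sit in globule 2 while the destination is free to point at globule 1.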

For paired instructions, the encoding is even smaller because some bits are implicit. The result can't be sent to another globule. A single instruction could also be used, that will be expanded by the decoder, sending the result to the opposite globule at an implicit address. The extra 3 bits can encode more options for the opcode.

-

F-CPU as a decent vector processor

12/22/2020 at 03:15 • 5 comments

Re-inventing-the-wheel-warning ! But in the middle of the decades-old tricks, some new ones could prove fruitful.

To celebrate the 22nd anniversary of the project, I bring a new life and perspective to vector processing, which fully exploits the superscalar architecture that has evolved these last years.

To be fair, parts of these considerations are inspired by another similar project : https://libre-soc.org/ is currently trying to tape-out a RISC processor capable of GPU functions, with a CDC-inspired OOO core that executes POWER instructions. Not only that, the project is also trying to add vector computation to the POWER ISA, and this is now completely weird. See https://libre-soc.org/openpower/sv/vector_ops/

My personal opinion about POWER may bias my judgement but here it is : despite the insane amount of engineering that has been invested in it, it's overly complex and I still can't wrap my head around it, even 25 years after getting a book about it.

However some of the discussions have tickled me.

There is one architectural parameter that defines the capacity and performance of vector computers : the number and length of the vector registers. Some years ago, I evaluated a F-CPU coprocessor that contains a large number of scalar registers (probably 256 or 1024) that could then be processed, eventually in parallel if "suitable hardware" is designed, and for now, Libre-SoC considers 128, eventually 256 scalar registers that can be processed in a vector-like way.

But this number is a hard limit: it defines and cripples the architecture. As we have seen in the scientific HPC industry, the practical vector sizes have grown and completely exceeded the 8×64 numbers (4Ki bytes) of the original Cray-1. For example the NEC SX-6 (used for the Earth Simulator) uses a collection of single-chip supercomputers with 72 registers of 256 numbers (147456 bytes) and that was 20 years ago. That is way beyond the 1K bytes considered by Libre-SoC, which will barely be enough to mask main memory's latency. Furthermore, because of the increased number of ports, the vector register set will be less dense and will draw more power than standard cache SRAM for example.
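The byte counts quoted above follow from simple multiplication, assuming 64-bit (8-byte) numbers throughout. The helper name is hypothetical, and the Libre-SoC line assumes the 128 scalar registers mentioned earlier.

```python
BYTES_PER_ELEMENT = 8   # assumption: every number is 64 bits wide


def register_file_bytes(registers: int, elements_per_register: int) -> int:
    return registers * elements_per_register * BYTES_PER_ELEMENT


cray_1 = register_file_bytes(8, 64)       # 4096 bytes
nec_sx6 = register_file_bytes(72, 256)    # 147456 bytes
libre_soc = register_file_bytes(128, 1)   # 1024 bytes

ratio = nec_sx6 // libre_soc              # 144
```

So the SX-6 holds 144 times more vector state than the 128-register proposal, which gives an idea of the gap that a cache-backed approach has to cover.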

Clearly, setting a hard limit for the vector size and capacity is a sure way to create problems later. Scalability is critical: some implementations will favour a smaller and cheaper design that compromises on performance, while others will want to push all the buttons in the quest for performance.

And you know what is scalable and configured at will by designers ? Cache SRAM. It totally makes sense to use the Data L1 cache (and other levels) to emulate vectors. User code that relies on cache is totally portable (and if adaptive code is not used, the worst that can happen is thrashing the cache) and memory lines are already pretty wide (256 or 512 bits at once) which opens the floodgates for wide SIMD processing as well (you can consider 4 or 8 parallel computational pipelines already). This would consume less power, be denser and more scalable than using dedicated registers. In fact, one of the Patterson & Hennessy books writes:

In 1986, IBM introduced the System/370 vector architecture and its first implementation in the 3090 Vector Facility. The architecture extends the System/370 architecture with 171 vector instructions. The 3090/VF is integrated into the 3090 CPU. Unlike most other vector machines, the 3090/VF routes its vectors through the cache.

That is not exactly what I have in mind but it shows that the idea has been floating around for such a long time that the first patents have long expired.

Cache SRAM has enough burst bandwidth to emulate a huge vector register but this is far from being enough to make a half-decent vector processor. The type of CPU/GPU hybrid I consider is rather used for accelerating graphics primitives, not for large matrix maths (which is the gold standard for HPC, and I don't care about LINPACK ratings) so I'm aiming at "massive SIMD" performance, knowing that scatter/gather access is a PITA. But graphics primitives are not just single primitive operations on a long string of numbers: there can be several streams of data that get combined by multiple execution units. DSP such as FFT and DCT require tens of operations to create tens of results from tens of operands. There is a significant potential for heterogeneous parallelism, and a single cache block is obviously underwhelming. This is where FC1's structure changes the rules.

For those who have not followed the development of FC1, here's a summary :

FC1 is best implemented as a 4-way superscalar processor (though a narrower one with 1 or 2 ways is easy to devise). Each instruction is 32 bits wide and is targeted at one of the 4 independent pipelines. The program stream should ensure that instructions for the 4 pipelines are packed following a few simple rules for optimal pipeline use. Each pipeline has its own private computation units, register file and cache memory, to keep latency as low as possible. For communication, one pipeline can write a result to another pipeline, with a time penalty.

Each pipeline has 16 registers only. Just like the #YASEP and the #YGREC8, it uses register-mapped memory:

- R0 to R7 are "normal" registers

- A0 to A3 hold addresses

- D0 to D3 are "windows" to the memory pointed by the respective address register (they can be thought of as a port to the L0 memory buffers)

Each pipeline can be backed by its own TLB mirror and cache memory block (4 or 8-way). This allows FC1 to read 8 operands and write 4 64-bits results in a single cycle. For a while I wondered if this was balanced or overkill but the vector extension makes it "just right", I think.

The proposed vector extension reuses some of these mechanisms but doesn't use the whole scalar register file, which can still work in parallel for housekeeping duties. The instruction format is the same (the SIMD bit is now a vector bit) and the decoding and packing rules are mostly the same but the vector-flagged instructions operate on another subset of the system.

- D0-D3 operands refer to vectors in cache, either as an operand or a destination. You could "vadd D0 D1 D2" and that's all.

- A0 to A3 don't make sense as such but that could be used for scatter/gather.

- R0 to R7 could be scalar operands, or a "port" to another pipeline.

The point of the proposed architecture is its ability to write sequences of instructions that describe a dataflow/route of the multiple parallel streams of vector data, that is more powerful than simple chaining. Each pipeline can contain more than one processing unit (integer ALUs, integer Multiply-Accumulate, FPadd, FPmul, FPrecip/trans...) and the sequence of instructions can virtually wire them to form a more complex and useful operation. Multiple operations could even be "in flight" in the same "pipeline" (cluster), and there are 4 of them that can send their result to the others.

A simple scoreboard can help the decoder stall when a register is still processing a vector, for example. But non-vector operations can still be executed because R0 to R7 are not used or affected by vector operations. A and D register are updated though, and special circuits are required for auto-incrementing the addresses, but this was intended since the very beginning, no biggie here. So if the vector operations use A1 and A2, the scalar core can still work with A0 and A3.

With the system as I imagine it, it is possible to execute simultaneous operations on identical operands, such as

R1 <= D0 + D1, R2 <= D0 - D1

This will take 2 consecutive instructions, yet D0 and D1 will be read only once, and both operations are executed during the same cycle (if the execution units are available). You can even chain another instruction before sending the result to another pipeline or to memory through D2 or D3. In this example, R1 and R2 are not real registers but symbolic names for bus/port numbers, for example.
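In software terms, the idea looks like a single pass over two streams that feeds two execution units at once. This is only a behavioural sketch: `butterfly_stream` is a hypothetical name and Python lists stand in for the vectors held in cache.

```python
def butterfly_stream(d0, d1):
    """Yield (sum, difference) per element, reading each operand only once."""
    for a, b in zip(d0, d1):    # one read of each stream per step
        yield a + b, a - b      # two units fed by the same pair of operands


sums, diffs = zip(*butterfly_stream([5, 7, 9], [1, 2, 3]))
# sums  -> (6, 9, 12)
# diffs -> (4, 5, 6)
```

This sum/difference pair is the basic building block of FFT and DCT butterflies, which is why reading the operands once matters for that kind of workload.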

I'm only starting to explore this route but I'm already happy that it promises a lot of operations per cycle (and potentially high operation unit utilisation) with little control logic. No need for OOO. Of course, a lot of refinements will be required, in particular for scatter/gather and inter-lane communication. But we have to start somewhere and the basic "scalar" FC1 is barely changed: the vector extension is easy to add or remove at will.

Please show your interest if illustrations are necessary ;-)

-

Celebration

12/22/2018 at 20:23 • 1 comment

https://news.slashdot.org/story/98/09/08/1646240/the-f-cpu-project-the-freedom-cpu-project

20 years ago, I shyly joined a crazy pipedream vaporware project, which I found on Slashdot. Since then, it's been a constant adventure.

I have learned a LOT. I have met a LOT of people. I have gotten a LOT of knowledge and understanding and this project has shaped a lot of my day-to-day life, you couldn't imagine. And it's not going to end. My presence on HAD is fueled by the need to innovate, create, build, design, and break ALL the ground that was needed for FC0. We didn't have the right people, the right tools, the right structure. I do all my best to at least create the environment that will make F-CPU a reality, even if I have to scale things down to the extreme (see #YGREC8 ) before going back to the full-scale SIMD.

There is no way I could build everything alone and no way I could tapeout FC1 either alone or with the original structure. I have grown a bit wiser and I try to surround myself with the BEST people. I have found many here on HAD (if you read this, you might be one of them).

The motto will always remain :

« There can be no Free Software without Free HardWare Designs »

and such a chip is long overdue.

There is so much to do and there is certainly something you can do.

-

Multicast memory regions

12/01/2018 at 12:27 • 0 comments

As already stated, F-CPU enforces a strong separation between the data and instruction memory spaces. This is a central requirement because this processor will run user-provided (or worse: 3rd party) code and security must not be an afterthought.

Another security measure is to ensure that each data block can be accessed by only one thread. Zero-copy information sharing happens when one thread "yields" a block to another thread, as a payload of a message for example. At that point, the original owner loses ownership and rights to that block, that must be previously allocated from a dynamic pool. This ensures (ideally) that no information is leaked (as long as the allocator clears the blocks after use).
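The ownership rule can be modelled in a few lines. This is a sketch under assumptions, not the F-CPU mechanism itself: `BlockPool` and its method names are hypothetical, threads are identified by arbitrary labels, and a Python exception stands for the protection fault.

```python
class OwnershipError(Exception):
    """Stands in for the protection fault on a block the thread does not own."""


class BlockPool:
    def __init__(self, blocks: int, size: int):
        self.data = [bytearray(size) for _ in range(blocks)]
        self.owner = [None] * blocks        # None: the block is free

    def allocate(self, thread) -> int:
        index = self.owner.index(None)      # raises ValueError if the pool is empty
        self.owner[index] = thread
        return index

    def _check(self, thread, index: int) -> None:
        if self.owner[index] != thread:
            raise OwnershipError(f"thread {thread} does not own block {index}")

    def write(self, thread, index: int, payload: bytes) -> None:
        self._check(thread, index)
        if len(payload) > len(self.data[index]):
            raise ValueError("payload larger than the block")
        self.data[index][:len(payload)] = payload

    def read(self, thread, index: int) -> bytes:
        self._check(thread, index)
        return bytes(self.data[index])

    def yield_to(self, thread, index: int, recipient) -> None:
        self._check(thread, index)
        self.owner[index] = recipient       # zero-copy: only the owner changes

    def free(self, thread, index: int) -> None:
        self._check(thread, index)
        self.data[index][:] = bytes(len(self.data[index]))  # clear before reuse
        self.owner[index] = None
```

After `yield_to`, the sender gets a fault on any further access, and `free` wipes the block so the next owner cannot read stale data.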

There are also "hard" semaphores in the Special Registers space, with a scratch area where appropriate read and write rights allow deadlock-free operation.

But there are still cases where this is not enough and zero-copy operation is impossible or too cumbersome. In a few cases, the overhead of yielding a block is too high and we would like one thread to be able to write to a buffer while other threads can read it. Without this feature, the system is forced to clump data into a message packet, which adds latency. This is multiplied by the number of interested recipients but the added cost would not be justified if this specific update is not required at the moment. It would be faster and lighter to read some word here and there from time to time.

This desired "multicast" feature is reminiscent of a system that must be somehow implemented : ONE thread is allowed to write to the instruction space, to load programs, and this space may be shared by several threads. However, none of the other threads can access the program space, and shared constants (read-only values) must exist in the data addressing space, so a multicast system is required anyway.

-

What can you do with 64 bits wide pointers ?

12/01/2018 at 10:47 • 0 comments

When looking at (almost?) all the 64 bits CPUs out there, you see that the MSB of addresses are not really used. 48 bits give you 256 terabytes, which is about the RAM installed into an HPC rack these days. But so much space can't be really used conveniently because access times prevent efficient access across a rack or a datacenter. Message passing often takes over when communication is required across compute nodes or blades.

So we're left with these dangling bits... The addresses are usually "virtual" and require translation to physical space. But you know I hate wasted resources, right ? So I want to use the MSB as an "address space ID" and store some meta-information such as process number. It's easy to check if this address is valid so it adds some sort of protection while allowing the whole addressable space to be linearised.

Of course there is the question of "how many bits should be allocated" because there is always a case that will bump into fixed limitations. The solution is to make a flexible system with not just an ID to compare, but also a bitmask (this is used nowadays for comparing addresses in chipsets, for example). The OS will allocate as many bits as required by the workload, either 56 address bits for 256 tasks, or 40 bits for 16M tasks, or any desired mix (think : unbalanced binary tree)...
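The ID-plus-bitmask check can be sketched as follows. The helper names `make_space` and `address_allowed` are hypothetical, and placing the ID in the topmost bits of a 64-bit pointer is the assumption suggested by the text.

```python
ADDRESS_BITS = 64


def make_space(asid: int, address_bits: int) -> tuple[int, int]:
    """Return (mask, tag) for a task that gets `address_bits` of linear space."""
    id_bits = ADDRESS_BITS - address_bits
    if not 0 <= asid < (1 << id_bits):
        raise ValueError("address space ID does not fit in the upper bits")
    mask = ((1 << ADDRESS_BITS) - 1) ^ ((1 << address_bits) - 1)  # upper ID bits
    return mask, asid << address_bits


def address_allowed(address: int, mask: int, tag: int) -> bool:
    return (address & mask) == tag          # one AND and one compare


mask, tag = make_space(asid=3, address_bits=56)   # 8 ID bits: 256 possible tasks
```

Since the mask is a per-task value, a big task can get 56 address bits while many small ones get 40, and the check stays the same AND-and-compare in every case.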

-

Decoupled Globules

11/30/2018 at 14:37 • 0 comments

One design goal of the F-CPU is to increase efficiency with smart architecture features, and with the FC1 I would like to get closer to OOO performance with in-order cores.

You can only go so far with all the techniques already deployed and explored before : superscalar, superpipeline, register-mapped memory, ultra-short branch latency... Modern OOO processors go beyond that and can execute tens or hundreds of instructions while still completing old ones.

FC1 can't get that far but can at least go a bit in this direction. Typically, the big reordering window is required to 1) compensate for false branches and 2) wait for external memory.

1) is already "taken care of" by the design rule of having the shortest pipeline possible. A few cycles here and there won't kill the CPU and the repeated branches have almost no cost because branch targets are flagged.

2) is a different beast : external memory can be SLOW. Like, very slow. Prefetch (like branch target buffering) helps a bit, but only so much...

So here is the fun part : why wait for the slow memory and block all the core ?

Prefetch is good and it is possible to know when data are ready, but let's go further: don't be afraid anymore to stall a pipeline... because we have 3 others that work in parallel :-)

I was wondering earlier about "microthreads", how one could execute several threads of code simultaneously, without SMT, to emulate OOO. I had seen related experimental works in the last decade(s) but they seemed too exotic. And I want to avoid the complexity of OOO.

The method I explore now is to "decouple" the globules. Imagine that each globule has a FIFO of instructions, so they could proceed while one globule is stalled. Synchronisation is kept simple with

- access to SR

- jumps (?)

- writes to other globules

The last item is the interesting one : the last log moved the inter-globule communication from the read to the write part of the pipeline. The decoder can know in advance when data must cross a globule boundary and block the recipient(s). This works more or less as implicit semaphores, with a simple scoreboard (or just four 4-bit fields to be compared, one blocking register per globule).
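Here is a minimal sketch of the "four 4-bit fields" variant, under one possible reading: each globule holds at most one blocked register number, set by the decoder when another globule is about to write into it. The class and method names are hypothetical, and the rule that a second pending write to the same globule must wait is my assumption.

```python
class Scoreboard:
    """One blocking field per globule: the 4-bit number of the awaited register."""

    def __init__(self, globules=4):
        self.blocked = [None] * globules  # None means "nothing pending"

    def announce_write(self, dest_globule, dest_reg):
        """Called by the decoder when a result will cross into dest_globule.

        Returns False if that globule already waits for another register,
        in which case the sender has to hold the instruction (assumption).
        """
        if self.blocked[dest_globule] is not None:
            return False
        self.blocked[dest_globule] = dest_reg & 0xF
        return True

    def must_stall(self, globule, regs):
        """An instruction stalls if it touches the register still in flight."""
        return self.blocked[globule] in regs

    def write_done(self, dest_globule):
        """The value has arrived: release the recipient."""
        self.blocked[dest_globule] = None


sb = Scoreboard()
sb.announce_write(2, 5)           # some globule will write R5 of globule 2
print(sb.must_stall(2, (5, 1)))   # an instruction in globule 2 using R5
print(sb.must_stall(2, (3, 1)))   # an unrelated instruction in globule 2
```

Only the recipient's instructions that touch the awaited register are held back, so the other globules keep draining their FIFOs.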

I should sketch all that...

Note:

I vaguely remember that in the late 80s, one of the many experimental RISC contenders was a superscalar 2-issue pipeline where one pipeline would process integer operations and the other would just do memory reads/writes. They could work independently under some constraints. I found it odd and I have never seen it mentioned since, so the name now escapes me...

Addendum :

Decoupling the globules creates a new problem and goes against one of the golden rules of F-CPU scheduling : don't "emit" an instruction that could trap in the middle of the pipeline. It creates so many problems and requires even more bookkeeping...

Invalid memory accesses could simply silently fail and raise a flag (or something). A memory barrier instruction does the trick as well (à la Alpha).

Anyway, decoupling is a whole can of worms and would appear in FC1.5 (maybe).

-

Inter-globule communication

11/22/2018 at 01:42 • 0 comments

So far, the 2R1W opcodes have 1 full destination field (6 bits) that determines the globule number, one partial source register address within the same globule, and one full register address that can read from any other globule. It's quite efficient on paper but the corresponding circuit is not as straight-forward as I'd like.

Decoding the full source field creates significant delays because all the source globules must be checked for conflict, the 4 addresses must be routed/crossbarred, oh and the globules need 3-read register sets.

Inter-globule communication will be pretty intense and will determine the overall performance/efficiency of the architecture... it is critical to get it right. And then, I remember one of the lessons learned with the YASEP (Yet Another Small Embedded Processor) : you can play with the destination address because you have time to process it while the value is being decoded, fetched and computed.

OTOH the source registers must be directly read. Any time spent tweaking it will delay the appearance of the result and increase the cost of branches, among others. So I evaluate a new organisation :

- one full (6 bits) source register address, that determines the globule

- one partial (4 bits) source register address, in the same globule as above

- one full destination address register that might be in a different globule.

This way, during the fetch and execute cycles, there is ample time to gather the 4 destination globule numbers and prepare a crossbar, possibly detect write-after-write conflicts, route the results to the appropriate globules...

The partial address field is a significant win when compared to FC0 : there are 16 address bits (6+4+6) compared to 18 (3×6) in the 2000-era design. This means more space for opcodes or options in the instruction word. And moving the complexity to the write stage also reduces the size of the register sets, that now have only 2 read ports !
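To make the 6+4+6 split concrete, here is a small sketch that packs and unpacks the three operand fields. The field order inside the 16 bits and the function names are hypothetical. I also assume that a full 6-bit address is a 2-bit globule number followed by a 4-bit register number (4 globules × 16 registers).

```python
def pack_operands(src_full, src_partial, dest_full):
    """Pack 6-bit source, 4-bit same-globule source and 6-bit destination."""
    assert 0 <= src_full < 64 and 0 <= src_partial < 16 and 0 <= dest_full < 64
    return (src_full << 10) | (src_partial << 6) | dest_full


def unpack_operands(bits):
    """Return (src_globule, src1_reg, src2_reg, dest_globule, dest_reg)."""
    src_full = (bits >> 10) & 0x3F
    src_partial = (bits >> 6) & 0xF
    dest_full = bits & 0x3F
    # The full source address selects the globule; the partial one reuses it.
    return (src_full >> 4, src_full & 0xF, src_partial,
            dest_full >> 4, dest_full & 0xF)


# G2.R3 op G2.R5 -> G1.R15
bits = pack_operands(0b100011, 0b0101, 0b011111)
print(unpack_operands(bits))
```

Both source registers come out of the same globule without any extra decoding, which is the whole point of the partial field.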

But communication will not be as flexible as before...

I have considered a few compromises. For example, having shared registers that are visible across globules. It would create more problems than it solves : it effectively reduces the number of real registers and makes allocation harder, among other things that could go wrong. Forget it.

Or maybe we can use more bits in the destination register. The basic idea is to use 1 bit per destination globule and we get a bitfield. The destination address has 4 bits to select the destination globule, and 4 bits for the destination register in the globule. The result could be written to 4 globules at once !

Decoding and detecting the hazards would be very easy, by working with 4 decoded bits directly. The control logic is almost straight-forward.

However this wastes all the savings in precious opcode bits and many codes would not be used or make sense.

A globule field with 3 bits would be a good compromise, and the most usual codes would be expanded before going to the hazard detection logic :

000 : G0
001 : G1
010 : G2
011 : G3
100 : Gx, Gx+1
101 : Gx, Gx+2
110 : Gx, Gx+3
111 : G0, G1, G2, G3 (broadcast)

The MSB selects between unicast and multicast. One instruction can write to one, two or four globules at once. The last option would be very efficient for 4-issue wide pipelines, and take more cycles for smaller versions.

x is the local globule, determined by the source's full address. Some wire swappings and we get the proper codes for every decode slot simultaneously...
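The expansion of the 3-bit field into 4 decoded bits could look like the sketch below. It is only an illustration: the function names are hypothetical, and I assume that Gx+n wraps around modulo 4 (otherwise Gx+3 would only make sense for x = 0). Bit i of the returned mask stands for globule Gi.

```python
def expand_globule_code(code, x):
    """Expand the 3-bit destination field into a 4-bit mask (bit i = Gi).

    code: 3-bit globule field, x: local globule number (0..3).
    """
    low = code & 0b11
    if code & 0b100 == 0:
        return 1 << low                      # unicast: G0..G3
    if low == 0b11:
        return 0b1111                        # broadcast
    # multicast: Gx and Gx+1, Gx+2 or Gx+3 (wrap-around is an assumption)
    return (1 << x) | (1 << ((x + low + 1) % 4))


def write_conflict(mask_a, reg_a, mask_b, reg_b):
    """Write-after-write hazard: same register number in a common globule."""
    return reg_a == reg_b and (mask_a & mask_b) != 0


for code in range(8):
    print(format(code, "03b"), format(expand_globule_code(code, 1), "04b"))
```

With the masks already decoded, hazard detection between two decode slots is a bitwise AND plus one register-number comparison.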

Moving data to a different globule would still have one cycle of penalty (because transmission is slow across the chip) but this is overall much better than delaying the whole code when one source must be fetched in another globule. Furthermore, broadcast uses fewer instructions.

It is less convenient than reading from a different globule, because moves must be anticipated. This is just a different way to read/scan/analyse/partition the dataflow graph of the program... More effort for the compiler but less complexity for the whole processor. And a simpler core is faster, cheaper and more surface&power-efficient :-)

-

Check-in, Check-out

03/07/2018 at 08:22 • 0 comments

I think I'm closer to the solution of the old old problem of copy-less data transmission between threads or processes.

Already, switching from one process to another is almost as easy as a Call/Return within a given process. The procedure uses 3 dedicated opcodes : IPC, IPE and IPR (InterProcess Call/Enter/Return). The real problem is how to transfer more data than what the register set can hold.

My new solution is to "mark" certain address registers to yield the ownership of the pointer to the called process.

- The caller saves all the required registers on its stack and "yields" one or more pointers with a sort of "check-out" instruction that sets the Read and Write bits, as well as changes the PID (ownership of the block) OR puts the block in a sort of "Limbo" state (still owned but some transfer of ownership is happening, in case the block is not used by the recipient and needs to be reused).

- The called process "accepts" the pointer(s) with a "check-in" instruction so the blocks can be accessed within the new context and mapped to the new process' addressing range.

This creates quite a lot of new sub-problems but I believe it is promising.

- How do you define a block ? It's basically 1) a base pointer 2) a length 3) access rights 4) owner's PID. All of these must have some sort of HW support, merged with the paging system.

The sizes would probably be powers-of-two, so a small number easily describes them, and a binary mask checks the pointer's validity.

- Transferable blocks must come from a global "pool" located in an addressing region that is identical for everybody because we don't want to bother with translating the addresses.

- If there is a global pool then one process must be responsible for handling the allocation and freeing of those blocks.
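The block descriptor and the check-out/check-in handshake can be modelled in software as below. This is a rough sketch of the "Limbo" variant only, with hypothetical names (`Block`, `check_out`, `check_in`, `pending`). In the real thing these fields would live in hardware next to the paging system, and the exact rules are still open.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Block:
    base: int                      # base pointer, aligned on the block size
    log2_size: int                 # power-of-two size, stored as its exponent
    rights: str                    # access rights, e.g. "rw"
    owner: int                     # owner's PID
    pending: Optional[int] = None  # recipient PID while the block is in limbo

    def contains(self, pointer):
        """Validity check with a binary mask derived from the size."""
        mask = ~((1 << self.log2_size) - 1)
        return (pointer & mask) == self.base


def check_out(block, caller, callee):
    """The caller yields the block: still owned, but in limbo."""
    if block.owner != caller or block.pending is not None:
        raise PermissionError("caller does not own a transferable block")
    block.pending = callee


def check_in(block, callee):
    """The called process accepts the block and becomes its owner."""
    if block.pending != callee:
        raise PermissionError("block was not yielded to this process")
    block.owner, block.pending = callee, None


blk = Block(base=0x4000, log2_size=12, rights="rw", owner=1)
check_out(blk, caller=1, callee=2)
check_in(blk, callee=2)
print(blk.owner, blk.contains(0x4FFF), blk.contains(0x5000))
```

If the callee never checks the block in, the caller still owns it and can clear `pending` to reuse it, which is the reason for having a limbo state at all.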

This affects the paging system in many ways so this will be the next thing to study...

Yann Guidon / YGDES
