Hardware Startups: Building the 'Extensions of Man'

07/13/2014 at 21:48

#blackboard fun

06/19/2014 at 04:32

Interview with Zach Supalla - CEO of Spark

05/10/2014 at 22:53

Following our post on IoT platforms, the Spark guys reached out and asked if we could do a full interview. Given the unique position Spark is currently in (post-successful Kickstarter, betting heavily on Open Hardware and Open Software...), we were more than happy to do it. And we were pleasantly surprised by all the things we learned along the way.

Here we give a full (uncut) transcript of our interview with Zach Supalla, CEO of Spark.

Hackaday: Tell us a little bit about the backstory behind Spark.

Zach: OK, to rewind all the way back... in January 2012 I started working on a connected lighting product. The inspiration for this was that my dad is deaf, and he has lights that flash when someone is at the door, when he gets a phone call on his TTY, etc. He and my mom had this problem that when he takes his phone from his pocket, he is completely unreachable, and I wanted lights to flash when he got a text message. So that is really where all this started: I had a problem that was very real to me, which was my parents communicating, and I saw a way to solve this problem with connectivity. If we make our lights Internet-connected, then I can figure out a way to get the signal that this event happened to his lights. But then, of course, that grew into a larger concept of, well, why should it just be limited to the deaf? Lights should be a source of information... what if lights were more than just lights? What if lights conveyed information about the world and did things automatically, not like "I have a remote control on my phone", but like "the sun went down and the lights automatically turned on" - that kind of home automation. All to do with lights connected to the Internet.

So we launched this product, which eventually took the form of a little plastic thing that sits between your light socket and the bulb: a WiFi-connected light dimmer integrated into the light socket, called SparkSocket. It launched in November 2012 and failed. We had a goal of $250,000 and we raised $125,000. So we felt like there was something there - we still had a six-digit raise and 1,600 backers - but it wasn't enough to cover our manufacturing costs. It took us a while to realize that we needed to change. The feedback we had received from the market was that our product was not good enough, so over the course of the next few months we struggled to figure out what we should be doing, and during this process we joined an incubator program called HAXLR8R, based in Shenzhen, and moved there for 4 months.

And we settled on something after a bunch of iterating. We saw an opportunity: there are cool new things you can do when things are connected to the Internet, and the challenge that we felt firsthand was that it was... hard. The tools that were available were either so difficult to use that they were not really solving the problem, or were very closed and very risky for us; as a startup we couldn't use them, because we'd be tied into somebody else's system, and we really didn't want that. And so we thought... what if this is what we do? What if we solve this problem of creating a tech stack for the Internet of Things that we can make available to others, and that's our business? We were originally planning on going straight B2B, selling to enterprise customers, but we saw that dev kits were doing well on Kickstarter and that Arduino-type stuff did well on Kickstarter... and, of course, that's where we come from - we're all makers. So we thought... why don't we make this "Arduino-compatible" (which for us means the same programming language) and sell it as a dev kit? Because, really, what we're trying to do is make it easier to make these products, and that's a problem that is relevant for the enterprise and for the weekend hobbyist. And that's what turned into the Spark Core. We launched it on May 2 last year, which was... a year and a couple of days ago. And we had luck. We had a much lower goal of $10,000 this time - it was a much cheaper product to manufacture; we can make them by hand at small scale and mass-manufacture them at large scale - so we set a very small goal and passed it in a little over an hour and a half. And then the campaign kind of got away from us in terms of our expectations. Maker Faire was during that campaign and we had a lot of people stopping by, and I think that contributed a lot to our success. And then, yeah, we shipped in November, and since then we have just been trying to keep it in stock.

Hackaday: So, you made a comment that tools, devices and whatnot were too difficult to use. Elaborate on that a bit, give me the context. Too difficult for whom?

Zach: I think a good example is... a lot of the cloud services that are available, like Xively and Etherios. I think they provide a valuable service, but the challenge is that they require you to first get your hardware set up, so depending on what you're using as a prototyping tool, it could take you a week or two just to get on the service. And when we looked at hardware, there are some hardware modules that are really easy to use, like the RN171 - it's a great wireless module, except that it's really expensive. So you could pick either easy or good for a production environment, but you couldn't have both, and that was important for us. We want to make prototyping tools that people can get started with quickly, but then, when they intend to scale, whether they're a startup or a big company, when they want to make 10,000 of these things, they don't have to start over. They don't have to prototype on one thing and then rebuild from scratch. That was very important for us.

Hackaday: So, in terms of the choice of device... what's the retail price on the Spark Core right now?

Zach: $39

Hackaday: OK, so the retail price of the RN171 is $26. So, explain your characterization that the RN171 was too expensive.

Zach: It's more about the cost when you start to scale. The RN171 is $26, but when you start to scale it's $16, and when you're buying hundreds of thousands of them, it's still $16. The Spark Core is $39 because we're taking a TI WiFi module, adding some other stuff and providing firmware, but when you go to scale, the CC3000 is a pretty cheap part. Their budgetary pricing is $9.99 at 1,000 units, and it comes down from there. So you can buy them one at a time, but you can also scale with this product. And that was important for us. We think money matters in terms of how much a product costs, and pushing that price down is important. We want to make it available to more people, and the price when you scale is what's important to us.
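To make the scaling argument concrete, the short calculation below multiplies the two per-unit prices Zach quotes ($16 for the RN171 at volume, $9.99 budgetary for the CC3000 at 1,000 units) across a few run sizes. It covers only the radio line item: it ignores the host microcontroller and other parts a CC3000-based design needs around the chip, and it holds the CC3000 at its 1,000-unit price even though TI's pricing comes down further from there.

```python
# Unit prices quoted in the interview (USD). The CC3000 figure is TI's
# budgetary price at 1,000 units; per Zach, it comes down from there.
RN171_AT_SCALE = 16.00
CC3000_AT_1K = 9.99


def radio_line_item(unit_price: float, units: int) -> float:
    """Cost of the radio part alone for a production run.

    Deliberately ignores the host microcontroller and the other parts a
    CC3000-based design needs around the chip.
    """
    return round(unit_price * units, 2)


for units in (1_000, 10_000, 100_000):
    rn171 = radio_line_item(RN171_AT_SCALE, units)
    cc3000 = radio_line_item(CC3000_AT_1K, units)
    print(f"{units:>7,} units: RN171 ${rn171:,.2f}  CC3000 ${cc3000:,.2f}  "
          f"difference ${rn171 - cc3000:,.2f}")
```

At 10,000 units the radio line item alone differs by about $60,100, which is the kind of gap Zach means when he says the price at scale is what matters.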

Hackaday: So the scalability in that context is the move away from a module to an individual device, right? The RN171 is all-encompassing - it is the module. The scalability you're talking about is really in moving away from the module to the individual device, putting the CC3000 on the board, and that's the secret to your scalability. Is that an accurate characterization?

Zach: Exactly, because our plan is to provide dev kits so that people can prototype, and when they go to scale, they follow the reference design or our open source design and implement it in their product. And we're out of the supply chain. That's great because, in the end, that's the cheapest way: any time you add a layer to the supply chain, you're incurring overhead and cost. So we want to provide a fully integrated solution that's easy to use, but when you go to scale, we want to get out of the supply chain so that you can make it cheap and, in the end, make your product more affordable to your customers.

Hackaday: Gotcha. Give us some background on the choice of the CC3000.

Zach: This might still be true, but at the time of our launch, this was the only affordable WiFi module that you could purchase in low quantities extremely easily. There are other companies that make WiFi chips that are affordable when you get to scale - Broadcom and Qualcomm are two of note. One of the challenges with them is that it's difficult to gain access to these chips in low quantities. So if you do intend to scale but you're starting small because, maybe, you're a startup, you can't. TI's chip is great because it scales but it's also available in low quantities. And to be meaningfully Open Source, that was important for us. And, you know, the CC3000 has its quirks, but that's one of the things we think we can help with, by wrapping and abstracting it in our firmware libraries. I think it was an opportunity in that it came out just around when we launched our campaign, so we have been taking this new product to the market when it still hadn't been vetted by anybody else.

Hackaday: Yeah, Broadcom and Marvell can be a pain if you're not doing high quantities. Even getting access to a datasheet can sometimes feel like getting your teeth pulled. But there are other devices on the market, for example Ralink MTK. You were in Shenzhen, where you're surrounded by all sorts of devices you don't get to see in the West - devices that run Linux at a much lower cost, in the ballpark of $3, and can run OpenWrt. The CC3000 can't run Linux; it requires more in the way of traditional embedded development. Explain that decision a bit.

Zach: It all depends on what your application is and what you are trying to do. I would say that the Spark Core is good for some applications and not for others. What we wanted to do is bring this stuff to the low-cost embedded world, and there are things that are easier and better to do in the embedded world than in a Linux-based system - real-time stuff, low-level direct access to pins - that's what Arduino is good for. In the prototyping world there's Arduino and there's Raspberry Pi. They're good for different things, although you see people using the wrong tools all the time - people using a Raspberry Pi for something that should be done with an Arduino, and vice versa. And sometimes you look at that and say, well OK, they're both easy to use and there's definitely overlap, but we wanted to really focus on this embedded world and provide solutions there.

Hackaday: Tell us a little bit about the details of the CC3000. Take us through the aspects of it that made it the winner.

Zach: Yeah, and there are definitely other ones that weren't available when we started. Atmel has their chip now too, and this is a landscape in which a lot of companies are investing. We're constantly evaluating the landscape as we think about our next-generation hardware. Things change all the time, and we change too, because now we have access to things we didn't have access to back then. As a startup, we can now talk to people we wouldn't be able to talk to if we hadn't run a successful Kickstarter campaign and weren't already building an active community around our product. So that changes our perspective. The main ones we were evaluating at the time were the RN171, the GainSpan solution and the CC3000, and the main factor was affordability. There are some features that the CC3000 doesn't have that others did, but when it comes to providing a cost-effective solution, it's a trade-off you inevitably must make. The CC3000 doesn't support 802.11n, and it doesn't do SoftAP setup; TI has their own thing, SmartConfig, which is pretty slick but has its quirks too. So when we launched, this choice felt good, felt like the right mix for what we're trying to do. But as the landscape changes we need to keep changing too; we need to preserve our agility as a startup.

Hackaday: One of the things you say in your top-level video is that "setup is seamless and everything is secure". That's a bold statement. Talk a little bit about the security model and the challenges for security at this level.

Zach: There are a lot of pieces of communication to "secure". One is WiFi setup - you're sending your credentials, so how do you make sure those are not openly shared? SmartConfig sends them AES-encrypted, which provides some security there, but one of the challenges for us is that we release our libraries as Open Source, and they contain the AES key. So that is kind of half-way. What we do there is: when someone goes to production with a product that's based on the Spark Core design and they're not open sourcing it, they can use a different AES key and make it secure. Then the other aspect is device-to-cloud...

Hackaday: Yeah, so you guys are using CoAP? With that, are you implementing DTLS?

Zach: So, we want to be able to implement good encryption standards, but we're also constrained in our application: how much RAM we have, how much computational capability; we're not using a processor that has hardware crypto... all of which creates constraints. But we want it to be something that can run everywhere. So what we ended up doing was implementing an RSA handshake that hands off to an AES tunnel. You can think of it as a very slimmed-down TLS/SSL: we're managing certificates in a slightly different way, but we're fundamentally using the same building blocks for encryption. And figuring out how to implement this largely came down to finding libraries that made sense - there are not a lot of Open Source embedded encryption libraries. A lot of them are under dual licensing, but we ended up finding an old XySSL (now PolarSSL), which was BSD licensed. The most important thing from a security perspective is, I think, the fact that it's open and auditable, so that anyone can take a look at the process we're using and make sure it's secure. Because when it comes to security, and this is true with all the things that have been going on recently in the security world with Snowden, the NSA and all that, what you need in order to feel secure is an open and auditable process.
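A very rough illustration of "an RSA handshake that hands off to an AES tunnel": the expensive public-key step is used once, only to move a random session key from the device to the server, after which both ends would switch to fast symmetric AES. This is not Spark's protocol and not XySSL/PolarSSL code. It uses textbook RSA with toy primes, and the AES tunnel itself is omitted because Python's standard library has no AES.

```python
import secrets

# Textbook RSA with tiny primes (p = 61, q = 53). INSECURE: the numbers only
# make the arithmetic visible. Real handshakes use large keys plus padding.
P, Q = 61, 53
N = P * Q                          # public modulus, 3233
E = 17                             # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent (modular inverse, Python 3.8+)


def device_wrap_key(session_key: bytes) -> list[int]:
    """Device side: encrypt a freshly generated symmetric key with the
    server's public key (N, E). Byte by byte only because N is so small."""
    return [pow(byte, E, N) for byte in session_key]


def server_unwrap_key(wrapped: list[int]) -> bytes:
    """Server side: recover the symmetric key with the private exponent D."""
    return bytes(pow(c, D, N) for c in wrapped)


# The device picks a random 128-bit key, which would then key the AES tunnel.
session_key = secrets.token_bytes(16)
wrapped = device_wrap_key(session_key)
recovered = server_unwrap_key(wrapped)
```

Encrypting byte by byte with unpadded RSA amounts to a substitution cipher and is trivially breakable; a real implementation encrypts the whole key once under a large modulus with proper padding, which is the kind of work an embedded TLS-style library handles.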

Hackaday: It sounds like you're implementing something like what you would have under a UDP-based system?

Zach: Yeah, we really liked CoAP as a protocol, although we run it on top of TCP sockets instead. CoAP is designed for constrained environments, and I think a big part of the reason it's UDP instead of TCP is making it work on as small a processor as possible. But our processor comes with TCP, and we were like, OK, we're constrained on RAM but we do have TCP, so we should leverage that.
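For readers unfamiliar with CoAP, the sketch below packs and unpacks the 4-byte fixed header defined in RFC 7252 (version, type, token length, code, message ID), followed by the token and an optional payload after the 0xFF marker; options are left out to keep it short. Running CoAP over TCP, as Spark does, also needs a way to tell where one message ends on a byte stream. The 2-byte length prefix in `frame_for_stream` is a hypothetical choice for illustration, not Spark's documented wire format (the IETF later standardized its own CoAP-over-TCP framing in RFC 8323).

```python
import struct

COAP_VERSION = 1
TYPE_CON, TYPE_NON, TYPE_ACK, TYPE_RST = 0, 1, 2, 3


def code(cls: int, detail: int) -> int:
    """Pack a CoAP code written as c.dd (e.g. 2.05) into one byte."""
    return (cls << 5) | detail


GET = code(0, 1)       # 0.01 GET
CONTENT = code(2, 5)   # 2.05 Content


def encode_coap(msg_type: int, msg_code: int, message_id: int,
                token: bytes = b"", payload: bytes = b"") -> bytes:
    """Encode a CoAP message with the RFC 7252 fixed header (no options)."""
    if len(token) > 8:
        raise ValueError("CoAP tokens are at most 8 bytes")
    first = (COAP_VERSION << 6) | (msg_type << 4) | len(token)
    out = struct.pack("!BBH", first, msg_code, message_id) + token
    if payload:
        out += b"\xff" + payload           # payload marker, then payload
    return out


def decode_coap(data: bytes) -> dict:
    """Decode a message produced by encode_coap (options not supported)."""
    first, msg_code, message_id = struct.unpack("!BBH", data[:4])
    tkl = first & 0x0F
    rest = data[4 + tkl:]
    return {
        "version": first >> 6,
        "type": (first >> 4) & 0x03,
        "code": (msg_code >> 5, msg_code & 0x1F),
        "message_id": message_id,
        "token": data[4:4 + tkl],
        "payload": rest[1:] if rest[:1] == b"\xff" else b"",
    }


def frame_for_stream(message: bytes) -> bytes:
    """HYPOTHETICAL framing: a 2-byte big-endian length prefix so a TCP
    reader knows where each message ends."""
    return struct.pack("!H", len(message)) + message


request = encode_coap(TYPE_CON, GET, 0x1234, token=b"\xab")
response = encode_coap(TYPE_ACK, CONTENT, 0x1234, token=b"\xab", payload=b"21.5")
framed = frame_for_stream(request)
```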

Hackaday: So why not MQTT?

Zach: We debated this for a while. There were two things we didn't like about MQTT: one, we wanted a request-response model and MQTT is pub-sub; and two, it didn't define the payload, which felt to us like it wasn't solving enough of the problem. So we could say, OK, we'll use MQTT, but what do we say when we actually want to communicate? What does the message look like? So we ended up going down another rathole there... CoAP had much more of that defined, and it felt like a more complete solution. In that sense, we are making some departures from the standard implementation of CoAP, but we wanted to make sure it's based on Open Standards, and the important thing for us is that we open source the libraries so that people can replicate the communications.
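One concrete thing a request-response protocol gives you, and plain pub-sub does not, is a way to match each reply to the request it answers. CoAP carries a token in every message for this purpose; with MQTT of that era, an application wanting request-response would have had to define its own correlation field and payload format. The class below is a hypothetical sketch of that bookkeeping, not code from Spark's libraries.

```python
import itertools


class RequestTracker:
    """Match responses to outstanding requests by token, as a request-response
    protocol such as CoAP does. A hypothetical helper for illustration."""

    def __init__(self):
        self._counter = itertools.count(1)
        self._pending = {}   # token -> description of the outstanding request

    def send(self, description: str) -> bytes:
        """Register a request and return the token that travels with it."""
        token = next(self._counter).to_bytes(4, "big")
        self._pending[token] = description
        return token

    def receive(self, token: bytes, payload: str):
        """Return (request, payload), or None for an unknown or duplicate token."""
        request = self._pending.pop(token, None)
        if request is None:
            return None
        return request, payload


tracker = RequestTracker()
t_temp = tracker.send("GET temperature")
t_led = tracker.send("POST led=on")
# Replies may arrive in any order; the token says which request each answers.
led_reply = tracker.receive(t_led, "ok")
temp_reply = tracker.receive(t_temp, "21.5")
duplicate = tracker.receive(t_temp, "21.5")   # already answered: ignored
```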

Hackaday: So what are the risks with some of these departures from the standard?

Zach: Well, we really liked CoAP as a protocol, but fundamentally, nobody is using it. So in that sense it felt somewhat unnecessary to strictly follow the standard, since there was nobody to talk to, whereas with MQTT we would feel more pressure to follow the standard. In the end, the purpose of all this is to say: here's what we think is a good way for a device to talk to the Cloud, but if you want other communications, if you want MQTT, you can. It's Open Source, and many people have done that. If you look at our community and at some of the things people have built, MQTT is a common one. And we're all about that. I think that if you're trying to figure out device-to-device, MQTT is a great way to do that. But the fundamental infrastructure we're providing is more focused on device-to-cloud, and for that reason we felt comfortable with the departure. But it's something we continue to keep an eye on. The nice thing about a system that supports over-the-air firmware updates is that we can change this in the future: if more reasons emerge to be compliant with the original spec, we will keep that in our consideration set and can make that change.

Hackaday: This raises an interesting question - device-to-device vs. device-to-cloud. In terms of speed, we have a different set of expectations if we're receiving a text message vs., say, a phone call. How is that problem solved in the Spark implementation?

Zach: I would say that we have not so much a protocol as a design pattern for how to do local communication, because inevitably, one of the challenges of this open source project is that you don't really know what people are going to make downstream; they are going to do different things and might have conflicting needs, and we want it to work for as many people as possible without being super-confusing. So what we have done is say: we have a Cloud communication setup, it's good for global communication, it provides a good authentication layer, and you can also use it for local communication - but it's higher latency. We try to keep the latency of the Cloud pretty low; currently it's 100ms + round-trip time, which is pretty good, but then there are things where you want really real-time communication. So the design pattern that we're encouraging, and that we're seeing people really use, is basically: if you want to do local stuff, you can open a direct TCP socket, but use the Cloud for authentication. Say I have a phone: I open up a TCP socket on the phone and make an API call to the device through the Cloud that says "hey you, device, open a socket to me... here is my IP address on the local network". The Cloud has authenticated that request, so you don't have to have ports open, which can be a security issue, and then you can do direct communication. One day we might publish a protocol, maybe for CoAP, maybe for MQTT, maybe something else, for what these communications should be, but in the meantime, people are generally doing custom "this byte means X"-style protocols implemented for their specific application. But it was important for us to open up the TCP/UDP stuff so that everyone can use it, and we also have a perspective of: when we're not sure about the answer to something, let's just leave it open, see what people do, see if the community starts to fall into a pattern, and then adopt that pattern.
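The local-communication pattern Zach describes can be sketched with ordinary sockets. In the hypothetical example below, everything runs on one machine over loopback: one function plays the phone (it listens and learns its own address), a thread plays the device (it dials out to the address it was given, so it needs no open inbound port), and `cloud_relay` stands in for the authenticated cloud API call, with no real authentication implemented. The one-byte command set is an invented example of a "this byte means X" protocol.

```python
import socket
import threading

# Hypothetical one-byte command set, in the "this byte means X" style.
CMD_LED_ON, CMD_LED_OFF = 0x01, 0x02


def recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes from a TCP socket (recv may return fewer)."""
    data = b""
    while len(data) < n:
        chunk = sock.recv(n - len(data))
        if not chunk:
            raise ConnectionError("peer closed the connection early")
        data += chunk
    return data


def phone_listen() -> tuple[socket.socket, tuple]:
    """Phone side: listen on the local network (loopback here) on a free port."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))   # port 0 lets the OS choose
    server.listen(1)
    server.settimeout(5)
    return server, server.getsockname()


def device_connect_back(phone_addr: tuple, received: list) -> None:
    """Device side: runs when the cloud relays "open a socket to me".
    The device dials out, so it never needs an open inbound port."""
    with socket.create_connection(phone_addr, timeout=5) as conn:
        conn.sendall(b"hello")                 # hypothetical greeting
        received.extend(recv_exact(conn, 1))   # one command byte


def cloud_relay(handler, *args) -> threading.Thread:
    """Stand-in for the cloud API call; a real cloud would check the
    caller's credentials before forwarding the request to the device."""
    worker = threading.Thread(target=handler, args=args)
    worker.start()
    return worker


server, addr = phone_listen()
received: list[int] = []
worker = cloud_relay(device_connect_back, addr, received)
conn, _ = server.accept()
with conn:
    greeting = recv_exact(conn, 5)
    conn.sendall(bytes([CMD_LED_ON]))
worker.join()
server.close()
```

After the relayed call, the cloud is out of the data path, so each command costs one local TCP hop instead of the cloud round trip.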

Hackaday: Can you characterize the community - what's the size? You have 15,000 devices out there; is that spread across 7,500 users?

Zach: Yeah, that's about right, it's somewhere in that range. We have a very active community site; I don't know the exact number, but we have over 10,000 forum posts covering everything. When we look at what people in the community are talking about, it's very implementation-focused, but it's a really, really wide range. There are tons of things you would not predict - they're pushing this technology's limits and we're learning from that. It's great to have such a vibrant community so that we can also understand what people are up to.

Hackaday: Tell us about the Cloud side of things. What does the Spark Cloud look like? What's that implementation?

Zach: I think the best way to describe what our cloud is... there are so many companies doing "cloud" services for the Internet of Things, and we're in a world where vocabulary is a core problem, because a lot of these services are different from each other but there are no words to describe this market yet. Our cloud is fundamentally a messaging service - it's about making sure you can communicate with your device directly. A lot of other cloud services are focused on data and data visualization, but we don't really have that yet. We do hope to add these capabilities at some point, but whether we build them ourselves or partner with some of these other services, we're not totally sure. Right now it's really about making sure you get messages back and forth. We provide a request-response model for communicating with your device remotely, where you can essentially call functions remotely and pull variables out of the device's local memory. That's 1-1 communication, as is pushing firmware, and we also have a pub-sub model where devices can publish data; we're also implementing devices subscribing to data, and I think that feature goes out today or tomorrow. You can publish messages either privately or publicly, and also subscribe to these messages from other devices.
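The two messaging styles Zach describes, 1-1 request-response (call a function, read a variable) and pub-sub with private and public events, can be modeled in a few dozen lines. This is a hypothetical in-memory toy, not the Spark Cloud API: the class and method names are invented, and matching subscriptions by event-name prefix is an assumption made for illustration.

```python
from collections import defaultdict


class ToyCloud:
    """In-memory stand-in for a device messaging service. All names are
    hypothetical; this is not the Spark Cloud API."""

    def __init__(self):
        self._functions = {}                   # (device_id, name) -> callable
        self._variables = {}                   # (device_id, name) -> zero-arg getter
        self._subscribers = defaultdict(list)  # event prefix -> [(owner, callback)]

    # Request-response: one caller, one device.
    def register_function(self, device_id, name, fn):
        self._functions[(device_id, name)] = fn

    def register_variable(self, device_id, name, getter):
        self._variables[(device_id, name)] = getter

    def call_function(self, device_id, name, arg: str) -> int:
        return self._functions[(device_id, name)](arg)

    def get_variable(self, device_id, name):
        return self._variables[(device_id, name)]()

    # Pub-sub: one publisher, many subscribers.
    def subscribe(self, owner, prefix, callback):
        self._subscribers[prefix].append((owner, callback))

    def publish(self, owner, event, data, private=True):
        """Private events reach only the publisher's own subscribers;
        public events reach everyone whose prefix matches."""
        for prefix, subs in list(self._subscribers.items()):
            if not event.startswith(prefix):
                continue
            for sub_owner, callback in subs:
                if not private or sub_owner == owner:
                    callback(event, data)


cloud = ToyCloud()
device_state = {"led": "off", "temp": 21}


def set_led(arg: str) -> int:
    device_state["led"] = arg
    return 1   # a status code handed back to the remote caller


cloud.register_function("core1", "led", set_led)
cloud.register_variable("core1", "temp", lambda: device_state["temp"])
status = cloud.call_function("core1", "led", "on")
temp = cloud.get_variable("core1", "temp")

alice_seen, bob_seen = [], []
cloud.subscribe("alice", "motion", lambda e, d: alice_seen.append((e, d)))
cloud.subscribe("bob", "motion", lambda e, d: bob_seen.append((e, d)))
cloud.publish("alice", "motion/hall", "1", private=True)
cloud.publish("alice", "motion/door", "0", private=False)
```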

Hackaday: Take for example a temperature sensor. If we want to know particular details, like temperature or some register values, is there a shadow instance of that data that exists in the cloud? Or are all these requests going the full roundtrip?

Zach: Yeah. Also, one of the things we're not doing right now is queueing messages: if your device is offline, it doesn't respond. There is a whole queueing aspect that we have yet to resolve, and there's also something along the lines of "virtualized devices" that can update their information and change state when they come online. There are a bunch of pieces we're playing around with, thinking about how to implement them and whether they're valuable or not, but for now, it's the most basic: getting the messages back and forth.
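Zach notes that the cloud does not yet keep a copy of device data or queue messages: every request makes the full round trip, and an offline device simply doesn't answer. The "virtualized device" idea he mentions could look roughly like the hypothetical sketch below, in which the cloud answers reads from the last reported state and holds writes until the device reconnects. None of these names come from Spark.

```python
class DeviceShadow:
    """Hypothetical "virtualized device": the cloud keeps the last state the
    device reported, answers reads from it while the device is offline, and
    queues writes until the device reconnects."""

    def __init__(self):
        self.online = False
        self.reported = {}   # last values received from the device
        self.pending = []    # (key, value) writes waiting for delivery

    def read(self, key):
        """May return a stale value while the device is offline."""
        return self.reported.get(key)

    def write(self, key, value, send):
        if self.online:
            send(key, value)
        else:
            self.pending.append((key, value))

    def device_connected(self, send, current_state: dict):
        self.online = True
        self.reported.update(current_state)
        # Deliver queued writes in the order they were made.
        for key, value in self.pending:
            send(key, value)
        self.pending.clear()

    def device_disconnected(self):
        self.online = False


delivered = []


def send_to_device(key, value):
    delivered.append((key, value))   # stand-in for a cloud-to-device message


shadow = DeviceShadow()
shadow.reported["temp"] = 20                  # last value seen before going offline
stale_temp = shadow.read("temp")              # answered without a round trip
shadow.write("led", "on", send_to_device)     # device offline: queued
shadow.device_connected(send_to_device, {"temp": 22})
```

The trade-off is freshness: a shadow answers instantly, but whatever it returns while the device is offline may be out of date, which is part of why Zach is still weighing whether such features are valuable.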

Hackaday: Let's take, for example, an ADC where data accumulates over time. Are there libraries that would allow you to do things like moving averages of that data, buffer handling and all that stuff?

Zach: So if I am trying to do something like that - store a bunch of stuff locally, do some moving averages - there's some information on the device that I might want to process and some I want to publish; they might be the same but might be different. In that case, what I would do is have a local function running in a loop, write my own buffer-averaging implementation with some output, and then, depending on whether I want it to be push or pull, I can either publish the message every 1 second, 10 seconds..., or I can just keep the running average in a local variable and expose that variable so that I can reach in and grab the data when I want.
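The buffer-averaging approach Zach describes can be sketched as a fixed-window moving average plus a loop that either pushes the result periodically or keeps it in a variable for remote reads. On a Spark Core this would be C++ firmware with a fixed-size array; the Python below only illustrates the logic, and the sample readings, window size and publish interval are made-up values.

```python
from collections import deque


class MovingAverage:
    """Fixed-window moving average over the most recent `window` samples."""

    def __init__(self, window: int):
        if window <= 0:
            raise ValueError("window must be positive")
        self.samples = deque(maxlen=window)
        self.total = 0.0

    def add(self, value: float) -> float:
        if len(self.samples) == self.samples.maxlen:
            self.total -= self.samples[0]   # oldest sample is about to be evicted
        self.samples.append(value)
        self.total += value
        return self.total / len(self.samples)


PUBLISH_EVERY = 10          # hypothetical: push once per 10 samples
avg = MovingAverage(window=4)
latest_average = None       # the "exposed variable" a remote caller could read (pull)
published = []              # stand-in for messages pushed to the cloud (push)

readings = [10, 20, 30, 40, 50, 60, 70, 80, 90, 100]   # made-up ADC samples
for i, reading in enumerate(readings, start=1):
    latest_average = avg.add(reading)
    if i % PUBLISH_EVERY == 0:
        published.append(latest_average)
```

Keeping a running total makes each update constant-time instead of re-summing the window. With integer ADC readings the total stays exact; with floating-point inputs over very long runs, rounding error can accumulate, so occasionally recomputing the sum from the buffer is a cheap safeguard.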

Hackaday: Gotcha, so it's basically up to the implementer to solve that problem?

Zach: Exactly. There are some things you want to do in the web world and some things you want to do in the device world, and I would leave it up to you to decide, for a particular product, where this logic should live. It's usually a mix of what lives in the Cloud and what lives in the firmware.

Hackaday: In terms of the actual Spark Cloud implementation, it's processing requests, brokering transactions, etc., but you did indicate that at some point you'll tackle the data side of this. Do you have a sense of what the model for that would look like? Would people pay to store data in the Spark Cloud? There are also a lot of problems there - data models, history models, permissions, etc. Is that a problem for Spark to solve?

Zach: I wouldn't say I have an answer yet. As far as our development process goes, we still feel like we're fulfilling the promises from the Kickstarter campaign, and I would say we're at 85-90%. We hope to wrap all that up by the summer, and all the features we implement as part of that will be Open Source as well. Then, going forward, the next step is to ask: what else do we do? What do we do on top of this? Every feature we create will raise the questions of what we give away, what we charge for, what we build, who we partner with, and whether we open source it or not, and I think a lot of that is still hazy for us. But the most important thing for now is making sure we wrap this up, deliver on our Kickstarter promises, and have a base that we can grow upon and that we're really proud of.

Hackaday: What are some of these things that you still feel you need to close on?

Zach: There are several things that we have been planning to open source but haven't gotten around to yet, mostly because we need to make them easy enough for somebody to actually use. For example, to publish the open source Spark Cloud we need to put some command-line tools in place, and that's one of the next things we'll be doing. The iOS app is not open source yet because there are some licensing issues we need to work out with Texas Instruments, and that's been holding it up for a little while. There is a patch for improving stability that TI has been working on that we cannot deploy yet because they haven't completely signed off on it. There's also a bunch of smaller but important improvements we want to make to our development environment. There are also things like the library system, where we want to make it easy to extend and add libraries, and right now it's a pain in the ass. So we're putting in place a package management system that I think will be very cool. We also want to make sure that if you want to do development outside our Web IDE, the command-line tool is in a good position to support that. Those are the bigger ones; I am sure there are a lot of smaller ones that aren't coming to mind right now.

Hackaday: Do you think that the effort to build a Web-based IDE might be setting you back, given the time it takes to build a quality IDE and a quality dev environment? Was building an IDE a prerequisite?

Zach: Yeah, so the real "killer feature" that we wanted to have is over-the-air firmware updates. You can build the Spark Core into your product or project and then push firmware without having to disassemble it or plug into something. And it's really powerful when you have a product in the field. It's definitely not easy, from both a development and a Cloud perspective. The IDE is definitely not done yet, and I think the structure we're building is very powerful, but it's definitely not for everyone - it's more geared towards beginners. Basically, we have people coming from 3 worlds: novices, who might be familiar with Arduino but for whom most of this stuff might be completely new; people coming from the Web world, who are used to using an editor like Sublime Text or Vim; and a third group coming from the embedded world, who might be more familiar with, say, Eclipse and the more comprehensive IDEs out there. So what we want to do is say: we have a solution for all 3 of these groups. For novices, it's the Web IDE; for the Web guys, we say OK, you can use Sublime Text or Vim, and the command-line tool provides the build environment you need; and for the embedded guys it's Eclipse, using the command line as the underlying build infrastructure. So that's where we eventually want to go. Building out the Web IDE and making it better all the time is something we'll keep doing, but it's also important to have other development environments for these other developers, and the command-line tool is just now getting ready for people to start using it in that context.

Hackaday: How do you solve the "bricking" problem of cloud-deployed firmware?

Zach: That's tricky. It depends on what you define as a "brick". I have not yet heard of a case of someone actually bricking a Spark Core, which I am actually happy about. What we do have is a lot of ways in which it can get semi-bricked, where it's not working the way it's expected to, and you can factory-reset it to the original firmware, which is a good fallback. It's one of the trickiest problems, and I am glad that we have a good factory reset implementation that makes sure nobody breaks their stuff too badly, and, of course, in the worst case you can use JTAG etc. In theory, you can always get your firmware back, but making that robust has been a big challenge, and I think it gets better with every firmware push. We're doing a lot of checksumming on the incoming data, but there are a lot of edge cases to cover. One of the things we have been most frustrated with is that this is such a common problem for connected devices, but there is no open source implementation we could find that really solved it. So I am looking forward to when it's kind of "done" and we can say "look guys, here is a way", something others can use in their projects, and say "here is the right way to solve all the edge cases to make sure you have a really robust implementation". Because it is tricky, and there are so many cases to cover.
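Zach mentions checksumming incoming data and a factory-reset fallback. The hypothetical sketch below shows one common way to combine those ideas, not Spark's actual implementation: stage the new image separately, check a CRC-32 per chunk and over the whole image, switch images only if every check passes, and keep a factory image untouched for recovery. Flash writes, power loss during the switch and the bootloader side are all left out.

```python
import zlib


class OtaReceiver:
    """Defensive OTA flow (a hypothetical sketch, not Spark's implementation):
    stage the new image separately, verify every chunk and the whole image,
    and switch only when everything checks out. Otherwise keep the old image."""

    def __init__(self, current_image: bytes, factory_image: bytes):
        self.active = current_image      # image the device boots
        self.factory = factory_image     # untouched fallback for a factory reset
        self.staging = bytearray()
        self.expected_size = 0
        self.expected_crc = 0

    def begin(self, expected_size: int, expected_crc: int) -> None:
        self.staging = bytearray()
        self.expected_size = expected_size
        self.expected_crc = expected_crc

    def receive_chunk(self, offset: int, chunk: bytes, chunk_crc: int) -> bool:
        """Reject out-of-order or corrupted chunks so the sender can retransmit."""
        if offset != len(self.staging) or zlib.crc32(chunk) != chunk_crc:
            return False
        self.staging.extend(chunk)
        return True

    def finish(self) -> bool:
        image = bytes(self.staging)
        if len(image) != self.expected_size or zlib.crc32(image) != self.expected_crc:
            return False                 # incomplete or corrupt: keep the current image
        self.active = image              # on hardware: mark the new slot bootable
        return True

    def factory_reset(self) -> None:
        self.active = self.factory


CHUNK = 256
new_firmware = bytes(range(256)) * 4     # 1 KiB dummy image
ota = OtaReceiver(current_image=b"v1", factory_image=b"factory")
ota.begin(len(new_firmware), zlib.crc32(new_firmware))

# A chunk corrupted in transit (first byte flipped) fails its CRC and is rejected.
corrupted = b"\xff" + new_firmware[1:CHUNK]
corrupted_accepted = ota.receive_chunk(0, corrupted, zlib.crc32(new_firmware[:CHUNK]))

for offset in range(0, len(new_firmware), CHUNK):
    chunk = new_firmware[offset:offset + CHUNK]
    ota.receive_chunk(offset, chunk, zlib.crc32(chunk))
updated = ota.finish()
```

A CRC only catches accidental corruption; it does not prove the image came from a trusted source, which would call for a cryptographic signature check before switching images.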

Hackaday: You're absolutely right. It can always be recovered, but if I have a seismic sensor somewhere in the middle of the Mojave Desert, I have a long drive out there to plug in my laptop and recover that device. So it's very interesting to hear you're solving that problem. It's a noble endeavor.

Zach: Yeah, and another thing is that we're very much in a world where we do our development in public: this is all happening in GitHub repos that are publicly available, and tons of contributions come from our community members. There are some massive stability changes that came from one of our top community members that are such an improvement to the firmware. And that's the part we love about Open Source - it's all, to some extent, under peer review. When people have concerns about an implementation, we give them an opportunity to dig in, say "there is a better way", and, say, issue a pull request. And we're seeing a lot of that, which is awesome.

Hackaday: Tell us a little bit about the supply chain side - supply chain issues, lessons learned, etc. - as you're building the Spark Core.

Zach: The nice thing about the Spark Core is that, as far as products go, it's really easy to make. I love that there is no plastic. Of course, that's true of any dev kit - dev kits in general are easy to make - but thank God we don't have injection-molded plastic. I am grateful for that every day. So our supply chain challenges are relatively narrow. Supply chain is always hard and messy, but ours is relatively easy. We have a manufacturing partner in Shenzhen that does everything, which basically involves surface mount and hand-soldering of pins, packaging, and that's it. It's a pretty short supply chain. I think in general the main challenge was that we had never manufactured a product before our first manufacturing run, and there are always a lot of surprises that come with that. It was things like teaching our manufacturing partner how to bake components correctly so that the yield was high enough, and learning from them about design changes to make on the PCB so that we got a good yield. Our first run was 10,000 units and the whole manufacturing process took just 3 days, because it's just surface mount, which is great, but also a problem, because it provides no opportunity for feedback in the process. So basically, you're going to do 'em all, so you'd better hope they come out good on the other side. So we had to have a process that we were really comfortable with before actually starting the line. I think on our first run the yield was 95%; our second one was 99% or better, and our third one was as well. It didn't take us that long to get to a yield that worked for us.
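For a sense of scale, the yield percentages translate into boards as follows. The interview gives the run size only for the first run (10,000 units), so applying the 99% figure to a run of the same size is an assumption made for comparison.

```python
def expected_failures(units: int, yield_rate: float) -> int:
    """Boards expected to fail for a given yield (fraction of good boards)."""
    return round(units * (1 - yield_rate))


RUN_SIZE = 10_000   # size of the first run quoted in the interview
for label, rate in (("95% yield", 0.95), ("99% yield", 0.99)):
    print(f"{label}: about {expected_failures(RUN_SIZE, rate)} bad boards per {RUN_SIZE:,}")
```

Going from 95% to 99% cuts the rejects on a 10,000-unit run from about 500 boards to about 100.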

Hackaday: That's extremely high for boards. Is that all the way through assembly? Because there is a tendency for board shops not to return the panels that had a bunch of failures on them.

Zach: Right. We don't see the failures there, we just get the good stuff.

Hackaday: What's the monetization strategy moving forward? Is it selling the hardware, selling the software platform? Is it support services?

Zach: It's a mix, definitely, of all three. One of the challenges we have been working through is... how does an Open Source company make money? I think it's not just a question for us, it's a question for a lot of companies that go into this space, and there are a bunch of different models that we have been looking at to see who has figured this out and what we can learn from how they have been able to monetize their product. I think the way we have come out of it is basically the feeling that what we want to do is provide basic technology as Open Source and charge for more advanced technology. And so, that will be essentially providing additional features that we charge for and also focusing on helping support companies with large-scale deployments of connected devices. If somebody is deploying 10,000 or more devices, we're building a lot of expertise on scalable systems, and our cloud is designed for "infinite scale", so we can sell services, which include SaaS but also implementation and other services along the way, to help companies deliver these large-scale deployments. So, there is a core "cloud service" / "software as a service" model of helping larger companies and startups achieve that scale, and then other complementary services. But these days, all we make is hardware.

Hackaday: So you need to get the data back-end side worked out to adopt that model?

Zach: Absolutely. And I think that will inevitably be part of our next feature push this summer. We have a couple of bigger customers that we're working with that will be helping guide us in terms of what they need. We're very customer-driven in how we do our product development. In the Open Source world that means just opening everything and letting people participate, and in the enterprise world that means we have some pilot customers, and we work through with them what their needs are and how we can help them solve those needs.

Hackaday: Is there a path in which you partner with someone to build services you need on the back-end?

Zach: I think we'll end up doing that to some extent, because there are so many ways for data to be used, and also many of our customers already have some data infrastructure in place for other data. And so, there are some problems which are device-specific, and common problems that everyone will have, for instance, high-level visualization of top-level metrics coming off the devices and being able to visualize the logs that devices generate. I think that's something we will provide as a common interface, because there are some problems that are the same everywhere and we should deliver that as a service. And there are other problems like - you have an ERP system, you don't need a second ERP system, you just want to plug your data into your ERP system. I think inevitably there will be both. And we'll say - look, we'll do some things ourselves, but we can also plug into various systems that you already have to pump the data to where you want it to be. I think fundamentally one of the issues we're seeing in the market right now is this question of who owns the data, because you have IoT "platforms" that take the perspective that they really own the data and you as the manufacturer of the product are "renting" the data. I think our perspective is much more - you made the product, it's your data. And we're here to help facilitate you having access to that. So we're putting in place products that feed your data back to you. But it's not data that we own.

Hackaday: Just one final question - you have gone through the process of becoming an Open Hardware company. Do you have a sense that that is going to be a problem in the long run? Or do you think it's potentially a big differentiator?

Zach: It's definitely different, I suppose. I think and hope it's more of an opportunity than a handicap. I imagine there will be cases where it is a handicap, though. We had a conference where someone in the audience was talking about how he was deciding between Electric Imp and us and ended up choosing Electric Imp. It was a panel with me, Bunnie Huang and Jason from BeagleBone all on one stage, and we asked "Why did you choose Electric Imp?" and he said... "well, I thought Open Source means... lower quality, so I went with Imp". Bunnie and Jason and I were like "what the hell?? what are you talking about?". He talked about it - he's not an engineer, he comes more from the business side, and he sees it more as Photoshop vs. Gimp and feels like Photoshop is a better tool and that proprietary tools are generally better. Whereas I think, if you're coming from more of an engineering perspective, you're thinking Linux vs. Windows, and Linux feels like a better tool than Windows. And so, I think, in the consumer world, proprietary products are usually better... because design is hard to do well in Open Source, whereas fundamental infrastructure, backbone kind of stuff, is better in Open Source because it gets peer reviewed. And so I think it will be an asset, but it's definitely going to be a marketing challenge communicating that we believe Open Source leads to a better product. And I also think there will inevitably be some customers that we will lose out on because, instead of using our hosted managed infrastructure, they will build their own using our Open Source tools. I think that's OK. I don't think we're a solution for everybody, and inevitably we're not going to get every customer in the world. I don't think the Internet of Things is a world where anybody "owns" it. It's not the AOL of Things. We just see a huge opportunity and a growing space to solve the problems that companies are going to be facing.
And so I am hoping that Open Source will be an asset. The other thing is Open Source Hardware... I think it's funny, I don't know why anybody wouldn't open source their hardware. Because it is Open Source anyway. All you need is an X-ray and it's so easy to reverse-engineer a hardware design. I feel that anybody who doesn't open source their hardware is just... they might add a week to the time it takes for someone to copy them, but it's just not that meaningful.

Matt Berggren / twitter.com/technolomaniac

Aleksandar Bradic / twitter.com/randomwalks

-

The Promise (?) of Online EDA

05/09/2014 at 07:10 • 0 comments

After nearly 15 years building, selling, supporting, implementing, and yes, even using, EDA software - schematic, PCB, FPGA, SIM, SI, etc. - I’m convinced I must’ve missed a meeting somewhere. Slept clean thru it. Perhaps there was a meetup somewhere where would-be entrepreneurs speed-dated their way thru 5-minute vet-fests, but it seems, from the collective ether, a whole gaggle of guys cropped up with precisely the same idea at exactly the same moment in time...entangled states perhaps. And here I’d fallen asleep, waiting for something to build.

The notion? Build an online schematic and PCB tool.

Led by the likes of Upverter, DesignSpark and others, it’s like waking up in a parallel universe -- though strangely reminiscent of the 80s when desktop EDA SW became all the rage and we were disrupted by the ease and disease these new entrants created in a market dominated by dedicated CAD machines, and legions of guys with scalpel skills to make a surgeon blush (here’s to the Minions of Mylar!).

The premise: to make an online EDA tool. The promise: We can do it better. We can do it cheaper. We can do it smarter. “...It’s time for a change.” The reality? So far a boulevard of broken dreams, unfulfilled promises, and ill-spent VC dollars (that’s your money too, folks). Though before we pack it in and sneak out through the bathroom window, all’s not lost. Just misplaced perhaps. And as for that last item: ‘it’s time for a change’ ...this still rings profoundly true. But it’s going to take more than a few years of JavaScript experience and a double-E degree to get there.

Sage wisdom: building an EDA tool is hard. And there isn’t a REST API on the planet that’s going to save you. It may get you that next round of funding but the problems of EDA are hardly exclusive to the tools. The concepts are hard. The data models are hard. And teaching people to adopt a new way of thinking about the engineering problem (!) -- that’s the hardest problem of them all.

More background: For years I worked as an FAE (amongst other roles) rocking up and rolling out EDA software in various companies large and small - software that had extraordinary features for collaboration, sharing, managing quality, ensuring compliance, communicating intent (sound familiar?) - and time and time again, saw the promise of these new tools dashed by an inability or unwillingness to adapt to a new way of thinking, a new methodology. Sure, the tools had their share of blunders - not everyone’s a winner - but overall the software was indeed a part of the solution, and evolved into something solid, or in some cases, even spectacular! (few tools truly suck these days)

But it’s this lethal combination of technology and people’s adaptability that *must* intersect for anyone to be successful evangelizing a new approach. And what you’re endeavoring to do is a new approach in a market that moves like medicine.

All smoke and no fire

To-date, we’ve been over-promised tools that have seriously, profoundly under-delivered when contrasted against their desktop counterparts...simply stated: they are largely unusable (there, I said it). Sure you can get something together, but would you want to?

And what’s altogether worse is that the self-promotion has only focused and refocused attention on an opportunity that those developing these things seem all too ill-equipped to deliver on.

See, the risk is that the “bridesmaids at the karaoke bachelorette party” enthusiasm to be out front, owning the mic & seen changing the world, only works if we’re converting on the back-end. If not, the media hype only calls attention to our inability to do this right, and as user enthusiasm wanes, so does the available capital as VCs lose interest and move on to more obvious wins.

The challenge for anyone endeavoring to build online tools is that a minimum viable product is scary-hard. You should know this. So let’s stop talking about changing the world, and figure out how to do it. In this series, I give my two cents on just how to do that...

Level set

Firstly, ask yourselves: Have you ever built electronic products and taken them thru to production? Do you have a sense of what that involves? Truly. (Not bullshit...it’s ok, this is just between you and me ;). Have you ever worked in a PCB fab or assembly house? Have you ever worked on the supply chain side of things and seen that process first hand (not one board, try 5K, 10K, 50K)? Have you ever owned a copy of a top shelf EDA tool and used it extensively to build stuff in a corporate environment? Really gotten under the hood of it? Implemented and had to support it? Learned the reasons behind the features that make some really special, and others tragic?

What about complex enterprise software that involves revision management, sign-offs, etc? PLM tools? ERP tools? Ever built any? Implemented any? Ever had a company’s future success hinge on decisions you’ve made implementing any of these?

See, here’s the deal, don’t pack up! It’s ok. We just need to understand the background around what we’re endeavoring to do. <Reassurance> A little secret: many of the guys building EDA tools hadn’t done a lot of that stuff either until they got into the biz...but over time, they got wise from the guys that had. Your day will come. Just don’t mistake experience for baggage...It can be a fatal mistake.

Solving the hard problems...

Simply put, so far, you haven’t solved (m)any:

How about good schematic wiring? How about drag on schematic? How about complex buses? Complex hierarchy? How about selection and filtering of objects? How about polygons on PCB? Overlapping polys? Pour order? Different connection styles? Split planes? Plane rules? Polygon rules? 2D/3D? Handling images? Logos? The huge numbers of objects you have to display in PCB? Zoom? ...Try it with 400K primitives in the window at a time. Really nailing Gerber and ODB export? It needs to be 100%. How’s your PCB rule system? How about sourcing & supply chain integration? Parts equivalents? Variants? The list goes on.

Now you say you want to do this in a browser? Egads!

And this is to say nothing of high speed or precision RF work, which I won’t even begin to get into. Match lengths for impedance-controlled differential signals spanning multiple layers with series terminations and you’ll know what I’m talking about.

See, most of these may seem like corner cases to those that haven’t been around companies with more than a few products, but they’re not. And many of those serious about design need these tools to be successful -- even startups. Or perhaps better: especially startups. After all, who will benefit most from free or ultra low-cost tools?

The point is, we need to ease into it. No one likes a cannonballer and you are jumping in right next to the water-aerobics class at the seniors’ center.

Stage setting

Throughout this and my follow up article, I’m going to offer some suggestions on how to sequence this thing and how to build kickass online tools for engineering. Flame me if you like, call me a relic, blame me for all that’s wrong in EDA...But you can’t say this: I made EDA profitable. I can.

Pick your battles

Let’s begin with the all-important question ‘does it make sense to do this in a browser?’ Or perhaps better: ‘what part of this provides the maximum return to our users if it happens in the browser?’

Browsers are - as you know - tools for people to interact with data and people across vast distances. But ask yourself (or better: ask the users...yeah yeah, I get it...’faster horse’...just bear with me):

Is it truly the ability to route a PCB in a browser that people are after? Or is that a problem we can “get to”?

Even schematic - though this definitely trumps PCB in terms of sequencing:

Was there a pitchfork-wielding horde of folks lined up outside demanding browser-based schematic tools?

Of course not. Many people like the idea but that isn’t enough. So what parts of these broader “areas” of design (schematic and PCB) might folks truly want? Where can we make a dent? Where can we create the most value?

That doesn’t mean you abandon the dream, but the things you should do or can do in the browser today - are things that will prepare you for the day when you have the data models worked out and the utility to a level where it rivals desktop tools...And what's more, you can deliver real value today.

Subtext: There’s a lesson in just learning to render something and maintain its intelligence...Start there, figure this out for the myriad design styles that people employ and you have a really, really hard problem solved! And we’ll all love you for it. I promise. More importantly, we'll create value.

Who you calling git?

So what was that? You say you want to be the GitHub of electronics design? Awesome. A great place to start. Opportunities abound! Abstraction, sharing, collaboration, reuse! Excellent idea! Let’s start here and build a winning strategy; there’s SO much we can learn by doing this.

Version control tools like git (and GitHub, Bitbucket, etc., built on top of such tools) facilitate collaboration, sharing, revisioning, reporting, comparison, history management, etc. But more pragmatically, they are a great way for a company or an individual or a group to track products and their dependencies over time. And these are big concepts (ask PTC, Siemens, Dassault, Aras or any of the others feeding their families slinging PLM systems). They mean something to a company that has anything like a history of building products.

Managing the resources engineers use and share and demand along this temporal dimension is a powerful first step on the road toward understanding the data and how best to model it in the browser environment.

Now, there’s no use trying to compete with the aforementioned PLM big boys just yet (an exit? perhaps), but rather, let’s build utility that serves engineers. This will build audience. PLM is a big, unwieldy thing to try and bite off...but I promise, PLM providers will gaze longingly, lovingly in your direction if you can peel back the outer shell that is the black box of electronics. They have all tried, with varying degrees of success, to unlock the electronics and model them correctly, and anyone that ventures in this direction is sure to get noticed.

What’s in it for me?

The average Joe - of which I am one - could use really good online markup, sharing, collaboration, history / revision management, etc. tools for managing schematic content and communicating intent (it may not be obvious to folks just yet, but hang in there). But also consider, Version Control possesses the ability to compare, merge, roll back, etc. And hooks to generate notifications, what-not. Sprinkle in really good navigation tools and back-end integration with the systems we’re already using, and you begin to see a narrative unfolding (i.e. I don’t change compilers to use git, I bring git to the IDE).

Is there money in there somewhere?

There are at least two business models in there (and real value) that are either not served, underserved, or perhaps overserved by big, unwieldy enterprise software.

Looking at what you’ve got today, ask yourselves: Can I check in design content? “Yes.” Great. Can I view it? “No...or kinda. Some.” Ok. Fail. Let’s figure out how to render EDA content in a browser. Graphics on screen. And let’s do this while maintaining a minimum of intelligence.

What’s a minimum you ask?

Nets and parts and parameters. Start with schematic and work forward from there. Schematic is good for now.
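To make "nets and parts and parameters" a little more concrete, here is a minimal sketch of what that minimum intelligence might look like behind a rendered schematic. It is written in Python for brevity, and every name in it (`Part`, `Net`, `Schematic`, `bom`, `nets_on`) is hypothetical, not the format of any real EDA tool; the point is only that a BOM and basic connectivity can ride along with the graphics.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Part:
    """One placed component (hypothetical minimal schema)."""
    refdes: str          # reference designator, e.g. "R1"
    value: str           # e.g. "10k"
    params: tuple = ()   # extra (name, value) pairs, e.g. (("footprint", "0603"),)


@dataclass
class Net:
    """A named set of (refdes, pin) connections."""
    name: str
    pins: set = field(default_factory=set)


@dataclass
class Schematic:
    parts: dict = field(default_factory=dict)  # refdes -> Part
    nets: dict = field(default_factory=dict)   # net name -> Net

    def add_part(self, part):
        self.parts[part.refdes] = part

    def connect(self, net_name, refdes, pin):
        # Create the net on first use, then record the pin on it.
        self.nets.setdefault(net_name, Net(net_name)).pins.add((refdes, pin))

    def bom(self):
        """Count parts sharing the same value and parameters: a bare-bones BOM."""
        return Counter((p.value, p.params) for p in self.parts.values())

    def nets_on(self, refdes):
        """Sorted names of every net that touches the given part."""
        return sorted(n.name for n in self.nets.values()
                      if any(r == refdes for r, _ in n.pins))


# Usage: two identical pull-up resistors sharing VCC.
sch = Schematic()
sch.add_part(Part("R1", "10k", (("footprint", "0603"),)))
sch.add_part(Part("R2", "10k", (("footprint", "0603"),)))
sch.connect("VCC", "R1", "1")
sch.connect("SDA", "R1", "2")
sch.connect("VCC", "R2", "1")
sch.connect("SCL", "R2", "2")
print(sch.bom())           # both resistors roll up into one BOM line, quantity 2
print(sch.nets_on("R1"))   # ['SDA', 'VCC']
```

Even this little model is enough to answer "what's on this net?" and "how many of these do I buy?" in the browser, which is the kind of intelligence the rendering should preserve.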

Can you read an OrCAD schematic? “Nope.” Why? “It’s a hard file format to crack.” I know. Crack your knuckles, grab your favorite hex editor and pull up a chair...Someone’s going to be here for a while.

Can you import an Altium file? If not, why not? That’s not as hard and probably number two in terms of popularity behind OrCAD schematic. And of course, there’s Eagle and KiCAD. (Must-haves if you want to service a market that has really made a career out of free or low-cost software tools. These will be your biggest fans when you crack the code on a really awesome online package.)

But don’t stop there...What about PDF content? Can you crack that code?

You’re going to work toward a normalized format but don’t let this be a barrier to getting things to display and getting some level of intelligence into the browser. Get it to display. Keep the BOM intelligence behind it and parameters and net detail. Fake it. You don’t need to or want to try to infer net intelligence any more than you have to and this is a nice, straightforward(-ish) way to do it (ok, some formats don’t have nets in the files - they're generated after the file loads - so you’ll need to figure it out after you read and process the data...it’s a hard problem. But remember, we’re solving hard problems now.)
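For formats that don't store nets, connectivity has to be rebuilt from the drawn geometry after the file is read. One common way to do that is a union-find (disjoint set) pass over wire endpoints, sketched below in Python. The input shapes (`wires`, `pins`, `labels`) and the auto-naming scheme are assumptions for illustration, and the sketch deliberately ignores T-junctions in the middle of a segment, buses, hierarchical ports and power symbols, all of which a real importer would need to handle.

```python
from collections import defaultdict


class DisjointSet:
    """Union-find over hashable items, here (x, y) points on the sheet."""

    def __init__(self):
        self.parent = {}

    def find(self, item):
        self.parent.setdefault(item, item)
        while self.parent[item] != item:
            # Path halving: point each visited node at its grandparent.
            self.parent[item] = self.parent[self.parent[item]]
            item = self.parent[item]
        return item

    def union(self, a, b):
        root_a, root_b = self.find(a), self.find(b)
        if root_a != root_b:
            self.parent[root_b] = root_a


def infer_nets(wires, pins, labels):
    """Derive nets from drawn geometry (hypothetical input shapes).

    wires:  list of ((x1, y1), (x2, y2)) wire segments
    pins:   {(refdes, pin): (x, y)} connection point of each pin
    labels: {(x, y): net_name} net labels placed on the sheet
    Assumes connections happen only where points coincide exactly.
    """
    ds = DisjointSet()
    for start, end in wires:
        ds.union(start, end)

    # Labels with the same name join their wires, as in most schematic tools.
    first_point = {}
    for point, name in labels.items():
        if name in first_point:
            ds.union(first_point[name], point)
        else:
            first_point[name] = point

    groups = defaultdict(set)
    for pin, point in pins.items():
        groups[ds.find(point)].add(pin)

    names = {ds.find(point): name for point, name in labels.items()}
    nets = {}
    # Sort groups by their pins so auto-generated names are deterministic.
    ordered = sorted(groups.items(), key=lambda kv: sorted(kv[1]))
    for i, (root, members) in enumerate(ordered):
        nets[names.get(root, f"N{i:04d}")] = members
    return nets


# Usage: R1 and R2 wired in series, a VCC label on R1 pin 1, and two GND
# labels that connect R2 pin 2 to C1 pin 1 without a drawn wire.
pins = {("R1", "1"): (0, 0), ("R1", "2"): (10, 0),
        ("R2", "1"): (20, 0), ("R2", "2"): (30, 0),
        ("C1", "1"): (50, 0)}
wires = [((10, 0), (20, 0))]
labels = {(0, 0): "VCC", (30, 0): "GND", (50, 0): "GND"}
nets = infer_nets(wires, pins, labels)
print(sorted(nets))   # ['GND', 'N0002', 'VCC']
```

Union-find is a natural fit here because every wire and every same-named label is simply an "these points are connected" statement, and the final groups fall out of the merges in near-linear time.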

Make it possible to inline schematics into forum posts and blogs. Think Wordpress widget. Make it intelligent enough that the application schematics in datasheets are living, breathing things with more than one-dimensional intelligence to them. Link SPICE netlists to the back end and allow it to run simulations from the content you supply (albeit limited in its practical intelligence, this is just another document behind it). Berkeley and XSPICE engines are both freely available. Do that? And you have something special! Magical!

<Applause>

This makes datasheets pop. Imagine simulating in the document. Curves that actually display as interactive elements. Manufacturers will sit up and take notice. It makes the social web come alive with content that as yet, is flat and stupid. People will take notice. Make it searchable by crawlers. Make sense of the data! Search engines will take notice.

<More applause>

Now another important feature of this is versioning, and like software, we have a hierarchy of dependencies we can do cool and interesting things with. e.g. Schematics contain parts, which contain pins, parameters, what have you...And all of this can be modeled as a series of things to be versioned (if you want to get really fancy).

In its simplest form, treat it all the same (flat) and solve the problem of comparing two schematics or two pcbs to one another...Or two library components. If you want to get kung fu about it, maintain the hierarchy of elements and assign revisions that span the hierarchy with tags relating each dependent item to the higher order elements. That? Oh that’s awesome stuff. PLM providers and users alike should (& would) sit up and take notice.
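The flat version of "compare two schematics" can be surprisingly small once parts and nets are available. Below is a minimal Python sketch; the revision format (a dict with `"parts"` mapping refdes to value and `"nets"` mapping net name to pins) and the function name `diff_schematics` are hypothetical. It reports added, removed and changed parts, plus connections that appeared or disappeared.

```python
def diff_schematics(old, new):
    """Flat comparison of two schematic revisions (hypothetical format).

    Each revision is a dict with:
      "parts": {refdes: value}
      "nets":  {net_name: iterable of (refdes, pin)}
    Nets are compared by connectivity (which pins they join), not by name,
    so renaming an auto-generated net does not show up as a change.
    """
    old_parts, new_parts = old["parts"], new["parts"]
    report = {
        "added_parts": sorted(new_parts.keys() - old_parts.keys()),
        "removed_parts": sorted(old_parts.keys() - new_parts.keys()),
        "changed_parts": sorted(
            (ref, old_parts[ref], new_parts[ref])
            for ref in old_parts.keys() & new_parts.keys()
            if old_parts[ref] != new_parts[ref]
        ),
    }
    old_conn = {frozenset(pins) for pins in old["nets"].values()}
    new_conn = {frozenset(pins) for pins in new["nets"].values()}
    # Sort each pin set so the report is deterministic.
    report["removed_connections"] = sorted(sorted(s) for s in old_conn - new_conn)
    report["added_connections"] = sorted(sorted(s) for s in new_conn - old_conn)
    return report


# Usage: R1 changes value and C1 is swapped for C2 on the same node.
rev_a = {
    "parts": {"R1": "10k", "R2": "10k", "C1": "100n"},
    "nets": {"VCC": [("R1", "1"), ("R2", "1")], "N1": [("R1", "2"), ("C1", "1")]},
}
rev_b = {
    "parts": {"R1": "4k7", "R2": "10k", "C2": "1u"},
    "nets": {"VCC": [("R1", "1"), ("R2", "1")], "N7": [("R1", "2"), ("C2", "1")]},
}
report = diff_schematics(rev_a, rev_b)
print(report["changed_parts"])   # [('R1', '10k', '4k7')]
```

Comparing nets by the pins they join rather than by name is a deliberate choice, since auto-generated net names tend to shift between revisions. The hierarchical ("kung fu") variant would run the same comparison per sheet or per library component and roll the results up under revision tags, which this sketch leaves out.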

Heroes are born.

Now, we don’t stop there...We’re just getting started. In my next piece, we’ll look at the move from this first-line functionality into other more interesting areas. Things like file conversion, models for socializing circuits across the open web, higher abstractions, and a whole heap of other things that will take us forward in a direction that means we have the intelligence right before we attempt full-fledged schematic-in-a-browser. It’s not that far off, but I would hope that if we sequence things right, when you get there, there’ll be far fewer disappointed users, angry VCs, and misled media lined up behind it. And it may turn out that many of those “hard things” don’t really matter at all...If you have the users behind you.

We’ll get there. Patience.

Promise.

matt b. / tlm - may, 2014

-

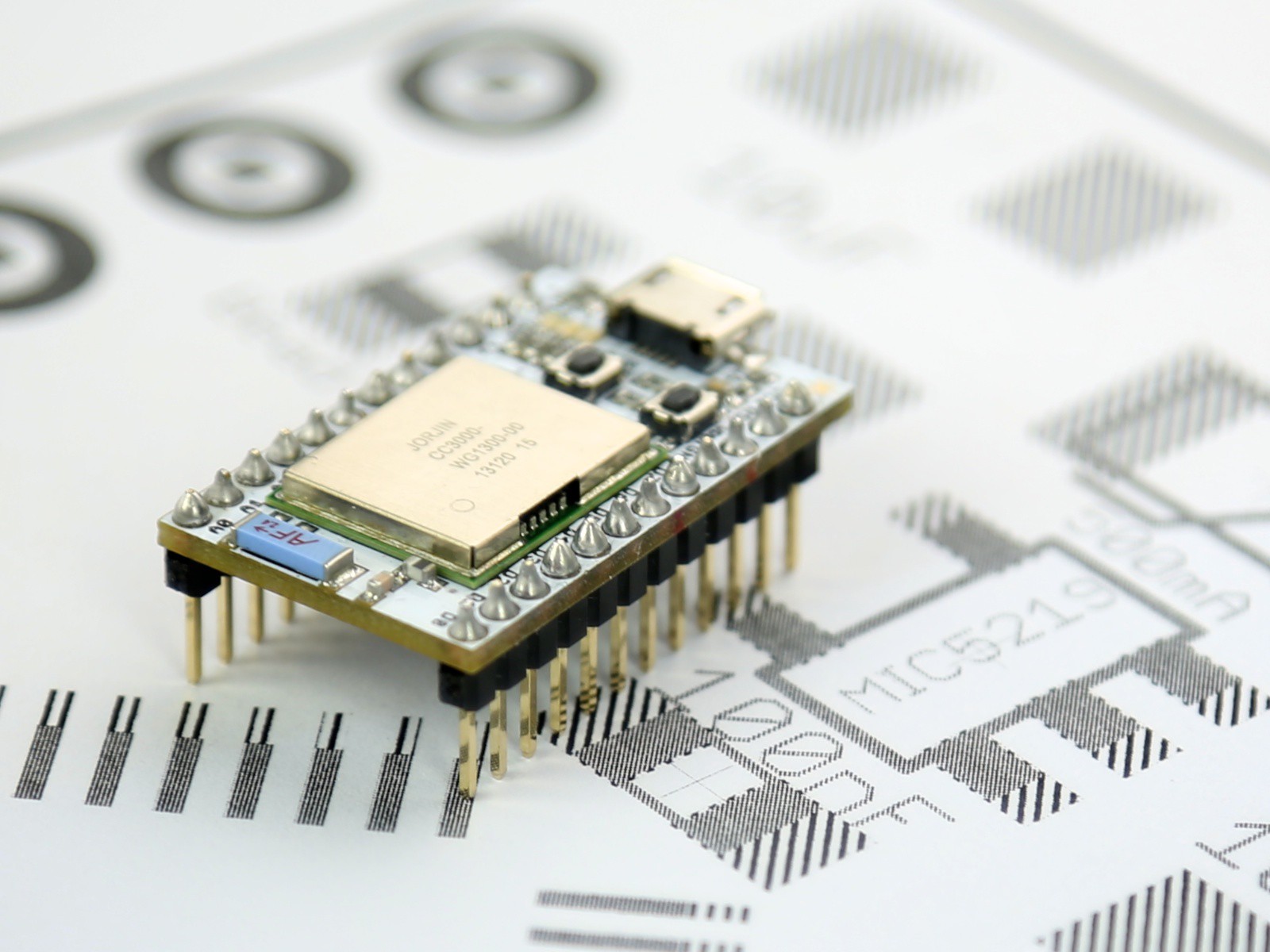

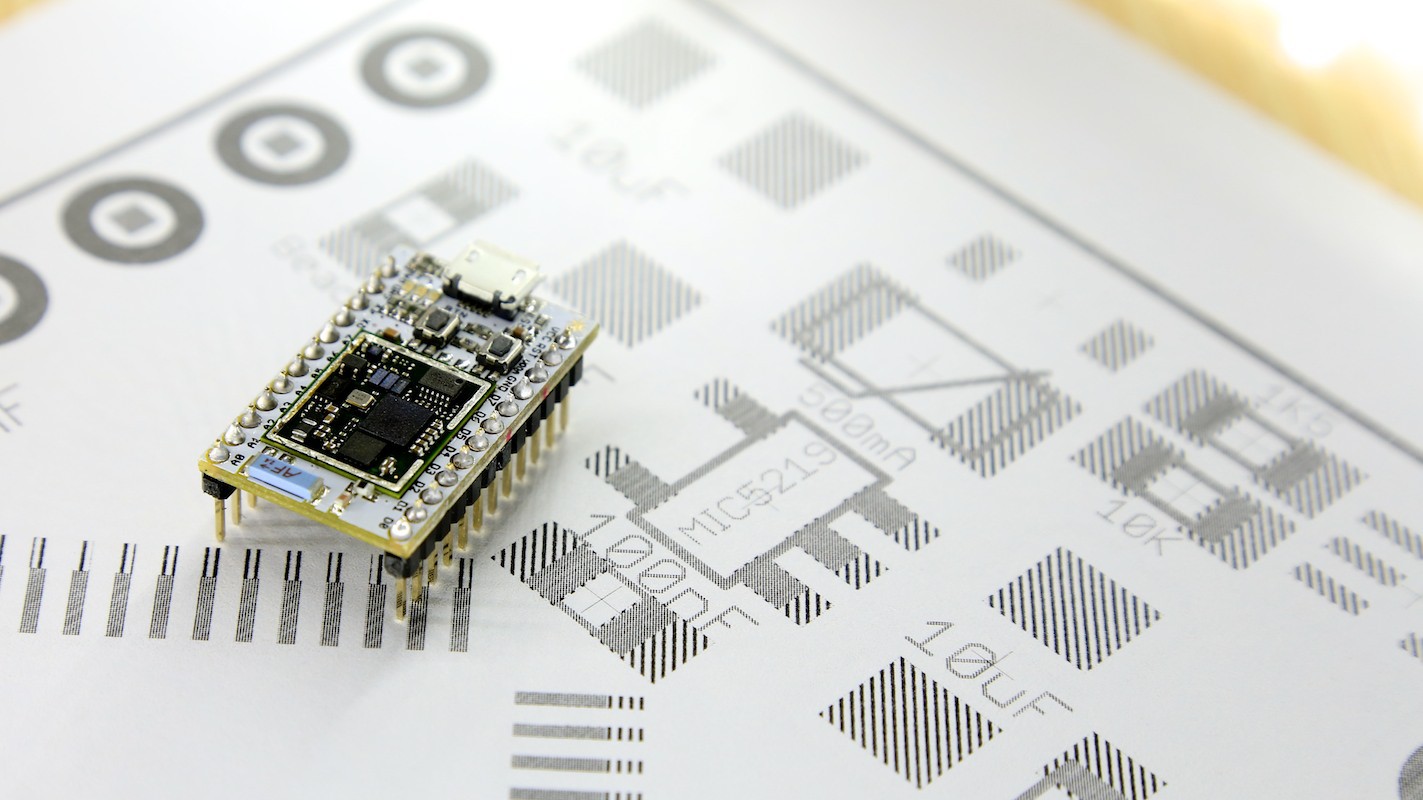

Spark Core and the battle for IoT "platform"

04/26/2014 at 11:41 • 1 comment

In a world of tech fairy tales, there would be nothing wrong with telling the Spark Core story as yet another Kickstarter miracle that surprised everyone, especially its founders, who were subsequently left with only one option - to go ahead and change the world. "People have spoken", the Oracle would announce... "now go and fulfill your destiny". Intentionally or not, Kickstarter has often been instrumental in myth-making and in repackaging impulse reactions to a particular product pitch into the ultimate and irrevocable expression of collective free will. Some people just take the whole crowdfunding thing at face value, while others know how to exploit it for fun & profit.

Spark guys were certainly on the "smart" side of this dilemma. Six months before launching the campaign, they joined HAXLR8R, a Shenzhen-based hardware accelerator program. There they refined the pitch and the product, and identified the market and target group most likely to help take the whole thing off the ground. The market was the Maker community, and the trigger was being a WiFi shield for Arduino that can also operate as an independent device (although these features were presented in reverse order). In the spirit of the "lean" playbook, the final step was playing the Kickstarter card in order to test product-market fit. Learning from the experience of their previous (Spark Socket) campaign, which failed to reach its $250,000 funding target, Spark Core set a relatively modest fundraising goal - only $10,000. And knocked its socks off. Being 50x+ oversubscribed just makes everything look so much better.

The product itself is exactly what you would expect out of a Y Combinator-style accelerator in 2013 - a "Platform for Internet of Things". People love to fund platforms. They are much more lucrative than a product you sell a piece at a time. Platforms are about a steady stream of recurring revenues, long-term user lock-in and high switching costs, network effects, "app" ecosystem, scalable business models... the works. Selling X "units" of a product only yields revenue that is a function of its net margin. Bringing the same number of users onto a "platform", on the other hand, can be worth anything you can dream of. And investors like to dream.

Great news for Spark guys was that, around that time, Texas Instruments came up with a module that's just the tool for the job - the CC3000 wireless network processor, with a price tag in the low $10 range. It was not the first such module on the market, but with features such as Smart Config, it was the easiest to use and apply to most real-world situations straight out of the box. Startups love things that work out of the box - it leaves more time for all the handwaving.

Another factor was that the module had already generated some buzz in the maker community, and it was only a matter of weeks before someone would create a popular Arduino breakout board for it. Spark guys saw an opportunity here and jumped right on it. They were also smart enough to realize that being married to Arduino might not be the smartest business move, and decided to up the price a bit and include an onboard microcontroller of its own, STMicro's STM32F103 Cortex-M3, to drive the CC3000 over SPI. The increase in BOM cost was roughly $5 (the microcontroller itself is in the $3 ballpark), but well worth it - suddenly the product was not limited to a relatively small market anymore (~1M Arduinos sold in the entire world). Now it was a device that could be plugged into "anything". And that gives marketers limitless bragging opportunities.

The question is - how does Spark become the Platform for Internet of Things? Sure, it does provide drop-in, worry-free Internet connectivity to any hardware device, but at a $39 price tag, how does it get designed in? For Makers, handing over this sum for one Spark Core to hook it up to that one Arduino sitting in the drawer is a no-brainer. But will it ever get designed into actual products that are produced in the thousands, if not millions? Even more so because the actual "internet connectivity" is not achieved by Spark Core, but rather by TI's CC3000 chip. Software guys are used to the model where you take a library that does something complex (and that you did not write), wrap it into a simple API and then resell API access at a massive premium. Hardware guys, on the other hand, still care about every single nickel of cost and don't like paying a premium for all the fluff.

Another aspect is the cost of the module itself. Given that it aims at fairly general functionality and uses quite an expensive chip, the cost of components alone (even at significant volumes) ends up in the high $20 ballpark. With everything else on top, this leaves a fairly narrow profit margin for Spark. Also, we know there are similar sub-$10 drop-in WiFi connectivity modules out there which might not be as easy to use, but are not too hard either. To make matters worse, if you were to try to strip down the cost of the board itself (the BOM and all the schematics are open), you would end up with something that's essentially just a TI CC3000 reference design. So what do you need Spark for, and how does it get designed in?

It would be tempting to dismiss Spark guys at this point claiming that it will "never work" as a big business, but this part is exactly why people go to accelerators and things alike and immenselyout there

If the design were closed, the board would never stand a chance of getting designed into anything aimed at mass production and would probably remain in the hobbyist realm forever. At that point it would just become yet another board in the Adafruit / Sparkfun catalog. However, precisely because the board is open, there is a chance of a serious engineer picking up the whole stack as a development platform, thinking he will just reimplement it later on his own and won't have to pay the full price.

And therein lies the catch.

The catch is in the fact that monetization of IoT "platforms" like this simply can't be about the hardware. In that aspect, it will always be a race to the bottom and it is the manufacturer's modules that will end up being designed in at the end, not the board that someoneXivley

That is not to say that using REST APIs to drive IoT devices is not the right way to go. On the contrary - it will allow moving all the complexity and computational requirements back on the server side, which will provide a lot more power to every single IoT device at only a fraction of the cost and allow for previously unimaginable applications. This shift is bound to happen because of the economics of the whole thing - deploying expensive devices as endpoints, where they will idle most of the time, makes no financial sense. Centralization is known to solve this very efficiently and is a direction that everything in this space will take, sooner or later.

And this is the reason everyone is jumping on board wanting to be "the" platform for Internet of Things. Once you're providing services in the 'cloud', things get obfuscated very quickly and you can claim that your "minimum viable product" does magic and get away with it (as people have learned from Spark's sensationalist publicity stunt earlier this year). And it would be crazy for so many software guys out there to miss such a rare opportunity at building a simple Rails+Mongo (or any other combo of choice) API and calling it the "Cloud Platform for Internet of Things".

Spark did an incredible job at writing the playbook on how to get there, but now it's up to everyone else to replicate it and see if they can take it up a notch. It will be a while until any of these platforms start getting built into real products, and until then, the IoT "platform" battle is on.

Aleksandar Bradic

The Hardware Startup Review

Weekly insights in Hardware Startup Universe. Hackaday-style.
