-

Battery Charger Circuit

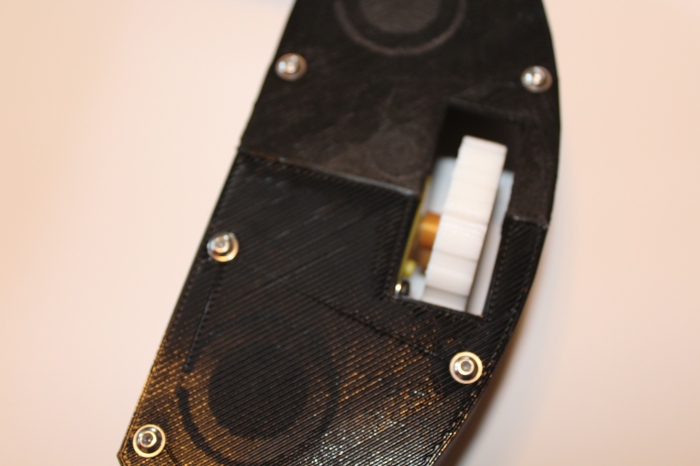

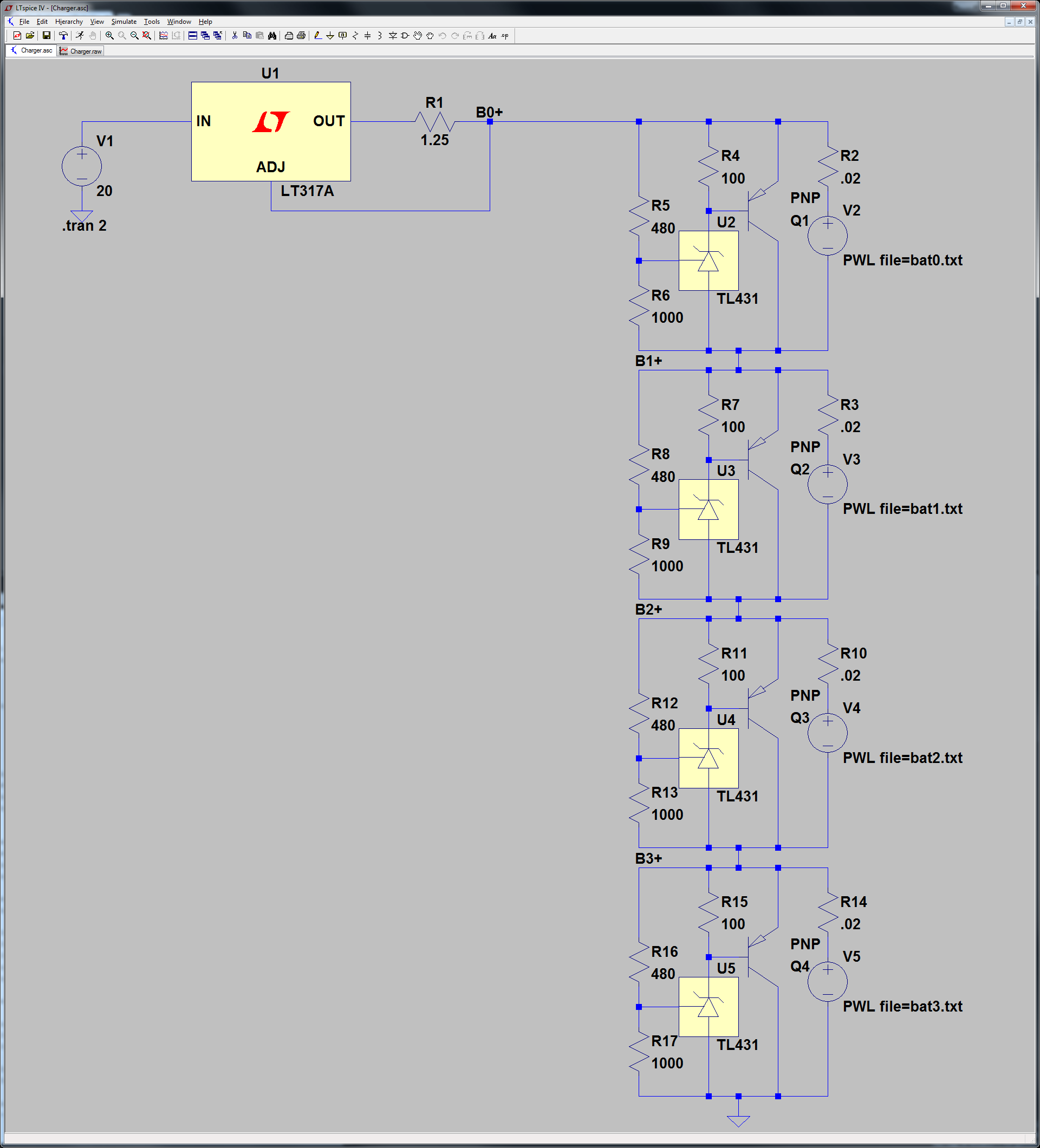

07/15/2016 at 00:29 • 0 comments

Since I decided to use a 4S LiFePO4 battery in my previous post, I’ve been looking online for charger design resources and examples. From what I gathered from several sources, I’ve come up with what I think is a circuit that will work for charging the LiFePO4 batteries. Before I go into the details of the circuit, however, let me briefly cover the basics of LiFePO4 charging.

LiFePO4 Charging Basics

Most of what I learned about LiFePO4 charging comes from this article which goes into great detail about the charging specifics, but which I’ll summarize here for the sake of brevity. LiFePO4 uses what’s called CC/CV, or constant-current/constant-voltage, charging. This means that a constant amount of current is supplied to the battery when charging begins, with the charger increasing or decreasing the supplied voltage to maintain the constant current. Once the battery reaches a certain voltage, 3.65V per cell in the case of LiFePO4 batteries, the constant-current phase stops and the charger holds that voltage constant instead. As the battery gets closer to fully charged, it draws less and less current until it’s full.
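To make the two phases concrete, here is a toy simulation of CC/CV charging for a single cell. The cell model is an assumption made purely for illustration: the open-circuit voltage rises linearly from 3.0V to 3.65V with state of charge, and the capacity, internal resistance, and cutoff current are placeholder values rather than datasheet figures.

```python
def simulate_cc_cv(capacity_ah=1.0, cc_current_a=1.0, v_limit=3.65,
                   r_internal_ohm=0.05, cutoff_a=0.05, dt_s=1.0):
    """Simulate CC/CV charging of one idealized cell.

    Returns the final state of charge (0..1) and a log of
    (phase, terminal_voltage, current) tuples, one per time step.
    """
    soc = 0.0
    phase = "CC"
    log = []
    while True:
        # Assumed toy model: open-circuit voltage is linear in state of charge
        ocv = 3.0 + 0.65 * soc
        if phase == "CC":
            # Constant current: the terminal voltage floats up as the cell fills
            current = cc_current_a
            v_term = ocv + current * r_internal_ohm
            if v_term >= v_limit:
                phase = "CV"
        if phase == "CV":
            # Constant voltage: the current tapers off as the cell approaches full
            v_term = v_limit
            current = (v_limit - ocv) / r_internal_ohm
            if current <= cutoff_a:
                break
        log.append((phase, v_term, current))
        soc += current * dt_s / 3600.0 / capacity_ah
    return soc, log


if __name__ == "__main__":
    final_soc, steps = simulate_cc_cv()
    cc_steps = sum(1 for phase, _, _ in steps if phase == "CC")
    print("CC steps:", cc_steps, "CV steps:", len(steps) - cc_steps)
    print("Final state of charge: %.3f" % final_soc)
```

In this model the charger spends most of its time in the constant-current phase and only tops off the last few percent at constant voltage, which matches the general behaviour described above.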

Constant-Current Circuit

![LM317]()

The constant-current circuit was easy enough. The popular LM317 regulator has a constant-current configuration, shown by Figure 19 in this PDF. Based on the equation in that diagram (output current = 1.25V / R), a 1.25 Ohm power resistor would result in 1A of constant current.
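As a quick check of that arithmetic, the sketch below computes the sense resistor and the power it has to dissipate for a few target currents. It assumes the nominal 1.25V LM317 reference voltage; the function names are only for illustration.

```python
LM317_VREF = 1.25  # nominal voltage the LM317 holds between OUT and ADJ


def sense_resistor_ohms(target_current_a):
    """Resistor that sets the constant current: R = Vref / I."""
    return LM317_VREF / target_current_a


def sense_resistor_power_w(target_current_a):
    """Power dissipated in that resistor: P = Vref * I."""
    return LM317_VREF * target_current_a


if __name__ == "__main__":
    for amps in (0.5, 1.0, 1.5):
        print("%.1f A -> %.2f Ohm, %.2f W" % (
            amps, sense_resistor_ohms(amps), sense_resistor_power_w(amps)))
```

For the 1A case the resistor dissipates 1.25W, which is why a power resistor is needed rather than a standard quarter-watt part.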

Constant-Voltage Circuit

The constant-voltage circuit was a little more complicated to design. The difficulty with supplying a voltage to four batteries simultaneously is that the voltage needs to be evenly distributed to each of the batteries. This is called cell balancing. Without it, one battery may charge faster than the others, resulting in a larger voltage drop across that particular cell. As the battery pack becomes more charged, the individual cells will become more unbalanced, resulting in wasted energy and decreased performance. Once I took this into account, I realized I needed to both keep a consistent voltage across the battery terminals, and redirect current away from the battery when it had completed charging so that the other batteries could finish charging as well.

With this in mind I looked into using Zener diodes. These operate like regular diodes by only allowing current to travel in one direction, up to a certain point. Unlike regular diodes, however, they allow current to travel in reverse when a threshold voltage is exceeded. They’re commonly used in power supplies to prevent voltage spikes. When there’s a spike in voltage that exceeds the threshold, the Zener starts conducting and acts as a short circuit, preventing the voltage spike from affecting the rest of the circuit. The problem with Zeners, as outlined in this post, is that they have a “soft knee” with a slow transition to conductance around the threshold voltage. This results in inaccuracies in the threshold voltage required for the Zener to conduct, making it too inconsistent to use as a voltage limiter.

Luckily that post outlines an alternative. The TL431 acts like a Zener diode, but has a much sharper curve and a programmable voltage threshold. By using a voltage divider with a potentiometer, it’s possible to tune the TL431 circuit to conduct at exactly the desired voltage.
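For a rough idea of the divider values involved, the sketch below uses the standard TL431 relationship, threshold = Vref × (1 + R_top / R_bottom), with the nominal 2.495V reference and ignoring the small reference input current. The 10k bottom resistor is a hypothetical value chosen for illustration; in practice the potentiometer trims out the tolerance.

```python
TL431_VREF = 2.495  # nominal reference voltage in volts


def tl431_threshold(r_top_ohm, r_bottom_ohm):
    """Voltage at which the TL431 starts conducting for a given divider."""
    return TL431_VREF * (1.0 + r_top_ohm / r_bottom_ohm)


def r_top_for_threshold(v_target, r_bottom_ohm):
    """Top resistor needed to hit a target threshold voltage."""
    return r_bottom_ohm * (v_target / TL431_VREF - 1.0)


if __name__ == "__main__":
    r_bottom = 10000.0  # hypothetical value
    r_top = r_top_for_threshold(3.65, r_bottom)
    print("R_top = %.0f Ohm for a 3.65 V threshold" % r_top)
    print("Check: %.3f V" % tl431_threshold(r_top, r_bottom))
```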

![CellCircuit]()

Above is the circuit I implemented. Because the TL431 has a maximum current rating of 100 mA, I opted to use a PNP transistor as an amplifier. When the threshold voltage of 3.65V is exceeded the TL431 conducts, turning on the PNP transistor and providing a short circuit to route current around the battery cell. The complete charger is made up of four of these circuits in series with the high side connected to the constant-current circuit discussed above.
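One thing worth checking for this arrangement is how much power the bypass path has to handle. The sketch below works through the numbers, assuming the full 1A charge current from the constant-current stage is routed around a cell sitting at 3.65V. It is simple arithmetic, not a thermal design.

```python
CELL_LIMIT_V = 3.65     # per-cell threshold from the text
CHARGE_CURRENT_A = 1.0  # constant-current setting from the LM317 stage
CELLS_IN_SERIES = 4


def bypass_power_w(cell_voltage_v=CELL_LIMIT_V, current_a=CHARGE_CURRENT_A):
    """Power dissipated when one cell's entire charge current is bypassed."""
    return cell_voltage_v * current_a


def pack_limit_v(cells=CELLS_IN_SERIES, cell_voltage_v=CELL_LIMIT_V):
    """Voltage across the whole series string when every cell is at its limit."""
    return cells * cell_voltage_v


if __name__ == "__main__":
    print("Per-cell bypass dissipation: %.2f W" % bypass_power_w())
    print("Pack voltage at full charge: %.1f V" % pack_limit_v())
```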

Improvements

Once I’ve verified that this circuit works and properly charges a battery, there are a few things I’d like to eventually go back and add to this circuit. The first is an undervoltage detection circuit. Lithium rechargeable batteries become useless if they’re ever fully discharged. This full discharge can be prevented by cutting off the power from the batteries before they reach the absolute minimum cell voltage. This is ~2V in the case of LiFePO4 cells. A circuit similar to the one for maximum voltage detection could be used to check for the minimum voltage and disconnect the cells, preventing permanent damage to the battery pack.

I’d also like to add an output for the total battery pack voltage so a microcontroller can measure and detect the state of charge for the pack. This would involve using a voltage divider to provide a divided down voltage within the measurement range of standard microcontrollers.
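A minimal sketch of that measurement is below. All of the values are assumptions for illustration: a 10-bit ADC with a 5V reference (as on a typical Arduino) and a hypothetical 20k/10k divider, which keeps a fully charged 14.6V pack just under the 5V input limit.

```python
ADC_REF_V = 5.0          # assumed ADC reference voltage
ADC_MAX_COUNTS = 1023    # assumed 10-bit converter
R_TOP_OHM = 20000.0      # hypothetical divider resistor (pack side)
R_BOTTOM_OHM = 10000.0   # hypothetical divider resistor (ground side)


def divided_voltage(pack_voltage_v):
    """Voltage seen by the ADC pin for a given pack voltage."""
    return pack_voltage_v * R_BOTTOM_OHM / (R_TOP_OHM + R_BOTTOM_OHM)


def adc_counts_to_pack_voltage(counts):
    """Convert a raw ADC reading back into the pack voltage."""
    pin_voltage = counts / ADC_MAX_COUNTS * ADC_REF_V
    return pin_voltage * (R_TOP_OHM + R_BOTTOM_OHM) / R_BOTTOM_OHM


if __name__ == "__main__":
    print("Pin voltage at 14.6 V pack: %.2f V" % divided_voltage(14.6))
    print("Full-scale reading corresponds to %.1f V" %
          adc_counts_to_pack_voltage(ADC_MAX_COUNTS))
```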

Next Steps

I drew up the circuit schematic described above in LTspice, a free circuit simulation tool. Despite LiFePO4 batteries being safer than LiPos, I’m still erring on the side of caution and will check my work every step of the way. Simulation seems like the next best thing to actually testing a physical circuit. My next update will outline my simulation parameters and the results I saw.

-

Long Term Plans

07/12/2016 at 00:47 • 0 comments

![ProductHierarchy]()

With the Automation round beginning today, I decided to sketch out some of the long term plans I have for OpenADR. All my updates so far have referenced it as a robot vacuum, with a navigation module and vacuum module that have to be connected together.

The way I see it, though, the navigation module will be the core focus of the platform with the modules being relatively dumb plug-ins that conform to a standard interface. This makes it easy for anyone to design a simple module. It's also better from a cost perspective, as most of the cost will go towards the complex navigation module and the simple plug-ins can be cheap. The navigation module will also do all of the power conversion and will supply several power rails to be used by the connected modules.

The modules that I'd like to design for the Hackaday Prize, if I have time, are the vacuum, mop, and wipe. The vacuum module would provide the same functionality as a Roomba or Neato, the mop would be somewhere between a Scooba and Braava Jet, and the wipe would just be a reusable microfiber pad that would pick up dust and spills.

At some point I'd also like to expand OpenADR to have outdoor, domestic robots as well. It would involve designing a new, bigger, more robust, and higher power navigation unit to handle the tougher requirements of yard work. From what I can tell the current robotic mowers are sorely lacking, so that would be the primary focus, but I'd eventually like to expand to leaf collection and snow blowing/shoveling modules due to the lack of current offerings in both of those spaces.

Due to limited time and resources the indoor robotics for OpenADR will be my focus for the foreseeable future, but I'm thinking ahead and have a lot of plans in mind!

-

Data Visualization

07/02/2016 at 12:11 • 0 comments

As the navigational algorithms used by the robot have gotten more complex, I've noticed several occasions where I don't understand the logical paths the robot is taking. Since the design is only going to get more complex from here on out, I decided to implement real time data visualization to allow me to see what the robot "sees" and view what actions it's taking in response. With the Raspberry Pi now on board, it's incredibly simple to implement a web page that hosts this information in an easy to use format. The code required to enable data visualization is broken up into four parts: the firmware part to send the data, the serial server to read the data from the Arduino, the web server to host the data visualization web page, and the actual web page that displays the data.

Firmware

```cpp
// Prints "velocity=-255,255"
Serial.print("velocity=");
Serial.print(-FULL_SPEED);
Serial.print(",");
Serial.println(FULL_SPEED);

// Prints "point=90,25" which means 25cm at the angle of 90 degrees
Serial.print("point=");
Serial.print(AngleMap[i]);
Serial.print(",");
Serial.println(Distances[i]);
```

The firmware level was the simplest part. I only added print statements to the code when the robot changes speed or reads the ultrasonic sensors. It prints out key value pairs over the USB serial port to the Raspberry Pi.

Serial Server

```python
from PIL import Image, ImageDraw
import math
import time
import random
import serial
import json

# The scaling of the image is 1cm:1px

# JSON file to output to
jsonFile = "data.json"

# The graphical center of the robot in the image
centerPoint = (415, 415)

# Width of the robot in cm/px
robotWidth = 30

# Radius of the wheels on the robot
wheelRadius = 12.8

# Minimum sensing distance
minSenseDistance = 2

# Maximum sensing distance
maxSenseDistance = 400

# The distance from the robot to display the turn vector at
turnVectorDistance = 5

# Initialize global data variables
points = {}
velocityVector = [0, 0]

# Serial port to use
serialPort = "/dev/ttyACM0"

robotData = {}

ser = serial.Serial(serialPort, 115200)

# Parses a serial line from the robot and extracts the
# relevant information
def parseLine(line):
    status = line.split('=')
    statusType = status[0]

    # Parse the obstacle location
    if statusType == "point":
        coordinates = status[1].split(',')
        points[int(coordinates[0])] = int(coordinates[1])
    # Parse the velocity of the robot (x, y)
    elif statusType == "velocity":
        velocities = status[1].split(',')
        velocityVector[0] = int(velocities[0])
        velocityVector[1] = int(velocities[1])

def main():
    # Three possible test print files to simulate the serial link
    # to the robot
    #testPrint = open("TEST_straight.txt")
    #testPrint = open("TEST_tightTurn.txt")
    #testPrint = open("TEST_looseTurn.txt")

    time.sleep(1)
    ser.write('1')

    #for line in testPrint:
    while True:
        # Flush the input so we get the latest data and don't fall behind
        #ser.flushInput()
        line = ser.readline()
        parseLine(line.strip())
        robotData["points"] = points
        robotData["velocities"] = velocityVector
        #print json.dumps(robotData)
        jsonFilePointer = open(jsonFile, 'w')
        jsonFilePointer.write(json.dumps(robotData))
        jsonFilePointer.close()
        #print points

if __name__ == '__main__':
    try:
        main()
    except KeyboardInterrupt:
        print "Quitting"
        ser.write('0')
```

The Raspberry Pi then runs a serial server which processes these key value pairs and converts them into a dictionary representing the robot's left motor and right motor speeds and the obstacles viewed by each ultrasonic sensor. This dictionary is then converted into the JSON format and written out to a file.

```json
{"velocities": [-255, -255], "points": {"0": 10, "90": 200, "180": 15, "45": 15, "135": 413}}
```

This is an example of what the resulting JSON data looks like.

Web Server

```python
import SimpleHTTPServer
import SocketServer

PORT = 80

Handler = SimpleHTTPServer.SimpleHTTPRequestHandler

httpd = SocketServer.TCPServer(("", PORT), Handler)

print "serving at port", PORT
httpd.serve_forever()
```

Using a Python HTTP server example, the Raspberry Pi runs a web server which hosts the generated JSON file and the web page that’s used for viewing the data.

Web Page

```html
<!doctype html>
<html>
<head>
  <script type="text/javascript" src="http://code.jquery.com/jquery.min.js"></script>
  <style>
    body{ background-color: white; }
    canvas{border:1px solid black;}
  </style>
  <script>
    $(function() {
      var myTimer = setInterval(loop, 1000);
      var xhr = new XMLHttpRequest();
      var c = document.getElementById("displayCanvas");
      var ctx = c.getContext("2d");

      // The graphical center of the robot in the image
      var centerPoint = [415, 415];

      // Width of the robot in cm/px
      var robotWidth = 30;

      // Radius of the wheels on the robot
      wheelRadius = 12.8;

      // Minimum sensing distance
      var minSenseDistance = 2;

      // Maximum sensing distance
      var maxSenseDistance = 400;

      // The distance from the robot to display the turn vector at
      var turnVectorDistance = 5

      // Update the image
      function loop() {
        ctx.clearRect(0, 0, c.width, c.height);

        // Set global stroke properties
        ctx.lineWidth = 1;

        // Draw the robot
        ctx.strokeStyle="#000000";
        ctx.beginPath();
        ctx.arc(centerPoint[0], centerPoint[1], (robotWidth / 2), 0, 2*Math.PI);
        ctx.stroke();

        // Draw the robot's minimum sensing distance as a circle
        ctx.strokeStyle="#00FF00";
        ctx.beginPath();
        ctx.arc(centerPoint[0], centerPoint[1], ((robotWidth / 2) + minSenseDistance), 0, 2*Math.PI);
        ctx.stroke();

        // Draw the robot's maximum sensing distance as a circle
        ctx.strokeStyle="#FFA500";
        ctx.beginPath();
        ctx.arc(centerPoint[0], centerPoint[1], ((robotWidth / 2) + maxSenseDistance), 0, 2*Math.PI);
        ctx.stroke();

        xhr.onreadystatechange = processJSON;
        xhr.open("GET", "data.json?" + new Date().getTime(), true);
        xhr.send();
        //var robotData = JSON.parse(text);
      }

      function processJSON() {
        if (xhr.readyState == 4) {
          var robotData = JSON.parse(xhr.responseText);
          drawVectors(robotData);
          drawPoints(robotData);
        }
      }

      // Calculate the turn radius of the robot from the two velocities
      function calculateTurnRadius(leftVector, rightVector) {
        var slope = ((rightVector - leftVector) / 10.0) / (2.0 * wheelRadius);
        var yOffset = ((Math.max(leftVector, rightVector) + Math.min(leftVector, rightVector)) / 10.0) / 2.0;
        var xOffset = 0;
        if (slope != 0) {
          xOffset = Math.round((-yOffset) / slope);
        }
        return Math.abs(xOffset);
      }

      // Calculate the angle required to display a turn vector with
      // a length that matched the magnitude of the motion
      function calculateTurnLength(turnRadius, vectorMagnitude) {
        var circumference = 2.0 * Math.PI * turnRadius;
        return Math.abs((vectorMagnitude / circumference) * (2 * Math.PI));
      }

      function drawVectors(robotData) {
        leftVector = robotData.velocities[0];
        rightVector = robotData.velocities[1];

        // Calculate the magnitude of the velocity vector in pixels
        // The 2.5 dividend was determined arbitrarily to provide an
        // easy to read line
        var vectorMagnitude = (((leftVector + rightVector) / 2.0) / 2.5);

        ctx.strokeStyle="#FF00FF";
        ctx.beginPath();
        if (leftVector == rightVector) {
          var vectorEndY = centerPoint[1] - vectorMagnitude;
          ctx.moveTo(centerPoint[0], centerPoint[1]);
          ctx.lineTo(centerPoint[0], vectorEndY);
        }
        else {
          var turnRadius = calculateTurnRadius(leftVector, rightVector);
          if (turnRadius == 0) {
            var outsideRadius = turnVectorDistance + (robotWidth / 2.0);
            var rotationMagnitude = (((Math.abs(leftVector) + Math.abs(rightVector)) / 2.0) / 2.5);
            var turnAngle = calculateTurnLength(outsideRadius, rotationMagnitude);
            if (leftVector < rightVector) {
              ctx.arc(centerPoint[0], centerPoint[1], outsideRadius, (1.5 * Math.PI) - turnAngle, (1.5 * Math.PI));
            }
            if (leftVector > rightVector) {
              ctx.arc(centerPoint[0], centerPoint[1], outsideRadius, (1.5 * Math.PI), (1.5 * Math.PI) + turnAngle);
            }
          }
          else {
            var turnAngle = 0;
            if (vectorMagnitude != 0) {
              turnAngle = calculateTurnLength(turnRadius, vectorMagnitude);
            }

            // Turning forward and left
            if ((leftVector < rightVector) && (leftVector + rightVector > 0)) {
              turnVectorCenterX = centerPoint[0] - turnRadius;
              turnVectorCenterY = centerPoint[1];
              ctx.arc(turnVectorCenterX, turnVectorCenterY, turnRadius, -turnAngle, 0);
            }
            // Turning backwards and left
            else if ((leftVector > rightVector) && (leftVector + rightVector < 0)) {
              turnVectorCenterX = centerPoint[0] - turnRadius;
              turnVectorCenterY = centerPoint[1];
              ctx.arc(turnVectorCenterX, turnVectorCenterY, turnRadius, 0, turnAngle);
            }
            // Turning forwards and right
            else if ((leftVector > rightVector) && (leftVector + rightVector > 0)) {
              turnVectorCenterX = centerPoint[0] + turnRadius;
              turnVectorCenterY = centerPoint[1];
              ctx.arc(turnVectorCenterX, turnVectorCenterY, turnRadius, Math.PI, Math.PI + turnAngle);
            }
            // Turning backwards and right
            else if ((leftVector < rightVector) && (leftVector + rightVector < 0)) {
              turnVectorCenterX = centerPoint[0] + turnRadius;
              turnVectorCenterY = centerPoint[1];
              ctx.arc(turnVectorCenterX, turnVectorCenterY, turnRadius, Math.PI - turnAngle, Math.PI);
            }
          }
        }
        ctx.stroke();
      }

      function drawPoints(robotData) {
        for (var key in robotData.points) {
          var angle = Number(key);
          var rDistance = robotData.points[angle];
          var cosValue = Math.cos(angle * (Math.PI / 180.0));
          var sinValue = Math.sin(angle * (Math.PI / 180.0));
          var xOffset = ((robotWidth / 2) + rDistance) * cosValue;
          var yOffset = ((robotWidth / 2) + rDistance) * sinValue;
          var xDist = centerPoint[0] + Math.round(xOffset);
          var yDist = centerPoint[1] - Math.round(yOffset);
          ctx.fillStyle = "#FF0000";
          ctx.fillRect((xDist - 1), (yDist - 1), 2, 2);
        }
      }
    });

    function changeNavigationType() {
      var navSelect = document.getElementById("Navigation Type");
      console.log(navSelect.value);
      var http = new XMLHttpRequest();
      var url = "http://ee-kelliott:8000/";
      var params = "navType=" + navSelect.value;
      http.open("GET", url, true);
      http.onreadystatechange = function() {
        //Call a function when the state changes.
        if(http.readyState == 4 && http.status == 200) {
          alert(http.responseText);
        }
      }
      http.send(params);
    }
  </script>
</head>
<body>
  <div>
    <canvas id="displayCanvas" width="830" height="450"></canvas>
  </div>
  <select id="Navigation Type" onchange="changeNavigationType()">
    <option value="None">None</option>
    <option value="Random">Random</option>
    <option value="Grid">Grid</option>
    <option value="Manual">Manual</option>
  </select>
</body>
</html>
```

The visualization web page uses an HTML canvas and JavaScript to display the data presented in the JSON file. The velocity and direction of the robot are shown by a curved vector, the length representing the speed of the robot and the curvature representing the radius of the robot’s turn. The obstacles seen by the five ultrasonic sensors are represented on the canvas by five points.

![DataImage]()

The picture above is the resulting web page without any JSON data.

Here’s a video of the robot running alongside a video of the data that’s displayed. As you can see, the data visualization isn’t exactly real time. Due to the communication delays over the network some of the robot’s decisions and sensor readings get lost.

Further Steps

Having the main structure of the data visualization set up provides a powerful interface to the robot that can be used to easily add more functionality in the future. I’d like to add more data items as I add more sensors to the robot such as the floor color sensor, an accelerometer, a compass, etc. At some point I also plan on creating a way to pass data to the robot from the web page, providing an interface to control the robot remotely.

I’d also like to improve the existing data visualization interface to minimize the amount of data lost due to network and processing delays. One way I could do this would be by getting rid of the JSON file and streaming the data directly to the web page, getting rid of unnecessary file IO delays. The Arduino also prints out the ultrasonic sensor data one sensor at a time, requiring the serial server to read five lines from the serial port to get the reading of all the sensors. Compressing all sensor data to a single line would also help improve the speed.
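As a sketch of what the single-line idea could look like on the Raspberry Pi side, the parser below reads all five readings from one line. The line format (`points=angle:distance,...`) is purely hypothetical; the firmware doesn't print anything like this yet.

```python
def parse_points_line(line):
    """Parse a hypothetical combined line such as
    'points=0:10,45:15,90:200,135:413,180:15' into {angle: distance}."""
    key, _, payload = line.strip().partition("=")
    if key != "points" or not payload:
        raise ValueError("not a combined points line: %r" % line)
    readings = {}
    for pair in payload.split(","):
        angle, distance = pair.split(":")
        readings[int(angle)] = int(distance)
    return readings


if __name__ == "__main__":
    print(parse_points_line("points=0:10,45:15,90:200,135:413,180:15"))
```

With a format like this the serial server would need one `readline()` per sensor sweep instead of five.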

Another useful feature would be to record the sensor data rather than just having it be visible from the web page. The ability to store all of the written JSON files and replay the run from the robot’s perspective would make reviewing the data multiple times possible.
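One simple way this could work is to append each snapshot to a log with a timestamp, one JSON object per line, and read them back in order later. The sketch below is an assumption about how that might look, not something the serial server does today; the function names and the `t`/`data` field names are made up for illustration.

```python
import io
import json
import time


def record_frame(log_file, robot_data, timestamp=None):
    """Append one snapshot of the robot data as a single JSON line."""
    if timestamp is None:
        timestamp = time.time()
    log_file.write(json.dumps({"t": timestamp, "data": robot_data}) + "\n")


def replay_frames(log_file):
    """Yield (timestamp, robot_data) pairs in the order they were recorded."""
    for line in log_file:
        line = line.strip()
        if line:
            frame = json.loads(line)
            yield frame["t"], frame["data"]


if __name__ == "__main__":
    # An in-memory buffer stands in for a real log file here
    log = io.StringIO()
    record_frame(log, {"velocities": [255, 255], "points": {"90": 25}})
    record_frame(log, {"velocities": [-255, 255], "points": {"90": 12}})
    log.seek(0)
    for stamp, data in replay_frames(log):
        print(stamp, data)
```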

-

Much Ado About Batteries

06/18/2016 at 23:01 • 2 comments

This post is going to be less of a project update, and more of a stream of consciousness monologue about one of the aspects of OpenADR that’s given me a lot of trouble. Power electronics isn’t my strong suit and the power requirements for the robot are giving me pause. Additionally, in my last post I wrote about some of the difficulties I encountered when trying to draw more than a few hundred milliamps from a USB battery pack. Listed below are the ideal features for the Nav unit’s power supply and the reason behind each. I will also be comparing separate options for batteries and power systems to see which system best fits the requirements.

Requirements

- Rechargeable – This one should be obvious, all of the current robot vacuums on the market use rechargeable batteries. It’d be too inconvenient and expensive for the user to have to constantly replace batteries.

- In-circuit charging – This just means that the batteries can be charged inside of the robot without having to take it out and plug it in. The big robot vacuum models like Neato and Roomba both automatically find their way back to a docking station and start the charging process themselves. While auto-docking isn’t high on my list of priorities, I’d still like to make it possible to do in the future. It would also be much more convenient for the user to not have to manually take the battery out of the robot to charge it.

- Light weight – The lighter the robot is, the less power it needs to move. Similarly, high energy density, or the amount of energy a battery stores for its weight, is also important.

- Multiple voltage rails – As stated in my last post I’m powering both the robot motors and logic boards from the same 5V power source. This is part of the reason I had so many issues with the motors causing the Raspberry Pi to reset. More robust systems usually separate the motors and logic so that they use separate power supplies, thereby preventing electrical noise caused by the motors from affecting the digital logic. Due to the high power electronics that will be necessary on OpenADR modules, like the squirrel cage fan I’ll be using for the vacuum, I’m also planning on having a 12V power supply that will be connected between the Nav unit and any modules. Therefore my current design will require three power supplies in total: a 5V supply for the control boards and sensors, a 6V supply for the motors, and a 12V supply for the module interface. Each of these different voltage rails can be generated from a single battery by using DC-DC converters, but each one will add complexity and cost.

- High Power – With a guesstimated 1A at 5V for the logic and sensors, 0.5A at 6V for the drive motors, and 2A at 12V for the module power, the battery is going to need to be capable of supplying upwards of 30W of power to the robot.

- Convenient – The battery used for the robot needs to be easy to use. OpenADR should be the ultimate convenience system, taking away the need to perform tedious tasks. This convenience should also apply to the battery. Even if the battery isn’t capable of being charged in-circuit, it should at least be easily accessible and easy to charge.

- Price – OpenADR is meant to be an affordable alternative to the expensive domestic robots on the market today. As such, the battery and battery charging system should be affordable.

- Availability – In a perfect world, every component of the robot would be easily available from eBay, Sparkfun, or Adafruit. It would also be nice if the battery management and charging system used existing parts without needing to design a custom solution.

- Safety – Most importantly the robot needs to be safe! With the hoverboard fiasco firmly in mind, the battery system needs to be safe with no chance of fires or other unsavory behaviors. This also relates to the availability requirement. An already available, tried and true system would be preferable to an untested, custom one.
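The power figure in the High Power requirement can be checked by adding up the three rails. The numbers below are the guesstimates from the list above; the dictionary layout and function name are just for illustration.

```python
# Guesstimated rail loads from the requirements: (voltage in V, current in A)
RAILS = {
    "logic and sensors": (5.0, 1.0),
    "drive motors": (6.0, 0.5),
    "module power": (12.0, 2.0),
}


def total_power_w(rails):
    """Sum of voltage times current over every rail."""
    return sum(volts * amps for volts, amps in rails.values())


if __name__ == "__main__":
    for name, (volts, amps) in RAILS.items():
        print("%-18s %5.1f W" % (name, volts * amps))
    print("%-18s %5.1f W" % ("total", total_power_w(RAILS)))
```

That works out to 5W + 3W + 24W = 32W at the loads, before any converter losses are taken into account.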

Metrics

The rechargeability and safety requirements, as well as the need for three separate power supplies, are really non-negotiable factors that I can’t compromise on. I also have no control over whether in-circuit charging is feasible, how convenient a system is, how heavy a system is, and the availability of parts, so while I’ll provide a brief discussion of each factor, they will not be the main factors I use for comparison. I will instead focus on power, or rather the efficiency of the power delivery to the three power supplies, and price.

Battery Chemistry

There were three types of battery chemistry that I considered for my comparison. The first is LiPo or Li-Ion batteries which currently have the highest energy density in the battery market, making them a good candidate for a light weight robot. The latest Neato and Roomba robots also use Li-Ion batteries. The big drawback for these is safety. As demonstrated by the hoverboard fires, if they’re not properly handled or charged they can be explosive. This almost completely rules out the option to do a custom charging solution in my mind. Luckily, there are plenty of options for single cell LiPo/Li-Ion chargers available from both SparkFun and Adafruit.

Second is LiFePO4 batteries. While not as popular as LiPos and Li-Ions due to their lower energy density, they’re much safer. A battery can even be punctured without catching fire. Other than that, they’re very similar to LiPo/Li-Ion batteries.

Lastly is NiMH batteries. They were the industry standard for most robots for a while, and are used in all but the latest Roomba and Neato models. They have recently fallen out of favor due to a lower energy density than both types of lithium batteries. I haven’t included any NiMH systems in my comparisons because they don’t provide any significant advantages over the other two chemistries.

Systems

![1S Li-Ion System]()

1S Li-Ion – A single cell Li-Ion would probably be the easiest option as far as the battery goes. Single cell Li-Ion batteries with protection circuitry are used extensively in the hobby community. Because of this they’re easy to obtain with high capacity cells and simple charging electronics available. This would make in-circuit charging possible. The trade-off for simplicity of the battery is complexity in the DC-DC conversion circuits. A single cell Li-Ion only has a cell voltage of 3.7V, making it necessary to convert the voltage for all three power supplies. Because the lower voltage also means lower power batteries, several would need to be paralleled to achieve the same amount of power as the other battery configurations. Simple boost converters could supply power to both the logic and motor power supplies. The 12V rail would require several step-up converters to supply the requisite amount of current at the higher voltage. Luckily these modules are cheap and easy to find on eBay.

![3S LiPo System]()

3S LiPo – Another option would be using a 3 cell LiPo. These batteries are widely used for quadcopters and other hobby RC vehicles, making them easy to find. Also, because a three cell LiPo results in a battery voltage of 11.1V, no voltage conversion would be necessary when supplying the 12V power supply. Only two step-down regulators would be needed, supplying power to the logic and motor power supplies. These regulators are also widely available on eBay and are just as cheap as the step-up regulators. The downside is that, as I’ve mentioned before, LiPos are inherently temperamental and can be dangerous. I also had trouble finding high quality charging circuitry for multi-cell batteries that could be used to charge the battery in-circuit, meaning the user would have to remove the battery for charging.

![4S LiFePO4 System]()

4S LiFePO4 – Lastly is the four cell LiFePO4 battery system. It has all the same advantages as the three cell LiPo configuration, but with the added safety of the LiFePO4 chemistry. Also, because the four cells result in a battery voltage of 12.8V-13.2V, it would be possible to put a diode on the positive battery terminal, adding the ability to safely add several batteries in parallel, and still stay above the desired 12V module power supply voltage. LiFePO4 batteries are also easier to charge and don’t have the same exploding issues when charging as LiPo batteries, so it would be possible to design custom charging circuitry to enable in-circuit charging for the robot. The only downside, as far as LiFePO4 batteries go, is with availability. Because this chemistry isn’t as widely used as LiPos and Li-Ions there are fewer options when it comes to finding batteries to use.

Power Efficiency Comparison

To further examine the three options I listed above, I compared the power delivery systems of each and roughly calculated their efficiency. Below are the Google Sheets calculations. I assumed the nominal voltage of the batteries in my calculations and that the total power capacity of each battery was the same. From there I guesstimated the power required by the robot, the current draw on the batteries, and the power efficiency of the whole system. I also used this power efficiency to calculate the runtime of the robot lost due to the inefficiency. The efficiencies I used for the DC-DC converters were estimated using the data and voltage-efficiency curves listed in the datasheets.

Due to the high currents necessary for the single cell Li-Ion system, I assumed that there would be an always-on P-Channel MOSFET after each battery, effectively acting as a diode with a lower voltage drop and allowing multiple batteries to be added together in parallel. I also assumed a diode would be placed after the 12V boost regulators, due to the fact that multiple would be needed to supply the desired 1.5A.

Here I assumed that a diode would be connected between the battery and 12V bus power, allowing for parallelization of batteries and bringing the voltage closer to the desired 12V.

Conclusion

Looking at the facts and power efficiencies I listed previously, the 4S LiFePO4 battery is looking like the most attractive option. While its efficiency would be slightly lower than the 3S LiPo’s, I think the added safety and the possibility of in-circuit charging make it worth it. While I’m not sure if OpenADR will ultimately end up using LiFePO4 batteries, that’s the path I’m going to explore for now. Of course, power and batteries aren’t really in my wheelhouse, so comments and suggestions are welcome.

-

Connector to Raspberry Pi

06/12/2016 at 18:23 • 0 comments

![2016-06-11 23.25.45]()

The most frustrating part of developing the wall following algorithm from my last post was constantly moving back and forth between my office, to tweak and load firmware, and the test area (the kitchen hallway). To solve this problem, and to streamline development overall, I decided to add a Raspberry Pi to the robot. Specifically, I'm using a Raspberry Pi 2 that I had lying around, but I expect to switch to a Pi Zero once I get the code and design in a more final state. By installing the Arduino IDE and enabling SSH, I'm now able to access and edit code wirelessly.

Having a full-blown Linux computer on the robot also adds plenty of opportunity for new features. Currently I'm planning on adding camera support via the official camera module and a web server to serve a web page for manual control, settings configuration, and data visualization.

While I expected hooking up the Raspberry Pi to be as easy as connecting it to the Arduino over USB, this turned out not to be the case. Having a barebones SBC revealed a few problems with my wiring and code.

The first issue I noticed was the Arduino resetting when the motors were running, but this was easily attributable to the current limit on the Raspberry Pi USB ports. A USB 2.0 port, like those on the Pi, can only supply up to 500mA of current. Motors similar to the ones I'm using are specced at 250mA each, so having both motors accelerating suddenly to full speed caused a massive voltage drop which reset the Arduino. This was easily fixed by connecting the motor supply of the motor controller to the 5V output on the Raspberry Pi GPIO header. Additionally, setting the max_usb_current flag in /boot/config.txt allows up to 2A on the 5V line, minus what the Pi uses. 2A should be more than sufficient once the motors, Arduino, and other sensors are hooked up.

The next issue I encountered was much more nefarious. With the motors hooked up directly to the 5V on the Pi, changing from full-speed forward to full-speed backward caused everything to reset! I don't have an oscilloscope to confirm this, but my suspicion was that there was so much noise placed on the power supply by the motors that both boards were resetting. This is where one of the differences between the Raspberry Pi and a full computer is most evident. On a regular PC there's plenty of space to add robust power circuitry and filters to prevent noise on the 5V USB lines, but because space on the Pi is at a premium the minimal filtering on the 5V bus wasn't sufficient to remove the noise caused by the motors. When I originally wrote the motor controller library I didn't have motor noise in mind and instead just set the speed of the motor instantly. In the case of both motors switching from full-speed forward to full-speed backward, the sudden reversal causes a huge spike in the power supply, as explained in this app note. I was able to eventually fix this by rewriting the library to include some acceleration and deceleration. By limiting the acceleration on the motors, the noise was reduced enough that the boards no longer reset.
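The acceleration limiting described above can be sketched as a simple slew-rate limiter. This is an illustration rather than the actual library change: the function name `rampToward`, the signed speed convention (negative for backward, positive for forward), and the step size are all assumptions.

```cpp
#include <cstdint>

// Move `current` toward `target` by at most `maxStep` per call.
// Speeds are signed here: -255 is full-speed backward, +255 is full-speed
// forward. Calling this once per control loop turns an instant reversal
// into a gradual ramp.
int16_t rampToward(int16_t current, int16_t target, int16_t maxStep) {
    if (target > current + maxStep) {
        return static_cast<int16_t>(current + maxStep);
    }
    if (target < current - maxStep) {
        return static_cast<int16_t>(current - maxStep);
    }
    return target;  // Close enough to reach the target in this step
}
```

With a step of 32, going from full-speed forward (255) to full-speed backward (-255) takes 16 calls instead of a single abrupt change. The step size and the time between calls together set how hard the motors are allowed to accelerate.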

While setting up the Raspberry Pi took longer than I'd hoped due to power supply problems, I'm glad I got a chance to learn the limits on my design and will keep bypass capacitors in mind when I design a permanent board. I'm also excited for the possibilities having a Raspberry Pi on board provides and look forward to adding more advanced features to OpenADR!

-

Wall Following

05/30/2016 at 03:56 • 0 comments

After several different attempts at wall following algorithms and a lot of tweaking, I’ve finally settled on a basic algorithm that mostly works and allows the OpenADR navigation unit to roughly follow a wall. The test run is below:

Test Run

Algorithm

I used a very basic subsumption architecture as the basis for my wall following algorithm. This just means that the robot has a list of behaviors that it performs. Each behavior is triggered by a condition, and the conditions are prioritized. Using my own algorithm as an example, the triggers and behaviors of the robot are listed below in priority order:

- A wall closer than 10cm in front triggers the robot to turn left until the wall is on the right.

- If the front-right sensor is closer to the wall than the right sensor, the robot is angled towards the wall and so turns left.

- If the robot is closer than the desired distance from the wall, it turns left slightly to move further away.

- If the robot is further than the desired distance from the wall, it turns right slightly to move closer.

- Otherwise it just travels straight.

The robot checks each of these conditions in order. As soon as one condition is met, the robot performs the triggered action and skips the remaining conditions. As shown in the test run video, this method mostly works but certainly has room for improvement.

Source

```cpp
#include <L9110.h>
#include <HCSR04.h>

#define HALF_SPEED 127
#define FULL_SPEED 255

#define NUM_DISTANCE_SENSORS 5

#define DEG_180 0
#define DEG_135 1
#define DEG_90 2
#define DEG_45 3
#define DEG_0 4

#define TARGET_DISTANCE 6
#define TOLERANCE 2

// Mapping of all of the distance sensor angles
uint16_t AngleMap[] = {180, 135, 90, 45, 0};

// Array of distance sensor objects
HCSR04* DistanceSensors[] = {new HCSR04(23, 22), new HCSR04(29, 28), new HCSR04(35, 34), new HCSR04(41, 40), new HCSR04(47, 46)};

uint16_t Distances[NUM_DISTANCE_SENSORS];
uint16_t Distances_Previous[NUM_DISTANCE_SENSORS];

L9110 motors(9, 8, 3, 2);

void setup() {
  Serial.begin(9600);

  // Initialize all distances to 0
  for (uint8_t i = 0; i < NUM_DISTANCE_SENSORS; i++) {
    Distances[i] = 0;
    Distances_Previous[i] = 0;
  }
}

void loop() {
  updateSensors();

  // If there's a wall ahead
  if (Distances[DEG_90] < 10) {
    uint8_t minDir;
    Serial.println("Case 1");

    // Reverse slightly
    motors.backward(FULL_SPEED);
    delay(100);

    // Turn left until the wall is on the right
    do {
      updateSensors();
      minDir = getClosestWall();
      motors.turnLeft(FULL_SPEED);
      delay(100);
    } while ((Distances[DEG_90] < 10) && (minDir != DEG_0));
  }
  // If the front right sensor is closer to the wall than the right sensor, the robot is angled toward the wall
  else if ((Distances[DEG_45] <= Distances[DEG_0]) && (Distances[DEG_0] < (TARGET_DISTANCE + TOLERANCE))) {
    Serial.println("Case 2");

    // Turn left to straighten out
    motors.turnLeft(FULL_SPEED);
    delay(100);
  }
  // If the robot is too close to the wall and isn't getting farther
  else if ((checkWallTolerance(Distances[DEG_0]) == -1) && (Distances[DEG_0] <= Distances_Previous[DEG_0])) {
    Serial.println("Case 3");
    motors.turnLeft(FULL_SPEED);
    delay(100);
    motors.forward(FULL_SPEED);
    delay(100);
  }
  // If the robot is too far from the wall and isn't getting closer
  else if ((checkWallTolerance(Distances[DEG_0]) == 1) && (Distances[DEG_0] >= Distances_Previous[DEG_0])) {
    Serial.println("Case 4");
    motors.turnRight(FULL_SPEED);
    delay(100);
    motors.forward(FULL_SPEED);
    delay(100);
  }
  // Otherwise keep going straight
  else {
    motors.forward(FULL_SPEED);
    delay(100);
  }
}

// A function to retrieve the distance from all sensors
void updateSensors() {
  for (uint8_t i = 0; i < NUM_DISTANCE_SENSORS; i++) {
    Distances_Previous[i] = Distances[i];
    Distances[i] = DistanceSensors[i]->getDistance(CM, 5);
    Serial.print(AngleMap[i]);
    Serial.print(":");
    Serial.println(Distances[i]);
    delay(1);
  }
}

// Retrieve the angle of the closest wall
uint8_t getClosestWall() {
  uint8_t tempMin = 255;
  uint16_t tempDist = 500;

  for (uint8_t i = 0; i < NUM_DISTANCE_SENSORS; i++) {
    if (min(tempDist, Distances[i]) == Distances[i]) {
      tempDist = Distances[i];
      tempMin = i;
    }
  }

  return tempMin;
}

// Check if the robot is within the desired distance from the wall
int8_t checkWallTolerance(uint16_t measurement) {
  if (measurement < (TARGET_DISTANCE - TOLERANCE)) {
    return -1;
  }
  else if (measurement > (TARGET_DISTANCE + TOLERANCE)) {
    return 1;
  }
  else {
    return 0;
  }
}
```

Conclusion

There are still plenty of problems that I need to tackle for the robot to be able to successfully navigate my apartment. I tested it in a very controlled environment and the algorithm I’m currently using isn’t robust enough to handle oddly shaped rooms or obstacles. It also still tends to get stuck butting against the wall and completely ignores exterior corners.

Some of the obstacle and navigation problems will hopefully be remedied by adding bump sensors to the robot. The sonar coverage of the area around the robot is sparser than I originally thought and the 2cm blindspot around the robot is causing some problems. The more advanced navigation will also be helped by improving my algorithm to build a map of the room in the robot’s memory in addition to adding encoders for more precise position tracking.

-

Test Platform

05/29/2016 at 23:44 • 0 comments

The second round of the Hackaday Prize ends tomorrow, so I’ve spent the weekend hard at work on the OpenADR test platform. I’ve been doing quite a bit of soldering and software testing to streamline development and to determine what my next steps should be. This blog post will outline the hardware changes and software testing that I’ve done for the test platform.

Test Hardware

While my second motion test used an Arduino Leonardo for control, I realized I needed more GPIO if I wanted to hook up all the necessary sensors and peripherals. I ended up buying a knockoff Arduino Mega 2560 and have started using that.

![2016-05-28 19.00.22]()

![2016-05-28 19.00.07]()

I also bought a proto shield to make the peripheral connections pseudo-permanent.

![2016-05-29 11.47.13]()

Hardwiring the L9110 motor module and the five HC-SR04 sensors allows for easy hookup to the test platform.

HC-SR04 Library

The embedded code below comprises the HC-SR04 Library for the ultrasonic distance sensors. The trigger and echo pins are passed into the constructor and the getDistance() function triggers the ultrasonic pulse and then measures the time it takes to receive the echo pulse. This measurement is then converted to centimeters or inches and the resulting value is returned.
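The conversion step can be checked on its own, away from the hardware. The sketch below uses the same divisors as the library (29 for centimeters, 74 for inches), which correspond to sound travelling roughly 1cm every 29 microseconds. The standalone function names are hypothetical and are not part of the library.

```cpp
#include <cstdint>

// The echo pulse covers the trip to the obstacle and back, so the one-way
// distance is half of what the round-trip time suggests.
// ~29 microseconds per centimeter, ~74 microseconds per inch.
uint16_t echoMicrosToCm(uint32_t echoMicros) {
    return static_cast<uint16_t>(echoMicros / 29 / 2);
}

uint16_t echoMicrosToInches(uint32_t echoMicros) {
    return static_cast<uint16_t>(echoMicros / 74 / 2);
}
```

For example, a 580 microsecond echo works out to 10cm. Because the library substitutes the timeout when no echo arrives, the default 24000 microsecond timeout shows up as a reading of 413cm, which is worth keeping in mind when interpreting very large distances.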

```cpp
#ifndef HCSR04_H
#define HCSR04_H

#include "Arduino.h"

#define CM 1
#define INCH 0

class HCSR04 {
  public:
    HCSR04(uint8_t triggerPin, uint8_t echoPin);
    HCSR04(uint8_t triggerPin, uint8_t echoPin, uint32_t timeout);
    uint32_t timing();
    uint16_t getDistance(uint8_t units, uint8_t samples);

  private:
    uint8_t _triggerPin;
    uint8_t _echoPin;
    uint32_t _timeout;
};

#endif
```

```cpp
#include "Arduino.h"
#include "HCSR04.h"

// Use the default echo timeout of 24000 microseconds
HCSR04::HCSR04(uint8_t triggerPin, uint8_t echoPin) {
  pinMode(triggerPin, OUTPUT);
  pinMode(echoPin, INPUT);
  _triggerPin = triggerPin;
  _echoPin = echoPin;
  _timeout = 24000;
}

// Use a caller-supplied echo timeout (in microseconds)
HCSR04::HCSR04(uint8_t triggerPin, uint8_t echoPin, uint32_t timeout) {
  pinMode(triggerPin, OUTPUT);
  pinMode(echoPin, INPUT);
  _triggerPin = triggerPin;
  _echoPin = echoPin;
  _timeout = timeout;
}

// Send a trigger pulse and return the echo pulse width in microseconds
uint32_t HCSR04::timing() {
  uint32_t duration;

  digitalWrite(_triggerPin, LOW);
  delayMicroseconds(2);
  digitalWrite(_triggerPin, HIGH);
  delayMicroseconds(10);
  digitalWrite(_triggerPin, LOW);
  duration = pulseIn(_echoPin, HIGH, _timeout);

  // pulseIn() returns 0 on timeout, so treat that as the maximum range
  if (duration == 0) {
    duration = _timeout;
  }

  return duration;
}

// Average several samples and convert the result to the requested units
uint16_t HCSR04::getDistance(uint8_t units, uint8_t samples) {
  uint32_t duration = 0;
  uint16_t distance = 0;  // Defined even if units is neither CM nor INCH

  for (uint8_t i = 0; i < samples; i++) {
    duration += timing();
  }

  duration /= samples;

  if (units == CM) {
    distance = duration / 29 / 2;
  }
  else if (units == INCH) {
    distance = duration / 74 / 2;
  }

  return distance;
}
```

L9110 Library

This library controls the L9110 dual h-bridge module. The four controlling pins are passed in. These pins also need to be PWM compatible as the library uses the analogWrite() function to control the speed of the motors. The control of the motors is broken out into forward(), backward(), turnLeft(), and turnRight() functions whose operation should be obvious. These four simple motion types will work fine for now but I will need to add finer control once I get into more advanced motion types and control loops.
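One possible shape for the finer control mentioned above is a single signed speed per motor, so each wheel can be driven independently. This is only a sketch under assumptions: `PinDrive` and `driveFor` are hypothetical names that do not exist in the library, and the pin convention (forward holds IA low and applies PWM to IB, backward does the reverse) is copied from the library's motor functions.

```cpp
#include <cstdint>

// PWM duty values (0-255) to apply to the two control pins of one motor.
struct PinDrive {
    uint8_t ia;
    uint8_t ib;
};

// Map a signed speed (-255..255) onto the IA/IB pin pair.
// Positive speeds drive forward (IA low, PWM on IB); negative speeds drive
// backward (IB low, PWM on IA). Out-of-range values are clamped.
PinDrive driveFor(int16_t signedSpeed) {
    if (signedSpeed > 255) signedSpeed = 255;
    if (signedSpeed < -255) signedSpeed = -255;

    if (signedSpeed >= 0) {
        return {0, static_cast<uint8_t>(signedSpeed)};
    }
    return {static_cast<uint8_t>(-signedSpeed), 0};
}
```

Giving the two motors different signed speeds would allow gentle arcs instead of the spin-in-place turns that turnLeft() and turnRight() produce.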

```cpp
#ifndef L9110_H
#define L9110_H

#include "Arduino.h"

class L9110 {
  public:
    L9110(uint8_t A_IA, uint8_t A_IB, uint8_t B_IA, uint8_t B_IB);
    void forward(uint8_t speed);
    void backward(uint8_t speed);
    void turnLeft(uint8_t speed);
    void turnRight(uint8_t speed);

  private:
    uint8_t _A_IA;
    uint8_t _A_IB;
    uint8_t _B_IA;
    uint8_t _B_IB;
    void motorAForward(uint8_t speed);
    void motorABackward(uint8_t speed);
    void motorBForward(uint8_t speed);
    void motorBBackward(uint8_t speed);
};

#endif
```

```cpp
#include "Arduino.h"
#include "L9110.h"

L9110::L9110(uint8_t A_IA, uint8_t A_IB, uint8_t B_IA, uint8_t B_IB) {
  _A_IA = A_IA;
  _A_IB = A_IB;
  _B_IA = B_IA;
  _B_IB = B_IB;

  pinMode(_A_IA, OUTPUT);
  pinMode(_A_IB, OUTPUT);
  pinMode(_B_IA, OUTPUT);
  pinMode(_B_IB, OUTPUT);
}

void L9110::forward(uint8_t speed) {
  motorAForward(speed);
  motorBForward(speed);
}

void L9110::backward(uint8_t speed) {
  motorABackward(speed);
  motorBBackward(speed);
}

void L9110::turnLeft(uint8_t speed) {
  motorABackward(speed);
  motorBForward(speed);
}

void L9110::turnRight(uint8_t speed) {
  motorAForward(speed);
  motorBBackward(speed);
}

void L9110::motorAForward(uint8_t speed) {
  digitalWrite(_A_IA, LOW);
  analogWrite(_A_IB, speed);
}

void L9110::motorABackward(uint8_t speed) {
  digitalWrite(_A_IB, LOW);
  analogWrite(_A_IA, speed);
}

void L9110::motorBForward(uint8_t speed) {
  digitalWrite(_B_IA, LOW);
  analogWrite(_B_IB, speed);
}

void L9110::motorBBackward(uint8_t speed) {
  digitalWrite(_B_IB, LOW);
  analogWrite(_B_IA, speed);
}
```

Test Code

Lastly is the test code used to check the functionality of the libraries. It simply tests all the sensors, prints the output, and runs through the four types of motion to verify that everything is working.

```cpp
#include <L9110.h>
#include <HCSR04.h>

#define HALF_SPEED 127
#define FULL_SPEED 255

#define NUM_DISTANCE_SENSORS 5

#define DEG_180 0
#define DEG_135 1
#define DEG_90 2
#define DEG_45 3
#define DEG_0 4

uint16_t AngleMap[] = {180, 135, 90, 45, 0};

HCSR04* DistanceSensors[] = {new HCSR04(23, 22), new HCSR04(29, 28), new HCSR04(35, 34), new HCSR04(41, 40), new HCSR04(47, 46)};

uint16_t Distances[NUM_DISTANCE_SENSORS];

L9110 motors(9, 8, 3, 2);

void setup() {
  Serial.begin(9600);
  // Sensor pin modes are set by the HCSR04 constructors. Forcing pin 28
  // (an echo pin) to OUTPUT here would stop that sensor from reading.
}

void loop() {
  updateSensors();

  // Run through each of the four motion types for one second
  motors.forward(FULL_SPEED);
  delay(1000);
  motors.backward(FULL_SPEED);
  delay(1000);
  motors.turnLeft(FULL_SPEED);
  delay(1000);
  motors.turnRight(FULL_SPEED);
  delay(1000);

  // Stop and wait before repeating
  motors.forward(0);
  delay(6000);
}

// Read every sensor and print "angle:distance" over serial
void updateSensors() {
  for (uint8_t i = 0; i < NUM_DISTANCE_SENSORS; i++) {
    Distances[i] = DistanceSensors[i]->getDistance(CM, 5);
    Serial.print(AngleMap[i]);
    Serial.print(":");
    Serial.println(Distances[i]);
  }
}
```

As always, all the code I’m using is open source and available on the OpenADR GitHub repository. I’ll be using these libraries and the electronics I assembled to start testing some motion algorithms!

-

Motion Test #2

05/19/2016 at 01:51 • 1 comment

I just finished testing v0.2 of the chassis and the results are great! The robot runs pretty well on hardwood/tile but it still has a little trouble on carpet. I think I may need to further increase the ground clearance, but for now it’ll work well enough to start writing code!

![2016-05-18 19.54.18]()

To demo the robot’s motion I wired up an Arduino Leonardo to an L9110 motor driver to control the motors and an HC-SR04 to perform basic obstacle detection. I’m using a backup phone battery for power. The demo video is below:

https://videopress.com/v/O71ugt3V

Over the next couple of days I plan on wiring up the rest of the ultrasonic sensors and doing some more advanced motion control. Once that’s finished I’ll post more detailed explanations of the electronics and the source code!

-

Navigation Chassis v0.2

05/15/2016 at 21:31 • 3 comments

Based on the results of the first motion test, I made several tweaks to the chassis for version 0.2.

![IMG_0476]()

- As I stated in the motion test, ground clearance was a big issue that caused problems on carpet. I don’t know the general measurements for carpet thickness, but the medium-pile carpet in my apartment was tall enough that the chassis was lifted up and the wheels couldn’t get a good purchase on the ground. This prevented the robot from moving at all. I’m hoping 7.5mm will be enough ground clearance, but I’ll find out once I do another motion test.

![IMG_0478.JPG]()

- I added a side wall to the robot to increase stiffness. With the flexibility of PLA, the middle of the robot sagged and as a result the center dragged on the ground. Adding an extra dimension to the robot in the form of a wall helps with stiffness and prevents most sagging. My first attempt at this was in the form of an integrated side wall, allowing the entire thing to be printed in one piece. This, however, turned out to be a bad idea. With the wall in the way it was extremely difficult to get tools into the chassis to add in the vitamins (e.g. motors, casters, etc.). DFM is important! So instead of integrated walls I went for a more modular approach.

![IMG_0480.JPG]()

![IMG_0479.JPG]()

- The base is much like it was before, flat and simple, but I’ve added holes for attaching the side walls as separate pieces. The side walls then have right-angle bolt holes attached so the wall can be bolted on to the base after everything has been assembled.

![IMG_0483.JPG]()

- One thing I don’t like about this method is that all of the bolt heads will be sticking out of the bottom of the chassis. I’d like to go back at a later time and figure out how to recess the bolts so the bolt heads don’t poke through.

- With the stiffness added by the side wall I decided to decrease the thickness of the chassis base and wall from 2.5mm to 2mm. This results in saved plastic and print time.

- Adding the side wall had the downside of interfering with the sonar sensor. I’m not too familiar with the beam patterns of the HC-SR04 module, but I didn’t want to risk the wall causing signal loss or false positives, so I moved the distance sensors outward so the tips of the ultrasonic transducers are flush with the side wall. Unfortunately, due to the minimum detectable distance of the sensor, this means that there will be a 2cm blind spot all around the robot that will have to be dealt with. I’m unsure of how I’ll deal with this at the moment, but I’ll most likely end up using IR proximity sensors or bumper switches to detect immediate obstacles.

- Other than the above changes, I mostly just tweaked the sizing and placement of certain items to optimize assembly and part fits.

This is still very much a work in progress and there are still several things I’d like to add before I start working on the actual vacuum module, but I’m happy with the progress so far on the navigation chassis and think it’ll be complete enough to work as a test platform for navigation and mapping.

-

Motion Test #1

04/22/2016 at 01:58 • 0 comments

This is just a quick update on the OpenADR project. Last night I hooked up the motor driver and ran a quick test with the motors hardwired to drive forward. I wanted to verify that the motors were strong enough to move the robot and also see if there were any tweaks that needed to be applied to the mechanics.

https://videopress.com/v/cCzwqfXc

Looking at the result, there are a few things I want to change. Most notably, the center of the chassis sags a lot. The center was dragging on the ground during this test and it wouldn’t even run on carpet. This is mostly due to the fact that the test chassis has no sidewalls and PLA isn’t very stiff, so there’s a great deal of flex that allows the center to touch the ground. This can be fixed by adding some small sidewalls in the next test chassis revision.

Additionally, I’m not happy with the ground clearance. This chassis has about 3.5mm of ground clearance which isn’t quite enough to let the wheels rest firmly on the ground in medium pile carpet. In light of this, I’ll be increasing the ground clearance experimentally, with my next test having 7.5mm of clearance.

Open Autonomous Domestic Robots

Open Autonomous Domestic Robots – An open source system for domestic cleaning robots using low-cost hardware

Keith Elliott
