There were very few 'high-resolution' modifications available for the TRS-80 Model I. Some were downright weird, such as the 80-GRAPHIX board, which worked through the character generator. One of the saner ones was the HRG1B, which was produced in the Netherlands. It worked by or'ing in video dots from a parallel memory buffer, and this buffer was sized at the TRS-80's native resolution of 384x192.

This was on my 'to-do' list of things to implement, but [harald-w-fischer] actually had one of these on hand from long ago. He also had manuals, some of the stock software, and some extensions he wrote himself! These were needed to faithfully reproduce the behaviour, and they proved some of my initial assumptions false.

Since my emulator already has framebuffer(s) at the native resolution, my thinking was that this would be easy to implement. However, I was mistaken. It was mostly easy to implement, but there were several challenges.

- merging

At first I planned to set bits directly in the 'master' frame buffer(s) when writes to the HRG1B happened, but after disassembling the 'driver', it was clear this wouldn't work. For one, reads from the HRG buffer must be supported, and they must not reflect the merged image, so a separate backing buffer is needed. Second, the HRG supports a distinct on/off capability, so again the separate buffer is needed, and the question of when to merge the buffers arises. Lastly, the computational overhead of doing the merging on demand caused overload and required some legerdemain.

- memory

Whereas the Olimex Duinomite-Mini board (which Harald is using) can easily accommodate the 9K frame buffer required, my color UBW32-based board is just too memory constrained. The color boards already have 3 frame buffers, and this makes a fourth. Initial experiments were done on the Olimex, where the memory was not a problem, but I really wanted it to work on color, too.

The first experiment I tried was to just see if there would be enough time to merge the image during the vertical blanking interval. Since I am upscaling the TRS-80 native 384x192 to 768x576, and then padding with blanking around to get to 800x600, there is a bunch of vertical retrace time where I could do the buffer merging. Maybe the processor is fast enough to do it on-the-fly?

Well, no, it's not. The buffers are small (9K), the operation is simple (or'ing the hi-res buffer onto the main buffer), and it is done a word at a time on word-aligned quantities, but it's not enough. The major problem is that the vertical blanking interval is driven in software by a state machine that is in turn driven by an Interrupt Service Routine (ISR) at the horizontal line frequency. The vertical state machine is mostly line counters for the vertical 'front porch', sync period, and 'back porch'. So, although the vertical blanking period is a long time in toto, it is composed of a bunch of short times. The naive implementation requires all the work to be done within a single horizontal line period. In my first iteration, I wound up dividing the work into chunklettes, spread out over several horizontal line intervals. I generated a test pattern, and this scheme appeared to work.
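A rough sketch of the 'chunklette' idea, not the emulator's actual code: the merge keeps a cursor and does a bounded slice of work on each horizontal-line interrupt during blanking. The names `merge_state`, `merge_begin`, `merge_step`, and the chunk size of 96 words are all assumptions made for illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define FB_WORDS    (384 * 192 / 32)  /* 2304 words = 9 KiB per buffer */
#define CHUNK_WORDS 96                /* assumed work budget per line interrupt */

/* Progress of the merge within the current vertical blanking period. */
typedef struct {
    size_t next;                      /* index of the next word to merge */
} merge_state;

/* Call when vertical blanking starts. */
static void merge_begin(merge_state *st)
{
    st->next = 0;
}

/* Call once per horizontal-line interrupt during blanking.
   Merges at most CHUNK_WORDS words and returns 1 once the frame is done. */
static int merge_step(merge_state *st, uint32_t *master,
                      const uint32_t *text, const uint32_t *hrg)
{
    assert(st->next <= FB_WORDS);
    size_t end = st->next + CHUNK_WORDS;
    if (end > FB_WORDS)
        end = FB_WORDS;
    for (size_t i = st->next; i < end; ++i)
        master[i] = text[i] | hrg[i];
    st->next = end;
    return st->next == FB_WORDS;
}
```

With these assumed numbers a full frame takes 24 calls, so the scheme only works if at least that many line interrupts are available while nothing is being displayed.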

Having received the HRG1B driver and sample apps from Harald, I naturally had to immediately disassemble them. I have to say, the driver is a little piece of art. The goal is to extend the existing BASIC with some new keywords, and what they chose to do was hook a routine that is sometimes used during the BASIC execution process (it is rst10h, used to skip whitespace to the next token). The hook checks whether it was called from one of the interesting places, and either leaves if not, or carries on with its shenanigans. The shenanigans consist of looking for a '#', which is the prefix for the HRG extensions. As implemented, the HRG repurposes the existing BASIC keywords SET, RESET, POINT, OPEN, CLOSE, LINE, CLS, and CLEAR. If those are prefixed with '#', then the HRG driver performs its alternative implementation, parsing a parameter list and executing the functionality. When it has finished consuming as much as it can, it returns to the regular routine. So, in effect, the HRG commands act like whitespace to the command interpreter, and the driver consumes and executes those commands. Reusing the existing BASIC tokens simplifies the parsing, since those have already been converted to a one-byte code, but this is not required. In fact, Harald had made his own extension to the driver which introduced a new command 'CIRCLE', which necessitates a full string compare of 'circle' since that is not a standard token. After my fun with disassembly, I returned to the task at hand.

The video code was quite a mess because it is a legacy of the Maximite code that Geoff Graham had given me permission to use, and upon which I had mercilessly hacked. In that project, there were a bunch of different video modes supported, and also composite (CVBS) video -- none of which were relevant here, and all of which had been cut out and replaced with the single 800x600 mode with the funky pixel doubling and line tripling needed for the TRS-80. Since I was about to add more complexity, I decided to take a couple of days and clean that code up for intelligibility. Mostly this came in the form of lucid comments, and explicit computation of timing parameters from the specs. Some came from discarding vestiges of features no longer realized (headless, TFT LCD, etc). There's more to do, but that module is now a little bit tidier.

I still felt bad about merging the entire frame buffer on every vertical retrace, and thought about optimising this so that it is done only when something is written (to either the main screen buffer or the HRG buffer), though this would be major surgery. However, my desire for color support (or at least for thoroughly understanding the limitations) drew me in a different direction.

So, a component of the system, the 'hypervisor' (borrowing jargon from the virtual machine industry) is realized with a 'dialog manager' facility that I created. This dialog manager is similar to the way things are done in Windows, and you create a screen with a 'template' that specifies the location of 'controls' that are things like static text, buttons, check boxes, list boxes, edit fields. You provide a 'dialog procedure' that handles 'messages' sent from the controls when things happen. I implemented a text version of all those controls that works on the TRS80 screen. It's a development boon to have this facility, but I know that it's heavy on the use of malloc(). But how heavy is heavy? The malloc() implementation in the standard library does not expose methods to let you know how much heap you've used, or do a 'heapwalk' to inspect all the allocated objects. This is something I've long wanted, and now push came to shove.

So, I implemented my own heap manager. It implements malloc(), free(), realloc(), and has some diagnostic features where you can inquire how much free space there is, and also do a heapwalk through all blocks (allocated and unallocated) to scrutinize heap use closely. There's some magic in the gcc linker that can be used to 'wrap' a function by giving it an alternative name. This is particularly useful, because other things that have already been compiled use malloc(), and I need them to be redirected to my malloc() -- not the one in the standard library. This is a handy feature, but gcc specific. Anyway, I discovered that the dialog manager uses a lot of memory. Something like 12 KiB for the first hypervisor dialog. A full video screen is 1 KiB, so it's certainly not just the content. What could be taking up all that space?
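To make the diagnostic idea concrete, here is a minimal first-fit heap with a free-space query and a heapwalk. It is a sketch under stated assumptions, not the emulator's heap manager: the arena size, the 8-byte block header, and the names `my_malloc`, `my_free`, `my_heapwalk`, and `my_heap_free_bytes` are all invented for illustration, and realloc() is left out. The linker 'magic' is presumably something like GNU ld's `--wrap=malloc` option, which resolves references to `malloc` to `__wrap_malloc`; that is a guess about which mechanism was used.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define HEAP_BYTES 4096            /* arena size: arbitrary for this sketch */

/* Every block starts with a header; blocks tile the arena back to back. */
typedef struct {
    uint32_t size;                 /* payload bytes, always a multiple of 8 */
    uint32_t used;                 /* 1 = allocated, 0 = free */
} block_hdr;

static uint64_t arena[HEAP_BYTES / 8];   /* 8-byte aligned backing store */
static int heap_ready;

static block_hdr *first_block(void) { return (block_hdr *)arena; }
static block_hdr *heap_end(void)
{
    return (block_hdr *)((uint8_t *)arena + HEAP_BYTES);
}
static block_hdr *next_block(block_hdr *b)
{
    return (block_hdr *)((uint8_t *)(b + 1) + b->size);
}

static void heap_init(void)
{
    first_block()->size = HEAP_BYTES - (uint32_t)sizeof(block_hdr);
    first_block()->used = 0;
    heap_ready = 1;
}

void *my_malloc(size_t n)
{
    if (!heap_ready) heap_init();
    if (n == 0 || n > HEAP_BYTES) return NULL;
    n = (n + 7u) & ~(size_t)7u;                    /* round up to 8 bytes */
    for (block_hdr *b = first_block(); b != heap_end(); b = next_block(b)) {
        if (b->used || b->size < n) continue;
        if (b->size >= n + sizeof *b + 8) {        /* split off the remainder */
            block_hdr *rest = (block_hdr *)((uint8_t *)(b + 1) + n);
            rest->size = b->size - (uint32_t)n - (uint32_t)sizeof *b;
            rest->used = 0;
            b->size = (uint32_t)n;
        }
        b->used = 1;
        return b + 1;
    }
    return NULL;                                   /* no block large enough */
}

void my_free(void *p)
{
    if (!p) return;
    assert((uint8_t *)p > (uint8_t *)arena &&
           (uint8_t *)p < (uint8_t *)arena + HEAP_BYTES);
    ((block_hdr *)p - 1)->used = 0;
    /* Coalesce every run of adjacent free blocks. */
    for (block_hdr *b = first_block(); b != heap_end(); ) {
        block_hdr *n = next_block(b);
        if (!b->used && n != heap_end() && !n->used)
            b->size += (uint32_t)sizeof *n + n->size;
        else
            b = n;
    }
}

/* Diagnostics: visit every block, allocated or free. */
typedef void (*heap_visit)(const void *payload, size_t size, int used, void *ctx);

void my_heapwalk(heap_visit fn, void *ctx)
{
    if (!heap_ready) heap_init();
    for (block_hdr *b = first_block(); b != heap_end(); b = next_block(b))
        fn(b + 1, b->size, (int)b->used, ctx);
}

static void add_free(const void *payload, size_t size, int used, void *ctx)
{
    (void)payload;
    if (!used) *(size_t *)ctx += size;
}

size_t my_heap_free_bytes(void)
{
    size_t total = 0;
    my_heapwalk(add_free, &total);
    return total;
}
```

The free-space query is just a heapwalk with a summing callback, so a dump routine that prints every block costs nothing extra in design.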

Looking at the code, I found one culprit: v-tables. The dialog system is designed in an object-oriented manner, as might be expected, but I had put the vtable of the controls as members of the objects, rather than a single pointer to a const table (that is in ROM). That was foolish of me to start with -- what was I thinking -- and a bit painful to fix, but that gave me about 5K back right away. Half a frame screen buffer! There are more improvements I think I can make there (e.g. copy-on-write for text values -- why copy it to RAM if it never changes), but I'm past my RAM crisis for color support for the moment.
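The difference between the two layouts can be shown in a few lines. This is only an illustration: the `control` type, the four operations, and the button functions are hypothetical stand-ins, not the dialog manager's real definitions.

```c
#include <assert.h>
#include <stddef.h>

typedef struct control control;

/* Operations every control supports (a hypothetical, shortened set). */
typedef struct {
    void (*paint)(control *self);
    int  (*on_key)(control *self, int key);
    void (*set_focus)(control *self, int focused);
    void (*destroy)(control *self);
} control_ops;

/* Before: every object carries its own copy of the table in RAM. */
typedef struct {
    control_ops ops;
    int x, y, w, h;
} control_fat;

/* After: every object holds one pointer to a shared const table. */
struct control {
    const control_ops *ops;
    int x, y, w, h;
};

static int paints;   /* counts paint calls, for demonstration only */

static void button_paint(control *self)        { (void)self; ++paints; }
static int  button_key(control *self, int key) { (void)self; return key == '\r'; }
static void button_focus(control *self, int f) { (void)self; (void)f; }
static void button_destroy(control *self)      { (void)self; }

/* One table per control class; 'const' lets the toolchain keep it out of RAM. */
static const control_ops button_ops = {
    button_paint, button_key, button_focus, button_destroy
};

/* Dispatch through the shared table. */
static void control_paint(control *c)
{
    assert(c != NULL && c->ops != NULL);
    c->ops->paint(c);
}
```

Each object shrinks by the size of the table minus one pointer, and that saving is multiplied by every control in every dialog.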

Returning to the HRG implementation, I have a new idea: merge on a raster-by-raster basis. I.e., there is no master buffer, but rather there is the TRS-80 buffer, the HRG buffer, and just a single raster line buffer for the current raster line. Only a single line needs to be merged (prior to display), no sophisticated (and bug-prone) code for 'optimized' merging needs to be implemented, and the implementation of HRG on/off becomes trivial (you either merge TRS-80 and HRG, or just copy TRS-80). But... it all needs to be completed during a few clock cycles at the beginning of the horizontal interrupt period.
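In outline, the per-raster merge looks something like the following. The function and parameter names are made up for this sketch, and it ignores the timing constraints and any padding in the real raster buffer.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define LINE_WORDS 12     /* 384 pixels / 32 bits per word */
#define LINES      192

/* Build the one raster line that is about to be displayed.
   With the HRG off, the line is a plain copy of the TRS-80 buffer. */
static void build_raster(uint32_t *raster,
                         const uint32_t *text_fb, const uint32_t *hrg_fb,
                         unsigned line, int hrg_on)
{
    assert(line < LINES);
    const uint32_t *t = text_fb + line * LINE_WORDS;
    const uint32_t *h = hrg_fb + line * LINE_WORDS;

    if (!hrg_on) {
        memcpy(raster, t, LINE_WORDS * sizeof *raster);
        return;
    }
    for (int i = 0; i < LINE_WORDS; ++i)
        raster[i] = t[i] | h[i];
}
```

Because the HRG buffer is never written by the merge, reads from it return exactly what was stored, which was the first objection to merging into a master buffer.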

At first, this seems plausible, because a horizontal line is just 12 words long. But there's still not a lot of time to dawdle, because there are just 256 CPU clocks from the moment that the interrupt is stimulated (by timer 3 maximum count being reached) before raster data is needed to be available for shifting out via SPI and DMA. Some of those cycles have to go into state machine logic and also to setting up the peripherals before the trigger comes (via output comparator that ends the horizontal sync pulse). 'Time' will tell.

As before, I have enough time to keep up on the monochrome Olimex board, but it's not quite enough for color. Looking at the screen in the failing color mode, it looks like it is almost there, but just shy of having enough time. sigh. Maybe some assembler will help. At the least, I unrolled the loops, since it's just 12 sequential '*x++ = *y++ | *z++' operations.

As a side benefit of this approach, a thing that had vexed me for some time is effectively solved: the hardware is such that the rising edge of the horizontal sync pulse is what triggers the SPI/DMA to start shifting out dots. The problem is that this is not per-spec. The spec requires some 'horizontal sync back porch', which is some quiet time before the pixel data comes. In CVBS signals, this is where the color burst goes, but in VGA it's just a quiet time. This quiet time is simulated by left-padding the horizontal lines in the screen buffers with dummy bytes. This has always irritated me, because that space adds up: 192*4 = 768 bytes per color plane, == 3072 bytes for color with HRGB. I want that 3K back! But by doing this raster line merging, only the raster buffer needs to have the padding, so that's a 3K savings in RAM. It's the little things in life....

Anyway, one morning I awoke with another distributed work solution to my color problem: only merge half the raster line, then set up SPI/DMA, then merge the other half. Merging only half leaves enough time to set up SPI/DMA before the trigger comes in from the output comparator that starts shifting out dots, while at the same time giving the SPI/DMA enough work to keep it busy while we finish up the second half of the raster line. This wound up being not too tricky to implement, and worked fine for both monochrome and color.
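The ordering is the whole trick, so here is a sketch of it with the hardware abstracted away. Everything named here is an assumption: `arm_fn` stands in for whatever sets up the SPI/DMA transfer, and `fake_arm` is a test stand-in that merely records what the DMA would see at the moment it is armed.

```c
#include <assert.h>
#include <stdint.h>

#define LINE_WORDS 12
#define HALF_WORDS (LINE_WORDS / 2)

/* Six unrolled OR-merges: one half of a raster line. */
static void merge_half(uint32_t *dst, const uint32_t *a, const uint32_t *b)
{
    *dst++ = *a++ | *b++;
    *dst++ = *a++ | *b++;
    *dst++ = *a++ | *b++;
    *dst++ = *a++ | *b++;
    *dst++ = *a++ | *b++;
    *dst++ = *a++ | *b++;
}

/* Stand-in for the peripheral setup; the real thing programs SPI and DMA. */
typedef void (*arm_fn)(const uint32_t *raster);

/* Body of the horizontal-line interrupt for a visible line. */
static void line_isr_body(uint32_t *raster, const uint32_t *text,
                          const uint32_t *hrg, arm_fn arm_spi_dma)
{
    assert(arm_spi_dma != 0);
    merge_half(raster, text, hrg);     /* first half is ready to shift out */
    arm_spi_dma(raster);               /* must happen before the trigger   */
    merge_half(raster + HALF_WORDS,    /* finish while the first half is   */
               text + HALF_WORDS,      /* still being shifted out          */
               hrg + HALF_WORDS);
}

/* Test stand-in: remembers the first and last words at arm time. */
static uint32_t seen_first, seen_last;
static void fake_arm(const uint32_t *raster)
{
    seen_first = raster[0];
    seen_last = raster[LINE_WORDS - 1];
}
```

The second half is safe only because the shifter needs a while to get through the first six words, so this relies on the CPU finishing well ahead of the dot clock.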

Now, I had to finish the hardware emulation of the API used to interface with the HRG board. As mentioned, Harald Fischer had a physical unit, and he also had the driver software. So I disassembled it. He also had some scans of the manual, which he sent as well. I'm so glad he did, because the API is a bit byzantine. First, the addressing scheme is a 16-bit quantity, but it's not linear. Rather, the 16-bit 'address' is actually a bitfield of character row, column, and raster line within the character cell. Second, each memory location is 6 bits, not 8. In retrospect, I'm not so surprised - the board is implemented with SSI TTL, the 6 bits reflect the 6-pixel-wide characters on the TRS-80, and I'm sure that it was convenient from the standpoint of tapping signal lines to keep that structure (yes; many hardware upgrades in those days required cutting traces and soldering. Plug-in cards are for babies!). But, I now have to emulate that addressing scenario and do a bunch of masking and bit-shifting. My favorite.

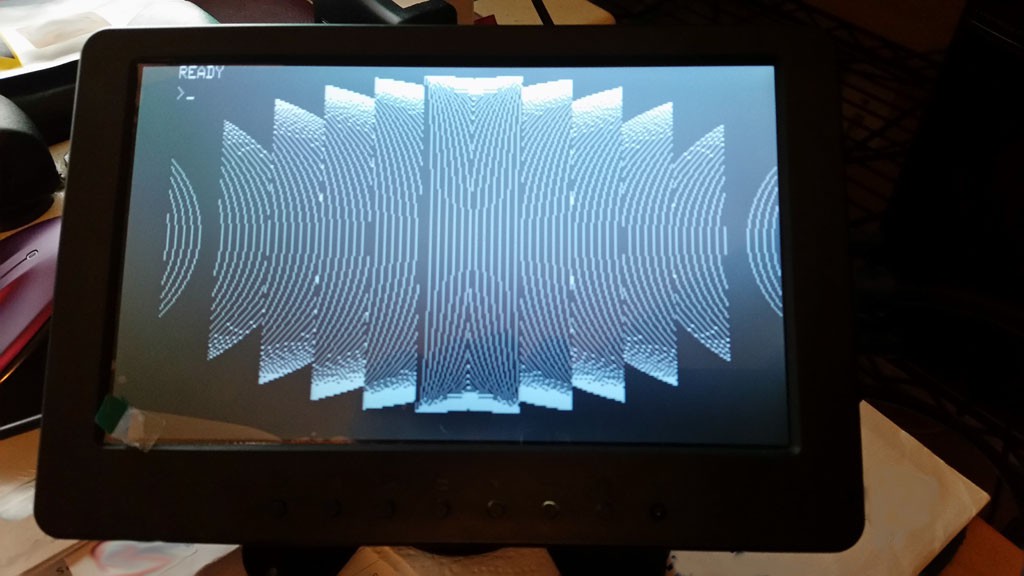

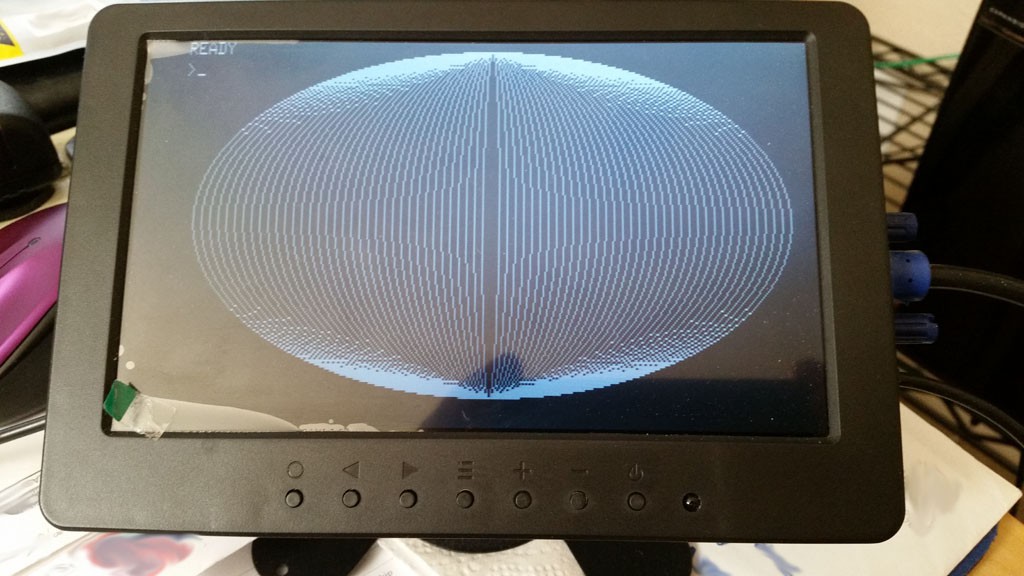
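To give a flavour of the decoding, here is a sketch that turns such a bitfield address into pixel coordinates. The field positions used below (column in bits 0-5, character row in bits 6-9, raster line in bits 10-13) are placeholders chosen for illustration only; the real positions are whatever the manual specifies. Only the field widths follow from the geometry: 64 columns, 16 rows, and 12 raster lines per character cell.

```c
#include <assert.h>
#include <stdint.h>

#define CELL_W 6      /* pixels per 6-bit memory location */
#define CELL_H 12     /* raster lines per character cell: 192 / 16 */

typedef struct {
    unsigned x, y;    /* pixel position of the leftmost of the 6 dots */
    int valid;        /* 0 if the raster-line field is out of range */
} hrg_pos;

/* Decode a bitfield address. HYPOTHETICAL layout:
   bits 0-5 column, bits 6-9 character row, bits 10-13 raster line. */
static hrg_pos hrg_decode(uint16_t addr)
{
    unsigned col  = addr & 0x3Fu;
    unsigned row  = (addr >> 6) & 0x0Fu;
    unsigned line = (addr >> 10) & 0x0Fu;
    hrg_pos p;

    p.valid = line < CELL_H;          /* a 4-bit field can hold 12..15 too */
    p.x = col * CELL_W;
    p.y = row * CELL_H + line;
    assert(!p.valid || (p.x <= 378 && p.y < 192));
    return p;
}
```

Whatever the true layout, the decoder has to decide what to do with raster-line values of 12 and above, which a 4-bit field allows but the screen does not.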

Knowing that this would be a brain-melter, I put it off until I had a morning to come into it fresh, ready to formulate test cases for boundary conditions, and code up a bunch of bit masking and shifting, dealing with 6-bit-bytes that may or may not straddle a 32-bit word boundary. About 4 hours later, I had gotten it wired in, and ran a test program that came with the board (again, thanks to Harald's provision). This is a program called 'elips', which draws a series of concentric ellipses with ever widening minor axes.
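The straddling case is the one that bites. The sketch below stores and fetches one 6-bit location at pixel offset `x` in a line of twelve 32-bit words. It assumes the leftmost pixel sits in the most significant bit of each word and that bit 5 of the value is the leftmost dot; `put6` and `get6` are illustrative names.

```c
#include <assert.h>
#include <stdint.h>

#define LINE_PIXELS 384

/* Store a 6-bit value at pixel offset x, leaving neighbours untouched. */
static void put6(uint32_t *line, unsigned x, uint32_t v)
{
    assert(x + 6 <= LINE_PIXELS);
    v &= 0x3Fu;
    unsigned w = x / 32, off = x % 32;

    if (off <= 26) {                       /* fits within one word */
        unsigned sh = 26 - off;
        line[w] = (line[w] & ~(0x3Fu << sh)) | (v << sh);
    } else {                               /* straddles words w and w+1 */
        unsigned spill = off - 26;         /* dots that land in word w+1 */
        unsigned sh = 32 - spill;
        line[w]     = (line[w] & ~(0x3Fu >> spill)) | (v >> spill);
        line[w + 1] = (line[w + 1] & ~(0x3Fu << sh)) | (v << sh);
    }
}

/* Fetch the 6-bit value at pixel offset x. */
static uint32_t get6(const uint32_t *line, unsigned x)
{
    assert(x + 6 <= LINE_PIXELS);
    unsigned w = x / 32, off = x % 32;

    if (off <= 26)
        return (line[w] >> (26 - off)) & 0x3Fu;
    unsigned spill = off - 26;
    return ((line[w] << spill) | (line[w + 1] >> (32 - spill))) & 0x3Fu;
}
```

For example, the location at x = 30 puts its two leftmost dots at the bottom of word 0 and the other four at the top of word 1. The last location in a line (x = 378) ends exactly on a word boundary, so the straddle branch never reads past the twelfth word.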

Hmm. Something's not quite right. Oh! The SPI on the PIC32 is MSB first, but the HRG1B is LSB first. I forgot this. When I was doing the character generator ROM, I preconditioned that data to be pre-reversed to accommodate. However, in this case I've got to do the bit reversal in software and then also map in reverse into the words of the raster line. sigh; more brain-melting.
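The reversal itself is small. A sketch, with made-up names: a straightforward loop, plus a 64-entry table built once so that the per-access cost is a single lookup. Whether a table is worth the RAM on this board is a judgment call the sketch does not settle.

```c
#include <assert.h>
#include <stdint.h>

/* Reverse the order of the low 6 bits: bit 0 <-> bit 5, 1 <-> 4, 2 <-> 3. */
static uint8_t rev6(uint8_t v)
{
    uint8_t r = 0;
    for (int i = 0; i < 6; ++i) {
        r = (uint8_t)((r << 1) | (v & 1u));
        v >>= 1;
    }
    return r;
}

static uint8_t rev6_table[64];
static int rev6_table_ready;

/* Fill the lookup table once at startup. */
static void rev6_init(void)
{
    for (unsigned v = 0; v < 64; ++v)
        rev6_table[v] = rev6((uint8_t)v);
    rev6_table_ready = 1;
}

/* Table-driven version for the hot path. */
static uint8_t rev6_fast(uint8_t v)
{
    assert(rev6_table_ready);
    return rev6_table[v & 0x3Fu];
}
```

Reversing twice gives back the original value, so the same routine serves both writes to and reads from the emulated board.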

After about three more hours I had managed to get the bit reversals, shifts, and masks right.

9 minutes, 42 seconds. 36 ellipses.

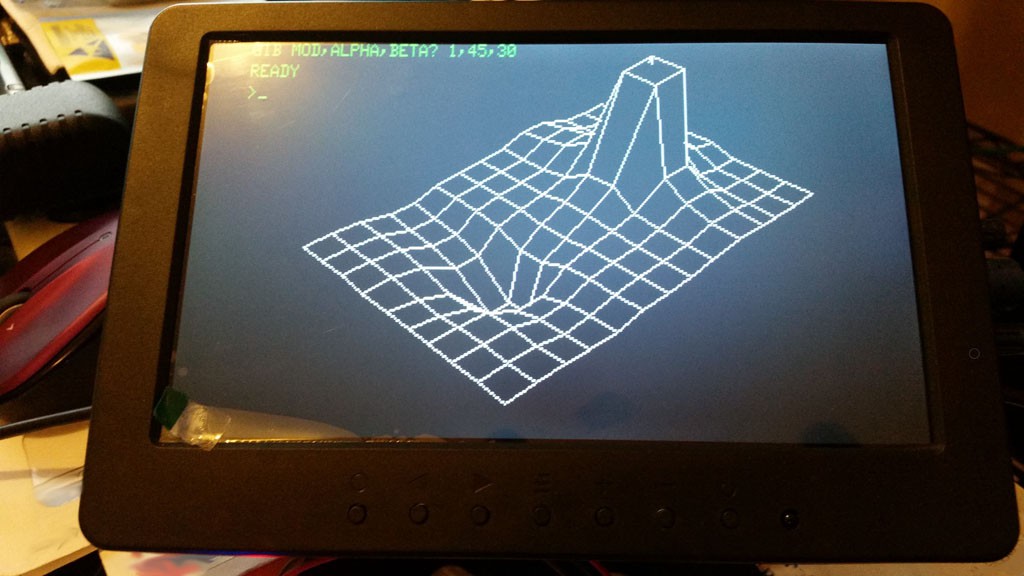

And for fun, once I figured out meaningful parameters:

Hope you've got a little time for that one; something like 15 minutes. (-1, 45, 30 turns off hidden line removal, and takes about a third the time)

ziggurat29

Discussions

I received an HRG1B card from the Netherlands. I found part of the manual in the three boxes that a Dutchman gave me for my collection. Unfortunately, among the hundred or so floppy disks, I can't find the BASGR/CMD disk version. Could you help me out?

http://prof-80.fr/

Well, the programs elips and k3d didn't come with the board. Only the driver grl2 was included. So the missing #OPEN in k3d was solely my fault, and of course you may feel free to modify it in any manner.

Regards

Harald

P.S.: I also tried the version with the HRG1B extension and it works fine for me.

Holy cow; did you author k3d? The listing looks like a beast (by early 1980s sensibilities). Admittedly, I did not study the code, but is the function being plotted easy to substitute; e.g. 'lines xxx-yyy are a subroutine computing Z(x,y) = f(x,y)'? (It could also be a model use-case for Level III 'defn'.)

Yes, I did, but in fact I took the algorithm for the hidden line removal from a FORTRAN program with the same name and a description which was available from the computer center at my university (running an "enormous" Telefunken TR440 main computer with 48 Kwords of core memory in the late 1970s).

Yes, of course the function to be plotted can be easily changed by replacing the respective lines of code. The number of grid points can be changed as well.

If the code looks weird that is because the original FORTRAN was full of computed goto's and my port to BASIC certainly didn't improve the structure ;-)

One more question: When I tried your emulator to assemble the source for the driver using EDTASM, it was not possible to load the source into EDTASM from the .cas file. Could it be that EDTASM uses its own routines to read from cassette, and not the ones you have patched in the TRS-80 ROM?

It is possible. I know it uses its own file format, though I'm not aware of it using its own low-level IO routines, but maybe. If I get a moment today, I'll try to disassemble EDTASM and verify (or at least hook an LED up to the I/O so I can visually confirm it's attempting its own I/O).

OK, because I am so addicted to disassembly, I had to take a quick peek. I did verify that, yes, EDTASM (for whatever reason) has its own cassette IO routines, rather than using those in the ROM. Don't know why.

It will be a bit of effort for me to make a cassette I/O implementation that simulates from the I/O standpoint (and you won't get the speedup benefit). Possibly the biggest challenge would be reliably simulating the timing expectations of the Z-80 code, but then again, maybe that is not too bad, since I can track CPU cycles. Hmm...

Another alternative is that I could patch EDTASM to use the ROM routines (which in turn I have trapped). Then you /would/ get the speedup benefit. From a cursory glance, that might not be too difficult, since the structure looks the same (four routines: read/write leader and sync, read/write byte), but I would need to study the EDTASM code more closely to be sure. I don't know why they did this, but maybe EDTASM predates the ROM? lol.

And then of course there's using a desktop emulator to get the work done, which I guess you are doing already in some cases.

Lastly, as mentioned sometime earlier, I do recommend using zmac. It's so much easier to code on the desktop, and it spews out a bunch of files ready for many emulators. For some reason, the TRS-80 cassette file has the '.lcas' extension, (.cas is some other format). Link for the curious:

http://www.48k.ca/zmac.html

OK, because I am /really/ addicted to disassembly, I did disassemble all the cassette IO in EDTASM. The routines are almost exactly the same as in the ROM -- (the ROM has a couple very slightly modified for the BASIC integration). The routines are also in the same order as they are placed in ROM. This tends to make me feel like they were indeed created before the ROM, and probably given to Microsoft to do the integration into their BASIC (which became Level II and Level III). Also supporting this hypothesis is that the device drivers, Keyboard, Video, Printer, are present in EDTASM, not using ROM, but veritably code-identical to the ROM routines, and data is placed in the same manner. So I suspect that code pre-existed the ROM, and was also given to Microsoft when they integrated their BASIC.

Anyway, this tends to suggest that it would be trivial to make a patched EDTASM that thunks over to the ROM routines, and can enjoy the traps placed there. The one fly in that ointment is that there is a state variable related to cassette operation that would effectively move from the EDTASM location to where the ROM is expecting it. I need to look to see if that is a show-stopper or not.

Code forensics; fun! Now I really must get back to my day job, alas...

I am very glad to read from your postings below that looking into my problems with EDTASM, and even disassembling it to answer my questions, is fun for you.

Of course I might use xtrs with EDAS under LDOS ( under xtrs EDTASM in cassette-only mode also has problems creating correct .cas-files for the resulting objects, probably a timing issue since .cpt "cassette pulse timing" format works) or even zmac with a WINDOWS-PC, if the only goal was to produce a valid .cas file.

For me much of the fun with retro-computing comes from the nostalgic feelings that come with the memories of the good-old-times almost 40 years ago when I was learning something about computers playing with my real TRS-80 (including all the troubles it took getting along with a cassette-only system). So typing into EDTASM is much more authentic ;-). Unfortunately my "real" TRS-80 has a problem with cassette output (probably a bad diode, which I should replace) and is also getting a bit hot when operated for longer times (the HRG1B board is in the way of the venting air for the main power transistor).

So it is not a matter of life-or-death, but if you like computer forensics and would have fun patching EDTASM to work with your emulator, I will happily use it. (Hopefully EDTASM is the only program using its own cassette routines, because the Level II ROM was not available at the time.)

I'll try my hand at it, and test to the extent of my capacity. Most of the effort will be in having to learn EDTASM again to generate adequate test-cases.

I will attempt to patch EDTASM to use the rom routines. This should be very straightforward. To interface with a physical cassette is partially easy and partially hard.

The easy part is that I have already implemented the write side, since I needed that for 'cassette sound' that many games use. The read side is the hard part, since I never mapped that to any particular IO pin on any of the boards.

Additionally, external circuitry will be needed to condition the physical cassette electrical output into something that the IO pins can take and read. On the other hand, a clever implementation would be to implement a digital filter to obviate most of that circuitry, and reduce it to some clamping diodes for safety. Hmmm. I don't know if there are enough CPU cycles, but this is a really low frequency input with veritably no phase considerations, so it might be possible to create a small IIR filter to get the job done cheaply. Now I'm intrigued.

OK, I did it. There is a new SD image out there (20180505a) which contains two pieces:

1) a new file in the cass dir 'edtasm-p.cas' which is the patched Editor/Assembler.

2) a new set of hex images that need to be burned.

There wound up being a slight complication with patching EDTASM: a state variable. The ROM routines place a state variable (a RAM copy of port 0xFF) at location 403dh, but the EDTASM places the same at 4028h. I was fearful this movement would collide with EDTASM's other use of that memory, but fortunately it was only meaningful for cassette on/off. Unfortunately, I had never actually implemented cassette on/off from the I/O standpoint (I patched the ROM routines), so I needed to complete that emulation so that I could leave EDTASM unpatched from the cassette on/off standpoint (thereby avoiding moving the state variable, or doing more extensive patches).

Anyway, you will need to flash the new firmware, then you can load the edtasm-p version, and you should be able to save and load source assembly, and also assemble and burn to tape.

How do you enable #CLS, #LINE etc? It says something about BASGR in the manual, but where is this software and how do you start it? I now use the hex-file from trs80sd-20180427c in DuinoMite-Mini.

yep! that is the current one. It includes the cassette file corruption fix, and also later that day I implemented Orchestra-85 support.

The various hires commands are enabled by a driver which you must load prior to using the commands. That is a SYSTEM tape named 'grl2.cas'. Do: SYSTEM, G, / and that will load the graphics kernel. Now the commands will be available.

There are two demo programs: "elips" and "k3d". Those are each BASIC, so you CLOAD them.

Be aware that k3d has a bug whereby it fails to activate the HRG before running, so you should execute a "#OPEN" beforehand to turn on the HRG. Also be aware it is quite slow. It will look like your computer is hung for quite a few seconds before you get a cryptic prompt requesting three numbers. Try these for starters:

-1,45,30

that will turn off the hidden line removal, and make it run much quicker to produce output. Then you can try:

1,45,30

which will turn on the hidden line removal, and show the display as above.

elips takes quite a long time to run, but will start producing output immediately.

Side note: how to avoid further filesystem corruption:

* before turning off your board, be sure to 'eject' all cassettes and disks. This will close those files, and cause the filesystem driver to write out any pending data (and fat updates!). Then you can turn the board off.

Strictly, you only have to do this if you've ever written data, but it's a good practice. I may eventually add an 'eject all' button at some point once I figure out all the consequences of that action relative to the emulator's running state. (The emulator possibly should be reset, but maybe not.)

Both programs worked great! Could you not just add "3 #OPEN" to k3d? It's useful that the text and the graphics screens are separate.

Yes, indeed. I put k3d program in the SD image that way simply because that was the way it came from the factory. But I guess I'm not really running an archival museum, so maybe I should just fix the bug. It certainly confused me, at first.
