-

Hack Chat Transcript, Part 4

09/11/2019 at 20:20 • 0 comments

@13r1ckz I recommended a couple of books earlier in the chat:

https://www.manning.com/books/deep-learning-with-python

https://www.amazon.com/Hands-Machine-Learning-Scikit-Learn-TensorFlow/dp/1491962291

@Daniel Situnayake yeah actually, I'm working on an algorithm to identify various open surgical tools so that it can help us categorize them after they get jumbled in a surgery. Any resources I can jump-start from, typically a dataset, or do you advise me to build my own and work my way up?

Are there any examples of a YOLO + ML combo implementation on an MCU?

Thanks @Daniel Situnayake and @Pete Warden

Thank you for that link, and I second 'Deep Learning with Python' for a beginner.

@Daniel Situnayake wow great, I'll get those books asap

@as.instrumedglobal that's very interesting! I think you'd likely need to find or build a dataset first, then you could use transfer learning to re-train an existing model to recognize the surgical tools

@Don Gabriel not that I know of!

@as.instrumedglobal this blog post I just found shows how you can do transfer learning with an object detection model (which can identify multiple instances of multiple classes of object in an image): https://medium.com/practical-deep-learning/a-complete-transfer-learning-toolchain-for-semantic-segmentation-3892d722b604

Also, RISC-V seems to be becoming more popular. Any ML / DL ports for RISC-V in the future?

OK, I better head out and finish off writing this book :D Ping me on Twitter if you have any more questions!! https://twitter.com/dansitu

Thank you everyone!!

We talked a lot about Cortex CPUs here. Are they strictly necessary? What about ATmegas for example? I guess it depends on the size of the model and what the plan is with the processed data

I am comfortable with PICs since I have used them for many years

if only they had an open source toolchain...

@Max-Felix Müller atmegas are on the smaller side of the microcontroller families, might be enough to do a simple perceptron, but 2kB of ram doesn't work well with bigger nets
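To put the 2kB figure in perspective, here is a rough back-of-the-envelope sketch. It assumes float32 values and that weights, biases, and the two largest activation buffers all have to sit in RAM at once; that is a pessimistic simplification, since weights can often live in flash. The function names and the example layer sizes are made up for illustration.

```python
# Rough RAM estimate for a small fully connected network (illustrative only).
BYTES_PER_FLOAT32 = 4
ATMEGA_RAM_BYTES = 2 * 1024  # the 2kB figure mentioned in the chat


def dense_net_ram_bytes(layer_sizes, bytes_per_value=BYTES_PER_FLOAT32):
    """Bytes for weights, biases and the two largest activation buffers."""
    params = sum(n_in * n_out + n_out
                 for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))
    activations = sorted(layer_sizes, reverse=True)[:2]
    return (params + sum(activations)) * bytes_per_value


def fits(layer_sizes, ram_bytes=ATMEGA_RAM_BYTES):
    """True if the estimated footprint is within the RAM budget."""
    return dense_net_ram_bytes(layer_sizes) <= ram_bytes


perceptron = [8, 1]        # hypothetical: 8 inputs, 1 output
small_mlp = [64, 32, 10]   # hypothetical: small 3-layer net

print(dense_net_ram_bytes(perceptron), fits(perceptron))
print(dense_net_ram_bytes(small_mlp), fits(small_mlp))
```

Under these assumptions the single perceptron needs well under 100 bytes, while even the modest three-layer net is several times larger than the whole RAM.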

open source toolchain for PICs? RISC-V does

with an open source programmer as well?

I just started experimenting with RISC-V and it seems promising

@de∫hipu Yeah, I think you will need a bit more than the atmega has to offer

@Daniel Situnayake hmmm yeah I figured I'd have to do that since I couldn't find any. well, I think it can be an interesting project to work on, and I have access to plenty of surgical sets from work so I know I can get decent info at least for the common tools. Thanks for the help and to all the TensorFlow Dev team, keep up the great work! To the peeps from Adafruit, your hardware makes me drool and every time I check your Insta feed there's one more board that goes on the bucket list :D

@Max-Felix Müller you can always add external memory, but it would be sloooooow

Microchip now has a bunch of ARM processor cores, including the M7 Core, that they program using the same toolchain, and have hung the same old peripherals on them, so not a big transition.

cool thx gh78731

@de∫hipu I think I will have to run some tests... What a great project idea for my holidays :D

@Max-Felix Müller you know, someone actually ran a Linux kernel on an UNO with additional ram, running an ARM emulator...

@Max-Felix Müller it only took about one week to boot

can we do facial recognition effectively at the edge? Just curious

@Don Gabriel sure, there is that esp32 camera board that does it

for $10 or something

Summarizing resources to get started (tip: try out the codelabs aka tutorials first)

For mobile and micro-controllers (in general):

Codelab: https://codelabs.developers.google.com/codelabs/recognize-flowers-with-tensorflow-on-android/#0

TensorFlow Lite: https://www.tensorflow.org/lite

For micro-controllers only:

Codelab: https://codelabs.developers.google.com/codelabs/sparkfun-tensorflow/#0

TensorFlow Lite for Microcontrollers:

https://www.tensorflow.org/lite/microcontrollers/overview

(github) https://github.com/tensorflow/tensorflow/tree/master/tensorflow/lite/experimental/micro

For machine learning:

Andrew Ng’s Machine Learning Course and Deep Learning Specialization

(Available on YouTube and Coursera for free)

UPCOMING: Watch out for the TinyML book release in January 2020: http://shop.oreilly.com/product/0636920254508.do (@Pete Warden and @Daniel Situnayake have done an excellent job to consolidate all resources into one book -- for anyone who wants to get started in this field)

-

Hack Chat Transcript, Part 3

09/11/2019 at 20:18 • 0 comments

@happyday.mjohnson the general rule is to do as little preprocessing as possible. We do run FFTs on audio to get features, but we take images and raw accelerometer data
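As a rough illustration of what "run FFTs on audio to get features" means, the sketch below turns a window of samples into per-frequency magnitudes. It uses a naive DFT from the standard library rather than a real FFT, and the window length and test tone are invented for the example; it is not the feature pipeline used in the actual demos.

```python
import cmath
import math


def dft_magnitudes(samples):
    """Magnitude of each frequency bin up to Nyquist, via a naive O(n^2) DFT."""
    n = len(samples)
    mags = []
    for k in range(n // 2 + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                  for t, x in enumerate(samples))
        mags.append(abs(acc))
    return mags


# Synthetic "audio": a pure tone that completes 5 cycles in a 64-sample window.
window = [math.sin(2 * math.pi * 5 * t / 64) for t in range(64)]
features = dft_magnitudes(window)

# The strongest bin is the one matching the tone's frequency.
peak_bin = max(range(len(features)), key=features.__getitem__)
print(peak_bin)
```

A model would then be fed `features` (or a sequence of such windows) instead of the raw waveform.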

@Sébastien Vézina keep reading :) You won't need to know much of the underlying theory to build some amazing stuff

How well does the square identifier model detect the back of a human as a human? (have some problems with that)

@Dan Maloney Our Dan had a similar idea for recognizing pigeons! :)

@mtfurlan sorry, I've been having infrastructure troubles, will look into it tonight

@Pete Warden giving away my million dollar idea!! haha

@pete - in my very minimal exploration of accel data, there seems to be a benefit to some level of filtering, but I must admit I'm a bit clueless. I guess to me it makes intuitive sense, since modeling is such a "Dark Art"

@happyday.mjohnson yes colab is awesome -- it's free and convenient -- and you get GPUs too! A lot of folks think you need a lot of data and resources to get started -- but you actually don't. :)

from youtube "PTS: any boards planned with MCUs that include tensor accelerators like the MAIX?"

@Arsenijs No worries I completely understand. Maybe have it echo your message back to IRC so it's clear if it doesn't work?

@Pete Warden @Daniel Situnayake Incredible book, thanks a lot

@Meghan i just wish I understood the path from colab -> model on CP.

@happyday.mjohnson I'm a newbie to accelerometer data too, but our Beijing team are working on the demo, and seem to have made a lot of progress without much preprocessing. The networks themselves are good at learning features

Thank you @Andres Manjarres !!

@pete - do you have a timeframe when we can learn from your accel work?

@pete - it would be so cool to get this figured out before my parents pass away :-)

@Max-Felix Müller not really, do you have a link for it?

@happyday.mjohnson we'll have example code available by the end of the month!

@mtfurlan as soon as I have the "it doesn't forward" detection coded in, it will just re-get the HaD token and re-send it, in short, it will fix itself instead of just signalling "broken" =)

@Daniel - thank you. Where is the best place to follow for new postings?

@Foxmjay no, I was just curious

Limor, PT I am working on the Hackaday Finals, could I DM you about your M4 and 240x240 Displays (mechanical33486@icloud.com)

@John Loeffler post here! happy to answer anything!

@happyday.mjohnson Twitter is useful! I'd follow https://twitter.com/tensorflow for big announcements, and you can follow me (https://twitter.com/dansitu) for more fine-grained stuff

@Daniel ...sigh...ok...i stay off of THE twitter and THE Facebook...but for TF/Keras....

@happyday.mjohnson we have a bunch of news, guides, projects, etc. that we post up here too : https://blog.adafruit.com/category/machine-learning/

and we're adding more things here too: https://www.adafruit.com/index.php?main_page=category&cPath=1006

@pt thank you. Your learning resources are incredible. Thank you for such an amazing company.

@happyday.mjohnson Twitter is surprisingly amazing for ML stuff, you can follow a lot of very interesting folks

one of our goals is to have something that is under $20 that does ML speech rec really good and something under $100 that does ML vision really good

thanks @happyday.mjohnson

all edge (not net connected)

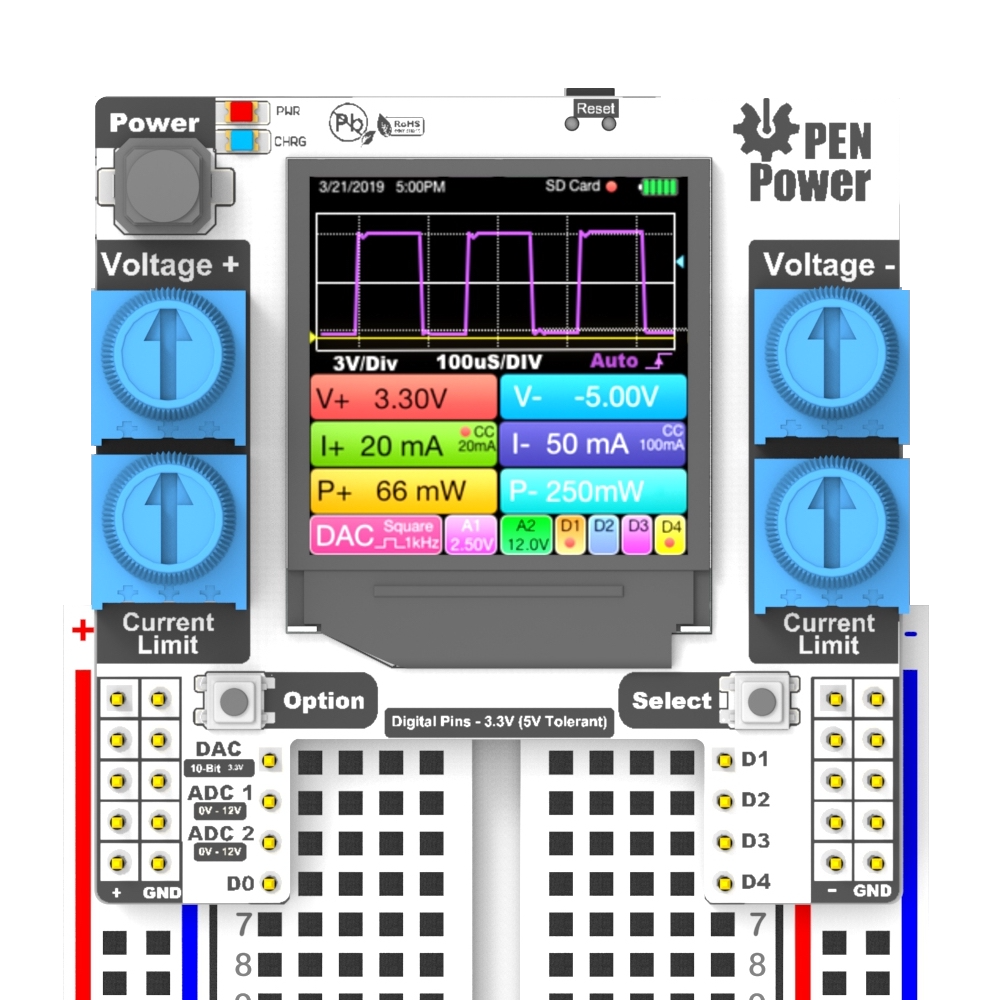

How fast can the 240x240 update? I wanted to explore this for a moonshot power supply that can be a crude oscope

@limor - you've got as much time as you want. Just let me know when you've got to get back to work

96Boards

@Adafruit Industries Have you ever done any work on a hybrid Arm cortex-A / cortex-M chipset? Something like the STM32MP1 or equivalent? Could use MCU for realtime and cortex A for heavy lifting etc..

why the hell is it called 'edge computing' btw?

@Prof. Fartsparkle the idea is that it's happening at the "edge" of the network

@John Loeffler you can send it SPI data as fast as 64MHz so you just need to update the display whenever ya want
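For a feel of what 64MHz means in frames per second, here is a small worked calculation. It assumes 16 bits per pixel (RGB565), one bit transferred per clock cycle, and no command or protocol overhead, none of which is stated in the chat, so treat the result as an upper bound rather than a measured figure.

```python
SPI_CLOCK_HZ = 64_000_000   # figure quoted in the chat
WIDTH, HEIGHT = 240, 240
BITS_PER_PIXEL = 16         # assumption: RGB565 pixel format


def max_full_frames_per_second(clock_hz, width, height, bits_per_pixel):
    """Upper bound on full-screen refreshes, ignoring all overhead."""
    bits_per_frame = width * height * bits_per_pixel
    return clock_hz / bits_per_frame


fps = max_full_frames_per_second(SPI_CLOCK_HZ, WIDTH, HEIGHT, BITS_PER_PIXEL)
print(f"{fps:.1f} full frames per second at most")
```

Redrawing only the part of the screen that changed (for example a single trace column on a crude scope) would need proportionally fewer bits per update.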

instead of in the middle

because we keep going back and forth between client-server and distributed, and we have since what, 1970?

every time we call it something else

Hi, how hard is it to work with the PIC32 series like the PIC32MX/MZ ?

remember when "thin client PCs" were all the rage

@pt did you think about FPGAs for these low-cost and low-power solutions?

For ML

in many cases, there's no network connection involved whatsoever

PIC32 is not based on ARM so it will be tougher

there are no strong optimization efforts going on that we know of

@Andres Manjarres FPGAs are tough for low cost and harder to learn/use

Do you see the need for a custom chip solution to really take advantage of "edge" Deep learning?

Have you considered the Lattice ECP series?

nope

want to keep things easy for everyone to use!

@Don Gabriel The PIC32 is a MIPS processor, and MIPS went bankrupt, so no future products coming. Better to invest your software effort in ARM.

Thx gh78731

@happyday.mjohnson there is a ton of ML-optimized hardware on the way, but even a Cortex-M can do really useful stuff today

@pt Okay, very understandable

ok! ladyada says we need to go back to engineering

thank you everyone!

keep hangin out here and posting up your questions and more!

Thanks!

so i think we're gonna go! that said, we have a show tonight too at 8pm ET

Adafruit / Google / Hackaday - thanks very much.

thank you!

Thanks for hosting us, and all the great questions!

Is anyone considering a particular project they'd like to build? I'd be happy to help figure out if it's feasible and point you at the right resources to get started!

Great links!

thanks

@limor and @pt - As always, it's a rush to have you guys on. Lots of info, lots to digest. Thanks so much for your time, and thanks to @Daniel Situnayake , @Meghna Natraj , and @Pete Warden for everything!

special thanks to google folks for being here!

Thank you

Thanks!

Thanks everyone!! Great chatting with you :D

Feel free to ping me on https://twitter.com/dansitu if you have more q's

Thanks

And I will hang out in here for a while longer :)

thanks everyone for all the good resources and updates. it took me 6 months of stalking the PyBadge to finally be able to order our makerspace some boards. They're still clearing customs but we can't wait to give them a try!

thank you @Dan Maloney and hackaday!

Thank you @Dan Maloney!!

@pt - Great Chat, thanks so much!

And thanks @pt and @limor for inviting us :D

@Daniel Situnayake I want to build a traffic sign detector (german signs) with a camera and a jetson nano. Where do I start? Getting images of signs / finished networks etc.?

hopefully we'll get them in for our Google DevFest come October 5th

Are you planning to do any examples on GANs?

Thank you for the information all and adafruit for the chat,

@max-fe

What ML libraries are you planning to use? TensorFlow, Keras, etc.. ??

Gonna take me a while to post the transcript, but it'll be here: https://hackaday.io/event/166253-machine-learning-with-microcontrollers-hack-chat

And don't forget to tune in next week for the SDR Hack Chat with Corrosive!

https://hackaday.io/event/167395-software-defined-radio-hack-chat

Software Defined Radio Hack Chat

SDR guru Corrosive joins us for the Hack Chat on Wednesday, September 18 2019 at noon PDT. Time zones got you down? Here's a handy time converter! If you've been into hobby electronics for even a short time, chances are you've got at least one software-defined radio lying around.

@Max-Felix Müller yes! I'd first of all look for datasets of signs, or a trained network you can use. Since they're pan-EU signs, I bet there's something out there. then, train the best model that you can without even thinking about the "edge" part. you might want to try transfer learning with an existing vision model

@Max-Felix Müller after that, try and shrink the model down so it fits on your device while still being accurate

what would be the recommended starting point for learning machine learning from scratch?

@cet we have some Google tutorials on GANs, e.g. https://www.tensorflow.org/beta/tutorials/generative/dcgan

@13r1

@Daniel Situnayake I heard about transfer learning and know what it's about but I have no idea how to use it. Do you have a link for a tutorial?

Thank you @Daniel Situnayake

@Max-Felix Müller here's a good place to start: https://codelabs.developers.google.com/codelabs/tensorflow-for-poets/#0

@13r1ckz I recommended a couple of books earlier in the chat:

https://www.manning.com/books/deep-learning-with-python

https://www.amazon.com/Hands-Machine-Learning-Scikit-Learn-TensorFlow/dp/1491962291

-

Hack Chat Transcript, Part 2

09/11/2019 at 20:17 • 0 comments

@Daniel thanks for the github link.

are you leveraging transfer learning in these demos?

@Tara the demos we are showing are pi 4, nordic nrf52840, and samd 51

@Duncan our speech model that can detect "yes" or "no" is ~20kb, our vision model that can detect the presence or absence of a person is ~250kb. I'm not sure

@Tara we're actually working on a book that has a chapter about setting up TF Lite for new devices. TF Lite Micro should be a lot easier to port than regular TF Lite too - http://shop.oreilly.com/product/0636920254508.do

that looks amazing for when you're far out @Evan Juras thank you

@Andres Manjarres Unfortunately no. 1) we train regular TensorFlow models (and factor in microcontroller constraints if possible - small model size, low latency) 2) convert it to a TensorFlow Lite model 3) run it on a microcontroller that we support.
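Between steps 2 and 3 there is usually one more mechanical step: many microcontrollers have no filesystem, so the converted model file is commonly compiled into the firmware as a C byte array (often produced with `xxd -i`). The sketch below mimics that in plain Python. The function name, the array name `g_model`, and the placeholder bytes are all invented for illustration; a real model would be the bytes of the converted `.tflite` file.

```python
def bytes_to_c_array(data, name, per_line=12):
    """Render bytes as C source, similar in spirit to `xxd -i`."""
    lines = []
    for i in range(0, len(data), per_line):
        chunk = data[i:i + per_line]
        lines.append("  " + ", ".join(f"0x{b:02x}" for b in chunk) + ",")
    body = "\n".join(lines)
    return (f"const unsigned char {name}[] = {{\n{body}\n}};\n"
            f"const unsigned int {name}_len = {len(data)};\n")


# Placeholder bytes standing in for a converted model file.
fake_model = bytes(range(16))
source = bytes_to_c_array(fake_model, "g_model")
print(source)
```

The generated text can be saved as a `.c` or `.cc` file and linked into the firmware, so the interpreter reads the model straight from flash.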

*I'm not sure of the exact latency, but it's pretty low!

@Duncan we'll show the yes no demo on video right now here too ---

Thanks @pt and @Pete Warden !! I'll definitely check it out.

@Tara drop an email to petewarden@google.com and we might be able to send you a draft copy

Thanks @Matteo Borri :D

@Meghna Natraj Okay, thanks

@Dan Maloney yes! we would have to take ~100+ photos of me and pt and train a new model to detect us

Limor and PT any Cortex M7 Boards in the works?

Question: Do you plan to build a Deep Learning demo also?

I use google colab for deep learning/keras/tensorflow , mu for CP...what is/will be the ideal tool chain for this experience?

@Pete Warden I'd be interested in that for a high school class...

we haven't written a guide on that yet - hopefully soon, we're learning a lot every weekend!

@limor Cool, thanks. Thought it would be something like that.

@Daniel Situnayake 20kB is impressive! Nice work.

@jcradford please drop me an email too

Thanks @Daniel Situnayake

@limor do some models made for desktop/server run with tensorflow lite on the SAMD51? Like the more popular image recognition networks like MobileNet or tiny-yolo?

Very cool. Also, Matteo, you misremembered what we generated. It was not a learning capable program, it was a fixed program. We used genetic programming and simulation to develop task specific programs.

I was planning on scraping family photos from Google Photos to train a model for a security system I'm working on

ok! all the demos worked, yay

@Dick Brooks if you want to see the absolute basics of training a deep learning model and deploying it to device, check out this sample: http://github.com/tensorflow/tensorflow/blob/master/tensorflow/lite/experimental/micro/examples/hello_world

one tip for folks who want to play around, check out https://runwayml.com

@pt Is the demo code you are showing for the pi4, Nordic nrf52840, and samd 51 available?

@tara yep!

Everyone, if you miss something, rest assured that there will be a full transcript posted after the chat, as well as links to the demos and livestream.

@Daniel - thank you for the github on learning tensorflow...besides Adafruit learning (most awesome) where else should we go to explore?

@Evan Juras yay i used your awesome guide to make the demo im running now. just changed a couple things and using pygame instead of opencv so it works with framebuffer devices (no X11)

i gotta learn TF

@John Loeffler "In the works" is subjective but signs point to yes ;)

Did you try to use optimizations like quantization, to reduce the size and improve the power consumption or something like that?
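For anyone unfamiliar with the term, quantization here means storing values as small integers plus a scale factor instead of 32-bit floats, which cuts storage to roughly a quarter for int8. The sketch below shows a simplified affine int8 scheme in plain Python. It illustrates the idea only and is not how TensorFlow Lite implements it; all function names and the sample weights are made up.

```python
def quantize_params(values, qmin=-128, qmax=127):
    """Pick a scale and zero point mapping the value range onto int8."""
    lo, hi = min(min(values), 0.0), max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # avoid a zero scale
    zero_point = int(round(qmin - lo / scale))
    return scale, zero_point


def quantize(values, scale, zero_point, qmin=-128, qmax=127):
    """Map floats to clamped int8 codes."""
    return [max(qmin, min(qmax, int(round(v / scale)) + zero_point))
            for v in values]


def dequantize(qvalues, scale, zero_point):
    """Map int8 codes back to approximate floats."""
    return [(q - zero_point) * scale for q in qvalues]


weights = [-1.0, -0.25, 0.0, 0.5, 1.0]   # hypothetical float32 weights
scale, zp = quantize_params(weights)
q = quantize(weights, scale, zp)
restored = dequantize(q, scale, zp)
print(q)
print(restored)
```

Each restored weight differs from the original by at most about one quantization step, which is the accuracy cost paid for the smaller model.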

@happyday.mjohnson it's not quite out yet, but @Pete Warden and I are writing this book: http://shop.oreilly.com/product/0636920254508.do

@limor that's so great to hear! Thanks!! Glad it was helpful :D

however, if you're looking to learn tensorflow generally, I'd recommend one of these courses:

the goal is to be able to do some type of ML in 5 mins or less from taking it out of the box

Limor and PT any Cortex M7 Boards in the works?

@Pete Warden YES!! Thank you

@John Loeffler I have an informed answer above ;)

@John Loeffler not yet :)

@Daniel, i'm sorry ...didn't get info on tensorflow learning courses... (I'm currently trying CS230/stanford...)

@Daniel, i'm sorry ...didn't get info on tensorflow learning courses... (I'm currently trying CS230/stanford...)![]() <- eats foot

<- eats foot![]() from youtube "Ronald Mourant

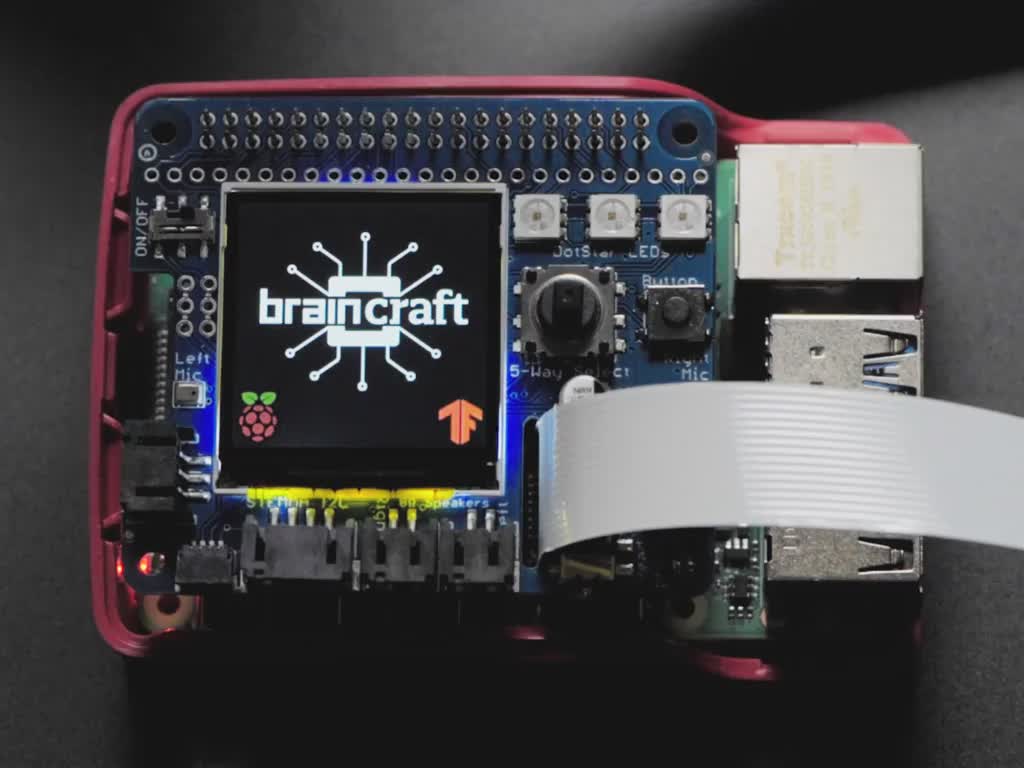

from youtube "Ronald Mourant: Will the BrainCraft Hat be available as a product? When? Price?"

from youtube "chuck: Do you do training on microcontrollers for something adaptive?"

@limor @pt do some models made for desktop/server run with tensorflow lite on the SAMD51? Like the more popular image recognition networks like mobilenet or tiny-yolo?

Here are some general courses for learning TensorFlow:

- https://www.udacity.com/course/intro-to-tensorflow-for-deep-learning--ud187
- https://www.coursera.org/specializations/tensorflow-in-practice
- https://www.deeplearning.ai/tensorflow-in-practice/

"GJ E: Q: how far are you thinking you will go on making these projects? in the sense of only making identifiers, training models or .... I would like to know what to look forward to"

Not sure if you answered: Did you have a model that can tell the difference between two people?

rather than just object detection

These were all developed by the TensorFlow team at Google and contain roughly the same content

@Daniel - yay - thank you for the course info.

Vid and audio demos are fine but I imagine the models are expensive to train. Are there plans to create demos that feature just raw data? Readings from multiple sensors and so on.

"rohan joshi: what are upcoming projects?"

I also love this book; it's by the guy who created Keras, TF's high-level API: https://www.manning.com/books/deep-learning-with-python

How much do you need to know about DL/NN theory in order to use TF efficiently? Or start, at least. I find that deciding what hidden layers and how many of them should go into your NN isnt very clear.

@Phillip Scruggs yes we answered, see above or check out @Evan Juras 's guide

@Phillip Scruggs - @limor answered yes when I asked. She said it would take ~ 100 images to train each person.

great place to start if you're learning! I would emphasize that you DO NOT need to go and learn a bunch of math or do CS-style courses to get started with deep learning

@Daniel Sit

@Daniel - yah the deep learning w/ python is a most excellent book. I also bought the audible so I can sit there look at the pictures and be read to....i love that he doesn't get too much in the math and has an intuitive way about him.

@Daniel Situnayake AH! exactly what i've been doing :s

"Speak & ML", lol

some upcoming projects...

1) speak and spell (speak and ML) kid's toy that sees things and says them, kids play scavenger hunt with it.

2) emoji cam, all it does is sit on your desk and change your mood status based on your smile, etc.

3) video game that changes characters based on what you show it, want to be a cat? show it a cat.

@happyday.mjohnson [What resources do you use for training?] Using colab or even your local desktop (only CPU) just works fine. It would take longer -- but you don't need 'fancy' hardware to get started. :) Especially with microcontroller constraints, model sizes and training times are much smaller. To get started, you can train models for a day, try to get them to run on an MCU -- done! I'll share some resources shortly..

re: braincraft hat - its still under revision, we want to build a few more projects to make sure we didnt miss anything, check out the signup page here - as soon as we have it ready for release we'll email you!

https://www.adafruit.com/product/4374

Coming Soon! Machine Learning BrainCraft HAT for Raspberry Pi 4 (ID: 4374). Sign up to be notified when we have these in the store!

The idea behind the BrainCraft board (stand-alone, and Pi “hat”) is that you’d be able to “craft brains” for Machine Learning on the EDGE, with Microcontrollers & Microcomputers.

@pt, @limor and @Pete Warden is there a book/resource to start learning about Tiny ML? I want to do my final project based on tiny ml.

@Sébastien Vézina it's super fun to learn the math stuff along the way, but I would definitely recommend just going through a couple of the intro books. The first one I recommended is great:

https://www.manning.com/books/deep-learning-with-python

Also, this one is very good (but I haven't read it myself yet)

https://www.amazon.com/Hands-Machine-Learning-Scikit-Learn-TensorFlow/dp/1491962291

I have made a simple perceptron implementation some time ago on an atmega328, for 1kb challenge and for fun

https://hackaday.io/project/18769-avr-perceptron-initiation-to-neural-networks

Seeing now that we can do speech and image detection using TF on microcontrollers makes me happy. looking forward to seeing more!

we are also making it so all the models show up as files on the "drive" so you can just swap them out

@Meghna - thanks. re: colab w/ GPU has been a terrific experience (so far). i also like how easy it is to put datasets on google drive and read in. Also, github integration is nice.

from discord "unawoo, Today at 3:29 PM: Hi, anyone know of an audio database for tensorflow? i want a microphone in the yard to tell me if someone woofed or meowed"

For Tiny ML specifically, @Pete Warden and I are working on this book:

http://shop.oreilly.com/product/0636920254508.do

any boards planned with MCUs that include tensor accelerators like the MAIX?

@Daniel - so hard to wait for 6/2020....

oh wow, it shouldn't be June 2020!

it should be Jan 2020 - I will have them fix the date :)

@Daniel Situnayake Yep I'm on the 3rd chapter of that book but felt I needed to backtrack a bit after seeing a few mentions of linear algebra - i'll keep reading!

what about preprocessing of sensor data? Are there "best practices" as there are for electronics signal processing?

@Foxmjay cool, do you know about more ATMega ML Projects?

it's already mostly written, and you can sign up to O'Reilly's plan and read the currently finished chapters

On a Pi 4, how long does it take the inference engine to recognize an image? Assume the image is an array of 64 bytes.

@Daniel - thanks for bringing that up about the book. i'll try..

@limor @pt have you done any work with the STM32MP1 or any other Cortex-A / Cortex-M hybrid chipsets?

Oh hey the IRC bridge is down. How useful.

What kind of demo usecases do you use while working on tensorflow?

@Peabody1929 we are going to show that now on vid!

I had an idea to train a model against all the dogs that bark all day in my neighborhood. See if I can identify which dog is barking just from its "voice". Not sure what I do with that information once I have it though...

Hack Chat Transcript, Part 1

09/11/2019 at 20:15 • 0 comments

OK, big crowd today, let's get started. Welcome to the Hack Chat everyone, thanks for coming along for a tour of what's possible with machine learning and microcontrollers. We've got @pt and @limor from Adafruit, along with @Meghna Natraj, @Daniel Situnayake, and Pete Warden from the Google TensorFlow team.

We've also got a livestream for demos -

Also, we hear that a class of sixth-graders is tuned in. Let's make sure we make them feel welcome - we always support STEM!

heyyyy everybooooody

Hey everyone!! I am so jealous of that sixth-grade class; they have an awesome teacher

Can everyone on the Adafruit and Google side just introduce themselves briefly?

hi everyone itsa me- ladyada

Hi! This is Pete from the TensorFlow team at Google

check out the youtube video for the live demoooos

and more chitchat :)

Hi everyone! I'm Meghna from the Google Tensorflow Lite team. Excited to be a part of this event! :)

i'm pt, work with limor at adafruit, and founded hackaday 15 years ago which is a coincidence

lets drop some LINKS

Hey @Pete Warden and google folks ! When is TensorFlow 2.0 officially releasing?? :D

while y'all think of the questions and ya wanna ask

A happy coincidence ;-)

Hey!! I'm Dan, and I work on the TensorFlow Lite team at Google. TF Lite is a set of tools for deploying machine learning inference to devices, from mobile phones all the way down to microcontrollers. Before I was at Google, I worked on an insect farming startup called Tiny Farms :)

Hello everyone... sorry for the late appearance...

Hey Dan, so it's a Bug's Life?

Can we get a brief overview of ML on uCs for those of us unfamiliar with the space? What problems is it solving, and on generic microcontrollers or special parts?

So when does it start?

@pt Which neural network do you use in this process?

OK so, if you're not familiar with ML on MCUs, I can share a few thoughts!

Can we make our own models (e.g.: using an LSTM for timeseries accelerometer readings)?

@pt What problems can this Tiny ML solve?

Who invented the term 'confusion matrix'?

@Daniel Situnayake What is the size of a typical TensorFlow Lite network (in kB) and what is the inference latency?

@andres for raspberry pi we're using the mobilenet v2 models that are optimized for mobile device use - its really a ton of work they put into it to optimize them and we wouldn't be able to do a better job!

Re: TensorFlow 2.0 - I honestly don't know, but we did just publish a release candidate I believe, so it won't be long

@Christopher Bero while @Daniel Situnayake is answering, here are 2 examples, one for the pi 4 and one for a samd https://learn.adafruit.com/running-tensorflow-lite-on-the-raspberry-pi-4?view=all & https://learn.adafruit.com/tensorflow-lite-for-microcontrollers-kit-quickstart?view=all

i'm interested in fall detection for my (very elderly) parents.

Okey thanks!!!

Don't forget to tune into the livestream everyone:

Thanks!

Re: fall detection - We're working on an accelerometer-based example for gesture recognition, and hope to release that soon, it might be a good starting point

@happyday.mjohnson I'm interested in home electricity consumption patterns for older people too, ditto water usage. (eg nobody used the toilet today)

thank you. i'm interested also in the preprocessing. For example, one bunch-oh-data for human activity w/ accel used 4th order Butterworth Filter...whatever that means...
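On the "whatever that means" part: a 4th-order Butterworth low-pass filter is a smoothing step that keeps slow changes (body movement) and suppresses fast ones (vibration, sensor noise) before the data reaches a model. Below is a rough standard-library sketch built from two second-order sections using the widely used "audio EQ cookbook" biquad formulas. The 50 Hz sample rate and 5 Hz cutoff are assumptions picked for illustration, not values from the dataset mentioned, and in practice most people would call a library such as SciPy instead.

```python
import math


def butterworth4_lowpass(cutoff_hz, sample_rate_hz):
    """Return two biquad sections (b, a) forming a 4th-order Butterworth low-pass."""
    w0 = 2.0 * math.pi * cutoff_hz / sample_rate_hz
    cos_w0, sin_w0 = math.cos(w0), math.sin(w0)
    sections = []
    for k in (0, 1):
        # Butterworth pole pairs for order 4 give Q of about 0.541 and 1.307.
        q = 1.0 / (2.0 * math.cos((2 * k + 1) * math.pi / 8.0))
        alpha = sin_w0 / (2.0 * q)
        a0 = 1.0 + alpha
        b = [(1.0 - cos_w0) / 2.0 / a0, (1.0 - cos_w0) / a0, (1.0 - cos_w0) / 2.0 / a0]
        a = [-2.0 * cos_w0 / a0, (1.0 - alpha) / a0]
        sections.append((b, a))
    return sections


def apply_sections(samples, sections):
    """Run the samples through each second-order section in turn."""
    out = list(samples)
    for b, a in sections:
        x1 = x2 = y1 = y2 = 0.0
        filtered = []
        for x in out:
            y = b[0] * x + b[1] * x1 + b[2] * x2 - a[0] * y1 - a[1] * y2
            x2, x1 = x1, x
            y2, y1 = y1, y
            filtered.append(y)
        out = filtered
    return out


# Assumed setup: accelerometer sampled at 50 Hz, keep motion below 5 Hz.
SAMPLE_RATE_HZ = 50.0
sections = butterworth4_lowpass(5.0, SAMPLE_RATE_HZ)


def sine(freq_hz, count=500):
    return [math.sin(2.0 * math.pi * freq_hz * n / SAMPLE_RATE_HZ) for n in range(count)]


slow = apply_sections(sine(1.0), sections)   # slow movement passes nearly unchanged
fast = apply_sections(sine(20.0), sections)  # fast vibration is strongly attenuated
print(max(abs(v) for v in slow[-100:]), max(abs(v) for v in fast[-100:]))
```

Because the filter keeps only a few previous samples as state, the same arithmetic is cheap enough to run on a microcontroller sample by sample.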

@Arthur Lorencini Bergamaschi for us, we can do non-net connected voice recognition for microcontrollers, for example - we made a low cost example that can control a servo to move up or down, based on voice only, good start for folks with mobility issues

@pt and @limor thanks for answering!

@Pete Warden Accelerometer only detection would be impressive. I've found that a gyro adds a lot of valuable signal with that type of task.

OK, so for a brief overview of ML on microcontrollers, I guess we should introduce ML first?

@Arthur Lorencini Bergamaschi using TFlite means you dont have to do a lot of the optimizations required for heuristic-based pattern recognition

so there is preprocessing, training, making the model...maintaining...all these steps. Not sure what goes on cloud what goes in edge. e.g.: I plop a accel/gyro on my parents....somehow get the readings...then do analysis in-dah-cloud? Then "compile" for edge?
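On the "compile for edge" step: in the TensorFlow Lite for Microcontrollers examples, the trained and converted model file typically ends up in firmware as a plain C byte array, commonly generated with `xxd -i`. The sketch below shows the same idea in Python. The function name `bytes_to_c_array`, the array name `g_model`, and the placeholder bytes are all hypothetical; a real model would be the contents of a converted `.tflite` file.

```python
def bytes_to_c_array(data, name="g_model", per_line=12):
    """Render raw bytes as C source defining a byte array and its length."""
    lines = []
    for i in range(0, len(data), per_line):
        chunk = data[i:i + per_line]
        lines.append("  " + ", ".join(f"0x{b:02x}" for b in chunk) + ",")
    body = "\n".join(lines)
    return (
        f"const unsigned char {name}[] = {{\n{body}\n}};\n"
        f"const unsigned int {name}_len = {len(data)};\n"
    )


# Placeholder bytes standing in for a real model file (an assumption).
fake_model = bytes(range(20))
c_source = bytes_to_c_array(fake_model)
print(c_source)
```

So data collection, preprocessing experiments, and training happen on a desktop or in the cloud, and only the finished model bytes plus the inference code are built into the device firmware.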

I'm wondering, how much further development do Google see for Tensorflow.js? MCUs can use that easily with a web server.

ML is the idea that you can "train" a piece of software to output predictions based on data. You do this by feeding it data, along with the output you'd like it to produce.

@Duncan - definitely, we're trying to keep it very simple so that you don't need an IMU, but so far using just accelerometer data seems to be effective for our use case

instead you can take advantage of the optimizations that ARM & google have done, and you dont need to re-invent the matching algorithms - you just need to turn your model

what about keras? It seems far easier to me than TF?

2: Once your model is trained, you can feed in new data that it hasn't seen before, and it will output predictions that are hopefully somewhat accurate!

@happyday.mjohnson we love Keras, and TF 2.0 is based around it as an API

Can you run Keras on micro/circuitpython?

yah - keras on micro/cp?

Question for LadyAda: What software and hardware was used to build the demo and how many people hours did it take to reach a stable state?

right now the code for TF Lite on microcontrollers is super streamlined

3: This second part, making predictions, is called inference. When we talk about ML on microcontrollers, we're generally talking about running the second part on microcontrollers, not the training part. This is because training takes a lot of computation, and only needs to happen once, so it's better to do it on a more powerful device
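The split between training and inference described above can be illustrated with a toy example. This is a single perceptron in plain Python, not TensorFlow, and the "accelerometer" features and labels are invented for the illustration. `train` loops over labelled examples many times and adjusts the weights; `predict` is the inference step and needs only a few multiply-adds, which is the part that would run on the microcontroller.

```python
def predict(weights, bias, features):
    """Inference: a weighted sum and a threshold, cheap enough for a tiny device."""
    total = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if total > 0 else 0


def train(samples, labels, epochs=50, learning_rate=0.1):
    """Training: repeatedly nudge the weights toward the labelled answers."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for features, target in zip(samples, labels):
            error = target - predict(weights, bias, features)
            weights = [w + learning_rate * error * x for w, x in zip(weights, features)]
            bias += learning_rate * error
    return weights, bias


# Invented data: [mean, peak] acceleration; 0 = still, 1 = moving.
samples = [[0.1, 0.2], [0.2, 0.1], [0.15, 0.3], [0.9, 1.2], [1.1, 0.8], [0.8, 1.0]]
labels = [0, 0, 0, 1, 1, 1]

# Done once, on a desktop or in the cloud.
weights, bias = train(samples, labels)

# Done on the device, on data the model has not seen before.
print(predict(weights, bias, [0.12, 0.15]), predict(weights, bias, [1.0, 1.1]))
```

Only `weights`, `bias`, and the `predict` logic need to live on the device; the training data and the training loop never leave the more powerful machine.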

Ah, OK that makes sense.

You guys think TPUs will make continuous training on the edge possible?

@happyday.mjohnson @Sébastien Vézina we're focused on running models that have already been trained on MCUs, and Keras is all about training, so Keras isn't a great fit for our embedded use cases

4: So, why would we want to run inference on microcontrollers?! "Traditionally", meaning the last few years (since this stuff is all pretty new), ML inference has been done on back-end servers, which are big and powerful and can run models and make predictions really fast

@Dick Brooks to get the micro speech demos working took maybe 2 weekends of work, about 20 hours total - but it was in a much less stable state. now the code is nearly ready for release so ya dont have to relearn all the stuff i did. also, i wrote a guide on what i learned on training new speech models

@daniel that makes sense. I hope it is as easy as going from making model on desktop and pushing a "compile" run/restart to go to CP?

@Daniel Situnayake - so how compact can the trained models be? In terms of storage, processing power needed, etc.

(chuck from youtube asks) "Are you limiting yourself to only neural networks? ML methods based on decision trees like Haar Classifiers might be more appropriate for microcontrollers"

5: But this means that if you want to make a prediction on some data, you have to send that data to your server. This involves an internet connection, and has big implications around privacy, security, and latency. It also requires a lot of power!

from discord "can I use my Google AIY Vision setup for this?"

Hello!

please lemme know when there is a tutorial/stuff on accell/gyro human activity recognition....

we did some stuff with a parallax propeller for this, but it was in like 2009. GA generating a learning capable proggie

or perhaps human activity via processing videos (like when a "smart camera" is watching my mother and it notifies me she fell).

Did you make the training phase in the microcontroller?

Re: Neural-networks only? We're fans of other types of ML methods (this paper from Arm on using Bonsai Trees is great for example https://www.sysml.cc/doc/2019/107.pdf ) but for now we have our hands full with NN approaches, so that's what we're focused on

6: If we can run inference on the device that captures the data, we avoid all these issues! If the data never leaves the device, it doesn't need a network connection, there's no potential for privacy violations, it's not going to get hacked, and there's no need to waste energy sending stuff over a network

There is a scroll bar over to the right - it only appears when you're over it![]() its hard for a computer to wreck a nice beach. plus, auto car wreck is sum thymes a problem

its hard for a computer to wreck a nice beach. plus, auto car wreck is sum thymes a problem![]() for chuck's questions, we're using NN here because it lets us take advantage of the huge resources available with tensorflow! you can do tests on cpython to tune things and them deploy to a smaller device

for chuck's questions, we're using NN here because it lets us take advantage of the huge resources available with tensorflow! you can do tests on cpython to tune things and them deploy to a smaller device![]() @happyday.mjohnson we can show people detection now on vid... it's just a pi 4 too...

@happyday.mjohnson we can show people detection now on vid... it's just a pi 4 too... ![]() 7: But on tiny devices like microcontrollers, there's not a lot of memory or CPU compared to a big back-end server, so we have to train tiny models that fit comfortably in that environment

7: But on tiny devices like microcontrollers, there's not a lot of memory or CPU compared to a big back-end server, so we have to train tiny models that fit comfortably in that environment![]() have you tried tiny-yolov3 yet on the Pi 4?

have you tried tiny-yolov3 yet on the Pi 4?![]() @pt i saw image detection (cat, parrot..) but not movement detection (ooh - looky - the parrot fell off it's perch and is rolling around the floor...).

@pt i saw image detection (cat, parrot..) but not movement detection (ooh - looky - the parrot fell off it's perch and is rolling around the floor...).![]() 8: Despite the need for tiny models, it's possible to do some pretty amazing stuff with all types of sensor input: think image, audio, and time-series data captured from all sorts of different places

8: Despite the need for tiny models, it's possible to do some pretty amazing stuff with all types of sensor input: think image, audio, and time-series data captured from all sorts of different places![]() Besides the great Adafruit resources where can you find other available micro-ML models?

Besides the great Adafruit resources where can you find other available micro-ML models?![]() 9: Hopefully that's a useful intro!! I will now stop going on and on and answer some questions :)

9: Hopefully that's a useful intro!! I will now stop going on and on and answer some questions :)![]() @happyday.mjohnson check what Bristol university are doing re: fall detection https://www.irc-sphere.ac.uk/100-homes-studyhttps://www.irc-sphere.ac.uk/100-homes-study

@happyday.mjohnson check what Bristol university are doing re: fall detection https://www.irc-sphere.ac.uk/100-homes-studyhttps://www.irc-sphere.ac.uk/100-homes-study![]() @daniel i think of those possibilities....i just don't understand the preprocessing -> training -> model building -> compiling into CP...

@daniel i think of those possibilities....i just don't understand the preprocessing -> training -> model building -> compiling into CP...

@Daniel Situnayake Awesome, thank you!

@happyday.mjohnson yah, that is possible, could look for parrot and then look for movement
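The "look for parrot, then look for movement" idea could be sketched roughly as below, assuming an object detector has already returned a bounding box for the parrot. The detector itself is not shown. The names `motion_score` and `is_moving`, the box layout, and the threshold are all hypothetical choices for illustration, and simple frame differencing is swapped in here as one possible way to measure movement, not something taken from the chat.

```python
from typing import List, Tuple

Frame = List[List[int]]          # grayscale image as rows of 0-255 pixel values
Box = Tuple[int, int, int, int]  # (top, left, bottom, right), bottom/right exclusive


def motion_score(prev: Frame, curr: Frame, box: Box) -> float:
    """Mean absolute pixel difference between two frames inside the box."""
    top, left, bottom, right = box
    total = 0
    count = 0
    for y in range(top, bottom):
        for x in range(left, right):
            total += abs(curr[y][x] - prev[y][x])
            count += 1
    return total / count if count else 0.0


def is_moving(prev: Frame, curr: Frame, box: Box, threshold: float = 10.0) -> bool:
    """Flag movement when the mean difference exceeds an assumed threshold."""
    return motion_score(prev, curr, box) > threshold


# Hypothetical 4x4 frames; in practice the box would come from the detector.
prev_frame = [[0] * 4 for _ in range(4)]
curr_frame = [[0] * 4 for _ in range(4)]
curr_frame[1][1] = 200
curr_frame[2][2] = 200
parrot_box = (1, 1, 3, 3)

print(motion_score(prev_frame, curr_frame, parrot_box))
print(is_moving(prev_frame, curr_frame, parrot_box))
```

Restricting the comparison to the detected box keeps the per-frame work small, which matters on a Pi or anything smaller. A real setup would also need to handle the box itself moving between frames.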

yes the google AIY vision uses the movidius accelerator and a pi zero - totally will work with tensorflow lite. we wanted to experiment with non-accelerated raspi 4's cause they're available, low cost, and we think that these SBC will only get faster!

@somenice we're busy adding examples to the TensorFlow Lite repo, you can see them here at http://github.com/tensorflow/tensorflow/blob/master/tensorflow/lite/experimental/micro/examples/

@PaulG - thank you.

Is it possible to train for particular people? IOW, differentiate between pt and limor when you're in frame together?

@Daniel Situnayake @Pete Warden How tiny are the models in kB? What is the inference latency on a SAMD level mcu for something like gesture recognition?

2 Questions:

1. Is this only targeted for Cortex M boards?

2. Will there be any additional guidance on setting up MCUs that are not already available/vetted on the TFLite repo or in the book? A coworker and I set up TFLite for Azure Sphere and we got stuck on changes needed for the linker. If we didn't have the ability to ping a guy on the Sphere team we wouldn't have made progress. I would love guidance on trying this on different chipsets.

i.e., not just "person"

we'll soon have models and training scripts for speech hotword detection, image classification, and gestures captured with accelerometer data

Hey everyone - hope you don't mind if I make a shameless plug while we're here! If you're interested in running TensorFlow's Object Detection API on the Raspberry Pi to detect, identify, and locate objects from a live Picamera or webcam stream, I've made a video/written guide that gives step-by-step instructions on how to set it up. It's very useful for creating a portable, inexpensive "Smart Camera" that can detect whatever you want it to. Check it out here:

Lutetium
