The Instrument

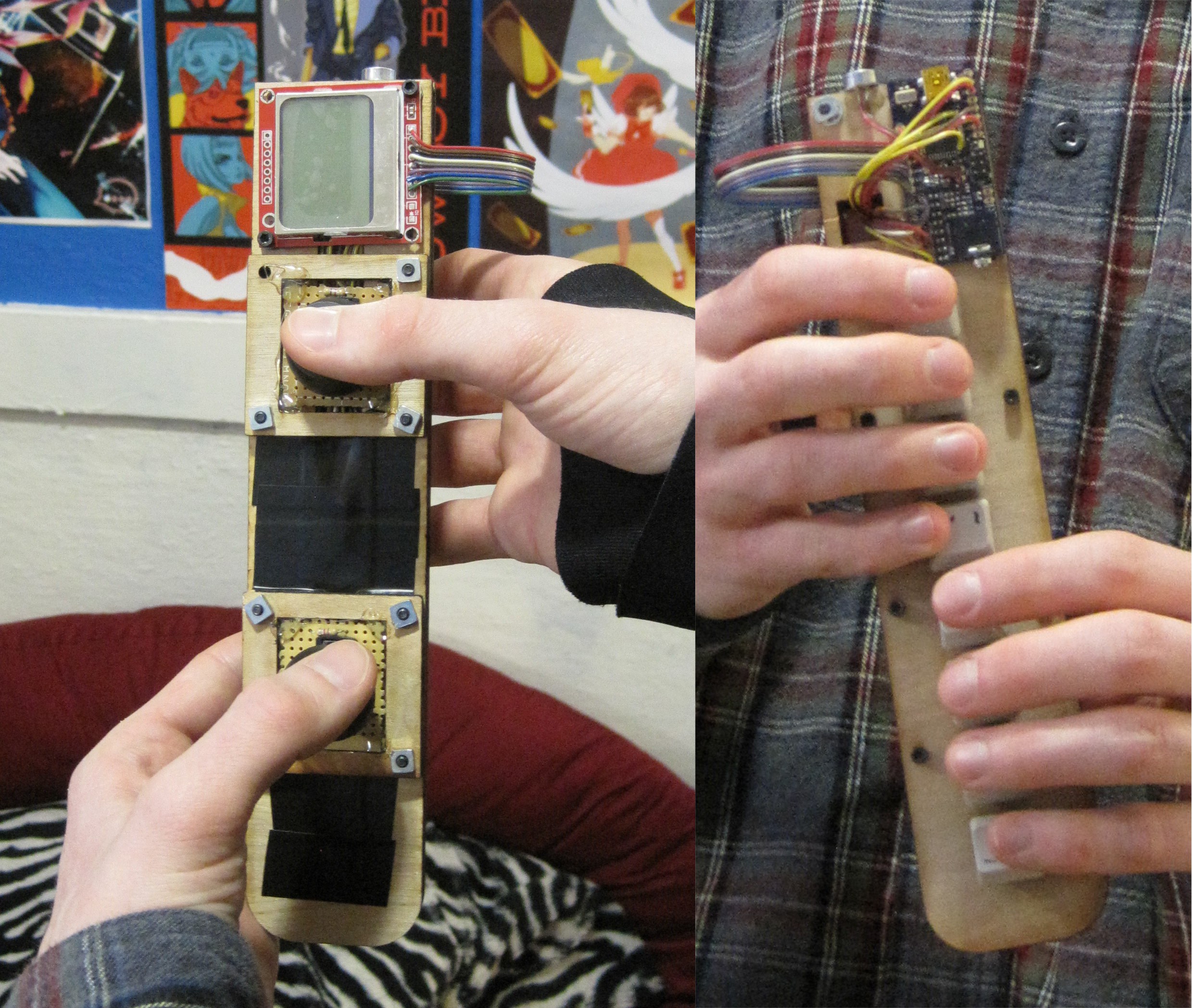

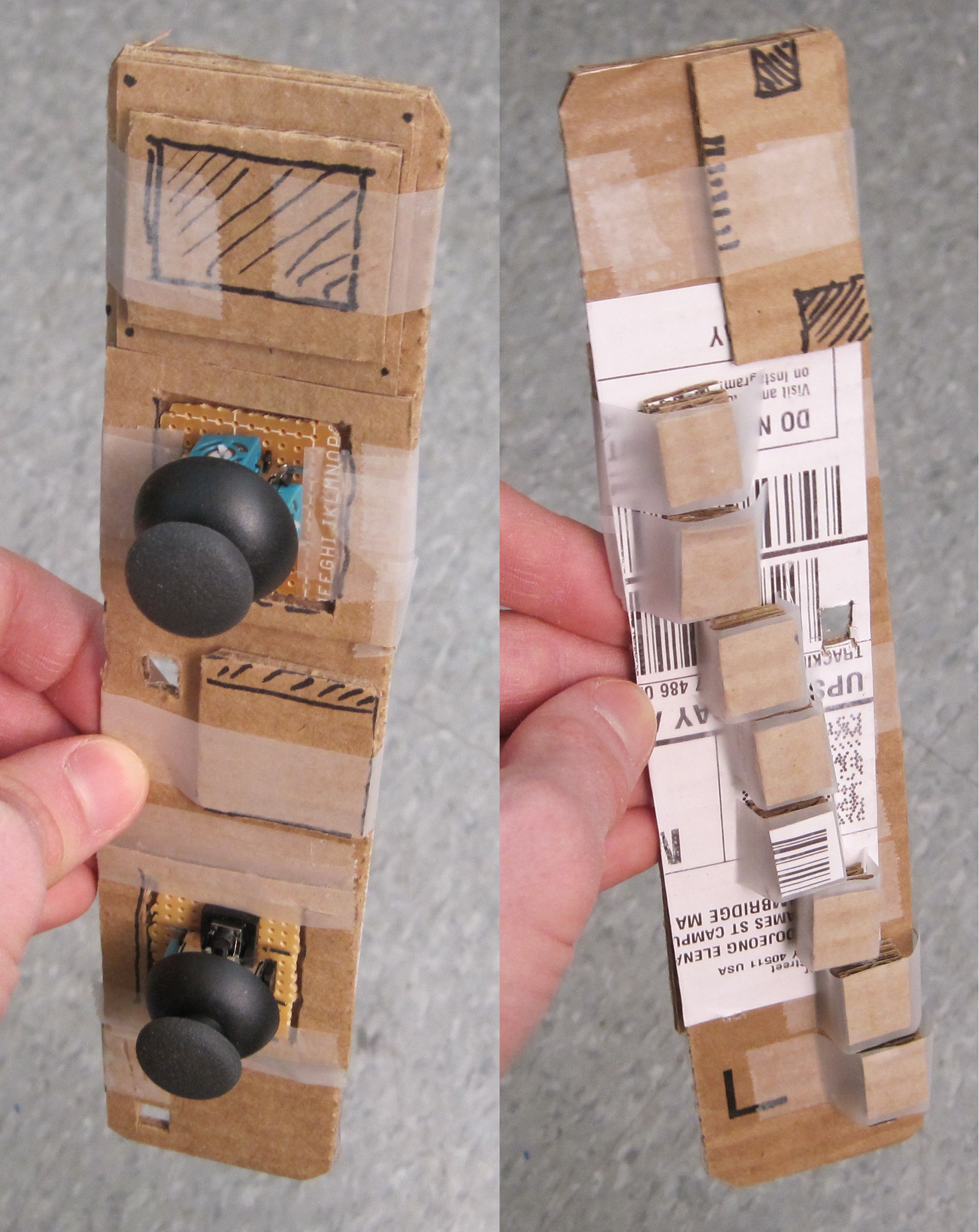

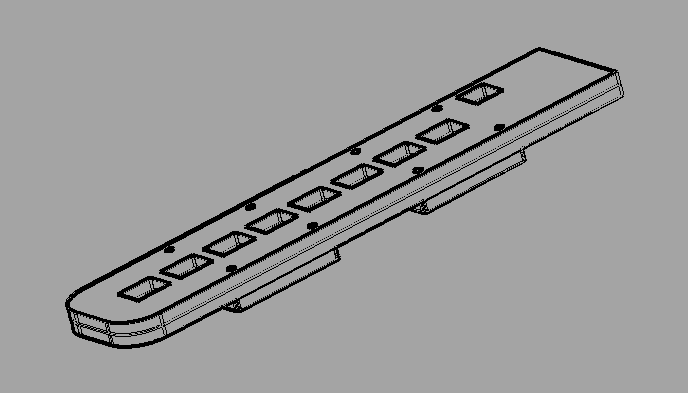

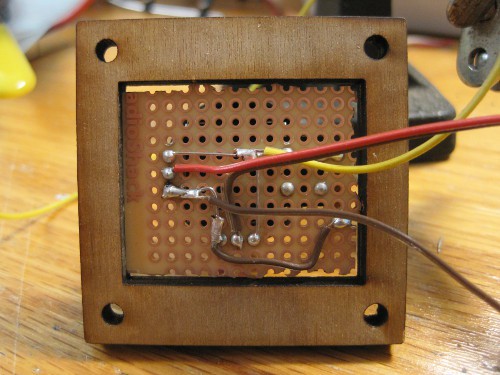

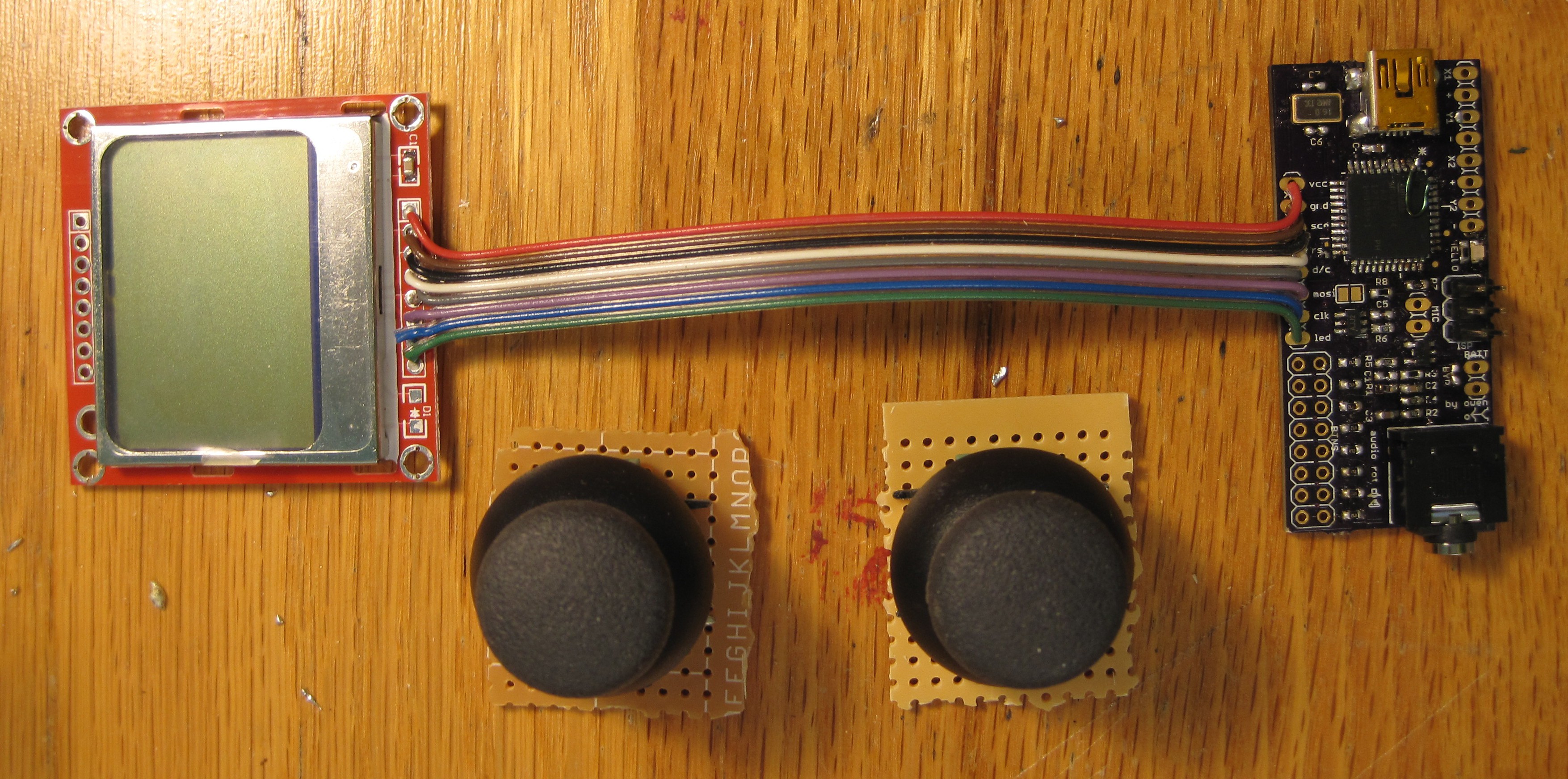

The interface is designed to reduce the time between having an idea and hearing the result. For example, the chorded keyboard lets one hand write code while the other adjusts values with the knobs.

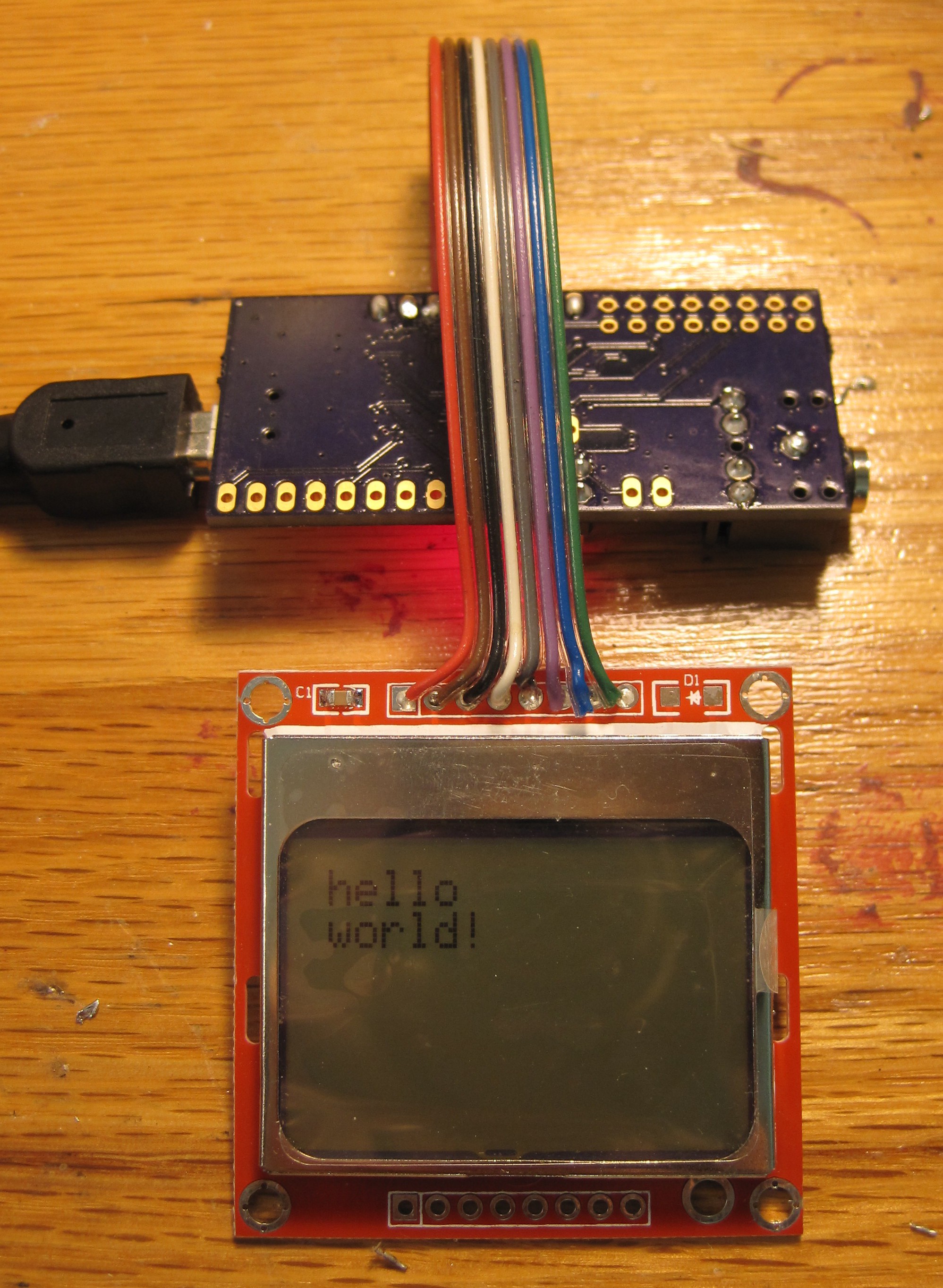

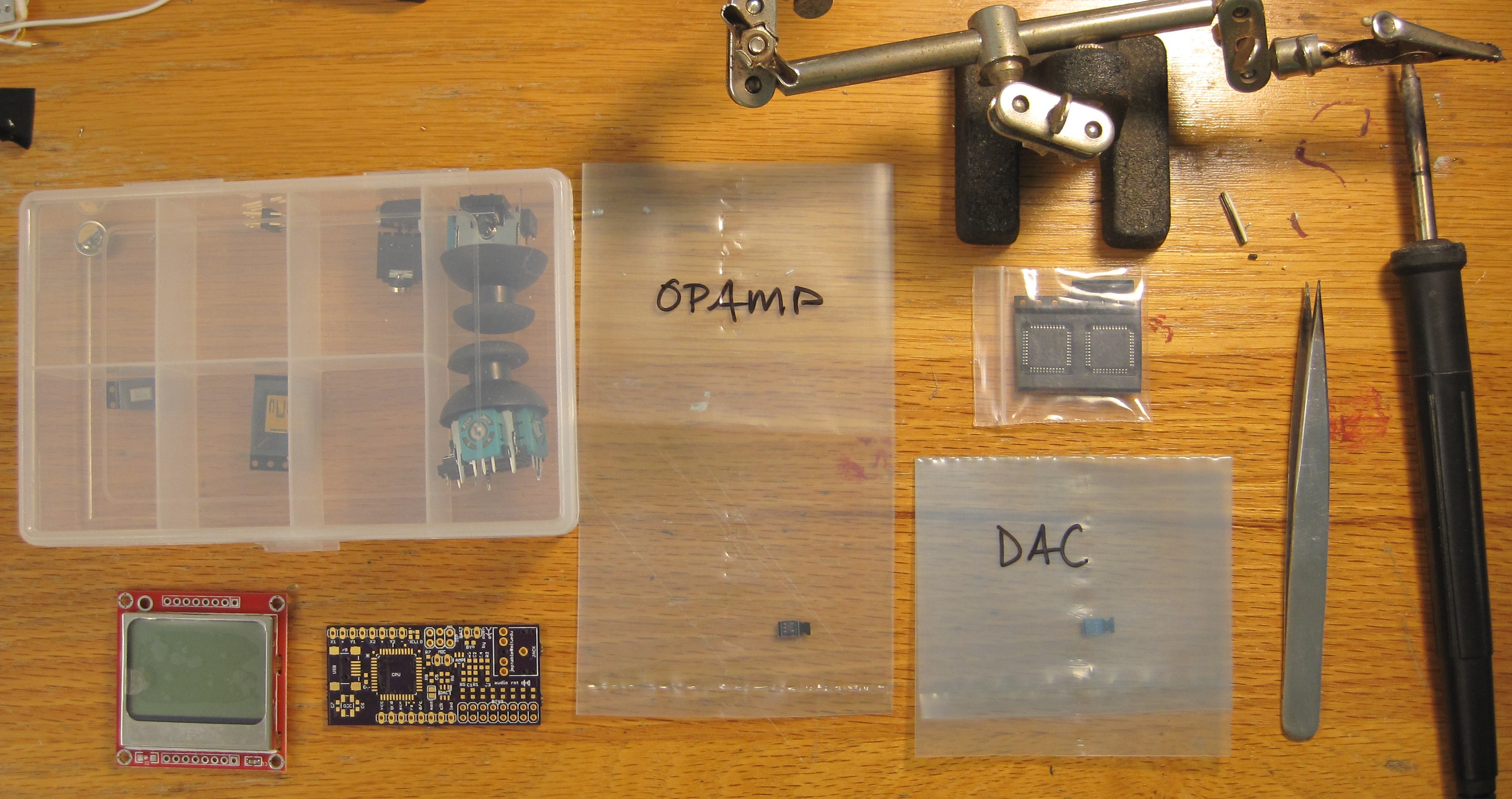

The Synthesizer

Designed to play, mix, and modify audio samples on the fly.

The Language

Construct a graph of nodes that modify sound as it flows through them. Inspired by existing environments I've had experience with, such as Max/MSP and SunVox. The language itself is an extremely minimal variant of Forth designed to operate efficiently on queues. It has a steep learning curve, but is very fast to write once understood.
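To make the "graph of nodes" idea concrete, here is a minimal sketch in Python. It is not the instrument's own Forth-like language, whose syntax isn't shown here. The names `gain`, `clip`, `chain`, and `mix` are hypothetical and chosen only for illustration, assuming each node is a function from a block of samples to a block of samples.

```python
from typing import Callable, List

Block = List[float]              # a short run of audio samples
Node = Callable[[Block], Block]  # a node maps one block to another


def gain(amount: float) -> Node:
    """Node that scales every sample by a constant factor."""
    return lambda block: [s * amount for s in block]


def clip(limit: float) -> Node:
    """Node that hard-limits samples to the range [-limit, limit]."""
    return lambda block: [max(-limit, min(limit, s)) for s in block]


def chain(*nodes: Node) -> Node:
    """Connect nodes in series so sound flows through them in order."""
    def run(block: Block) -> Block:
        for node in nodes:
            block = node(block)
        return block
    return run


def mix(*sources: Block) -> Block:
    """Sum several equal-length blocks sample by sample."""
    return [sum(samples) for samples in zip(*sources)]


# Two tiny "samples" are mixed, boosted, then limited.
patch = chain(gain(1.5), clip(1.0))
out = patch(mix([0.25, 0.5, -0.25], [0.25, 0.25, -0.5]))
print(out)
```

A real-time version would process blocks continuously from an audio callback rather than a fixed list, but the shape is the same: small nodes, wired together, each transforming the sound that passes through.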

Owen Trueblood

The Big One

recursinging

Miroslav Zuzelka

John Wetzel

So, as I see it, this lets you 'collect' samples and loop or one-shot play them, sequencing and re-recording as desired, is that correct...?

I wonder how hard it would be to extract 'notable' ambient sounds from a microphone that was essentially free-running whenever the device was on, and automate that sequencing process -- say, during a walk through a city, sounds like the 'chirps' of a crosswalk sounder or various construction equipment would be sensed as staccato noises and used effectively as percussive instruments; cars and trucks buzzing by would become the bass line; emergency sirens and car horns would turn into a saxophone- or trumpet-like section, maybe... you get the point. I'm picturing something using, e.g., a Pi Zero in a headless config, just pure audio processing -- microphone in, 'music' out.

But I'm neither programmer nor musician, so IDK.

Your thoughts?
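As a rough illustration of the first step in that idea, picking 'notable' sounds out of a free-running stream, one common starting point is energy-based onset detection: flag any frame whose energy jumps well above the frame before it. The sketch below is only that, a sketch under assumptions. The function names, frame size, and thresholds are hypothetical, and nothing here comes from the instrument itself.

```python
from typing import List


def frame_energy(samples: List[float], frame_size: int) -> List[float]:
    """Mean squared amplitude of each non-overlapping frame."""
    return [
        sum(s * s for s in samples[i:i + frame_size]) / frame_size
        for i in range(0, len(samples) - frame_size + 1, frame_size)
    ]


def find_onsets(samples: List[float], frame_size: int = 4,
                ratio: float = 4.0, floor: float = 1e-4) -> List[int]:
    """Sample offsets of frames whose energy jumps above the previous frame.

    `ratio` is how many times louder a frame must be than its predecessor;
    `floor` keeps near-silent frames from triggering on tiny fluctuations.
    """
    energies = frame_energy(samples, frame_size)
    onsets = []
    for i in range(1, len(energies)):
        previous = max(energies[i - 1], floor)
        if energies[i] > floor and energies[i] > ratio * previous:
            onsets.append(i * frame_size)
    return onsets


# Toy signal: background hum with two loud bursts.
quiet = [0.01, -0.01, 0.01, -0.01]
loud = [0.8, -0.8, 0.8, -0.8]
signal = quiet + quiet + loud + loud + quiet + loud
print(find_onsets(signal))  # [8, 20]
```

Sorting the detected sounds into 'percussion', 'bass', or 'horn' roles would need more than this, for example a look at duration and spectral content, but short, sharp energy jumps like these are a plausible proxy for the staccato sounds described above.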