-

Algorithm bits

06/03/2018 at 16:27 • 2 commentsIn this log I will discuss some algorithm ideas I have in mind for MARB. Some are easy, some require higher math.

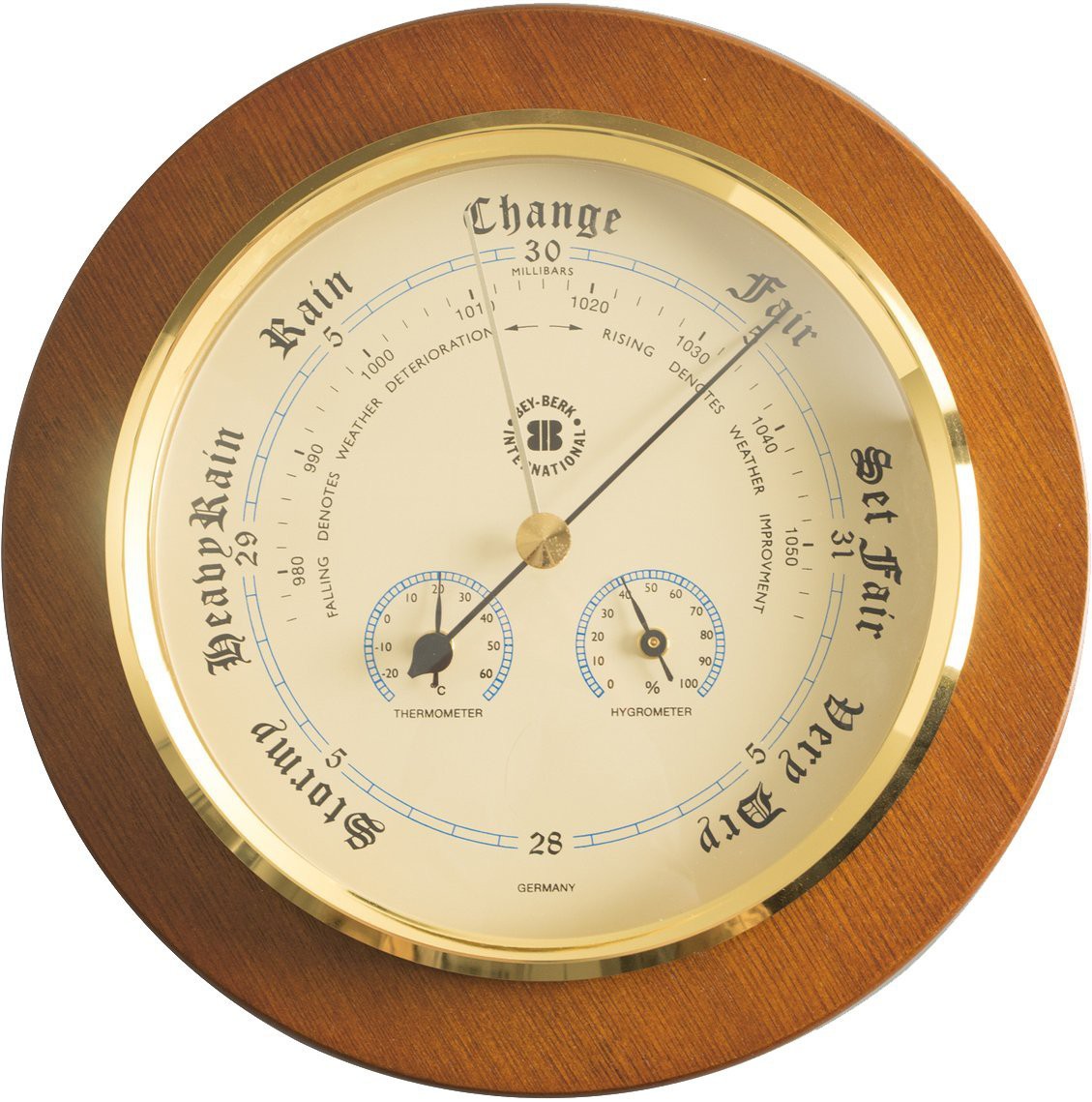

1. Weather forecast

MARB will not be connected to the internet. Call me old-fashioned, but it has nothing to do with AI to get for instance the weather forecast from some online services like weather.com. So how can MARB forecast the weather?

In the old time we used analog barometers like the following:

![]()

With a digital barometer like the BMP180 and a few if-statements we can predict the current weather. By storing the previous measured atmospheric pressure and compare it with the current one, we can also predict if the weather is improving or deteriorating. To forecast the weather of the next couple of days, we can use so called Markov chains. The probabilities of weather conditions (rainy or sunny), given the weather on the preceding day, are represented by the transition matrix:

The matrix P represents the weather model in which the probability that a sunny day followed by another sunny day is 0.9, and a rainy day followed by another rainy day is 0.5. Markov chains are usually memory-less. That means, the next state depends only on the current state and not on the sequence of events that preceded it. But we can change the initial state if we have other experiences to model the weather or something else. One more note: Columns must sum to 1 because it's a stochastic matrix.

The weather on the current day 0 is either sunny or rainy. This is represented by the following vectors (100% sunny weather and 0% rainy weather or 0% sunny weather and 100% rainy weather):

The general rule for day n is now:

or equally

This leaves us with the question how to compute these matrices multiplications for our example:

Let us assume the weather on day 0 is sunny, then weather on day 1 can be predicted by:

Hence, the probability that the day 1 will be sunny is 0.9.Applying the general rule again, weather on day 2 can be predicted by:

2. Intelligent obstacle avoidance

Today I spend the half day writing basic code for an obstacle avoidance algorithm, mainly a function to store all the distances of the ultrasonic sensors in an array and functions to represent all possible movements of the robot in the plain, taking PWM (maybe based on temperature, means entropy, or remaining battery power) and other things into account. Now I have to think about how MARB could avoid obstacles in an intelligent way. I have some ideas, let's see, how it goes...

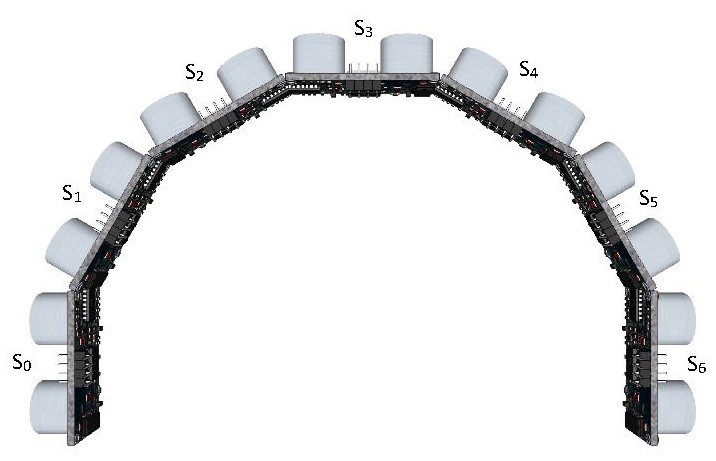

Let every sensor Si of the sensor array representing a bit of a 7-bit binary number S0, S1, S2, S3, S4, S5, S6. Let Si = 1 if the sensor detects an obstacle and else 0.

![]()

Now we can build a decision tree of an obstacle avoidance strategy and asign a movement behavior of the robot to every of the 128 possible permutations, binary 0000000 to 1111111 or decimal 0 to 127. Further reading of a similar idea: Obstacle avoidance design for a humanoid intelligent robot with ultrasonic sensors.First code snippet, which converts all obstacle occurrence possibilities into a decimal number between 0 and 127. I am sure, this could be coded much more elegant, but for now I want to focus on the functionality, on the beauty I will work later...

const int trigPin_0 = 22; // sensor 90 degrees left const int echoPin_0 = 23; const int trigPin_1 = 24; // sensor 30 degrees left const int echoPin_1 = 25; const int trigPin_2 = 26; // sensor 60 degrees left const int echoPin_2 = 27; const int trigPin_3 = 28; // sensor 0 degrees const int echoPin_3 = 29; const int trigPin_4 = 30; // sensor 30 degrees right const int echoPin_4 = 31; const int trigPin_5 = 32; // sensor 60 degrees right const int echoPin_5 = 33; const int trigPin_6 = 34; // sensor 90 degrees right const int echoPin_6 = 35; long duration; // used to calculate distance bool distances[7]; // stores the binary values in an array bool boolDis; // 1 if obstacle within min_distance of sensor, else 0 const int min_distance = 20; // minimum distance range threshold int obstacle_identifier; // stores an unique number between 0 and 127 void setup() { US_setup (); Serial.begin(9600); } void loop() { get_distances (); // print out array for(int i = 0; i < 7; i ++) { Serial.print(distances[i]); } Serial.println(); Serial.println(obstacle_identifier); Serial.println(); Serial.println(); delay(200); } // ********************* FUNCTIONS ********************* // ultrasonic sensor setup void US_setup () { pinMode(trigPin_0, OUTPUT); pinMode(echoPin_0, INPUT); pinMode(trigPin_1, OUTPUT); pinMode(echoPin_1, INPUT); pinMode(trigPin_2, OUTPUT); pinMode(echoPin_2, INPUT); pinMode(trigPin_3, OUTPUT); pinMode(echoPin_3, INPUT); pinMode(trigPin_4, OUTPUT); pinMode(echoPin_4, INPUT); pinMode(trigPin_5, OUTPUT); pinMode(echoPin_5, INPUT); pinMode(trigPin_6, OUTPUT); pinMode(echoPin_6, INPUT); } // boolean array to int converter int boolean_to_decimal(bool bol[]) { int sum = 0; for (int i = 0; i < 7; i++) { sum += bol[i] * (1 << (6 - i)); } return sum; } // get the 7 distances converted to binary values and stored in an array // convert the 7 bit binary number then into a decimal number void get_distances () { for (int i = 0; i < 7; i ++) { switch (i) { case 0: digitalWrite(trigPin_0, LOW); delayMicroseconds(2); digitalWrite(trigPin_0, HIGH); delayMicroseconds(10); digitalWrite(trigPin_0, LOW); duration = pulseIn(echoPin_0, HIGH); break; case 1: digitalWrite(trigPin_1, LOW); delayMicroseconds(2); digitalWrite(trigPin_1, HIGH); delayMicroseconds(10); digitalWrite(trigPin_1, LOW); duration = pulseIn(echoPin_1, HIGH); break; case 2: digitalWrite(trigPin_2, LOW); delayMicroseconds(2); digitalWrite(trigPin_2, HIGH); delayMicroseconds(10); digitalWrite(trigPin_2, LOW); duration = pulseIn(echoPin_2, HIGH); break; case 3: digitalWrite(trigPin_3, LOW); delayMicroseconds(2); digitalWrite(trigPin_3, HIGH); delayMicroseconds(10); digitalWrite(trigPin_3, LOW); duration = pulseIn(echoPin_3, HIGH); break; case 4: digitalWrite(trigPin_4, LOW); delayMicroseconds(2); digitalWrite(trigPin_4, HIGH); delayMicroseconds(10); digitalWrite(trigPin_4, LOW); duration = pulseIn(echoPin_4, HIGH); break; case 5: digitalWrite(trigPin_5, LOW); delayMicroseconds(2); digitalWrite(trigPin_5, HIGH); delayMicroseconds(10); digitalWrite(trigPin_5, LOW); duration = pulseIn(echoPin_5, HIGH); break; case 6: digitalWrite(trigPin_6, LOW); delayMicroseconds(2); digitalWrite(trigPin_6, HIGH); delayMicroseconds(10); digitalWrite(trigPin_6, LOW); duration = pulseIn(echoPin_6, HIGH); break; } if((duration * 0.017) >= min_distance) boolDis = 0; // no danger, obstacle is too far else boolDis = 1; // danger, obstacle is close distances[i] = boolDis; // store the binary value of each sensor into the bool array } obstacle_identifier = boolean_to_decimal(distances); }Today I had an interesting idea. The robot could count the number of turns in a certain time interval and try to improve it to a minimum by changing the min_distance value by itself to find a minimum. I need to work out an according mathematical function and differentiate it to see if this function has a local or global minimum.

Complete code for a first experiment below. So far I am quite satisfied with the results, see Videos! I still have the possibility to use the current measurements of each motor, compass as well as some advanced mathematical optimization theory.

const int trigPin_0 = 22; // sensor 90 degrees left const int echoPin_0 = 23; const int trigPin_1 = 24; // sensor 30 degrees left const int echoPin_1 = 25; const int trigPin_2 = 26; // sensor 60 degrees left const int echoPin_2 = 27; const int trigPin_3 = 28; // sensor 0 degrees const int echoPin_3 = 29; const int trigPin_4 = 30; // sensor 30 degrees right const int echoPin_4 = 31; const int trigPin_5 = 32; // sensor 60 degrees right const int echoPin_5 = 33; const int trigPin_6 = 34; // sensor 90 degrees right const int echoPin_6 = 35; long duration; // used to calculate distance bool distances[7]; // stores the binary values in an array bool boolDis; // 1 if obstacle within min_distance of sensor, else 0 const int min_distance = 20; // minimum distance range threshold int obstacle_identifier; // stores an unique number between 0 and 127 #include "DualVNH5019MotorShield.h" DualVNH5019MotorShield md; int turn_delay_left = 500; // turn left duration int turn_delay_right = 500; // turn right duration int move_delay_backward = 500; // turn right duration int motor_acc = 2; // motor acceleration int PWM_value = 300; void setup() { US_setup (); md.init(); randomSeed(analogRead(15)); Serial.begin(9600); } void loop() { get_distances (); if(obstacle_identifier == 0 || obstacle_identifier == 1 || obstacle_identifier == 65) { robot_forward (); } if(obstacle_identifier == 2) { robot_forward_stop (); robot_turn_left (); } if(obstacle_identifier == 32 || obstacle_identifier == 48 || obstacle_identifier == 64 || obstacle_identifier == 66) { robot_forward_stop (); robot_turn_right (); } if(obstacle_identifier == 3 || obstacle_identifier == 4 || obstacle_identifier == 5 || obstacle_identifier == 6 || obstacle_identifier == 7 || obstacle_identifier == 9 || obstacle_identifier == 10 || obstacle_identifier == 11 || obstacle_identifier == 12 || obstacle_identifier == 13 || obstacle_identifier == 14 || obstacle_identifier == 15 || obstacle_identifier == 17 || obstacle_identifier == 18 || obstacle_identifier == 19 || obstacle_identifier == 20 || obstacle_identifier == 21 || obstacle_identifier == 22 || obstacle_identifier == 23 || obstacle_identifier == 25 || obstacle_identifier == 26 || obstacle_identifier == 27 || obstacle_identifier == 29 || obstacle_identifier == 30 || obstacle_identifier == 31 || obstacle_identifier == 35 || obstacle_identifier == 36 || obstacle_identifier == 37 || obstacle_identifier == 38 || obstacle_identifier == 39 || obstacle_identifier == 42 || obstacle_identifier == 45 || obstacle_identifier == 46 || obstacle_identifier == 47 || obstacle_identifier == 51 || obstacle_identifier == 53 || obstacle_identifier == 55 || obstacle_identifier == 61 || obstacle_identifier == 63 || obstacle_identifier == 67 || obstacle_identifier == 69 || obstacle_identifier == 70 || obstacle_identifier == 71 || obstacle_identifier == 74|| obstacle_identifier == 75 || obstacle_identifier == 77 || obstacle_identifier == 78 || obstacle_identifier == 79 || obstacle_identifier == 83 || obstacle_identifier == 87 || obstacle_identifier == 91 || obstacle_identifier == 95 || obstacle_identifier == 103 || obstacle_identifier == 111) { robot_forward_stop (); robot_backward (); robot_turn_left (); } if(obstacle_identifier == 16 || obstacle_identifier == 24 || obstacle_identifier == 33 || obstacle_identifier == 40 || obstacle_identifier == 41 || obstacle_identifier == 42 || obstacle_identifier == 44 || obstacle_identifier == 49|| obstacle_identifier == 50 || obstacle_identifier == 52 || obstacle_identifier == 56 || obstacle_identifier == 57 || obstacle_identifier == 58 || obstacle_identifier == 59 || obstacle_identifier == 60 || obstacle_identifier == 68 || obstacle_identifier == 72 || obstacle_identifier == 76 || obstacle_identifier == 80 || obstacle_identifier == 81 || obstacle_identifier == 82 || obstacle_identifier == 84 || obstacle_identifier == 86 || obstacle_identifier == 88 || obstacle_identifier == 89 || obstacle_identifier == 90 || obstacle_identifier == 92 || obstacle_identifier == 94 || obstacle_identifier == 96 || obstacle_identifier == 97 || obstacle_identifier == 98 || obstacle_identifier == 100 || obstacle_identifier == 101 || obstacle_identifier == 102 || obstacle_identifier == 104 || obstacle_identifier == 105 || obstacle_identifier == 106 || obstacle_identifier == 108 || obstacle_identifier == 109 || obstacle_identifier == 110 || obstacle_identifier == 112 || obstacle_identifier == 113 || obstacle_identifier == 114 || obstacle_identifier == 115 || obstacle_identifier == 116 || obstacle_identifier == 117 || obstacle_identifier == 118 || obstacle_identifier == 120 || obstacle_identifier == 121 || obstacle_identifier == 122 || obstacle_identifier == 123 || obstacle_identifier == 124 || obstacle_identifier == 125 || obstacle_identifier == 126) { robot_forward_stop (); robot_backward (); robot_turn_right (); } if(obstacle_identifier == 8 || obstacle_identifier == 28 || obstacle_identifier == 34 || obstacle_identifier == 54 || obstacle_identifier == 62 || obstacle_identifier == 73 || obstacle_identifier == 85 || obstacle_identifier == 93 || obstacle_identifier == 99 || obstacle_identifier == 107 || obstacle_identifier == 119|| obstacle_identifier == 127) { robot_forward_stop (); robot_backward (); robot_turn_randomly (); } } // ********************* FUNCTIONS ********************* // ultrasonic sensor setup void US_setup () { pinMode(trigPin_0, OUTPUT); pinMode(echoPin_0, INPUT); pinMode(trigPin_1, OUTPUT); pinMode(echoPin_1, INPUT); pinMode(trigPin_2, OUTPUT); pinMode(echoPin_2, INPUT); pinMode(trigPin_3, OUTPUT); pinMode(echoPin_3, INPUT); pinMode(trigPin_4, OUTPUT); pinMode(echoPin_4, INPUT); pinMode(trigPin_5, OUTPUT); pinMode(echoPin_5, INPUT); pinMode(trigPin_6, OUTPUT); pinMode(echoPin_6, INPUT); } // boolean array to int converter int boolean_to_decimal(bool bol[]) { int sum = 0; for (int i = 0; i < 7; i++) { sum += bol[i] * (1 << (6 - i)); } return sum; } // get the 7 distances converted to binary values and stored in an array // convert the 7 bit binary number then into a decimal number void get_distances () { for (int i = 0; i < 7; i ++) { switch (i) { case 0: digitalWrite(trigPin_0, LOW); delayMicroseconds(2); digitalWrite(trigPin_0, HIGH); delayMicroseconds(10); digitalWrite(trigPin_0, LOW); duration = pulseIn(echoPin_0, HIGH); break; case 1: digitalWrite(trigPin_1, LOW); delayMicroseconds(2); digitalWrite(trigPin_1, HIGH); delayMicroseconds(10); digitalWrite(trigPin_1, LOW); duration = pulseIn(echoPin_1, HIGH); break; case 2: digitalWrite(trigPin_2, LOW); delayMicroseconds(2); digitalWrite(trigPin_2, HIGH); delayMicroseconds(10); digitalWrite(trigPin_2, LOW); duration = pulseIn(echoPin_2, HIGH); break; case 3: digitalWrite(trigPin_3, LOW); delayMicroseconds(2); digitalWrite(trigPin_3, HIGH); delayMicroseconds(10); digitalWrite(trigPin_3, LOW); duration = pulseIn(echoPin_3, HIGH); break; case 4: digitalWrite(trigPin_4, LOW); delayMicroseconds(2); digitalWrite(trigPin_4, HIGH); delayMicroseconds(10); digitalWrite(trigPin_4, LOW); duration = pulseIn(echoPin_4, HIGH); break; case 5: digitalWrite(trigPin_5, LOW); delayMicroseconds(2); digitalWrite(trigPin_5, HIGH); delayMicroseconds(10); digitalWrite(trigPin_5, LOW); duration = pulseIn(echoPin_5, HIGH); break; case 6: digitalWrite(trigPin_6, LOW); delayMicroseconds(2); digitalWrite(trigPin_6, HIGH); delayMicroseconds(10); digitalWrite(trigPin_6, LOW); duration = pulseIn(echoPin_6, HIGH); break; } if((duration * 0.017) >= min_distance) boolDis = 0; // no danger, obstacle is too far else boolDis = 1; // danger, obstacle is close distances[i] = boolDis; // store the binary value of each sensor into the bool array } obstacle_identifier = boolean_to_decimal(distances); } // motor control void robot_turn_left () { int i = 0; for(i = 0; i < PWM_value + 1; i ++) { md.setM1Speed(i); // right motor forward md.setM2Speed(i); // left motor backward delay(motor_acc); } delay(turn_delay_left); for(i = PWM_value; i < 1; i --) { md.setM1Speed(i); // right motor forward md.setM2Speed(i); // left motor backward delay(motor_acc); } } void robot_turn_right () { int i = 0; for(i = 0; i > -PWM_value - 1; i --) { md.setM1Speed(i); // right motor backward md.setM2Speed(i); // left motor forward delay(motor_acc); } delay(turn_delay_right); for(i = -PWM_value; i > -1; i ++) { md.setM1Speed(i); // right motor backward md.setM2Speed(i); // left motor forward delay(motor_acc); } } void robot_turn_randomly () { int randNumber = random(499); if(randNumber < 250) robot_turn_right (); else robot_turn_left (); } void robot_forward () { md.setM1Speed(PWM_value); // right motor forward md.setM2Speed(-PWM_value); // left motor forward } void robot_backward_stop () { for(int i = PWM_value; i > -1; i --) { md.setM1Speed(-i); // right motor backward md.setM2Speed(i); // left motor backward delay(motor_acc); } } void robot_backward () { for(int i = 0; i < PWM_value + 1; i ++) { md.setM1Speed(-i); // right motor backward md.setM2Speed(i); // left motor backward delay(motor_acc); } delay(move_delay_backward); robot_backward_stop (); } void robot_forward_stop () { for(int i = PWM_value; i > -1; i --) { md.setM1Speed(i); // right motor forward md.setM2Speed(-i); // left motor forward delay(motor_acc); } }3. Color sensing

The k-NN (k-nearest neighbors) algorithm is among the simplest of all machine learning algorithms. We will use this algorithm to classify the color of a new object. As our training set of learned colors will only contain one sample per color, k = 1, and the object is simply assigned to the class of that single nearest neighbor.

We will work in a n-dimensional Euclidean space. In general, for an n-dimensional Euclidean space the Euclidean distance between two points

and

is given by

I remember when my daughter was a toddler, it took her nearly one year until she could classify all basic colors. This is no easy task even for a human brain.

4 years back I developed an algorithm to classify colors based on the color sensor TCS3200. I adapted this code for the APDS-9960 RGB and gesture sensor. It still works quite well even the APDS-9960 has no collimator lens and LED's to illuminate the object and rules out the ambient light.

#include <SunFounder_Emo.h> #include <emo_maps.h> // saved emotions #include <Wire.h> #include <SparkFun_APDS9960.h> // global Variables SparkFun_APDS9960 apds = SparkFun_APDS9960(); uint8_t proximity_data = 0; uint16_t ambient_light = 0; uint16_t red_light = 0; uint16_t green_light = 0; uint16_t blue_light = 0; int PercentageRed, PercentageGreen, PercentageBlue; // learned colors // array = [row][column] int learned_colors[3][6] = { {44, 46, 48, 80, 36, 34}, // R {41, 41, 43, 30, 49, 44}, // G {39, 44, 41, 33, 57, 57} // B }; char* color[] = {"white", "orange", "yellow", "red", "green", "blue"}; Emo my_emo(10); // CS pin void setup() { my_emo.show_emo(look5); // just show a face during color sensing Serial.begin(9600); apds.init(); // adjust the proximity sensor gain apds.setProximityGain(PGAIN_2X); // start running the APDS-9960 proximity sensor (no interrupts) apds.enableProximitySensor(false); // start running the APDS-9960 light sensor (no interrupts) apds.enableLightSensor(false); delay(500); } void loop() { // read the proximity value and print apds.readProximity(proximity_data); if(proximity_data > 244) { // get values from sensor apds.readAmbientLight(ambient_light); apds.readRedLight(red_light); apds.readGreenLight(green_light); apds.readBlueLight(blue_light); PercentageRed = int((float(red_light) / float(ambient_light)) * 100.0); PercentageGreen = int((float(green_light) / float(ambient_light)) * 100.0); PercentageBlue = int((float(blue_light) / float(ambient_light)) * 100.0); Serial.print("Red: "); Serial.print(PercentageRed); Serial.print(" Green: "); Serial.print(PercentageGreen); Serial.print(" Blue: "); Serial.println(PercentageBlue); classify_color(); } delay(500); } void classify_color() { int i_color; int ClosestColor; float MaxDiff; float MinDiff = 100.0; // find nearest neighbor, k = 1 for (i_color = 0; i_color < 6; i_color ++) { // compute Euclidean distances float ED = sqrt(pow((learned_colors[0][i_color] - PercentageRed),2.0) + pow((learned_colors[1][i_color] - PercentageGreen),2.0) + pow((learned_colors[2][i_color] - PercentageBlue),2.0)); MaxDiff = ED; // find minimum distance if (MaxDiff < MinDiff) { MinDiff = MaxDiff; ClosestColor = i_color; } } Serial.print("Object is "); // print result Serial.println(color[ClosestColor]); Serial.println(); } -

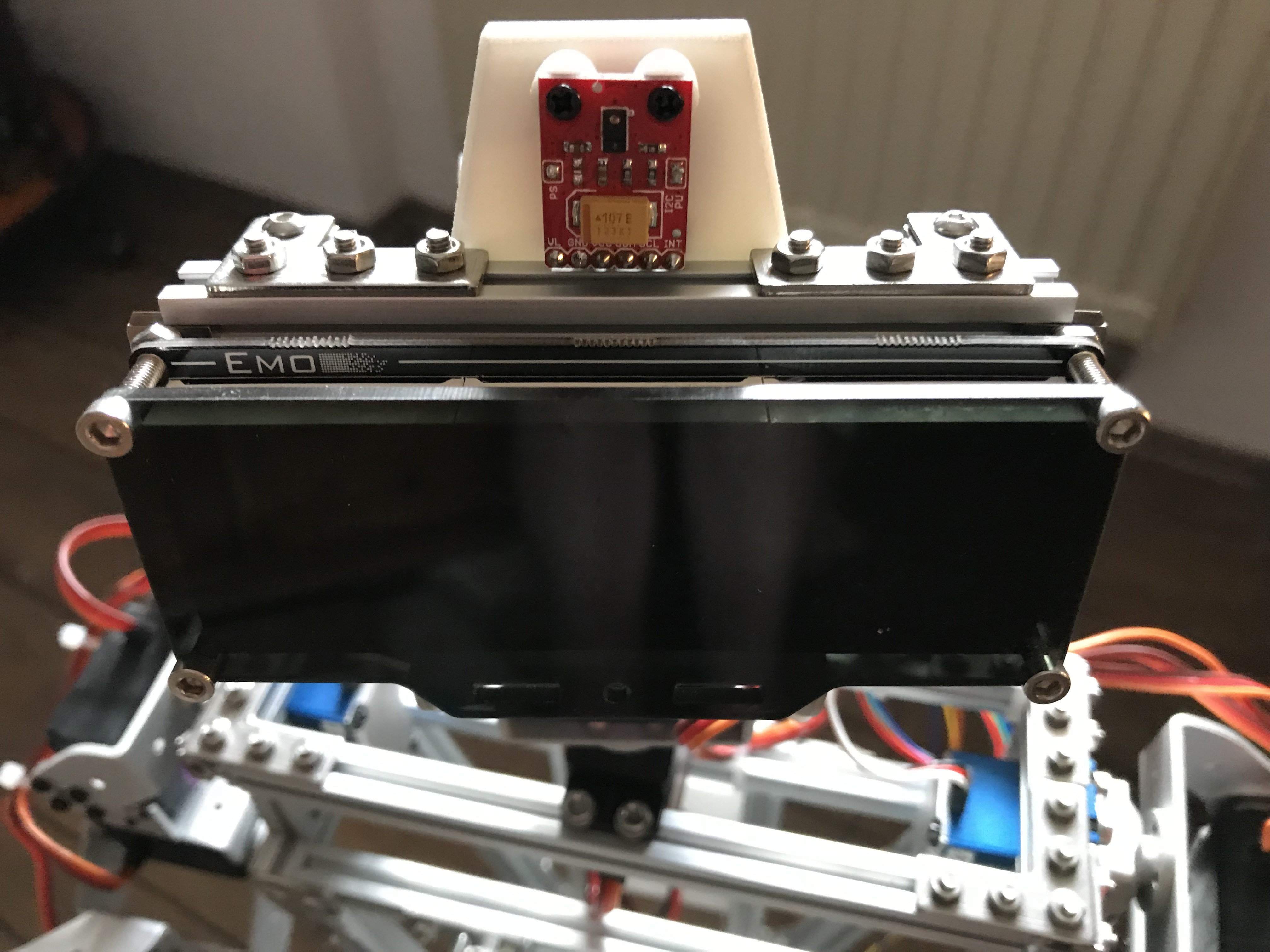

Vision module

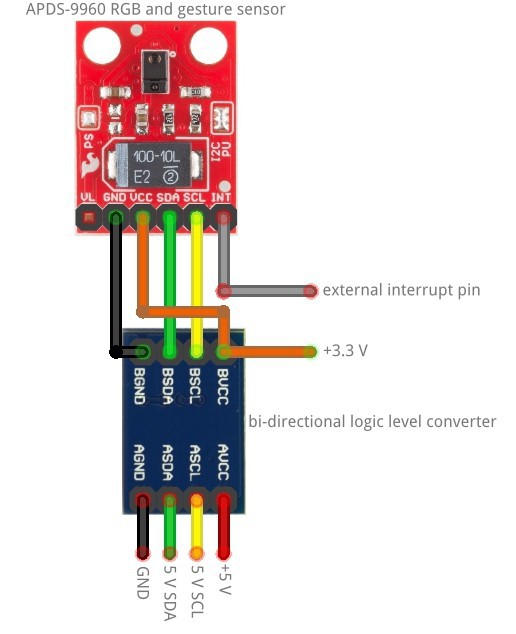

06/03/2018 at 12:42 • 0 commentsAn APDS-9960 RGB and gesture sensor acts as a low level machine vision to recognize gestures, colors of objects and distances. The Samsung Galaxy S5 actually uses the same sensor. A bi-directional logic level converter is needed for 5 V micro-controllers, because the APDS-9960 works only with a VCC of 2.4 - 3.6 V.

Wiring diagram:

![]()

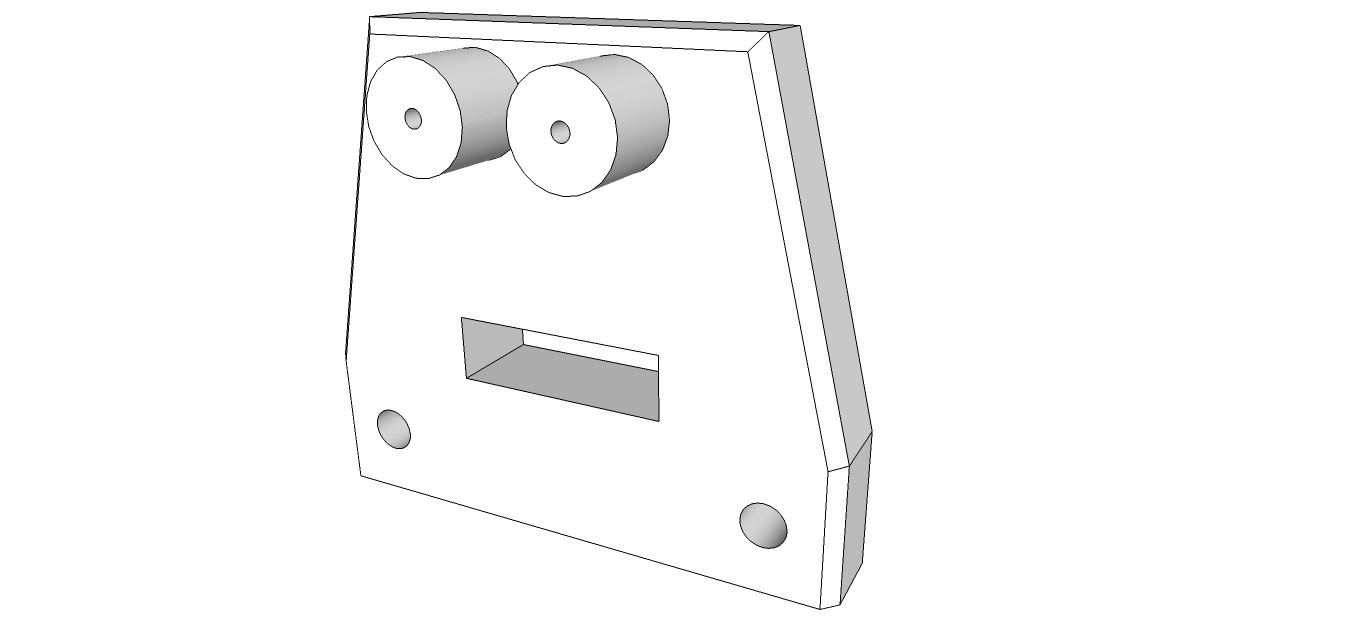

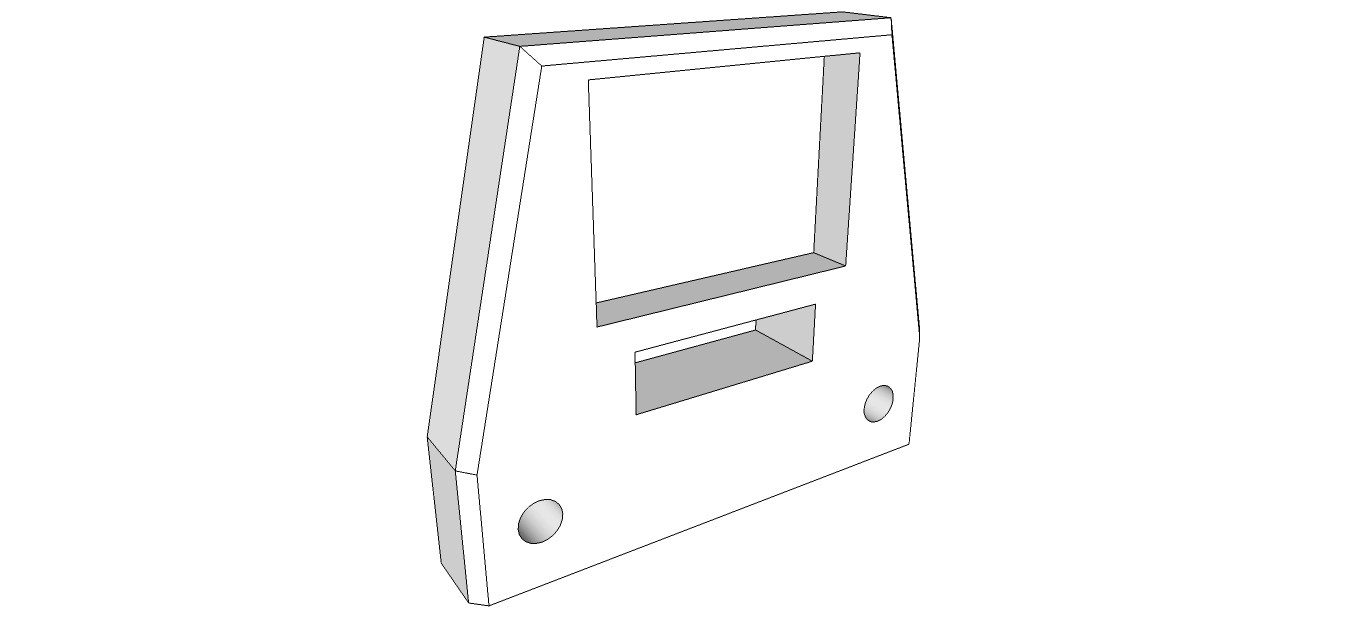

Mounting bracket design:

![]()

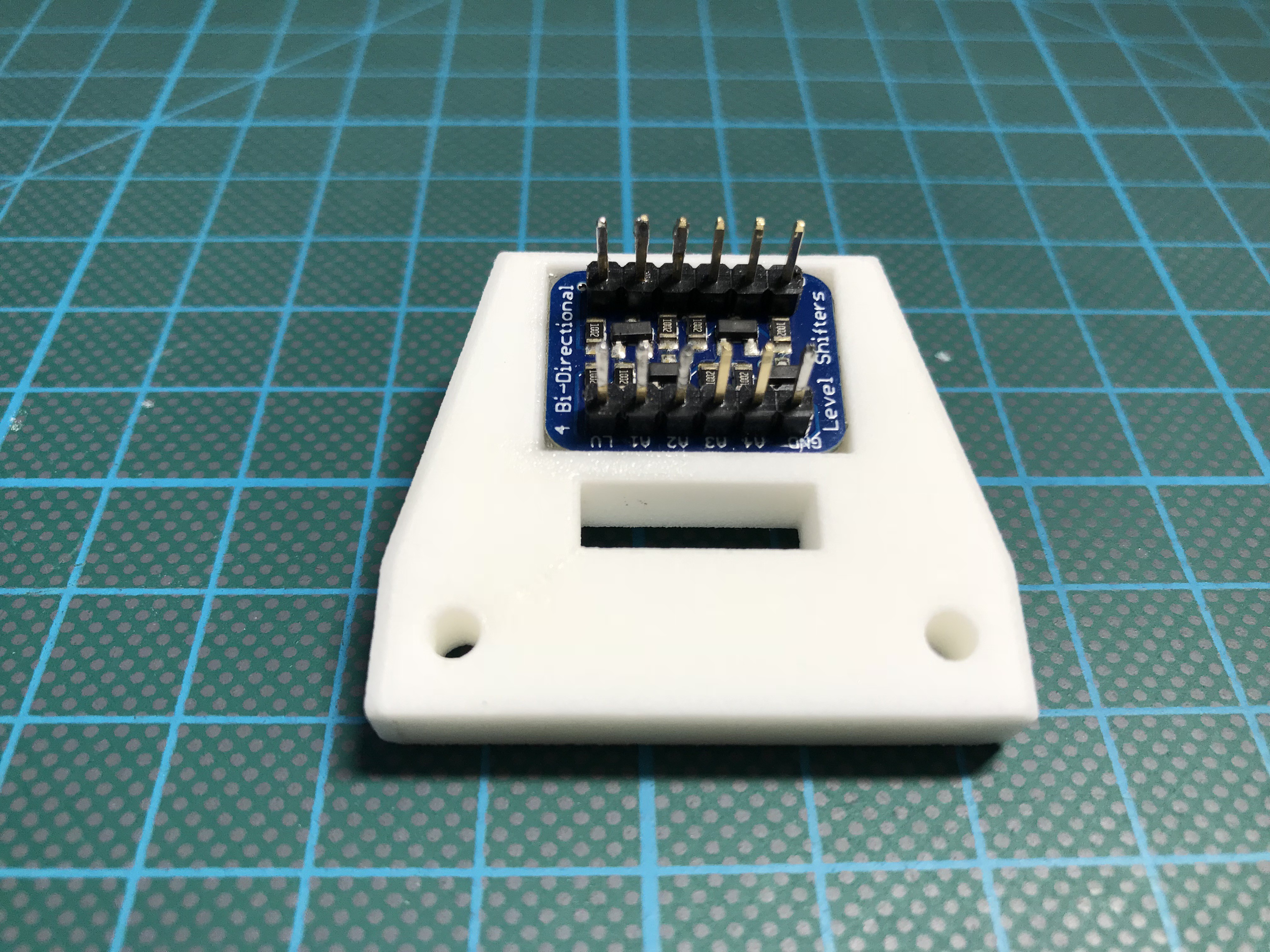

I am using this bi-directional level shifter from Adafruit. Those breakouts have usually no mounting holes, so I added a cavity on the back of the mounting bracket, where the level shifter will be glued in place.

![]() A lot of work went today into the vision module. I glued the level shifter into place with 2-component epoxy adhesive, wired the level shifter and the APDS-9960 breakout and assembled the module on MARB's head.

A lot of work went today into the vision module. I glued the level shifter into place with 2-component epoxy adhesive, wired the level shifter and the APDS-9960 breakout and assembled the module on MARB's head.I also tested two APDS-9960 libraries, one from Sparkfun and one from Adafruit. The Sparkfun library is much better regarding the gesture control. It senses every movement correctly at a distance up to 20 cm while the Adafruit fails very often even at much lower distances.

I will also try to detect colors of close objects, using the k-nearest neighbors algorithm in an n-dimensional Euclidean space. More on that soon in the log Algorithm bits.

-

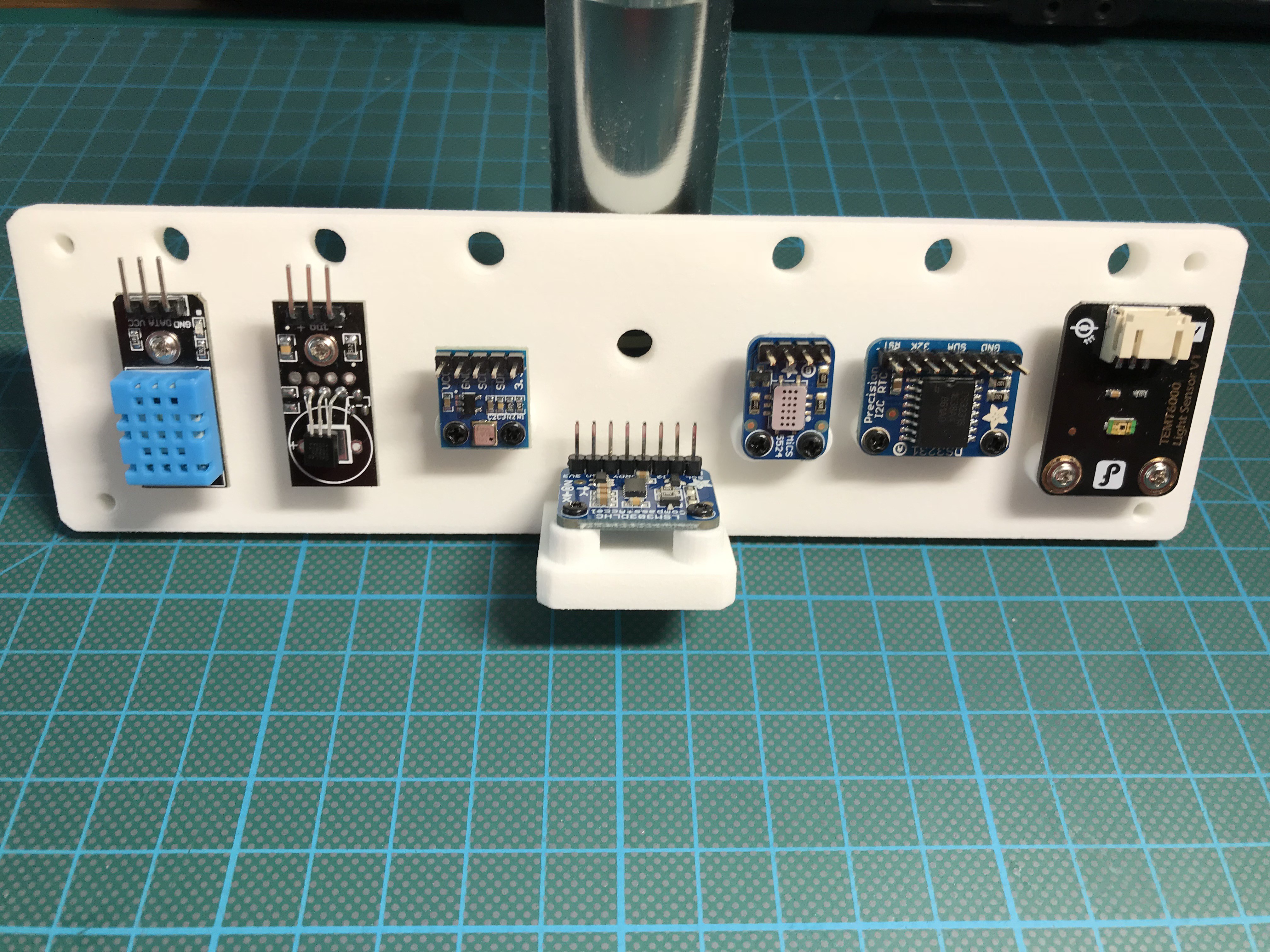

Sensor module

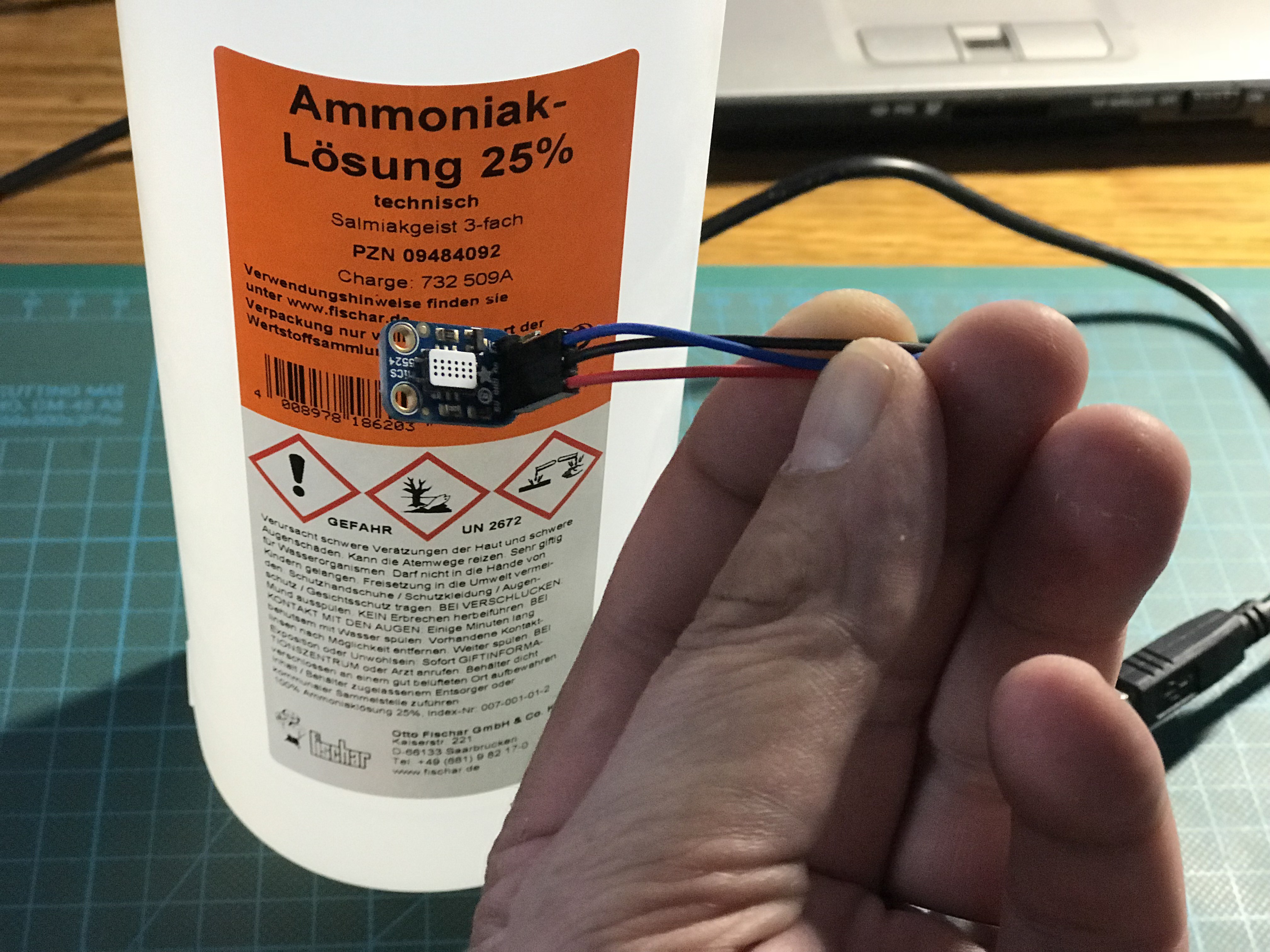

05/25/2018 at 14:31 • 0 commentsMARB will be equipped with a bunch of sensors to sense time, humidity, temperature, barometric pressure, ambient light, gas concentration, orientation and acceleration. So far I have tested a DHT11 based hygrometer, a TEMT6000 ambient light sensor and a MiCS-5524 based gas sensor.

The gas sensor can easily detect an open bottle of ammonia solution.

Testing a DS1302 RTC breakout from Sunfounder:

After tinkering a while with many different sensors, I decided to use for now following sensors:

- DHT11 breakout humidity sensor 20-80% (5% accuracy)

- BMP180 breakout barometric pressure sensor (300-1100 hPa, 0.03 hPa resolution)

- Dallas 18B20 breakout temperature sensor (-55 to +125 °C)

- TEMT6000 breakout light sensor (illumination Range: 1 – 1000 Lux, spectral response closely emulates human eye)

- DS3231 precision RTC breakout

- MiCS5524 CO, alcohol and VOC gas sensor breakout

- LSM303DLHC compass and accelerometer breakout

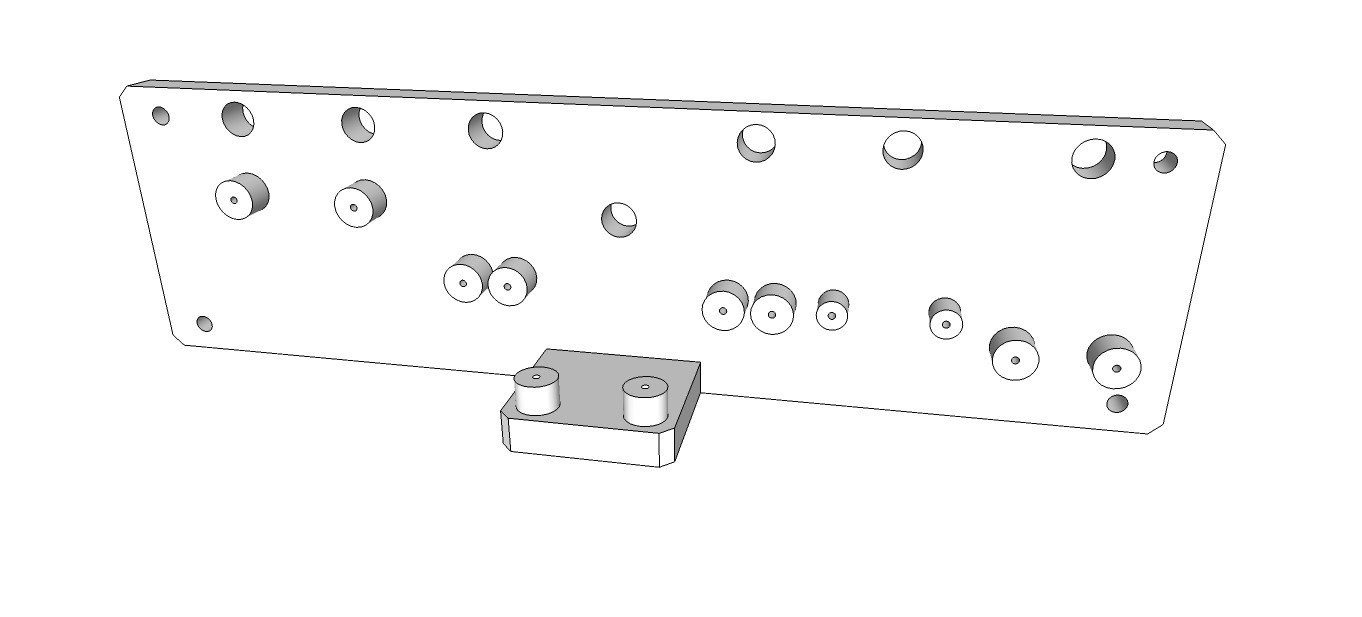

Resulting sensor module design:

![]()

3-D printed part in the mail:Sensors assembled:

-

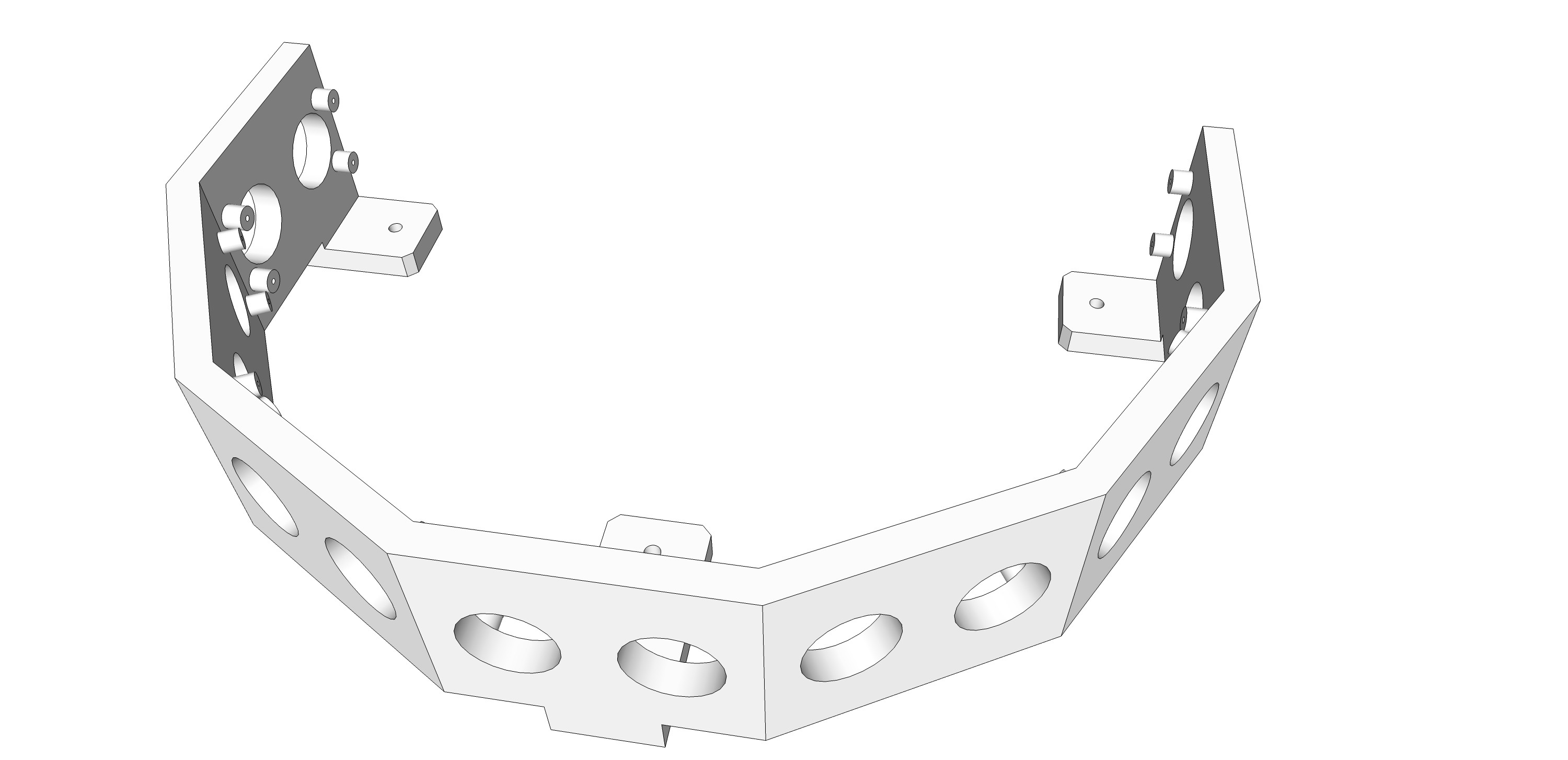

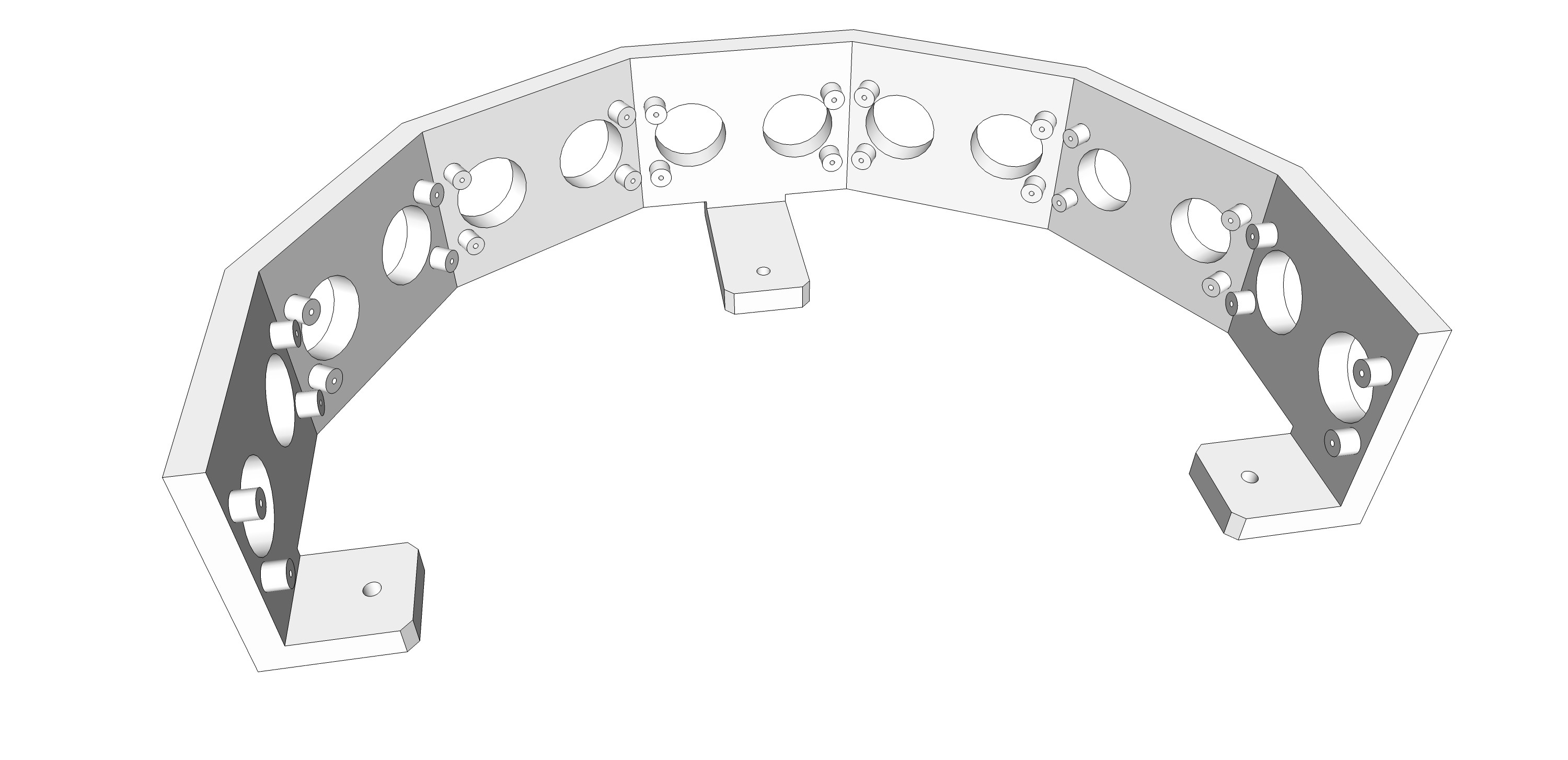

Ultrasonic sensor module

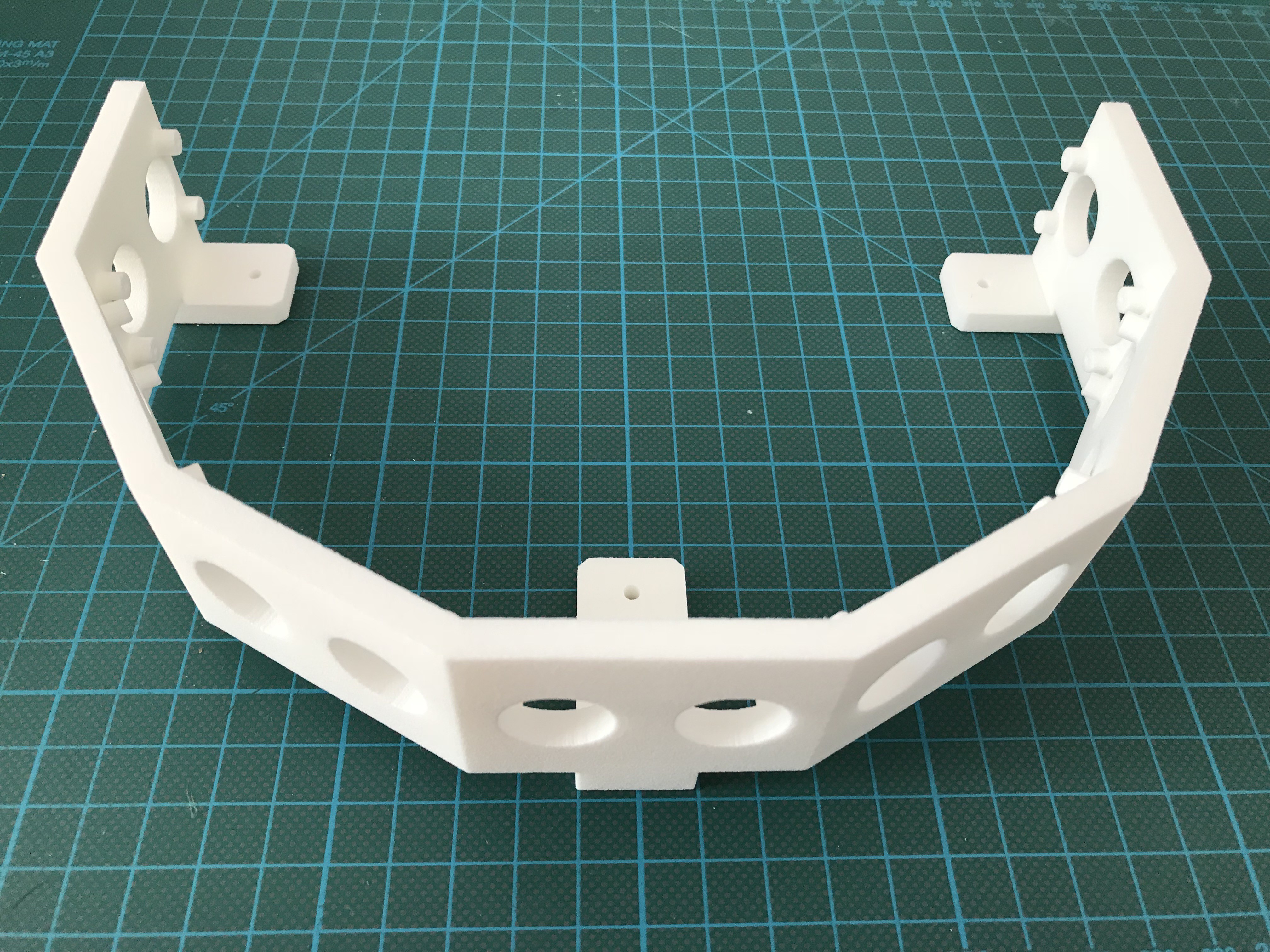

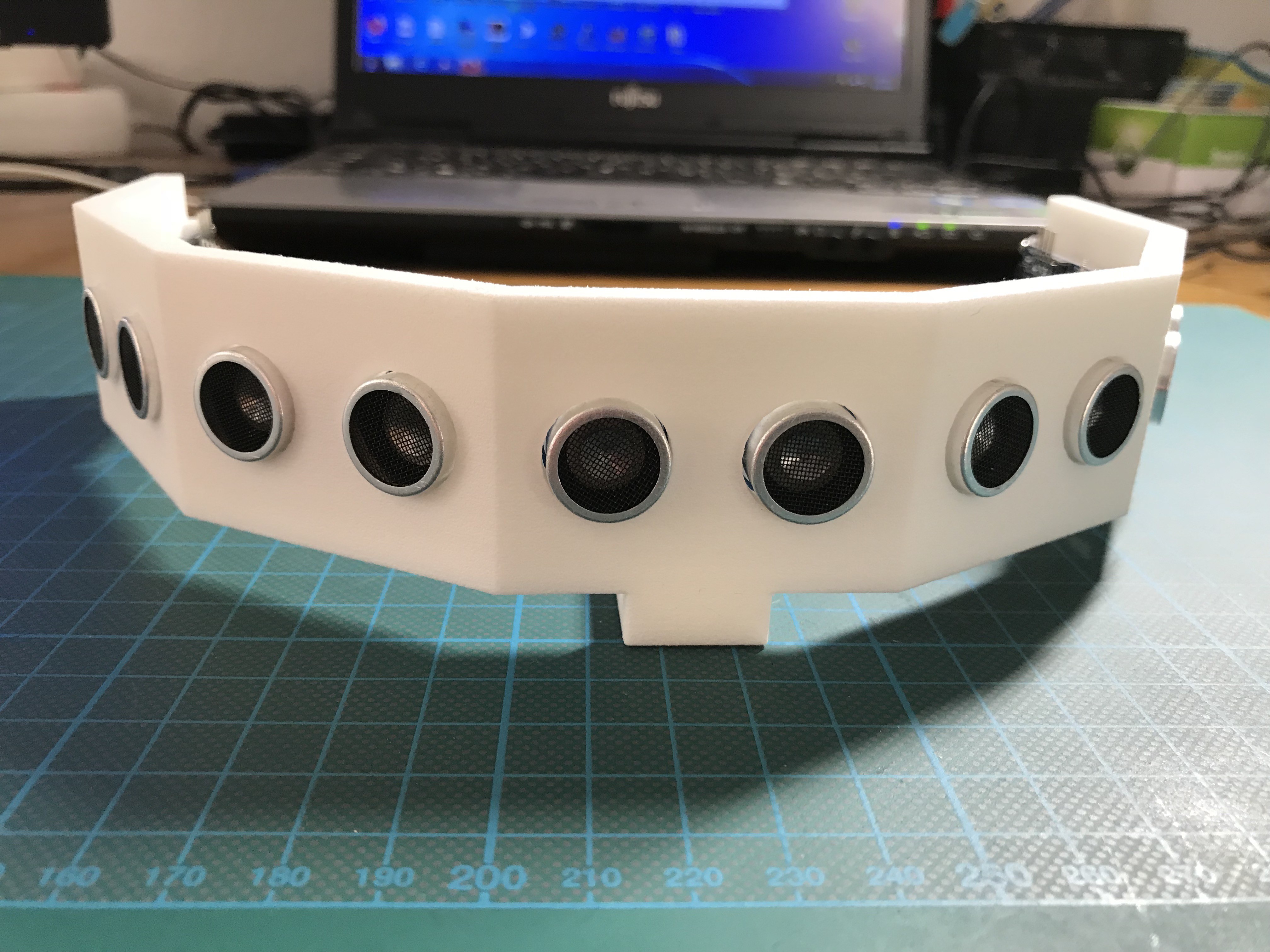

05/24/2018 at 09:57 • 0 commentsThe ultrasonic module consists of 7 HC-SR04 distance sensors and a 3-D printed enclosure. The module will be mounted on the front of the robot base, a second one can be mounted on the backside if necessary. So far I finished the 3-D drawing of the enclosure.

![]()

![]() 3-D printed part from Shapeways:

3-D printed part from Shapeways:7 HC-SR04 mounted:

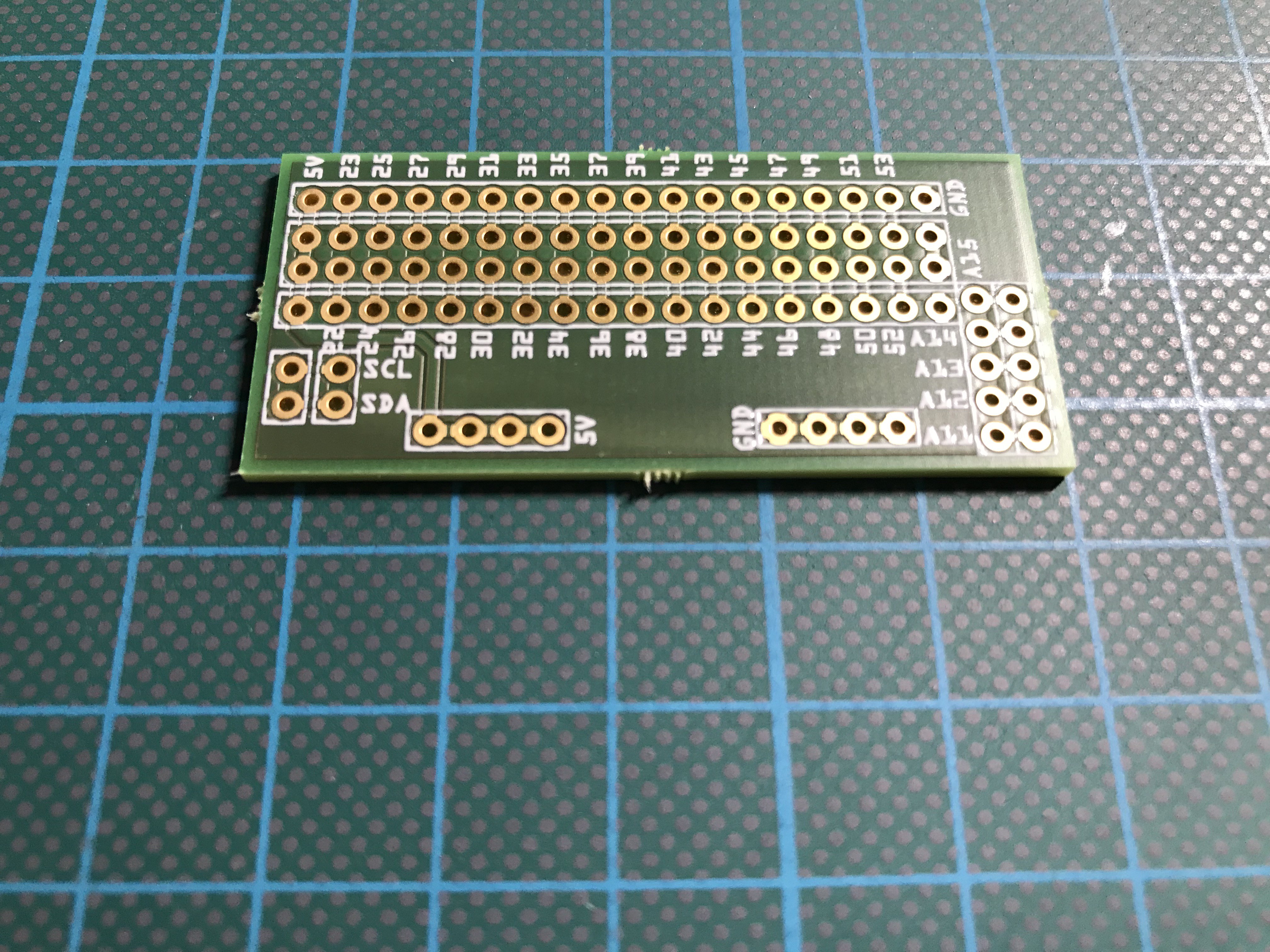

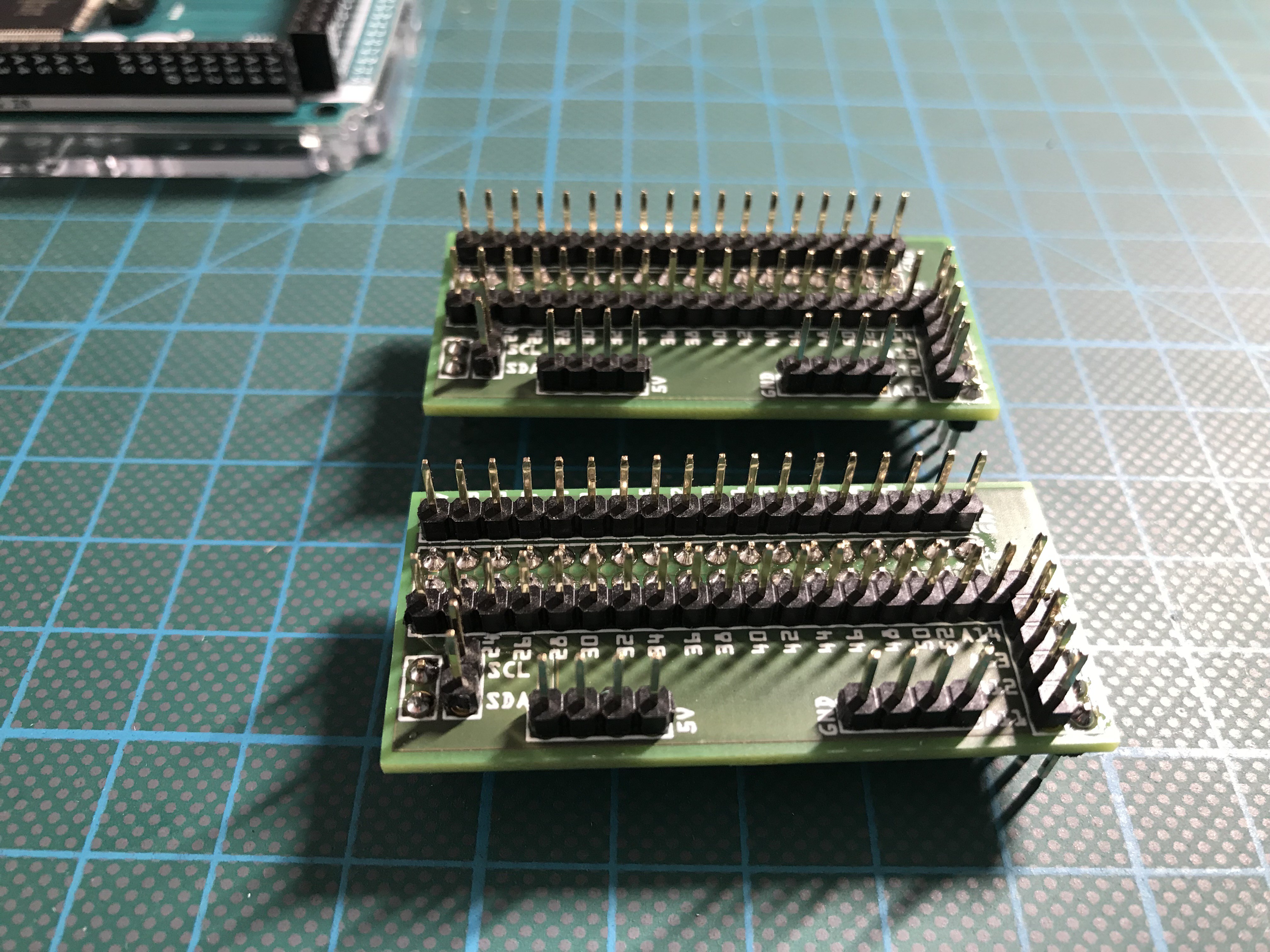

I also designed an extension shield for the two Arduino MEGA's to have better access to the remaining I/O and analog pins:

Populated extension shields for the two Arduino MEGA's, MARB has on board:

Wired and tested ultrasonic sensor module:

-

Videos!

05/21/2018 at 18:17 • 0 commentsAll videos of MARB will be posted here!

First video shows nothing exciting. Just very basic arm movements, emojis, TFT SD card and bitmap test:

Again nothing fancy, testing MARB's motor driver. Beside of wiring the ultrasonic sensors I need to connect the compass breakout to do some advanced obstacle avoidance. Stay tuned.MARB is doing a first experiment of decision tree based obstacle avoidance:

MARB performing rudimentary vision and Corneal reflex:

MARB performing color sensing and speech. Beside of this I tested I²C communication between two Arduinos.

-

Power supply

05/11/2018 at 20:36 • 0 commentsTime to take in some considerations about the power supply of MARB. I am using a 9.6 V, 5000 mAh NiMH racing pack battery. Obsolete, you will think. Why not using a Li-Ion or Li-Po battery? I can tell you. NiMH batteries are much easier to handle than Li-Ion or Li-Po batteries. They forgive mistakes Li-Ion or Li-Po batteries won't. They may have less energy capacity and a memory effect, but they normally don't burn or explode when treated the wrong way.

Battery mounted on the robot:

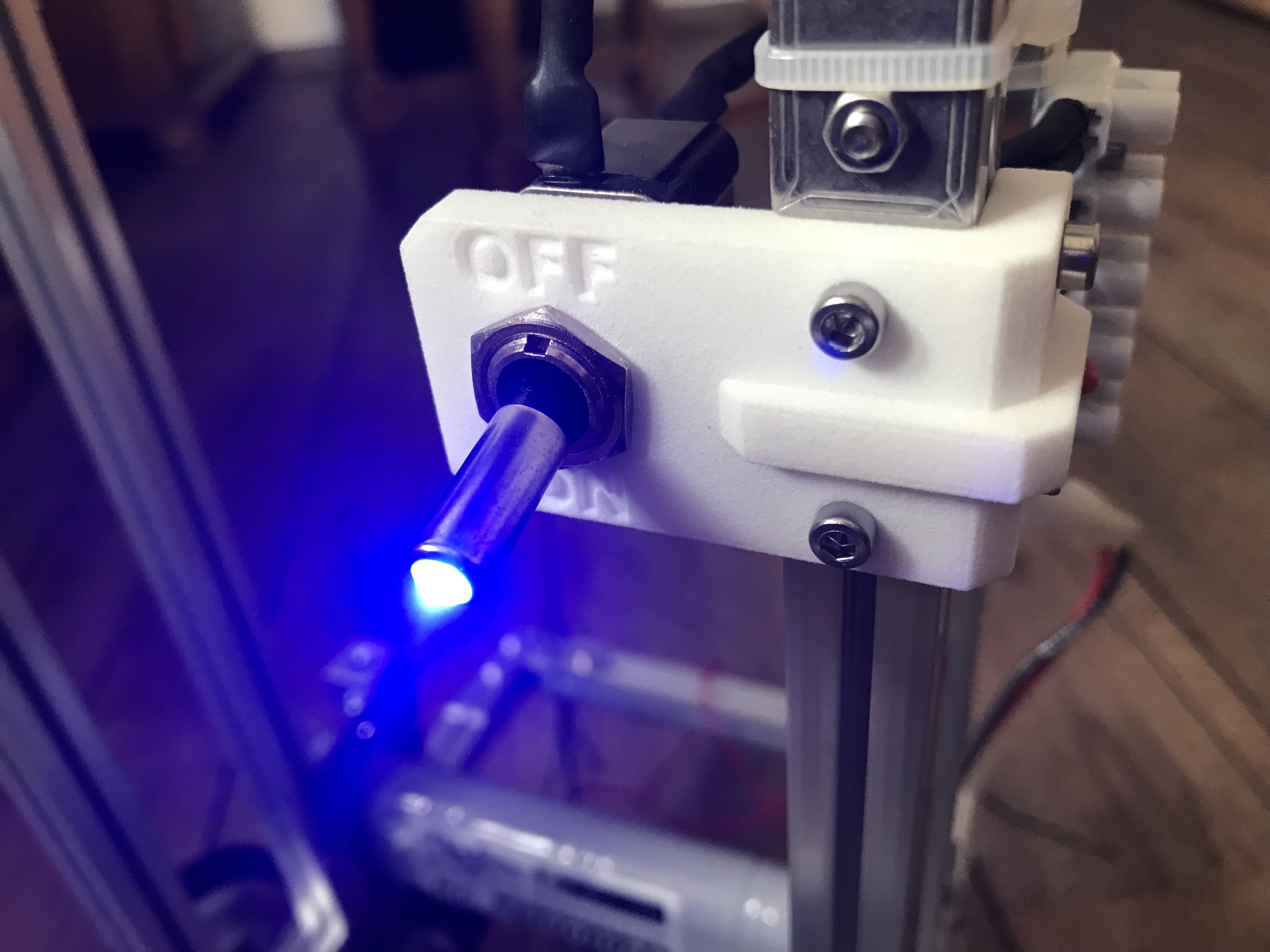

3-D printed bracket for the ON/OFF switch:

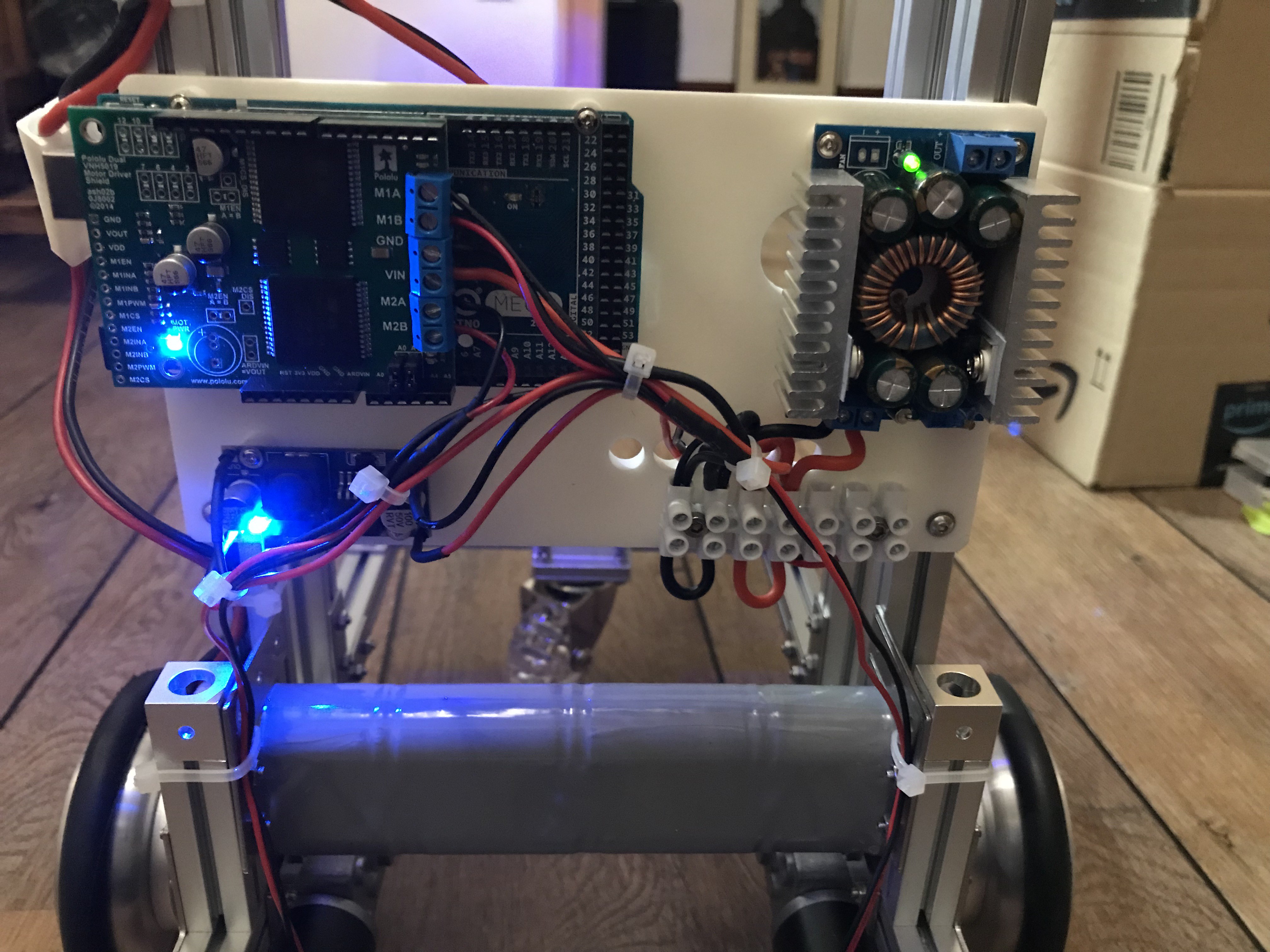

Another 3-D printed part for the power distribution and motor control module:Assembled and partly wired module. The LM 2596 based voltage regulator powers the micro controllers, the other step-down DC-DC converter the servos.

-

Human Machine Interface

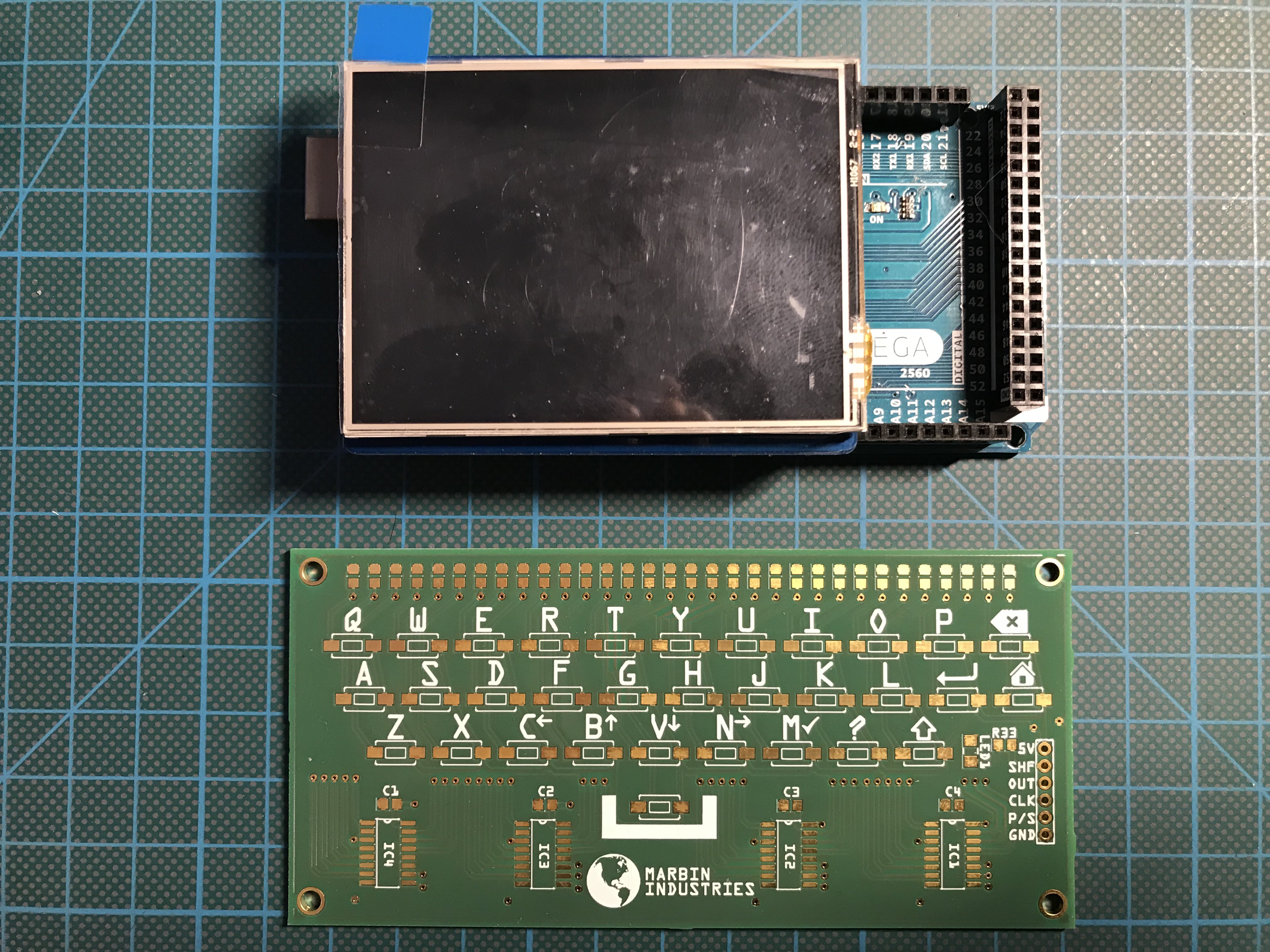

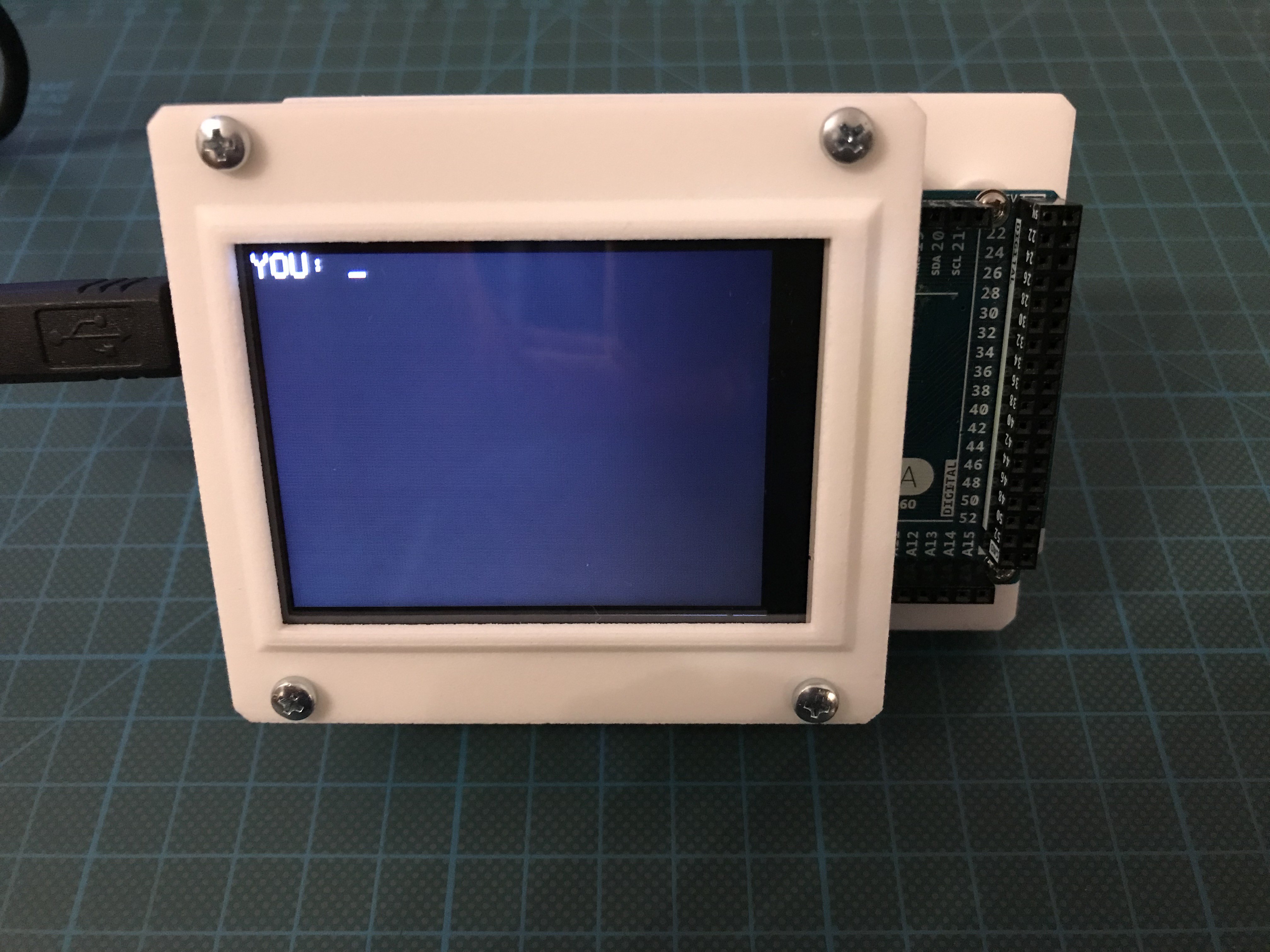

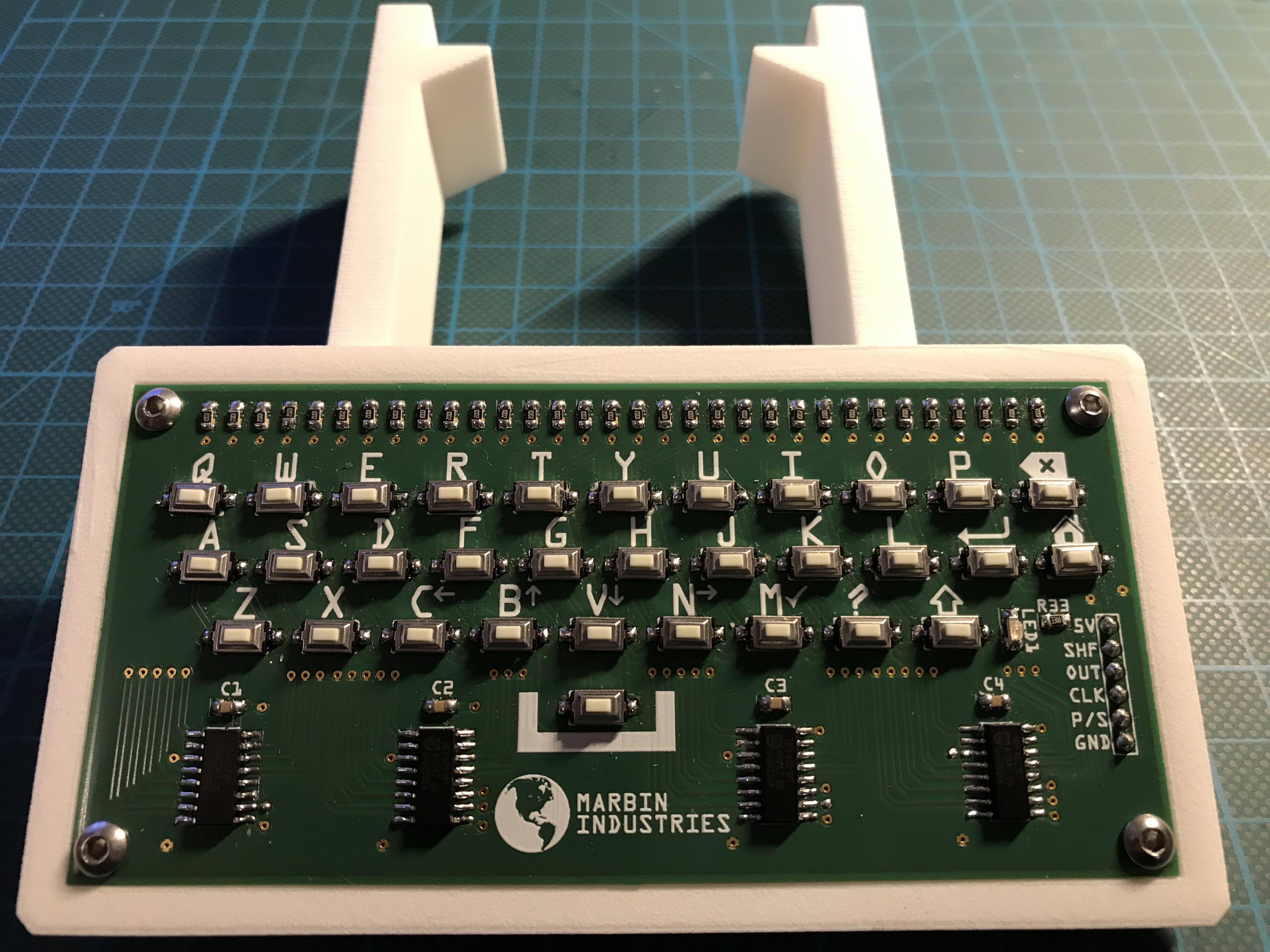

05/03/2018 at 20:40 • 0 commentsMain human machine interface will be a DFrobot 2.8" TFT touch shield on an Arduino MEGA (which works fine on Arduino UNO and MEGA by setting the 3 tiny SMD switches on the backside of the PCB and using the SPI, Adafruit GFX and Adafruit ILI9341 libraries) and a custom designed shift registers based keyboard PCB, which I still need to populate and test:

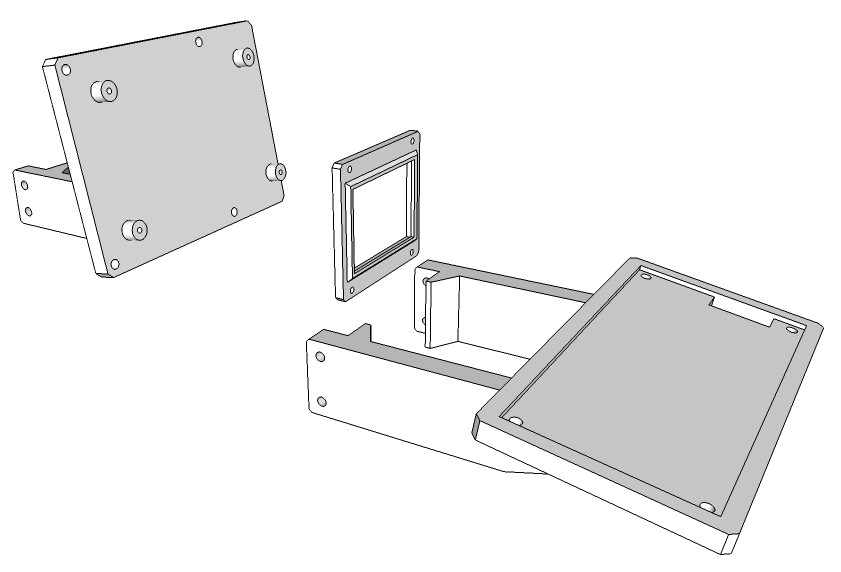

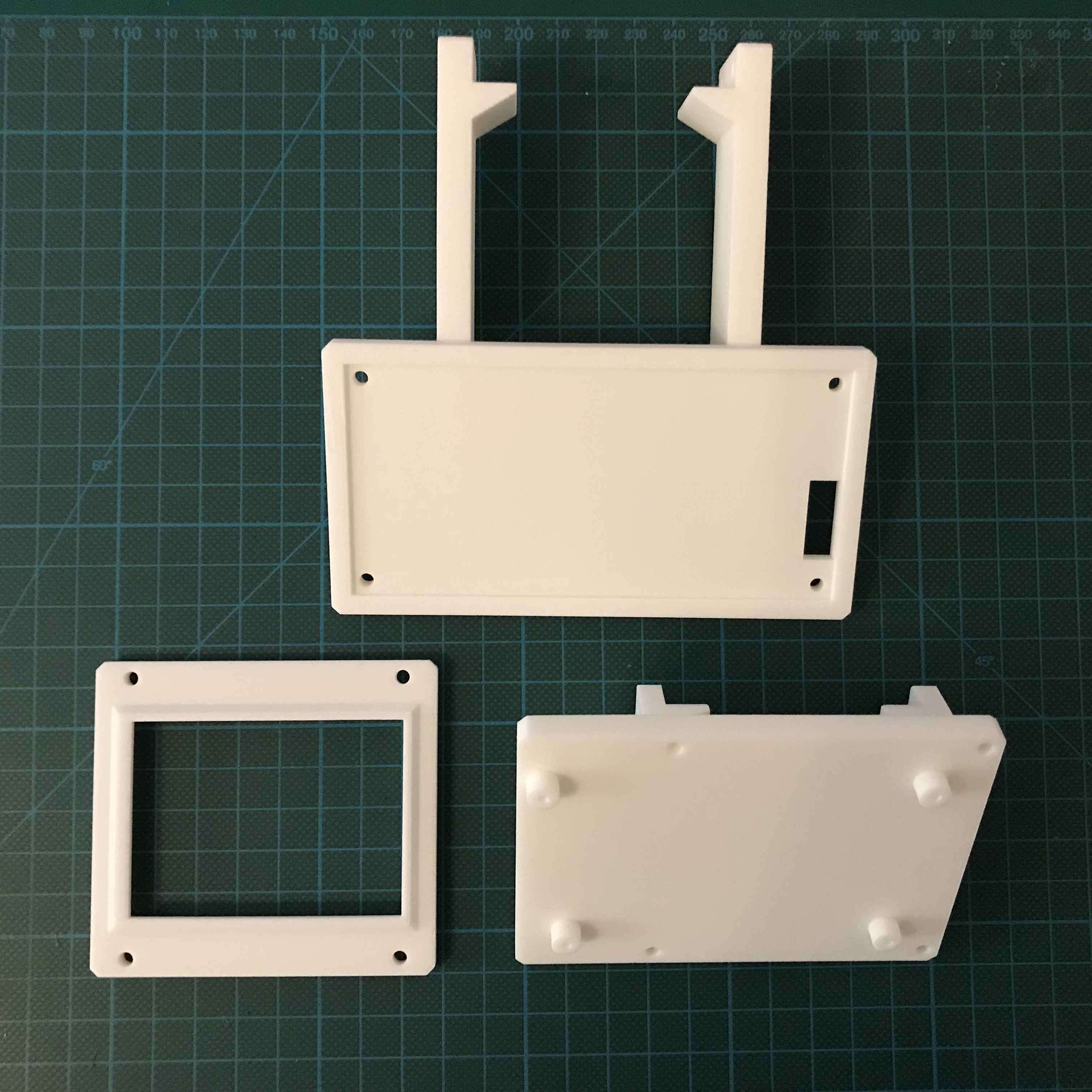

3 parts to 3-D print: the TFT and Arduino MEGA bracket, the keyboard bracket and the TFT cover.

![]()

Finally I populated the keyboard PCB and tested it:

Printed parts:

Touch screen module completed:

Keyboard module completed:

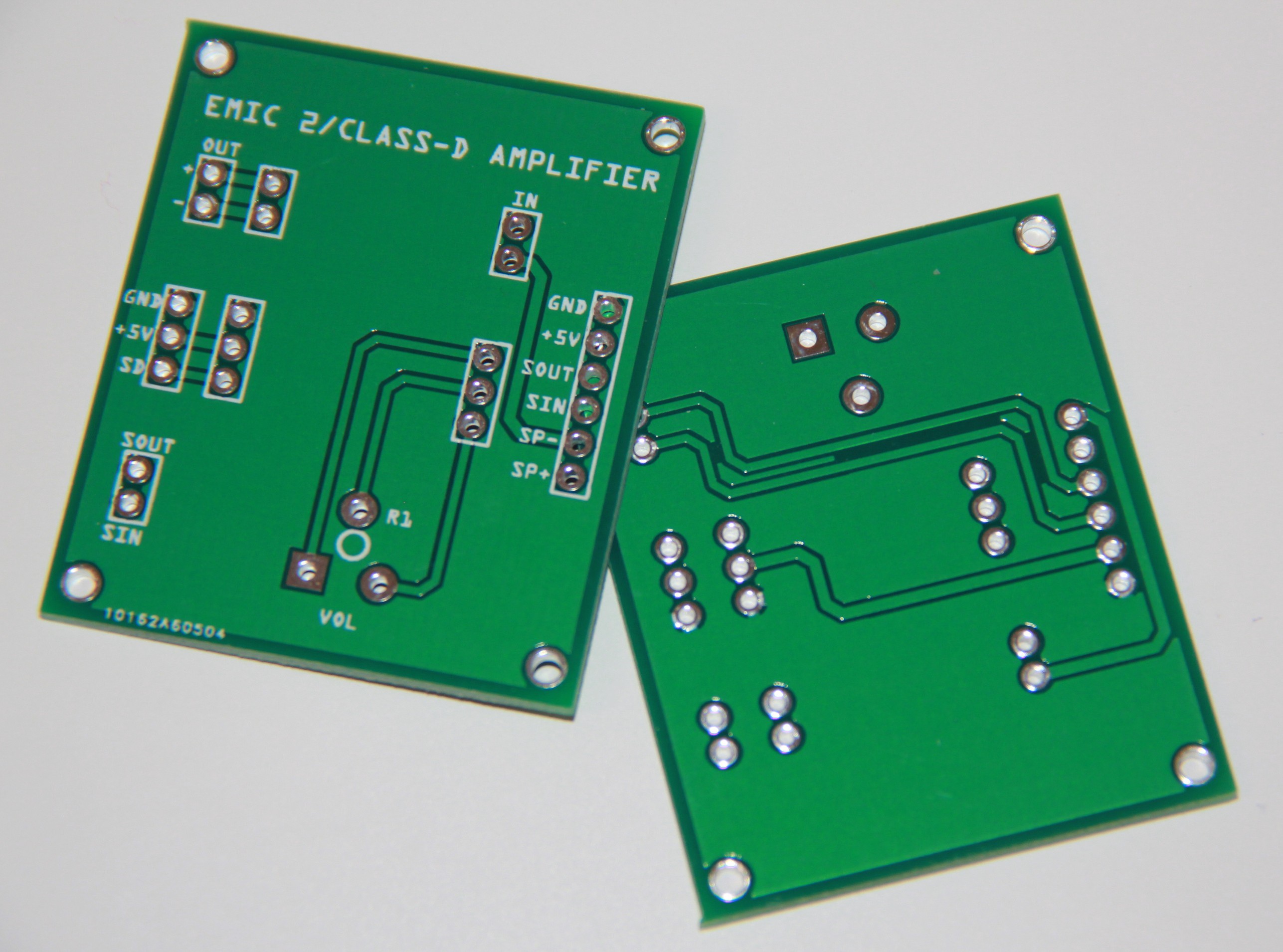

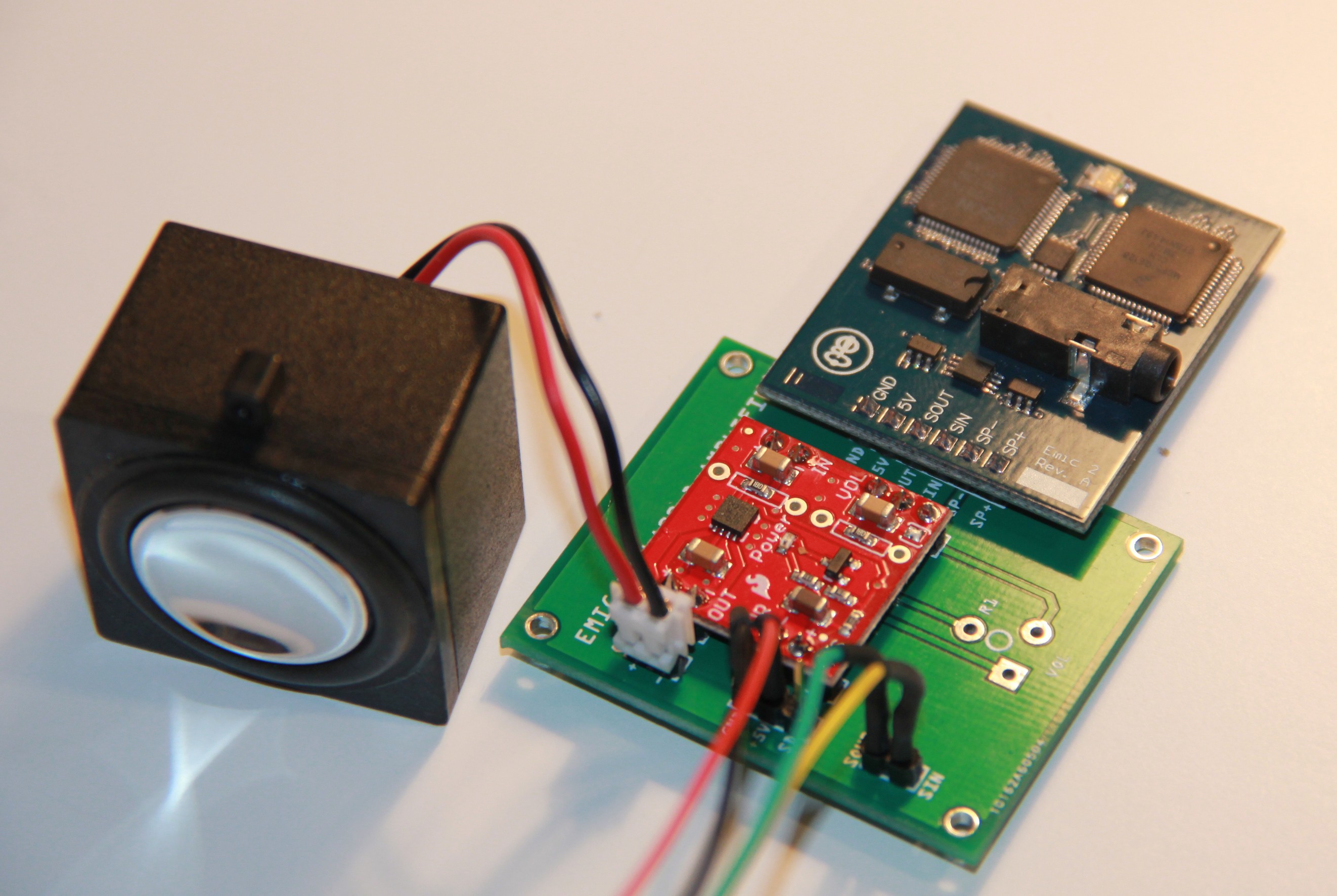

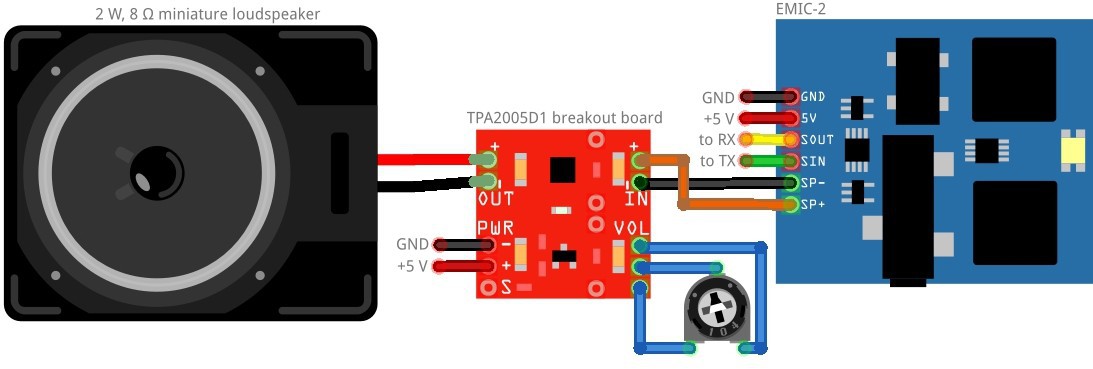

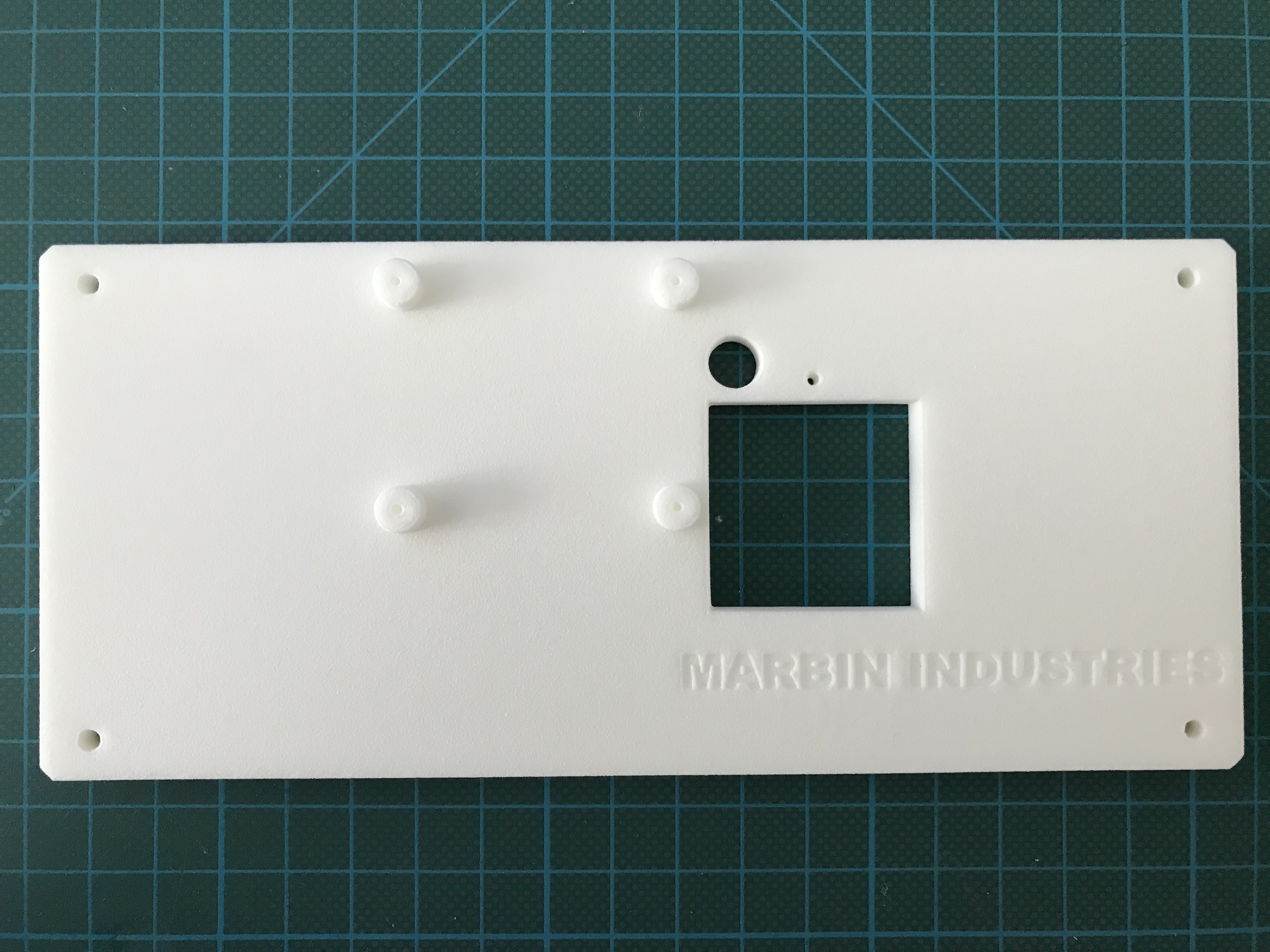

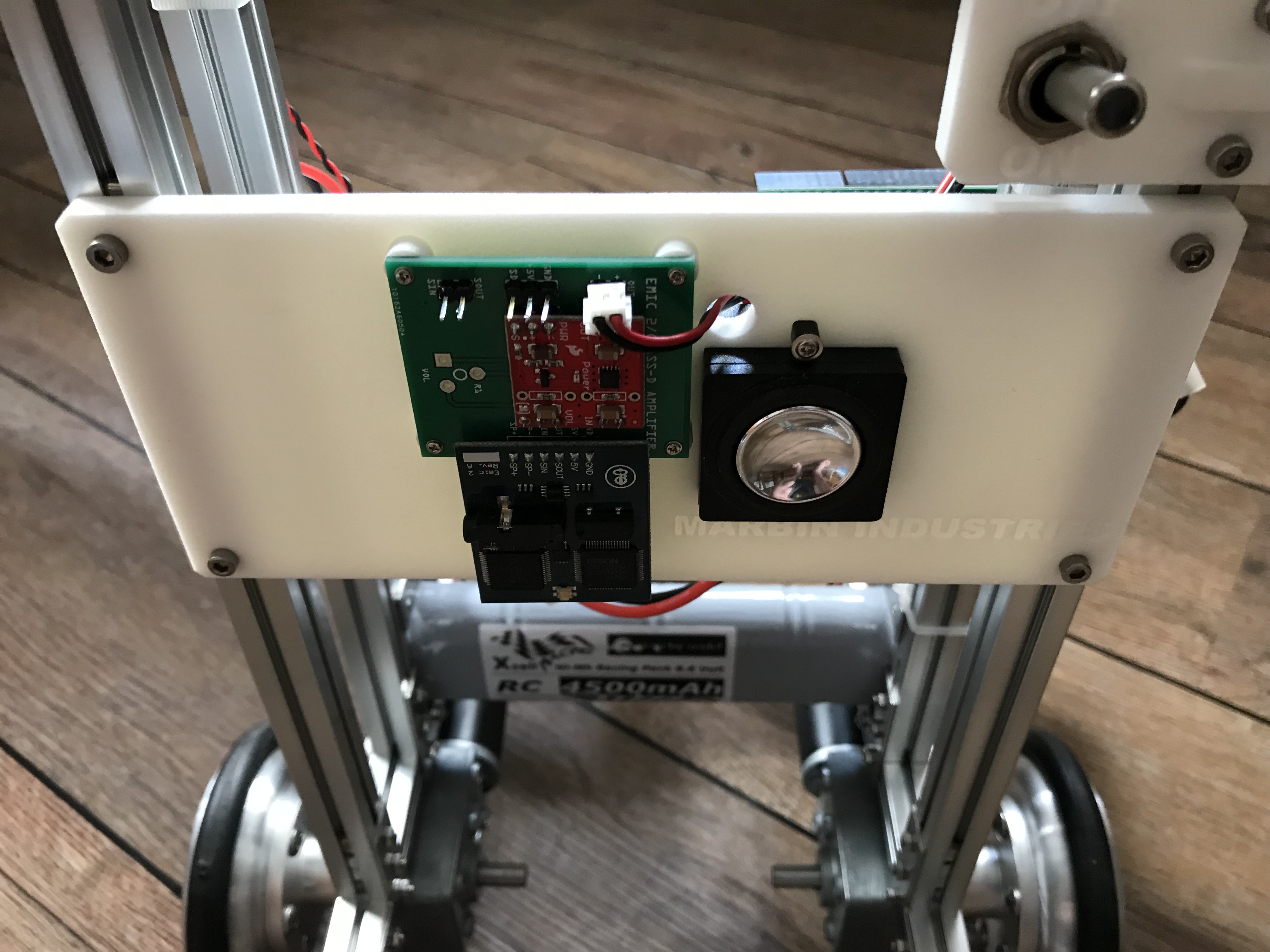

Like for my previous robot project Murphy I am using a 2 W, 8 Ω miniature loudspeaker, an EMIC-2 text-to-speech module, a TPA2005D1 breakout board and a custom designed carrier board:

Populated :

Wiring diagram:

![]()

Important notes: The EMIC-2 does not work properly with Arduino hardware serial. Use the SoftwareSerial Library instead. The potentiometer is not really necessary as the volume can be adjusted by software.

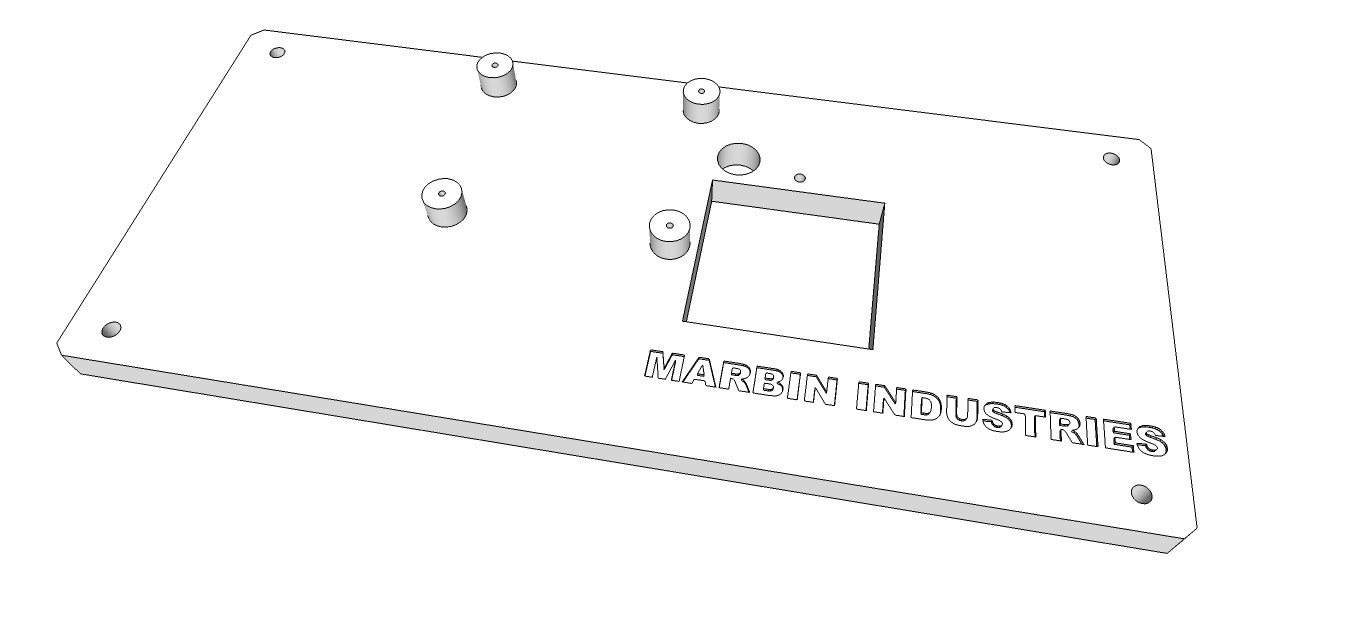

Speech module design:

![]()

Again, Shapeways has done a great job printing this part:

EMIC-2, TPA2005D1 breakout board and loudspeaker assembled and attached to the robot: -

Propulsion system of the robot

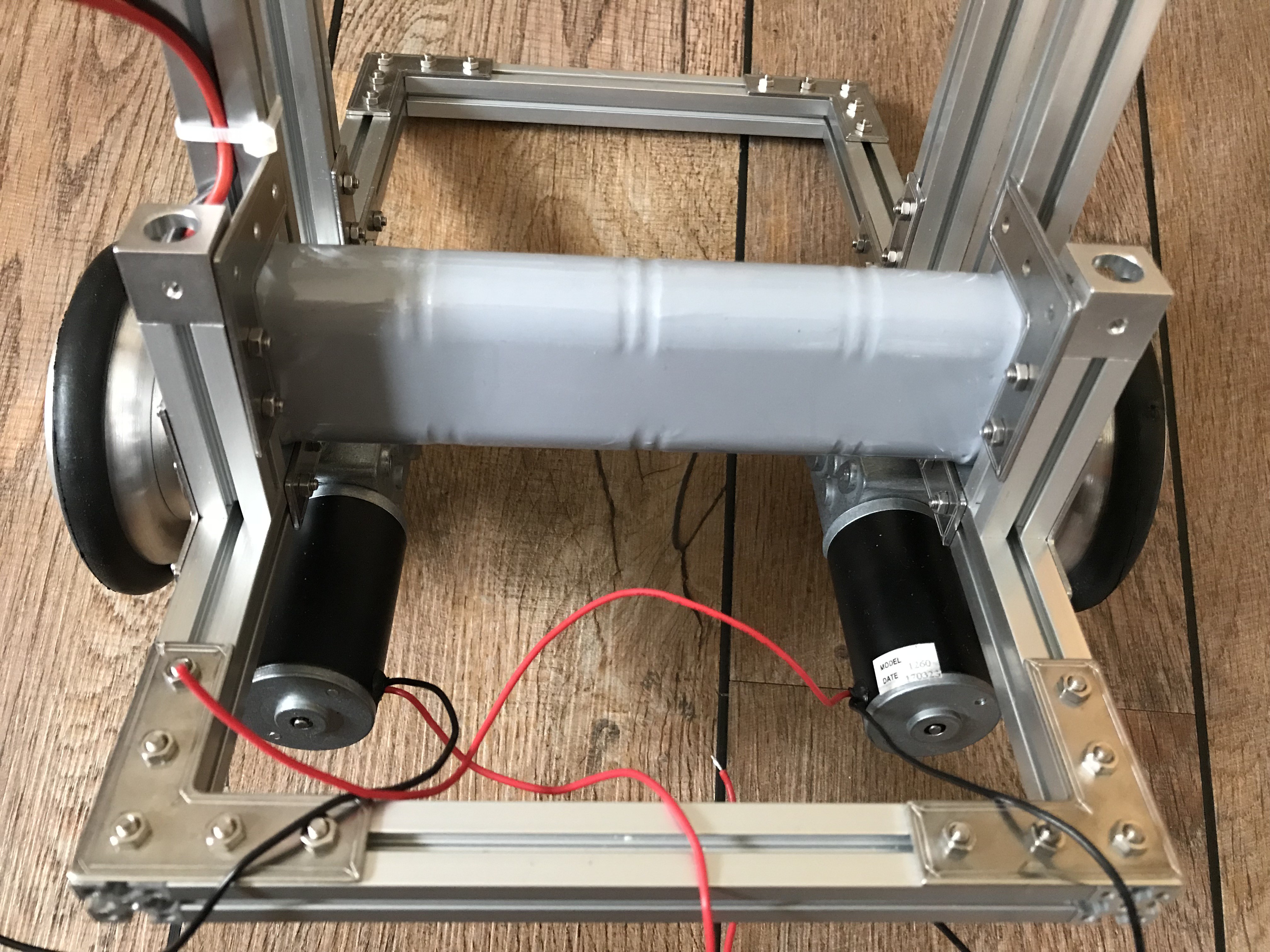

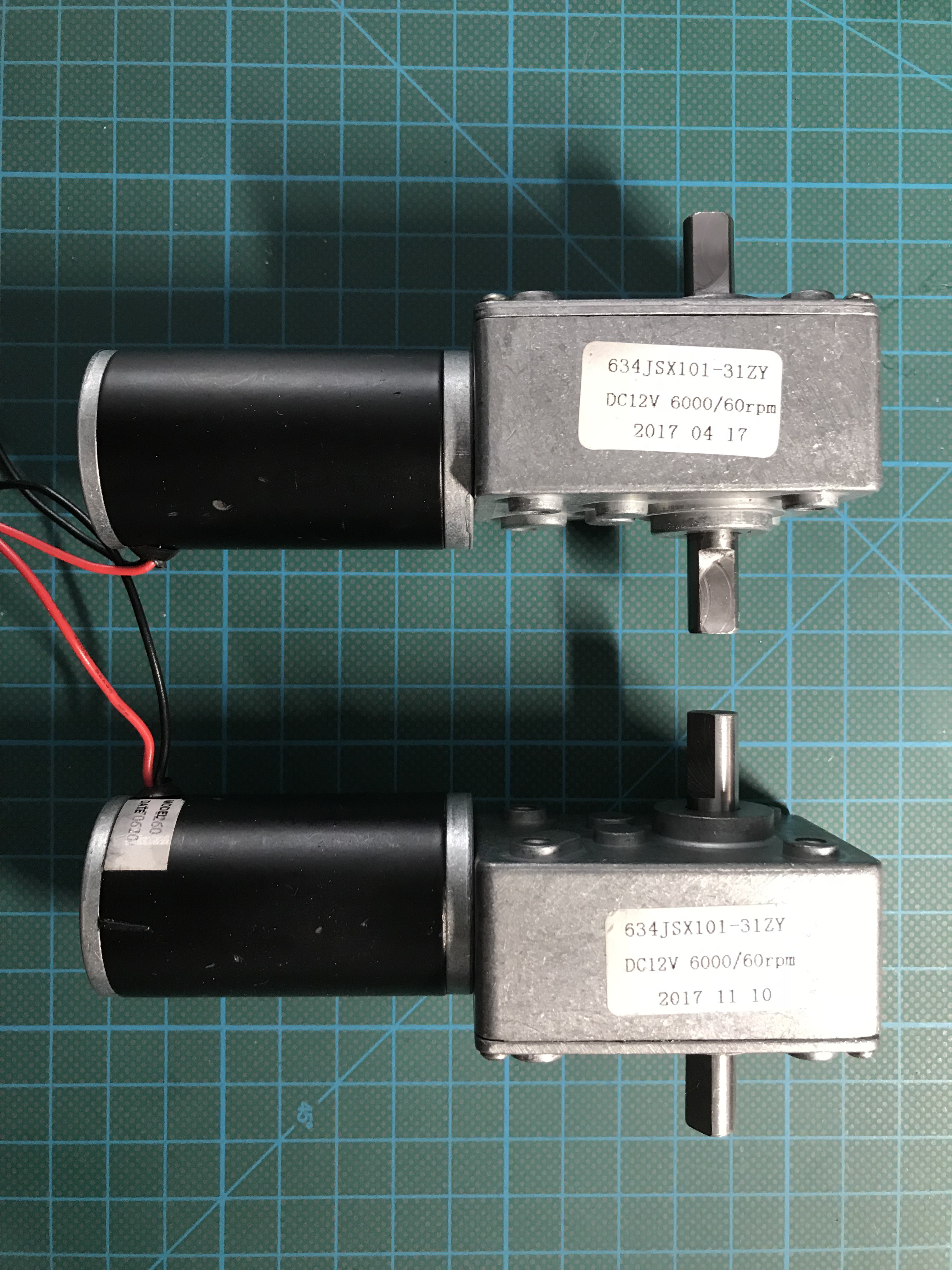

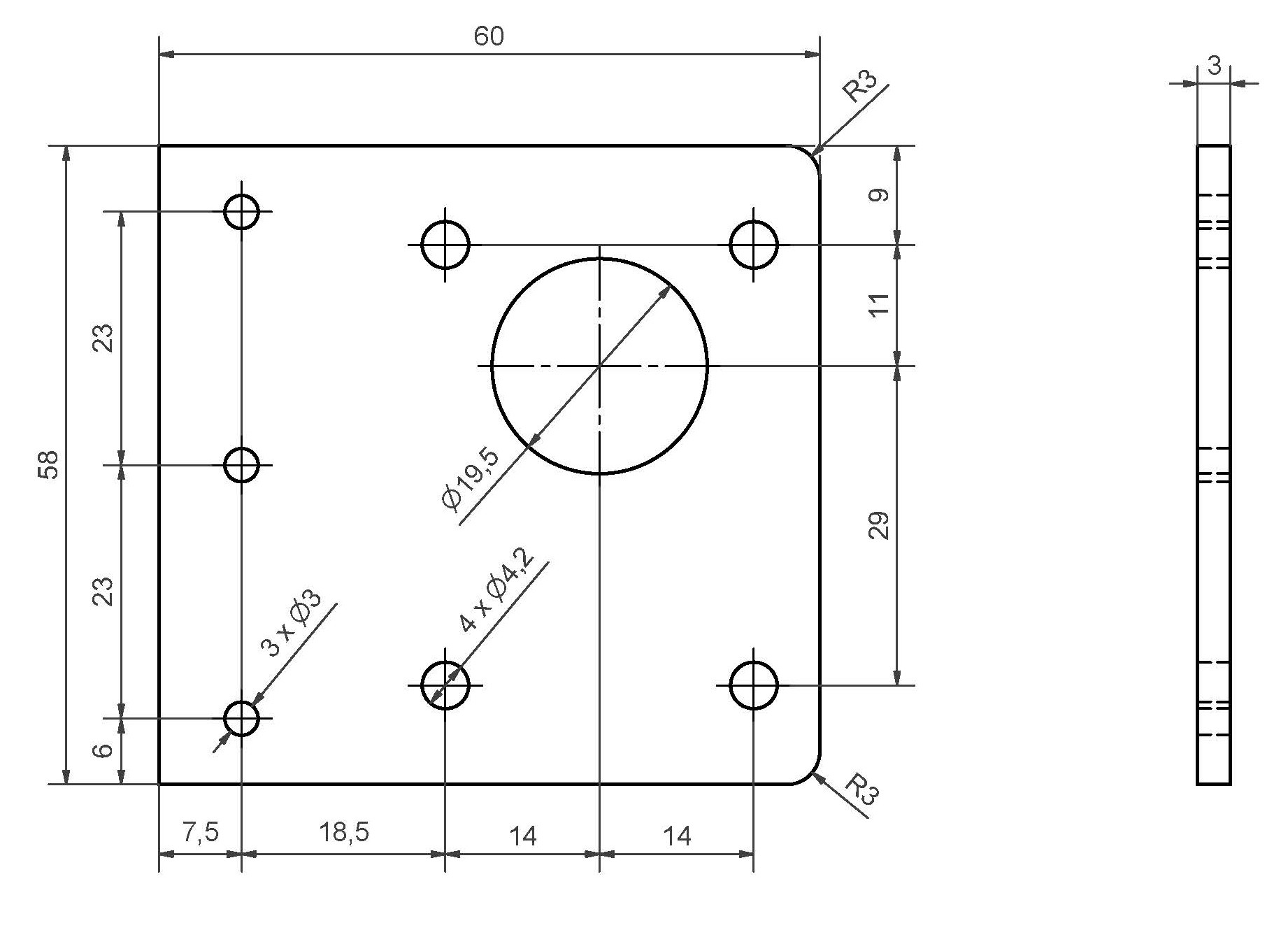

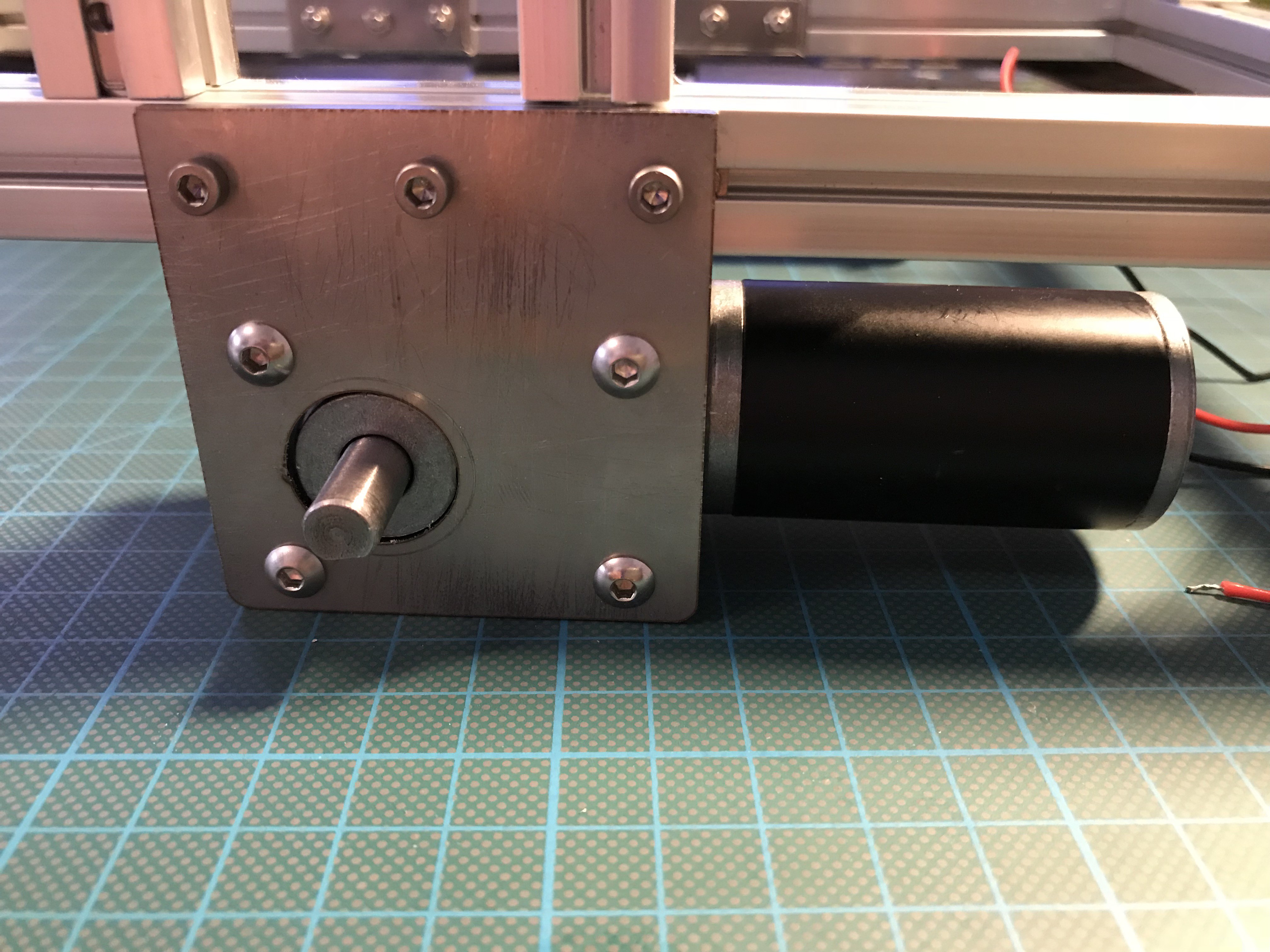

05/01/2018 at 15:01 • 0 commentsI will use these angular geared motors to propel the robot:

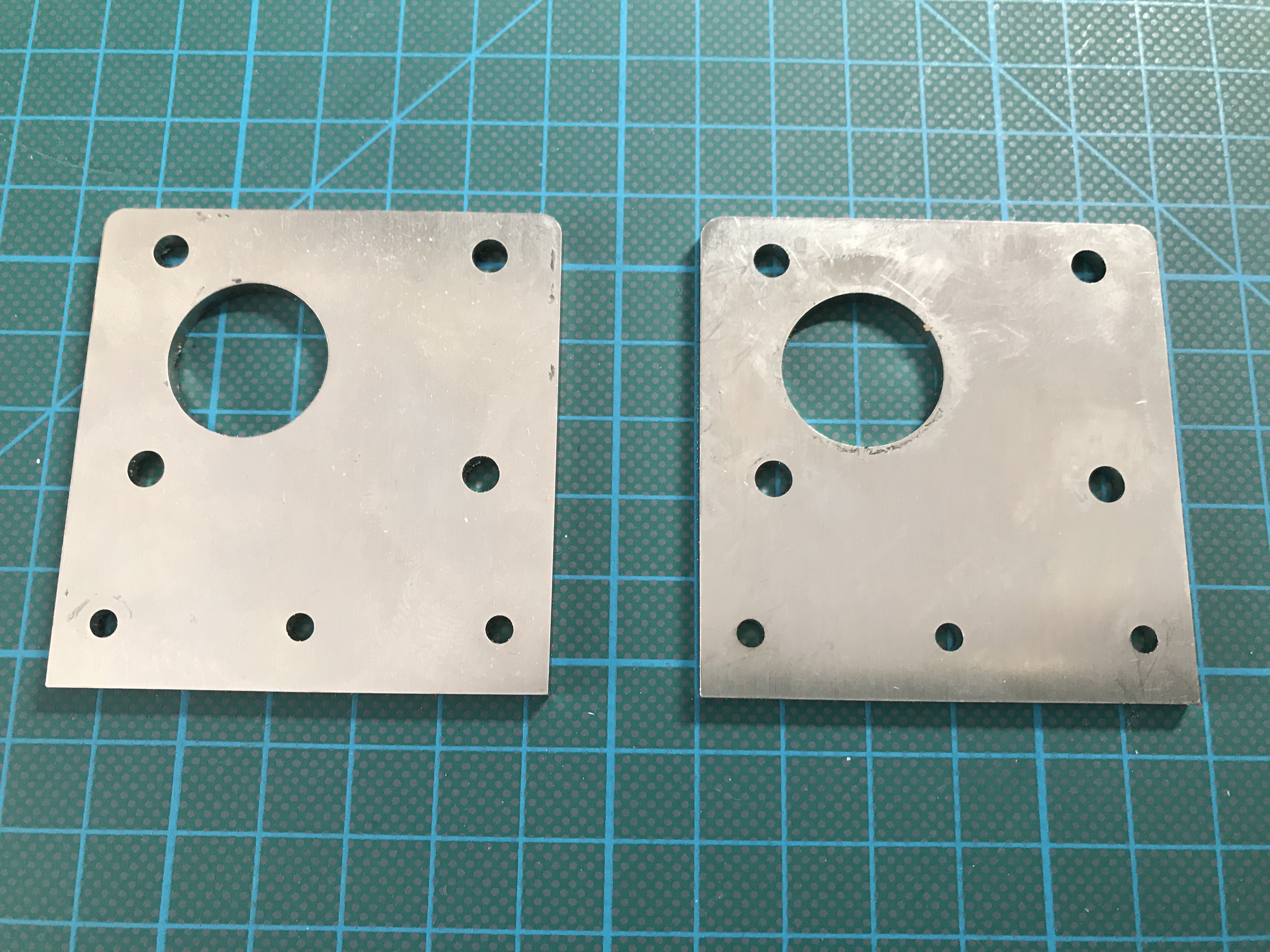

I designed according mounting plates to attach the motor to the 15 x 15 mm MakerBeam T-nut profiles and laser cut it from 3 mm stainless steel plates. The DFX file will be provided later in the file section.

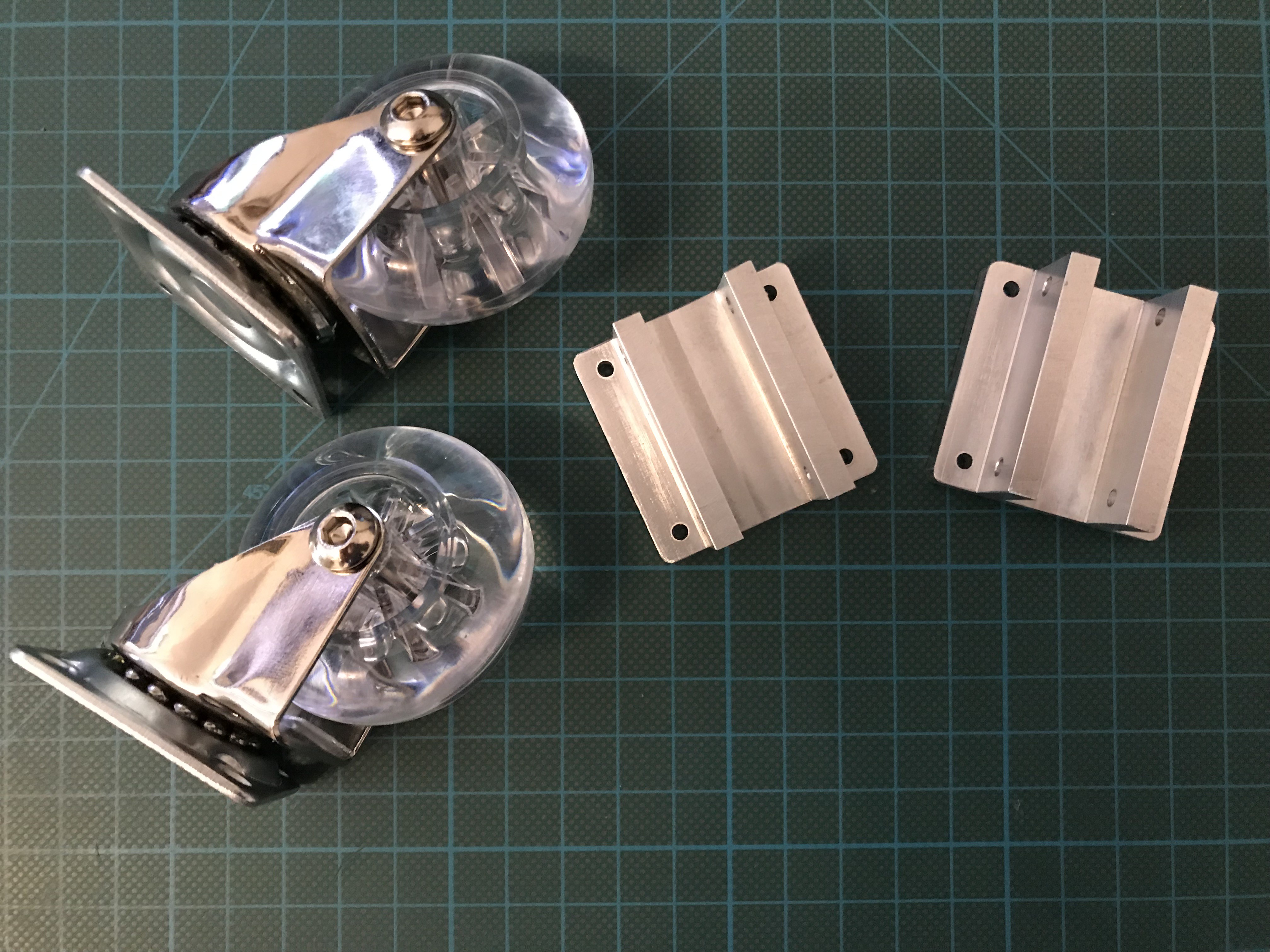

![]()

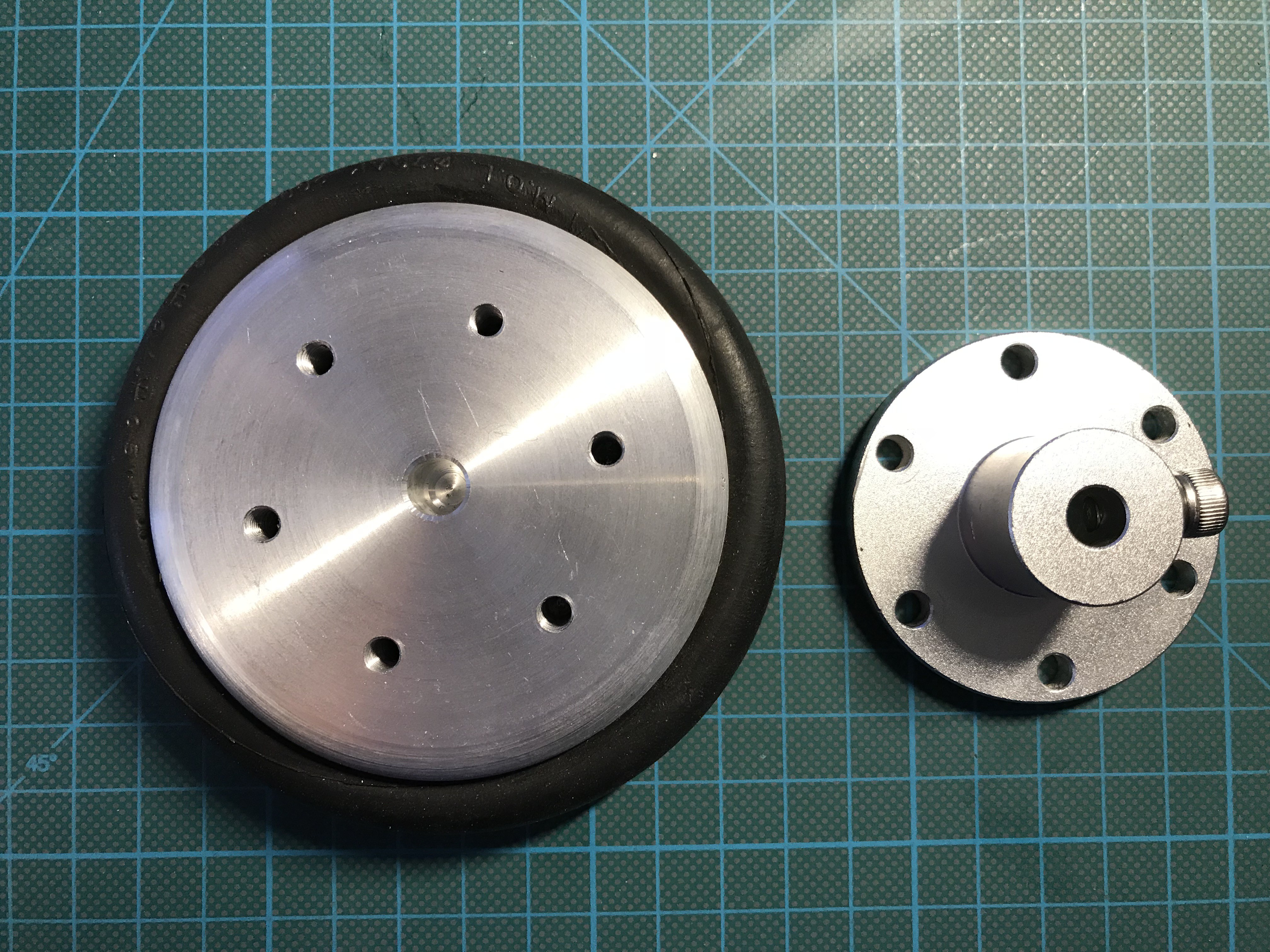

Steel plates attached to the motors and tested by a Pololu Dual VNH5019 Motor Driver Shield.Beefy aluminum wheel, CNC'd by a colleague in his workshop. Heavy, but this will help to keep the center of gravity nearby the ground, because the robot is very tall. Tire is a 10 mm dia O-ring. Next to it an off-the-shelf wheel hub for a 8 mm motor axis. The bore hole in the center of the wheel is there to position wheel and hub perfectly before fixing it by six M5 screws.

Motors attached to base.

Wheels attached to the motors:

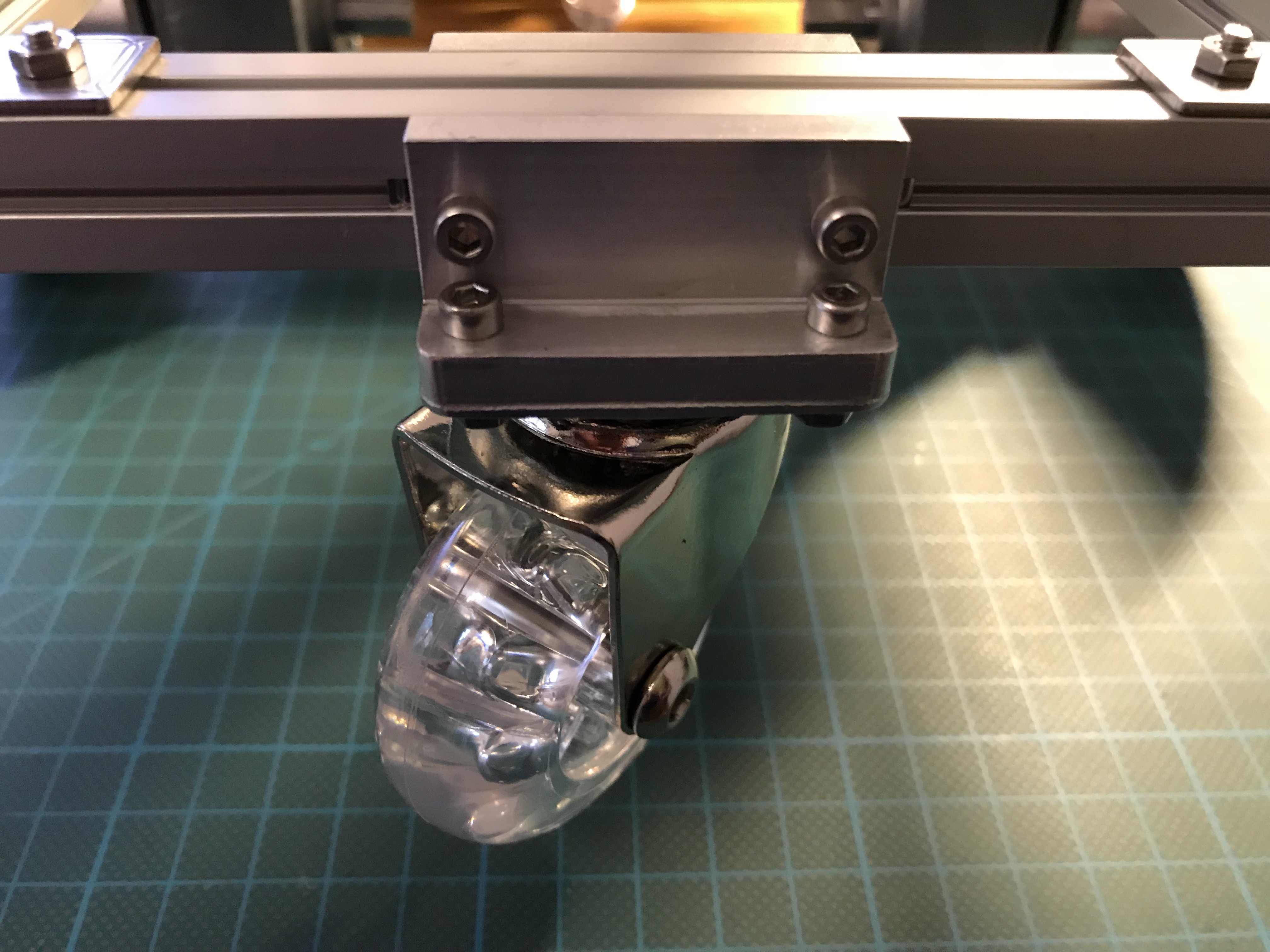

To assemble the caster wheels to the MakerBeam system I CNC'd two mounting brackets:It is important that the caster wheels have a few millimeter ground clearance, otherwise the robot can not overcome smallest obstacles like a door sill.

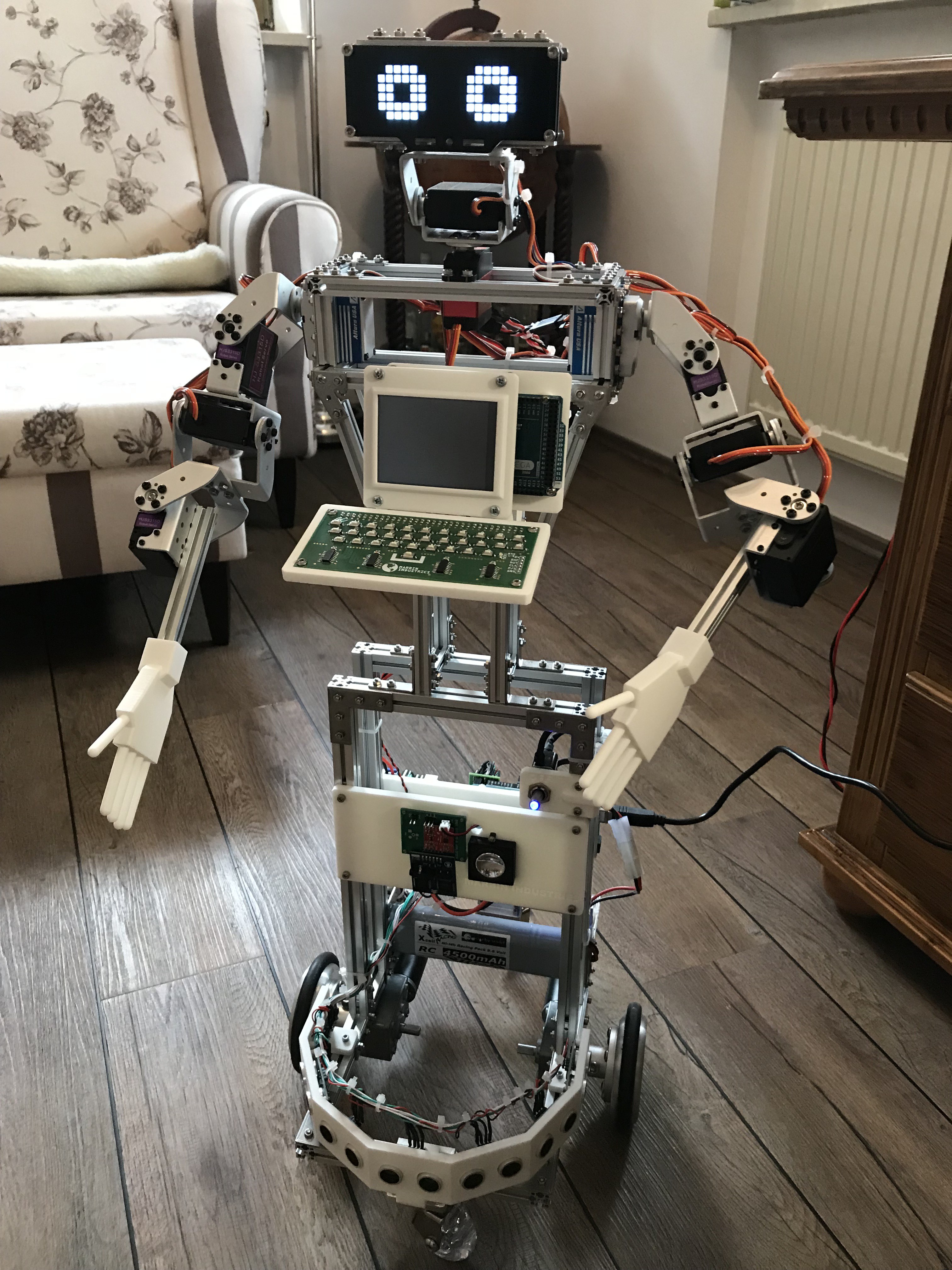

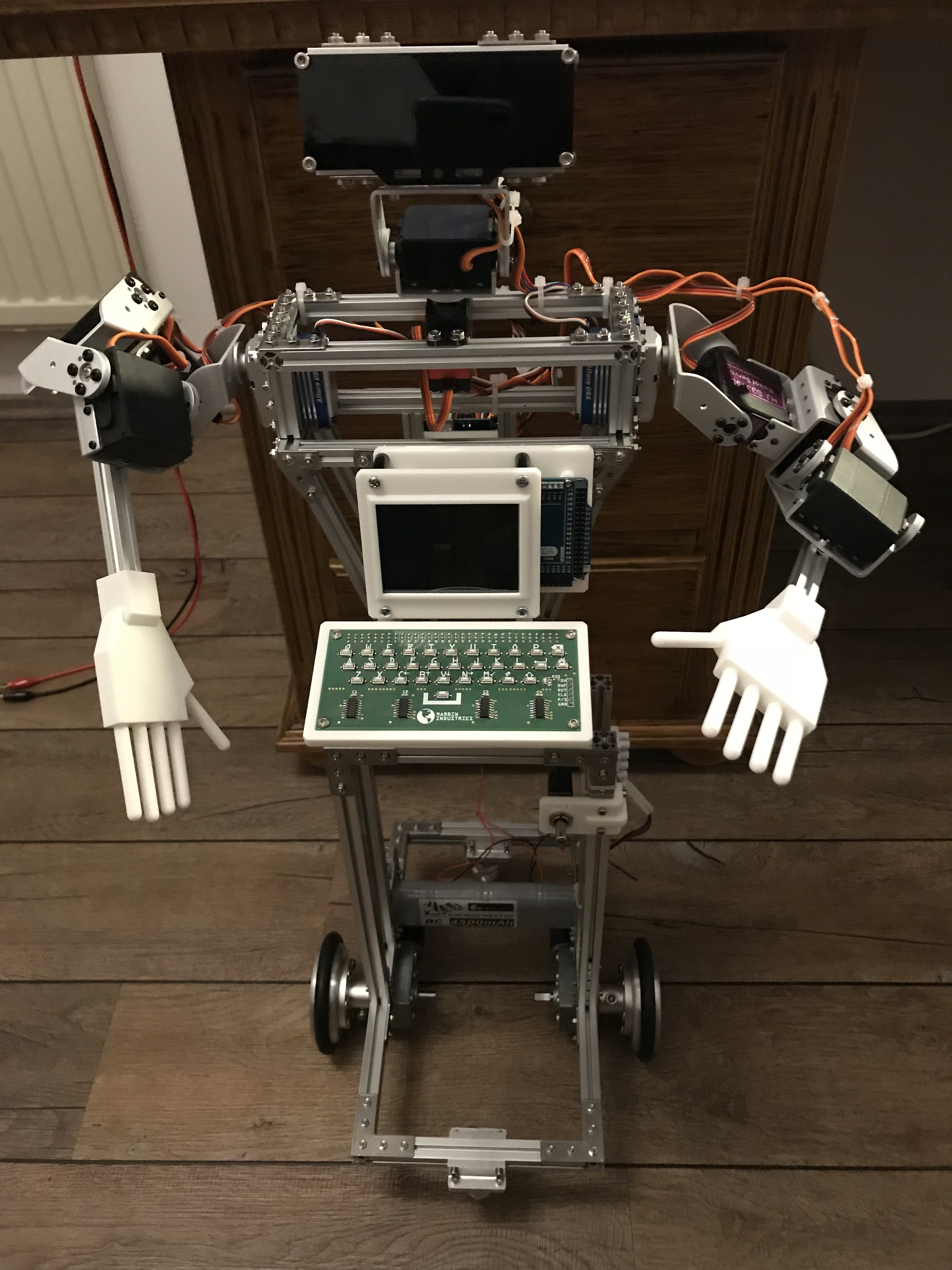

Modular Humanoid Robot "MARB"

Meet MARB, a modular humanoid robot you can build yourself

M. Bindhammer

M. Bindhammer

A lot of work went today into the vision module. I glued the level shifter into place with 2-component epoxy adhesive, wired the level shifter and the APDS-9960 breakout and assembled the module on MARB's head.

A lot of work went today into the vision module. I glued the level shifter into place with 2-component epoxy adhesive, wired the level shifter and the APDS-9960 breakout and assembled the module on MARB's head.

Testing a DS1302 RTC breakout from Sunfounder:

Testing a DS1302 RTC breakout from Sunfounder:

Sensors assembled:

Sensors assembled:

3-D printed part from Shapeways:

3-D printed part from Shapeways:

Populated extension shields for the two Arduino MEGA's, MARB has on board:

Populated extension shields for the two Arduino MEGA's, MARB has on board: Wired and tested ultrasonic sensor module:

Wired and tested ultrasonic sensor module:

Assembled and partly wired module. The LM 2596 based voltage regulator powers the micro controllers, the other step-down DC-DC converter the servos.

Assembled and partly wired module. The LM 2596 based voltage regulator powers the micro controllers, the other step-down DC-DC converter the servos.

3 parts to 3-D print: the TFT and Arduino MEGA bracket, the keyboard bracket and the TFT cover.

3 parts to 3-D print: the TFT and Arduino MEGA bracket, the keyboard bracket and the TFT cover.

Printed parts:

Printed parts: Touch screen module completed:

Touch screen module completed:

Like for my previous robot project

Like for my previous robot project  Populated :

Populated :

Beefy aluminum wheel, CNC'd by a colleague in his workshop. Heavy, but this will help to keep the center of gravity nearby the ground, because the robot is very tall. Tire is a 10 mm dia O-ring. Next to it an off-the-shelf wheel hub for a 8 mm motor axis. The bore hole in the center of the wheel is there to position wheel and hub perfectly before fixing it by six M5 screws.

Beefy aluminum wheel, CNC'd by a colleague in his workshop. Heavy, but this will help to keep the center of gravity nearby the ground, because the robot is very tall. Tire is a 10 mm dia O-ring. Next to it an off-the-shelf wheel hub for a 8 mm motor axis. The bore hole in the center of the wheel is there to position wheel and hub perfectly before fixing it by six M5 screws.

Wheels attached to the motors:

Wheels attached to the motors: