Here is a quick video showing off some of the robot's functionality. I took this at 11:00 PM at the lab, so I apologize for the quality:

I'd like to begin by posting a few of the key design features before linking in all of the design files. Then each project update will showcase each phase of design, and how I planned to meet these requirements.

If feature creep scares you, then this lifestyle ain't for you! This project started as just the robot, and later grew to incorporate the trackball electronics, the video server, a GUI to wrap it all together, and bonus software features requested on the week of delivery ;)

Here were the essential requirements for the robot itself:

| Description | Quantitative Requirement |

| Wirelessly stream low-latency video to computers within the same sub-network. | 640x480 at less than 200 mS latency |

| Save the displayed video stream as a sequence of individual images with an associated timestamp of the moment it was displayed, | ~10ms accuracy |

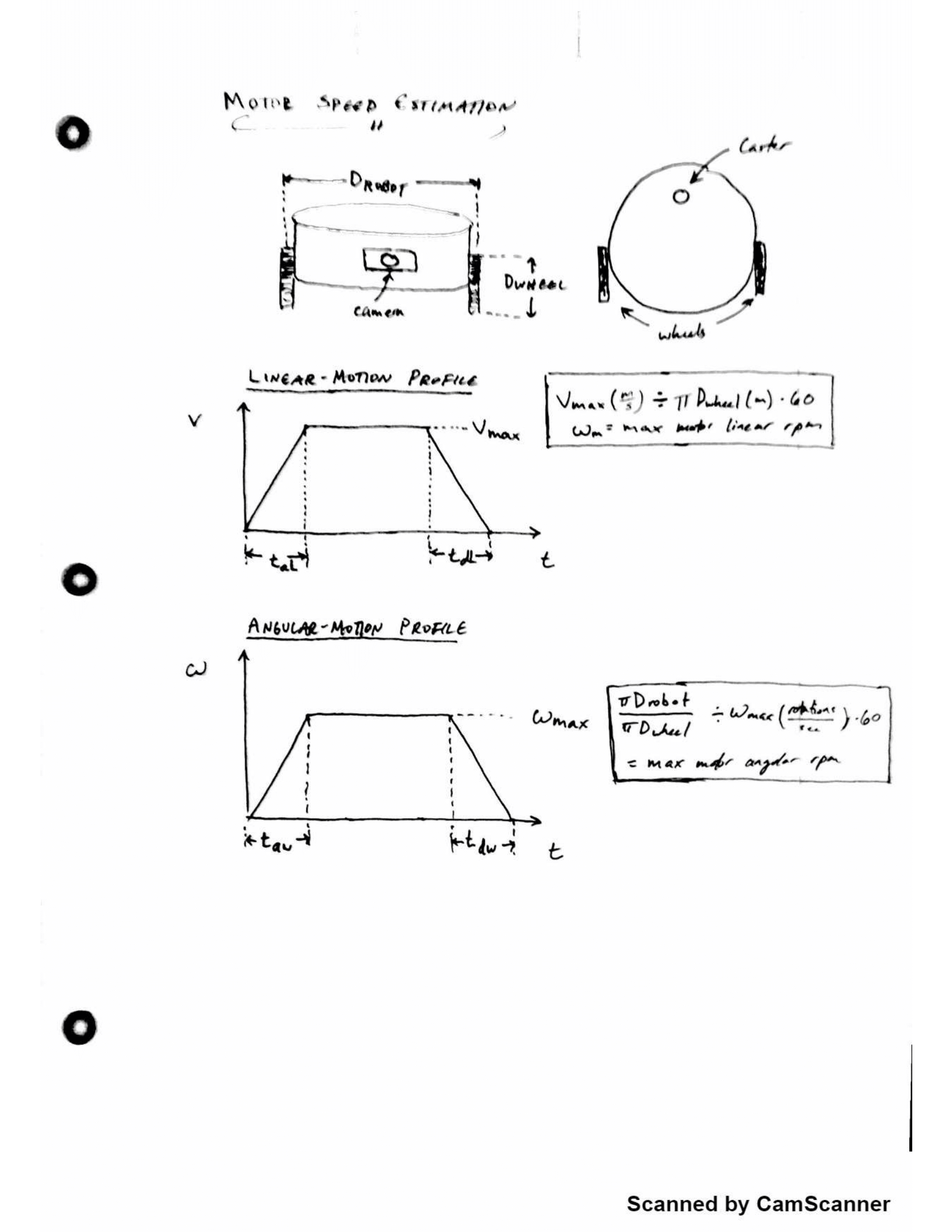

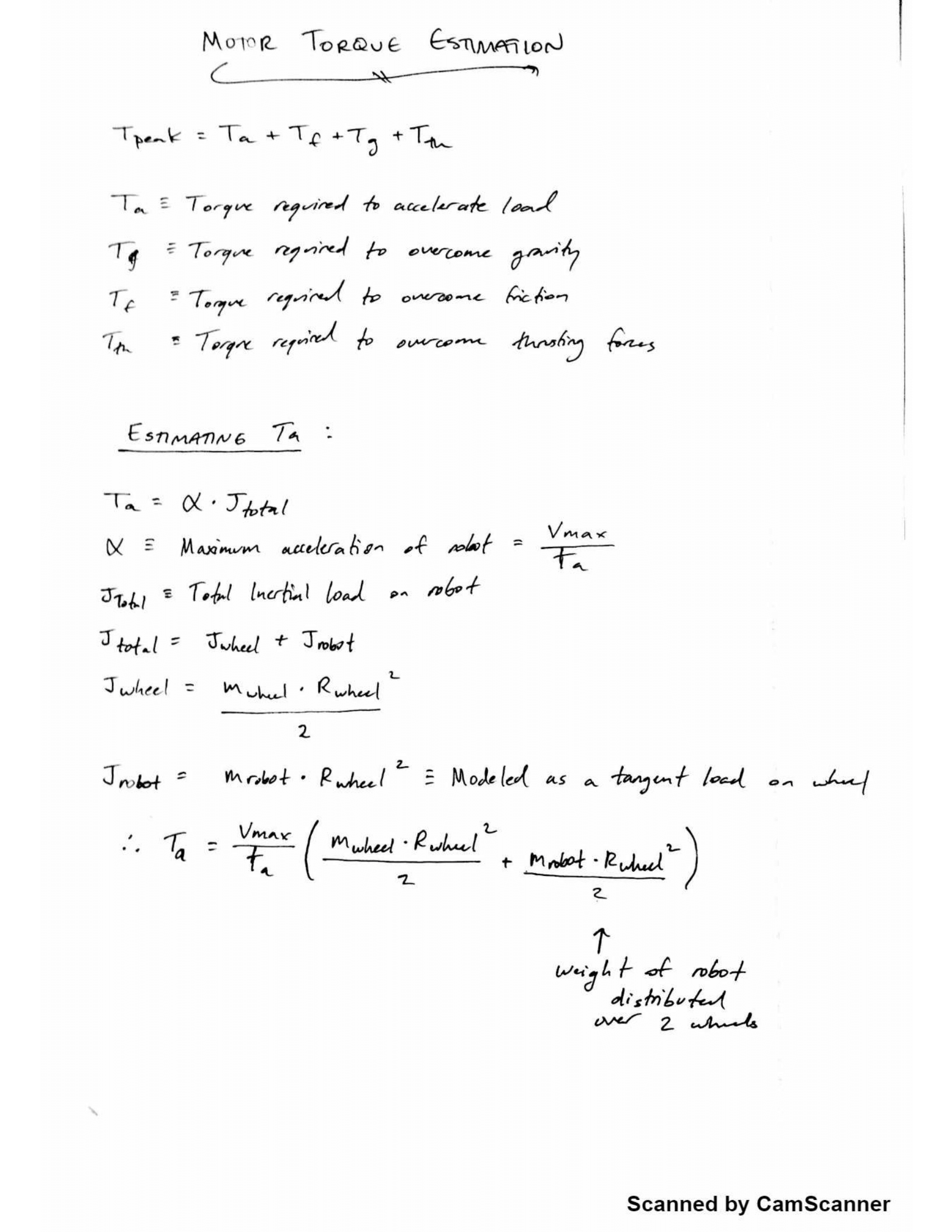

| Mechanically mimic the kinematics of a lab mouse under a "head-fixed" position. | > Top linear velocity of 50 cm/s > Minimum linear acceleration of 2 m/s/s > Top rotational speed of 2 rot/s (from stopped position) |

| Control robot movement wirelessly from trackball | |

| Minimum battery life of 1 hour |
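
To make the timestamp requirement a bit more concrete, here is a minimal Python sketch of one way to save each displayed frame as its own image with the display time baked into the filename. It is not the project's actual code: the function names, the `frame_<index>_<nanoseconds>.jpg` naming scheme, and the assumption that frames arrive as already-encoded JPEG bytes are all hypothetical.

```python
import time
from pathlib import Path
from typing import Optional


def frame_filename(frame_index: int, displayed_at_ns: int) -> str:
    """Build a filename carrying the frame index and display time (hypothetical scheme)."""
    return f"frame_{frame_index:06d}_{displayed_at_ns}.jpg"


def save_frame(out_dir: Path, frame_index: int, jpeg_bytes: bytes,
               displayed_at_ns: Optional[int] = None) -> Path:
    """Write one already-encoded frame to disk, named by when it was displayed.

    If no timestamp is passed in, the current wall-clock time is used, so the
    caller should capture it at the moment the frame is actually shown.
    """
    if displayed_at_ns is None:
        displayed_at_ns = time.time_ns()  # nanoseconds since the Unix epoch
    out_dir.mkdir(parents=True, exist_ok=True)
    path = out_dir / frame_filename(frame_index, displayed_at_ns)
    path.write_bytes(jpeg_bytes)
    return path
```

Taking the timestamp right where the frame is drawn, rather than when the file is written, keeps disk latency out of the ~10 ms budget.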

Pretty lenient all things considered! No demands for any specific hardware, no requirements for any specific software, and given they're operating under an exploratory research grant, no "do or die" style deadlines.

My client expects this project to go through several iterations (which is always a sign of sanity in a potential employer), but who wouldn't?

Consider a testing session with the mouse under the 'scope for 30 minutes. You've got one camera on the microscope, one on the bot, and a handful of others monitoring mouse behavior and orientation, all sampling at ~30 frames/sec. Between the two "main" video feeds, you're looking at over 100k frames of data (or about 126 GB of uncompressed pixel data). And although I don't understand all the details, I'd be pretty amazed if that was all the data you needed to uncover all of the convolution networks of a rodent's visual cortex...
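
For anyone who wants to sanity-check those numbers, here is a rough back-of-the-envelope sketch in Python. The frame count follows directly from the figures above (30 minutes, ~30 frames/sec, two feeds). The byte count depends on resolution and bit depth, which I am only assuming here: both feeds at 640x480 with 3 bytes per pixel. Under that assumption the total comes out near 100 GB, the same order of magnitude as the figure quoted above; a higher-resolution or deeper-bit-depth microscope camera would push it up.

```python
def session_frames(minutes: int, fps: int, feeds: int) -> int:
    """Total number of frames captured across all feeds in one session."""
    return minutes * 60 * fps * feeds


def uncompressed_bytes(frames: int, width: int, height: int,
                       bytes_per_pixel: int) -> int:
    """Raw pixel data size, ignoring container and metadata overhead."""
    return frames * width * height * bytes_per_pixel


# Values from the post: 30-minute session, ~30 frames/sec, two "main" feeds.
frames = session_frames(minutes=30, fps=30, feeds=2)

# Assumed, not from the post: 640x480 on both feeds, 24-bit colour.
total = uncompressed_bytes(frames, width=640, height=480, bytes_per_pixel=3)

print(f"{frames} frames, {total / 1e9:.1f} GB uncompressed")
```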

Given the sheer size of the data that needs to be collected and analyzed, any improvements can save a lot of people's time, and are well worth the money!

_____________________________________________________________________________

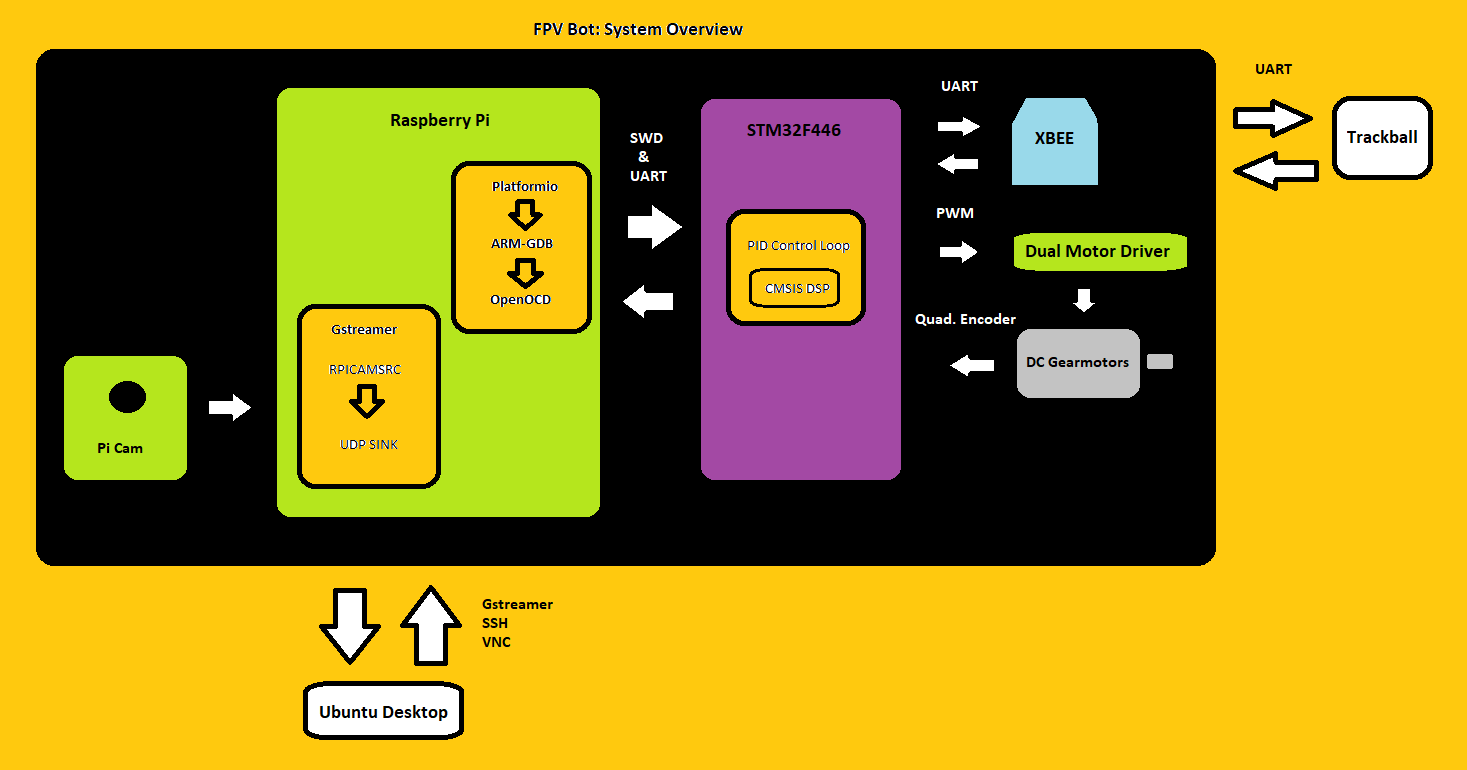

I realize I still have a lot of information to unpack here from a documentation standpoint, so I put together a quick chart to show how data is flowing through this system.
