We have launched our second Kickstarter campaign!

Our first Kickstarter is below:

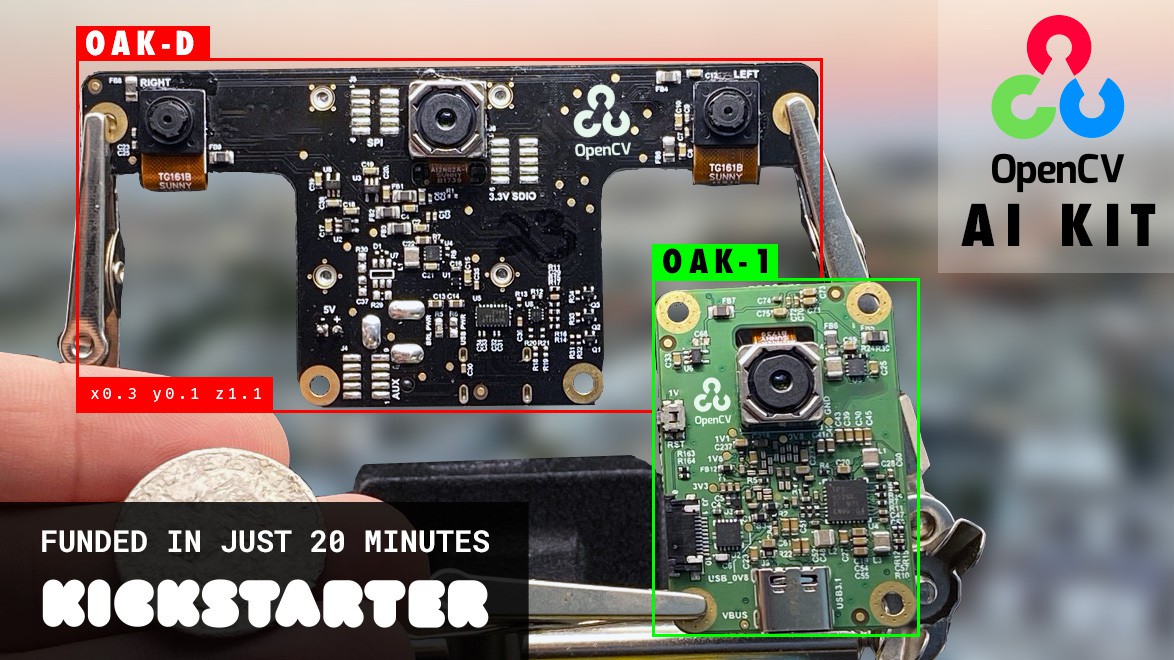

Today, our team is excited to release the OpenCV AI Kit (OAK): a modular, open-source ecosystem of MIT-licensed hardware, software, and AI training that lets you embed Spatial AI and CV super-powers into your product.

And best of all, you can buy this complete solution today and integrate it into your product tomorrow.

Back our campaign today!

https://www.kickstarter.com/projects/opencv/opencv-ai-kit?ref=card

The Why

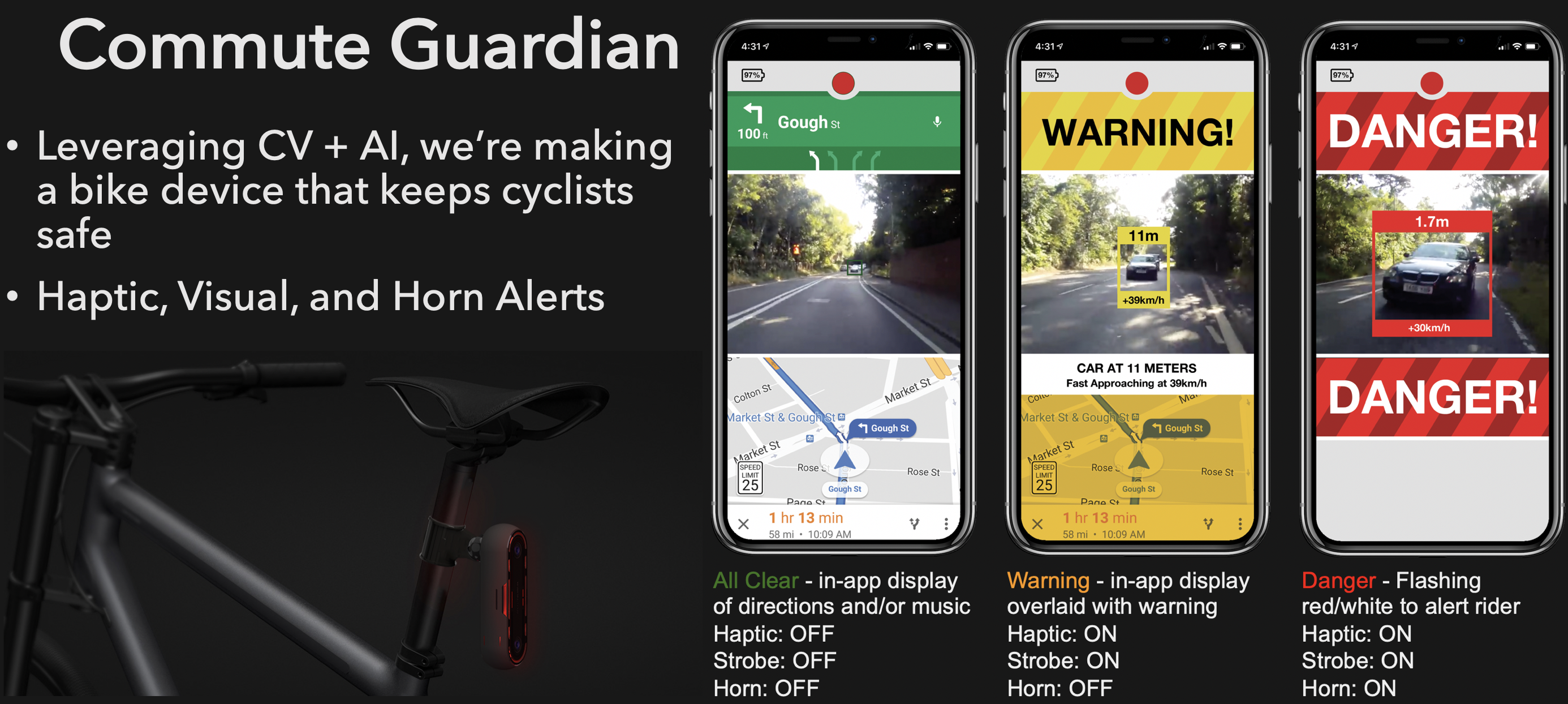

- There’s an epidemic of injuries and deaths among people who ride bikes in the US

- The majority of cases involve distracted driving caused by smartphones (social media, texting, e-mailing, etc.)

- We set out to make people on bicycles in the US safer

- We’re technologists

- Focused on AI/ML/Embedded

- So we’re seeing if we can make a technology solution

Commute Guardian

(If you'd like to read more about CommuteGuardian, see here)

DepthAI Platform

- In prototyping the Commute Guardian, we realized how powerful the combination of Depth and AI is.

- And we realized that no such embedded platform existed

- So we built it. And we're releasing it to the world through a Crowd Supply campaign, here

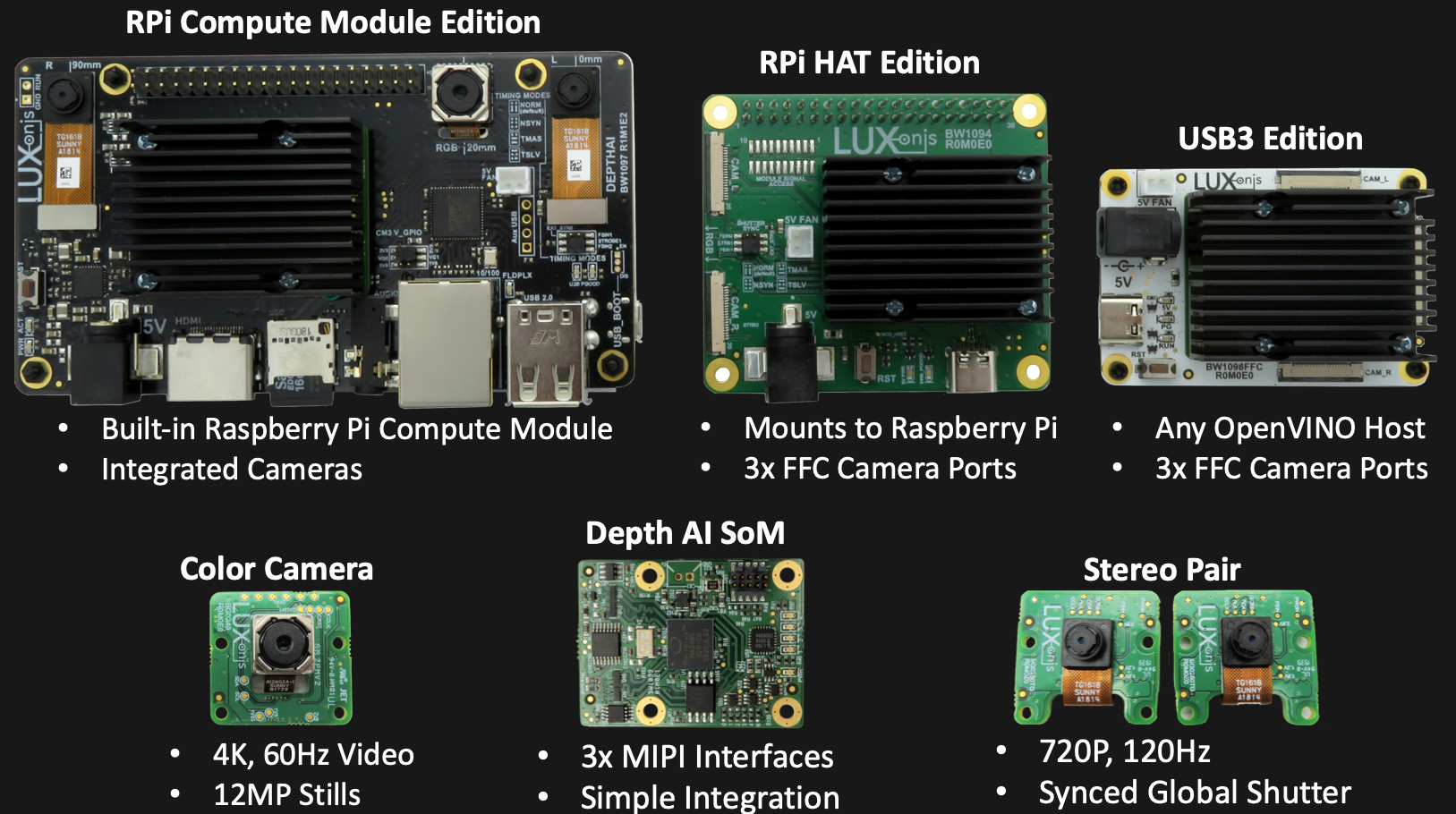

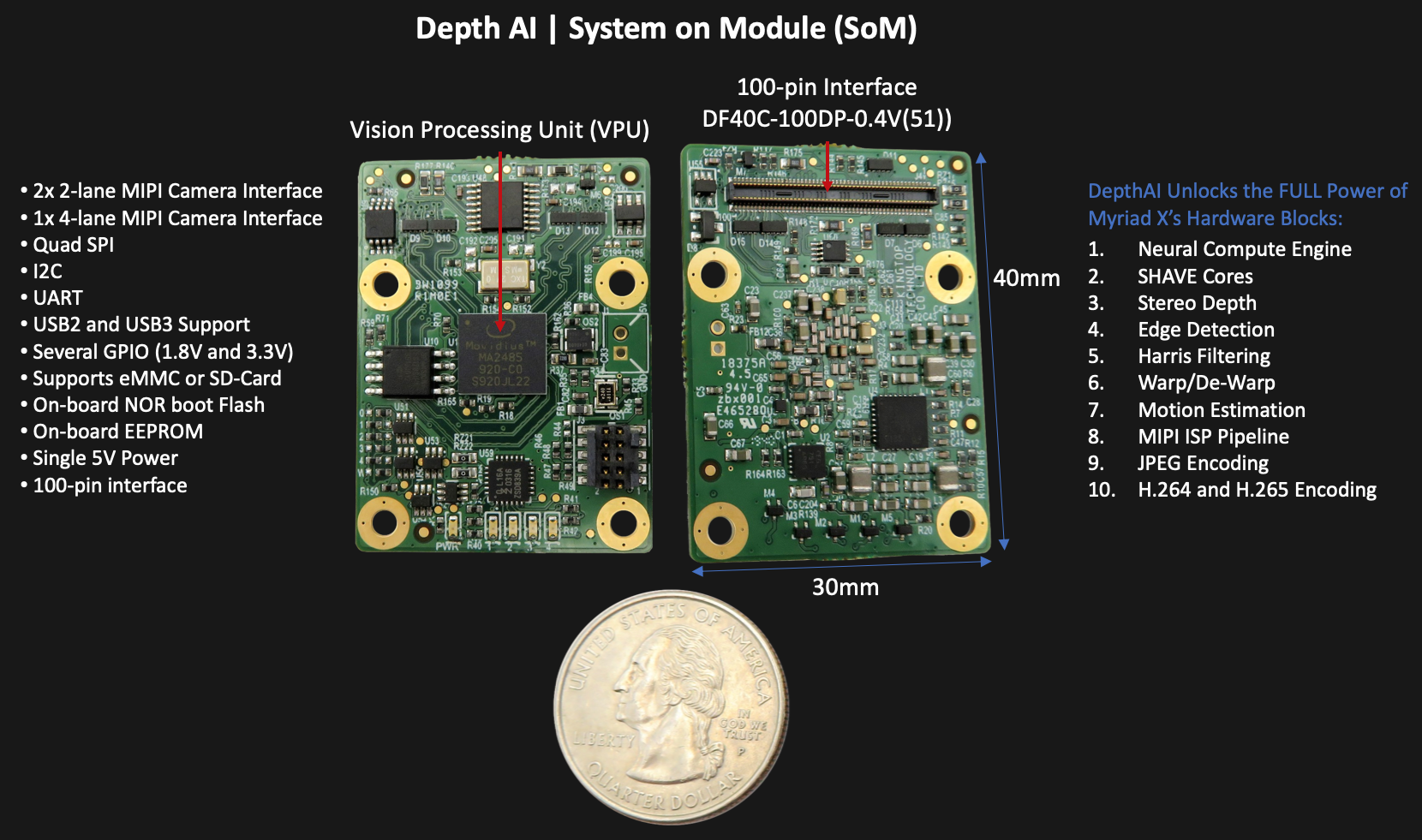

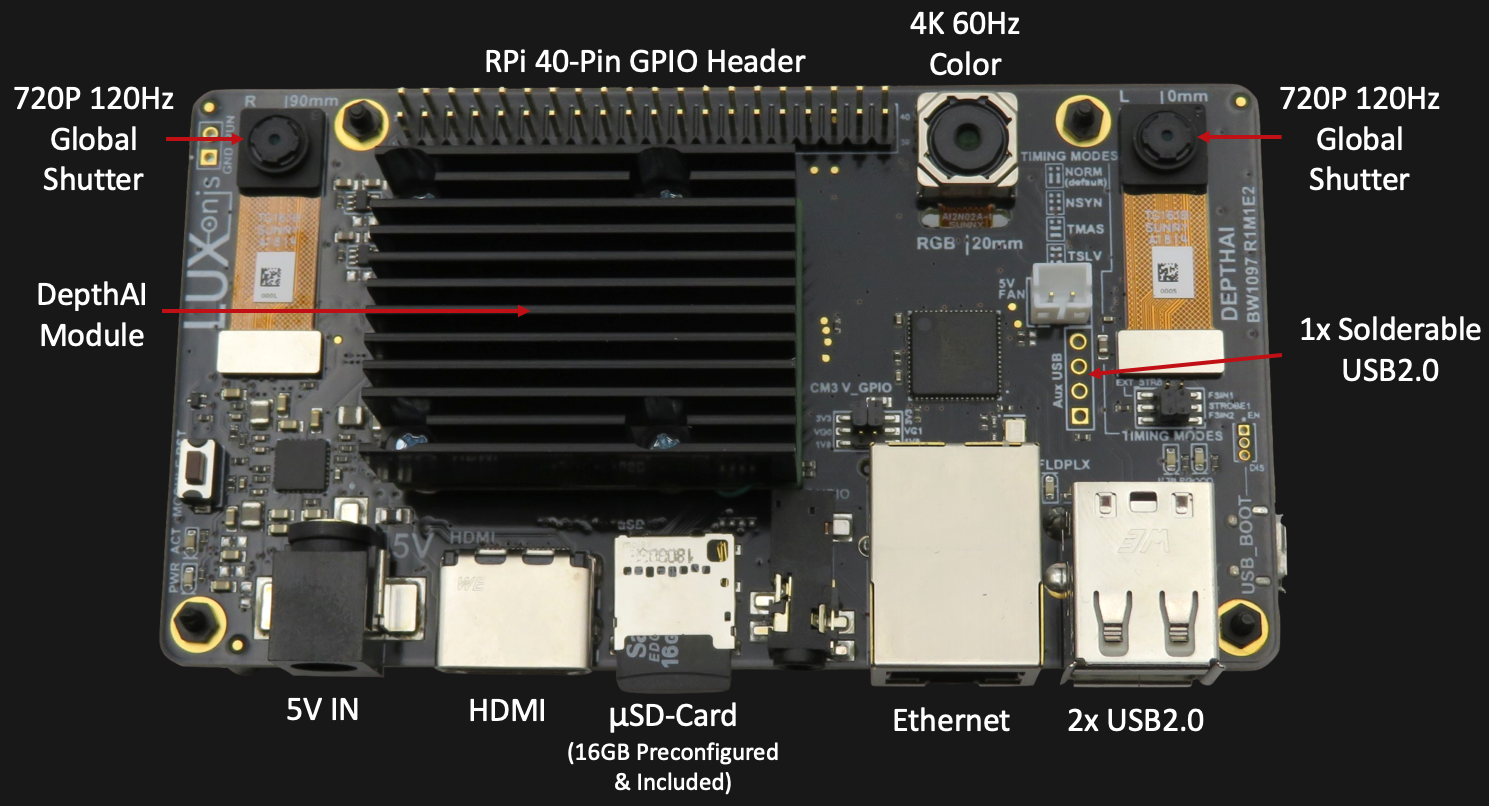

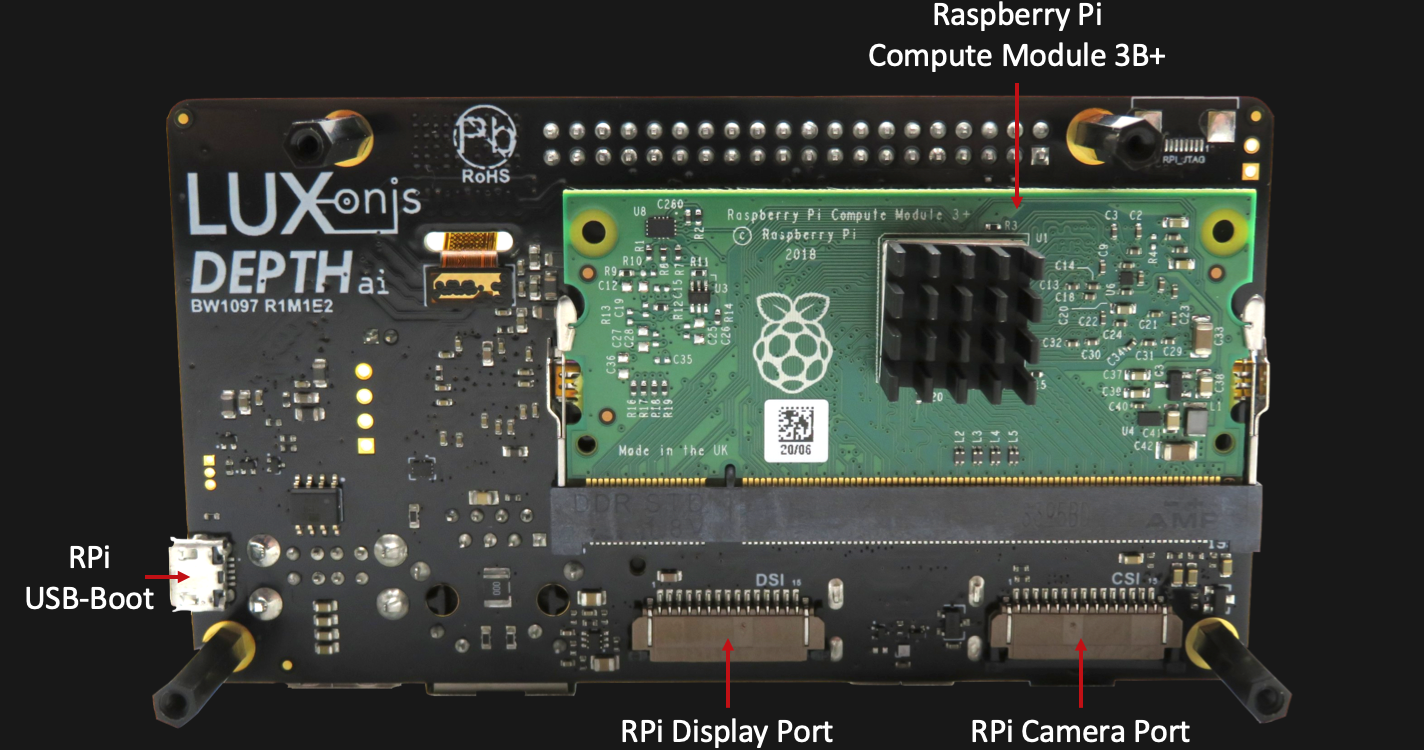

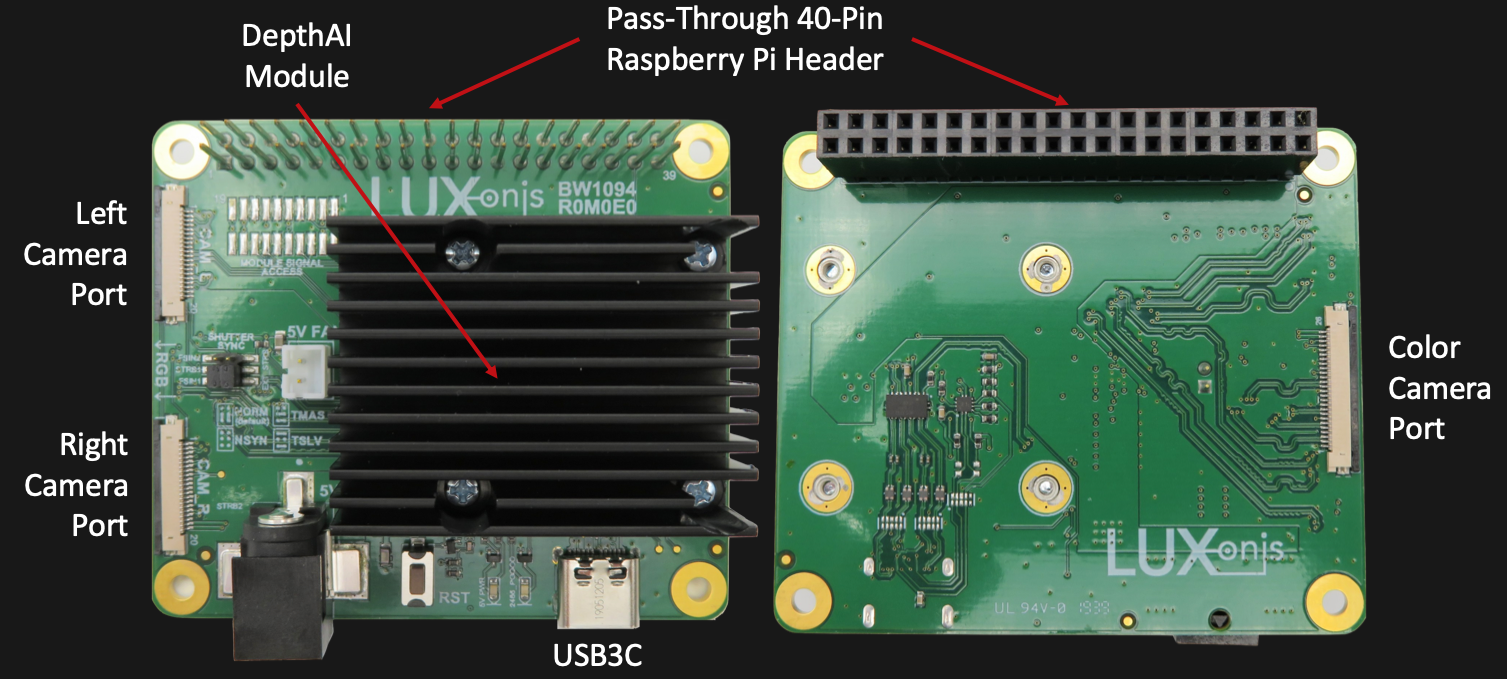

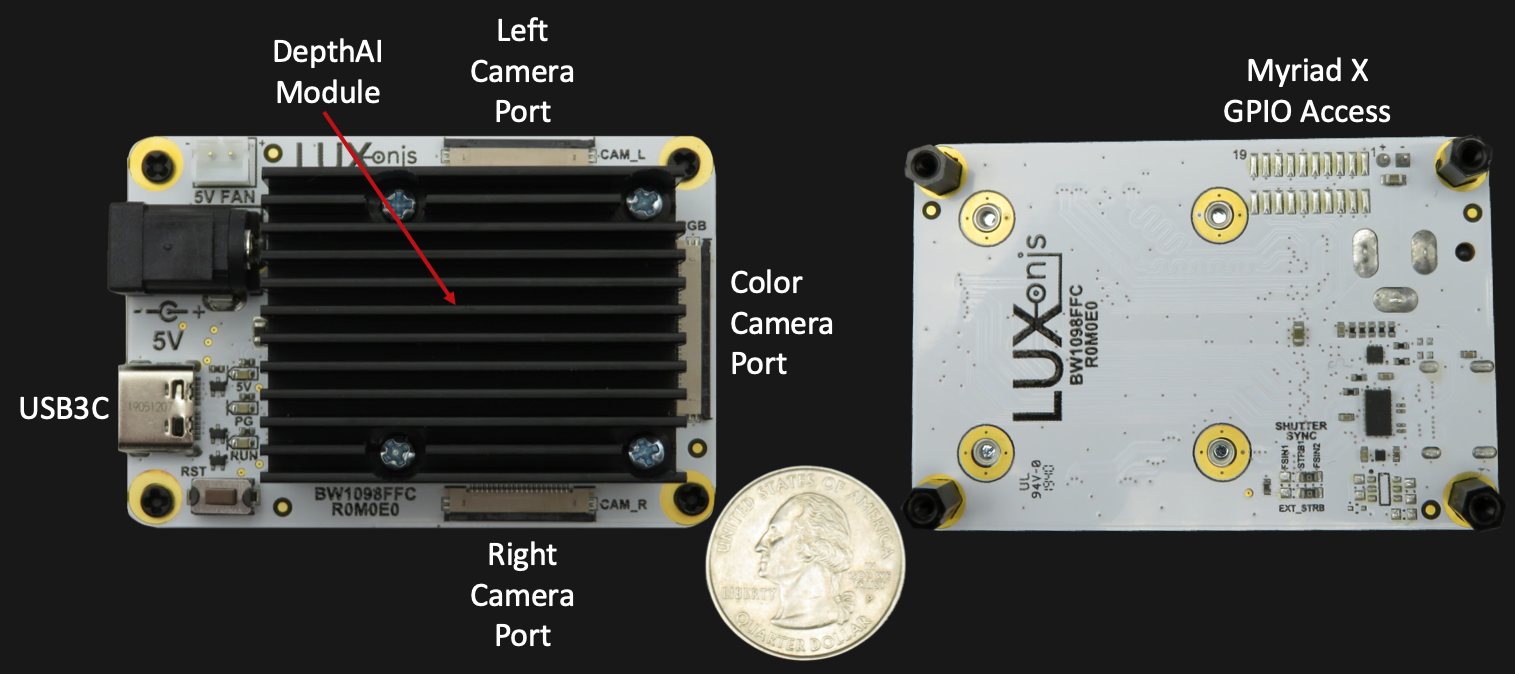

We want this power to be easily embeddable into products, yours and ours, in a variety of form factors. So we made a System on Module that exposes all the key interfaces through an easy-to-integrate 100-pin connector.

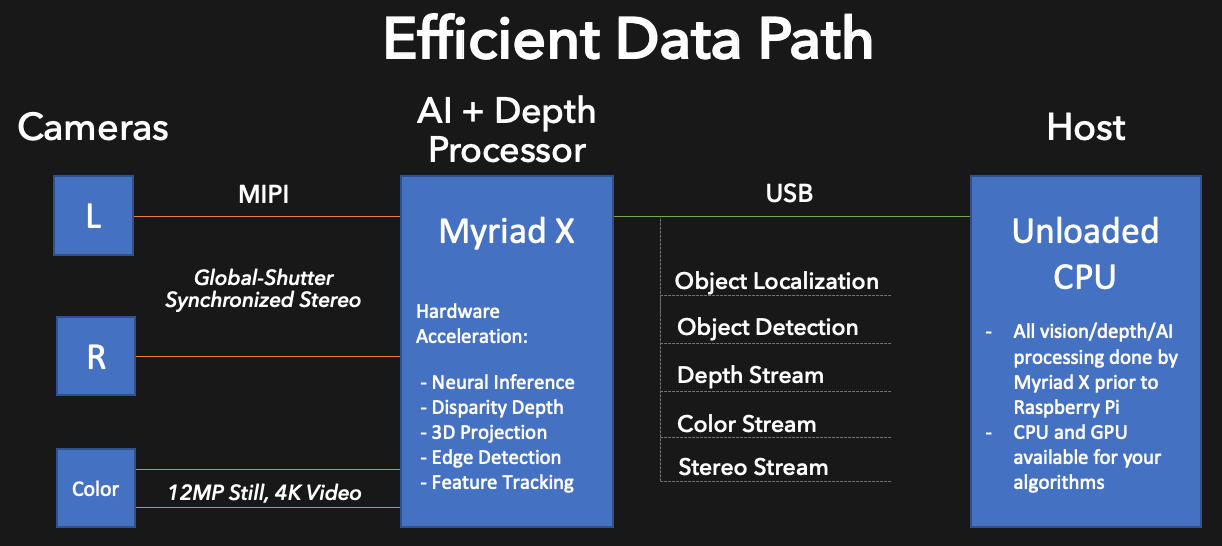

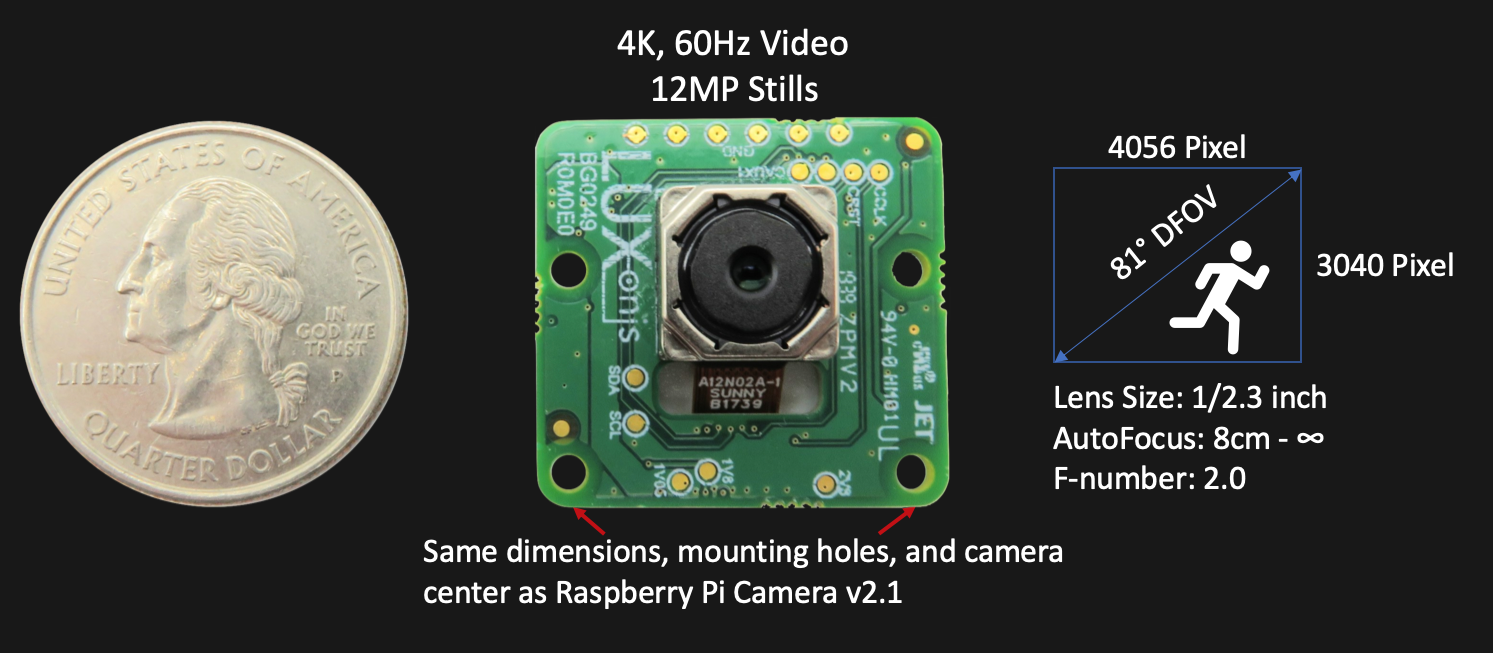

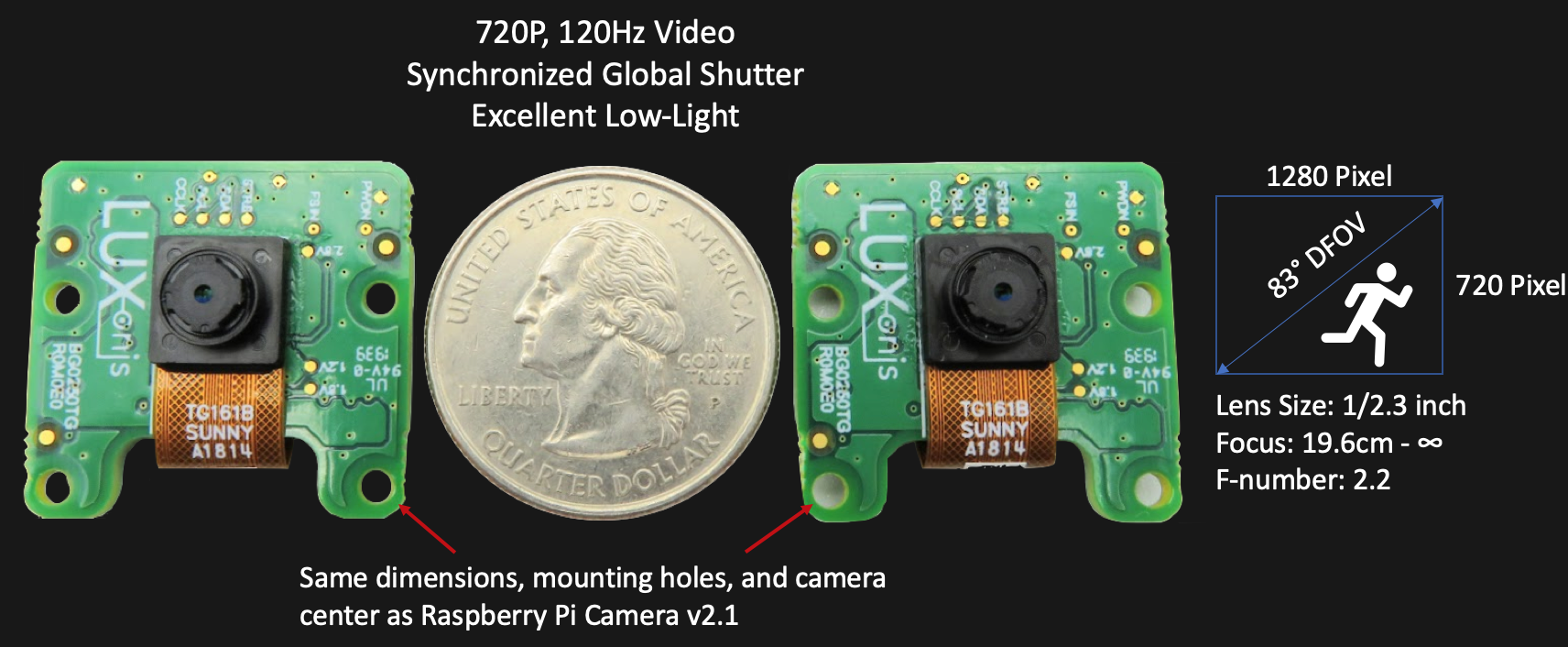

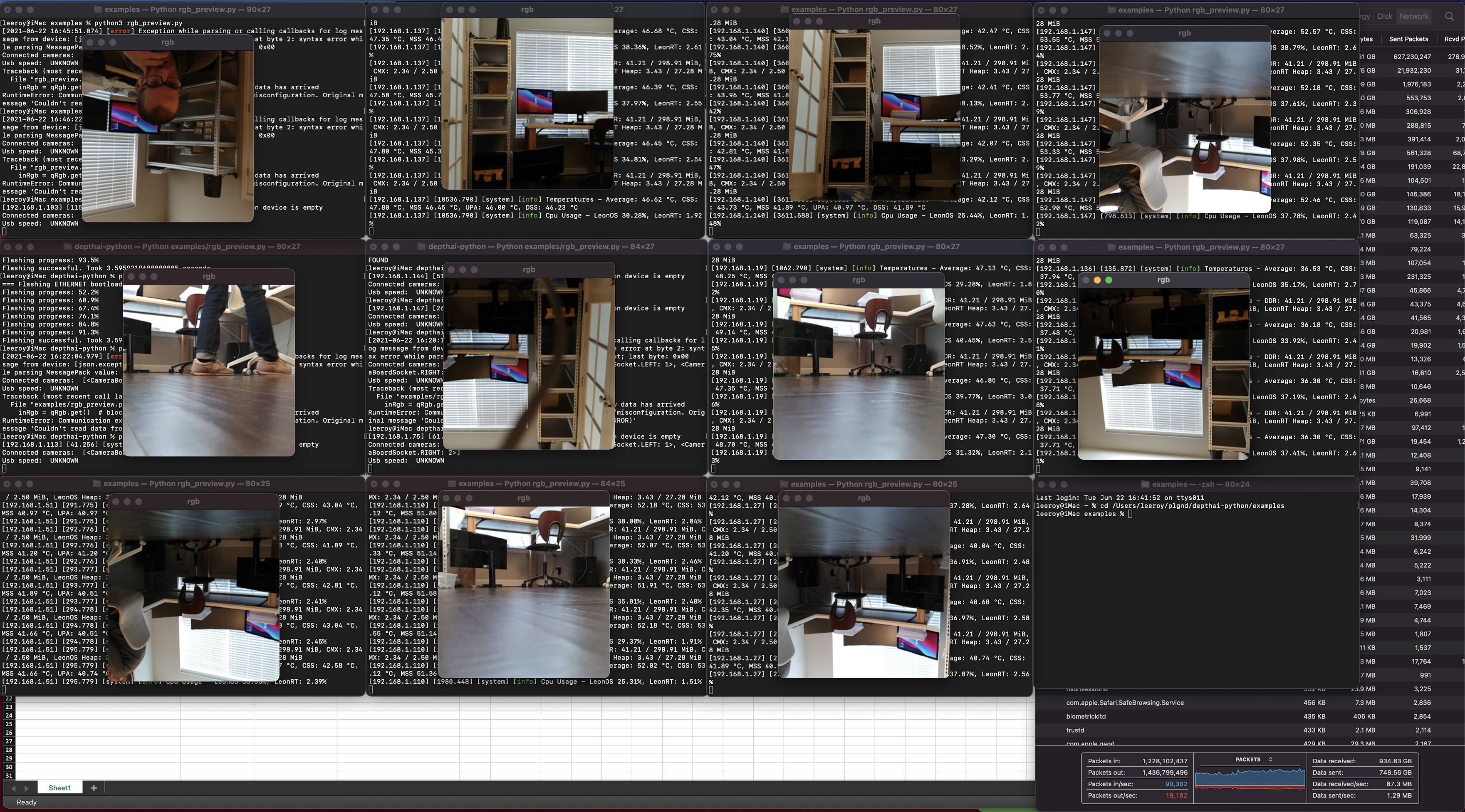

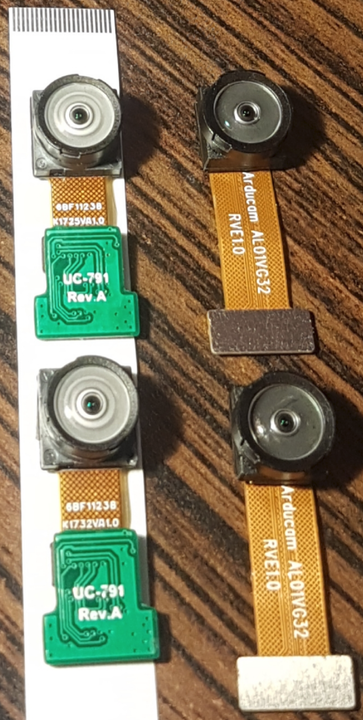

Unlike existing USB or PCIe Myriad X modules, our DepthAI module exposes 3x MIPI camera connections (1x 4-lane, 2x 2-lane), which allow the Myriad X to receive data directly from the camera modules, unburdening the host.

The direct MIPI connections to the Myriad X remove the video data path from the host entirely. This means the Myriad X can operate with no host at all. Or it can operate with a host, leaving the host CPU completely unburdened, with all the vision and AI work done on the DepthAI module/Myriad X.

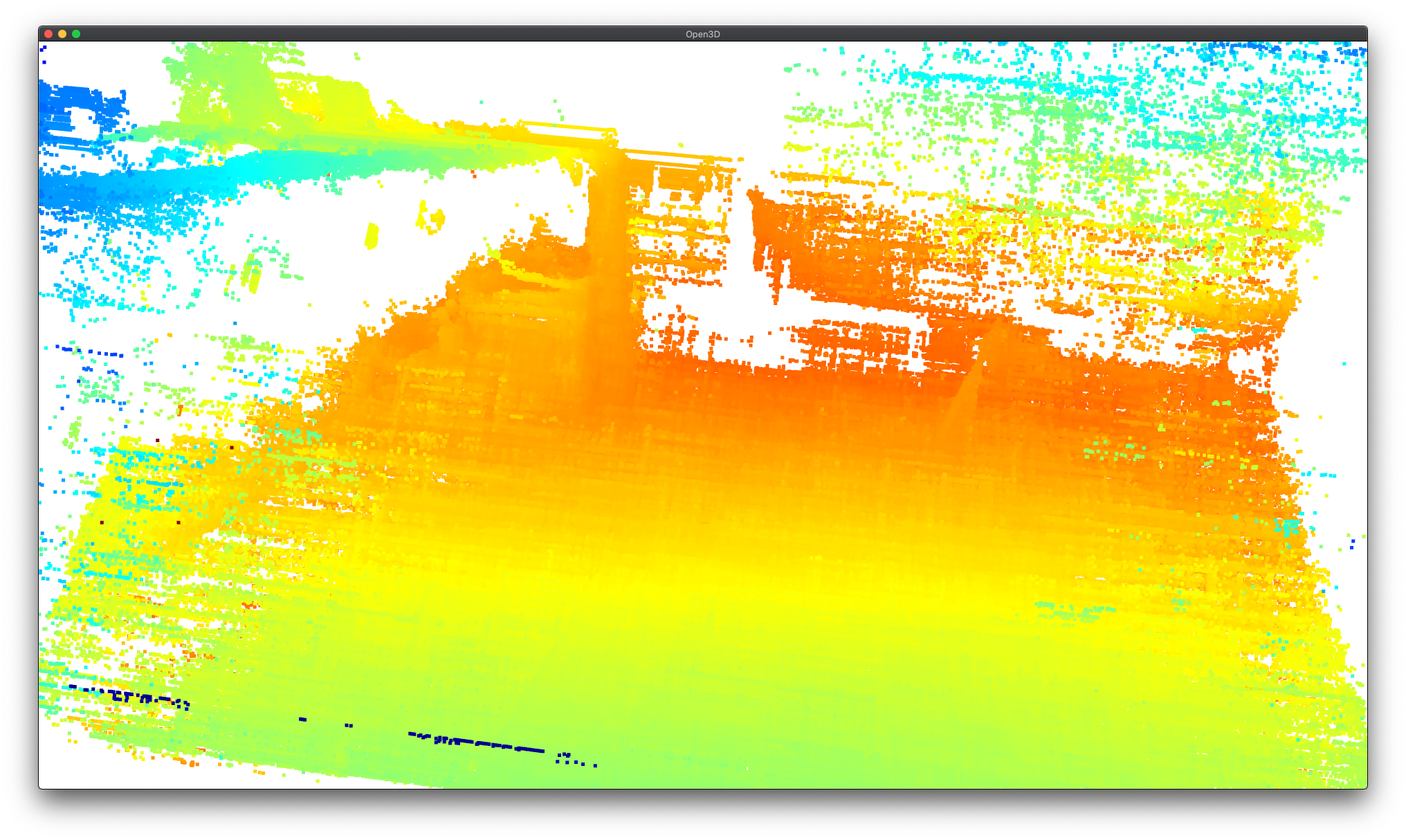

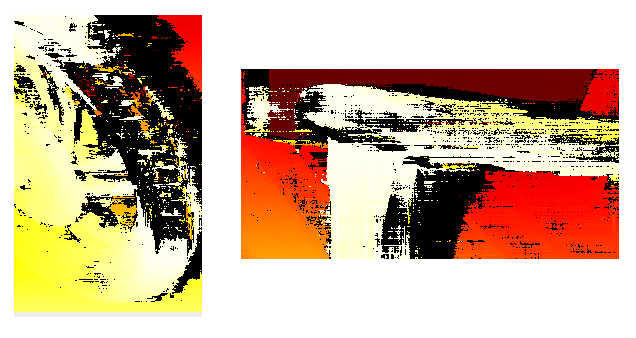

This results in huge efficiency increases (and power reduction) while also reducing latency, increasing overall frame-rate, and allowing hardware blocks which were previously unusable to be leveraged.

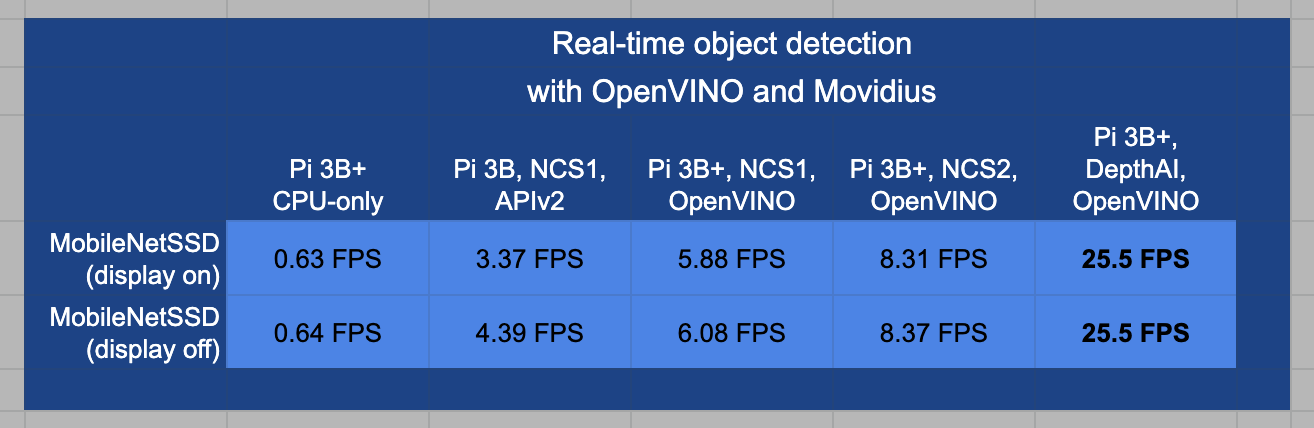

Take real-time object detection on the Myriad X interfaced with the Raspberry Pi 3B+ as an example:

Because of the data path efficiencies of DepthAI vs. using an NCS2, the frame rate increases from 8 FPS to 25 FPS.

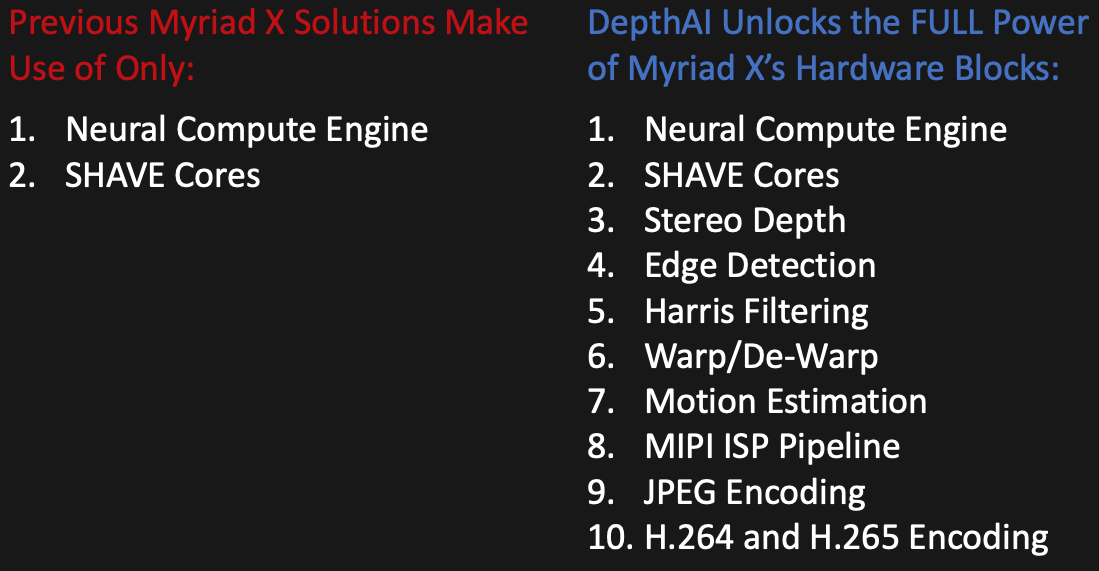
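
To put the 8 FPS and 25 FPS figures in perspective, here is a small back-of-the-envelope sketch in Python. It only restates the two frame rates quoted above as per-frame time and relative speedup; the function names are ours for illustration and are not part of any DepthAI API.

```python
def frame_period_ms(fps: float) -> float:
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


def speedup(old_fps: float, new_fps: float) -> float:
    """How many times faster the new frame rate is than the old one."""
    return new_fps / old_fps


# Frame rates quoted in the text (Raspberry Pi 3B+ host).
ncs2_fps = 8.0
depthai_fps = 25.0

print(f"NCS2:    {frame_period_ms(ncs2_fps):.0f} ms per frame")
print(f"DepthAI: {frame_period_ms(depthai_fps):.0f} ms per frame")
print(f"Speedup: {speedup(ncs2_fps, depthai_fps):.3f}x")
```

In other words, each frame takes roughly a third of the time it did before, which matters a lot when the goal is reacting to fast-moving objects.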

And most importantly, using this data path allows utilization of the following Myriad X hardware blocks, which are unusable with previous solutions:

This means that DepthAI is a full visual perception module, including 3D perception, and no longer just a neural processor. It enables real-time object localization in physical space, like below, but at 25 FPS instead of 3 FPS:

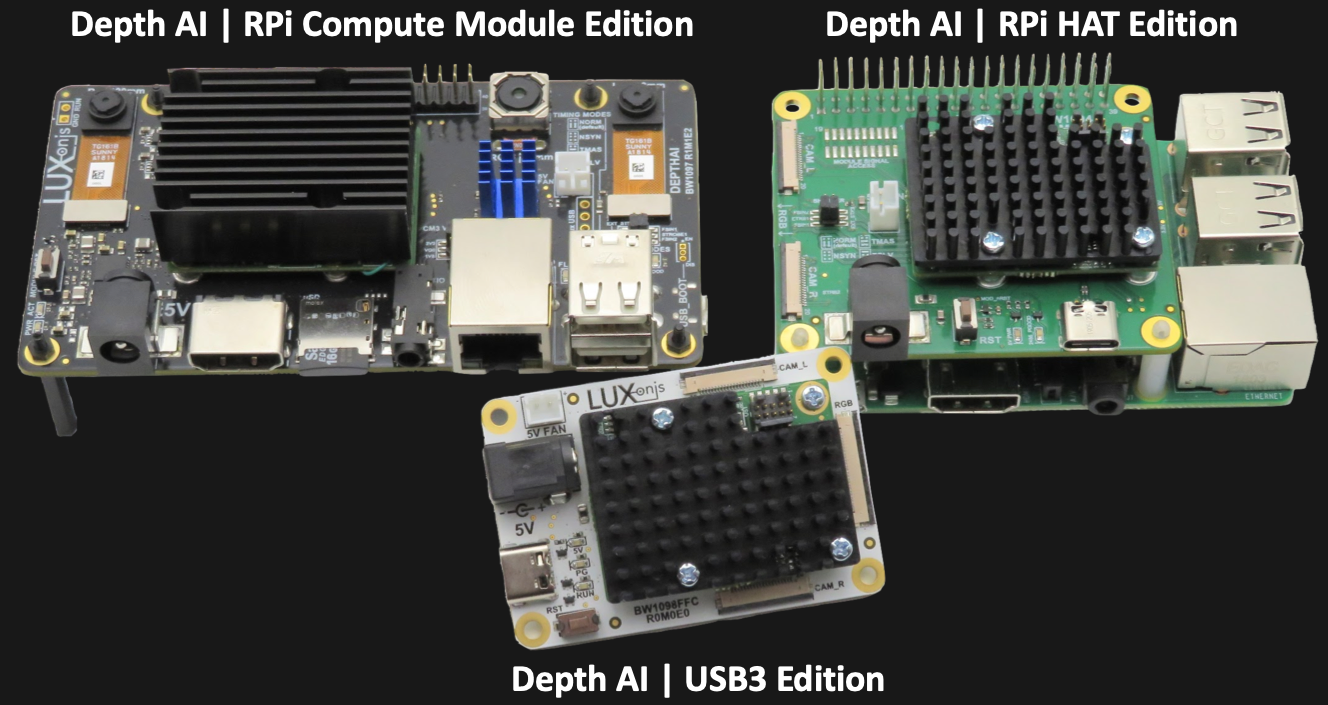

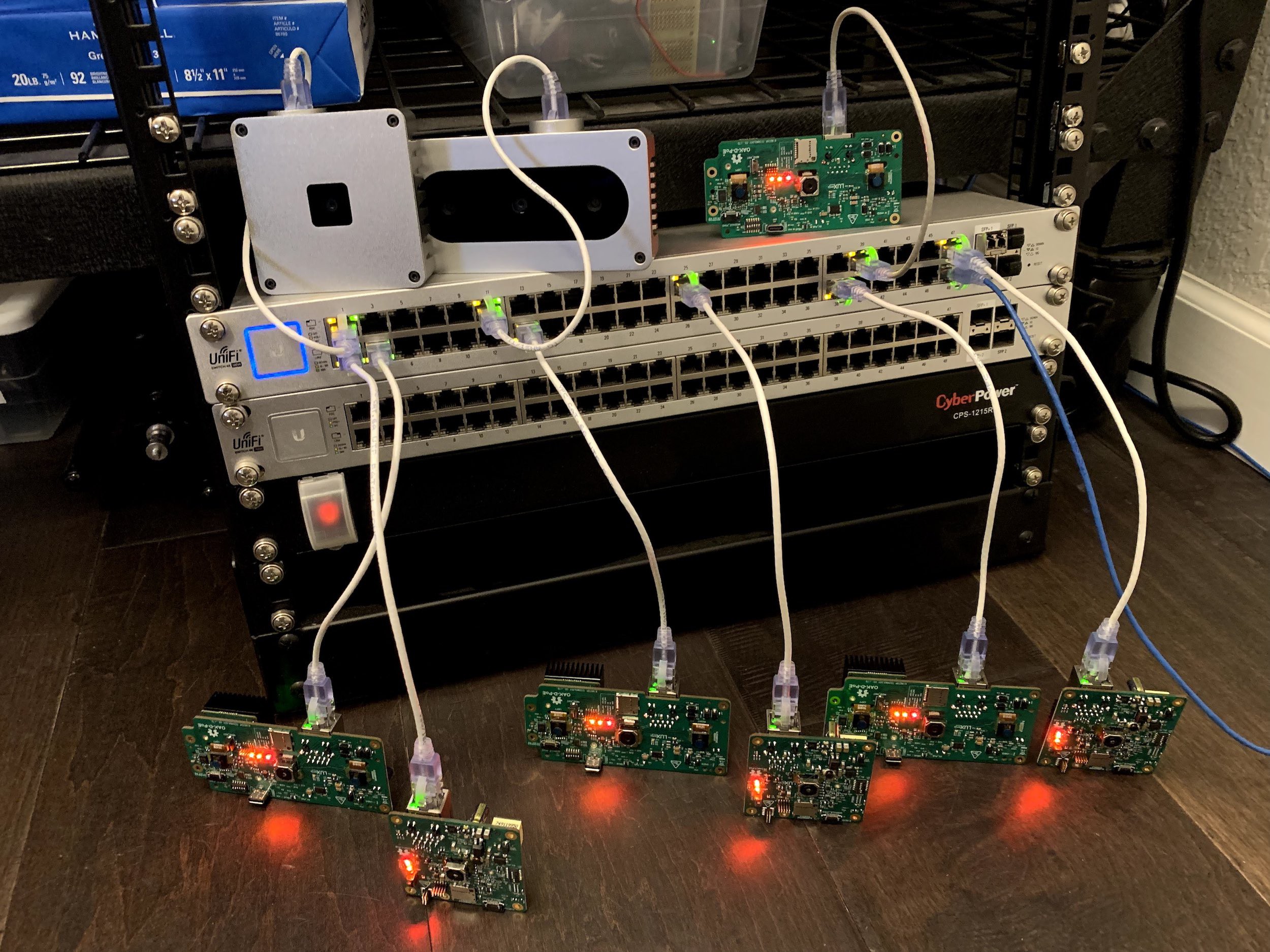
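
As a rough illustration of what locating an object in physical space involves, the sketch below back-projects the pixel at the centre of a detection into camera coordinates using a pinhole camera model and stereo depth. This is a generic textbook formulation, not DepthAI's actual implementation or API; the intrinsics, baseline, disparity, and all names here are hypothetical values chosen for the example.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Intrinsics:
    """Pinhole camera intrinsics in pixels (hypothetical example values)."""
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point x
    cy: float  # principal point y


def stereo_depth_m(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Depth from stereo disparity: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def localize(u: float, v: float, depth_m: float, k: Intrinsics) -> Tuple[float, float, float]:
    """Back-project pixel (u, v) at a known depth to (X, Y, Z) in metres."""
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)


# Assumed setup: 1280x720 image, 800 px focal length, 10 cm stereo baseline.
k = Intrinsics(fx=800.0, fy=800.0, cx=640.0, cy=360.0)

# Assumed detection: bounding-box centre at (840, 360), disparity of 40 px.
z = stereo_depth_m(disparity_px=40.0, focal_px=k.fx, baseline_m=0.10)
print(localize(840.0, 360.0, z, k))
```

The neural network supplies the pixel location of the object, the stereo pair supplies the depth, and combining the two yields a position in metres relative to the camera.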

And to allow you to use this power right away, with your favorite OS/platform, we made 3x editions of DepthAI. They serve both as reference designs for integrating the DepthAI module into your own custom hardware and as ready-to-use platforms that can be used as-is to solve your computer vision problems.

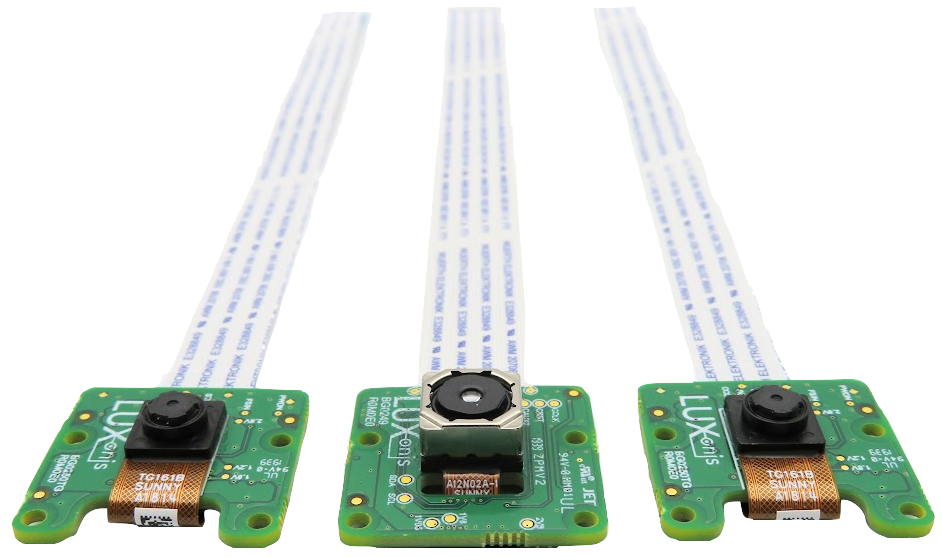

- Raspberry Pi Compute Module Edition - with 3x integrated cameras

- Raspberry Pi HAT Edition - with 3x modular cameras

- USB3 Edition - compatible with Yocto, Debian, Ubuntu, Mac OS X and Windows 10

All of the above reference designs will be released should our Crowd Supply campaign be successfully funded. So if you'd like to build on these designs in your own, or use this hardware directly, please support our Crowd Supply campaign:

https://www.crowdsupply.com/luxonis/depthai

Development Steps

The above is the result of a lot of background work to get familiar with the Myriad X, and architect and iterate...

Brandon

Awesome project! However, 3D is not enough. Use TIME - the fourth dimension. I describe why and what that means in this short paper: https://github.com/rand3289/PerceptionTime