-

Day 2: First Steps for the Human Follower

11/08/2016 at 16:52

Developing an autonomous human follower that relies neither on additional sensors nor on an environment map is a problem of relative localization. The user's position relative to Dobby must be tracked at all times so that Dobby can follow the user without a map of its surroundings. In addition, the user's smartphone needs to send this position data to Dobby over Bluetooth.
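
The data path described above can be sketched as follows, as a minimal illustration rather than Dobby's actual code. The phone packs the user's dead-reckoned state into a small binary payload, Dobby unpacks it and turns it into a distance and a bearing relative to its own pose. The payload layout (four little-endian 32-bit floats), the function names and the frame convention (x east, y north, headings clockwise from north) are all assumptions made for this example. The one fixed fact it relies on is that with the default BLE ATT_MTU of 23 bytes, a GATT notification carries at most 20 bytes of value, so a 16-byte update fits in one packet.

```python
import math
import struct

# Hypothetical wire format for one user-state update sent by the phone:
# four little-endian 32-bit floats (x_m, y_m, heading_deg, speed_mps).
# 16 bytes fits in a single GATT notification at the default ATT_MTU of 23
# (at most 20 bytes of attribute value).
USER_STATE_FORMAT = "<4f"
USER_STATE_SIZE = struct.calcsize(USER_STATE_FORMAT)  # 16 bytes


def encode_user_state(x_m, y_m, heading_deg, speed_mps):
    """Phone side: pack the user's dead-reckoned state into bytes."""
    return struct.pack(USER_STATE_FORMAT, x_m, y_m, heading_deg, speed_mps)


def decode_user_state(payload):
    """Dobby side: unpack bytes received from the GATT characteristic."""
    return struct.unpack(USER_STATE_FORMAT, payload)


def relative_position(robot_x, robot_y, robot_heading_deg, user_x, user_y):
    """Return (distance_m, bearing_deg) of the user as seen from Dobby.

    Assumed frame: x points east, y points north, headings are degrees
    clockwise from north. bearing_deg is 0 when the user is straight ahead,
    positive to Dobby's right and negative to its left.
    """
    dx = user_x - robot_x
    dy = user_y - robot_y
    distance = math.hypot(dx, dy)
    absolute_bearing = math.degrees(math.atan2(dx, dy))  # clockwise from north
    # Wrap the difference into [-180, 180) so Dobby turns the short way round.
    bearing = (absolute_bearing - robot_heading_deg + 180.0) % 360.0 - 180.0
    return distance, bearing
```

For example, with Dobby at the origin facing north and the user reported at (3, 4), `relative_position` gives a distance of 5 m and a bearing of about 36.9° to the right. Sending absolute coordinates and computing the relative pose on Dobby keeps the phone side simple, at the cost of both devices having to agree on a shared origin and on north.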

We have started work on Bluetooth communication between Dobby and the user's phone using the GATT protocol. So far we have experimented with the following libraries but haven't found a reliable one:

We have also developed an Android app for the user's smartphone that tracks the user's location, using the phone's magnetometer as a compass and dead reckoning to estimate the user's displacement and speed. Dead reckoning relies on the natural bounce of the human body while walking, which can be measured with the phone's accelerometer. Peaks in the accelerometer data roughly correspond to individual steps and therefore give the user's speed and displacement. This builds on our own previously published work on human gait analysis.
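
The peak-based idea above can be illustrated with a generic pedestrian dead-reckoning sketch. This is not the method from our gait-analysis paper: it simply treats each local maximum of the accelerometer magnitude above a threshold as one step, advances the user by a fixed step length along the compass heading recorded at that step, and derives an average speed from the step timing. The threshold (10.8 m/s²), the minimum step interval (0.3 s) and the step length (0.7 m) are illustrative assumptions, not tuned values, and the function names are hypothetical.

```python
import math


def detect_steps(acc_magnitude, sample_rate_hz, threshold=10.8, min_interval_s=0.3):
    """Return the sample indices of detected steps.

    acc_magnitude: accelerometer magnitude sqrt(ax^2 + ay^2 + az^2) in m/s^2.
    A sample counts as a step when it is a local maximum above `threshold`
    and at least `min_interval_s` after the previous step, which suppresses
    double peaks within one stride. Both defaults are illustrative.
    """
    min_gap = max(1, round(min_interval_s * sample_rate_hz))
    steps = []
    for i in range(1, len(acc_magnitude) - 1):
        a = acc_magnitude[i]
        is_peak = a >= acc_magnitude[i - 1] and a > acc_magnitude[i + 1]
        if is_peak and a > threshold and (not steps or i - steps[-1] >= min_gap):
            steps.append(i)
    return steps


def dead_reckon(step_indices, step_headings_deg, sample_rate_hz, step_length_m=0.7):
    """Integrate detected steps into a displacement and an average speed.

    Each step moves the user `step_length_m` (an assumed constant) along the
    compass heading recorded at that step, in degrees clockwise from north.
    Returns (east_m, north_m, speed_mps).
    """
    if len(step_headings_deg) != len(step_indices):
        raise ValueError("need exactly one heading per detected step")
    east = north = 0.0
    for heading in step_headings_deg:
        h = math.radians(heading)
        east += step_length_m * math.sin(h)
        north += step_length_m * math.cos(h)
    if len(step_indices) >= 2:
        elapsed_s = (step_indices[-1] - step_indices[0]) / sample_rate_hz
        speed = (len(step_indices) - 1) * step_length_m / elapsed_s
    else:
        speed = 0.0
    return east, north, speed
```

A fixed step length is the simplest choice; in practice it is usually calibrated per user or estimated from the size of each acceleration peak, since stride length changes with walking speed.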

-

Day 1: Indoor Localization of Dobby

11/08/2016 at 16:38

Since our idea of using Estimotes for Dobby's indoor localization did not work, we are now testing a compass-based approach. A magnetometer can serve as a simple compass that gives Dobby's heading, and combined with Dobby's speed of motion, this heading can be used to estimate Dobby's position in an indoor environment. We followed this tutorial on Instructables to add a simple digital compass to our Raspberry Pi.
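
The compass-plus-speed approach can be sketched as below, under several assumptions: the magnetometer is level and already calibrated, its +x axis points along Dobby's direction of travel and its +y axis to Dobby's left, and Dobby's speed is known from elsewhere (for example from the commanded motor speed). The function names are hypothetical, and the sketch does not reproduce the Instructables tutorial's code. A different sensor mounting needs the axes swapped or negated.

```python
import math


def compass_heading(mag_x, mag_y, declination_deg=0.0):
    """Heading in degrees clockwise from north, in [0, 360).

    Assumes a level, calibrated magnetometer mounted with +x pointing along
    Dobby's direction of travel and +y pointing to Dobby's left.
    declination_deg (east positive) converts magnetic north to true north;
    the default of 0 keeps magnetic north.
    """
    heading = math.degrees(math.atan2(mag_y, mag_x)) + declination_deg
    return heading % 360.0


def update_position(x_m, y_m, heading_deg, speed_mps, dt_s):
    """Advance Dobby's (east, north) position by one time step.

    Assumes Dobby moved at constant speed along `heading_deg` during `dt_s`.
    """
    h = math.radians(heading_deg)
    distance = speed_mps * dt_s
    return x_m + distance * math.sin(h), y_m + distance * math.cos(h)
```

Calling `update_position` on every new magnetometer reading integrates Dobby's track. Because each update adds a little heading and speed error, the estimate drifts over time, and indoor magnetic disturbances from steel and wiring can make the compass heading itself unreliable.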

-

Day 0: Hackathon Idea

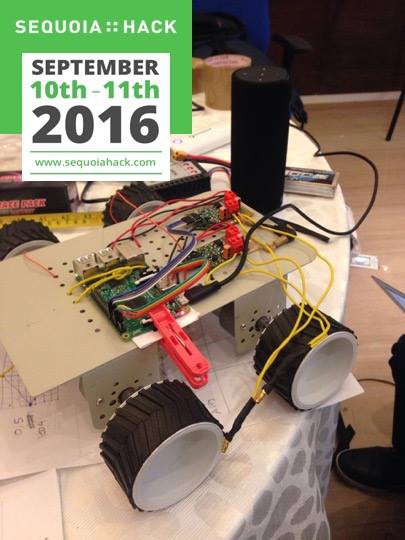

10/22/2016 at 17:08

The project idea started at Sequoia Hack 2016 in Bangalore, India, where we decided to use two of the devices provided at the hackathon, Amazon Tap and Estimotes, to build Dobby, an indoor-navigating, voice-controlled robot.


The initial plan was to use Estimotes for indoor localization and the Alexa API for voice control, mapping spoken commands to intents. Once the hardware was set up, we created an AWS Lambda function to map the commands to intents. The next challenge was to process these commands and send them to the on-board Raspberry Pi for execution. To get a working prototype within the 24 hours, we used Adafruit IO and IFTTT triggers, which let us easily convert voice commands into GPIO signals.
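
The intent-handling step can be illustrated with a minimal sketch of a Python AWS Lambda handler for an Alexa custom skill. It reads the intent name from the standard Alexa request JSON and replies with a plain-text speech response. The intent names, the command strings and `publish_command` are hypothetical: this log does not describe Dobby's interaction model or exactly how the Lambda handed commands to Adafruit IO and IFTTT, so that forwarding step is left as a stub that only records the command.

```python
# Hypothetical intent names mapped to hypothetical robot command strings.
INTENT_TO_COMMAND = {
    "MoveForwardIntent": "forward",
    "MoveBackwardIntent": "backward",
    "TurnLeftIntent": "left",
    "TurnRightIntent": "right",
    "StopIntent": "stop",
}

# Stand-in for forwarding a command towards the Raspberry Pi (done with
# Adafruit IO and IFTTT triggers at the hackathon). It only records the
# command so that this sketch runs without network access.
SENT_COMMANDS = []


def publish_command(command):
    SENT_COMMANDS.append(command)


def build_response(text, end_session=True):
    """Minimal Alexa Skills Kit response with plain-text speech."""
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": text},
            "shouldEndSession": end_session,
        },
    }


def lambda_handler(event, context):
    """AWS Lambda entry point for the skill."""
    request = event.get("request", {})
    if request.get("type") != "IntentRequest":
        # For example a LaunchRequest when the user first opens the skill.
        return build_response("Dobby is listening.", end_session=False)
    command = INTENT_TO_COMMAND.get(request["intent"]["name"])
    if command is None:
        return build_response("Sorry, Dobby does not know that command.")
    publish_command(command)
    return build_response(f"OK, sending {command}.")
```

Keeping the intent-to-command table separate from the handler means new voice commands only need a new entry, as long as the Raspberry Pi side knows how to turn each command string into GPIO signals.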

We then spent hours during the hackathon testing Estimotes for localization, but the data from the Estimote BLE beacons was very poor and highly uncertain. After 5 hours of trying to hack together a localization solution with them, we had to give up.

We concluded the Hackathon with a voice controlled robot.

Next, we created the GitHub project and added issues to it to track the work needed to turn Dobby into a complete indoor-assistant robot.

More logs as we work more on it!

P.S. Dobby also took many selfies during the hackathon.

Dobby - DIY Voice Controlled Personal Assistant

A voice-controlled personal assistant robot, built with Raspberry Pi and Amazon Alexa, to help you stay lazy

Dementor
