-

Motorised Program Counter

07/16/2024 at 02:07

Leaving this here mostly for myself...

(Whoops! Writing this up, I realize I fell for the classic blunder! But I won't share that bit here...)

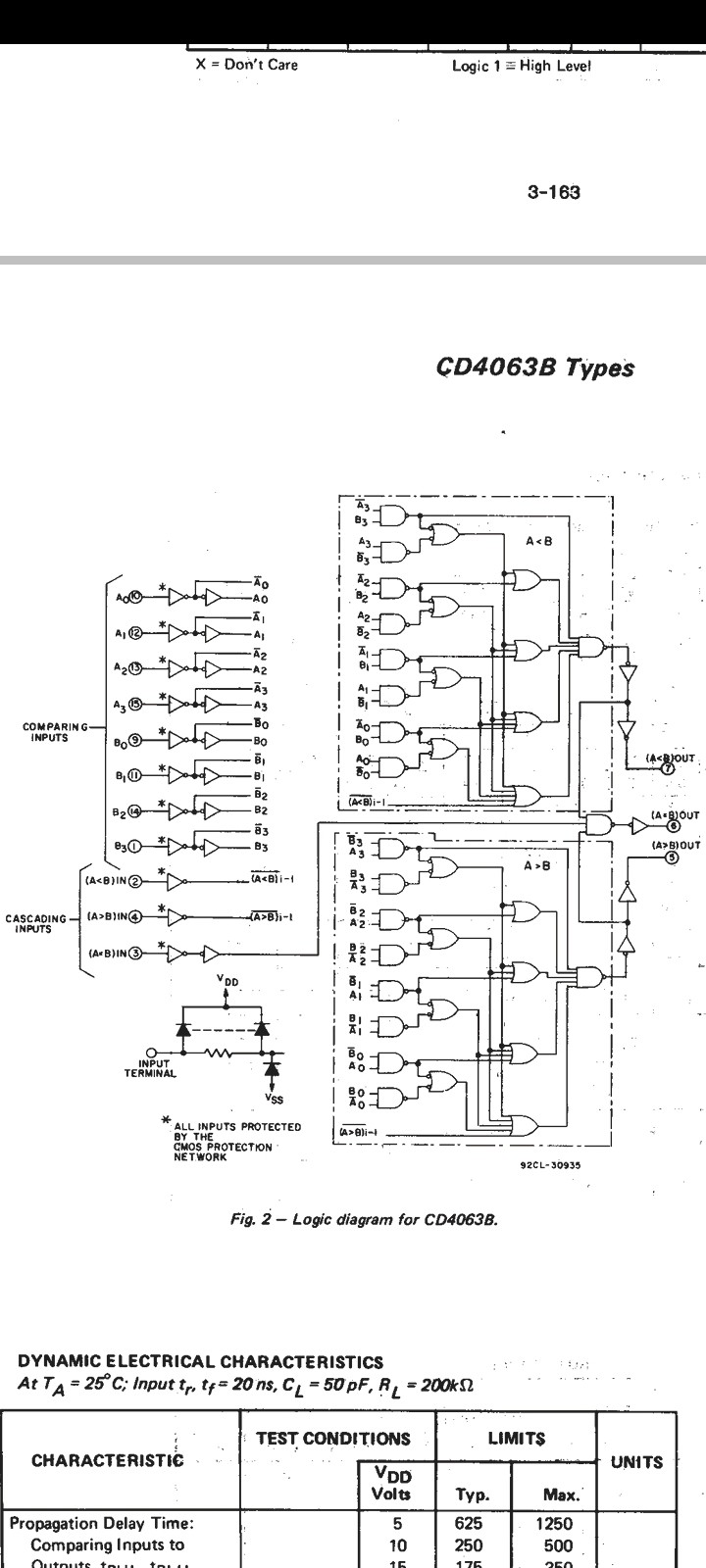

Instead, I'll just ask: couldn't this circuit be tremendously smaller if it produced < and = (or > and =) instead of < and >?


(I mistakenly thought I could reduce that, and the rest of my circuit, to use *only* =, by assuming that 90% of the time != means >. But then how would I account for the 10% where != means <? Duh. Still, producing <, =, or neither takes fewer gates than this, so I guess that's an improvement.)
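For reference, here's a minimal sketch (not the circuit pictured above) of a ripple magnitude comparator that produces only < and =, recovering > as NOT(< OR =). Gates are modelled as Python booleans on bit lists, MSB first; the function names and the 4-bit width are illustrative assumptions.

```python
def compare_lt_eq(a_bits, b_bits):
    """Compare two equal-width unsigned numbers given as lists of bools, MSB first.

    Returns (lt, eq). Per bit: one XNOR for equality, and one AND-NOT for
    "a is 0 where b is 1", gated by all higher bits having been equal.
    """
    lt = False
    eq = True
    for a, b in zip(a_bits, b_bits):
        # a < b is decided at the first (most significant) differing bit
        lt = lt or (eq and (not a) and b)
        # still equal only if every bit checked so far matches (XNOR)
        eq = eq and (a == b)
    return lt, eq


def to_bits(value, width):
    """Unsigned integer -> list of bools, MSB first."""
    return [bool((value >> i) & 1) for i in reversed(range(width))]


def compare(a, b, width=4):
    lt, eq = compare_lt_eq(to_bits(a, width), to_bits(b, width))
    gt = not (lt or eq)  # the third output costs a single NOR gate
    return lt, eq, gt


# Exhaustive check over every pair of 4-bit values
for a in range(16):
    for b in range(16):
        assert compare(a, b) == (a < b, a == b, a > b)
```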

-

20 YEARS

02/04/2023 at 13:04

I was one lucky bugger in my youth in that I had a buddy who worked at HP and brought me surplus gizmos [I thought] he acquired for dollars...

As I recall, he brought me two flatbed plotters, two 8.5in floppy drives, an ISA HPIB card with full manual (and BASIC instructions!), and a whole slew more that I was not at all worthy of.

I loved that plotter... watching two interlocked doughnuts form from seemingly-random trapezoids was mesmerizing. I've saved (and modified) and replotted that file countless times over the past 25+ years: on the much more studio-apartment-friendly HP7475A [paper-fed] plotter I eventually acquired, on the wall-hanging plotter made of Legos, on the Silhouette vinyl-cutter I got from Goodwill, and on #The Artist--Printze, wherein I wrote code to treat an inkjet like a pen-plotter...

But nothing beat those old flatbeds, which I STUPIDLY took apart.

They were exceptionally fast and precise. The mechanism, if I understand correctly, is what we now call "Core-XY," but it was far superior to anything I've seen since. (I later figured it out from memory, and managed to extend it to three dimensions with Legos and pulleys... long before I'd ever heard the term Core-XY.) I liked that mechanism so much, after finally piecing together in my mind how it worked literally two decades later, that I tried to design a "business card" plotter around it: #2.5-3D thing...
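For anyone else piecing it together: in a Core-XY arrangement two stationary motors drive the carriage through two long belts, and the carriage's X and Y moves come from the sum and difference of the two motors' belt travel. Below is a minimal sketch of the usual kinematics; which sign goes with which motor depends on the belt routing, so the signs (and the names `xy_to_motors`/`motors_to_xy`) are assumptions, not a description of the HP mechanism.

```python
def xy_to_motors(dx, dy):
    """Carriage move (dx, dy) -> belt travel for motors A and B.

    Assumes the common Core-XY convention A = x + y, B = x - y;
    a different belt routing can flip the signs.
    """
    return dx + dy, dx - dy


def motors_to_xy(da, db):
    """Inverse: belt travel for motors A and B -> carriage move."""
    return (da + db) / 2, (da - db) / 2


print(xy_to_motors(10, 0))  # pure X: both motors turn the same way
print(xy_to_motors(0, 10))  # pure Y: motors turn in opposite directions
print(xy_to_motors(5, 5))   # 45-degree diagonal: only motor A turns
```

The last case is a neat property of the mechanism: a 45-degree diagonal needs only one motor.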

Somehow STUPIDLY I took both those flatbeds apart. STUPIDLY. And STUPIDLY only managed to keep a few random pieces...

Including the stepper motors, which are amazingly strong and fast, as I recall, and the weird oil-filled dampers, which look like little more than "spare no expense" "eye-candy." Yet I remember how smooth and fast those stepper motors were, and maybe those seemingly physically-useless oil-filled flywheels on bearings actually made that possible.


Anyhow... WAY side-tracked. Man I was STUPID back then. Just downright stupid.

Watching it switch pens was beautiful in and of itself. Such precision and delicacy and yet such speed. What the HE** was I thinking when I scrapped them?!

....

In addition to the bipolar [4-pin] steppers, I also somehow managed to keep a stepper driver-board...

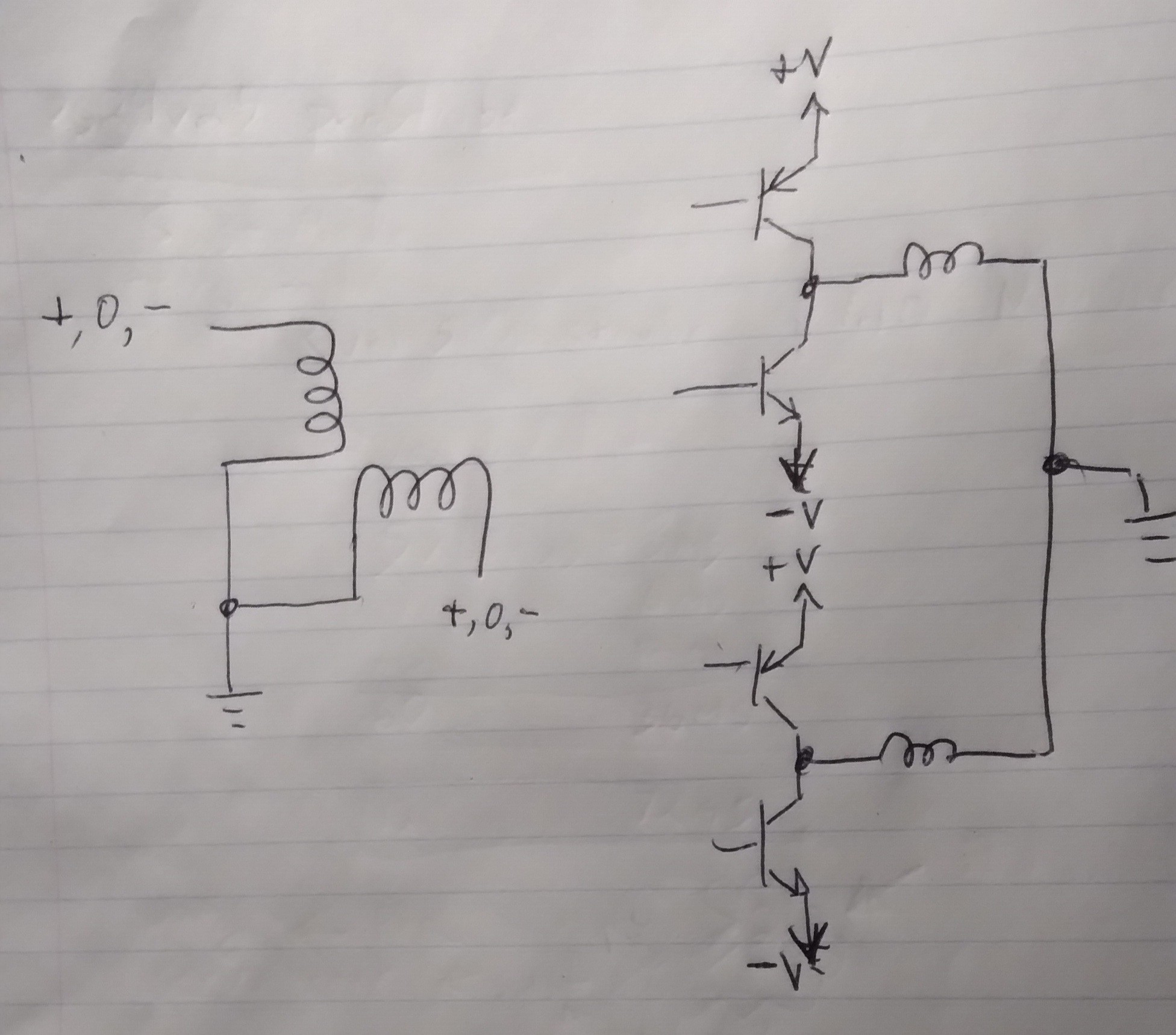

And when I was finally smart enough to understand what a bipolar stepper was, it occurred to me that.... waitaminit. How could 2 bipolar steppers be driven by only 8 power transistors?!

An H-bridge is 4 transistors... and you'd need two per motor, one for each winding! That'd be 16 power transistors, not 8!
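For the record, here's the counting that leads to 16, as a small sketch. A bipolar winding has to be driven in both directions, which is what an H-bridge's four transistors provide (one diagonal pair on at a time). The full-step sequence and the transistor names are the textbook arrangement, assumed here rather than read off that HP board.

```python
# Full-step ("two phases on") sequence for one bipolar stepper:
# (polarity of winding A, polarity of winding B); one flips per step.
FULL_STEP = [(+1, +1), (-1, +1), (-1, -1), (+1, -1)]


def h_bridge(polarity):
    """Which of an H-bridge's four transistors conduct for a winding polarity.

    Hypothetical naming: HL/HR = high-side left/right, LL/LR = low-side
    left/right. +1 drives current left-to-right through the winding,
    -1 right-to-left; the other diagonal pair stays off.
    """
    if polarity > 0:
        return {"HL": True, "LR": True, "HR": False, "LL": False}
    return {"HL": False, "LR": False, "HR": True, "LL": True}


for step, (pol_a, pol_b) in enumerate(FULL_STEP):
    on_a = sorted(name for name, on in h_bridge(pol_a).items() if on)
    on_b = sorted(name for name, on in h_bridge(pol_b).items() if on)
    print(f"step {step}: winding A {on_a}, winding B {on_b}")

# Naive count: one full H-bridge per winding
MOTORS = 2
WINDINGS_PER_MOTOR = 2
TRANSISTORS_PER_BRIDGE = 4
print("power transistors:", MOTORS * WINDINGS_PER_MOTOR * TRANSISTORS_PER_BRIDGE)
```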

...

I mean, it's not like I *really* sat and thought about it, but it's been percolating for probably 20 years, until just now.

And now I feel a bit stupid, again. But not nearly as stupid as I feel thinking about younger-me scrapping those amazing machines.

Anyhow, a big build-up for something probably obvious...


-

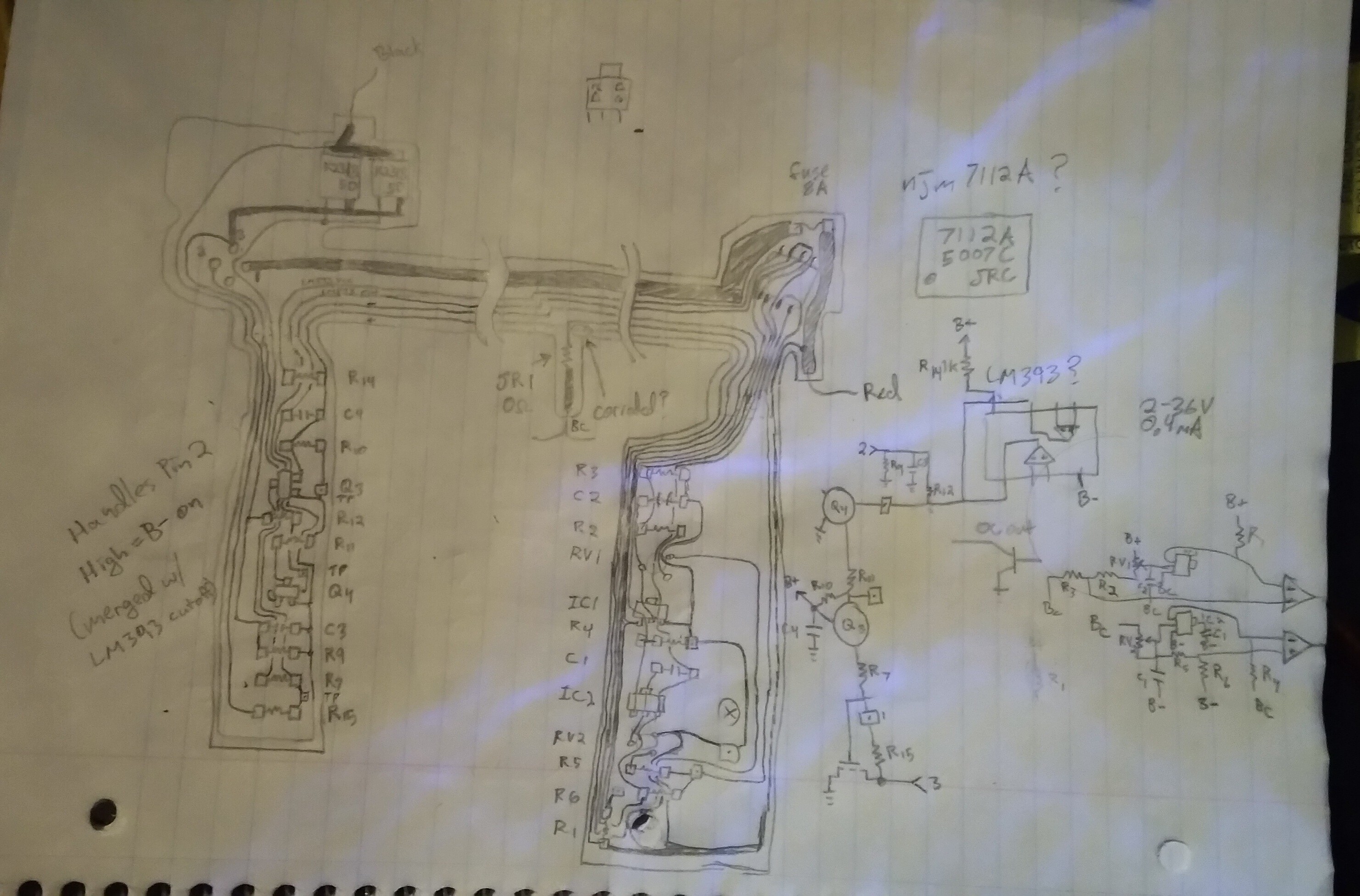

Early Li-Ion Protection Circuitry

09/30/2022 at 05:35

I'm trying to fix the battery pack for an old 486 laptop. If I understand correctly, this model was the first on the market with Li-Ion (as well as TFT!).

I replaced the original 18650s a couple of years back, then let it sit for a year or so in storage... And, sure enough, one of the two pairs of year-old cells was completely kaput.

So this time I looked at the circuitry in the pack to see what could've happened... and... well, I think I have a vague-ish idea.


It would seem this thing's protection circuitry is very limited compared to today's.

An actual fuse takes care of over-current. But otherwise it seems all that circuitry does only two things: cut off discharging when one of the parallel-pairs in the series is too low, and cut off output when it's not in the laptop (don't want it shorting on a paperclip!).

Unless I'm missing something *that's all* it does.

No charge balancing, despite its having a wire on each cell-pack.

No thermal cutoffs.

In fact, nothing to prevent it from charging (since the cut-off MOSFETs have body-diodes).

I dunno what all.

Funny thing is, the connector has four pins. Only three are used.

Basically the entirety of this circuitry could be in the laptop itself, excluding the paperclip-fire-preventing transistors. Their gate could be on one of those pins... along with another pin for measuring in the middle of the series.

And then...

Well... I think this would've prevented what I guess must've ruined one of the parallel-cell-pairs:

The over discharge circuit is *always* powered. From the entire series.

Meaning:

A) I probably forgot to charge it before storing, so the cells were already low.

So, after a year of the LM393 comparator sipping 0.4mA, the pack was just *empty*.

B) I used cells that were not brand new, nor had been used the same number or depth of cycles.

So, as the series cells discharged, one pair reached zero before the other, and then got charged backwards (reverse-polarity) by the other.

...

Which most cell chemistries do not like. Heh.
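For scale, here's a back-of-the-envelope check of what a year of that 0.4mA draw adds up to. It ignores the cells' own self-discharge and anything else on the board:

$$
Q = I\,t = 0.4\,\text{mA} \times (365 \times 24)\,\text{h} = 0.4\,\text{mA} \times 8760\,\text{h} \approx 3504\,\text{mAh} \approx 3.5\,\text{Ah}
$$

That's on the order of a typical 18650's entire rated capacity, so a pack that went into storage already low could easily be drained by the comparator alone.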

But the reason this intrigues me is that, allegedly, Li-Ions are basically unrechargeable once allowed to drop too low (something like 1V). Yet I've been running experiments on many old packs and finding, repeatedly, that they definitely can be recharged, even if they've been sitting for years and measure less than a diode-drop.

So, it makes me wonder about the thought-process at the early-days of the Li-Ion...

NiCd cells, for instance, are known to be fine even if stored with their terminals shorted for years. In fact, I've heard that if you're planning to store them, then that's the best way.

I wonder if that's basically what the designers of my 486 laptop were thinking... (though why would they bother with a low-voltage cutoff circuit? Maybe that's not what it is?)

BUT that logic only works if they're Not In Series. If you have one cell at 0.8V in series with another at 0.78V, and put a resistor across the pair to drain them, then eventually you'll have one cell at 0.01V and the other at -0.01V... and that reversed polarity is what does a cell in. As I understand it.
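A crude way to see the 0.8V/0.78V example play out: model each cell as a capacitor whose voltage is proportional to its remaining charge. Real Li-Ion voltage curves are nothing like this linear, so treat it as a toy; the capacitance, resistance, and step values are arbitrary assumptions. Both cells carry the same series current, so they lose the same charge: their 0.02V difference never changes while their sum decays toward zero.

```python
def drain_series(v1, v2, capacitance=1.0, resistance=1.0, dt=0.01, steps=2000):
    """Discharge two series 'cells' through one resistor (toy linear model).

    Each cell is treated as V = Q / C. The same current I = (V1 + V2) / R
    flows through both, so each loses I * dt of charge per step (forward Euler).
    """
    q1, q2 = v1 * capacitance, v2 * capacitance
    for _ in range(steps):
        current = (q1 + q2) / capacitance / resistance
        q1 -= current * dt
        q2 -= current * dt
    return q1 / capacitance, q2 / capacitance


v_a, v_b = drain_series(0.80, 0.78)
print(f"{v_a:+.3f} V, {v_b:+.3f} V")
```

With the defaults the run spans about 40 time constants, so the pair ends up essentially at +0.01V and -0.01V.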

...

I dunno; this laptop is pretty decent quality, and wasn't cheap when it was new, so I can't quite picture them cutting corners.

...

Anyhow, again, I did manage to recover one of the pairs, even though it was at something like 0.15V. The other, though... Nothing doing. For a moment I got it up to 0.8V, but then, despite my pumping current in, its voltage began to drop.

I'm no expert, so I figure that's time to call it.

..

Whoa, if I'm reading my notes right, the surviving cells are holding nearly the same charge as a regular pack of the same make of cells with its "proper" protection circuitry that never goes lower than 2V. (?!)

...

In other packs...

Well... I've looked at one from an iBook G3, and another from a Pentium 150 (I think)... both had been sitting for years and were definitely way below 1V per cell.

MOST of the cells definitely have useable capacity left in them... Though, definitely diminished from their "new" capacity.

Also a tremendously larger output resistance.

But, still plausibly useful, somehow.

I keep thinking: these were basically useless, as I recall, giving about 5 minutes' runtime on the laptops.

I ran experiments on single cells, loading them with 1A, and they cut-out at about 3-5min, with the "proper" under-voltage protection.

But, when I parallelled those same two cells, I got 45min, at 1A.

... TEN-FOLD...

I imagine several factors at play, here...

Mainly, increased output resistance combined with the low-voltage cut-off. But also a few others, a bit more vague in my mind... Something about "surface charge," maybe? E.G. after the low-voltage cut-off removes the load, the cells measure around 3V, but after about five minutes they measure more like 3.8V(!) [allegedly that's a "full charge!"].
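Here's a toy model of just those first two factors, with every number an invented assumption: open-circuit voltage (OCV) falling linearly from 4.1V to 3.2V over the charge, a fixed 0.8-ohm internal resistance per cell, and a 3.0V cut-off. The cut-off trips on the *loaded* voltage, OCV minus I·R. Paralleling two cells halves each one's current, which halves the sag and lets each cell discharge much deeper before tripping.

```python
def runtime_hours(n_parallel, load_amps=1.0, capacity_ah=1.0, r_internal=0.8,
                  ocv_full=4.1, ocv_empty=3.2, cutoff=3.0):
    """Runtime until the loaded terminal voltage reaches the cut-off.

    Toy assumptions: identical cells sharing current equally, OCV falling
    linearly with state of charge, constant internal resistance, and no
    recovery ("surface charge") effects.
    """
    cell_amps = load_amps / n_parallel
    sag = cell_amps * r_internal
    # The cut-off trips once OCV falls to cutoff + sag; find that state of charge
    soc_at_cutoff = (cutoff + sag - ocv_empty) / (ocv_full - ocv_empty)
    usable_fraction = 1.0 - min(max(soc_at_cutoff, 0.0), 1.0)
    return n_parallel * capacity_ah * usable_fraction / load_amps


single = runtime_hours(1)
paired = runtime_hours(2)
print(f"1 cell: {single * 60:.0f} min, 2 cells: {paired * 60:.0f} min, "
      f"ratio {paired / single:.1f}x")
```

With these made-up numbers, doubling the cells more than quadruples the runtime. The model says nothing about the surface-charge effect, which it doesn't include.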

...

I haven't really figured out how to make use of these cells. To get the most out of them, I think, a really sophisticated load-balancing scheme would be ideal...

But, I should point out that Li-Ion cells can be dangerous, and I really have no idea whether it's a good idea to keep using them like this, when they're definitely way past their prime but still holding a useful-enough charge for repurposing.

...

Though one thought keeps coming to mind: E.G. if you've got an old phone with a couple old batteries that barely even run long-enough to boot without being plugged-in... *paralleling* them might be the difference between not being able to make even one call and its working fine for possibly several long-winded calls.

But, again, consider possible risks, here... Doing-so with two decent batteries (with low output resistance) could send a LOT of current from one to the other, even if their voltages are near identical.
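The reason is just Ohm's law across the two batteries' internal resistances. With illustrative numbers (assumed, not measured): two cells 0.1V apart, each with 0.05 ohm of internal resistance, and the connecting wires ignored:

$$
I = \frac{V_1 - V_2}{R_1 + R_2} = \frac{4.0\,\text{V} - 3.9\,\text{V}}{0.05\,\Omega + 0.05\,\Omega} = 1\,\text{A}
$$

The same 0.1V between two tired cells of about 1 ohm each would push only 50mA, which is partly why paralleling old, high-resistance cells is comparatively gentle.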

-

Homebrew Computers & Transistors, and surely more...

04/16/2022 at 08:05

Mid-May, just came across this draft from about a month ago... Still pondering the idea off-n-on.

...

I'm almost certainly in no position to be doing this right now, but the past two months have finally calmed a bit, and I desperately need to feel like I'm accomplishing something, which I don't get outside my realm... which basically includes everything I probably should be, or have been forced to be, doing.

...

So, I've been revisiting old ideas, one of which is building *something* from old CPUs I have lying around but don't have the experience with to just throw into a project.

Before the crazy-shizzle started (crazier than normal, which has been crazy itself), I'd spent months (more?) on my TI-86, learning about Z80s and more... #Vintage Z80 palmtop compy hackery (TI-86). (Really, I'd probably kill two birds with one stone if I'd just pick up where I left off on that... but my brain doesn't switch tasks like these easily; there's a huge process involved, and this is probably that process.) Anyhow, one of the results of that project thus far is a renewed interest in, and much better understanding of, the make-use-of-processors realm.

So, now, I have a pretty well-mulled-over idea of a sort of "homebrew computer" build-process that builds it in pieces, each step along the way doing /something/ blinky and interactive. And a sort-of general path that should work with a wide variety of CPUs I've stumbled on in my collection: Z80, 6800, 6502(?), 8088... even up to the 486.

E.G. The first step is an easily hand-wired board that merely shows LEDs for the address bus (maybe just 8 bits) and simply wires the data bus to a NOP (jumper-disableable)... Oh, AND the circuitry necessary for single-stepping. This board will have a header for an IDC-connector cable (E.G. an old IDE cable?) carrying the necessary pins for 16-bit addressing and 8 bits of data. Though, I'm half-tempted to make it even simpler by just using a chip-clip to break out to the next boards.

The second board will connect there, and add switches (and LEDs) for the data bus. Unlike other switch-driven systems like the Altair, this won't (alone/initially) be used to enter a program /into memory/ to be run later. Instead the idea is to have a small program which will be entered in "realtime." The processor requests an address, the user toggles the switches for the opcode, then hits "Step," repeat.

Now, with two tiny boards we have 8 bits of the address bus visible on LEDs, 8 bits for data on switches and LEDs, and a step button.

From there, I think the next board will contain a jumper-enableable diode-ROM (and a couple/few-bit address demultiplexer) for a program maybe as simple as "jmp address 0". If I get this right, the diode-ROM containing these instructions (this instruction?) could be mapped to various locations in the address-space via jumpers, and the default NOP from the previous board would be accessed otherwise. Now we have a binary counter (the program-counter) visible on LEDs and resetting at, say, a selectable count of 4+3=7, 8+3=11, 16+3=19...128+3=131. Basically +3 because all the CPUs I've encountered so far have a 1-byte NOP and a 3-byte absolute-jump. And then, powers-of-two since it's easiest to decode with one jumper.
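Here's a rough instruction-level simulation of that counting, assuming a Z80 (which starts at address 0, has a 1-byte NOP of 0x00, and a 3-byte `JP nn` encoded 0xC3, low byte, high byte). The assumed decoding is that the diode ROM is selected whenever the jumpered address bit n is high, with the low two address bits picking its byte; that scheme and the helper names are hypothetical. Bus-level details such as the Z80's refresh cycles, which also put addresses on the bus, are ignored.

```python
# Hypothetical model of the NOP board plus a diode ROM, assuming a Z80.
NOP = 0x00
ROM = [0xC3, 0x00, 0x00]  # "JP 0000h": opcode, low byte, high byte


def read(addr, n):
    """Data bus: diode ROM when address bit n is high, hardwired NOP otherwise.

    The low two address bits select the ROM byte (the "couple-bit demux").
    """
    if (addr >> n) & 1 and (addr & 0b11) < len(ROM):
        return ROM[addr & 0b11]
    return NOP


def trace(n, fetches):
    """Addresses fetched (opcodes and operands), instruction by instruction."""
    pc, seen = 0, []
    while len(seen) < fetches:
        opcode = read(pc, n)
        seen.append(pc)
        if opcode == 0xC3:
            lo, hi = read(pc + 1, n), read(pc + 2, n)
            seen += [pc + 1, pc + 2]
            pc = (hi << 8) | lo
        else:
            pc = (pc + 1) & 0xFFFF
    return seen[:fetches]


def period(n):
    """Number of fetches before the address LEDs come back around to 0."""
    seen = trace(n, fetches=2 * ((1 << n) + 3))
    return seen.index(0, 1)


for n in range(2, 8):
    print(f"ROM jumpered to bit {n}: counter wraps every {period(n)} fetches")
```

A 6502 doesn't start at 0 (it first fetches its reset vector from 0xFFFC/0xFFFD), so it isn't modelled here.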

Anyhow, the idea is... I don't want to throw these efforts away, and they're too big to hang on the fridge... So, if Imma build a homebrew computer, maybe I can make each "step" along the way /useful/ even in the "end-product". Each step will introduce more features... When RAM's added, the switches/LEDs on the data bus from the second board will be used to load the memory. The RAM board then will probably add switches and 8 more LEDs for the address bus...

One board early-on may add line-drivers/buffers, then break out to a new header with the same pinout...

Another board down the line might add memory-mapping for more than 64K. So, those same boards from before could be moved /after/ this addition... same pinout. Maybe a second IDC cable could be added for decoded higher addresses, which could then be connected in place of the jumpers on the earlier boards.

Anyhow, I kinda dig the idea, and I /think/ the "bus" can be generalized-enough to work with all these processors... Of course, the NOP and Diode-ROM (and CPU card!) are processor-specific, but the memory and LEDs/switches, line-drivers, and even memory remapping should be doably pretty universal. And I dig the IDC cable over, say, a backplane... Want to add a new card? Just crimp another connector. Want to move your 32K RAM to a mapped address? Unplug from main cable, plug into mapper cable.

Yeah, some of it will be a bit redundant (using only 64k address space from an 8088? 486?! Never switching out of "Real Mode?!")...

But, herein lies another thought-process: ALL these CPUs seem to share a sort-of base level of commonality... The 650x was developed with the idea that the 6800 instruction-set was just too complicated; its designers' idea was to /Reduce/ the 6800's Complex Instruction-Set down to only a few necessary instructions... Hmmm.... Sounds RISCy to me. And I feel the same about the 8088 and the Z80.

So, what if I did the same with these /other/ processors? Or at least /treated/ them as though they'd done the same... Suddenly those 486, 386, and 286 chips floating around, the 186s in weird embedded systems long-forgotten, the Z80s sitting in old Hayes-compatible modems that'll never see a landline again, the 6502s in scrapped/dead Commodore floppy drives or old CD players, and So Much More (what about 8051s?), now become darn-near identical in functionality... Which, yeah, would've been stupid back when the whole point was performance, but no one's turning to these things for performance anymore, right?

NOW they're relegated to groovy-vintage nostalgia which is near unobtanium, OR ewaste.

Hey, these are great learning tools. And, hey, in a world where "Right To Repair" is being fought in courts, maybe we could benefit from a few folks' seeing the benefits of once-standardized parts...

We don't /need/ top-of-the-line performance anymore, from specialized parts with one-off manufacturers we're now subservient to.

I bet there's even some plausibility ARM devices could be "Reduced" a bit like this.

So, now, say we've got a Reduced Instruction/Register Set (RIRS?) that maps almost universally across all these devices... Then, aside from Op-Codes and memory-layouts, RIRS code written for a z80 could be simply converted to run on a 6800 or 8088.

Maybe that's silly if you're talking about trying to load a 3C905 DOS driver on a Kaypro, but it's not silly at all if you're talking about trying to get folk to connect whatever weird-old processor is sitting in their bin to Hyperterminal as the beginnings of a homebrew computer. Maybe load BASIC on a DVD-ROM drive's undocumented "Mediatek" processor...? Or even on an old phone with a broken screen?

I dunno... Maybe I'm the only one with an old modem that realized its processor is the same as the full-fledged computer it was connected to, and wonders what-all it could be made to do.

...U8B, the Unified 8-bit BIOS, could plausibly make all 8-bit CPUs (and 8086s?) capable of at least running a terminal, BASIC, maybe an OS...? At least a simple game? Well, how would it be accessed? Addresses 000 through 0ff? What about systems with interrupts there? Addresses at the 32KB boundary? (Don't forget some systems expect ROM at 0xffff, others at 0x0000)...

NOP at boot: with the data bus hardwired to the 6502's NOP (0xEA), EAEA would be the 6502's reset-vector jump-address... hmmm...

Friggin Paper Tape, DUH.

....

Oh, then I got back to homebrew transistors... It keeps coming up. Thoughts on what happens to the electrons that are deflected by the grid in a triode... If I understand, it basically amounts to: the cathode is shooting off electrons no matter what, right? So the cathode current remains pretty much constant, and the anode current is what varies... And then the "amplification" is purely between the grid and the anode, but has little if anything to do with the actual current used in doing-so...

-

Elderly batteries, Lappies, and other devices

07/31/2020 at 22:31

I've two old laptops I've been trying to use with some old software necessarily connecting to old hardware via old ports... thus we're talking a 486 and a p150.

The 486 is actually a great machine; quality. I used it last year for #OMNI 4 - a Kaypro 2x Logic Analyzer

BUT, after a year in storage, even though it had worked great after the twenty years in storage before that, it started acting *really* weird: claiming it was running off the battery, even though the battery was reporting 0% charge and the machine was plugged in to AC... and not even attempting to charge the battery... and, if I recall [it's been *many* days fiddling with it, since], after just a few minutes' use it would briefly warn about a low battery and an impending drop into standby, but would actually shut off completely.

The p150, as I recall, was doing something similar, which is why I reverted to the 486... since I'd used it more recently.

Long story short: It appears that:

* upon losing its BIOS-settings due to a dead [actually, removed, so as to avoid leakage] CMOS battery, the default option for the power-button is standby, rather'n power-off. Thus, apparently the machine patiently waited a year to be awoken, sitting at the "now safe to shut off" screen, until it had sipped both the main battery and the standby battery dry...

* the system apparently does not recognize that the main battery is connected if it detects 0.14V, where /at worst/ the 7.2V battery shouldn't go below something like 6V... maybe it can't even measure that low... [interesting; two diode-drops?] Thus, it won't even attempt to charge a battery it doesn't think is there.

* I still can't explain the "Power source: battery" when plugged into AC; but

* I *think* the reason it'd run for only a few minutes at a time is the *standby* battery, which is three NiCd[?] heatshrunk coin-cells, deeply hidden inside. AC would charge them while the unit was powered off, then the machine would run off three friggin' coin-cells for a few minutes before they ran too low.

.....

THUS.

It's not that I /need/ the thing to run off the battery, but that it's near /useless/ if it can only run a few minutes at a time... and...

In many cases such an issue might land an otherwise fully-functional machine in eWaste, on the presumption of something internal like a faulty charging circuit...

Well, I looked 'er over inside and out [which was not easy, mind you!], and besides a long-broken screen hinge and a couple of stupid plastic tabs I broke off trying to open it, etc., this thing is near-pristine inside, going-on 25 years old!

So, then, I broke apart the battery pack and found 6 18650 li-ion cells [li-ion existed 25 years ago?!]... three parallelled, in series with another three parallelled. And, each parallel-combination measuring 0.07V. Yikes.

Now, if you believe the hype... a li-ion lower than something like 2V is "useless" and unusable, some even say "dangerous," and must be immediately disposed of and replaced... Something about dendrites shorting plates, thus never being able to hold a charge. Something else about big-enough dendrite-shorts causing huge currents and flames?

While those theories may have merit in some extreme cases, I'm sure there are varying-degrees of "dangerous" and "useless." And I beg to differ, regarding their uselessness; I think the scenario I've described above is a *perfect* use-case.

[Similarly applicable, I imagine, and have unwittingly encountered, with many cell-phones, tablets, netbooks, etc.--portable devices of all sorts!--which literally won't turn on without a battery, regardless of a decent charger/power-supply]

...

The question then becomes, how to get my laptop [or other device] running... and /now/?

After a bit of digging, I found that there are many rebels and dare-devils out there [thank goodness] who've had and reported various successes [and failures] trying various methods to revive Li-ions which've been depleted *far* below 2V, some even measuring /negative/.

YMMV, but in a situation like mine, where external power is more than acceptable but the device won't work without an at-least /slightly/-functional battery [whose form-factor may be hard to find, expensive, or may just take too long to ship], I'd suggest at least /trying/ to get that battery charged to a level the system will accept...

FIRST: Sure, be cautious... li-ions are explosive [so is rubbing alcohol, like *many* other things we'd be stupid to swear off on account of "safety." Use some friggin' common sense and take the level of precaution you're comfortable with, while also considering others]

I started with a 5V usb charger, threw in a series 50-ohm resistor, and plugged that into one of the parallel-cell packs... that's 100mA, maximum. And, sure enough, that battery-voltage rose from 0.07V to 2V in minutes. [though, also depleted, once removed from the "charger," in minutes, back down to below a volt]

I tried a couple other experiments, ultimately finding myself comfortable with around 3A /max/ [for three cells, mind-you, 1A apiece, +/-], still using just a 5V source and a resistor.
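The arithmetic behind those numbers, as a small sketch. The 5V source, the 50-ohm resistor, the 0.07V starting point, and the ~3A target come from above; the ~1.64-ohm value is derived here from Ohm's law, since the resistor used for the 3A run isn't stated. It also shows how the current tapers as the cell voltage rises.

```python
SOURCE_V = 5.0  # USB charger output


def charge_current(cell_v, resistor_ohms, source_v=SOURCE_V):
    """Current (amps) through a series resistor from a fixed source into a cell."""
    return max(source_v - cell_v, 0.0) / resistor_ohms


def resistor_watts(cell_v, resistor_ohms, source_v=SOURCE_V):
    """Heat dissipated in the series resistor (watts)."""
    return charge_current(cell_v, resistor_ohms, source_v) ** 2 * resistor_ohms


# Resistor that would give ~3A into a pack sitting at 0.07V (derived, hypothetical)
r_3a = (SOURCE_V - 0.07) / 3.0

for cell_v in (0.07, 2.0, 3.7, 4.2):
    print(f"{cell_v:4.2f} V: 50 ohm -> {charge_current(cell_v, 50) * 1000:5.1f} mA, "
          f"{r_3a:.2f} ohm -> {charge_current(cell_v, r_3a):4.2f} A "
          f"({resistor_watts(cell_v, r_3a):4.1f} W in the resistor)")
```

Note that the current at 4.2V is still well above zero. Unlike a proper constant-current/constant-voltage charger, a resistor fed from 5V has no built-in stopping point below 5V, so the cell voltage has to be watched.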

Eventually I measured around 3.7V, only slowly self-discharging [or maybe just settling?] on each parallel-pack, and tried it in the laptop... and lo and behold, lappy's charger kicked in!

For a few minutes. Then it went back to not charging.

So, maybe one pack self-discharged a bit while charging the other, or... who knows, but I figured I'd give it another go... a little more "balanced..." [and, I remembered, around this point, that 3.6-3.7V is the /nominal/ voltage, while *not* charging... so, I'da prb had more luck if I let the 5V+resistor method get 'em up to at least 4V /while charging/]

So, in an attempt to balance the two, and whatnot, I used the charging circuit removed from a USB power-pack... and... let it top them off until its lights were solid.

Here, I was unsure of the "safety" and also *plausibility*... from what I gather [they're not marked] my cells are rated 3.6V. My USB power-pack's cells are clearly marked 3.7V. So... I mean... would it sit forever *trying* to reach 4.2V, but limited by the battery-chemistry to 4.1? Or would it overheat the battery, trying to force more charge into it than it could take?

I did a bit more research [while letting it run and measuring reasonable current, and below 4V]

The key takeaway, I gather, is that basically some are labelled 3.6V and some 3.7 depending on /purpose/; charge to 4.2V, get 11% more Amp-hours, at the cost of half as many recharge cycles [lifespan]... good for a quadcopter, but maybe not a laptop.

Lifespan's not my concern, here... overall system functionality is... [Though, I'm impressed with a brand who chose the longer-lifespan option in their design! Per the 7.2V (2 × 3.6V) marking on the pack. And, I suppose those sorts of design decisions explain why this 25y/o laptop is still with us /at all/].

So I let this setup charge to completion [monitoring the current/voltage /and temperature/, regularly]... four and a half hours later it actually showed a full charge. Then a few minutes later started charging again [which it doesn't do with its own cells]. Though, only for about 30 seconds every few minutes.

So, we might very well be talking about internal dendrites shorting plates with some resistance, or some other sort of self discharge. Or maybe the 3.6V vs 3.7V theory is bunk, and these guys never could /hold/ a 3.7V-cell's full charge, and they're just settling to their normal voltage... I dunno...

I do know the lappy booted, said it was on AC, said the pack was at 100%, and ran for *hours* today.

The battery, interestingly, reportedly dropped from 100% to 8% in the blink of an eye, and early-on, but the system seems to have no qualms "charging" that, now, nor running off AC.

....

So, all in all, a decent experiment with results that at the least could be useful for other such things; say you've a phone that just won't power-up nor charge due to a severely drained [or dendrite-discharged] battery, and all you need to do is download your photos or look up a phone number... try a 50ohm resistor, yo! See where it gets yah!

....

Longer-term, this gives me ideas for how to repurpose phones, tablets, etc. or keep these two lappies functional in situations where an external power-source is available, and maybe just disposing of the dying cells altogether. Something about a diode and resistor and a coin cell? super-cap? Some drop-in method may be achievable with no risk of leaks or flames, or even depletion-related non-power-up, over the next 25+ years.

-

Starter battery, wee!

07/14/2020 at 00:36

Forgot to turn off my headlights, and was listening to the radio... guess what happened.

So, I figured it was time to run an experiment that's been brewing in the ol' noggin' ever since I saw, here, someone charging their car starter battery from an 18V cordless drill battery, and, not long after, @Ted Yapo trying [nearly successfully] to get enough charge outta a coin-cell...

Well, I've got a *bunch* of USB power-packs... three in series gives 15V.

Nice thing about these over, say, a regular ol' battery [even a cordless drill battery] is they have boost-converters built-in... so as they discharge, they still put out 5V. It's a bit like a built-in joule-thief. Also, built-in current-limiting, which could be a mixed blessing depending on how it's implemented... oh yeah, and thermal-protection!

So here's a weird culmination of coincidences:

The way I wired 'em up was via bulky USB power cables I built a while back... their housing hot-glued to the connector.

Then I inserted my ammeter between it and the cigarette lighter outlet...

2.4A --Perfect.

The batts are rated for 2.1A, but I looked up the chips once, and they're set to 3A.

I thought maybe it was actually limiting, 'cause dropping from 15V to 12V at only 2.5A would mean a lotta wire resistance...

So I figured the limiters were doing their job and plugged it in straight. Nope. One of the batts quit outputting. Grabbed another, tried again with the meter: 2.4A... again tried direct: again a kaput batt.

OK, so, apparently, as in the last few completely unrelated projects, my ammeter's resistance had a big effect; this time, quite a handy one.

I estimated ~1ohm between the car batt and my usb batts, to give that 2.4A... so, yahknow, whatever tiny resistance is in my meter was *just* right.
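The estimate works out roughly like this, treating the three packs as an ideal 15V source and the car battery as a steady 12V. Both are simplifications: the packs' boost converters and current-limiting, and the flat battery's actual voltage, will shift the numbers.

```python
def hookup_resistance(source_v, battery_v, amps):
    """Total series resistance implied by the voltage difference and current."""
    return (source_v - battery_v) / amps


def current_for(source_v, battery_v, ohms):
    """Current the same hookup would carry for a different source voltage."""
    return (source_v - battery_v) / ohms


r = hookup_resistance(15.0, 12.0, 2.4)  # wiring + meter + connectors
loss_w = 2.4 ** 2 * r                   # I^2 * R heat in the hookup
print(f"R = {r:.2f} ohm, hookup loss = {loss_w:.1f} W")
# The same hookup with four packs in series (20V):
print(f"{current_for(20.0, 12.0, r):.1f} A")
```

That comes to about 1.25 ohms and 7.2W, close to the ~8W hookup loss estimated below, and the four-pack case lands around 6.4A, in the neighborhood of the ~7A mentioned at the end.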

There's a coinkidink!

These things got *hot*... enough to melt the glue, but still not so hot as to shut down... though I watched pretty closely.

I estimate about 8W lost in the hookup, and 24W, then, into the car batt... 24Wh, actually; it happened to take almost exactly an hour to drain the batts 'till one went kaput.

Weird thing, someone walked-up needing a jump of his own... and just when my charging completed he got his started. I tried mine, and... yeah! I guess it worked! Nice to've had the backup of his services-offered, anyhow.

Other interesting observations: the one holding the least charge [as indicated by its bargraph] seemed to drain fastest [makes some sense, lower voltage, higher amperage to boost]. Though, oddly, the one with the most charge, seemingly draining the slowest, [and, oddly, with the most airflow for cooling] went kaput a couple times. Can't quite wrap my head around it, but I guess it was prb for the better, as I bet the others probably didn't mind a break. ...and let that glue cool/harden.

Interesting metaphors of man-hours, differing-abilities [and stubbornness], John Henry, and managers insisting on coffee-breaks...

----

Random-ish thought also revealing the coinkidinkness of it all... what if I'd tried four batteries at 20V? Well, if my setup was otherwise unchanged, we'da been at ~7A... and... it just wouldn'ta worked. So, I guess some amount of luck or coincidence put all those variables, pretty much randomly thrown together, not only at a functional point, but also near the maximum.

-

DC grounding, real-world measurements

07/04/2020 at 03:02

Scenario:

12VDC [car battery]

a computer [Raspberry Pi Zero, + LCD + hub/keyboard, etc.] with internal DC-converters... ~1A, 12V

a USB-attached external hard drive, ~1A, 12V

each with identical five foot heavy-gauge power cables powered from the same point.

devices not yet connected via USB

---

Measurements:

~20-30mV difference at negative input to computer vs. negative input to powered-off drive [at the devices]

Thus, at ~1A, ~20m ohm [0.02 ohms] on the negative power wires...

...

Both devices powered, the mV difference between their negative inputs varies between ~+15mV and -30mV depending on what each device is doing.

No biggy, right?

Connect them via USB!

NO.

Here's a resettable fuse on the drive's negative input:

<todo, grab picture from old phone with good macro mode>

Description: got so hot it looks nothing like a fuse anymore, more like a piece of burnt charcoal, complete with burn marks all around on PCB.

[

Now, I'm not certain that came from this setup, or the fact that my stupid DC Barrel Jack on compy was all-round metal, allowing one to touch tip-to-shell on insertion.

BUT: continue with me regarding the previously-described setup/measurements.

]

~20mV difference at negative terminals

~0.02ohms on power cables' negative wire

~1A, 12V to each device

Devices NOT connected to each other via USB cable [yet]

Connect ammeter between USB-A port's shell at compy, and USB-B port's shell at drive.

~70mA.

...

"OK, so a couple LEDs, big deal! USB ports handle 500mA!"

...

How does Ammeter measure current?

Puts a small resistor in series with probes and measures voltage across the resistor.

[a resistor whose value has to be greater than the probes', which are roughly the same gauge and together about the same length as the power cords... so they're prb ~0.02ohms; then, per the 10-to-1 rule-of-thumb, the series resistor is probably ~0.2 ohms, which would make sense given that the minimum voltage-setting is 200mV and the amp-setting is 10A "max" but displays 15A if pushed to do-so]

So, it's not measuring the current that would go through the shield/VBus-, but some *fraction* of it... plausibly 1/10th!

700mA might surge through that when the drive starts writing/seeking!

AND: that may go *either* way, the port may handle 700mA, but what about -700mA?

----

That's just the tip of the iceberg.

----

But, continuing that experiment, imagine the shield braid is roughly the same gauge as the power cords [when not wrapped around the USB signals] and the cable about 5ft... ~0.02 ohms, just like the power cords' negative wires... so, now, if the drive is idle/off and compy's running full-tilt at 1.5A, 1A will go through compy's power cord's negative wire [0.02 ohms], and 0.5A will go through the USB shield into the drive, and out its power cord [0.02 + 0.02 = 0.04 ohms]. Not so great. And, anyhow, 1/3rd of compy's current won't return down compy's power cord when the drive is off/idle! And vice-versa!
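That 2/3 vs 1/3 split is just a current divider: two return paths in parallel, each taking current in proportion to its conductance. A minimal sketch, plugging in the guessed resistances above (0.02 ohms for compy's cord negative; 0.02 ohms of shield plus 0.02 ohms of the drive's cord negative for the detour):

```python
def split_return_current(i_total, r_own, r_other_path):
    """Current divider: how a device's return current splits between its own
    negative wire and a parallel path (USB shield + the other device's cord)."""
    g_own = 1 / r_own            # conductance of the device's own return wire
    g_other = 1 / r_other_path   # conductance of the detour
    i_own = i_total * g_own / (g_own + g_other)
    return i_own, i_total - i_own

# Numbers from the text: compy at 1.5A, 0.02 ohm cord negative,
# shield (~0.02 ohm) in series with the drive's cord negative (~0.02 ohm).
i_cord, i_shield = split_return_current(1.5, 0.02, 0.02 + 0.02)
print(f"down compy's own cord:  {i_cord:.2f} A")
print(f"through the USB shield: {i_shield:.2f} A")
```

It prints 1.00A down compy's own cord and 0.50A through the shield.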

---

Think that's not a problem? Still OK with a self-powered USB device sinking and sourcing 500mA whenever?

OK, how 'bout this:

Compy has a DC-DC converter which was designed with a far-undersized input capacitor... [I figured this out later. Bear with me]...

So, imagine a DC-DC buck converter...

How does it work?

It has an SPDT switch and an inductor.

In one position the inductor current "charges" from the input-voltage [12V], and flows into your device/load.

In the other position the inductor current "discharges" into the load.

It switches *really fast* so that inductor barely has a chance to "discharge" before getting "recharged."

Thus, in both switch positions the current through the inductor and into the device/load remains roughly constant, and so then does the voltage.

...

"OK, what's the problem?"

When the inductor is charging, the full device current [say 1A] flows from the source and back to it. So, roughly half the time [5/12ths of it, for 12V in and 5V out] the battery supplies 1A at 12V [for a 1A 5V load].

The other half of the time there is no current to/from the battery; the /inductor/ acts as the battery.
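An idealized model of what the battery sees, as a sketch: a lossless buck converter in continuous conduction, inductor ripple ignored, where the on-time fraction (duty cycle) is Vout/Vin. The 12V/5V/1A numbers are from the text; the 12 samples per period is an arbitrary choice that happens to divide evenly.

```python
def buck_input_current(v_in, v_out, i_out, samples_per_period=12):
    """Idealized (lossless, continuous-conduction, ripple-free) buck converter.
    Returns the duty cycle and one switching period of input current, sampled."""
    duty = v_out / v_in                  # ideal buck: Vout = D * Vin
    on_samples = round(duty * samples_per_period)
    # Switch "on": the load current is drawn straight from the source.
    # Switch "off": the inductor feeds the load; the source supplies nothing.
    waveform = [i_out] * on_samples + [0.0] * (samples_per_period - on_samples)
    return duty, waveform

duty, wave = buck_input_current(12.0, 5.0, 1.0)
avg_in = sum(wave) / len(wave)
print(f"duty cycle: {duty:.0%}")
print("input current over one period:", wave)
print(f"average input: {avg_in:.3f} A, input power {12.0 * avg_in:.1f} W")
```

For 12V in and 5V out the duty cycle comes out to about 42% (close enough to "half the time"), and the average input of ~0.417A at 12V is the same 5W the load gets. From the battery's side, though, the instantaneous draw is a 0A/1A square wave.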

....

"Sounds brilliant! No wonder they caught on so fast! So, what's wrong with that?"

The battery sees a load switching between 0A and 1A at, say, 100kHz... that's a LOT of ugly, but we'll focus on the present scenario:

Remember how compy is using 1.5A... roughly half the time, 1.5A goes down compy's power cord's positive wire. 1A of that returns through its power cord's negative wire. Ugly enough.

0.5A goes through the USB cable into the drive and down its power-cord's negative wire. UGLY.

But atop that, it's 0.5A /switched/ at 100kHz! Half the time it's going through the USB cable and half the time it isn't, because the inductor "battery" is inside compy!

----

Now, if you don't think 0.5A at 100kHz is going to cause problems with data, then I've got even UGLIER stuff for your consideration.

The hard drive ALSO has a switching supply.

So, say both devices are running at full-tilt, 1.5A each...

And for simplicity, let's say their power supplies are synchronized, 180 degrees out of phase. So, when compy's drawing its 1.5A from the car battery, the hard drive draws its 1.5A from its own internal dedicated inductor "battery". The real battery sends 1.5A from its positive terminal, up compy's power cord, into compy, then gets 2/3 of it returned via compy's power cord, and the other 1/3rd goes from compy to the drive and back to the battery through the drive's power cord. [Negative wire. Also note: no current is flowing on the drive's positive wire, because the drive is running off the inductor].

OK, now the two switching supplies switch, and the whole scenario is reversed. INCLUDING: 1/3rd of the /drive's/ current is now flowing from the drive down the USB cable, and into compy.

Whereas at first we were concerned [well, I was] about 0.5A going through the USB cable, and then about 0.5A and 0A alternating down it, /now/ that concern has /doubled/ to 0.5A and /negative/ 0.5A alternating down that cable.
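A toy model of that shield current, as a sketch: both converters at 100kHz, each drawing 1.5A from the battery for half its period, with 1/3 of each one's return current detouring through the shield (positive meaning compy to drive). The 50% duty cycle, the 1/3 fraction, and the function name are simplifying assumptions carried over from the scenario above, with the resistor network assumed linear.

```python
def shield_current(t, period=10e-6, i_each=1.5, shield_fraction=1/3, phase_b=0.5):
    """Toy square-wave model of the current in the USB shield at time t (seconds).

    Each converter draws i_each from the battery during the first half of its
    own switching period, and shield_fraction of that return current detours
    through the shield. Positive = compy -> drive, negative = drive -> compy.
    phase_b is how far the drive's converter lags compy's, in periods.
    """
    compy_on = (t / period) % 1.0 < 0.5
    drive_on = (t / period - phase_b) % 1.0 < 0.5
    current = 0.0
    if compy_on:
        current += i_each * shield_fraction   # compy's return, via the drive's cord
    if drive_on:
        current -= i_each * shield_fraction   # drive's return, via compy's cord
    return current

period = 10e-6   # 100kHz, as in the text
for k in range(4):
    t = (k + 0.5) * period / 4
    print(f"t = {t * 1e6:4.2f} us  shield: {shield_current(t, period):+.2f} A")
```

With the supplies 180 degrees apart it steps +0.50, +0.50, -0.50, -0.50A: a 1A peak-to-peak square wave. Setting `phase_b=0.0` (switchers in phase) makes the two detours cancel in this idealized model; anything in between gives some mix, which hints at why unsynchronized supplies are messier still.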

BUT IT GETS WORSE!

What happens when the supplies *aren't* synchronized? Or run at different frequencies, entirely? And different loading? [All of which is pretty much guaranteed to be the case].

...

OK, still think that measly 20-30mV difference in "ground" is no big deal? Or how about how that 20mV somehow turned into 70mA? Or that 500mA? Or a 1A peak-to-peak square-wave alongside your data?

...............

UPDATE:

After much deliberation and research, I've decided to isolate each device's power supply.... the normal way: use 120VAC [thus, an inverter] and switching wall-warts. I've checked; their outputs are indeed isolated.

It seems shielding, especially of USB cables/connectors/devices, is really quite an art. None of the resources I found consider a case like mine, where host and device both run off a single supply, where power *doesn't* go through the USB cable, because they draw too much power.

There was a bit of speculation from various engineers in various forums, this situation being somewhat similar to industrial control panels. Also automotive [duh] applications like CAN-bus. In such situations grounding/shielding is a serious consideration, apparently for exactly these reasons.

The basic conclusion seems to be that USB was never intended for such situations. Some of the typical practices in such situations *can't* be applied to USB. E.g. shielding might be connected at the sender, but *not* at the receiver. But USB is bidirectional.

It seems there are USB isolators for such situations; I haven't looked into them. I imagine they're not cheap [bidirectional opto-coupling? signals which are only /sort-of/ differential, sometimes?]

Before I decided to revert to AC I was *almost* convinced to do the following: shield connected at host's ground, but connected to device's ground through a parallel resistor and capacitor.

The capacitor would keep the two grounds essentially "shorted" together during high-frequency events, like the ringing caused by switching power supplies, WITHOUT allowing the device to draw ground current through the shield and the other device's ground. Thus, when either switcher switched from its "internal battery" [inductor] to the external real battery, and suddenly a large current flowed down its ground wire to the real battery, that device's ground voltage would rise sharply [due to the wire resistance], coupling into the signals, etc. With the capacitor, both grounds would rise together, smoothing that edge. And, being that my wires were tens of milli-ohms, even when that capacitor settled, the difference in grounds would still only be tens of millivolts. Just not a *sharp*-edged difference.

And, thus, each device's return current goes down its own wire, NOT through the USB cable, and any sharp differences in ground get smoothed.

And the resistor? I was thinking somewhere around 3-33 ohms, about 100-1000 times the wire resistance. A tiny portion of the devices' return current would go through the cable, but minimal. More importantly, it'd try to keep that shield "grounded" at both ends, as much as feasible, per the original design... since both ends transmit. [Maybe 3 ohms || capacitor at *both* ends woulda been smarter?]
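To put numbers on the resistor-plus-capacitor idea, here's a sketch of the impedance magnitude of a resistor in parallel with a capacitor across frequency. The 3.3 ohm value sits inside the 3-33 ohm range above; the 1µF capacitor is a hypothetical value, since no capacitance is given.

```python
import math

def parallel_rc_impedance(r_ohms, c_farads, f_hz):
    """|Z| of a resistor in parallel with a capacitor at frequency f_hz."""
    if f_hz == 0:
        return r_ohms                                  # DC: the capacitor is open
    z_c = 1 / (1j * 2 * math.pi * f_hz * c_farads)    # capacitor's complex impedance
    return abs(r_ohms * z_c / (r_ohms + z_c))

R_SHIELD_TO_GND = 3.3   # ohms, within the 3-33 ohm range considered above
C_SHIELD_TO_GND = 1e-6  # farads, HYPOTHETICAL: no capacitor value is given in the text

for f in (0, 1e3, 100e3, 10e6):
    z = parallel_rc_impedance(R_SHIELD_TO_GND, C_SHIELD_TO_GND, f)
    print(f"{f:>12,.0f} Hz: {z:.3f} ohms")
```

At DC it's just the 3.3 ohms, about 165 times the ~0.02 ohm wire, so well under 1% of the return current would take the shield; at 100kHz the hypothetical 1µF already pulls it down to roughly 1.4 ohms, and at 10MHz to about 0.016 ohms.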

It was probably hokey, all that thinking, but it sorta made sense to me, and wasn't unlike many others' suggestions [several suggested *mega*-ohm resistors!]; but, again, those were shielding designs for *other* signalling methods, applied to a fundamentally different one. As were many other suggestions.

Frankly, from the sounds of it, design of such shielding is *very* application-specific... there are numerous ideas from smart folk suggesting *entirely different* and even contradictory "best practices". Some focus on EMI radiation, some on EMI susceptibility. Some on high frequency, some on low; few I found focus on DC [like my concerns]. But, it seems those best practices vary widely and depend dramatically on the needs...

AND, it seems, USB basically flies in the face of all that by assuming that any device is essentially an extension of the host... e.g. shielding guidelines basically rely on the idea that the device is enclosed in the same faraday cage as the host, with no openings [e.g. no separate power source, etc.]. The shield on the cable, then, is essentially an outshoot of the host's cage into another such cage, making, in effect, *one*.

They get away with it, mostly, I think, because "self-powered" devices are usually powered by *isolated* power sources. Kinda like throwing a battery in the ol' faraday cage.

And when two devices are both earth-grounded at the shield *and* at their power source [which actually is rather rare, I think; maybe corporate printers, but they'd more likely use ethernet], I guess the idea is that no current from the device's power supply has reason to flow through the shield, nor earth, since the power source is at the device end, and otherwise isolated from the other device's power source, and at low voltages like these current likes to flow in circles right back to the source. [Thus "circuit"/circle]

Mine, again, is different because my devices' sources are *not* isolated, and are, in fact, the same [well, roughly 50% of the time, when they're not powered by the inductor "battery"]. So, current will take any and every path back to the source. Including right down that hefty shield.

Thing is, it seems, the majority of USB cables/devices don't really even follow that single-faraday-cage principle; mostly, probably, due to manufacturing costs, but also design-costs of understanding *exactly* what's going on, vs. following "best practices," and design based on trial-and-error, which, too, can switch-up concerns for other concerns [e.g. practices implemented in order to pass EMI-radiation testing don't seem at all concerned with current-flow *internal* to the system].

So, frankly, in a way it's kinda miraculous so many devices/cables actually function as well as they do. I suppose some of that has to do with error-checking and retries that're usually invisible to users. E.g. my two switching supplies running at ~100kHz might've imparted data-destructive impulses 400,000 times per second... but at 480,000,000 bits per second, and packet sizes of less than 1000 bits, the odds.... wait a minute... no... it's in fact *quite* likely a [small] 512Byte [4096bit] packet would occur during those impulses! Huh.
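The back-of-envelope behind that "Huh," as a sketch: compare how long a packet occupies the wire with the spacing between switching edges. It assumes evenly spaced edges (two ~100kHz supplies, two edges per cycle each, per the text) and counts only payload bits, ignoring USB protocol overhead, bit-stuffing, and the fact that not every edge necessarily corrupts anything.

```python
BIT_RATE = 480e6            # USB 2.0 high-speed signalling, bits per second
IMPULSES_PER_SEC = 400_000  # two ~100kHz switchers, two edges per cycle each
PACKET_BITS = 512 * 8       # a 512-byte payload, ignoring protocol overhead

packet_time = PACKET_BITS / BIT_RATE     # seconds the packet occupies the wire
impulse_gap = 1 / IMPULSES_PER_SEC       # seconds between switching edges

def chance_packet_hit(packet_bits, bit_rate=BIT_RATE, impulses_per_sec=IMPULSES_PER_SEC):
    """Probability that a randomly timed packet overlaps at least one edge,
    assuming perfectly evenly spaced edges (a simplification)."""
    return min(1.0, packet_bits / bit_rate * impulses_per_sec)

print(f"512-byte packet lasts {packet_time * 1e6:.2f} us, edges every {impulse_gap * 1e6:.2f} us")
print(f"edges per packet: {packet_time / impulse_gap:.1f}")
print(f"chance a 1000-bit packet is hit: {chance_packet_hit(1000):.0%}")
```

A 512-byte payload lasts about 8.5µs against edges every 2.5µs, so every such packet overlaps three or four edges; even a 1000-bit packet comes out around a 5-in-6 chance.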

I suppose big-ass capacitors at the DC-converters probably helped.

...

Anyhow, this is too much for me... I'da had to modify all my devices' shielding-to-ground interfaces, and add bigger-ass caps to all the DC-converter inputs, then consider the brownouts caused by switching those devices' converters' capacitors on, and so on... and, once having done so, wonder about those effects if/when I have a regular ol' AC source to work with.

It's surely less efficient, which'll drain my battery faster, but I'm going back to the ol' tried-and-[allegedly]-true method of isolated power sources, which can be off-the-shelf afforded by using a friggin' inverter to friggin' bump my friggin' 12VDC source up to 120VAC just to bump it back down to 12VDC with regular ol' power-bricks/wallwarts already in my collection, came with the devices, or could even get from a thrift-store, one for each device. Weee! So much easier and cheaper.

Still, it's probably wise to /make sure/ each power-supply is isolated, and the inverter, too.

[BTW: why do even cheap 120VAC->DC-Converters get all the isolated-output-fun when regular ol' DC-DC converters with isolated outputs are comparatively rare? They still use transformers! BUT: they can get away with MUCH smaller ones than the ol' wallwarts because: they can simply use a 1:1 transformer; fewer windings and even the same gauge wire for input and output. Sure, there are methods to put a 1:1 transformer on the *output* of a DC-converter; but consider the high current those windings would handle, and the wire gauge necessary! Putting it on the 120VAC side [they're, really, isolated-/input/ converters] means the windings handle 1/10th of the current, so the gauge can be much smaller... and, thus, so cheap as to throw into even cheap switching wall-warts. And thus removing all them common-source/unisolated woes I've encountered.]

-

Data, still, and DC grounding

06/29/2020 at 06:13 • 0 comments

Another setback, a rather major one: connecting the external drive eventually not only completely blew out a USB port, but damaged the entire hub it was connected to enough to cause frequent I/O errors.

It would seem there's quite a bit to be considered in DC power distribution.

Imagine a 5-foot-long power cable to the computer and another to the external hard drives, both connected to my 12V car battery... say that's 1A@12V for a lowly PiZero+display and 3A@12V for two hard drives... say there's one ohm in each of those cables' return wires... now "ground" at the computer is 1V above battery ground, and "ground" at the drives is 3V above it!
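The same Ohm's-law bookkeeping as a tiny sketch, assuming each device's return current goes only through its own cable's negative wire (i.e. before any USB cable gives it a second path). The 1-ohm figure is the hypothetical from the paragraph above.

```python
def ground_offsets(loads_amps, r_return_ohms):
    """Voltage each device's 'ground' sits above battery negative, assuming each
    device returns its current through its own cable of r_return_ohms (Ohm's law)."""
    return {name: amps * r_return_ohms for name, amps in loads_amps.items()}

offsets = ground_offsets({"compy": 1.0, "drives": 3.0}, r_return_ohms=1.0)
print(offsets)   # volts above battery ground
print("compy-to-drive difference:", offsets["drives"] - offsets["compy"], "V")
```

It prints 1.0V and 3.0V, a 2V difference between the two "grounds." Swapping in the ~0.02 ohms measured in the newer log entry gives 20mV and 60mV instead.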

Now, imagine what happens when that USB cable is connected!

... worse, still, I did some falstad circuit simulations today to see about the best place to put a ground when using buck converters... and I was reminded of another factor I hadn't been considering; powering up a buck converter causes a huge power surge.

So, the USB drive has a power switch; let's ground 'em through the USB cable *before* powering up.... compy on, no biggy... maybe it draws a little current through the USB shield/GND wires, but certainly most through its power cable... now, switch on the 3A drive... heh. Huge surge spinning up, of course, but also a huge surge charging the buck converter's input capacitor, a huge surge charging up the inductor, a huge surge charging up the output capacitor, and the motors aren't even spinning yet!

So... I replaced the hub, and *thankfully* that seems to have been the only damage.
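A sketch of why that input-capacitor surge is "huge": an empty capacitor switched onto the battery through nothing but cable resistance follows simple RC charging, starting at V/R. The 0.04 ohms (out and back) assumes the positive wire matches the ~0.02 ohms measured on the negative in the newer log entry, and the 100µF capacitor is hypothetical. Real cable inductance, battery resistance, and capacitor ESR would cap the peak far lower, so treat the first number as an upper bound, not a prediction.

```python
import math

def cap_inrush(v_supply, r_path, c_farads, t):
    """Current (A) into an initially empty capacitor, t seconds after it is
    switched onto v_supply through r_path ohms. Plain RC charging: ignores
    cable inductance, battery resistance and capacitor ESR, so it overstates
    the peak."""
    tau = r_path * c_farads
    return (v_supply / r_path) * math.exp(-t / tau)

V_BATT = 12.0
R_PATH = 0.04    # ohms: ~0.02 out + ~0.02 back (ASSUMED positive wire = negative)
C_IN = 100e-6    # farads, HYPOTHETICAL input capacitor value

print(f"time constant: {R_PATH * C_IN * 1e6:.1f} us")
for t_us in (0, 4, 20):
    amps = cap_inrush(V_BATT, R_PATH, C_IN, t_us * 1e-6)
    print(f"t = {t_us:2d} us: {amps:6.1f} A")
```

Under those assumptions it starts at 300A with a 4µs time constant, decaying to about 2A by 20µs: brief, but plenty to worry about if part of it finds its way through a USB shield.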

But, I need to do some serious grounding thoughts before continuing.

...

Stupidly, I had foreseen some of this; I was building a power-bank last year[?] which has numerous floating-output DC converters for pretty much *exactly* the purpose of preventing such things... but, ultimately, it was such a hassle, and I guess I *really* needed to get some actual compy-time in... to feel like I was actually progressing toward my original goals... and, well, I think I was more cautious about it in the beginning, then kinda forgot about it... and I guess it finally came 'round to bite me in the ass.

...

Thankfully, again, I think the only damage was to the hub. Although, I ran some extensive tests [copying a large file 100 times] and found that, in fact, *somehow*, despite *numerous* checksums and retry-mechanisms all along the process [sending the command over USB to read the sector, reading the sector, transferring it over USB, transferring it *back* over USB, writing the sector, never mind the process reading it back for comparison], it did the job once or twice *without* 'cp' complaining, and *with* erroneous data! I don't understand how that's possible! There should've been a checksum failure somewhere, and wherever that was, it should've automatically reattempted until there wasn't, or /errored/... but no error, and still bad data. HUH.

So, now, no idea whether all those millions of files transferred to my backup drive, and from there to my main drive, are accurate... am I really going to 'diff' them all? Especially after the reorganizing? UGH. I was *SO* ready to wipe those original drives so I could finally put this *years*-long process behind me.
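One way to check a batch of copies without running `diff` by hand is to hash both sides and compare. A minimal sketch with Python's standard `hashlib` and `os.walk`; the function names are hypothetical, and it assumes the copy kept the same relative paths, which the reorganized drives no longer would (there, files would have to be matched by hash instead of by path). One caveat: reading a file back right after writing it may come from the OS's cache rather than the disk, so a check like this means more after the drive has been unmounted and remounted.

```python
import hashlib
import os

def file_digest(path, chunk_size=1 << 20):
    """SHA-256 of a file, read in chunks so huge files don't fill RAM."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()

def mismatched_files(src_root, dst_root):
    """Walk src_root; yield relative paths whose copy under dst_root is missing
    or whose contents hash differently."""
    for dirpath, _dirnames, filenames in os.walk(src_root):
        for name in filenames:
            src = os.path.join(dirpath, name)
            rel = os.path.relpath(src, src_root)
            dst = os.path.join(dst_root, rel)
            if not os.path.isfile(dst) or file_digest(src) != file_digest(dst):
                yield rel
```

Usage would be something like `for rel in mismatched_files("/mnt/old", "/mnt/backup"): print(rel)`, with the real mount points swapped in for those hypothetical ones.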

...

And I still haven't figured out what to do about grounding, short of dangling a wire off each component and installing a bunch of friggin' banana-jacks in the van's wall.

...

And, I'd much rather be working on #The Artist--Printze, [surprised I didn't have grounding issues with that!] or finally getting to #Floppy-bird, or several others... but... not without a compy... a /properly-grounded/ compy...

-

Data

06/14/2020 at 06:26 • 0 comments

There've been *big projects* that maybe deserve their own page... but tonight I'mma rant about a "little" [160 friggin' Gigabytes little] discovery...

I'd fought for literally *months* to first consolidate all my old machines' data onto one drive, then slowly organize it better... this was a big process, requiring going through each drive in reverse-order [newest to oldest], making sure they were organized similarly for comparison/merging/deleting duplicates/keeping the oldest dated duplicate and so-forth via home-brew script, yet retaining the original directory structures /as well/ should I ever have one of those "I remember where it /was/, now where did I put that?" moments... so, now two copies of everything [well, hardlinked] from three different machines, then a copy of the newest machine... then merging/comparison, etc. Then *finally* [literally months later], when that was finished, I could move all that stuff from that latest machine's directory-structure to one that makes more sense now. Doing all this with hardlinks is key, as it means each machine only occupies a little more space than original, despite there being two+ copies on the new machine [and far more on the backup drive] for some time.

Anyhow... I *finally* finished all that merging after months, then finally got rid of those old dir-structures... and finally started on, and got a ways in, reorganizing how I'd rather it be organized... despite the nagging feeling something was missing... just couldn't place my finger on what. And, surely that *couldn't* be the case, because I was *very* methodical and meticulous in my process, been literally working-out how I was going to go about it *for years*. I backed-up each machine's *actual* drive to my backup drive before starting. So nothing could be missing... right?

Well, shit... some brat reminded me of an old project I kinda intentionally forgot... [as best I could, anyhow, being that it affects me still, every day]... which, I guess, is to say it's getting near the time the trauma's worn off enough that I might take a look at what happened...

and...

It's GONE.

Nowhere to be found... like, except for my HaD.io project-page for it, and a way-outdated github upload, like it never friggin' existed.

Which, frankly, I was /kinda/ OK with, not wanting to revisit the trauma... but if *that* was missing, what else might be...? And so I went looking, and sure enough my favorite project of all-time was missing, as well. So then I was panicked... and *thankfully* eventually found that somehow I just forgot to update its version number, so it was stored in a previous project's folder...

Whew.

but... still that nagging feeling... so tonight I got out an old backup drive... and... Yeah.

Somehow, and I can't even begin to recall how, the drive from my newest machine, prior to my current one, is *very* *very* different from the last backup made of that machine. I mean *very*.

And... the last backup...?

Well, it looks like about 160GB of sorting, merging, looking for duplicates, etc. again... only this time I've got two *very* different directory-structures to deal with. WEE!

But, yeah, there's a *lot* of stuff in there to confirm that "something's missing" feeling I had.

Lessee... I think I topped-out at 10MB/s between drives before... so... 16,000sec... about 4-5 hours? to transfer to the new backup drive...
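Checking that arithmetic, as a sketch that assumes decimal units (1GB = 1000³ bytes) and a sustained 10MB/s the whole way:

```python
def transfer_hours(size_bytes, rate_bytes_per_sec):
    """Wall-clock hours to move size_bytes at a sustained rate."""
    return size_bytes / rate_bytes_per_sec / 3600

GB = 1000**3   # decimal units, as drive makers (and the 16,000s figure) use
MB = 1000**2

seconds = 160 * GB / (10 * MB)
print(f"{seconds:,.0f} s = {transfer_hours(160 * GB, 10 * MB):.1f} h")
```

160GB at 10MB/s is 16,000s, about 4.4 hours, so the 4-5 hour guess holds, before any time lost to stopping and resuming.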

The backup drive and computer drain the car battery in about that, but here with two drives... Can't start the engine to recharge midway; the starter drops the batt voltage too low to keep the inverter running = unhappy drives... so, I've gotta do this in steps. I *think* rsync, as setup in my backup script, can resume where it left-off. Though I had a problem with CTRL-Cing /something/ like this, then resuming... I think that was 'cp', which left a half-written file whose date was newer than the original, so didn't "update" it when I tried again... yeah, I think my rsync backup script'll do it. Of course it will; start a /new/ backup, link-desting to the incomplete one. I've done this. OK. That'll also give the opportunity to --link-dest it with some other backups so maybe it won't take 160 friggin GB...
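For anyone unfamiliar with `--link-dest`, here's a rough Python imitation of the idea (not rsync's actual implementation, and not the backup script mentioned here): a new snapshot is written out in full, but any file identical to the one at the same path in a previous snapshot is hardlinked to it rather than copied, so unchanged files cost no extra space. This version compares full file contents; rsync itself normally decides "unchanged" by size and modification time. The function name `snapshot` is hypothetical, and it assumes a fresh destination directory.

```python
import filecmp
import os
import shutil

def snapshot(src_root, dest_root, link_dest=None):
    """Rough imitation of the idea behind rsync's --link-dest (NOT rsync itself):
    copy src_root into a fresh dest_root, except that any file identical to the
    one at the same relative path under link_dest is hardlinked instead of
    copied. Returns (copied, linked) counts."""
    copied = linked = 0
    for dirpath, _dirnames, filenames in os.walk(src_root):
        rel_dir = os.path.relpath(dirpath, src_root)
        os.makedirs(os.path.normpath(os.path.join(dest_root, rel_dir)), exist_ok=True)
        for name in filenames:
            rel = os.path.normpath(os.path.join(rel_dir, name))
            src = os.path.join(src_root, rel)
            dst = os.path.join(dest_root, rel)
            old = os.path.join(link_dest, rel) if link_dest else None
            if old and os.path.isfile(old) and filecmp.cmp(src, old, shallow=False):
                os.link(old, dst)         # same inode as the old snapshot: no new space
                linked += 1
            else:
                shutil.copy2(src, dst)    # new or changed: store a real copy
                copied += 1
    return copied, linked
```

That's also why the "two copies of everything [well, hardlinked]" trick earlier in this entry stays cheap: both names point at the same inode, so the data is stored once.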

That gets it on the "everything backup" drive... then I can do basically the same as before, rsync portions to the new machine, link-dest as appropriate, keep the original, hardlink to a new folder, and sort those in...

Ugh! I thought I was done with this!

OK, now, at this point I *really* wonder what the heck happened four years ago that my machine's actual drive *barely* resembles the last backup. What was I doing?! Upgrading the OS? Transferring to a long-lost bigger drive? Is this *not* that system's drive after all? And, if so, where is that one?

And, you *know* if I do this from a *backup* rather'n the actual drive, I'll never stop wondering what's missing!

Ugh!

----

Mwahahaha! I've got this....

My backup script keeps a log of the differences between two backup runs. So, I can do this process from the latest backup on old backup drive to the new one... then do the merge-process on the new machine... then if I find out there's a *different* drive from that old machine, I can back it up to the new backup drive, link-desting to the old backup, and look at the log to see which files to merge onto the new machine...

Sheesh!

----

Three days later:

Apparently I'd had *two* drives in that machine; one was full at 160GB, with Debian 7... then, apparently, when I finally finished debating *how* to upgrade to deb8, I decided to wipe an old 250GB backup drive and start fresh. Apparently, I never physically mounted that drive [I keep saying "apparently," but the memories are coming back like patchwork]; it was sitting in the bottom of the tower, side panel off, for well over a year... so, when I packed up when moving, I disconnected it and wrapped it in a paper bag, loose/crumpled enough to protect it, then shoved it between the mobo, bottom of the tower, and bottom of the drive-bays, then sealed up the long-open side panel.

Then, years later, when I grabbed what I thought was the main drive, I accessed it by opening the front panel, and slid it out [on its easy-remove screwless system] without ever opening the side to find that paper bag. HAH!

Funny thing is, when I slid out that drive, I thought it a bit odd it wasn't wired-up.

But here's the real kicker... I now remember... I spent *so long* debating whether to use that backup drive [and wipe those backups of a long-defunct machine], and/or how else to go about the upgrade-process, that I renamed the machine "deb8ed" in honor of that long debate... which, if I'da remembered... sheesh! I mean, I've had *months* looking at that name several times a day, even wrote it on the debian 7 drive by mistake!

But that whole old-machine merger process I'd been doing these past months kinda relied on my working from newest to oldest... and turns out *the* newest will be the last to be merged, and harder, still, because since I *thought* I was done merging, I started flat-out hard-core /reorganizing/. Which'll make the merger a pretty big job, trying to figure out where things already exist. Wee!

Once this is done, boy-howdy, I'm seriously contemplating wiping the old drives and using them as duplicate/triplicate backups... this *should be* *everything*, now, sheesh.

today's assorted project ramble "grab-bag"

Assorted project-ideas/brainstorms/achievements, etc. Likely to contain thoughts that'd be better-organized into other project-pages

Eric Hertz
