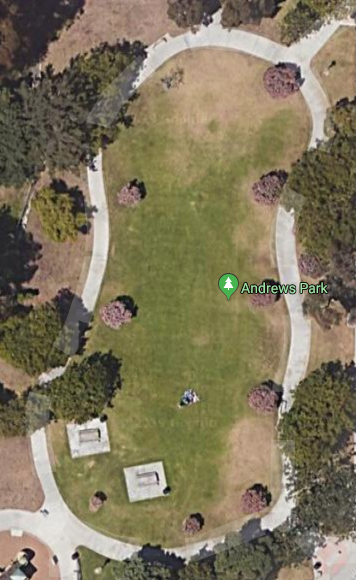

Yesterday my autonomous robot successfully drove itself (*) around the park. One loop took 90 minutes. There's more information on what I've done and my plans below.

One thing I realized is that the image I was capturing at 10 degrees left of the current path was problematic. By default I put that image in the "turn right" class, but in many cases going straight on seeing that picture would be a perfectly valid choice, and it would often keep the robot closer to the left edge.

So this time I left the 10-degree-left images out of training altogether. Training on images from the 3 previous loops around the park took about 60 minutes, with the images on an SSD and a K80 GPU, and reached about 93% accuracy on the validation set.
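
To make the labeling change concrete, here is a minimal sketch of mapping capture angles to training classes while dropping the ambiguous image. The seven angles, the sign convention (negative means left of the path), and the names `label_for_angle` and `build_labels` are all hypothetical; the log only describes the 10-degrees-left image and its old "turn right" label.

```python
# Hypothetical capture angles in degrees; negative = left of the current path.
CAPTURE_ANGLES = [-30, -20, -10, 0, 10, 20, 30]

# Angles whose label is ambiguous and are left out of training entirely.
EXCLUDED_ANGLES = {-10}


def label_for_angle(angle):
    """Return the training class for an image taken at `angle`, or None to skip it."""
    if angle in EXCLUDED_ANGLES:
        return None  # going straight here can be as valid as turning right
    if angle < 0:
        return "right"  # camera pointed left of the path, so steer right
    if angle > 0:
        return "left"  # assumed mirror case: pointed right of the path, steer left
    return "straight"


def build_labels(angles):
    """Map each usable capture angle to its class, skipping excluded angles."""
    labels = {}
    for angle in angles:
        label = label_for_angle(angle)
        if label is not None:
            labels[angle] = label
    return labels


print(build_labels(CAPTURE_ANGLES))
```

Dropping the image, rather than relabeling it as "straight", avoids teaching the network a hard boundary in a spot where either action is acceptable.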

At the park I ran the existing code, which takes all 7 pictures, shows me the predictions, and then waits for me to act. A loop around the park is about 185 steps and each cycle takes about 30 seconds, so a loop takes about 90 minutes.

(*) There were about half a dozen spots in the loop where I had to correct the robot. In a couple of places it got stuck repeating left, right, left, right cycles. I noticed that in one of the two steps of each cycle, "drive straight" was often a close second choice. So I believe this can be solved by detecting the pattern and choosing "drive straight" at that step instead.
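
One possible way to implement that fix is sketched below, under several assumptions: the model returns a probability per class named `left`, `straight` and `right`; an oscillation is a run of `window` strictly alternating left/right moves; and `OscillationGuard` and `window` are hypothetical names, not part of the existing code. When the robot is oscillating and "straight" is the runner-up, the guard drives straight instead of the top prediction.

```python
from collections import deque


class OscillationGuard:
    """Break a left/right ping-pong by driving straight when it is the runner-up."""

    def __init__(self, window=4):
        # Most recent actions actually taken; `window` is a hypothetical tuning knob.
        self.history = deque(maxlen=window)

    def _oscillating(self):
        # True when the history is full and strictly alternates left/right.
        if len(self.history) < self.history.maxlen:
            return False
        pairs = zip(self.history, list(self.history)[1:])
        return all({a, b} == {"left", "right"} for a, b in pairs)

    def choose(self, probs):
        """Pick an action from a {class_name: probability} dict."""
        ranked = sorted(probs, key=probs.get, reverse=True)
        action = ranked[0]
        if self._oscillating() and ranked[1] == "straight":
            action = "straight"  # override the top choice to escape the loop
        self.history.append(action)
        return action
```

Once "straight" is recorded, the history no longer alternates, so the guard steps aside and normal predictions resume on the next step.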

My robot's other nemesis is a concrete bench that sits on the path near the bottom right of the image. To get past the bench, the robot has to move to the middle of the path and then return to the left edge. It is learning this behavior, but it isn't quite there yet and needed a couple of human "nudges."

My goal at this point is to lower the lap time. There are a few modifications that should make a big improvement.

- Don't take all 7 pictures. Turning, waiting to stabilize, and storing the images are all time-consuming.

- Don't wait for human intervention. Give the operator a moment to veto the operation, but trigger the next step automatically.

- Currently the prediction loop stores the image on the SD card, then reads it back to run predictions. Keeping the current image in memory should be faster.

- I'm currently training an InceptionV3 network for the image processing. That network is large and slow to run on a Raspberry Pi. I'd like to try MobileNet V2, which should shave a few seconds off each iteration.

- A bit more training data may help with a few of the problematic cases.
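
The second and third changes above can be combined into one step function, sketched here under assumptions: `capture`, `predict` and `drive` are hypothetical callables standing in for the robot's real code, and the veto is a `threading.Event` that some separate operator-input thread (not shown) sets. If the robot uses the legacy picamera library (also an assumption), `capture` could write a JPEG into an `io.BytesIO` with `camera.capture(stream, format='jpeg')` rather than saving to the SD card.

```python
import threading


def run_step(capture, predict, drive, veto, veto_window=2.0):
    """Run one autonomous step, letting the operator veto within `veto_window` seconds.

    capture() -> image held in memory (never written to the SD card)
    predict(image) -> chosen action, e.g. "left", "straight" or "right"
    drive(action) -> moves the robot
    veto: threading.Event the operator sets to cancel the pending move
    """
    image = capture()  # stays in RAM; no save-then-reload round trip
    action = predict(image)
    print(f"Next action: {action} (veto within {veto_window}s)")
    if veto.wait(timeout=veto_window):
        veto.clear()  # reset so the next step starts un-vetoed
        return None  # operator cancelled; skip the move this step
    drive(action)  # no veto arrived in time, so proceed automatically
    return action


# Shared with a (hypothetical) keyboard-listener thread that calls veto.set().
veto = threading.Event()
```

`Event.wait` returns `True` as soon as the flag is set and `False` when the timeout expires, so the step proceeds automatically unless the operator intervenes within the window.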

I think getting each step down from 30 seconds to 10 to 20 seconds should be pretty easy.
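
A quick check of what that target means for a full lap, using the roughly 185 steps per loop from above (`lap_minutes` is just an illustrative name):

```python
STEPS_PER_LAP = 185  # approximate step count around the park, from the log above


def lap_minutes(seconds_per_step, steps=STEPS_PER_LAP):
    """Estimated lap time in minutes for a given per-step cycle time."""
    return steps * seconds_per_step / 60


for seconds in (30, 20, 10):
    print(f"{seconds:>2} s/step -> {lap_minutes(seconds):.1f} min per lap")
```

At 30 seconds per step this gives about 92 minutes, consistent with the observed 90-minute loop; at 10 to 20 seconds per step a lap would drop to roughly 31 to 62 minutes.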

Daryll
