-

Emotional Core: in detail

10/16/2020 at 21:06 • 0 comments

Now, after some polishing of the basic emotional core, I can describe how it works and how it is structured.

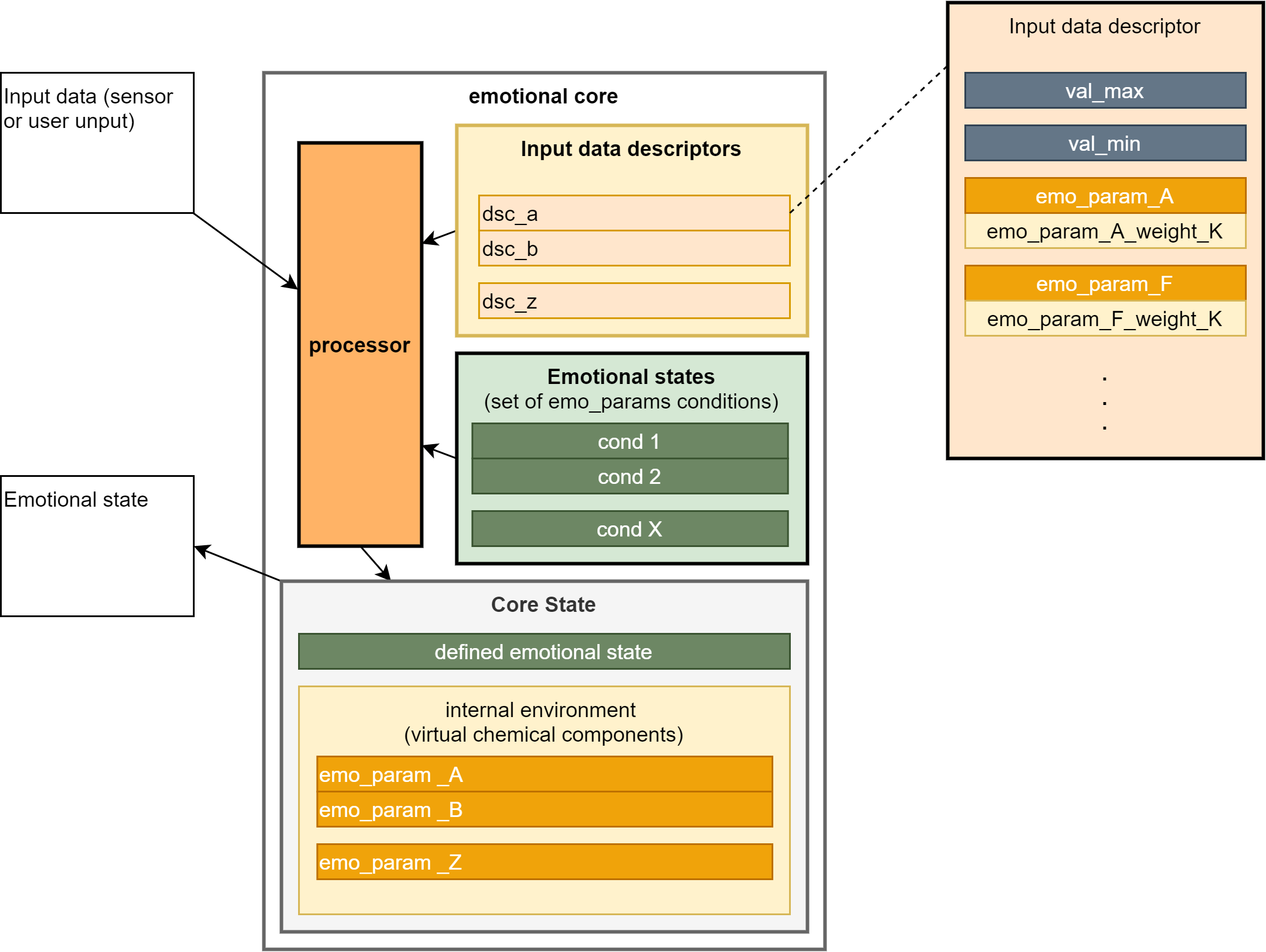

The emotional core consists of three main parts (see the picture below):

- Input Data Descriptors - describe data from sensors and how it should affect the core

- Emotional States Descriptors - named states of the core, each described by specific values of core parameters

- Core State - contains the core parameters, the sensor values, and a pointer to the Emotional State Descriptor that matches the current parameters

![]()

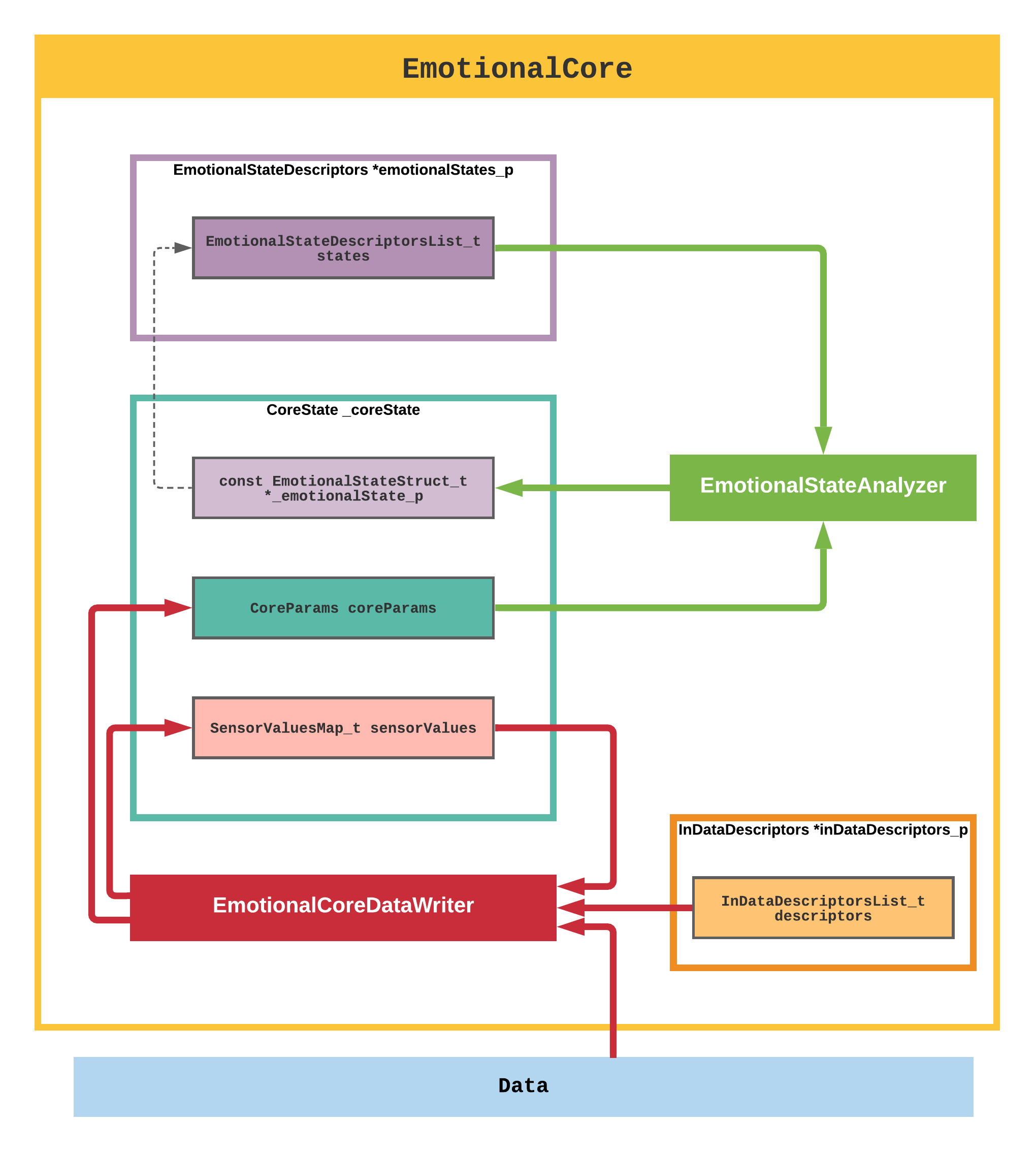

Or, in more detail:

![]()

When you write data to the core, it does the following:

- updates the saved sensor value

- updates core parameter: param += (new_sens_val - old_sens_val) * weight

- updates the current core state based on the updated parameters

Here are some examples of data that could be used with the core.

Example of core parameters:

[ "cortisol", "dopamine", "adrenaline", "serotonin" ]

Example of a core's emotional state:

{ "name": "happiness", "conditions": [ { "param": "cortisol", "op": "LESS_THAN", "value": 10 }, { "param": "serotonin", "op": "GREATER_THAN", "value": 100 } ] }

Example of input data descriptor:

{ "sensor_name": "temperature sensor", "val_min": 0, "val_max": 255, "weights": [ { "core_param_name": "serotonin", "weight": 0.5 }, { "core_param_name": "cortisol", "weight": -0.5 } ] }

Input data example:

{ "sensor_name": "temperature sensor", "value": 120 }

My next step is to look for appropriate parameters and weights that can adequately (roughly, of course) describe some human emotions.
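The update steps and the example data above can be sketched in a few lines of Python. This is only an illustration, not the code from the repository (the real core is written in C++). The class name `EmotionalCore`, the `write` method, the zero starting values, the clamping to `val_min`/`val_max`, and the "first matching state wins" rule are all assumptions made for this sketch.

```python
import operator

# Comparison names come from the example state; the mapping is an assumption.
OPS = {"LESS_THAN": operator.lt, "GREATER_THAN": operator.gt}

PARAMS = ["cortisol", "dopamine", "adrenaline", "serotonin"]

HAPPINESS = {
    "name": "happiness",
    "conditions": [
        {"param": "cortisol", "op": "LESS_THAN", "value": 10},
        {"param": "serotonin", "op": "GREATER_THAN", "value": 100},
    ],
}

TEMPERATURE = {
    "sensor_name": "temperature sensor",
    "val_min": 0,
    "val_max": 255,
    "weights": [
        {"core_param_name": "serotonin", "weight": 0.5},
        {"core_param_name": "cortisol", "weight": -0.5},
    ],
}


class EmotionalCore:
    """Toy model: core parameters, saved sensor values, current state."""

    def __init__(self, param_names, input_descriptors, state_descriptors):
        self.params = {name: 0.0 for name in param_names}
        self.descriptors = {d["sensor_name"]: d for d in input_descriptors}
        self.states = state_descriptors
        # Assumption: every sensor starts with a saved value of 0.
        self.sensors = {name: 0.0 for name in self.descriptors}
        self.state = None

    def write(self, data):
        desc = self.descriptors[data["sensor_name"]]
        # Assumption: val_min/val_max are limits, so the input is clamped.
        new_val = max(desc["val_min"], min(desc["val_max"], data["value"]))
        old_val = self.sensors[desc["sensor_name"]]
        # 1. Update the saved sensor value.
        self.sensors[desc["sensor_name"]] = new_val
        # 2. param += (new_sens_val - old_sens_val) * weight
        for w in desc["weights"]:
            self.params[w["core_param_name"]] += (new_val - old_val) * w["weight"]
        # 3. Update the current state from the updated parameters.
        self.state = self._match_state()
        return self.state

    def _match_state(self):
        # Assumption: the first state whose conditions all hold is selected.
        for state in self.states:
            if all(OPS[c["op"]](self.params[c["param"]], c["value"])
                   for c in state["conditions"]):
                return state["name"]
        return None


core = EmotionalCore(PARAMS, [TEMPERATURE], [HAPPINESS])
print(core.write({"sensor_name": "temperature sensor", "value": 120}))  # None
print(core.write({"sensor_name": "temperature sensor", "value": 255}))  # happiness
```

With the example weights, a reading of 120 gives serotonin 60 and cortisol -60, which is not enough for "happiness". Raising the reading to 255 moves serotonin to 127.5, and the state matches.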

Source code: https://github.com/an-dr/r_giskard

-

The Emotional Core Test (attention: pretty boring!)

09/26/2020 at 23:02 • 0 comments

Testing the new Emotional Core for the R.Giskard project

The code is here: https://github.com/an-dr/r_giskard/tree/feature/emotional_core

-

Emotional Core Sketch

09/19/2020 at 18:24 • 0 comments

Well, the third milestone is in progress.

It's time for the most interesting part of the project: emotions and reflexes. For now, let's talk about emotions, but briefly.

As I understand it, an emotion is just a name for a mental state. We are going to have a set of such emotional states, so that systems using my emotions implementation can treat the current state as a simple indicator.

In animals, each state is defined by a specific chemical condition of the brain. The virtual analog of those chemical components should probably be a set of parameters.

Input sensor data could affect the parameters with different weights. E.g., a larger amount of light should decrease the parameter that reflects the level of anxiety.

If the level of anxiety is low enough, we could declare that the core is in a calm state.

A rather rough structure of the emotional core is shown in the picture:

![]()

The work is happening on a branch of my r_giskard repository, where I'm going to implement both the core and a simulator to test it on desktop systems:

https://github.com/an-dr/r_giskard/tree/feature/simulator

I'm implementing it in C++ because that way the core can be used in embedded systems as well as in Python programs.

-

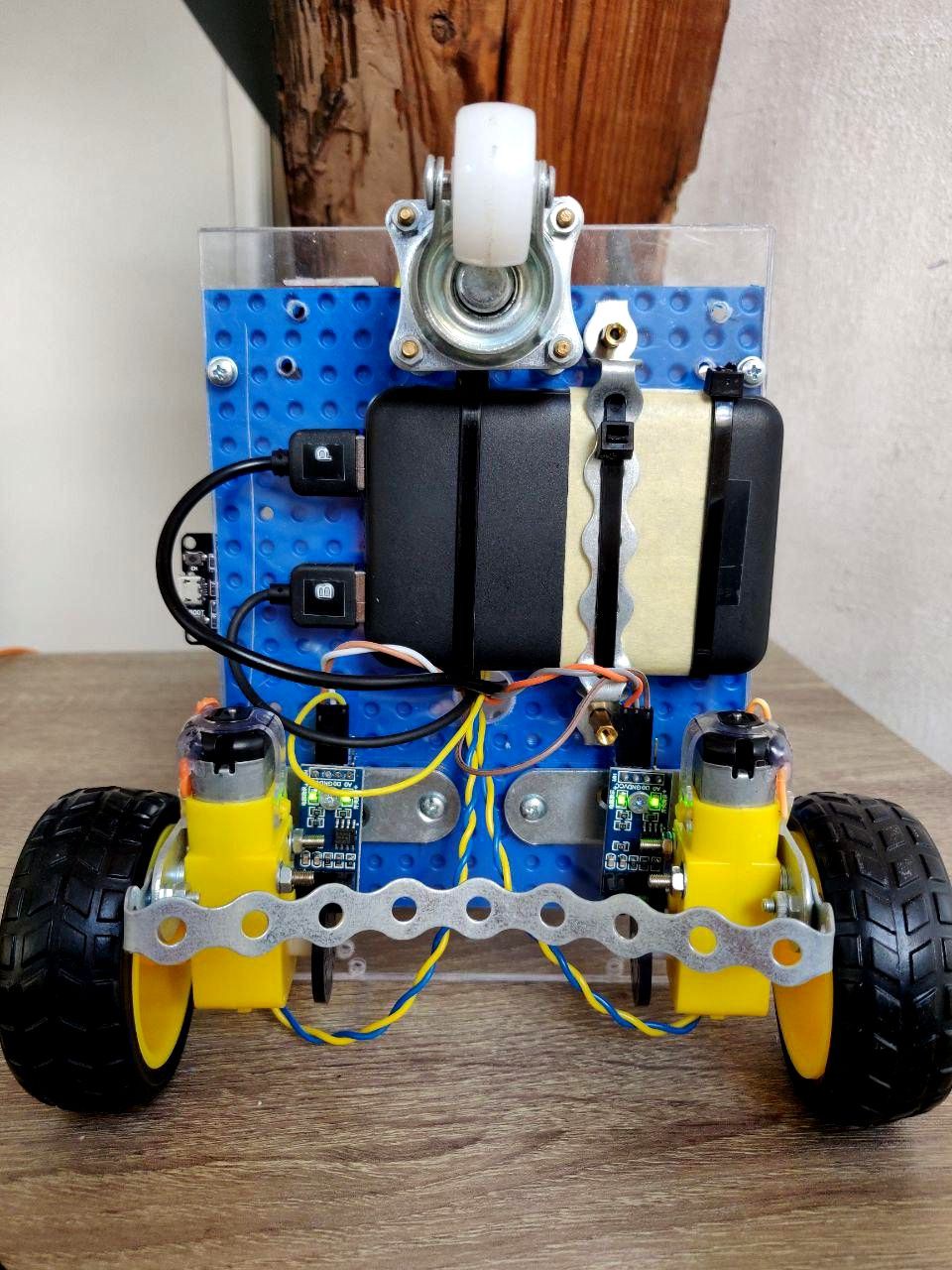

Zakhar Milestone: Zakharos

08/29/2020 at 21:01 • 0 comments

🎉🎉🎉

Changelog since the Reptile Demo Milestone:

Common:

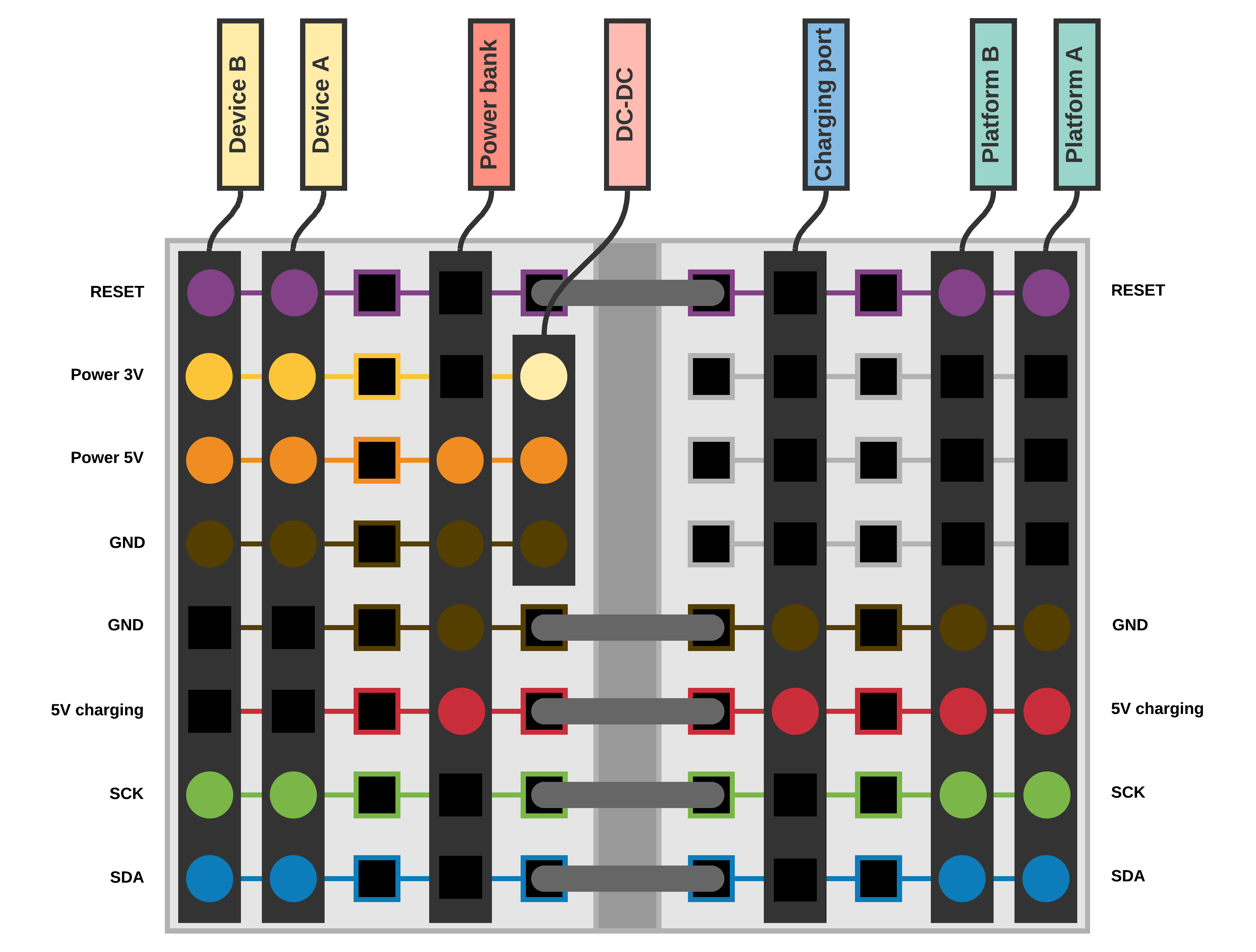

- Updated cable system with 8-pin connectors (see: Power post. Part one.).

- Added union connectors for charging all platforms at once.

- Added switches for each platform.

- Created a new repository including the sensor platform and the face module: zakhar_io

Sensor platform:

- Used an Arduino Nano v3 instead of a Pro Micro, for better stability

- Merged with the face module into one platform

- Added Ultrasonic Sensor HC-SR04, but without software support yet.

- Updated face module with stabilized voltage

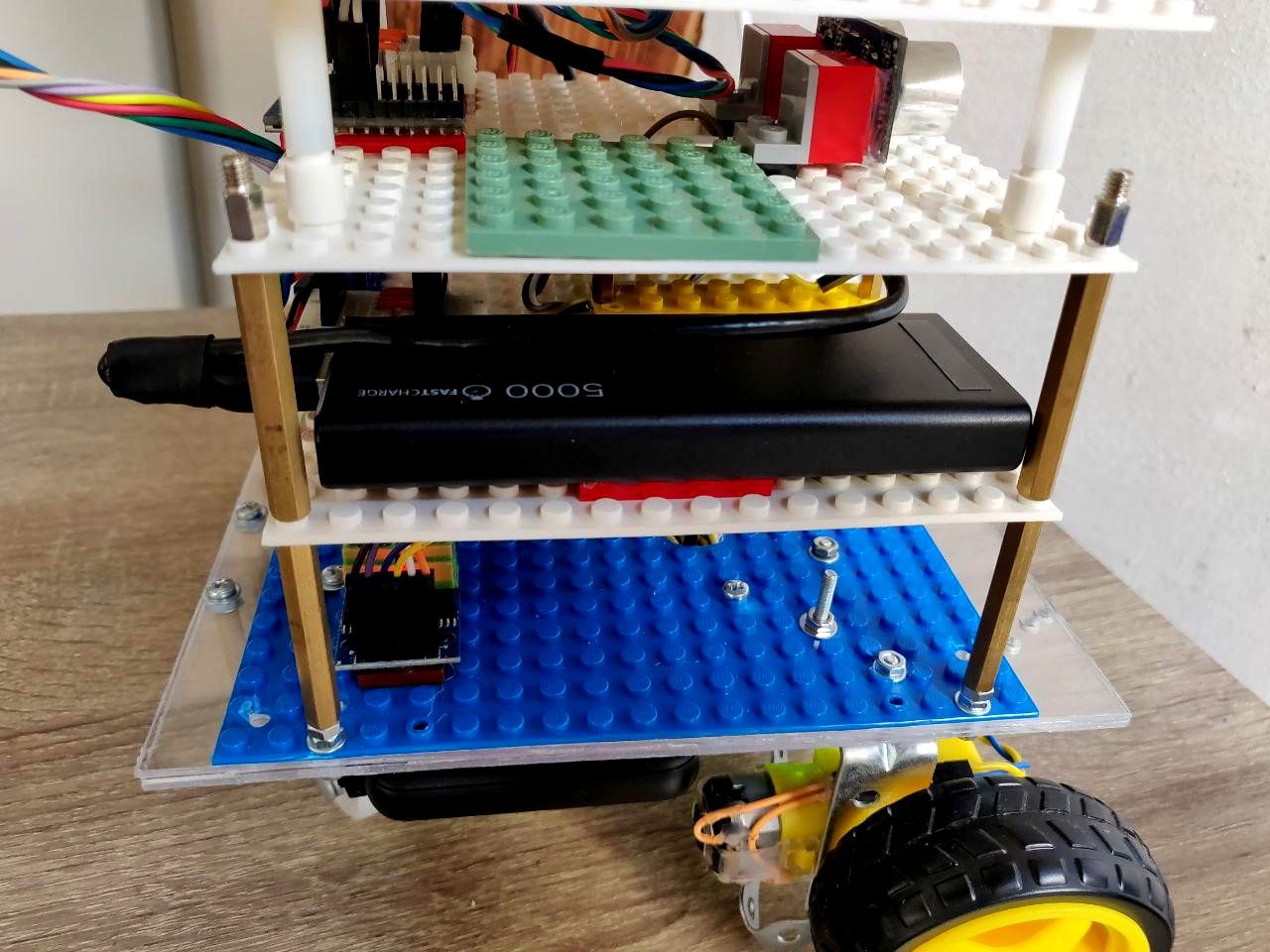

Computing (brain) platform:

- Raspberry Pi 4, ROS Noetic with Python 3

- New ROS-based application architecture

- OLED as status display for the computing platform (see: Startup check)

- Achieved a stable (finally) I2C connection, thanks to a lower frequency (10 kHz) and the new connectors.

- Added Raspberry Pi Camera, but without software support yet.

- Created a new repository for the platform: an-dr/zakhar_brain: Software for Zakhar's brain

Moving platform:

- New chassis with a stand

- ESP32 instead of Arduino Nano v3

- Bluetooth connection control

- Position module MPU9250 with the ability to turn by a given angle (see: New ESP32-based platform testing: Angles!)

- New third wheel - with rubber

- Removed rotation encoders as useless

- Upgraded the motors to a version with a metal gearbox

Repository: https://github.com/an-dr/zakhar

-

Startup check

08/29/2020 at 19:42 • 0 comments

The startup system check is shown on an OLED display. The display has its own separate I2C bus (I2C-3) to communicate with the Raspberry.

The startup process is the following:

- Greeting

- Test I2C-1 devices: moving platform, sensor platform, and face module. If a device is not present on the bus, an error is shown.

- Waiting until the ssh service is loaded

- Waiting until the network is connected

- Waiting until roscore is launched

- Infinite loop showing the network and project info. The robot is ready!
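The order of these steps can be sketched as follows. This is not the code from the zakhar_service repository: `wait_until`, `startup_check`, the message texts, and the way checks are passed in as plain callables are all hypothetical. Whether the real script keeps going after a missing I2C device is also an assumption. The final infinite info loop is left out.

```python
import time


def wait_until(check, timeout_s=30.0, poll_s=0.5):
    """Poll check() until it returns True or the timeout expires."""
    deadline = time.monotonic() + timeout_s
    while True:
        if check():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(poll_s)


def startup_check(show, present_devices, expected_devices, waits):
    """Run the startup steps in order; return True if everything passed.

    show             -- callable that puts one line on the display (hypothetical)
    present_devices  -- names of devices found on I2C-1 (hypothetical scan result)
    expected_devices -- names of devices that must be on the bus
    waits            -- ordered (label, check) pairs: ssh, network, roscore
    """
    show("Hello!")
    # Report every expected device that did not answer on the bus.
    missing = [name for name in expected_devices if name not in present_devices]
    for name in missing:
        show(f"ERROR: {name} not found")
    # Block on each service in turn, in the order given.
    for label, check in waits:
        show(f"Waiting for {label}...")
        if not wait_until(check):
            show(f"ERROR: {label} timeout")
            return False
    show("Ready!")
    return not missing
```

In a real script the checks would query the system (for example, whether a service is active); here they are injected so the sequence can be tried without any hardware.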

Gif of the process:

![]()

Code of the startup check process:

https://github.com/an-dr/zakhar_service/tree/feature/display_n_startup/display

-

Nocturnal

08/15/2020 at 23:34 • 0 comments

-

[Bad Idea, canceled] Power post. Part one.

08/08/2020 at 15:14 • 0 comments

![]()

There are a lot of issues when you connect low- and high-current devices to the same electrical circuit. Basically, you get:

- Noise at sensors' output

- Freezes and resets of MCUs

- Artifacts on the LCD and flickering of the backlight

- Other unexpected issues

That's really annoying, which is why I spent the last several weeks thinking about how to implement the power supply for Zakhar. There are two things I want from this update: to avoid the issues mentioned above, and to simplify development by allowing me to work with every platform separately. There is also one thing I don't want: to develop a reliable power system myself. Please don't get me wrong, it is cool and interesting, but it is nothing new. So, what I want is:

- Use as many ready-to-use components as possible

- Make an isolated power supply system for each platform

Sounds like power banks!

I've already been using a cool power bank with two ports (low- and high-current) for the motors and the rest of the electronics:

![]()

I will use it for the moving platform only.

A month ago, I added another one for the computing platform with the Raspberry:

![]()

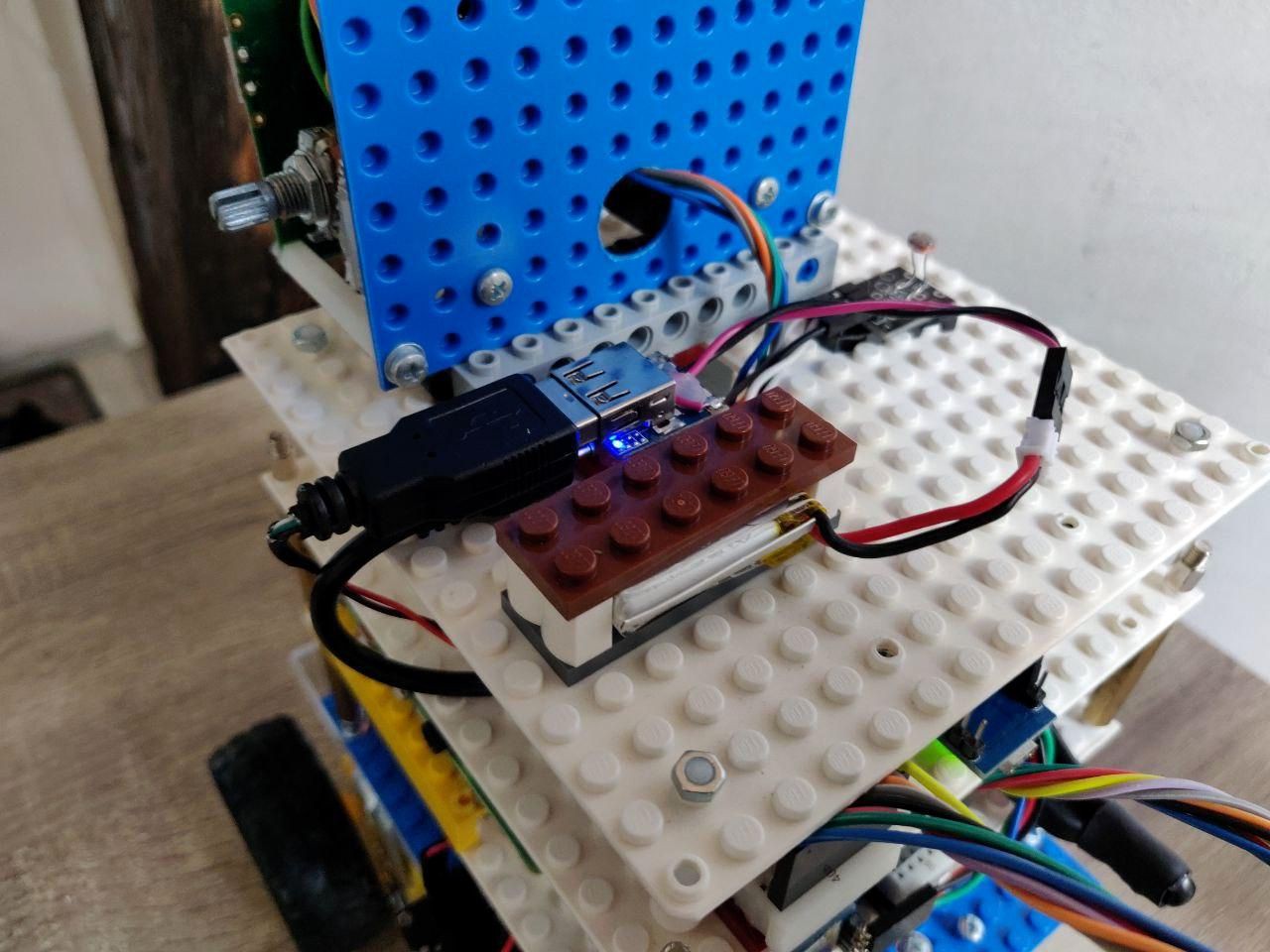

Since the face module and the sensor platform have almost never been separated during development, I decided to give them a single shared power bank. Unfortunately, I couldn't find anything suitable, so I had to make my own:

![]()

(I know, LEGO AGAIN!)

Okay. Now I have to charge three power banks. Easy! Since we no longer need the four pins of the inter-platform connectors for power delivery, it's time to update the connectors to deliver charge to the power banks.

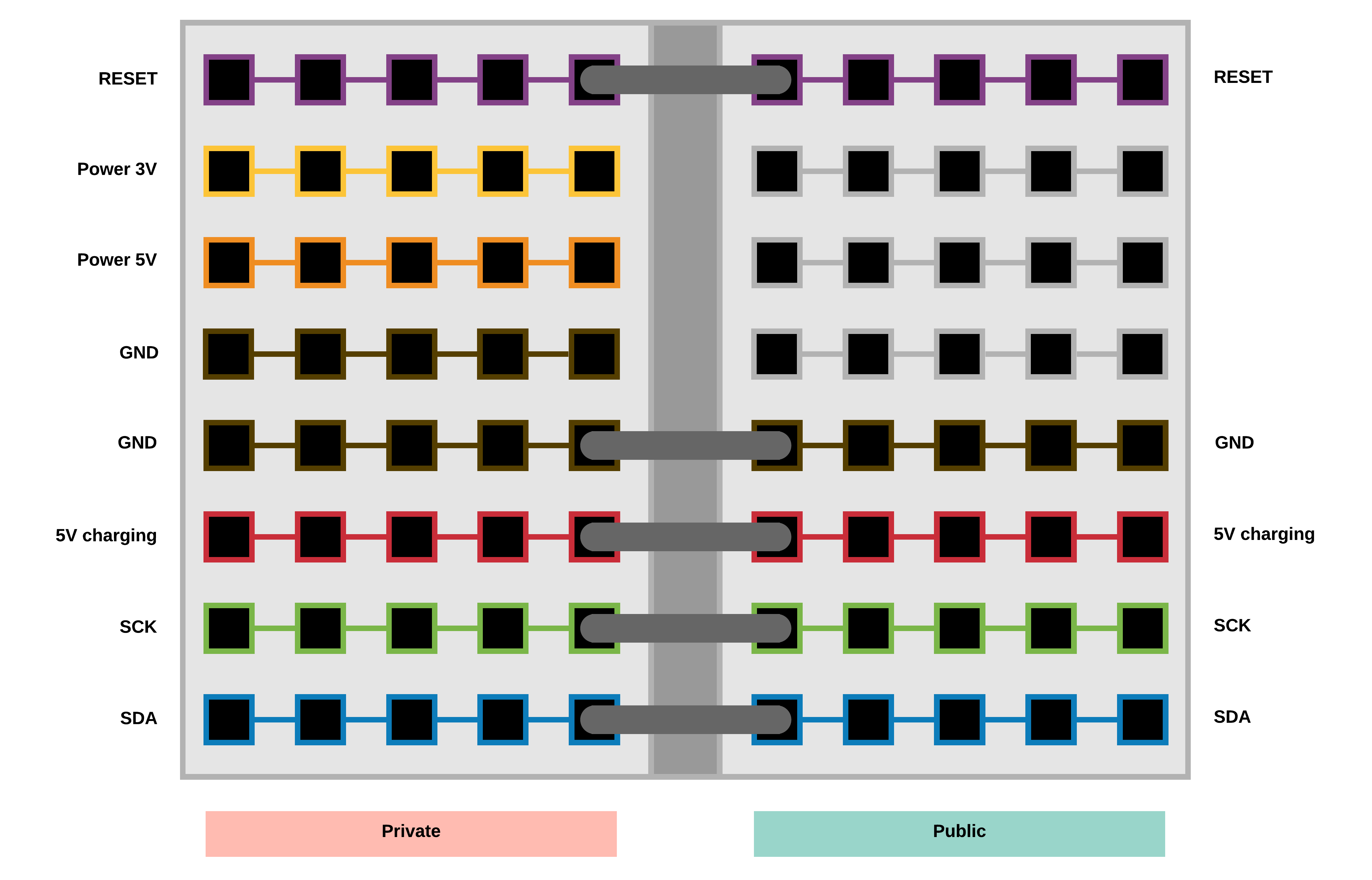

Again, to avoid spending time on developing physical connectors, let's use best practices.

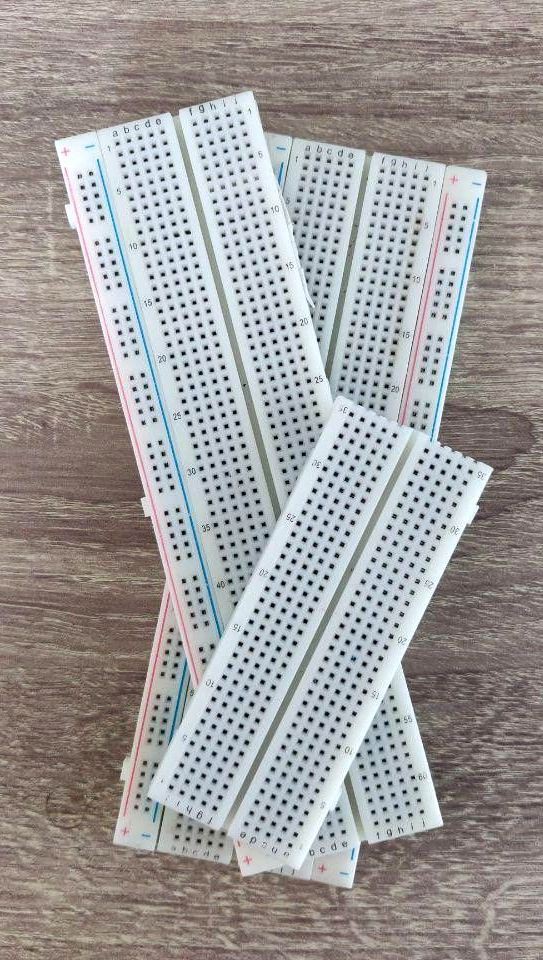

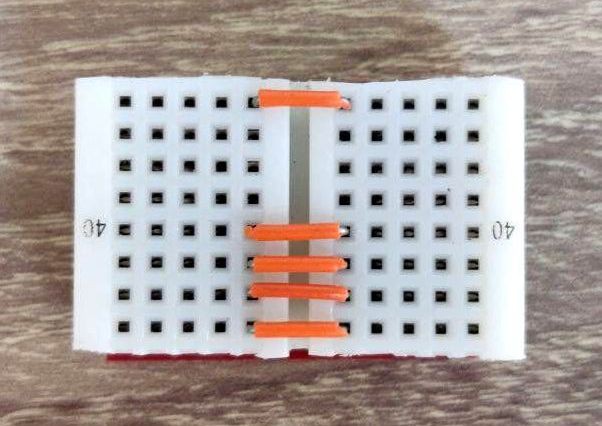

1. Take breadboards:

![]()

2. Think a little

![]()

3. Cut!

![]()

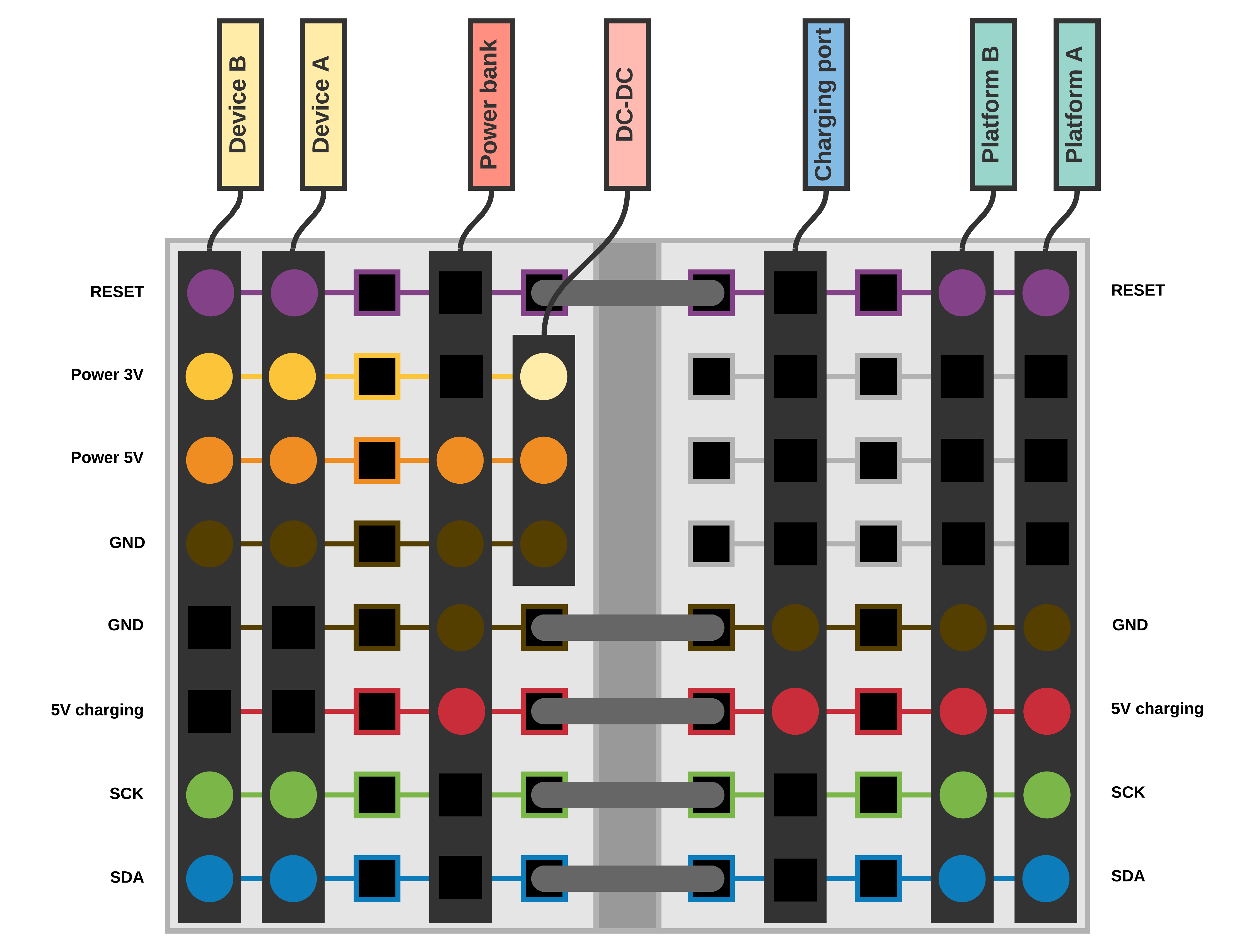

And here is what we can connect, and how:

![]()

As a result, two connected platforms look like this:

![]()

And no more blinking of the LCD backlight while the Raspberry is thinking or the motors are turning!

I'm waiting for a bunch of cables and switches, so next time I'll show the final version of Zakhar's power supply solution. Then, with a stable, plug-and-play set of platforms, we will continue to investigate the behavior of living things and how to implement it for the robot!

Stay tuned!

P.S. If you have any better solutions, let me know!

-

Turns: -45, +45, -90, +90 degrees

07/21/2020 at 17:07 • 0 comments

Updated code here: https://github.com/an-dr/zakhar_platform/pull/2

-

Assembled with the new moving platform! Turn 90 degrees

07/19/2020 at 18:43 • 0 comments

The moving platform gets an argument of 0x5a (90 degrees) from the Raspberry, then a command to turn right. If the argument is 0x00, the command is executed for 100 ms, and then the platform stops.
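The argument-then-command order can be illustrated with a short Python sketch. The command code `CMD_TURN_RIGHT` below is a made-up value, not the one used by the platform firmware, and the helper names and the single-byte argument are assumptions too. Only the 0x5a argument, the ordering, and the 100 ms behavior for 0x00 come from the description above.

```python
CMD_TURN_RIGHT = 0x72  # hypothetical command code, not taken from the firmware
TIMED_RUN_MS = 100     # from the text: with argument 0x00 the command runs 100 ms


def turn_right_message(angle_deg):
    """Return the bytes in sending order: the argument first, then the command."""
    # Assumption: the argument is a single unsigned byte.
    if not 0 <= angle_deg <= 0xFF:
        raise ValueError("angle must fit in one byte")
    return bytes([angle_deg, CMD_TURN_RIGHT])


def describe(argument):
    """Explain how the platform is expected to treat the argument byte."""
    if argument == 0x00:
        return f"run for {TIMED_RUN_MS} ms, then stop"
    return f"turn by {argument} degrees"


msg = turn_right_message(90)
print(hex(msg[0]))        # 0x5a
print(describe(msg[0]))   # turn by 90 degrees
print(describe(0x00))     # run for 100 ms, then stop
```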

-

New ESP32-based platform testing: Angles!

07/11/2020 at 18:20 • 0 comments

Works:

- GY-91 through I2C: gyroscope + accelerometer

- Serial for external control

- I2C slave for external control

- Wireless connection - Bluetooth serial port

- Motors control

- PWM for motors - three speeds

- RPM encoders

- Position data handling - calibration, filtering, conversion to angles

To-do:

- Code refactoring and configuration with menuconfig

Work in progress here: Feature/esp32 by an-dr · Pull Request #1 · an-dr/zakhar_platform

Andrei Gramakov
