-

End of line

05/24/2021 at 19:00

The research in this project is completed.

Please go to the new project instead.

-

Native VSYNC event

04/11/2021 at 21:26

Apparently there is a way to tie a function to the hardware VSYNC event. I found it in a closed issue for Raspberry Pi userland: https://github.com/raspberrypi/userland/issues/218

    #include <stdio.h>
    #include <stdlib.h>
    #include <stdarg.h>
    #include <time.h>
    #include <assert.h>
    #include <unistd.h>
    #include <sys/time.h>

    #include "bcm_host.h"

    static DISPMANX_DISPLAY_HANDLE_T display;
    unsigned long lasttime = 0;

    void vsync(DISPMANX_UPDATE_HANDLE_T u, void* arg) {
        struct timeval tv;
        gettimeofday(&tv, NULL);
        unsigned long microseconds = (tv.tv_sec*1000000)+tv.tv_usec;
        printf("%lu\tsync %lu\n", microseconds,microseconds-lasttime);
        lasttime = microseconds;
    }

    int main(void) {
        bcm_host_init();
        display = vc_dispmanx_display_open( 0 );
        vc_dispmanx_vsync_callback(display, vsync, NULL);
        sleep(1);
        vc_dispmanx_vsync_callback(display, NULL, NULL); // disable callback
        vc_dispmanx_display_close( display );
        return 0;
    }

To compile it you need to add the include and library paths under /opt/vc and link with libbcm_host.so, like this:

    gcc test.c -o test -I/opt/vc/include -L/opt/vc/lib -lbcm_host

I have copied this code into my repository: https://github.com/ytmytm/rpi-lightpen/blob/master/vsync-rpi.c

That issue is closed and this snippet works for me. The reported interval between callbacks is about 20000us, as expected for a 50Hz display, but it is not stable at all.

There is a lot of jitter because the timestamp is taken in userspace rather than as early as possible in the kernel. Even if I tried to hook my routine somewhere in the kernel, I don't know how much time is needed to service these events. The callback is driven by some kind of message queue between the CPU and the GPU. Unfortunately it's not as simple as a direct IRQ.

So it's a good way to wait until VSYNC but it's not precise enough for lightpen needs.

-

Software stack cleanup

07/05/2020 at 12:28

I have reorganized the code and pushed everything to GitHub.

There are only three source files:

- rpi-lightpen.c - a Linux kernel driver that timestamps IRQs from VSYNC and from the light pen sensor. It takes the time offset between those two events in microseconds and divides it by 64 (the time needed to scan one PAL line). The quotient is reported as the Y coordinate (row) and the remainder as the X coordinate (column). The third value is the light pen button state. All of this can be read from the /dev/lightpen0 device.

- lp-int.py - a Python 3 pygame program that starts with calibration and then just shows a text that follows the light pen position

- lp-int-uinput.py - a Python 3 pygame program that starts with calibration and then converts the numbers from /dev/lightpen0 into uinput events, as if they came from a touchpad/touchscreen/tablet with absolute coordinates
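The row/column arithmetic described for rpi-lightpen.c can be sketched in a few lines of Python. This is only an illustration of the division, not the driver's actual code; the function name `beam_position` and its parameters are made up for this sketch, and the result is a raw position before any calibration.

```python
PAL_LINE_US = 64  # time to scan one PAL line, in microseconds


def beam_position(vsync_us, pen_us, line_us=PAL_LINE_US):
    """Return (row, column) for a light pen hit.

    vsync_us and pen_us are timestamps in microseconds.
    Both names are hypothetical, chosen for this sketch.
    """
    offset = pen_us - vsync_us
    if offset < 0:
        raise ValueError("light pen event precedes VSYNC")
    # Quotient is the scanline, remainder is the position within it.
    row, column = divmod(offset, line_us)
    return row, column


print(beam_position(1_000_000, 1_006_420))  # (100, 20)
```

With a 64us line the column can only take values from 0 to 63, so the horizontal resolution is limited by how accurately the two interrupts are timestamped.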

I tried to use lp-int-uinput.py with the classic NES game Duck Hunt, but the crosshair shakes uncontrollably. This is very strange, considering that lp-int.py uses exactly the same code and the position of the text on the screen is rather stable.

-

Shaky software stack

07/02/2020 at 09:45

The software side of this project is a hack on a hack right now.

At the very bottom we have the gpiots kernel module to timestamp interrupts from GPIO.

One layer above there is a C program that I wrote that does the timing calculation and outputs the x/y/button status to the standard output.

One layer above there is a Python (Pygame) program that I wrote that takes this information from stdin and actually does something: a test/demo to show where it thinks lightpen is pointing at.

That wasn't enough so I wrote another Python program that does the calibration and then passes the x/y/button states back into Linux kernel through uinput interface.

So we've come full circle - some information is decoded from GPIO and timing inside the kernel, then goes through two userland processes just to be passed back into the kernel uinput subsystem to be made available to any software.

I'm so proud of myself and modern duct tape software engineering.

The clean solution will of course be to fork the gpiots module, do the calculations there and pass events without leaving kernel space. This new module needs an interface through which a user program can provide calibration info.

I have included everything done so far into "lp-20200702.tar.gz" archive that you can find in the "Files" section.

The way it's supposed to work is:

- go to the gpiots/ folder and build the module, then as root run the insmod.sh script to copy the module into the proper place and load it into the kernel with the GPIO 17 and 23 options

- do "make" to compile lp-gpiots.c program

- pip install uinput library for Python

Once you have it, in a shell pipe lp-gpiots output into lp-demo.py to see a simple demo on the framebuffer:

    ./lp-gpiots | python3 -u lp-demo.py

Or you can pass the events to uinput:

    ./lp-gpiots | python3 -u lp-uinput.py

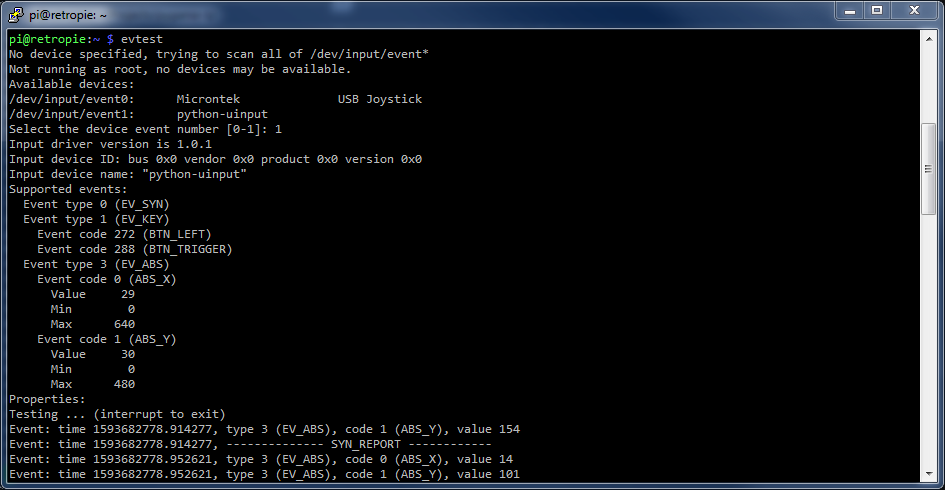

These can be viewed using evtest program:

My calibration is way off, but this setup already makes it possible to play NES light gun games, like Duck Hunt.

-

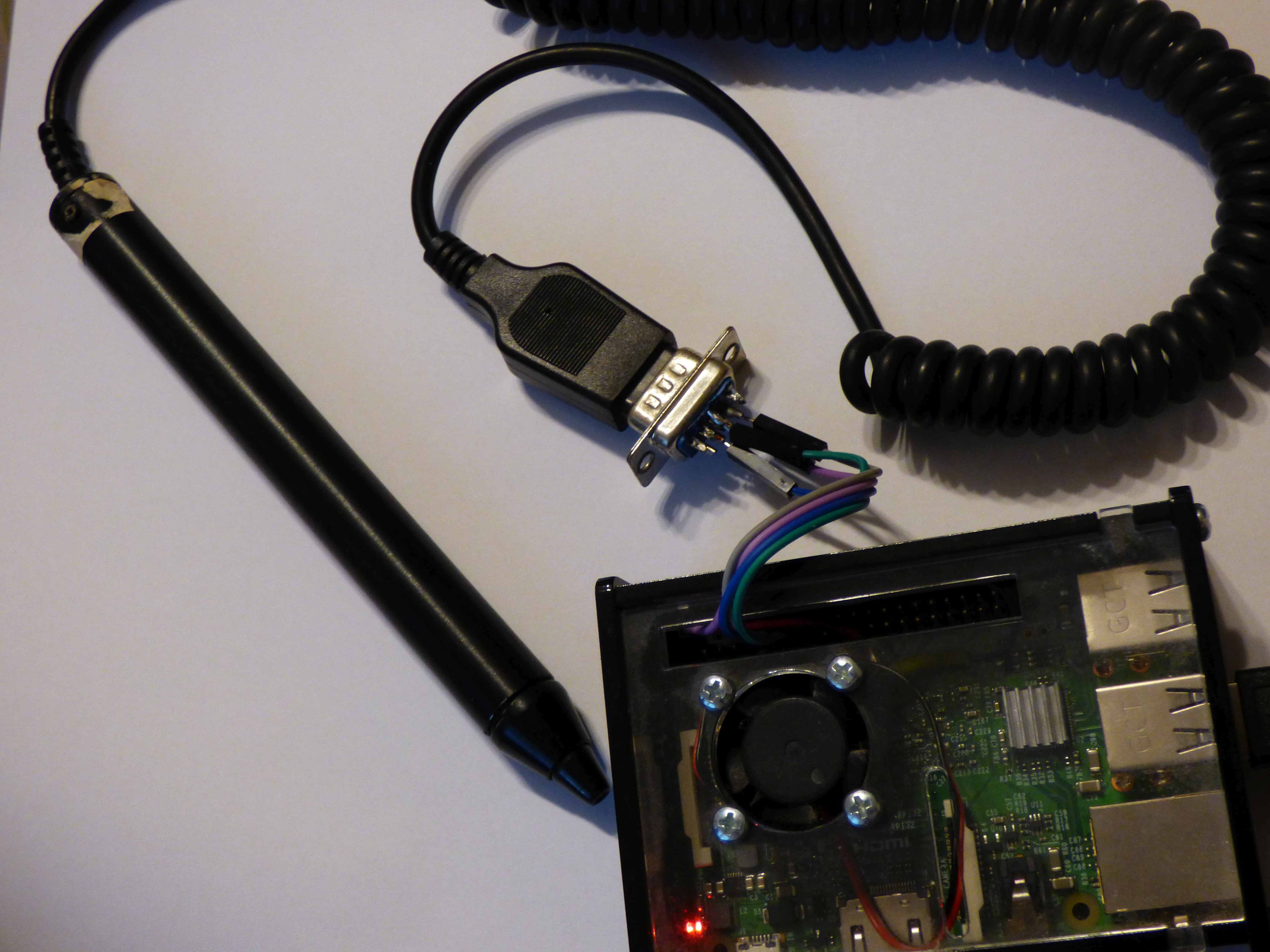

The prototype, it works!

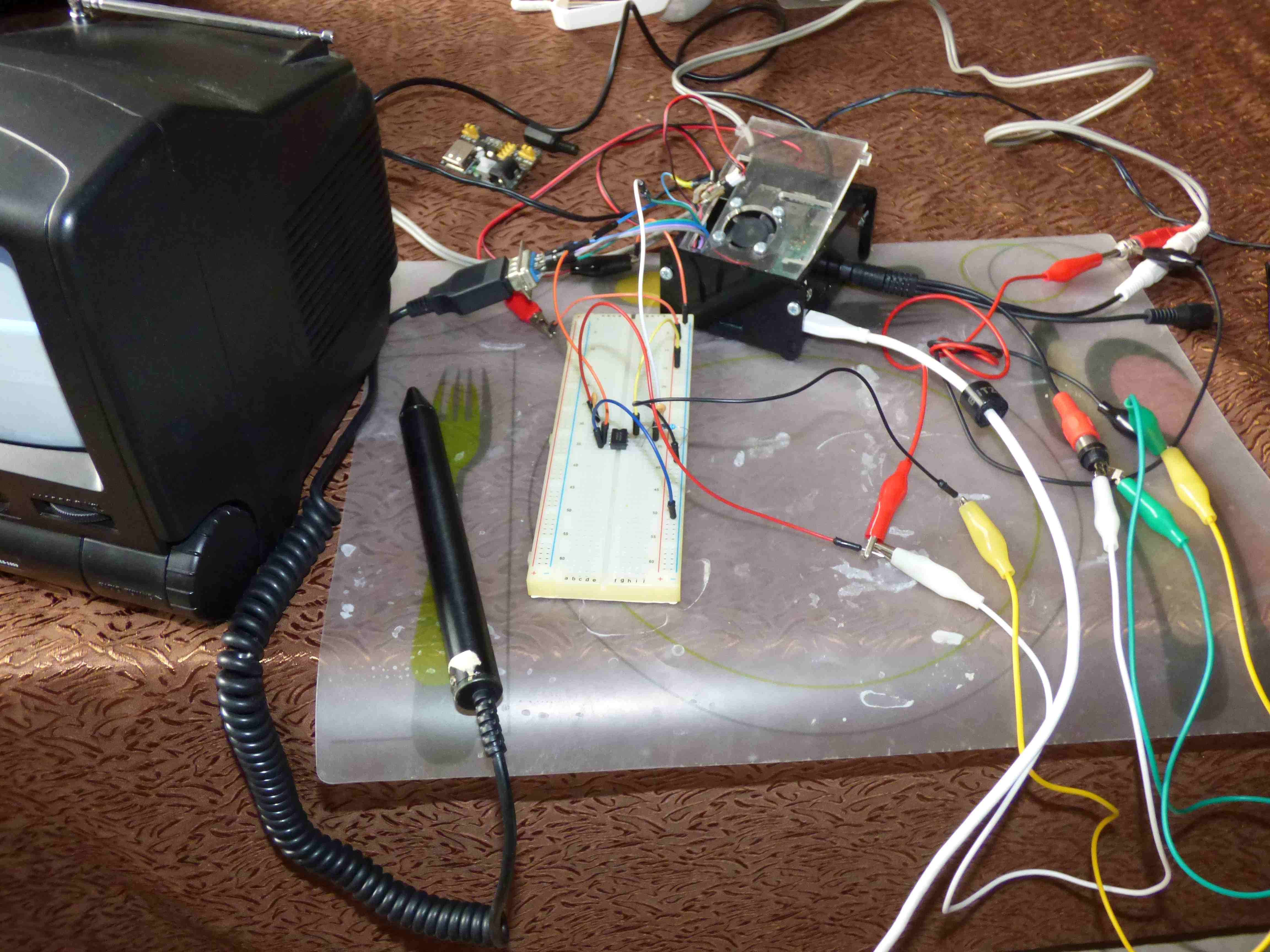

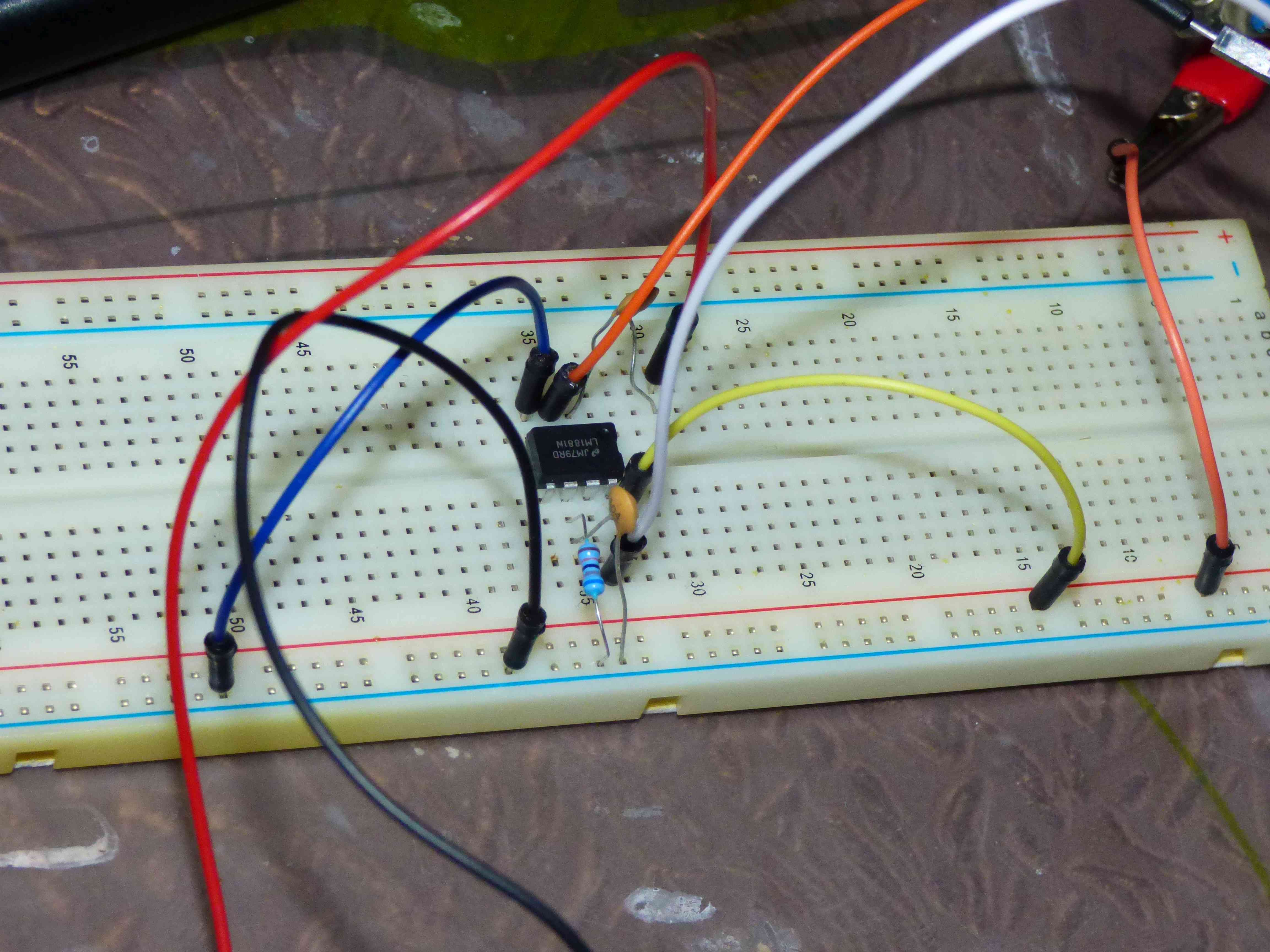

07/02/2020 at 08:46

The LM1881 chips have arrived, so I was able to continue with this project.

I started by wiring the LM1881 directly to the Raspberry Pi, and it turned out that with gpiots I get quite stable timings, so I think I will abandon the idea of a separate AVR microcontroller for preprocessing.

This is what the prototype looks like:


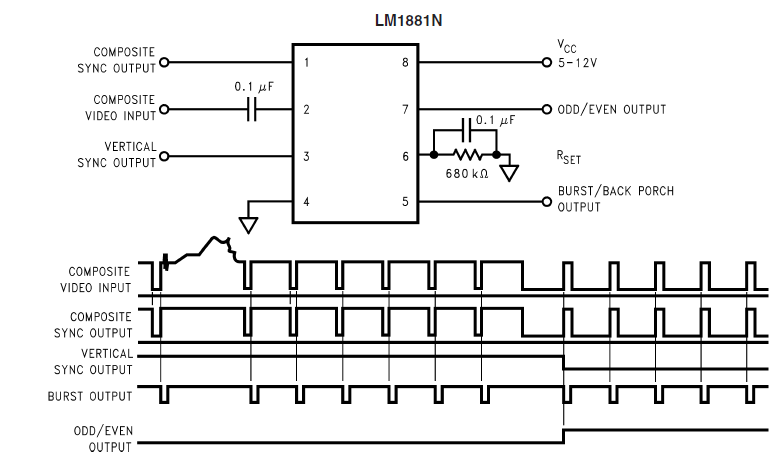

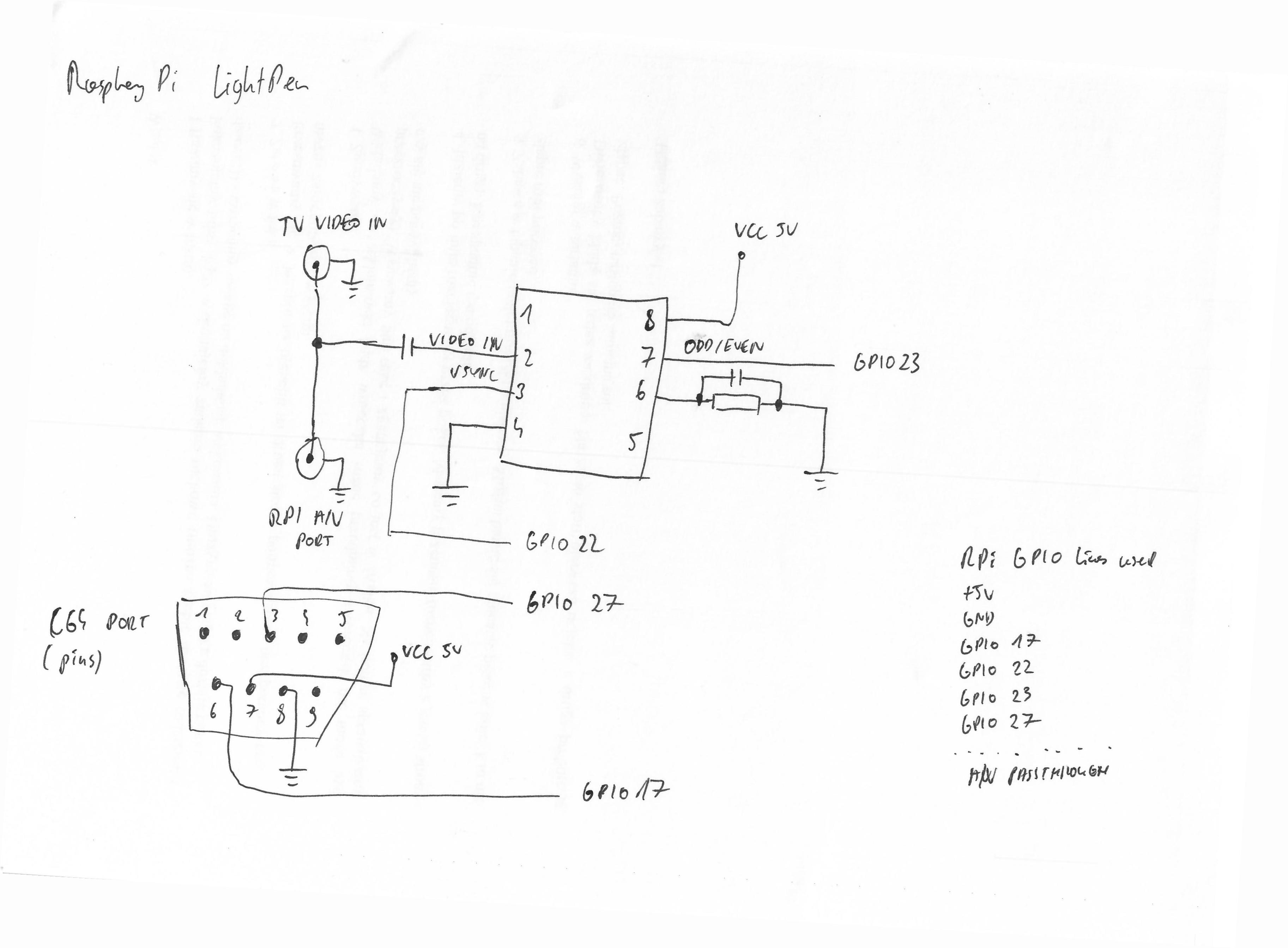

I have used the circuit taken directly from the datasheet:

There is one 680K resistor and two 0.1uF capacitors. I also needed one RCA plug and one socket to tie into the Raspberry Pi video signal.


Here is the complete circuit diagram prepared in PaperCAD:


As mentioned earlier, I'm using GPIO27 for the light pen button and GPIO17 for the phototransistor.

The new connections are VSYNC (pin 3) from the LM1881, which goes to GPIO22, and ODD/EVEN (pin 7), which goes to GPIO23.

We also have a passthrough (plug and socket) to tie into the composite video signal coming out of the Raspberry Pi's A/V port.

We need to enable timestamping of interrupts on GPIO22 and GPIO17:

    sudo modprobe gpiots gpios=17,22

We want to calculate the time difference between the interrupt on GPIO22 (VSYNC) and the interrupt on GPIO17 (light pen phototransistor), which fires when the electron beam scanning the screen reaches the position we're pointing at.
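The pairing of the two interrupt streams can be sketched as below. This is a minimal Python illustration, assuming the timestamps have already been read into two sorted lists of microsecond values; how they are read from the gpiots devices is not shown, and the function name `offsets_since_vsync` is made up for this sketch.

```python
from bisect import bisect_right


def offsets_since_vsync(vsync_ts, pen_ts):
    """For each pen timestamp, return the time elapsed since the latest VSYNC.

    Both lists hold microsecond timestamps sorted in ascending order.
    Pen events that arrive before the first VSYNC are skipped.
    """
    offsets = []
    for t in pen_ts:
        # Index of the first VSYNC strictly later than t.
        i = bisect_right(vsync_ts, t)
        if i == 0:
            continue  # no VSYNC seen yet, nothing to measure against
        offsets.append(t - vsync_ts[i - 1])
    return offsets


# Made-up sample values: one VSYNC every 20 ms, pen held roughly still.
vsync = [0, 20_000, 40_000]
pen = [6_420, 26_425, 46_418]
print(offsets_since_vsync(vsync, pen))  # [6420, 6425, 6418]
```

If the pen is held still, consecutive offsets should stay close to each other, which makes this a quick check of timing stability.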

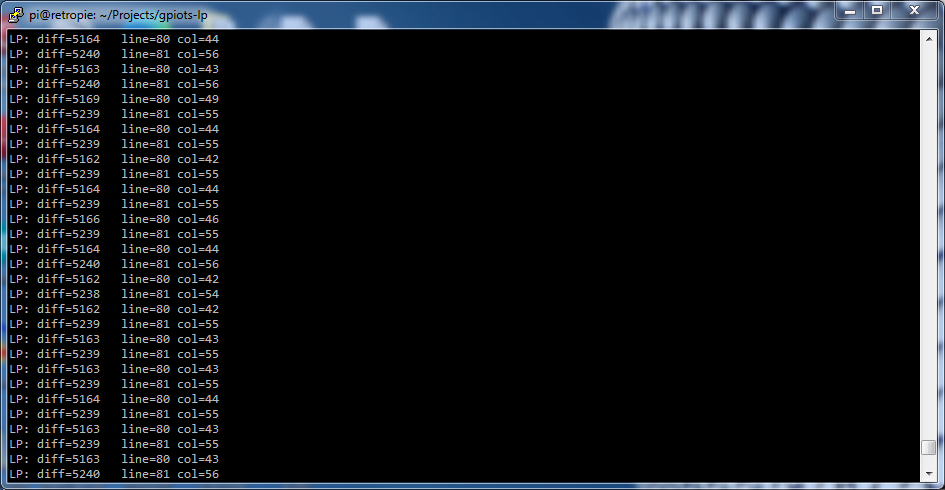

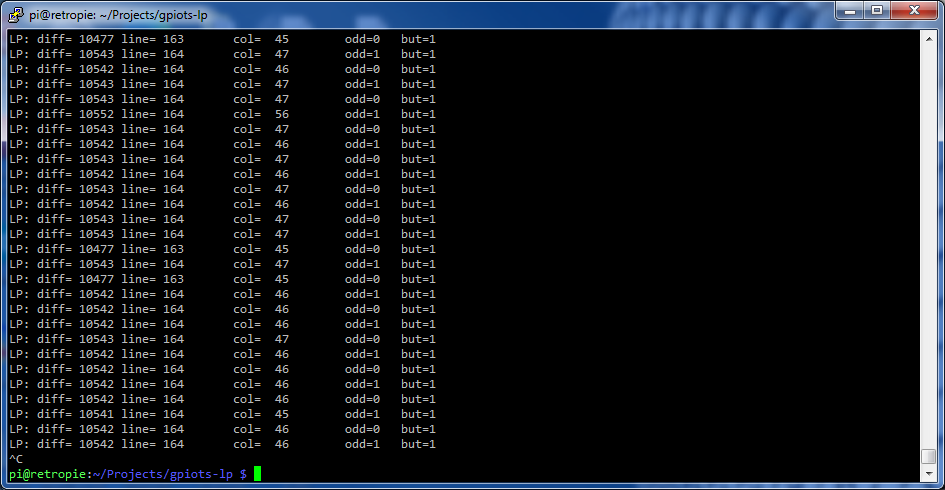

Here is what I got:


Can you see the issue? The timings appear stable but there is a difference between every second interrupt.

Of course I forgot that the image is interlaced (even with all the flickering going on). The C64 outputs non-interlaced image so it just didn't occur to me.

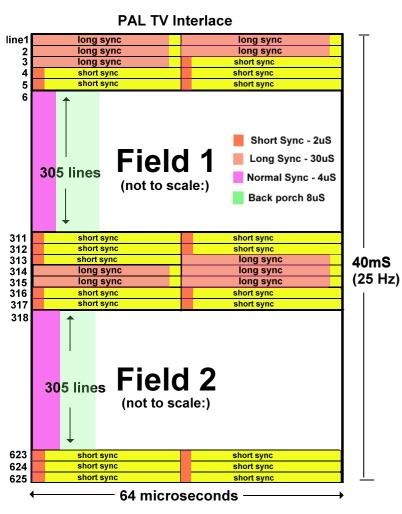

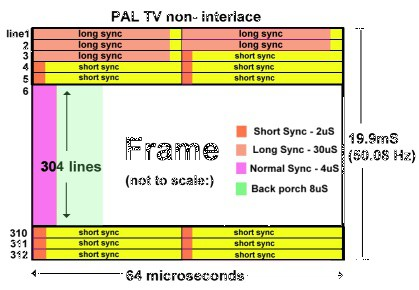

This is the proper timing diagram:


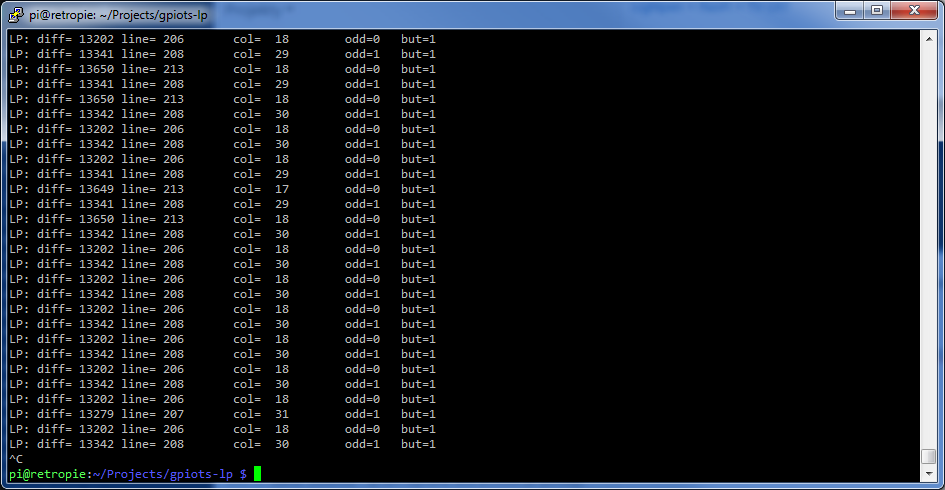

I have used the information from the ODD/EVEN line of the LM1881.


I tried adding a 77us offset to get a result like this:


In the end it wasn't stable over the whole screen area, so I decided to use only the even or only the odd fields.

This reduces the problem to 25 readings per second, and I can get a resolution of about 300 lines at most. That's plenty for a light pen.
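A short sketch of the field selection, with the arithmetic behind the "about 300 lines" figure. The layout of `readings` as `(offset_us, is_odd)` tuples is an assumption for this example, with `is_odd` standing for the sampled state of the LM1881 ODD/EVEN output; the sample values are made up.

```python
FIELD_US = 20_000  # one PAL field at 50 Hz, in microseconds
LINE_US = 64       # one PAL scanline, in microseconds


def keep_one_field(readings, want_odd=True):
    """Keep only the offsets measured during odd (or even) fields.

    readings is a list of (offset_us, is_odd) tuples; this layout is
    hypothetical, chosen for the sketch.
    """
    return [offset for offset, is_odd in readings if is_odd == want_odd]


lines_per_field = FIELD_US / LINE_US
print(lines_per_field)  # 312.5

readings = [(6420, True), (6452, False), (6421, True), (6453, False)]
print(keep_one_field(readings))  # [6420, 6421]
```

One field spans 312.5 line periods, and part of that is blanking, so a single field cannot give more than roughly 300 distinct rows.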

-

No VSYNC? No problem.

06/07/2020 at 17:20

(I'm waiting for delivery of the LM1881 chips, so this is all just speculation.)

Plan B

Since I don't know how to use the native VSYNC IRQ of the Raspberry Pi on Linux, and no timing is really guaranteed, I obviously need extra hardware for the light pen support.

My plan is to use an Arduino Pro Mini (an ATTINY would do, but I don't have any) with a specialized LM1881 chip to detect VSYNC in the composite signal delivered by the Raspberry Pi.

The LM1881 is a video sync separator. It is able to decode a composite video signal and provide sync signals. The datasheet is here.

The Arduino should:

- Start internal timer

- Reset timer on VSYNC interrupt (just before that store internal timer value for calibration)

- Wait for light pen sensor signal interrupt

- Read internal timer

Using the timer value between two consecutive VSYNCs and the time between the last VSYNC and the light sensor event, we should be able (after some calibration) to determine the X/Y coordinates of the electron beam with an accuracy at least as good as on the C64/128.
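Since this plan is speculation, the following is only a sketch of the calculation, written in Python rather than Arduino code. It scales the pen reading by the measured frame period, so the timer frequency cancels out. The function name, its parameters, and the sample tick counts are all hypothetical.

```python
LINES_PER_FIELD = 312.5  # 20 ms / 64 us for PAL


def position_from_timers(frame_ticks, pen_ticks, lines=LINES_PER_FIELD):
    """Convert two timer readings into (line, fraction_of_line).

    frame_ticks: timer count between two consecutive VSYNCs.
    pen_ticks:   timer count from the last VSYNC to the light sensor event.
    Both are in the same arbitrary timer units.
    """
    if frame_ticks <= 0 or not 0 <= pen_ticks < frame_ticks:
        raise ValueError("timer readings out of range")
    # Multiply before dividing to limit floating point rounding.
    position = pen_ticks * lines / frame_ticks
    line = int(position)
    return line, position - line


# Made-up readings, e.g. from a timer that counts 40000 ticks per field.
print(position_from_timers(40_000, 12_840))
```

The fractional part gives the horizontal position within the line; mapping both values to screen coordinates would still need the calibration step mentioned above.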

There is plenty of time for calculations, as the light pen sensor event happens only 50 (or 60) times per second.

The Arduino would communicate with the Raspberry Pi over I2C, providing timer values or calculated coordinates on demand.

There are some Arduino projects with LM1881 already:

https://www.open-electronics.org/a-video-overlay-shield-for-arduino/

-

Wait, VSYNC

06/07/2020 at 17:11

If you have had a look at the code attached to the previous log entry, you will have seen that the way I try to determine the length of one frame is quite convoluted.

This is all because there is no easy access to the VSYNC interrupt on the Raspberry Pi under Linux.

All I found was a discussion indicating that the support is there in the firmware:

https://github.com/raspberrypi/firmware/issues/67

and someone was able to use it when running bare metal software, not Linux:

https://github.com/rsta2/circle/issues/50

But I don't know how to hook and timestamp this IRQ in the Linux kernel.

If I could do it, I would simply take the difference between the timestamps of the light trigger IRQ and the VSYNC IRQ and calculate the beam position from that.

If someone knows how to do it, please let me know.

Time for plan B.

-

Timing is everything

06/07/2020 at 17:05

The operation of the light pen depends on accurate time measurements. On a C64/128 this is done in hardware - the VIC chip latches the X/Y position when the light sensor is triggered.

The plan

I didn't have high hopes that a Raspberry Pi running Linux would be able to measure time with the required accuracy. These are the timing figures for PAL:

The refresh rate is 50Hz, so we have 1/50s=20ms for every frame. Every line takes 64 microseconds. I would be very happy with being able to detect at least 4 regions on the line, not even aiming for pixel-level resolution.

This means that I need to be able to accurately measure time since a fixed time 0 (e.g. middle of the screen, when I release the button after calibration) with known time per frame. I need better than 64us resolution to determine lines and 16us resolution to determine 4 regions in every line.

This is not possible from userspace. Fortunately I found a very neat project on GitHub where someone else had the same need.

Enter GPIOTS

This is a kernel module that creates /dev/gpiotsXX devices and provides timestamps for interrupts coming from selected GPIO pins.

Initially I tested it on another Raspberry Pi, which is connected to a GPS receiver with a PPS line. The numbers from gpio_test.c were promising:

    SSS MMM uuu
    428 966 912
    429 966 914
    430 966 912
    431 966 911

The PPS signal arrives every second, so the difference between consecutive timestamps should be exactly 1000000us. It's not exactly that, but it's close. After running for a long time I determined that the resolution was about 3us, so I should be able to detect not four fields per line but up to 16.
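The check on the PPS numbers can be reproduced with a few lines of Python. This sketch assumes the three columns are seconds, milliseconds and microseconds, which is how I read the SSS MMM uuu header; the function name `pps_deviations` is made up for this example.

```python
def pps_deviations(samples, expected_us=1_000_000):
    """Return how far each PPS interval is from expected_us, in microseconds.

    samples is a list of (seconds, millis, micros) tuples, matching the
    SSS MMM uuu columns (column meaning is an assumption of this sketch).
    """
    stamps = [s * 1_000_000 + m * 1_000 + u for s, m, u in samples]
    return [b - a - expected_us for a, b in zip(stamps, stamps[1:])]


samples = [(428, 966, 912), (429, 966, 914), (430, 966, 912), (431, 966, 911)]
print(pps_deviations(samples))  # [2, -2, -1]
```

For the four samples shown, every interval is within 2us of one second, which is consistent with the roughly 3us resolution seen over the longer run.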

Dreams shattered

Unfortunately it doesn't work like that on a real RPi connected to a real CRT. There is a lot going on and I'm still not sure whether I overlooked something obvious.

Well, it kind of works. After connecting the light pen and trying it out I was able to:

- detect the long delay (~19ms) between frames

- detect the short delay (~64us) between lines - the light pen has a large opening and will pick up the electron spot scanning neighbouring lines

...and that's about it. I tried to count a fixed amount of time since time_0 for every frame and to use the difference between that elapsed time and the time when the light sensor interrupt arrived to calculate the offset.

I got no consistency at all. The numbers were all over the place, and it was not possible to determine even the Y position with any accuracy. I was expecting drift or low accuracy, but not something that seems to be random.

You can find the source code for my play/test program attached.

This somewhat contradicts the GPS PPS test, and I'm not really sure why.

-

Light pen to Raspberry Pi's GPIO

06/07/2020 at 16:41

To start, let's try to connect the light pen directly to the Raspberry Pi's GPIO.

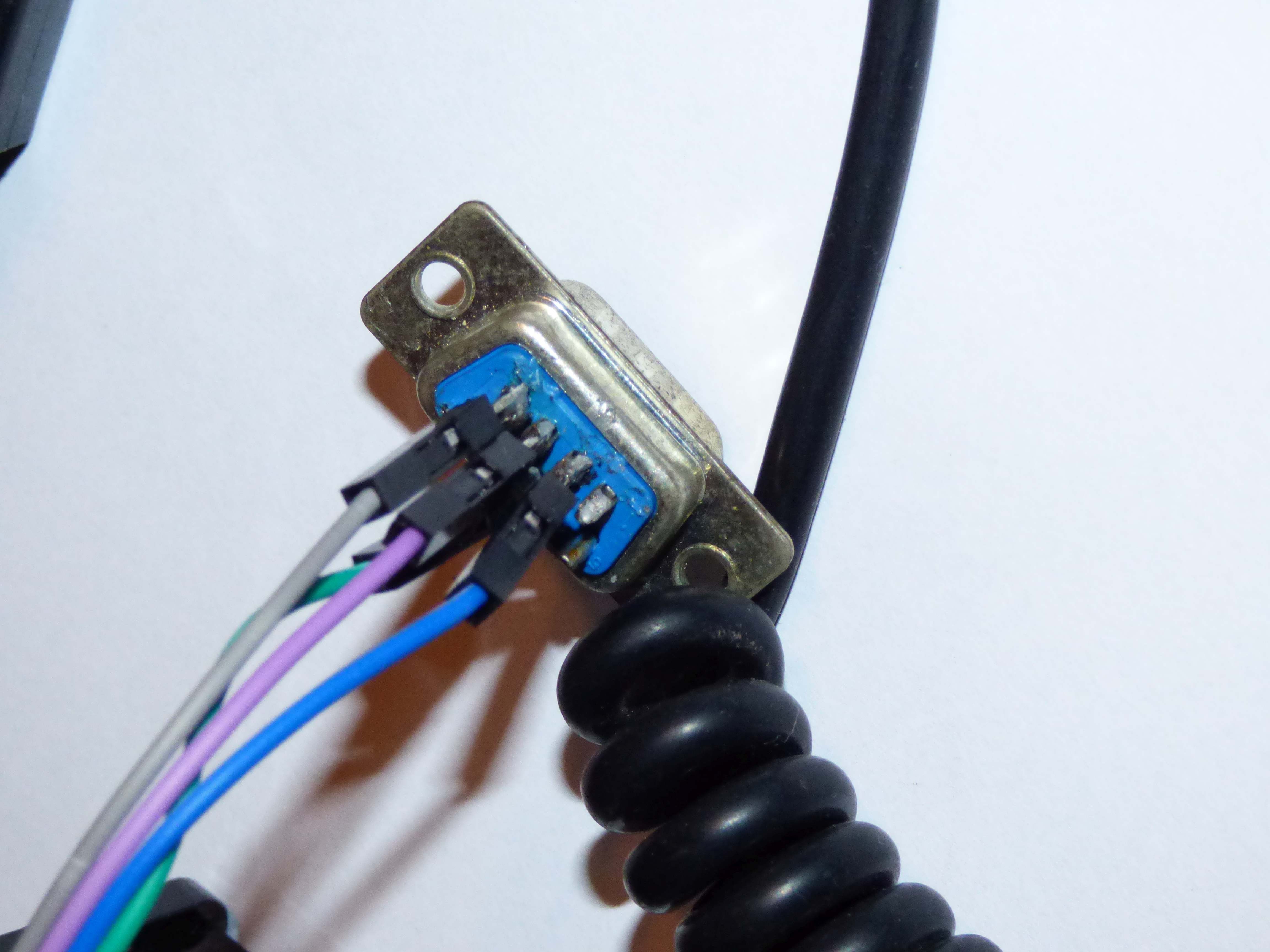

The plug of my light pen already had hints that we are only interested in four lines: GND, +5V, button and light detector.


Here is how I wired a simple adapter.

The power line goes to the +5V pin on the GPIO header, because there is a TTL chip inside the pen that requires +5V as its power supply.


I chose to connect the button line (pin 3 on the plug) to GPIO 27 and the light detector to GPIO 17.


-

Raspberry Pi setup for CRT

06/07/2020 at 16:31

I'm using a Raspberry Pi 3B. I will not go into the details of RetroPie installation and configuration. There are much better resources on that.

Here is what I use to make RetroPie display an image over composite video.

    # If your TV doesn't have H-HOLD potentiometer then position of the left
    # screen edge can be controlled with overscan_left setting.
    overscan_left=16
    #overscan_right=16
    #overscan_top=16
    #overscan_bottom=16
    # composite PAL
    sdtv_mode=2
    # I'm using black and white TV, disabling colorburst is supposed
    # to improve image quality on monochrome display,
    # but I don't see any real difference
    sdtv_disable_colourburst=1
    # possible aspect ratios are 1=4:3, 2=14:9, 3=16:9; obviously this is 4:3 standard
    sdtv_aspect=1

This way I get a 640x480 image with the glorious interlaced flicker of a 50Hz refresh rate.

Light pen support for RetroPie

Can a Raspberry Pi with CRT display support 8-bit era light pen?

Maciej Witkowiak
