-

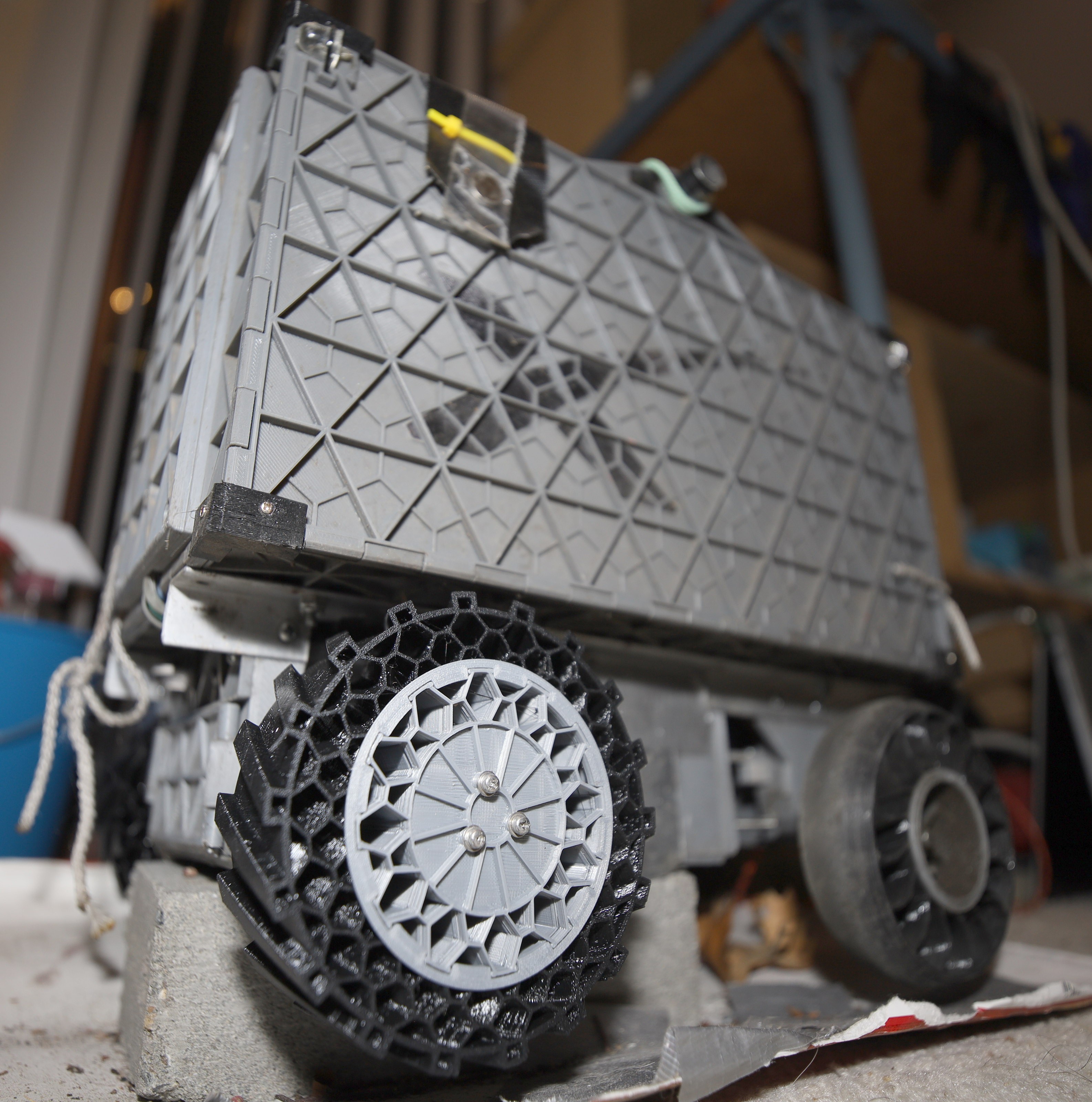

Mechanical improvements

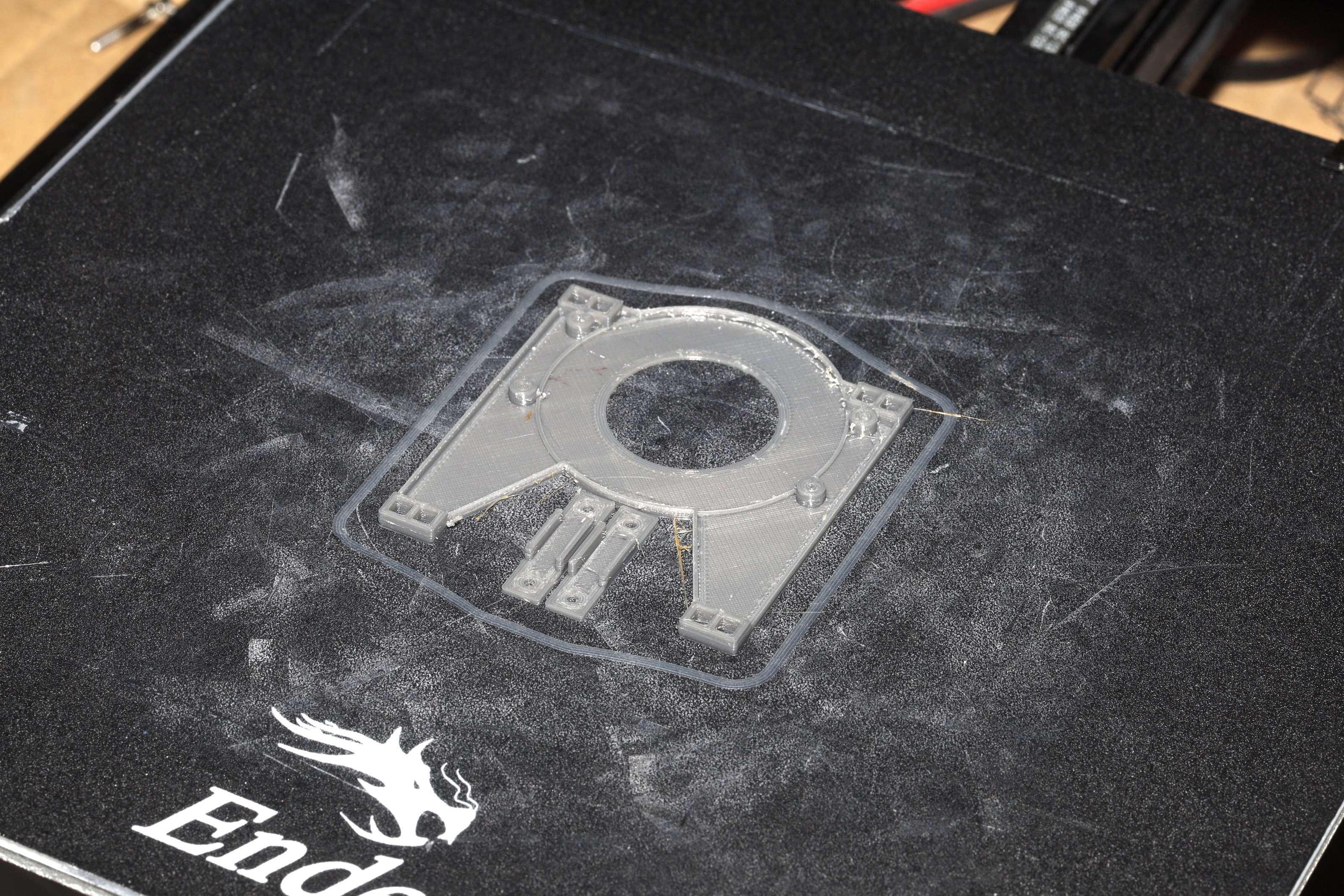

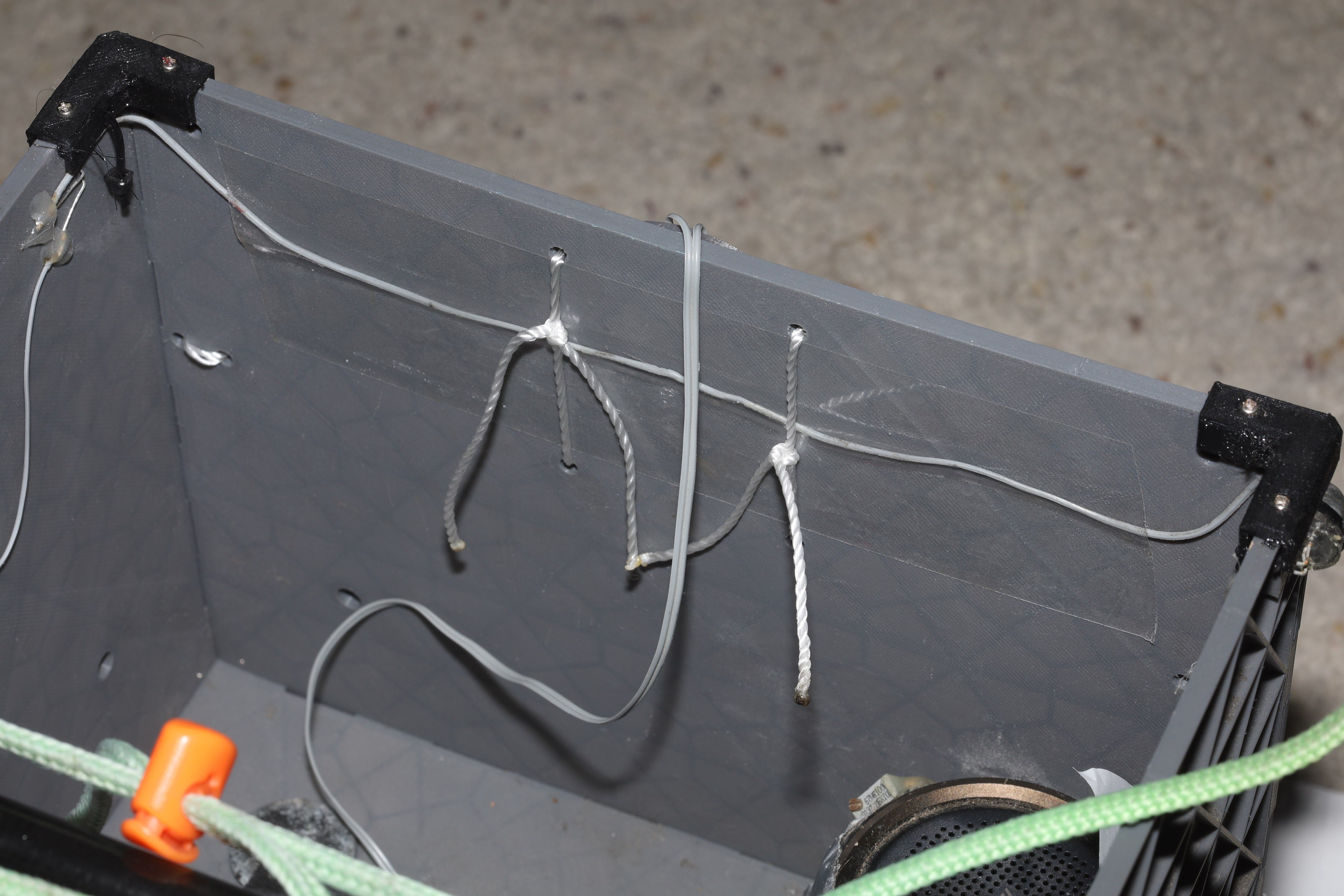

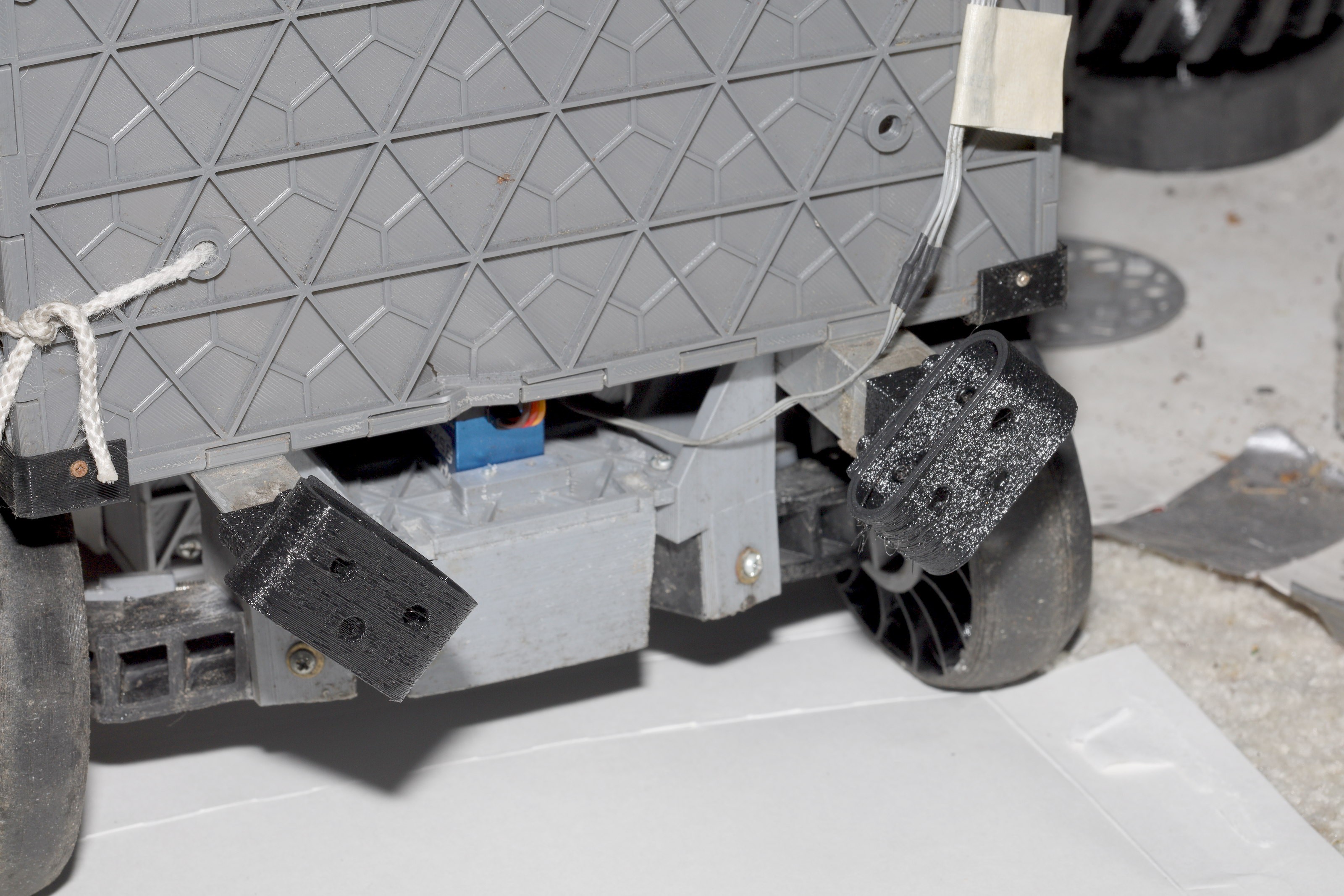

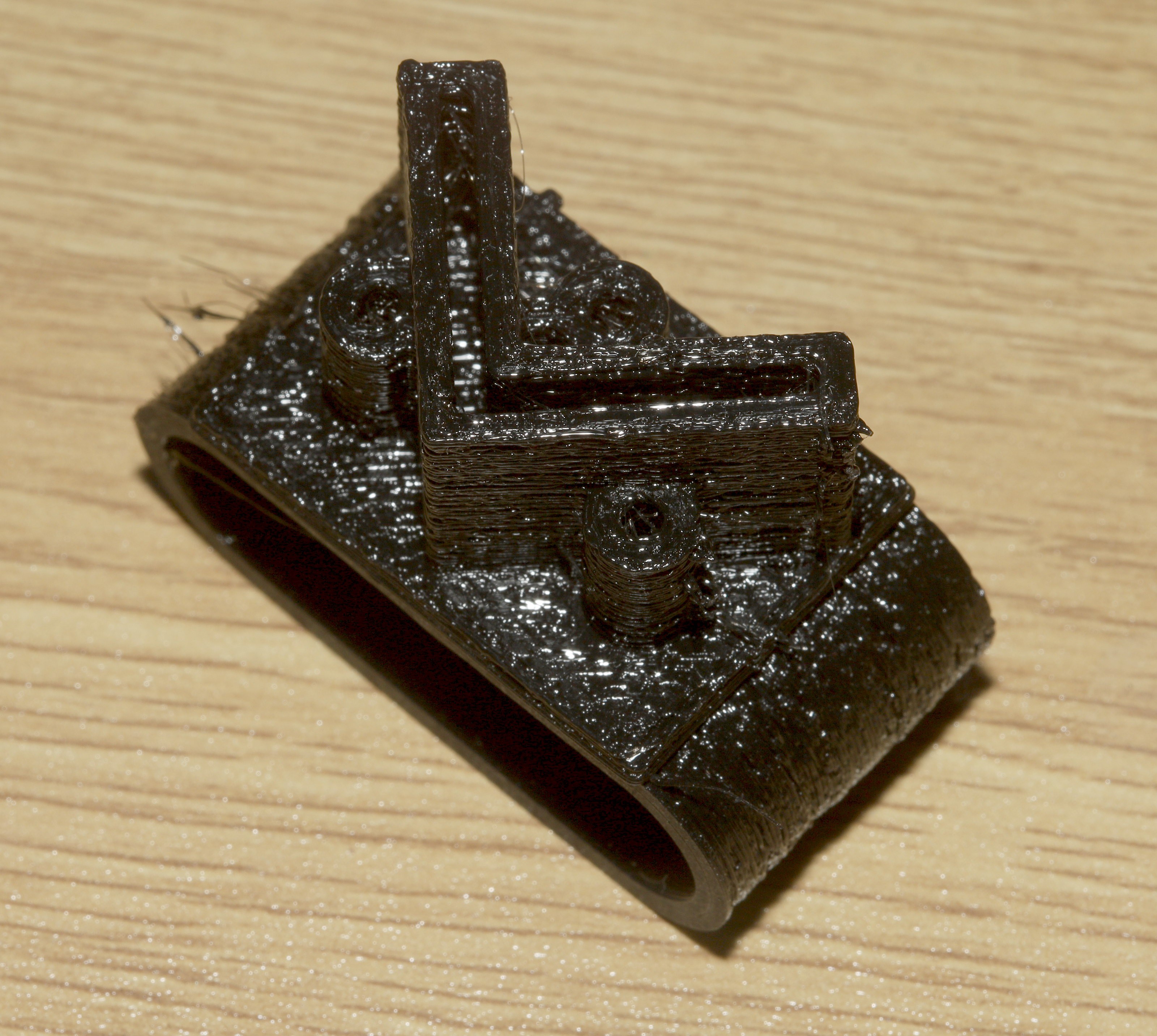

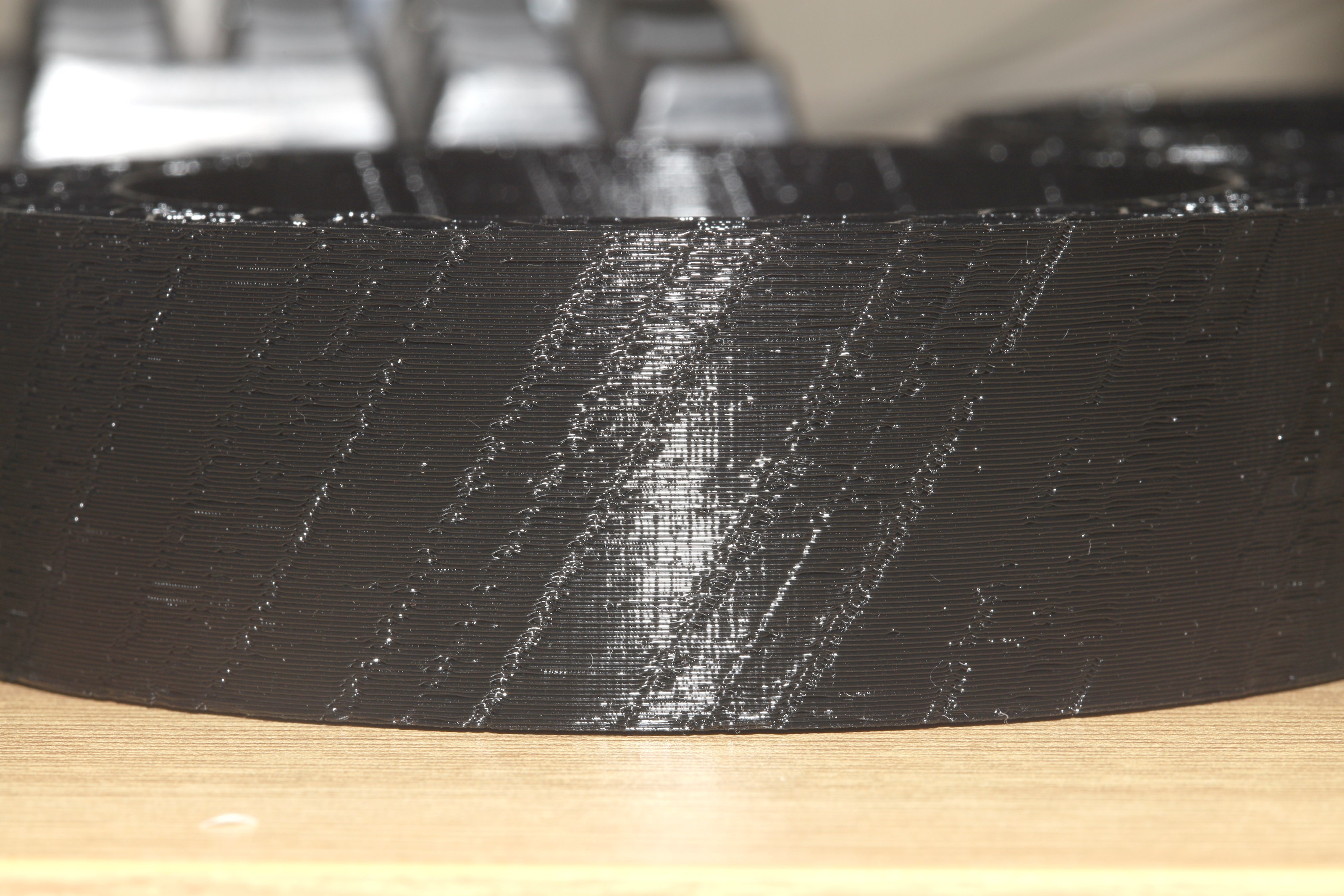

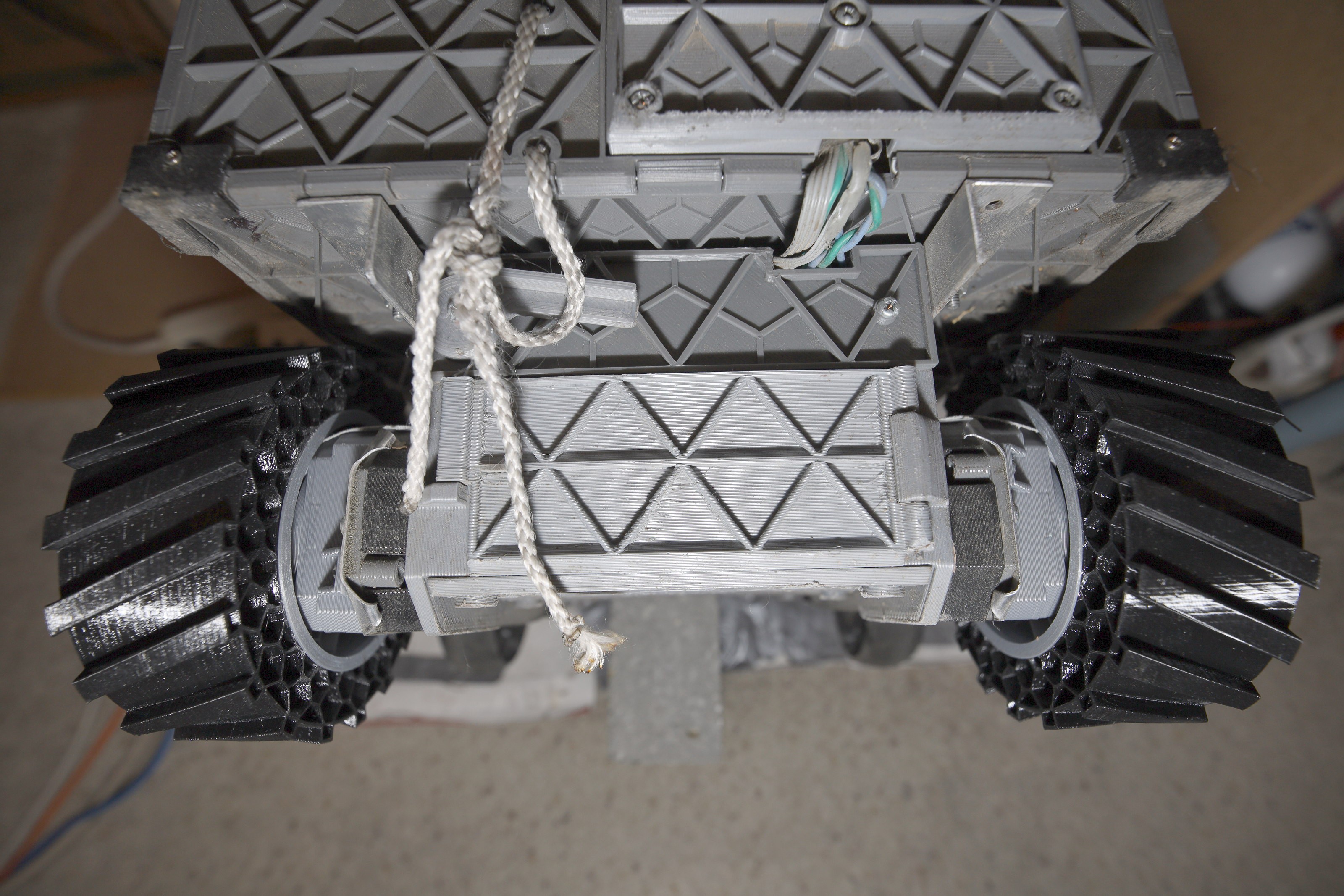

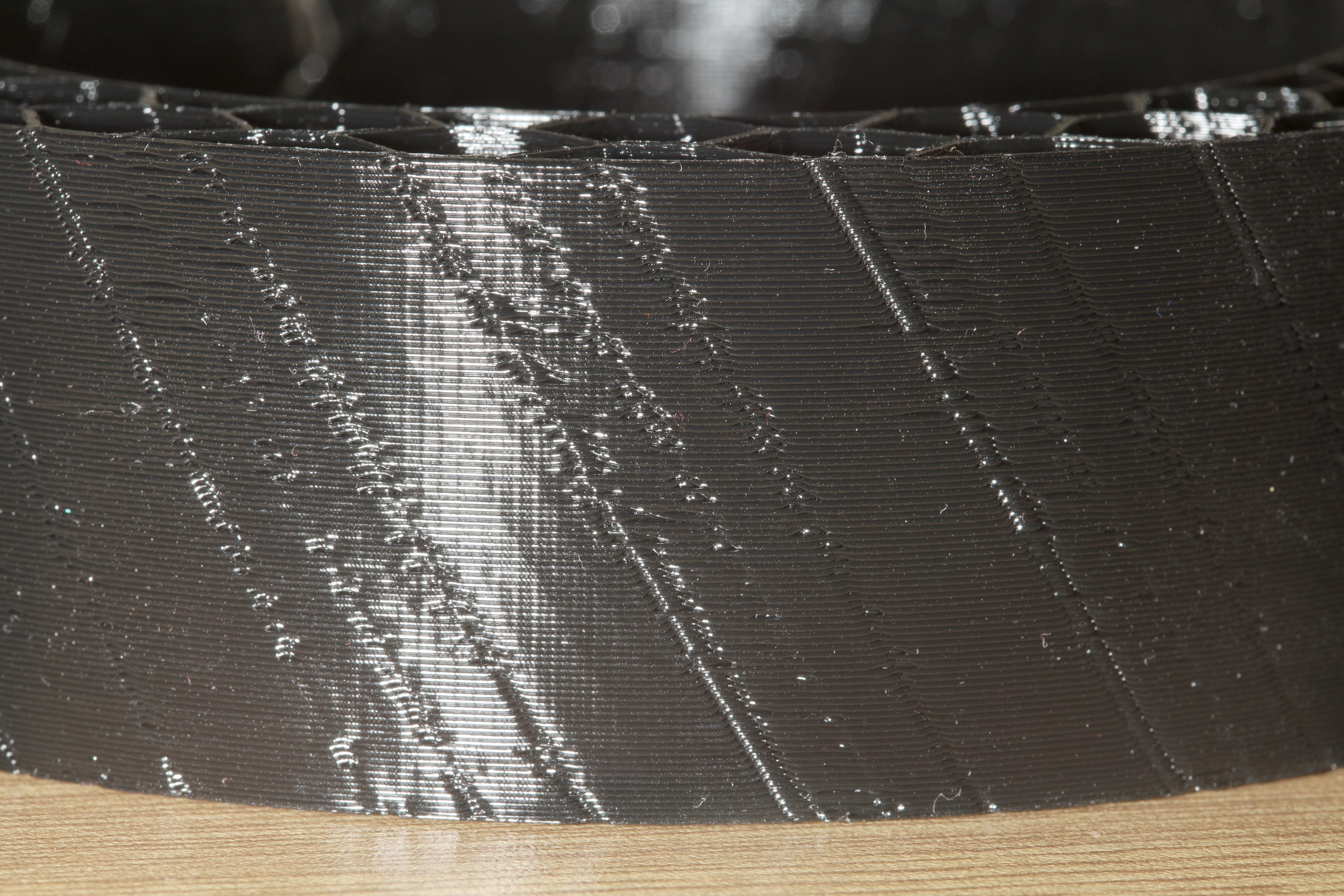

04/04/2022 at 18:46

The old bumpers ended up delaminating & their CA glue let go. The new bumpers use screws instead of glue & were printed at 250C instead of 220C to improve layer adhesion. The roll of filament was as hydrated as it gets.

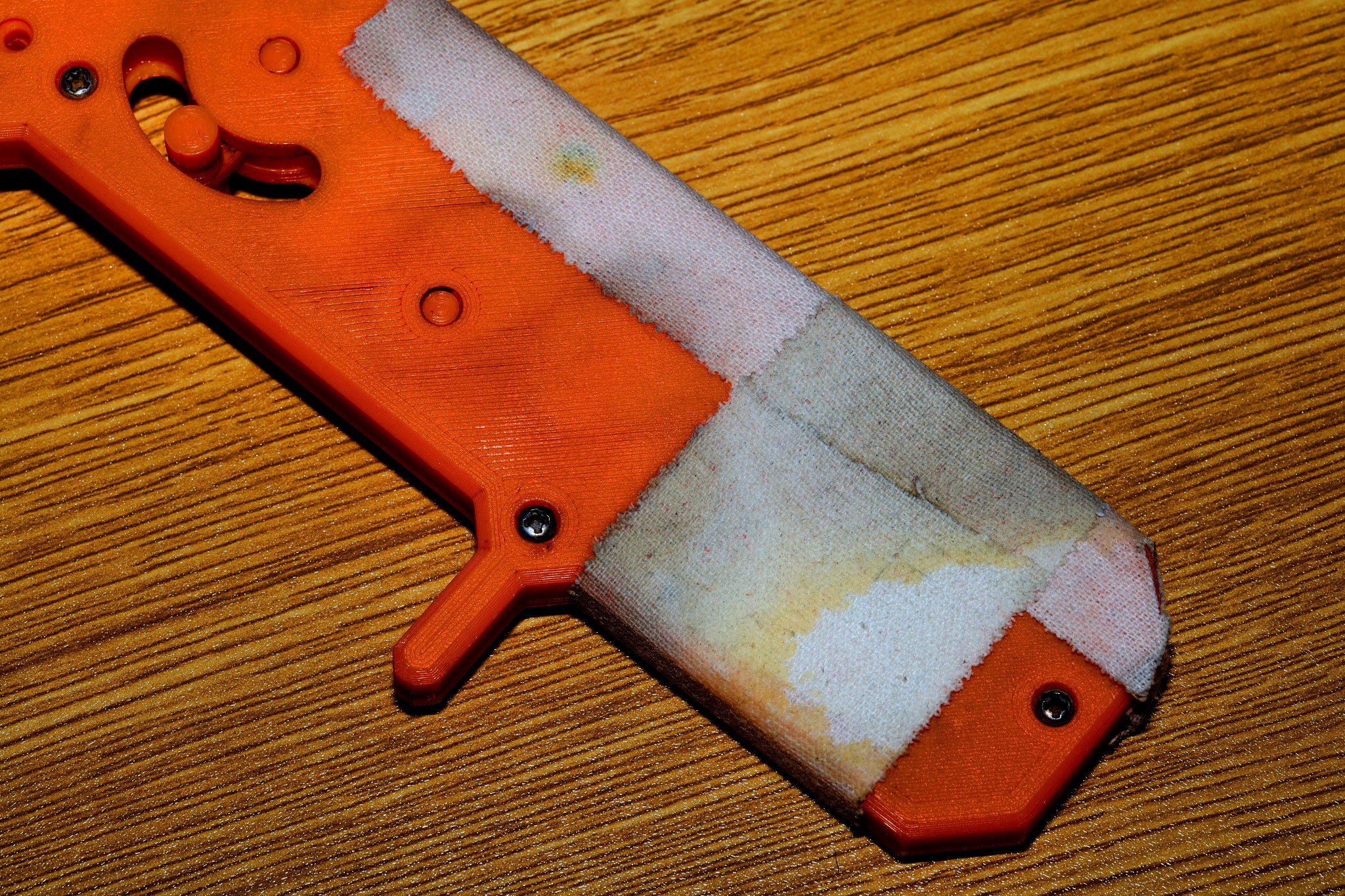

Water intrusion continued to be the paw controller's mane problem. The best performing solution evolved into a fabric sock that absorbs water & gets dried out after every run. The mane problem is it can't dry out while it's on the charger. The inductive charger doesn't leave enough space on top of the inductor for any airflow, so that area just collects mold.

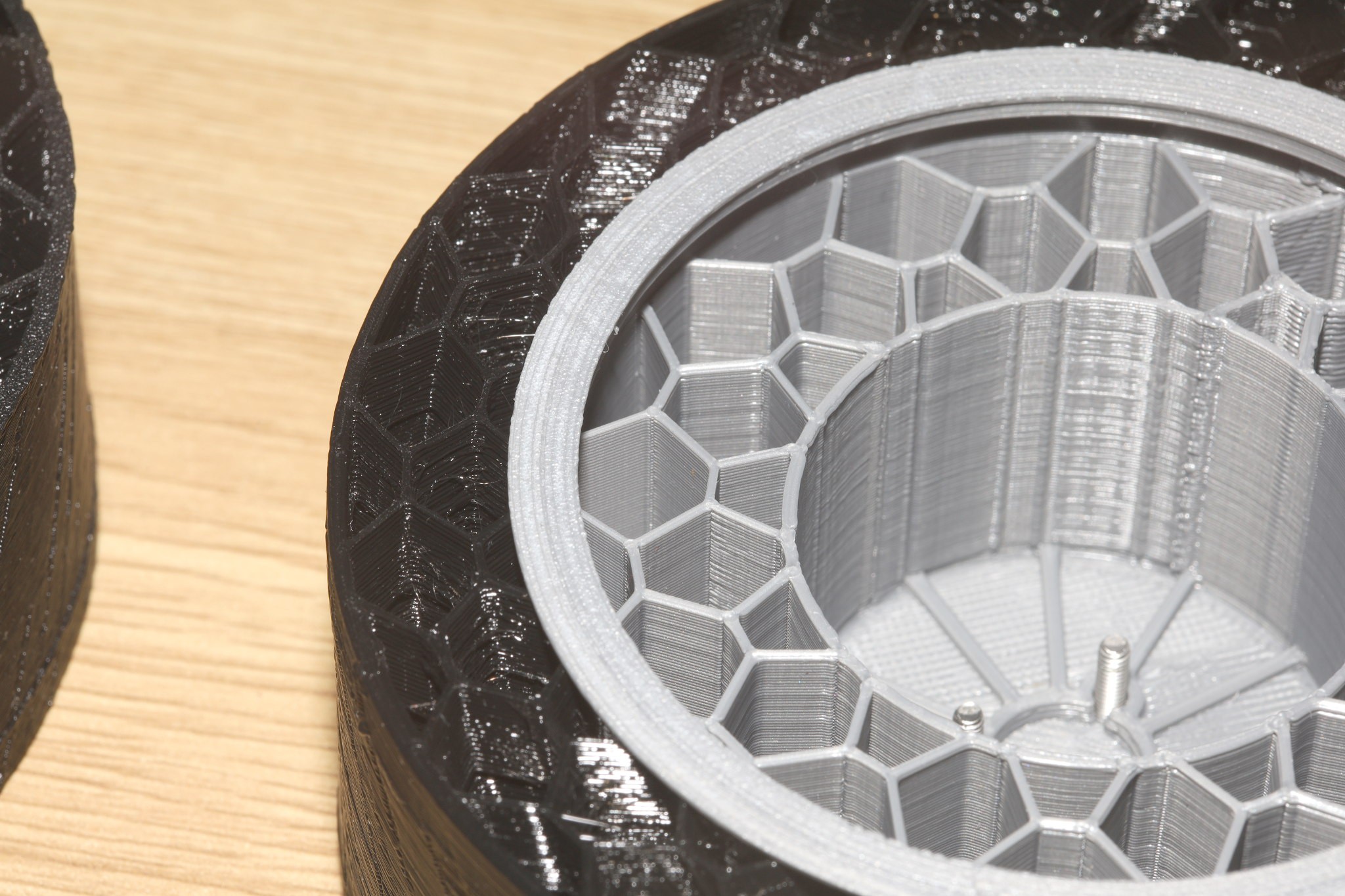

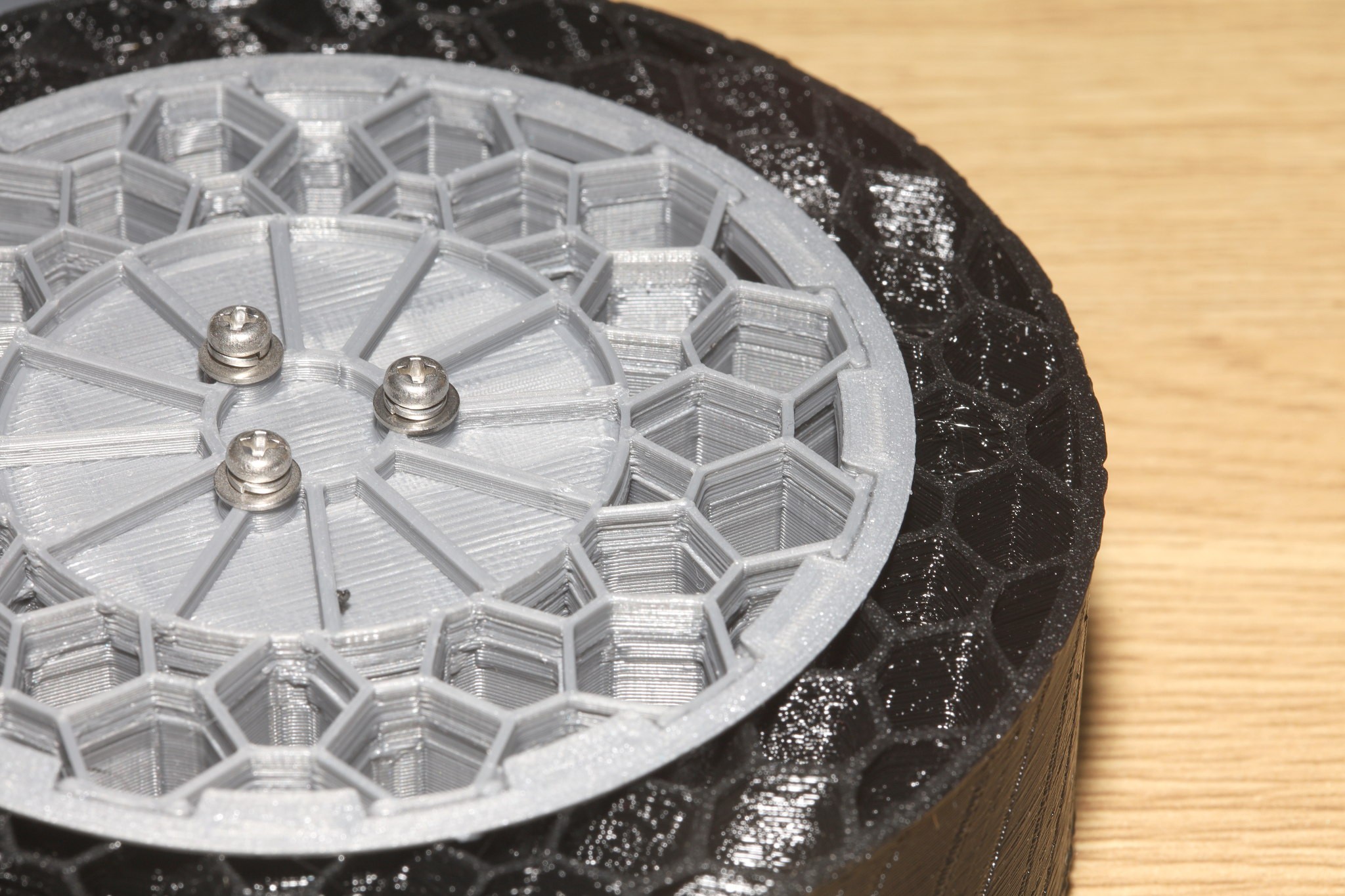

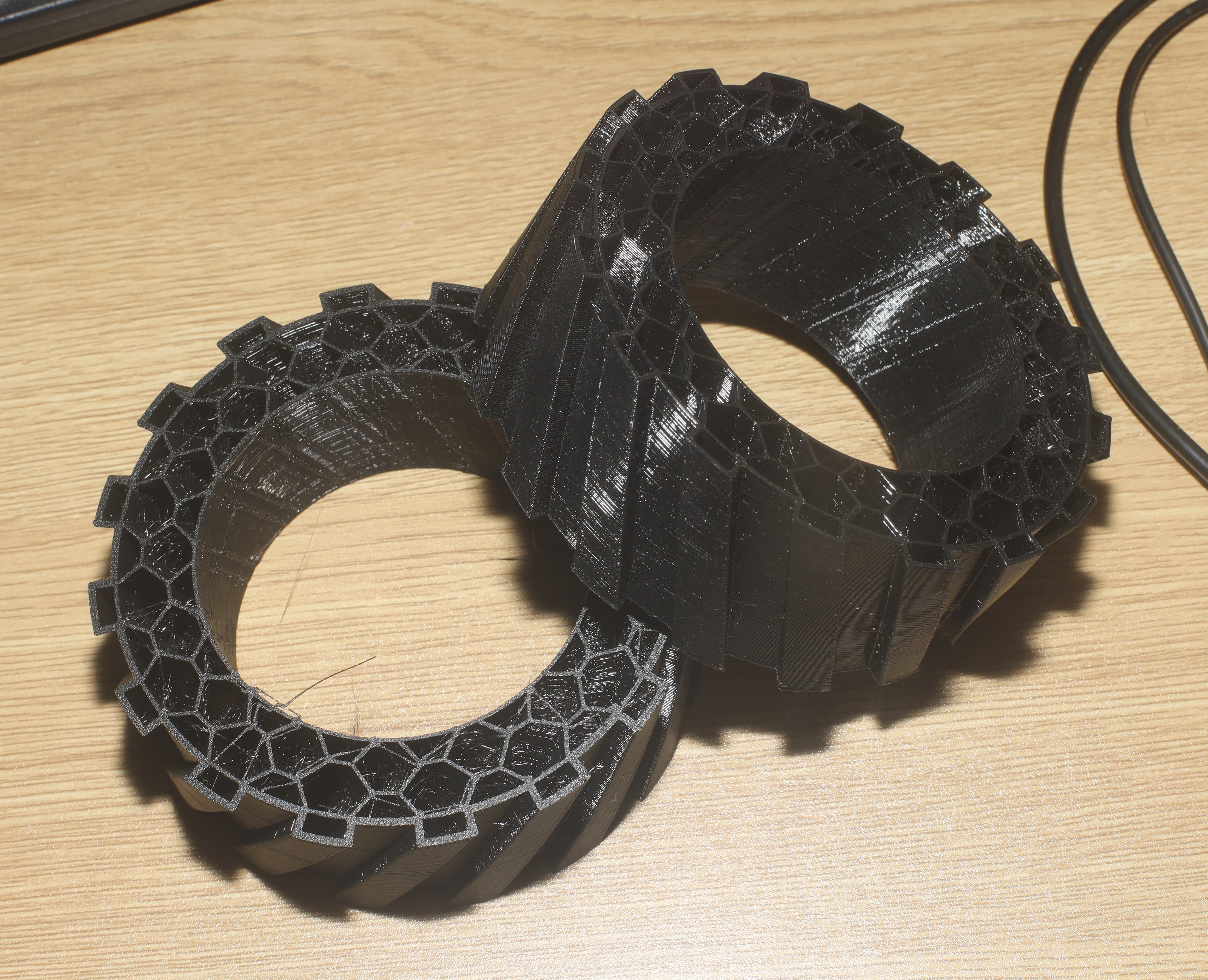

Calif*'s driest winter in history & solar powered filament drying yielded improved TPU tires. These were printed at 250C, to yield much better layer adhesion.

A wider, more aggressive offroad tire design emerged.

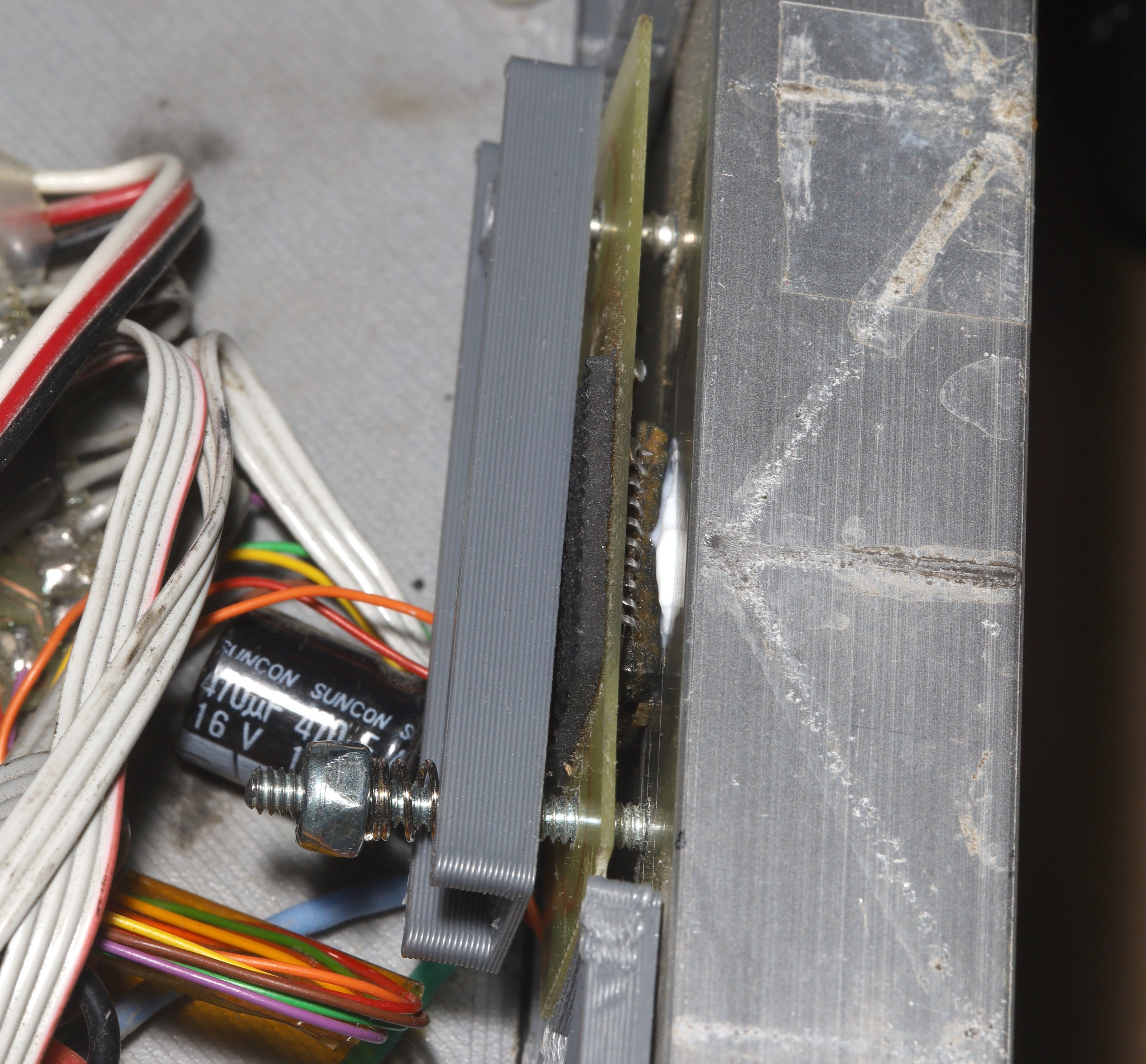

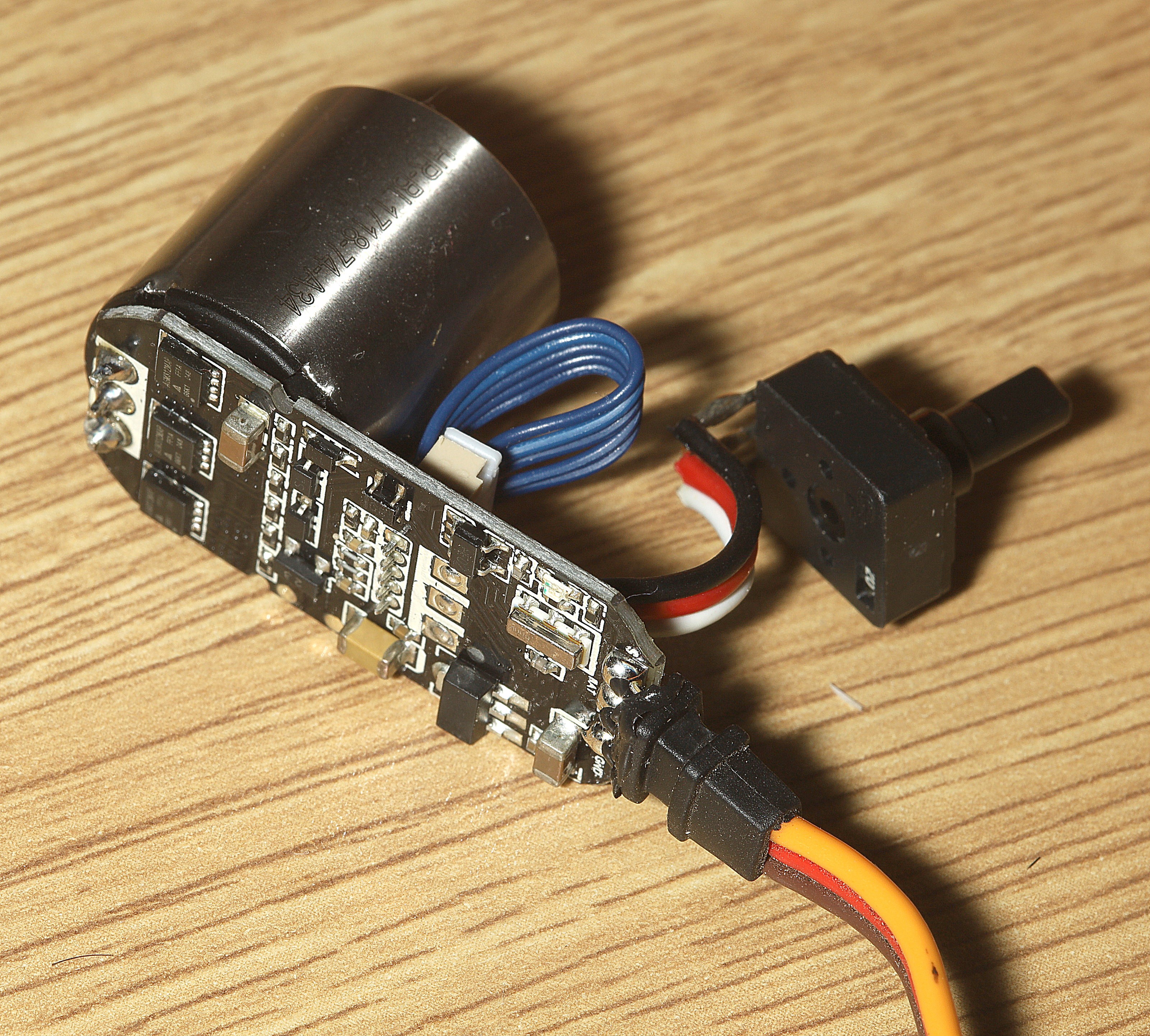

The motor drivers finally got an improved, spring loaded fastener to improve the heat sinking. Springs were how vintage graphics cards mated to their heat sinks. In this case, a lack of 3D printed metal required a spring fastener.

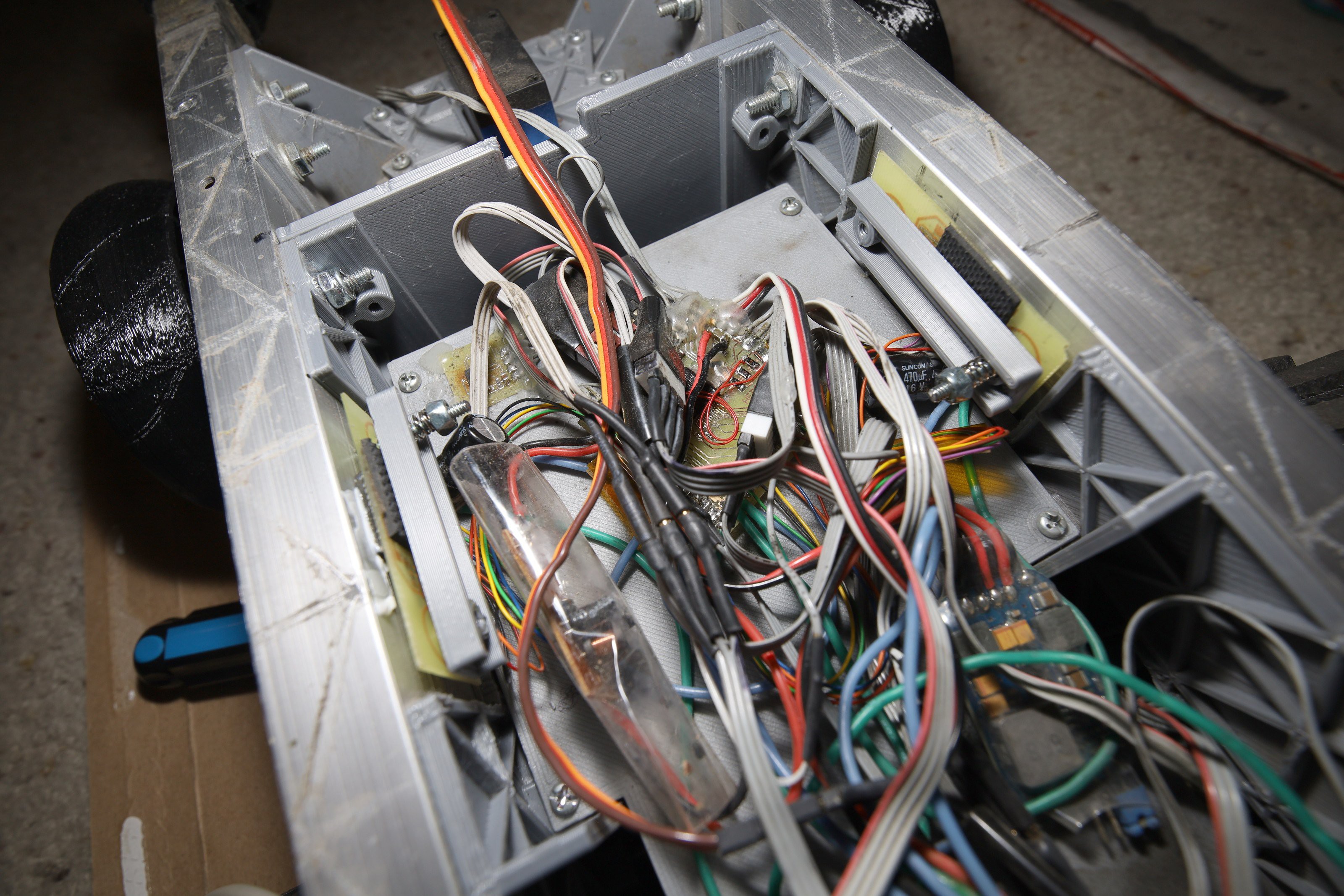

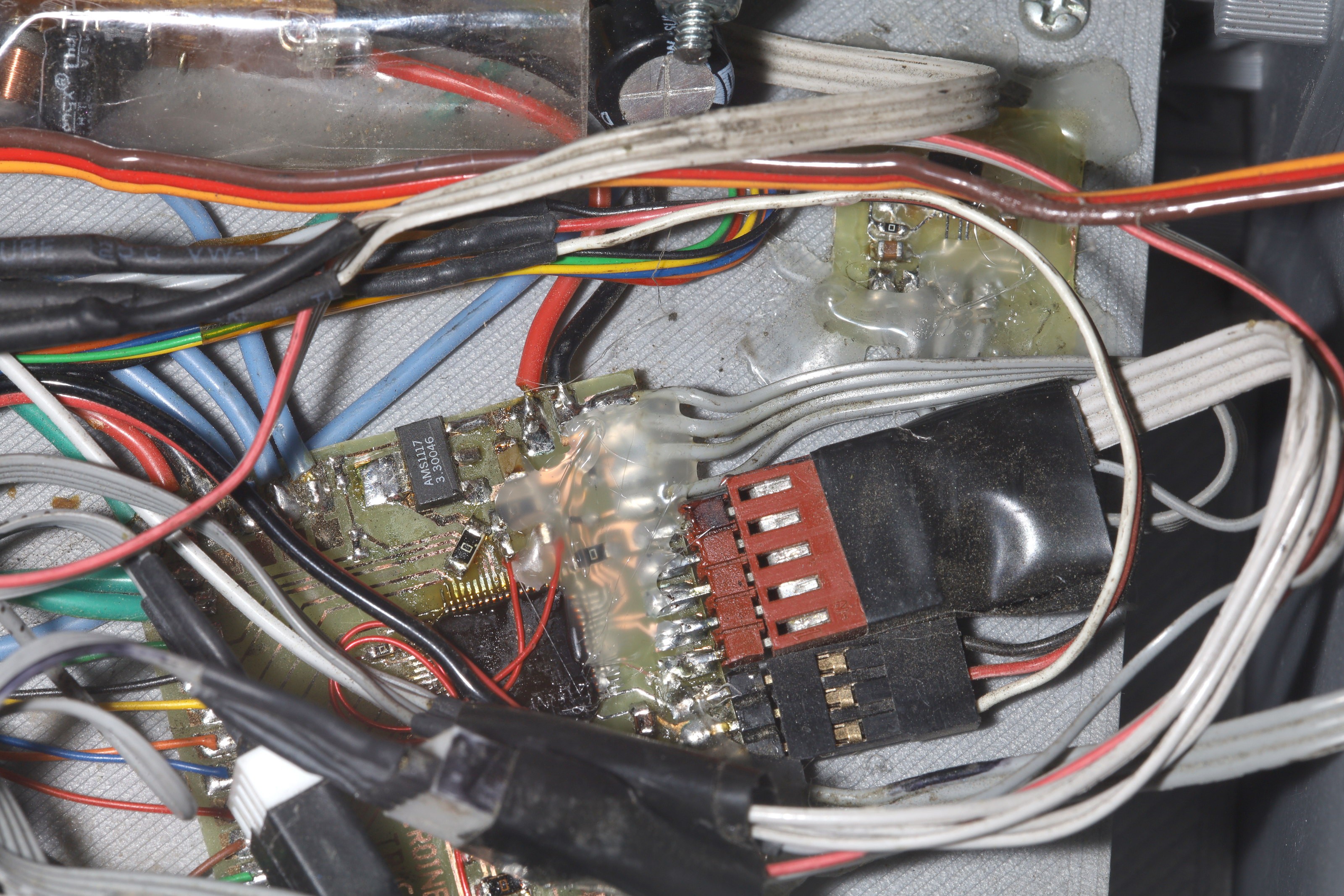

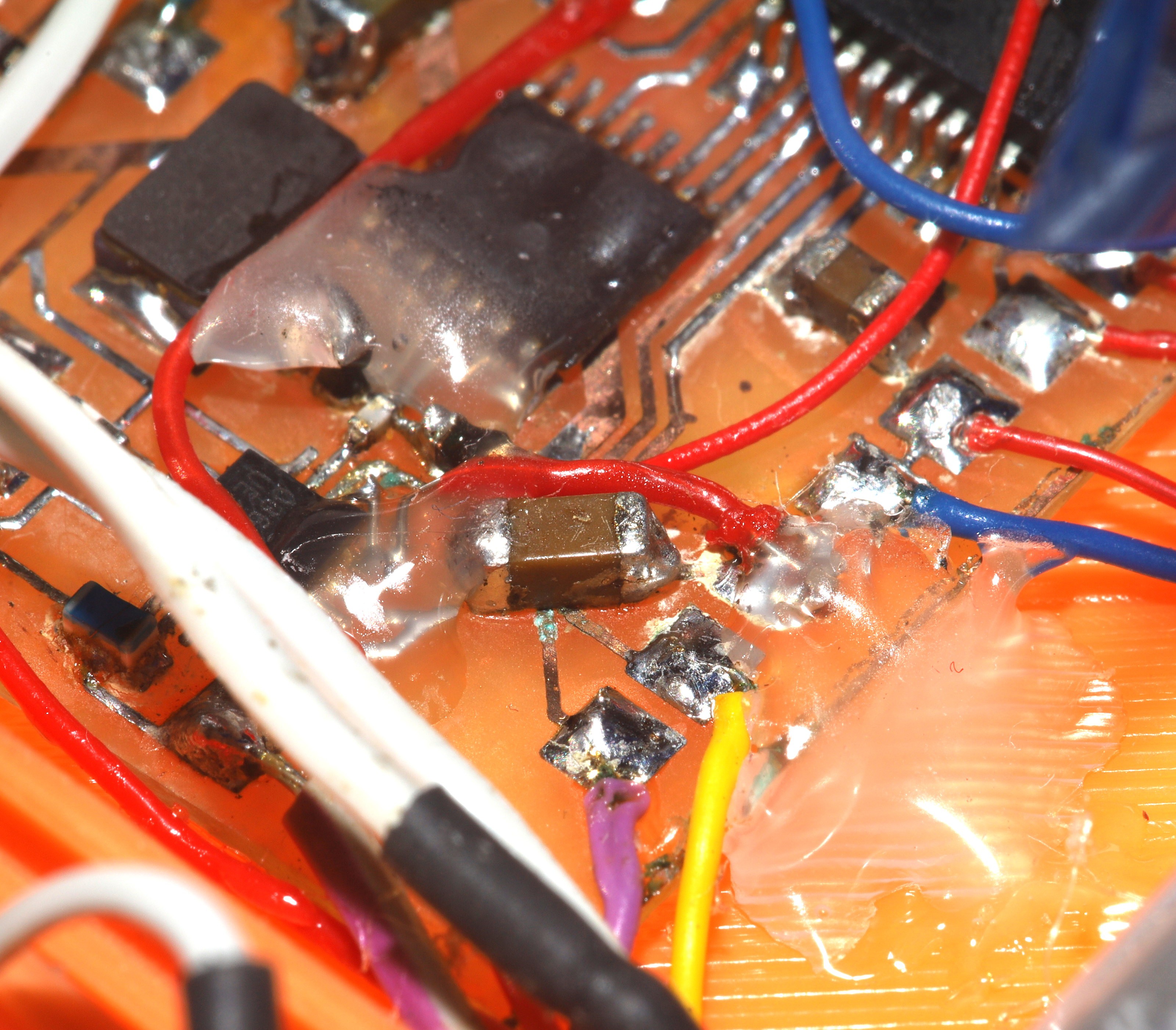

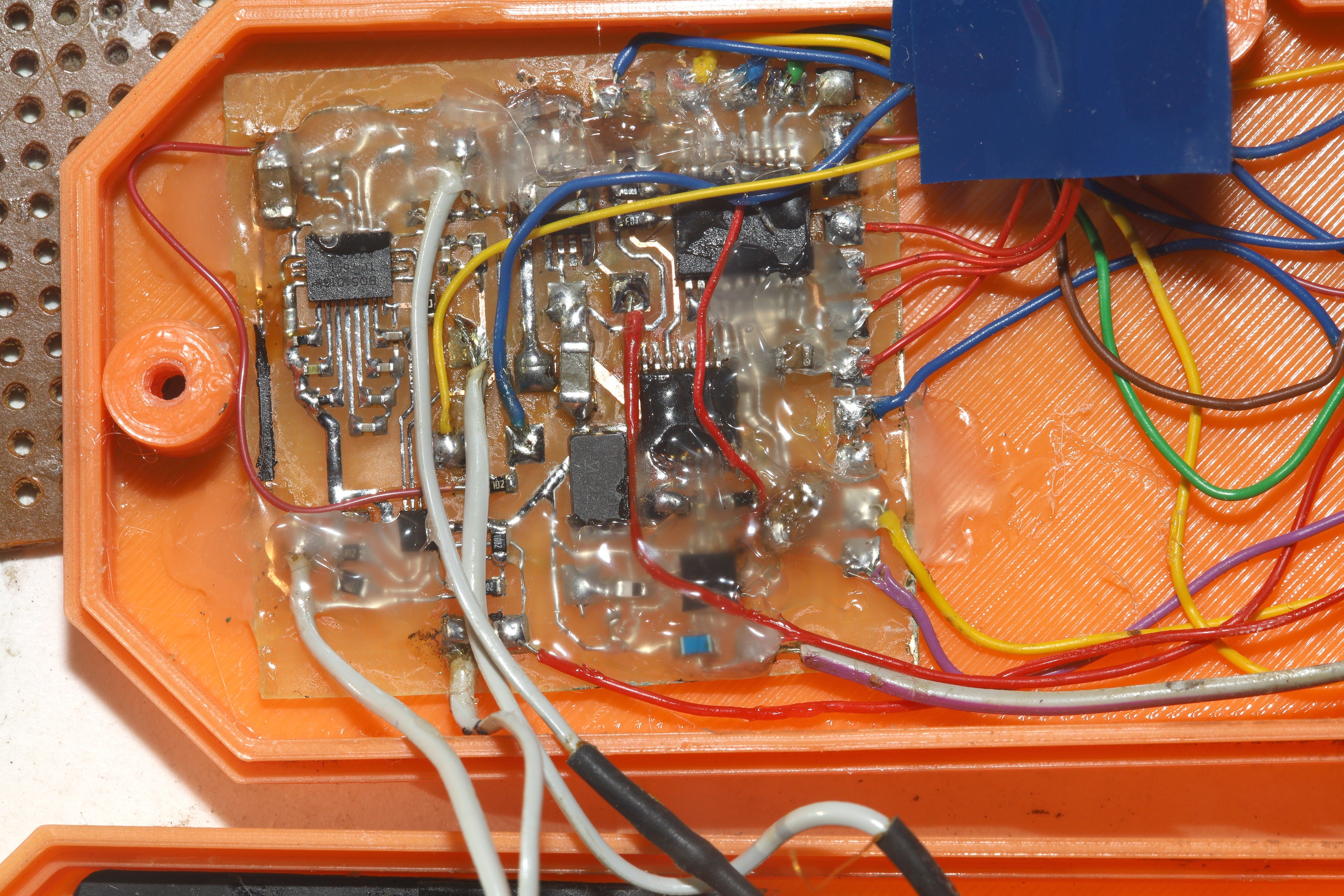

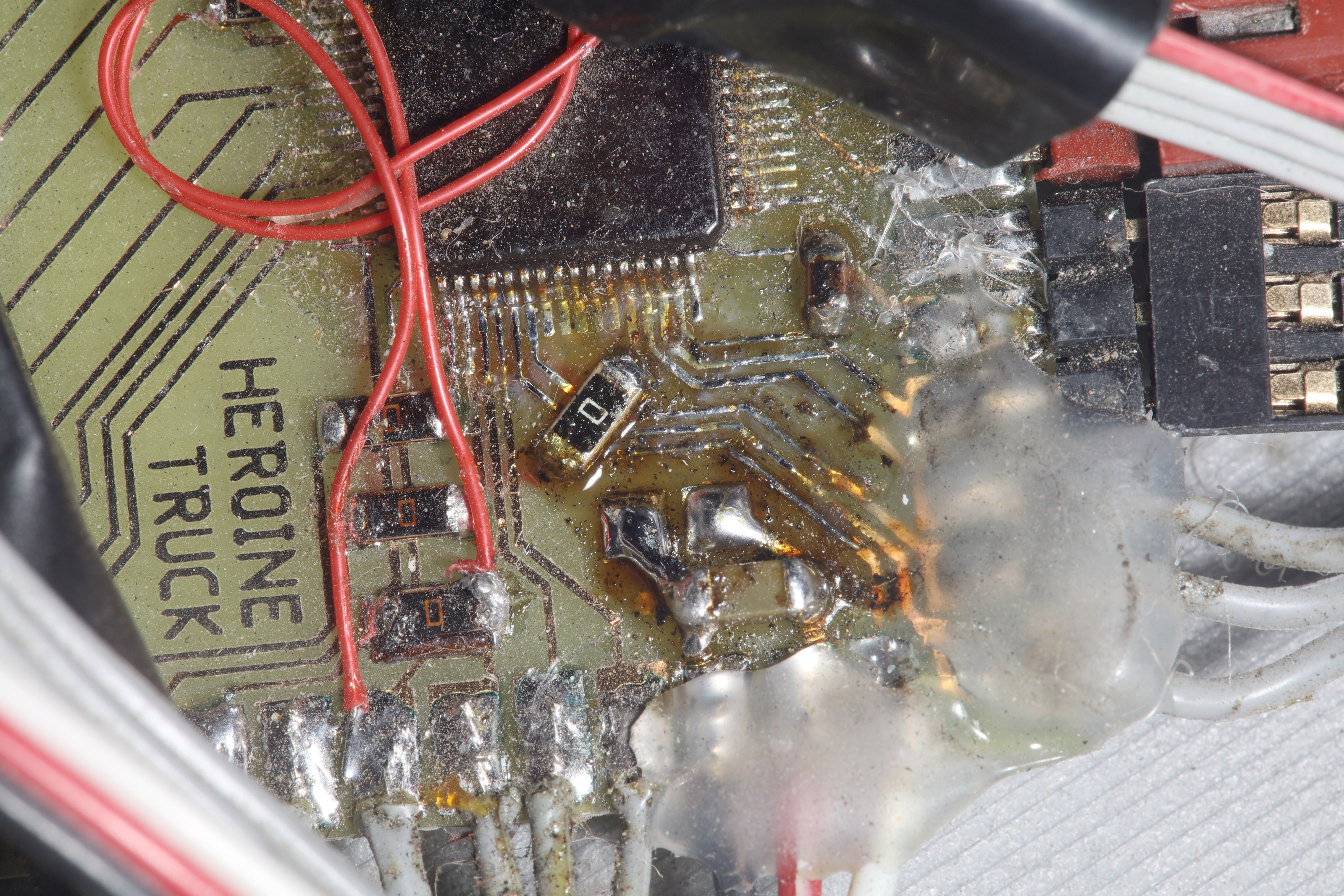

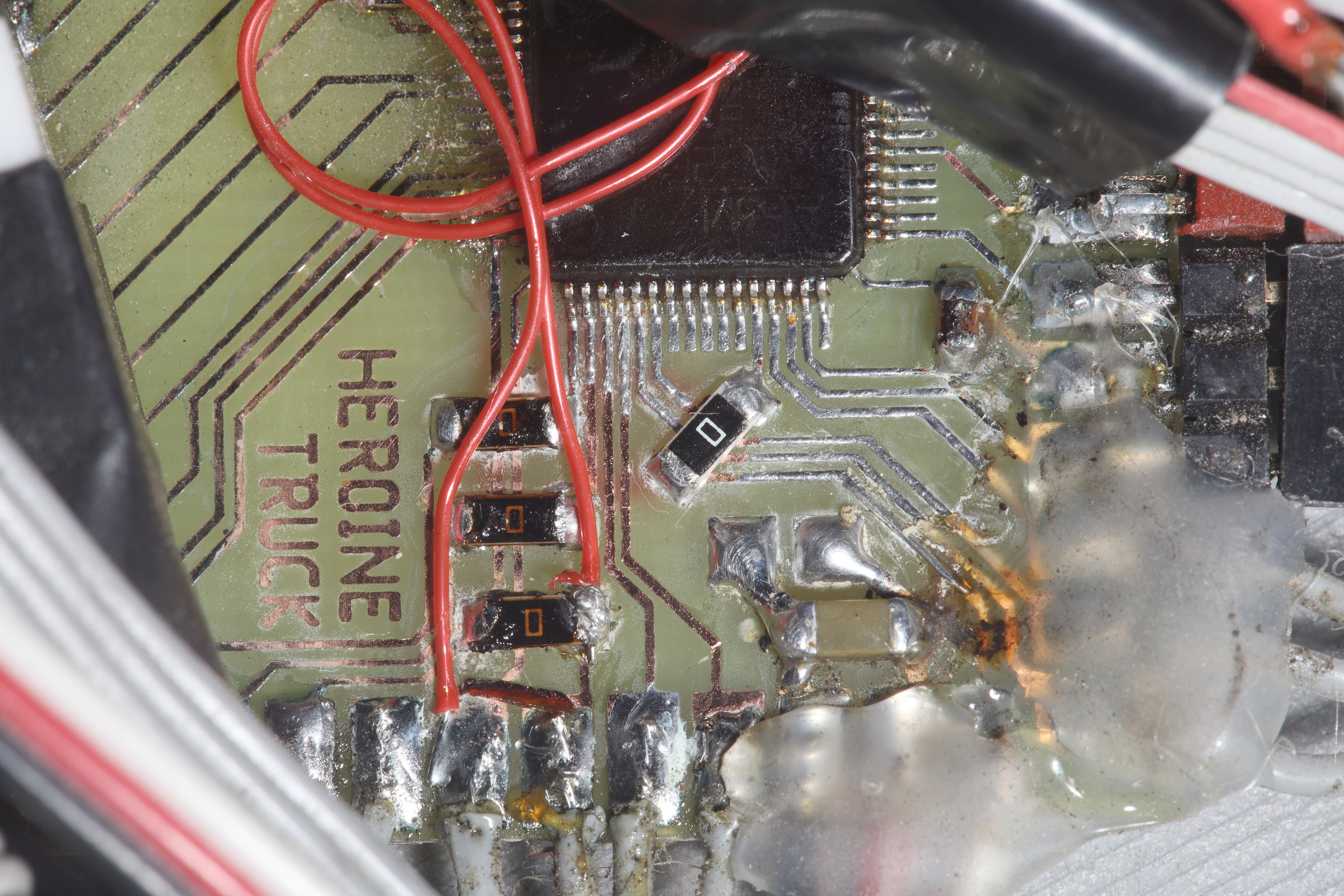

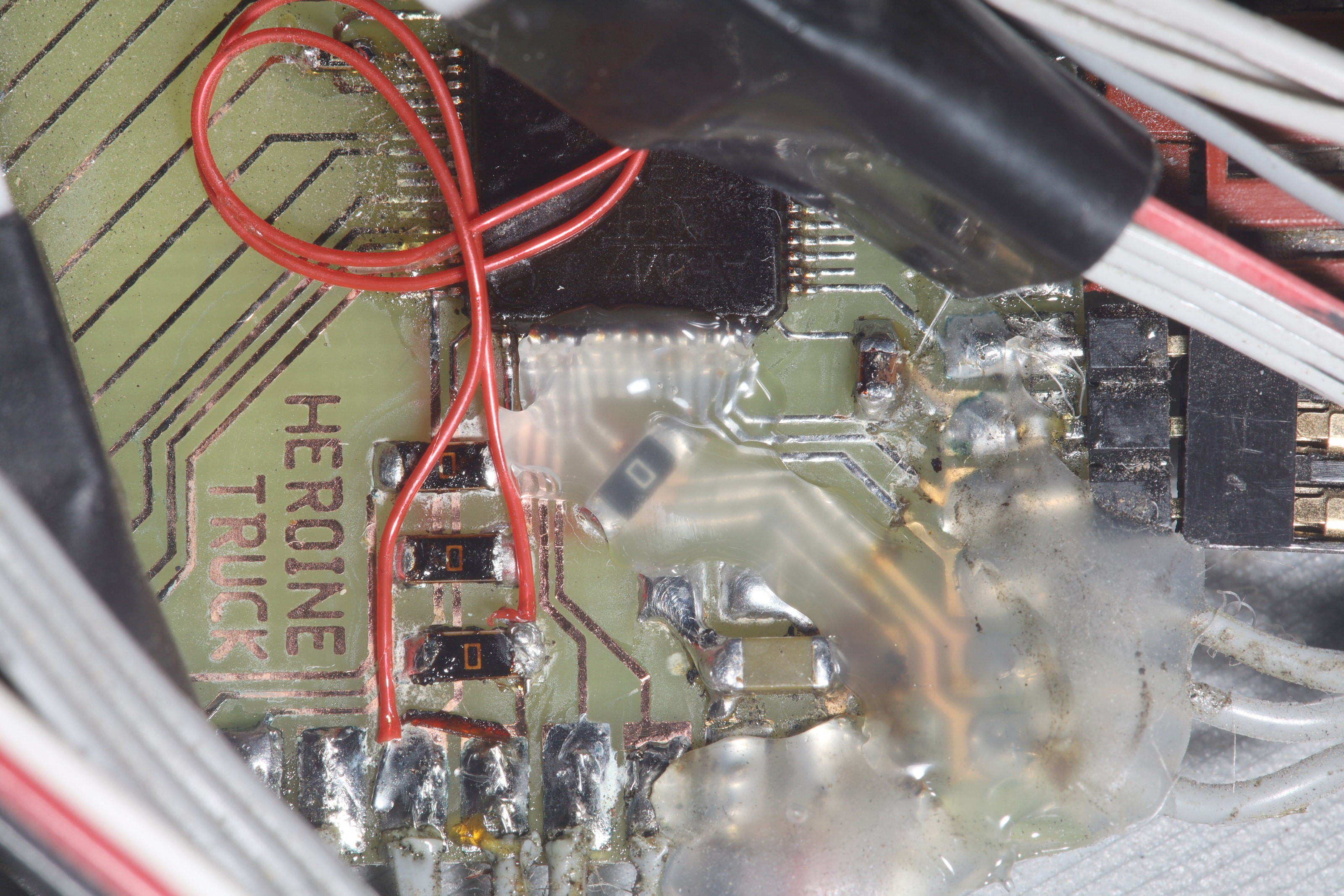

Steering problems reappeared after 3 uneventful months. The IMU got potting over its I2C lines. The photos show it clearly never had conformal coating, so maybe the power rails also need potting. It didn't matter, because 7 miles later, in warm dry weather, it glitched the same as before. This time, it stayed hard left regardless of paw control & after exiting heading hold mode. A few minutes later, it started working again. That left the death of the servo itself.

The SPT5835 went 1300 miles since going in on 9/7/21. Brushed servos went 400 miles if they were lucky.

The pots in the servos are believed to be wearing out. After years of searching, standalone replacements started appearing.

Premium Noble potentiometers:

https://www.aliexpress.com/item/1005002686938555.html

Cheaper potentiometers:

https://www.aliexpress.com/item/1005002495476576.html

https://www.aliexpress.com/item/1005002075687768.html

-

Backlight compensation

01/23/2022 at 00:52

The embedded GPUs are out there. They're just 3x the price they were 2 years ago.

The lion kingdom believes the biggest improvements are going to come from improving the camera rather than from confusing power, which no longer exists. Improving the exposure would improve every algorithm, so backlight compensation is essential. Exposure could be adjusted so 99% of the pixels land above a certain minimum on the histogram. Unfortunately, the GeneralPlus has limited exposure control. Polling the video4linux2 driver returns these settings:

id=980900 min=1 max=255 step=1 default=48 Brightness

id=980901 min=1 max=127 step=1 default=36 Contrast

id=980902 min=1 max=127 step=1 default=64 Saturation

id=980903 min=-128 max=127 step=1 default=0 Hue

id=980910 min=0 max=20 step=1 default=0 Gamma

id=98091b min=0 max=3 step=1 default=3 Sharpness

id=98091c min=0 max=127 step=1 default=8 Backlight Compensation

The only functional ones are contrast, saturation & backlight compensation. Backlight compensation really behaves like some kind of brightness function, while saturation & contrast appear to be software filters. The values have to be rewritten for every frame. Color definitely gives better face tracking than greyscale. The default backlight compensation is already as bright as possible, & changing saturation & backlight compensation from the default values gave no obvious improvement.
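The histogram rule mentioned earlier (adjust exposure until 99% of the pixels land above some minimum) could drive the backlight compensation control as a simple feedback loop. This is a minimal sketch under stated assumptions: 8-bit greyscale pixel values, the 0 to 127 range the driver reports for backlight compensation, and made-up values for the target level, step and dead band. The function names are hypothetical, and writing the value back to the camera is left out. Since the default on this camera is already as bright as possible, it only illustrates the idea.

```python
def low_percentile_level(pixels, fraction=0.01):
    """Smallest grey level L such that at most `fraction` of pixels are darker than L.

    `pixels` is any iterable of 8-bit values (0..255). Hypothetical helper.
    """
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    total = sum(hist)
    if total == 0:
        raise ValueError("no pixels")
    limit = fraction * total
    running = 0
    for level, count in enumerate(hist):
        running += count  # number of pixels at or below `level`
        if running > limit:
            return level
    return 255


def next_backlight(current, pixels, target=40, margin=40, step=4, lo=0, hi=127):
    """One step of a hypothetical backlight compensation loop.

    target, margin and step are assumed values; lo/hi match the driver's 0..127 range.
    """
    level = low_percentile_level(pixels)
    if level < target:            # too many dark pixels: brighten
        return min(hi, current + step)
    if level > target + margin:   # comfortably bright: back off
        return max(lo, current - step)
    return current                # inside the dead band: leave it alone
```

On a real frame, `numpy.bincount(gray.ravel(), minlength=256)` would build the same histogram much faster than the Python loop. The dead band keeps the control from oscillating when the dark end of the histogram sits near the target.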

Face tracking could use higher resolution. There was also an attempt at using a bigger target than a face, by trying other demos in opencv.

openpose.cpp ran at 1 frame every 30 seconds.

person-reid.cpp ran at 1.6fps. This requires prior detection of a person with openpose or YOLO. Then it tries to match it with a database.

object_detection.cpp, a general purpose object detector, ran at 1.8fps with the yolov4-tiny model from https://github.com/AlexeyAB/darknet#pre-trained-models

Obtaining the models for each demo takes some doing. The locations are sometimes in the comments of the .cpp files. Sometimes your only option is extensive searching. They usually fail with a mismatched array size. That means the image size is wrong. The required image size is normally given in a CommandLineParser block, a keys block, or in the .cfg file. The networks sometimes require a .cfg + .weights file or a .prototxt + .caffemodel file or a .onnx file.
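For the darknet case, the input size the network expects is stored as `width=` and `height=` entries in the `[net]` section of the .cfg file. The sketch below pulls those two values out so a frame can be resized to match before inference, which avoids the mismatched array size error. The function name is hypothetical, and it only covers darknet .cfg files, not .prototxt or .onnx models.

```python
def darknet_input_size(cfg_text):
    """Return (width, height) from the [net] section of a darknet .cfg file.

    Hypothetical helper; other sections (e.g. [convolutional]) are ignored.
    """
    section = None
    width = height = None
    for raw in cfg_text.splitlines():
        line = raw.strip()
        if not line or line[0] in "#;":  # blank lines & darknet comment lines
            continue
        if line.startswith("[") and line.endswith("]"):
            section = line[1:-1].strip().lower()
            continue
        if section in ("net", "network") and "=" in line:
            key, value = (part.strip() for part in line.split("=", 1))
            if key == "width":
                width = int(value)
            elif key == "height":
                height = int(value)
    if width is None or height is None:
        raise ValueError("no width/height found in [net] section")
    return width, height
```

The result could then be passed as the size argument of `cv2.dnn.blobFromImage`, e.g. `cv2.dnn.blobFromImage(frame, 1 / 255.0, (w, h), swapRB=True)`, although the scale & channel order each model wants still has to be checked against its demo.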

Since only 1 in 4 frames is getting processed, there could be an alignment & averaging step. It would slow down the framerate & wouldn't do well with fast motion.

-

Face tracker 2

01/21/2022 at 06:20

Face tracking based on size alone is pretty bad & naive. It desperately needs a recognition step.

There is an older face detector using haar cascades.

opencv/samples/python/facedetect.py

These guys used a haar cascade with a dlib correlation function to match the most similar region in 2 frames.

https://www.guidodiepen.nl/2017/02/detecting-and-tracking-a-face-with-python-and-opencv/

These guys similarly went with the largest face when no previous face existed.

Well, the Haar cascade was nowhere close in accuracy & ran at 5fps instead of 7.8fps. Obviously the DNN has a speed & accuracy advantage.

There was a brief test of absolute differencing the current face with the previous frame's face. When the face rotated, the score of a match was about equal to the score of a mismatch. The problem with anything that compares 2 frames is reacquiring the right face after dropping a few frames. It always has to go back to largest face.
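That absolute differencing test can be sketched as a mean absolute difference between the previous face crop & each candidate crop, picking the lowest score. This assumes the crops have already been converted to greyscale & resized to the same dimensions. They are plain lists of rows of 0 to 255 values here to stay dependency free, and the function names are illustrative. As described above, a rotated face can score about as badly as a different face, which is the weakness of this approach.

```python
def mean_abs_diff(a, b):
    """Mean absolute difference between two equal-sized greyscale patches."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("patches must be the same size")
    total = 0
    count = 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            total += abs(pa - pb)
            count += 1
    return total / count


def best_match(previous, candidates):
    """Index of the candidate patch most similar to `previous`, and its score."""
    scores = [mean_abs_diff(previous, c) for c in candidates]
    best = min(range(len(scores)), key=scores.__getitem__)
    return best, scores[best]
```

With OpenCV & NumPy, the same score for equal-sized uint8 crops is `cv2.absdiff(a, b).mean()`.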

The Intel Movidius seems to be the only embedded GPU still produced. Intel bought Movidius in 2016. As is typical, they released a revised Compute Stick 2 in 2018 & didn't do anything since then but vest in peace. It's bulky & expensive for what it is. It takes some doing to port any vision model to it. Multiple movidiuses can be plugged in for more cores.

Another idea is daisy chaining 2 raspberry pis, so 1 would do face detection & the other would do recognition. It would have a latency penalty & take a lot of space.

The next step might be letting it run the recognizer at 3fps & seeing what happens. The mane problem is the recognizer has to be limited to testing 1 face to hit 3fps. Maybe it could optical flow track every face in a frame. The optical flow algorithm just says the face in the next frame closest to the previous face is the same. It could use the optical flow data to recognition test a different face in every frame until it got a maximum score for all. Then, it could track the maximum score face while doing another recognition pass, 1 face per frame at a time.
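The round-robin idea could look something like this sketch. Faces are carried from frame to frame by nearest-center matching, standing in for the optical flow step. The recognizer runs on only one tracked face per frame, in rotation, and the camera follows whichever face has the highest score so far. The `recognize` callback, the `Track` class & the score convention (higher means more lion-like) are hypothetical placeholders; a real version would pass a face crop to whatever recognizer is in use.

```python
from dataclasses import dataclass
import math


@dataclass
class Track:
    x: float
    y: float
    score: float = float("-inf")  # latest recognition score; -inf = not tested yet


class RoundRobinTracker:
    """Track every face, but run the recognizer on only one face per frame."""

    def __init__(self, recognize):
        # Hypothetical callback: (x, y) -> score, higher = more lion-like.
        self.recognize = recognize
        self.tracks = []
        self.next_index = 0

    def update(self, centers):
        """centers: (x, y) face centers from this frame's detector."""
        new_tracks = []
        for cx, cy in centers:
            # Nearest-center matching stands in for optical flow: each face
            # inherits the score of the closest face from the last frame.
            nearest = min(
                self.tracks,
                key=lambda tr: math.hypot(tr.x - cx, tr.y - cy),
                default=None,
            )
            score = nearest.score if nearest is not None else float("-inf")
            new_tracks.append(Track(cx, cy, score))
        self.tracks = new_tracks
        if not self.tracks:
            return None
        # Spend this frame's single recognition pass on the next face in rotation.
        i = self.next_index % len(self.tracks)
        current = self.tracks[i]
        current.score = self.recognize((current.x, current.y))
        self.next_index = i + 1
        # Follow the face with the highest score found so far.
        best = max(self.tracks, key=lambda tr: tr.score)
        return best.x, best.y
```

Nearest-center matching can hand the same score to 2 faces that converge, which is exactly the kind of error the next paragraph worries about.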

Optical flow tracking is too error prone to reliably ensure recognition is applied to a different face in each frame. At 3fps, the time required for the recognizer to pick the maximum score might be the same time it takes the naive algorithm to recover.

The mane problem is when the lion isn't detected in 1 frame while another face is. It would need a maximum change in position, beyond which it ignores any face & assumes the lion is in the same position until a certain time has passed. Then it would pick the largest face.
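The gating rule described here could be sketched as follows. The detection nearest the last known lion position is accepted only if it moved less than a maximum distance. Otherwise the tracker holds the last position, and once a timeout has passed it falls back to the largest face. The distance limit, the timeout & the `(x, y, size)` face tuples are assumed values for illustration, since the log doesn't give numbers. The class name is hypothetical, and time is passed in explicitly so the logic can be tested without a clock.

```python
import math


class GatedTracker:
    """Ignore faces that jump too far from the lion, then give up after a timeout."""

    def __init__(self, max_jump=80.0, timeout=1.0):
        self.max_jump = max_jump  # assumed limit on movement per frame, in pixels
        self.timeout = timeout    # assumed seconds to hold the last position
        self.last = None          # last accepted (x, y) of the lion, or None
        self.last_seen = None     # timestamp when self.last was accepted

    def update(self, faces, now):
        """faces: list of (x, y, size) detections; now: timestamp in seconds."""
        if self.last is not None and faces:
            lx, ly = self.last
            x, y, _ = min(faces, key=lambda f: math.hypot(f[0] - lx, f[1] - ly))
            if math.hypot(x - lx, y - ly) <= self.max_jump:
                self.last, self.last_seen = (x, y), now
                return self.last
        if self.last is not None and now - self.last_seen < self.timeout:
            return self.last  # assume the lion is still where it was
        if faces:
            # Never locked on, or held too long: fall back to the largest face.
            x, y, _ = max(faces, key=lambda f: f[2])
            self.last, self.last_seen = (x, y), now
            return self.last
        self.last = None  # lost, with nothing to reacquire this frame
        return None
```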

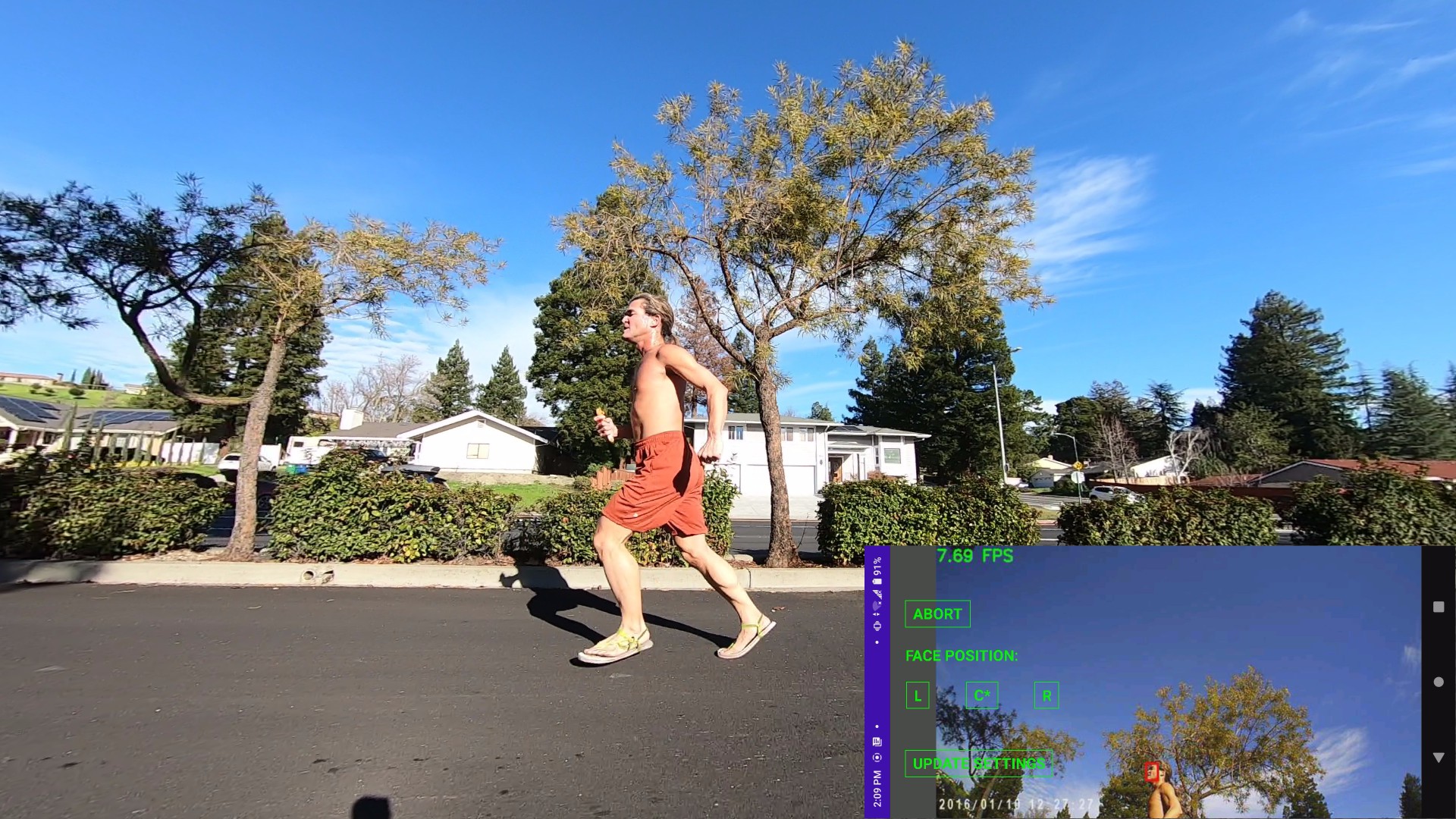

The only beneficial change so far ended up being simplifying optical flow to tracking the nearest face instead of enforcing maximum change in position, size & color. It would only revert to largest face if no faces were in frame. This ended up subjectively doing a better job.
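The simplified rule that ended up working better is short enough to sketch in full. It follows whichever detected face is nearest the previously tracked one, and only goes back to the largest face when the previous frame had no faces. Faces are assumed to be `(x, y, size)` tuples, and the function names are illustrative.

```python
import math


def pick_face(faces, previous):
    """Choose the face to track this frame.

    faces: list of (x, y, size) detections; previous: (x, y) or None.
    Returns the chosen (x, y), or None when no face is in frame.
    """
    if not faces:
        return None  # nothing to track this frame
    if previous is None:
        chosen = max(faces, key=lambda f: f[2])  # no history: largest face
    else:
        px, py = previous
        chosen = min(faces, key=lambda f: math.hypot(f[0] - px, f[1] - py))
    return chosen[0], chosen[1]


def track(frames):
    """Run pick_face over a sequence of per-frame detections."""
    previous = None
    path = []
    for faces in frames:
        previous = pick_face(faces, previous)  # an empty frame resets to None
        path.append(previous)
    return path
```

Because an empty frame resets the history to `None`, the next detection falls back to the largest face, matching the revert-only-when-no-faces behavior.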

Any further changes to the algorithm are probably a waste of time compared to waiting for better hardware to come along years from now & running a pose tracker + face tracker. Face tracking is used on autofocusing cameras because it's cheap.

2 hits where the lion was detected continuously.

2 misses where the lion wasn't detected at all.

Complete face tracked run

Face tracking failures

It continued to manely struggle with backlighting & indian burial grounds, while at the same time the optical flow tweak might have saved a few shots. The keychain cam blacks out fast when the sun sets. It might benefit from an unstabilized gopro + HDMI as the tracking cam, but that would be bulky, heavy & require another battery.

There might be some benefit to capturing the face tracking stream on the raspberry pi instead of leaving the phone's screencap on. It would be another toggle on the phone app. The quality would be vastly better than the wifi stream but it might slow things down. The screencap stops at 4.5GB or around 50 minutes. This would be applicable to better hardware.

-

Face tracker

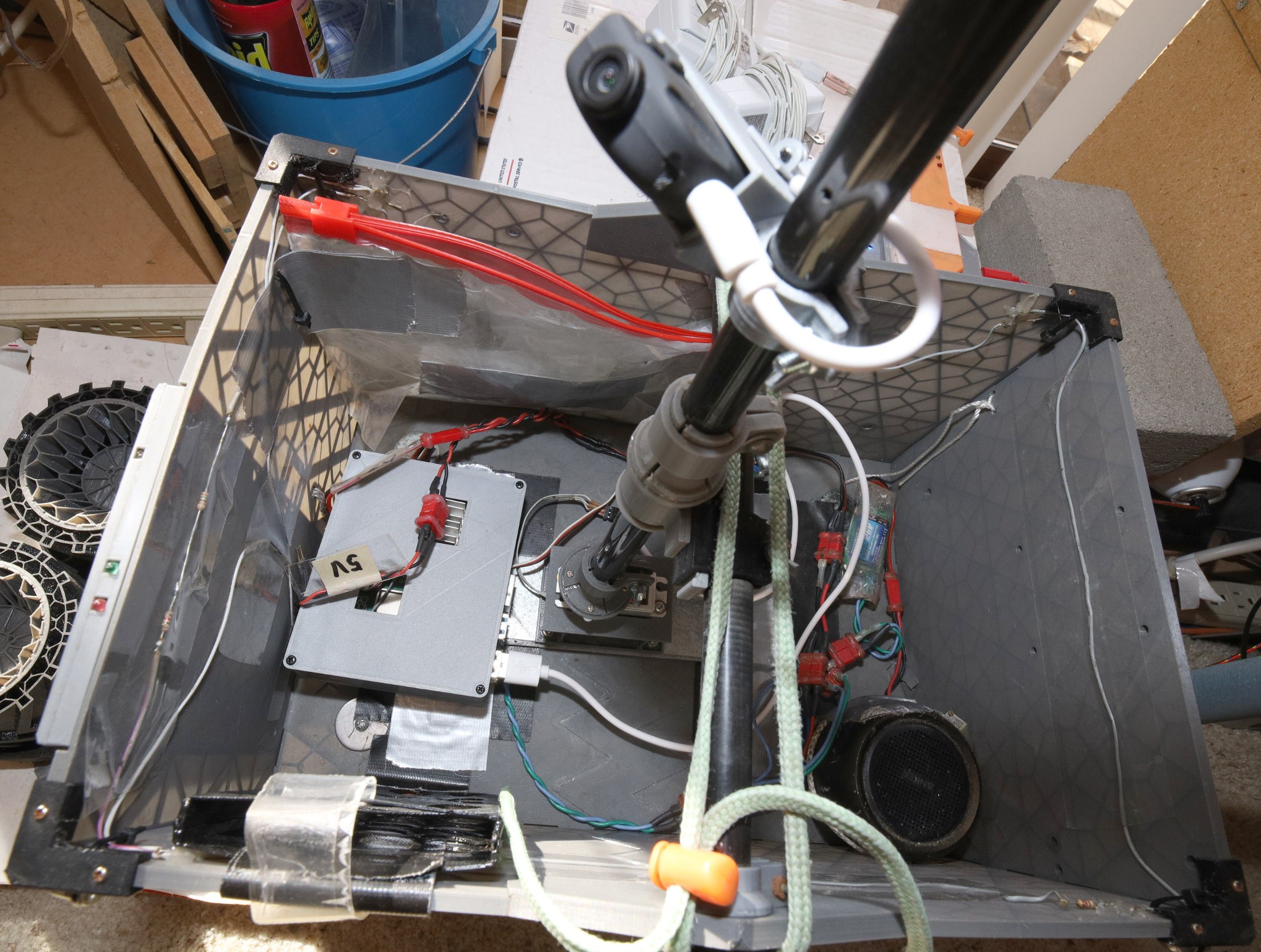

01/16/2022 at 05:02

The decision was made to switch to a face tracker instead of manually pointing the pole cam. Manual pointing would override the face tracker if the remote control was powered on, but manual pointing was expected to disappear. The lion kingdom found itself running more often without powering the servo, since carrying around the 2nd paw controller was a hassle. It was manely used for pointing the camera at the lion rather than at anything else. The most desirable shots were tracking the lion during a turn, but this wasn't possible with manual pointing.

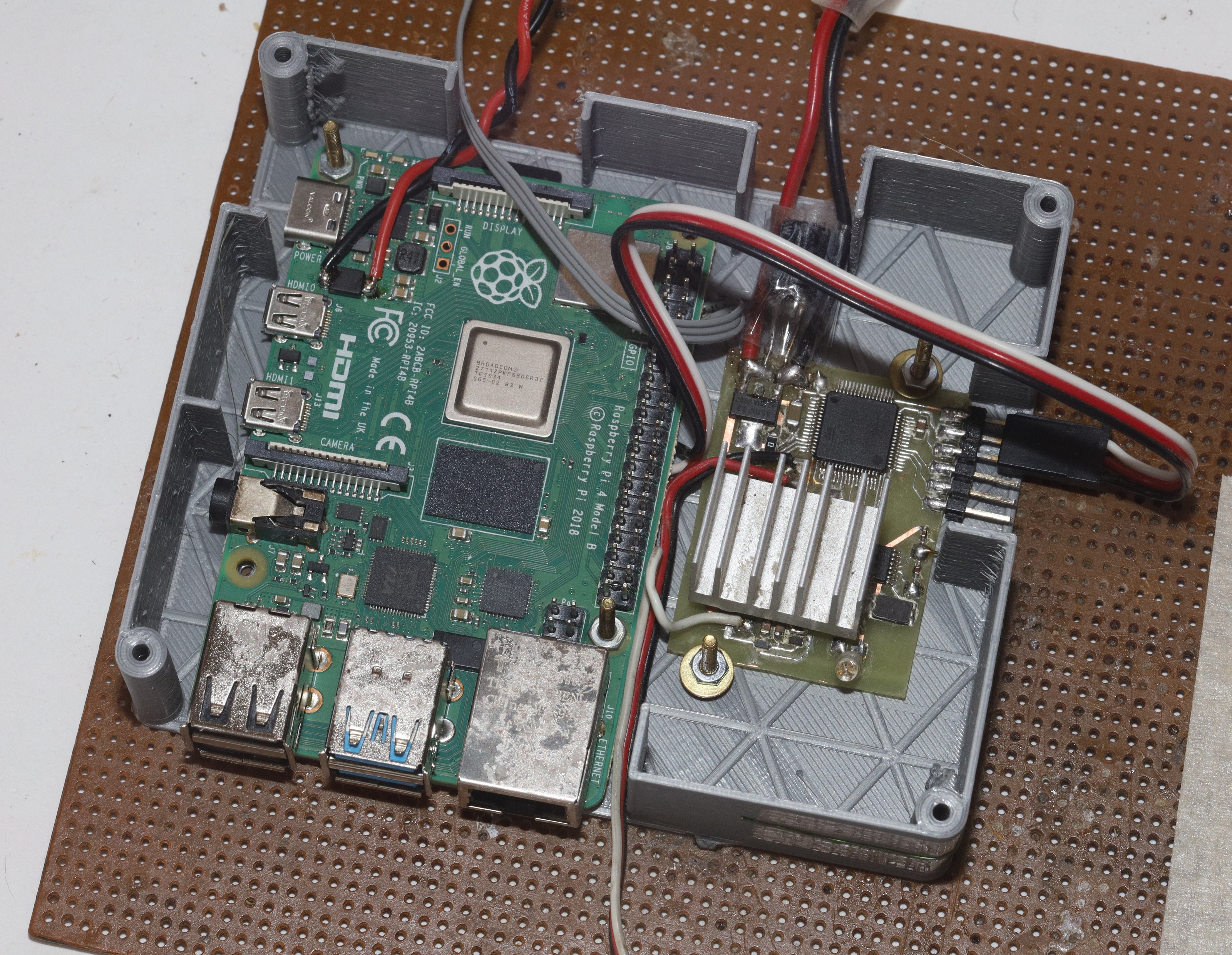

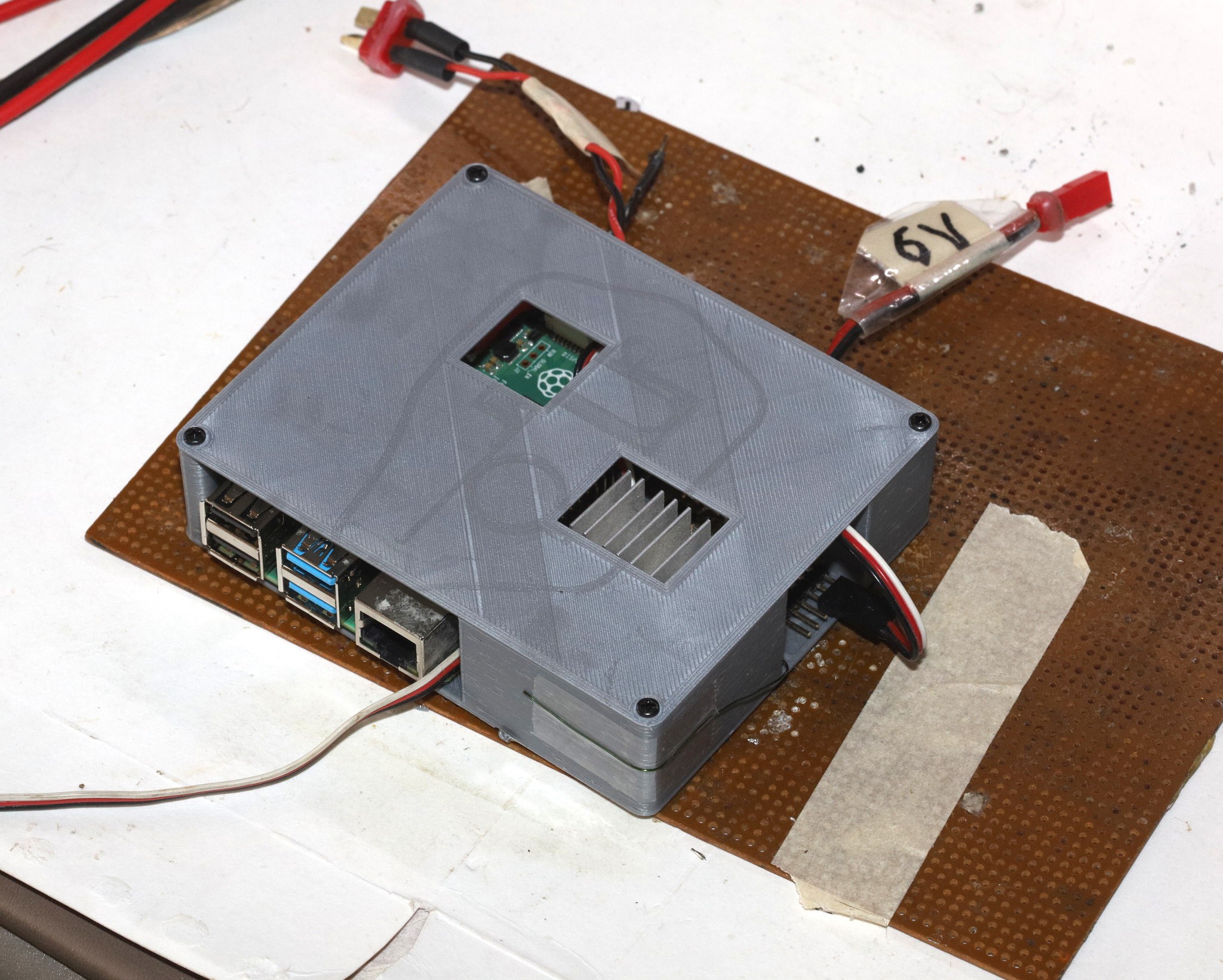

The ideal tracker would run a pose tracker & face tracker. This can run reasonably well on a 4GB GPU. The face tracker would pick out the lion & the pose tracker would track any visible body part when the face wasn't visible. Unfortunately, the embedded GPUs of the past were all discontinued so the lion kingdom settled on just the opencv face tracker on a raspberry pi 4B.

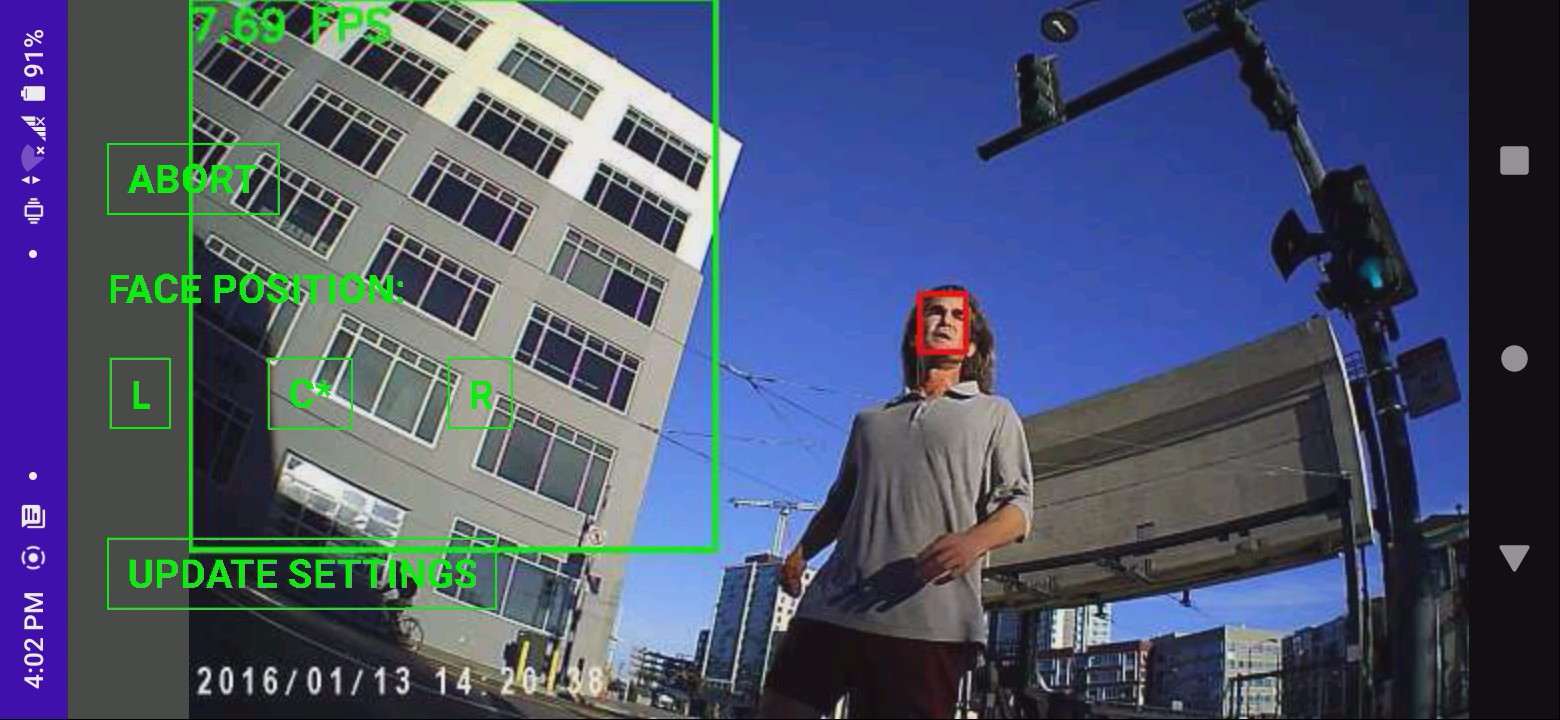

Opencv face tracking is split into face detection & face recognition. Face recognition goes at 3fps while face detection goes at 7fps, so just the largest face is tracked. There is an optical flow step which keeps tracking the same face for a short time if a larger face appears. Optical flow works better at higher frame rates, but the best the pi can do is 7.8fps. Further optimization for slower modern confusers involved scaling down the video to 640x360.

The gopro 7 delays its HDMI feed by 2 seconds, so a webcam is dedicated to face tracking. It's lighter than running an HDMI cable to the gopro, but it's very cockeyed. Some experimentation with the webcam height & angle is required.

An enclosure is required to keep other junk from smashing the face tracker & motor driver.

The mane problem with this is the evil USB connector glitching.

The raspberry pi runs an access point & the phone runs an app to control it. There's a text file with low level configuration parameters.

The cockeyed webcam & low resolution make face tracking pretty bad in the corners. The face detector is not rotation invariant & there's not enough resolution to do an equirectangular projection. The bottom center would have to get smaller or the top corners would have to be cropped instead of cockeyed. Frame rate could be sacrificed to get more resolution. There's also the matter of how much a lion should invest in replicating what surely some Chinese robot can do.

Practical tests could mostly track in ideal lighting & even in some hard backlit lighting, though backlit lighting was less robust. Face detection dropped out for a single frame so often that optical flow was manely useless.

The mane problems were indian burial grounds causing spurious face detection.

Cases where the lion wasn't detected while another face was, on top of indian burial ground interference. Face recognition would be a big hit, if there were embedded GPUs.

Cases where it should have detected but missed abounded. These might be from rotation.

Less ideal lighting, but still rotated. The L & R positions were worthless because the amount of rotation caused by a face on the left & right made it always drop.

Power consumption was 330mAh/mile without any speaker or headlights.

There are alternatives to the opencv face tracker. While embedded GPUs are no more, Intel is producing a USB stick.

YOLO can detect an entire body at 5fps with the USB stick.

https://www.pyimagesearch.com/2020/01/06/raspberry-pi-and-movidius-ncs-face-recognition/

Face recognition with the USB stick goes at 6fps.

Changing vision algorithms is like writing an mp3 player in 1995. It's entirely locked into 1 piece of hardware & there's no abstraction.

-

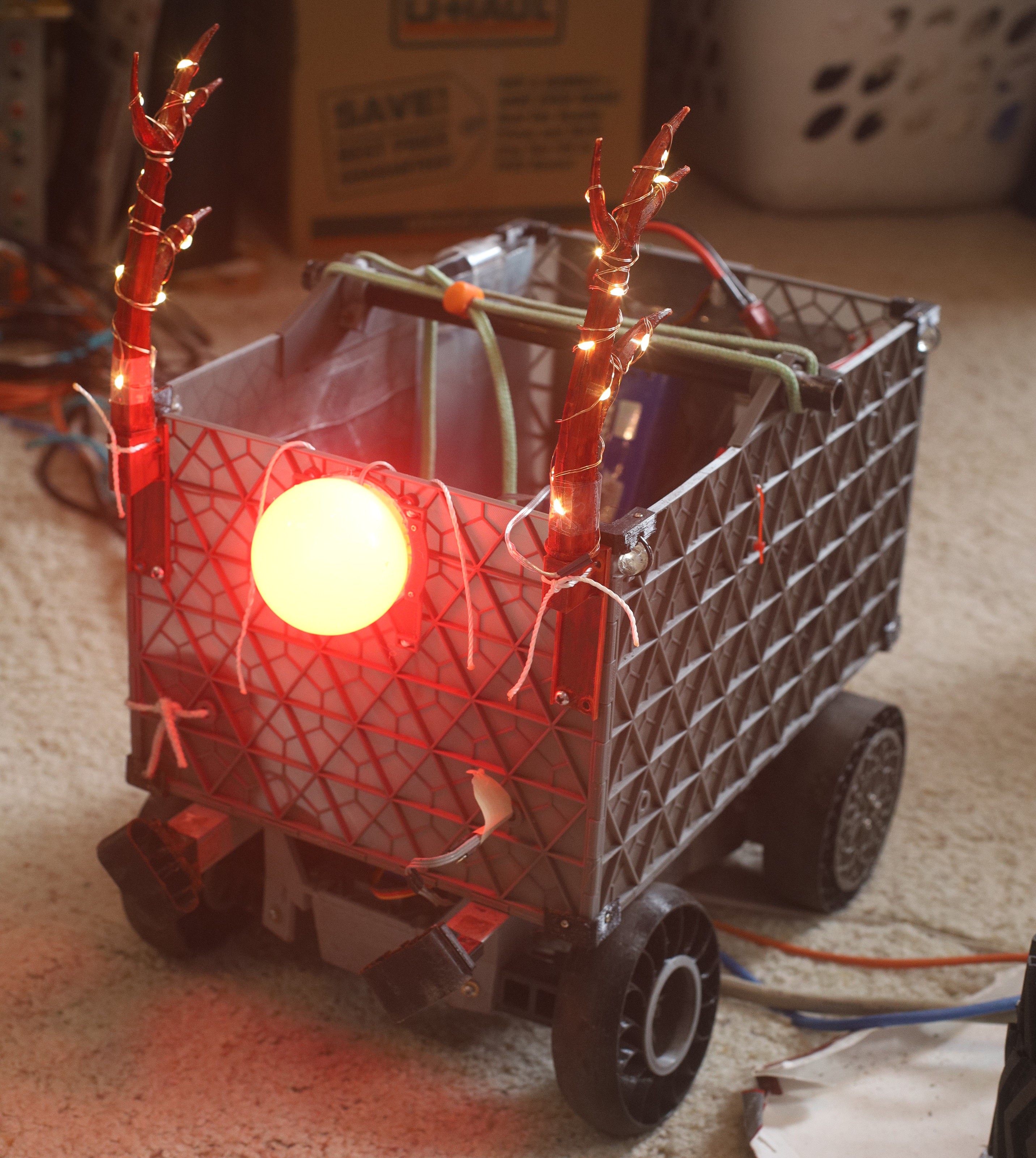

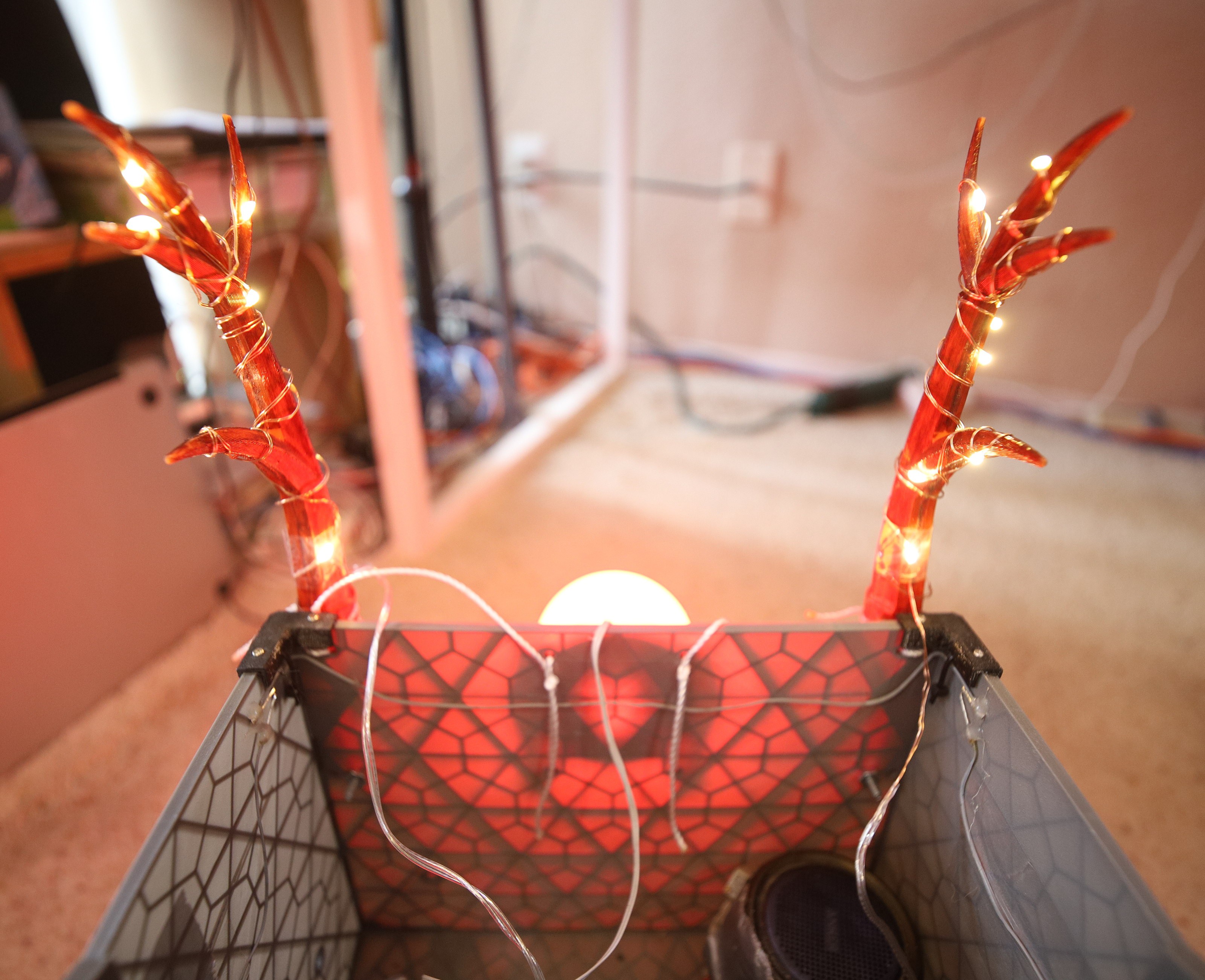

Rudolf summary

12/22/2021 at 00:44

The rudolf gear came off right after xmas. In reality, it could have stayed on until new years day. It was better than any of the SUV decorations lions had seen for 20 years. The key was that string of 3V LEDs which was bought on a whim at Homeless Despot. No SUV has ever had lit antlers. It ended up quite easy to take down the antlers on the road. They never fell out on their own as feared. The solid core wire never broke, but they could have used a few wrappings of stranded wire.

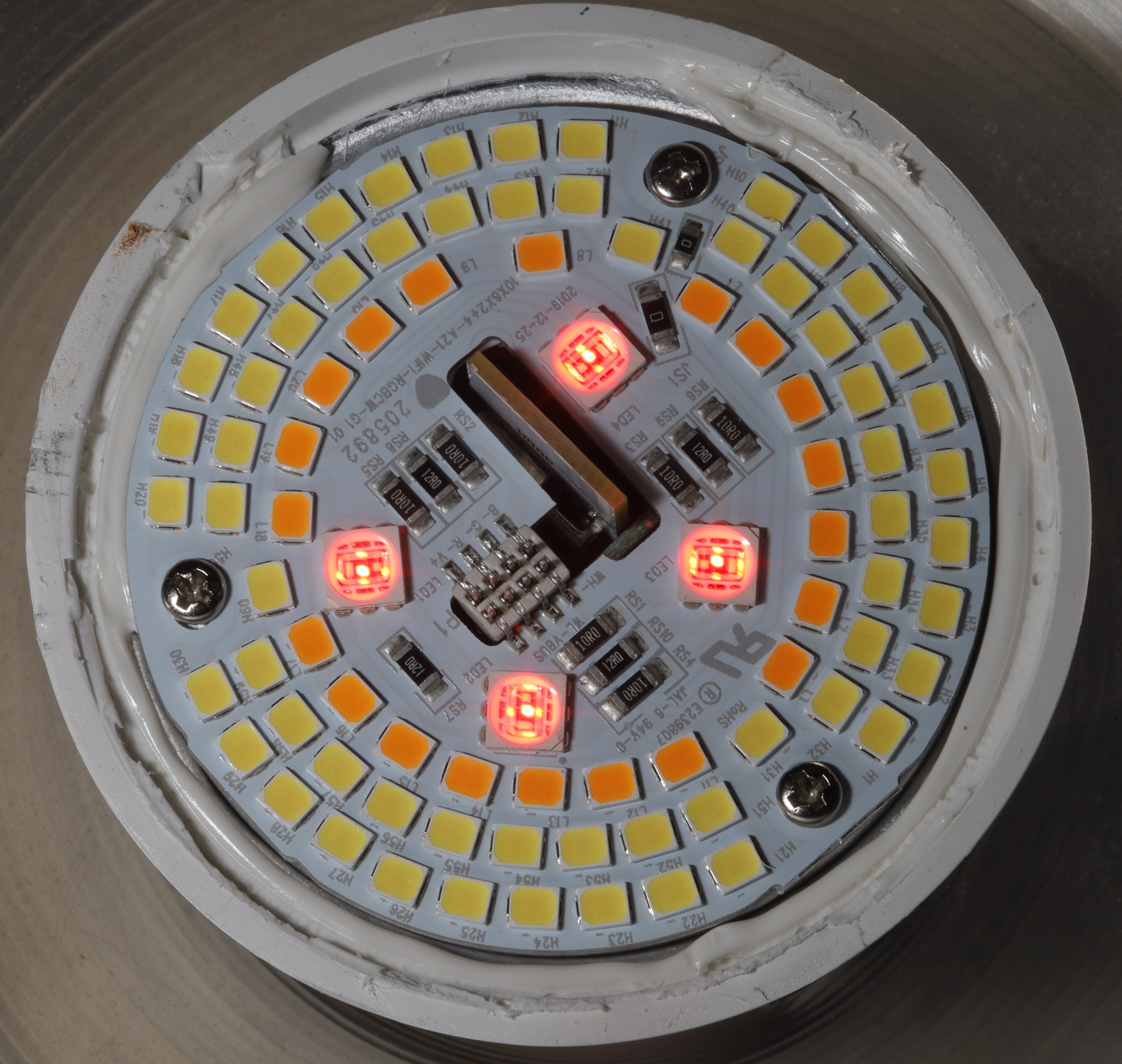

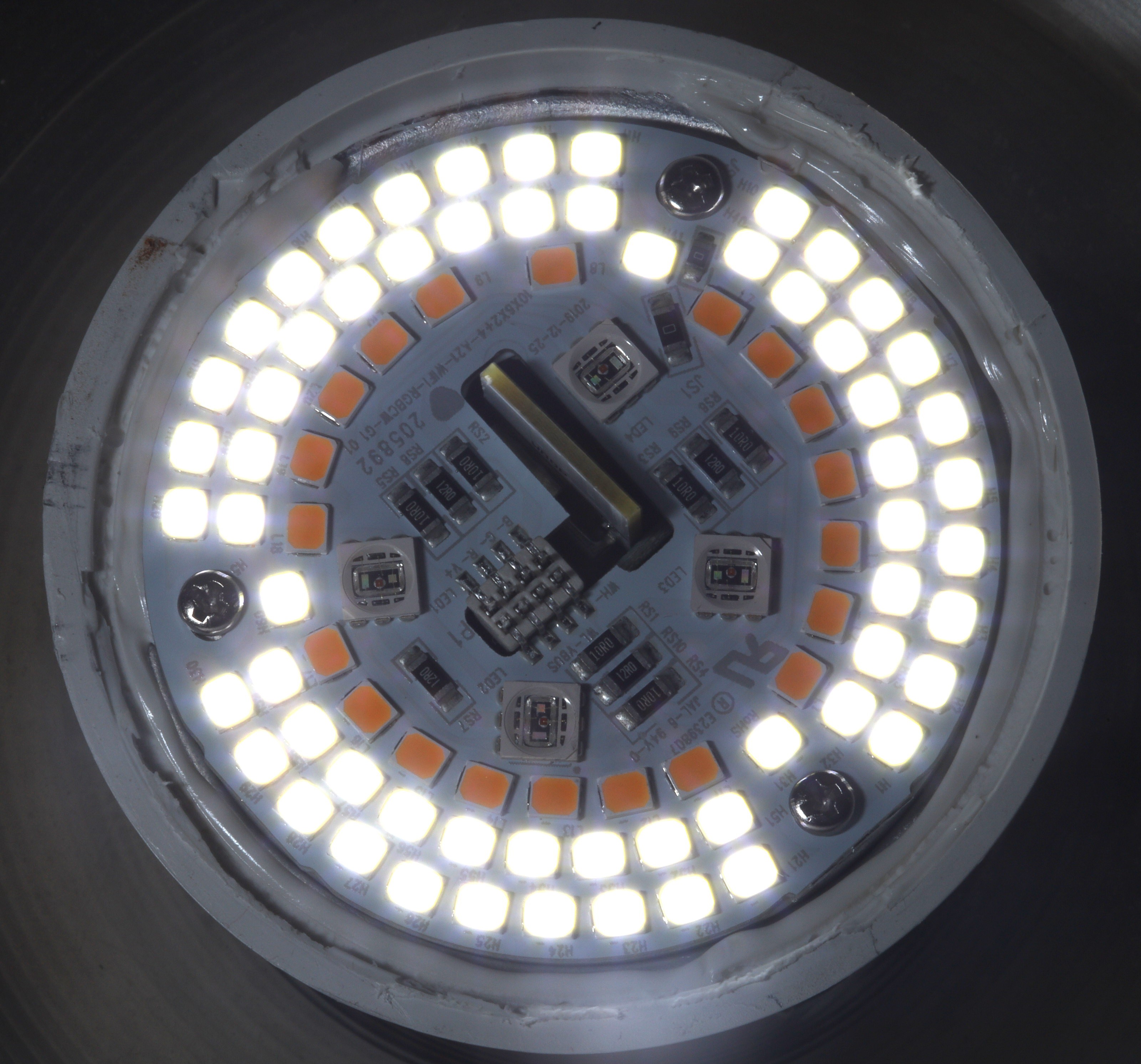

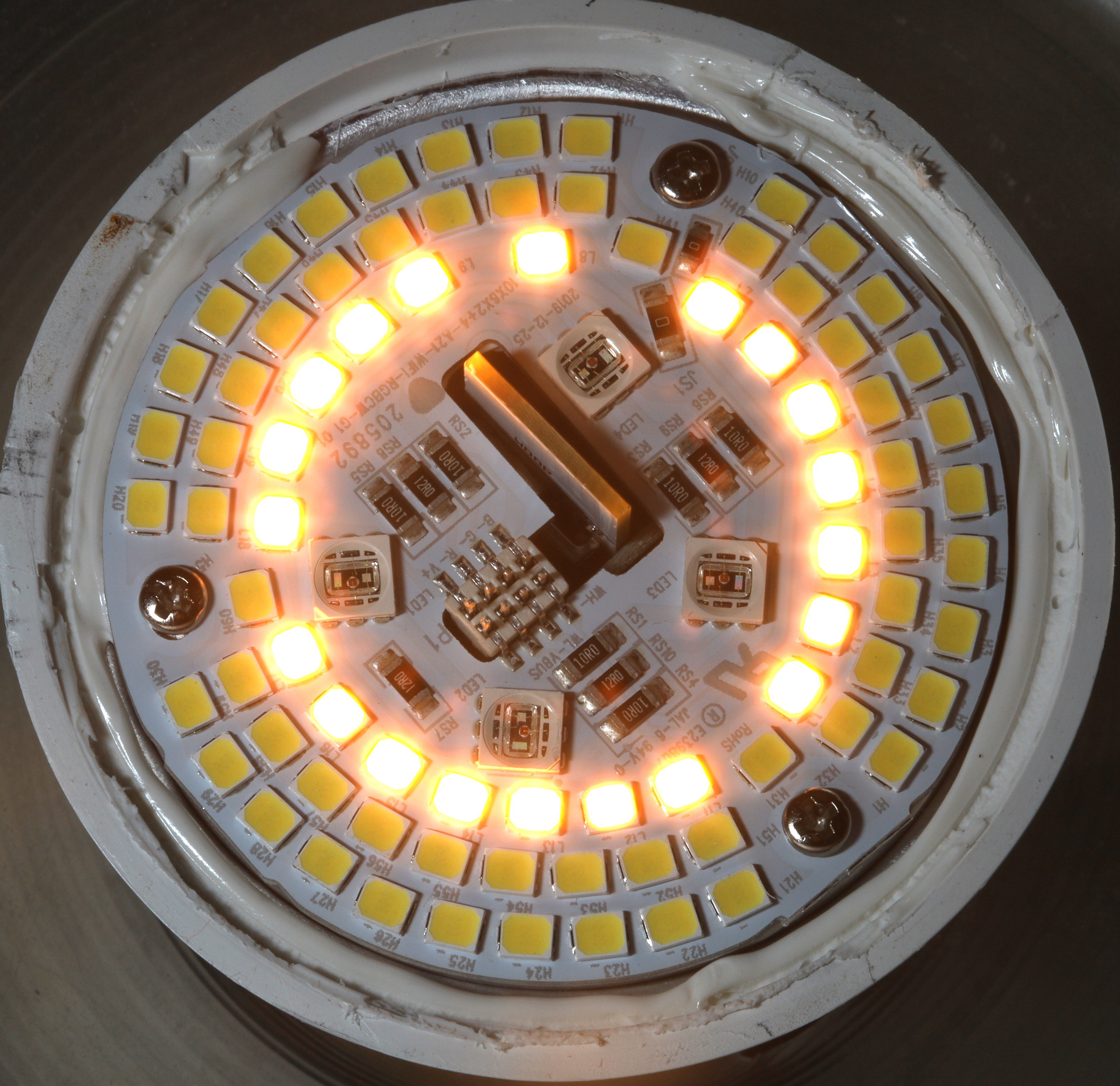

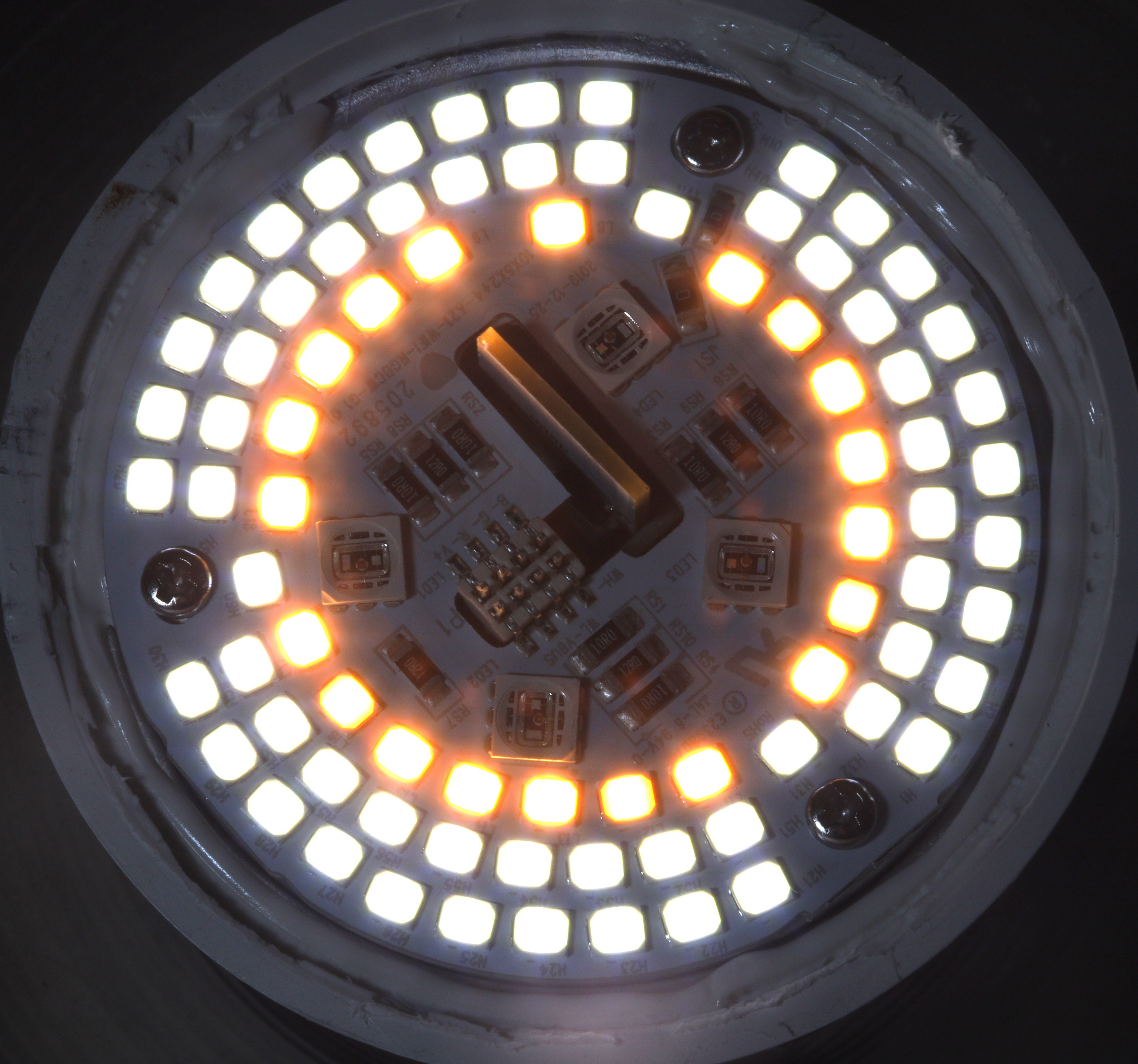

Then there was the 15 mile run to Lowes to find a cheap red light bulb, the brief taste of rich millennial gootuber opulence before tearing it apart, & the discovery that RGB bulbs don't produce their rated brightness from colored LEDs.

The only thing which might improve it is sleigh bells & even more red LEDs.

Something must be done to make the paw controller chafe less. The 23 mile run was brutal.

-

Steering update

12/17/2021 at 05:47

Steering problems continued after the trace fix.

https://hackaday.io/project/176214/log/197699-death-of-pla-retaining-rings-servos

These mounted as the weather cooled. The next idea was corrosion in the paw controller.

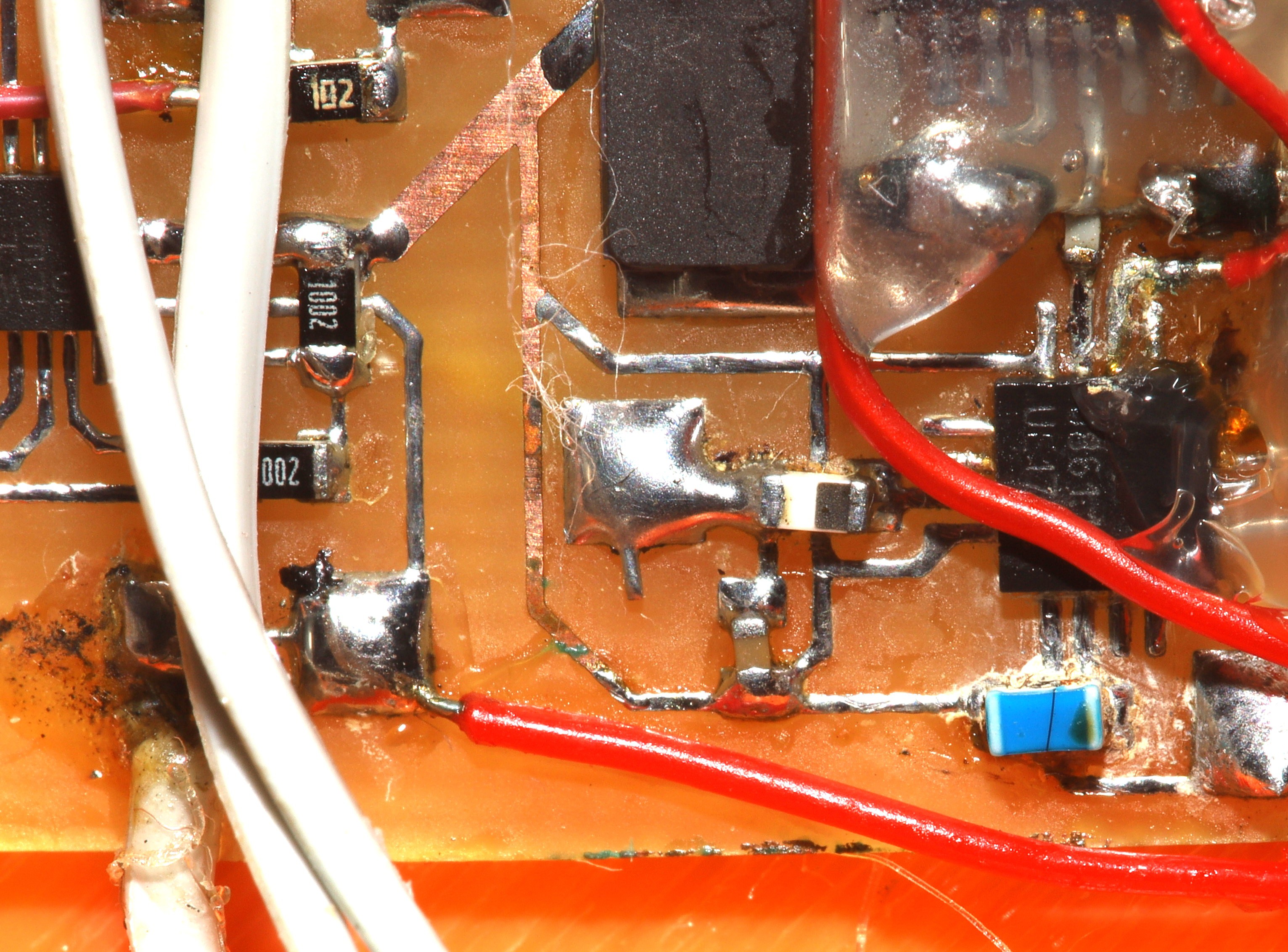

That didn't fix the problem, but obviously wrapping it in tape didn't stop the corrosion. Progressive failure of steering continued as the weather cooled. After a momentary loss of servo control, it regained its original heading, so the IMU was still working & the paw controller wasn't causing it to deviate. It always failed in higher elevations, never on the valley floor. That left a failure in the servo or mane board. The next idea was cleaning the mane board.

There's no reason for cleaning the mane board to make any difference because it wasn't affecting any other signals. The servo was rated as waterproof.

Another 30 miles in the cold damp didn't have any steering problems, so cleaning the board seemed to buy it some more time. Clenching PLA for 4 hours made the lion paws mighty raw.

-

New tire

11/13/2021 at 07:49

The last tire was real fragged.

So along came another one at 230C, with a 1.6mm tread. It still had voids. Some guys leave their filament on an enclosed print bed for 5 hours. Another thing which might improve layer adhesion is breaking up the smooth tread with slight ridges. A smooth tread still has the best traction with TPU.

As rough as printed tires are, it pays to remember what lions did before 3D printing. Those were desperate times.

-

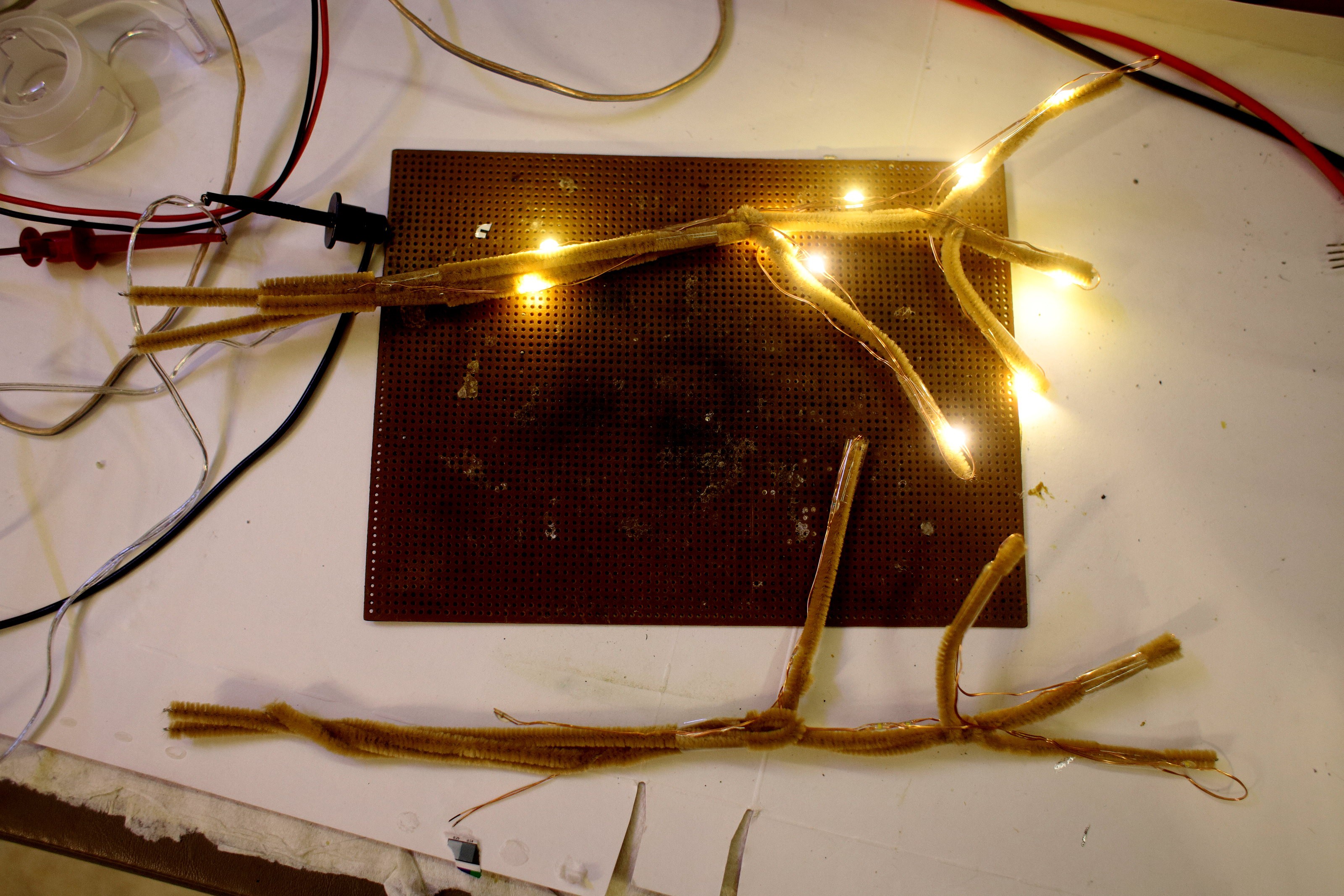

Antlers

10/30/2021 at 03:05

After much anguish, new antlers rolled off the printing press like an economic stimulus package. There was another fruitless search for cheap commercial options. A design was reached which didn't need support or infill & could be easily popped off in the field.

Orange PLA with a brown sharpie made a surprisingly realistic woodgrain texture. The lion kingdom couldn't afford to dunk it in wood stain. It was surprising to find brown sharpies still in existence, in a time when 31 flavors of ice cream are the enemy of equality.

They went in series, off of 5.5V. The original package ran on 2 AA's. The next step was the nose mount & final assembly.

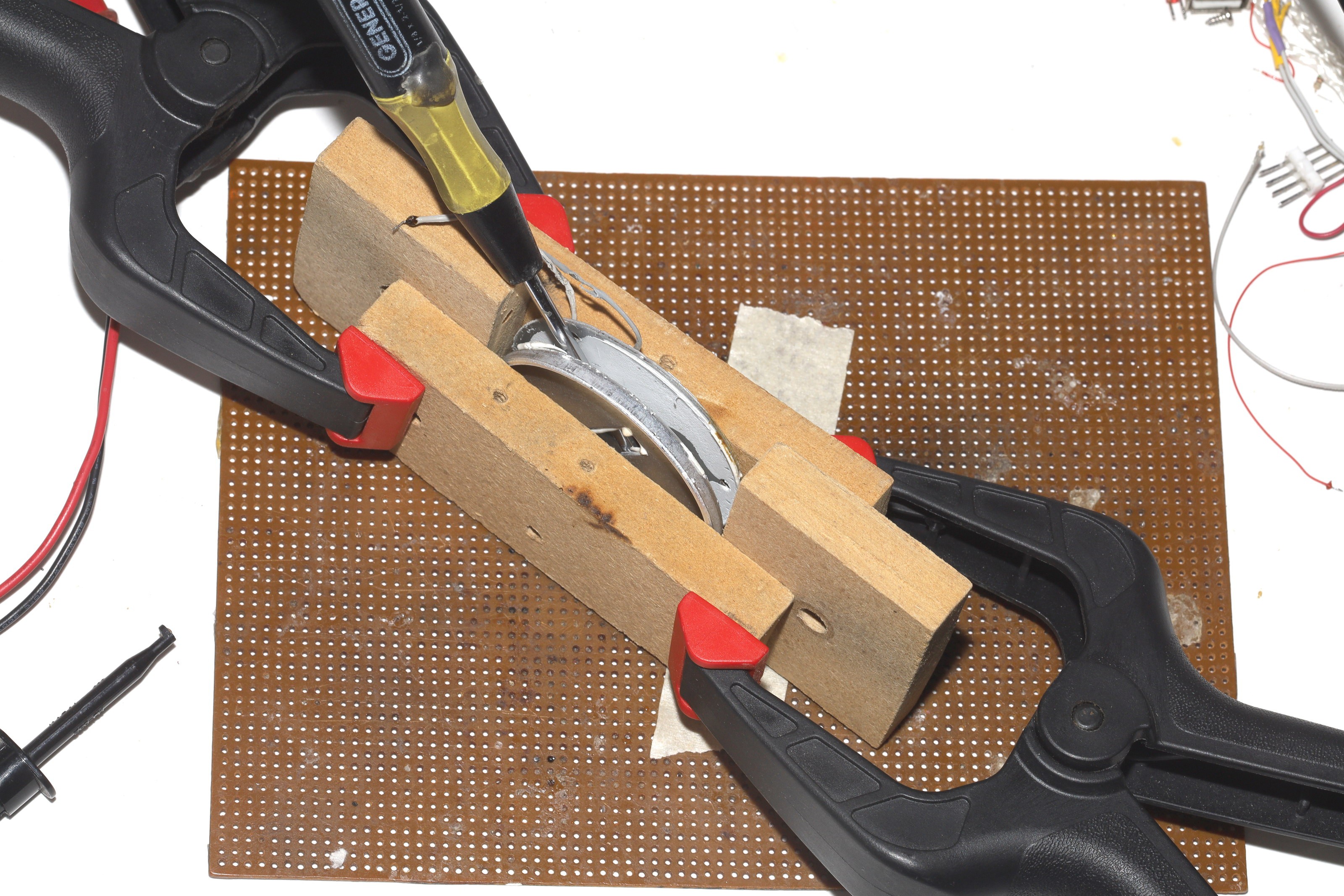

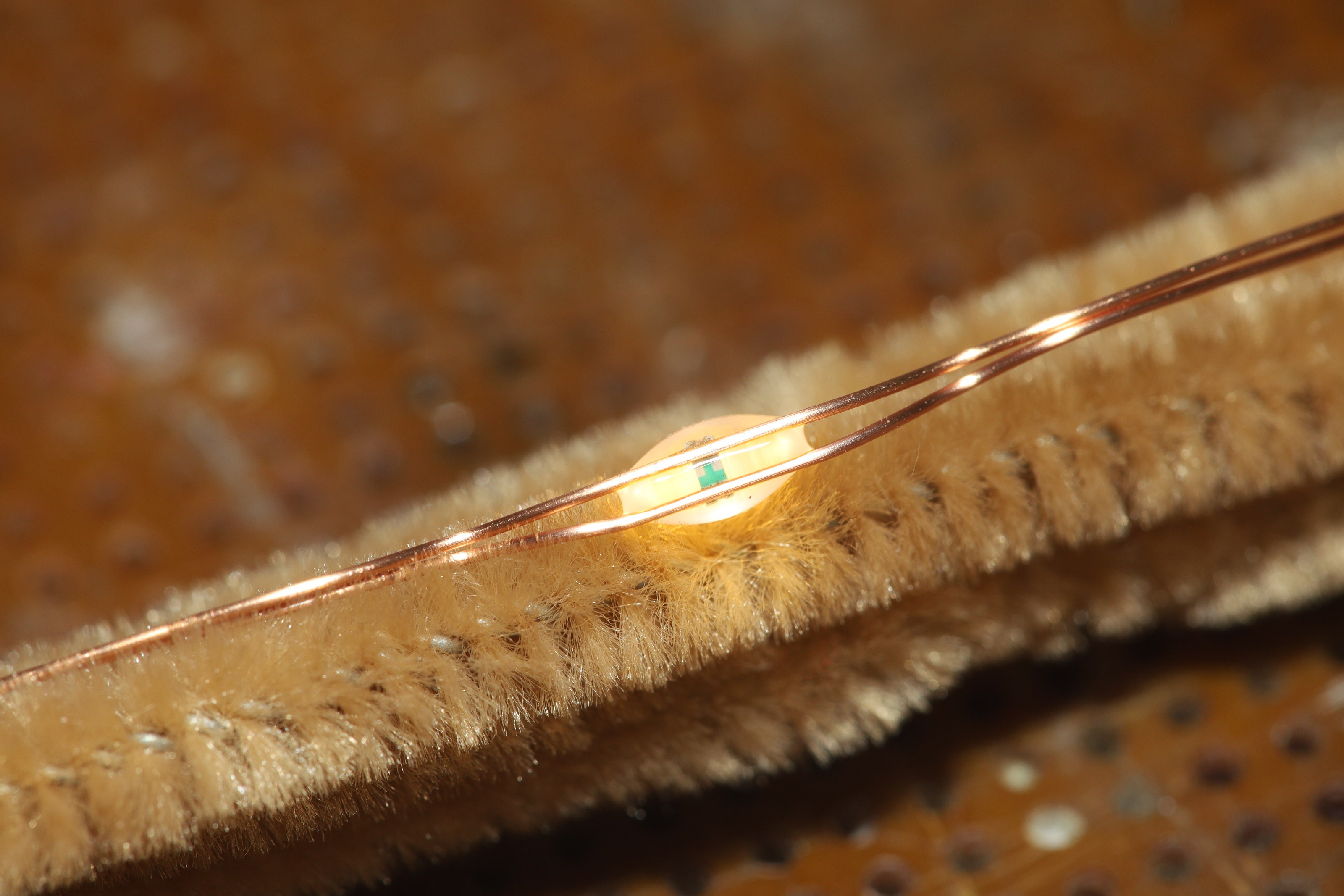

Heating to 280C in a special jig managed to release the selastic just enough to pry off the heat sink.

The new nose mount could use a lot of changes to make it easier to screw in the clamps & use string instead of zip ties.

It was designed in a time when 25 zip ties were $1. 3mm zip ties aren't even made anymore. A better design would use bolts.

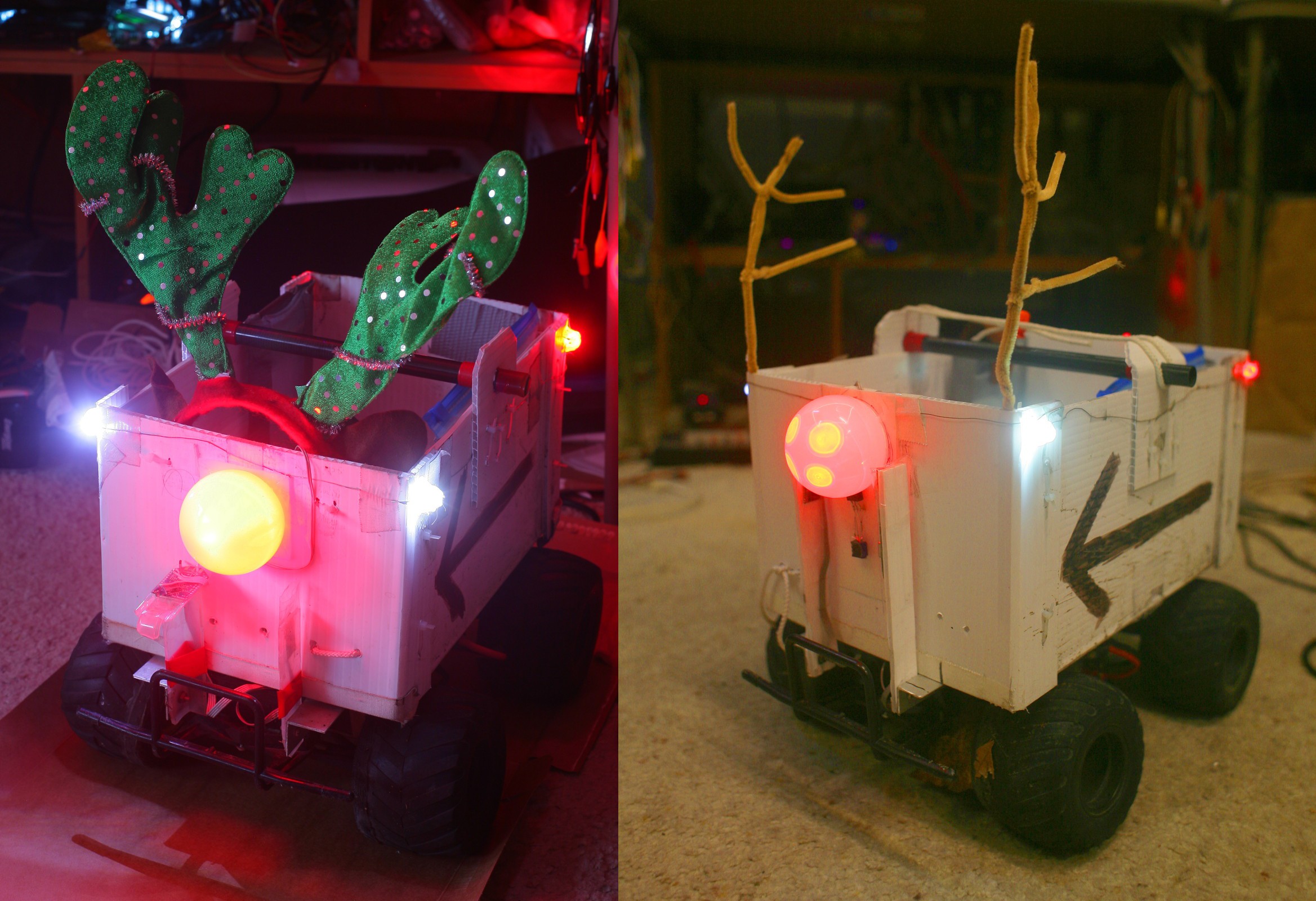

Every iteration of robo rudolf gets better & this year was definitely a big leap.

-

Rudolf update

10/26/2021 at 04:31

The decision was made to electrify the antlers. The copper is so heavy, it might be time to replace the pipe cleaners with copper or orange PLA. Brown is just dark orange.

These cheaply soldered, unregulated battery powered LEDs were $4 at the homeless despot, but the same hot snotted, unregulated, battery powered goodness can cost up to $30, depending on length.

Brightening the nose is a harder problem. 4 10mm LEDs in a diffuser aren't bright enough.

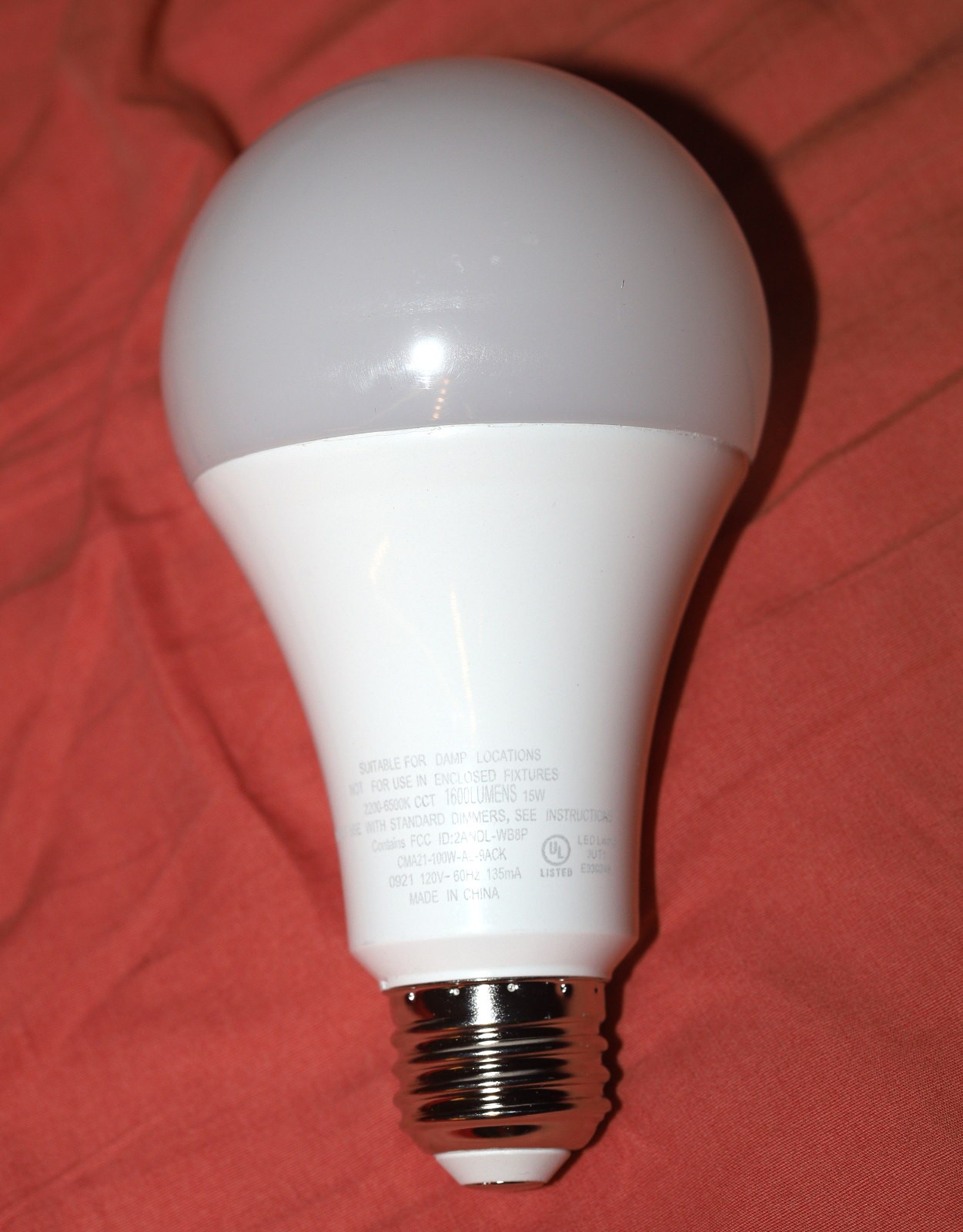

There was enough desire to immerse the trail in bright red like the movie to spend more than the minimum but not $50. Thus arrived the $15 methusela of lightbulbs, the 100W equivalent RGB Cree.

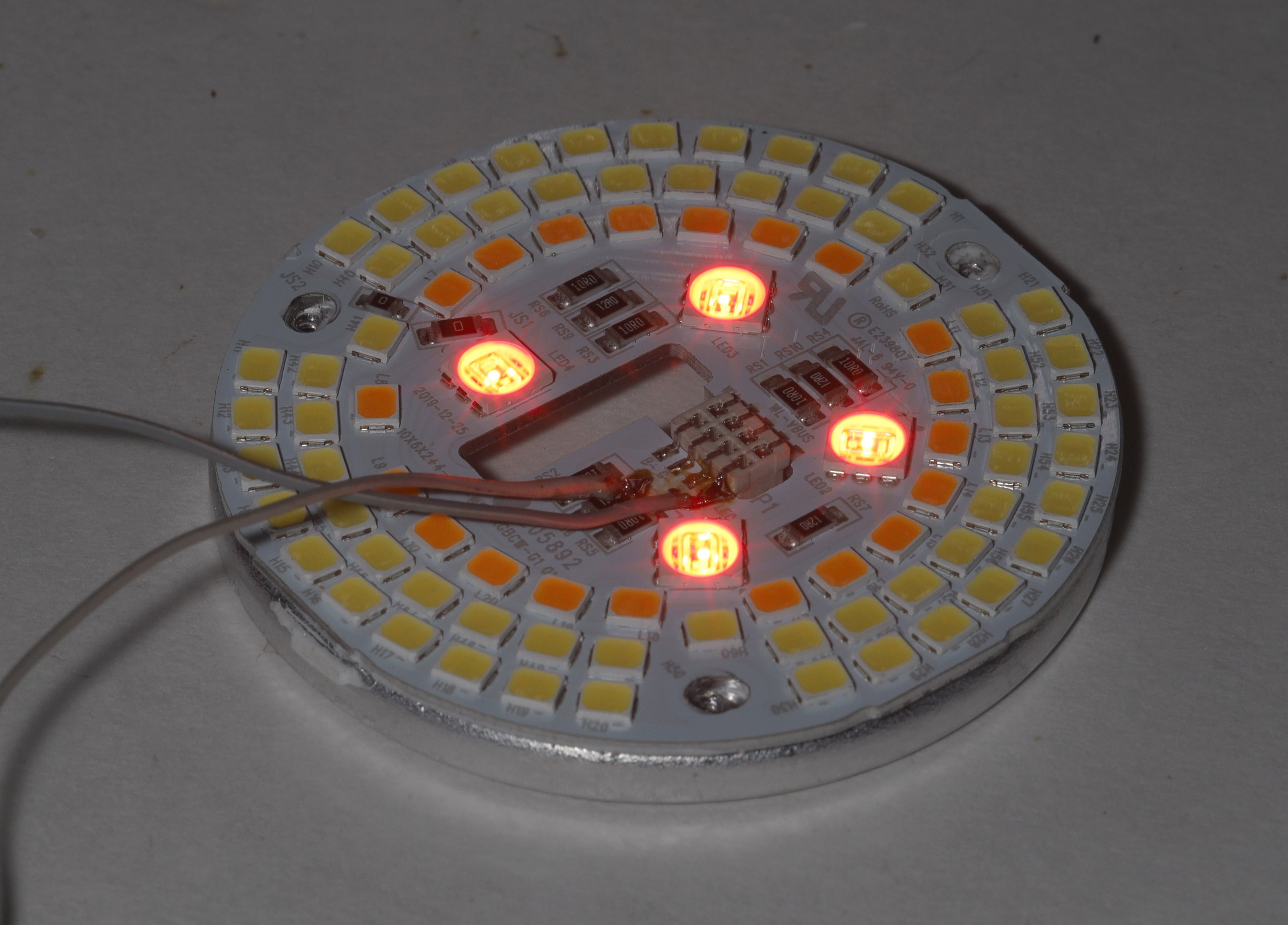

After much onboarding, passwords, & configuration, it was compared to the lion kingdom's previous 4 LED nose with conventional bulb glued on.

It became clear that the brightness per area is the same, but the methusela outputs more light by lighting a larger area than a standard bulb.

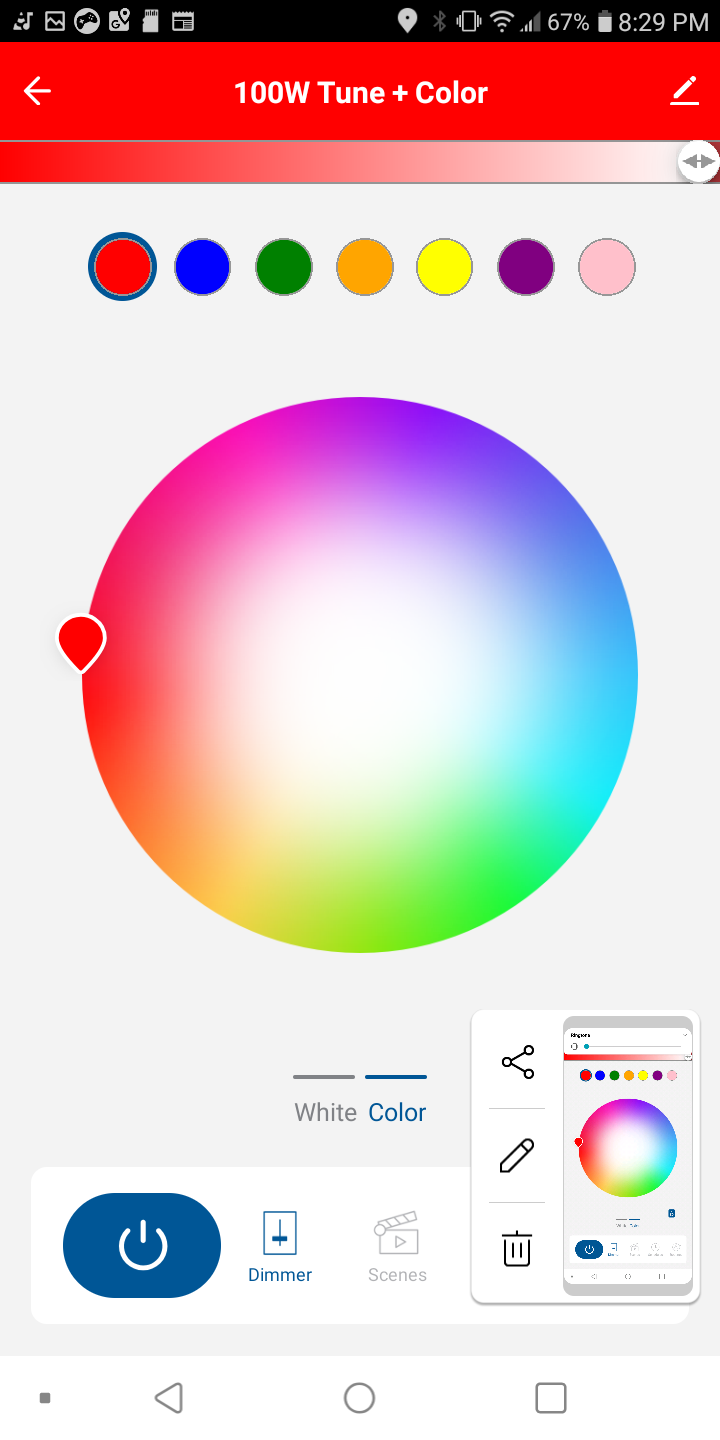

The app is an extremely painful way to change colors. It's obviously intended for someone who wants the light to change color based on time. Despite all its features, there's no simulation of a fire, a candle or a strobe. It's just aimed at a multimillionaire homeowner or rich gootuber.

It's a lot brighter than the house lights, but it's a lot more expensive, so lions can't complain.

The lion kingdom doesn't have any other use for it & already has a drawer full of unused spiral lights. Despite its cost, it's actually comparable to the $10 lions paid for the 1st spiral lights. Generation X was really schmick with its fluorescent spiral lights in 2001.

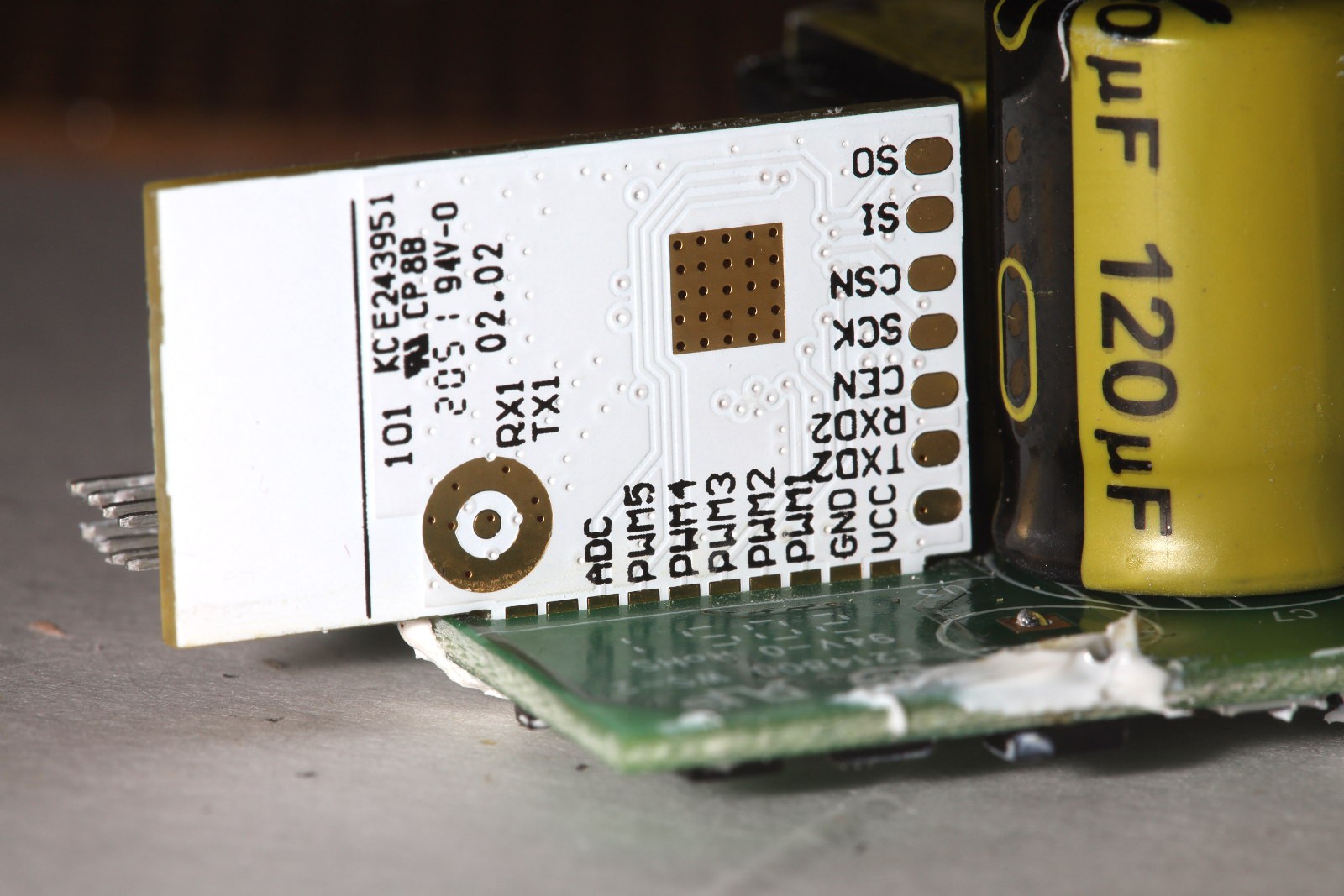

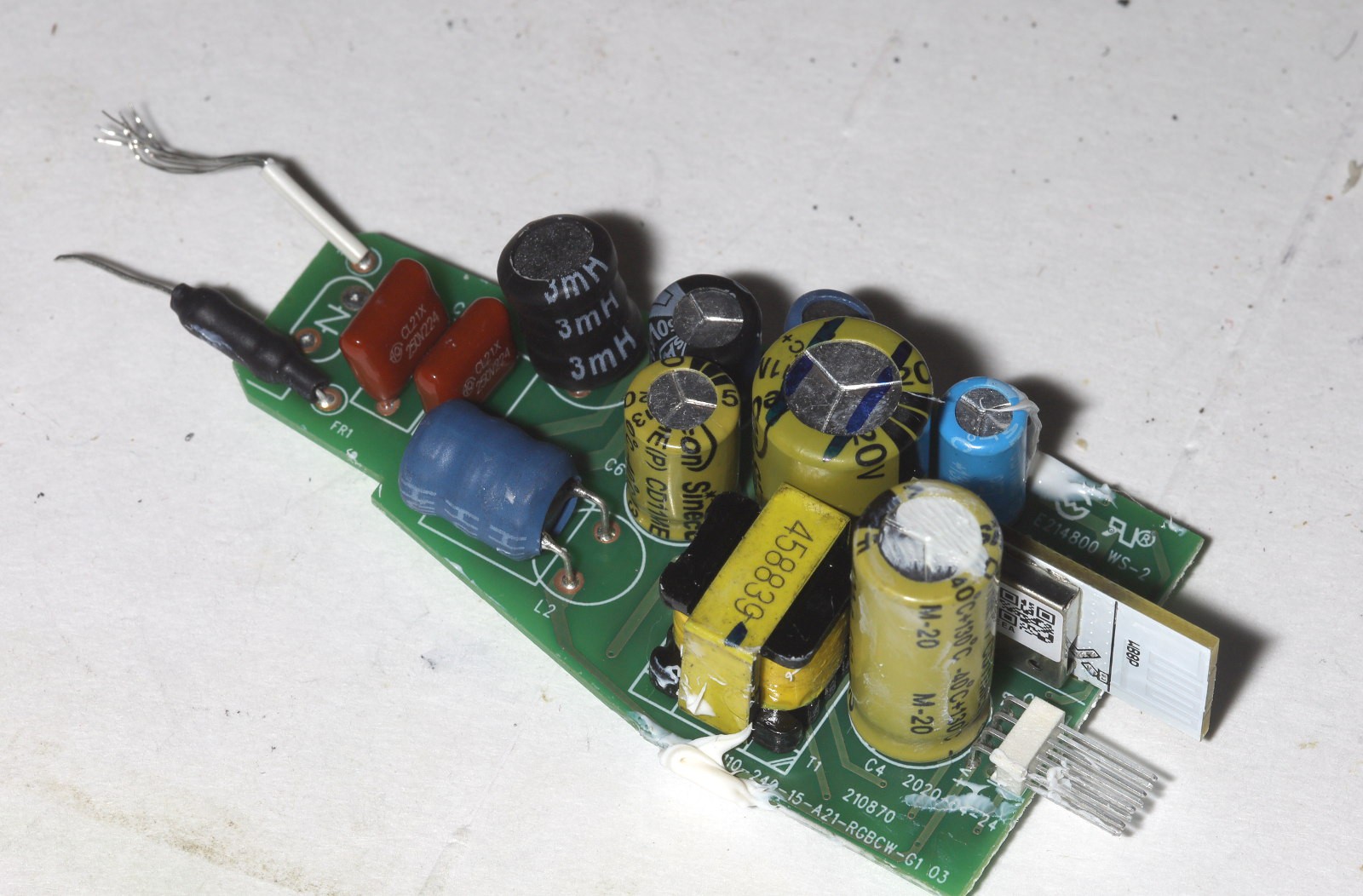

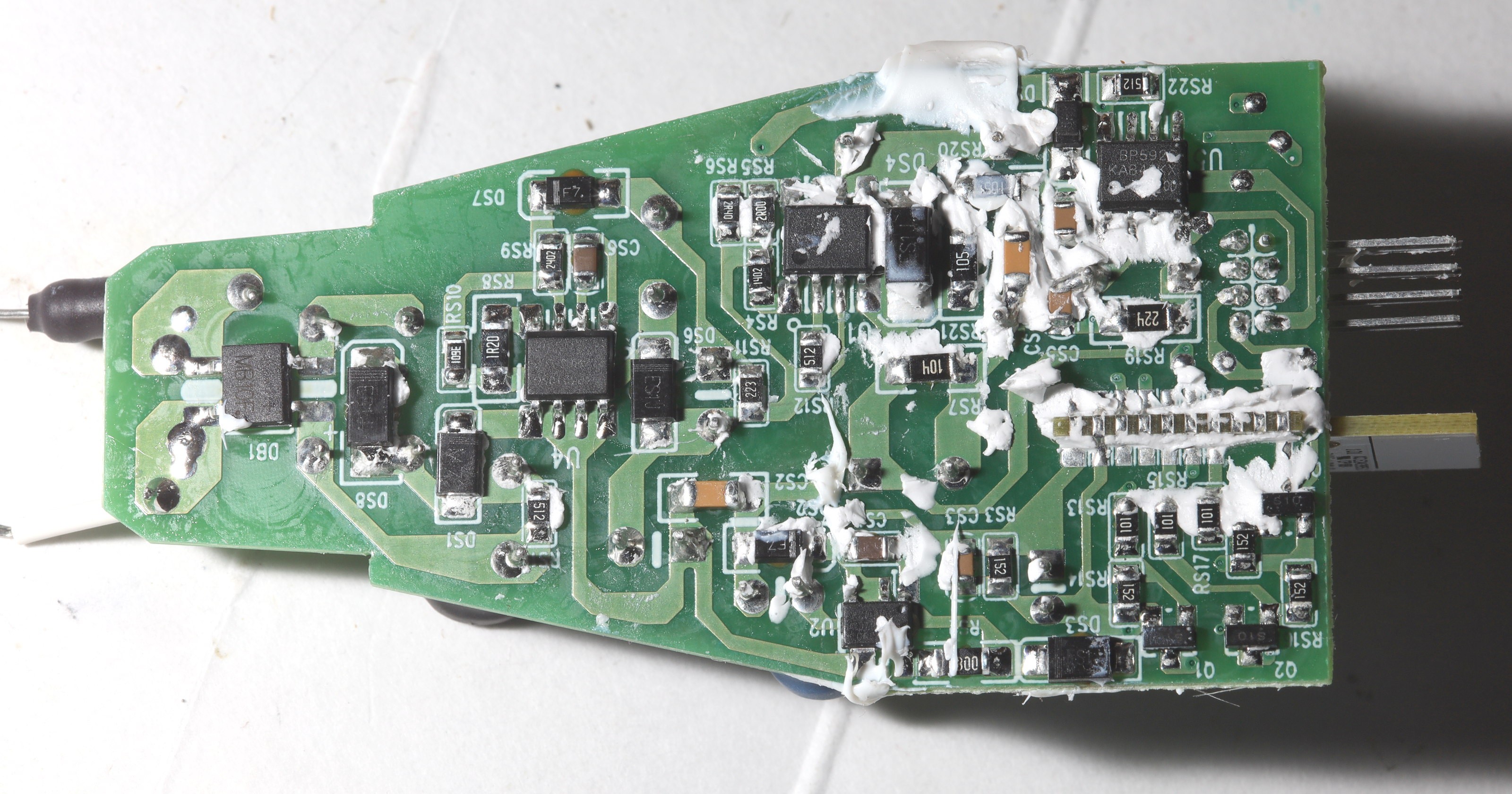

The teardown revealed it to be junk.

Only the 4 center LEDs are used in color mode. The white ones are only used in white mode, even if the color mode is white. The rated brightness on these is for white light only. Definitely not worth it for color, but still worth it for adjusting the temperature of white light. The sane way to get colored light is the good old snail mailed COB.

https://www.amazon.com/Chanzon-Inten...01DBZJZ7E

V+ - R- is 13.9 for full red

VBUS is 140 & obviously powers the white LEDs through WH- & WL-. It would not be possible to run it in white mode without an inverter.

The LEDs are glued on a heat sink which can't be removed.

The money being permanently spent, the only solution was to whack on the $1 dome & call an end to the brief taste of multimillionaire luxury.

The rich gootuber with the $50 Philips Hue lights (not sponsored) & the $1/2 million cabin:

-

Cyclops light

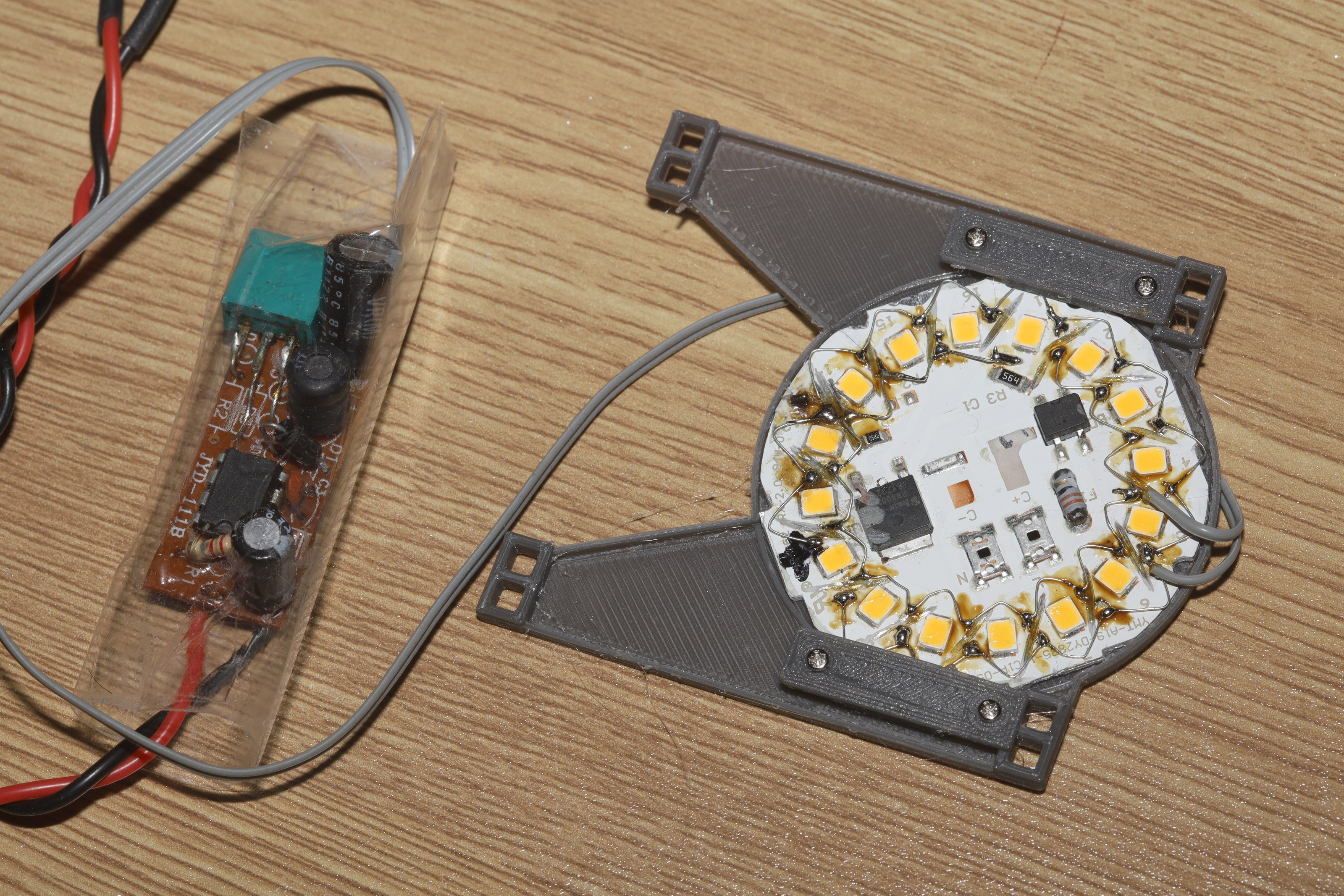

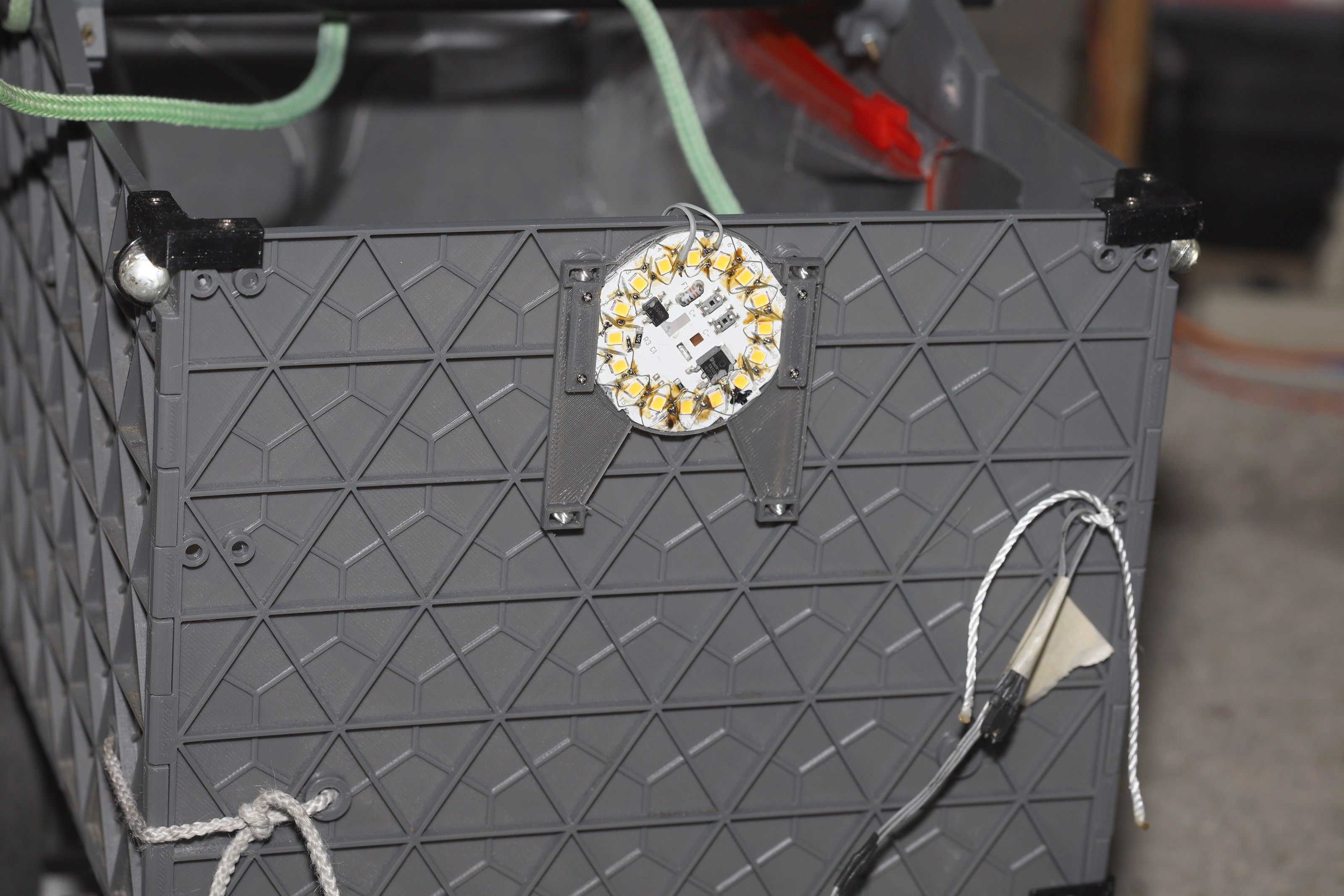

10/24/2021 at 03:30

After an unfortunate toe injury, the decision was made to install a very bright, removable light to try to improve the visibility of the path. A 60W LED bulb was converted to 8.2V 200mA.

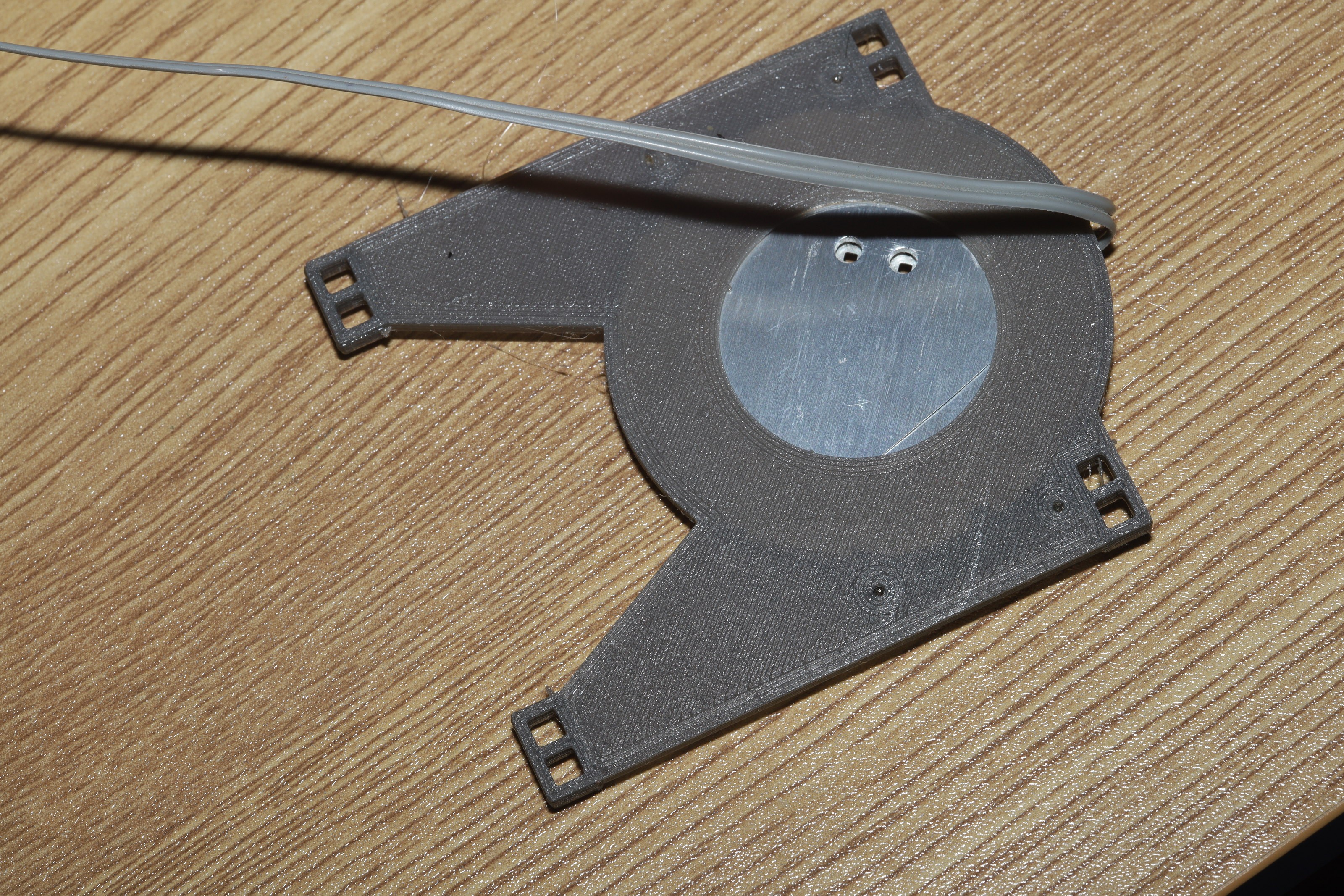

Then a heat resistant mount was printed out of PETG.

The prolific jump in zip tie prices has led to string.

It was hard to draw any conclusions in a parking lot. That was the last of the dry weather for 36 hours. The mane problem is that without a reflector, it's very diffuse.

lion mclionhead
