-

Reading a page of a book

07/20/2022 at 12:47

I started by getting the TX2 all set up: updating this and that and making sure OpenCV was available from Python 3. I chose reading a book as the first task to take a crack at and made some good progress, or at least I now know where I need to go from here. I also played a bit with saving images and depth maps from the ZED camera.

Improvements that I need to work towards-

Switch to an offline text-to-speech converter that sounds a bit more natural. Google is cool, but I have a nice processor onboard, and I would like Sophie to work even when the internet is down. You cannot comfort a child during a storm if you magically lose the ability to read the moment the internet goes out.
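Until an offline engine is in place, one interim option is to check connectivity before each request and fall back to a local engine when the network is down. The sketch below assumes that opening a TCP connection to a public DNS server (8.8.8.8, port 53) is a good-enough proxy for "the internet is up"; the backend names `"gtts"` and `"offline"` are hypothetical placeholders, not an existing API.

```python
import socket


def internet_available(host="8.8.8.8", port=53, timeout=1.5):
    """Return True if a TCP connection to host:port opens within `timeout` seconds.

    Assumption: reaching a public DNS server is treated as "internet is up".
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # connection refused, network unreachable, or timed out
        return False


def choose_tts_backend(online_check=internet_available):
    """Return the (hypothetical) name of the TTS backend to use right now."""
    return "gtts" if online_check() else "offline"
```

One offline candidate is pyttsx3 (`engine = pyttsx3.init()`, `engine.say(text)`, `engine.runAndWait()`), though it drives the system's built-in speech engines and may not sound any more natural than gTTS.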

Speed up the conversion time. If I crop the image to just the area with text, the time drops significantly, to about 2 seconds. I will keep working on this, though I have a feeling it will be a whole new ball game when the child is holding the book. I think the robot will need a lot of processing power just to stabilize the incoming image, then separate what is a book page from what is not, and then box those sections. I just need to start optimizing as I go; no kid (at least not mine) would wait 12 seconds to hear a robot read the first page, I'm afraid, lol.
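Since cropping to the text area cut the time to about 2 seconds, that crop could be automated. A minimal sketch, assuming dark text on a light page and a grayscale NumPy array like the one OpenCV produces; the `threshold` and `margin` defaults are untuned assumptions, and dark illustrations in a picture book would also count as "ink", so this is only a starting point.

```python
import numpy as np


def crop_to_ink(gray, threshold=128, margin=10):
    """Crop a grayscale page image to the bounding box of its dark pixels.

    Assumes dark text on a light background; `threshold` and `margin`
    are illustrative defaults, not tuned values.
    """
    ink = gray < threshold                   # True where a pixel counts as "dark"
    rows = np.flatnonzero(ink.any(axis=1))   # indices of rows containing ink
    cols = np.flatnonzero(ink.any(axis=0))   # indices of columns containing ink
    if rows.size == 0:                       # blank page: nothing to crop
        return gray
    top = max(int(rows[0]) - margin, 0)
    bottom = min(int(rows[-1]) + margin + 1, gray.shape[0])
    left = max(int(cols[0]) - margin, 0)
    right = min(int(cols[-1]) + margin + 1, gray.shape[1])
    return gray[top:bottom, left:right]
```

The cropped array can be passed straight to OCR, for example `pytesseract.image_to_string(crop_to_ink(blur_image))`, so Tesseract only scans the region that actually holds text.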

Pros-

- It works: it can take in a page of a book and convert it to speech, and it even does well at ignoring images (think kids' picture books)

- Did I mention that it works?

Cons-

- It is slow (on average it takes about 10-12 seconds to convert a page of text)

- It translates twice in this code, though that is more for debugging purposes

- It uses an online converter for text to speech

- It sounds a little too unnatural
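Before optimizing the 10-12 seconds, it helps to know which stage dominates (image load, preprocessing, OCR, or the Google request and playback). A small sketch using only the standard library; the stage names are arbitrary labels chosen for illustration.

```python
import time
from contextlib import contextmanager


@contextmanager
def stage_timer(name, timings):
    """Record the wall-clock duration of the enclosed block as timings[name]."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings[name] = time.perf_counter() - start


def report(timings):
    """Return (stage, seconds) pairs sorted slowest first."""
    return sorted(timings.items(), key=lambda kv: kv[1], reverse=True)
```

Each pipeline step can be wrapped, e.g. `with stage_timer("ocr", timings): text = pytesseract.image_to_string(img_rgb)`, and `report(timings)` then shows where the time goes.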

Here is the "get it working" code (there is much cleanup still to do, and the pipeline's debugging code is still in place):

```python
# By Apollo Timbers for Sophie robot project
# Experimental code, needs work.
# This reads an image into OpenCV, does some preprocessing steps, then feeds it
# into pytesseract. Pytesseract recognizes the words on the image and prints
# them as a string.
# It then does it again to pipe the text to the Google Text-to-Speech
# generator; an .mp3 is sent back and played at the end of the recognized text.

# import OpenCV
import cv2
# import pytesseract module
import pytesseract
# import Google Text to Speech
import gtts
from playsound import playsound

# Load the input image (imread returns None instead of raising if it fails)
image = cv2.imread('bookpage4.jpg')
if image is None:
    raise FileNotFoundError("Could not read 'bookpage4.jpg'")
# cv2.imshow('Original', image)
# cv2.waitKey(0)

# Use the cvtColor() function to grayscale the image
gray_image = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
# Show grayscale
# cv2.imshow('Grayscale', gray_image)
# cv2.waitKey(0)

# Median blur with a 3x3 kernel to knock out speckle noise
blur_image = cv2.medianBlur(gray_image, 3)
# Show median blur
# cv2.imshow('Median Blur', blur_image)
# cv2.waitKey(0)

# pytesseract expects RGB, but the blurred image is single-channel grayscale,
# so expand it with GRAY2RGB (BGR2RGB only accepts 3- or 4-channel input and
# raises an error on a grayscale image)
img_rgb = cv2.cvtColor(blur_image, cv2.COLOR_GRAY2RGB)

# First OCR pass: print the recognized text for debugging
print(pytesseract.image_to_string(img_rgb))
# Second OCR pass: make a request to Google to synthesize the text
tts = gtts.gTTS(pytesseract.image_to_string(img_rgb))
# save the audio file
tts.save("page.mp3")
# play the audio file
playsound("page.mp3")

# Close any windows opened by the (commented-out) cv2.imshow() debug calls
cv2.destroyAllWindows()
```

-

Sensors

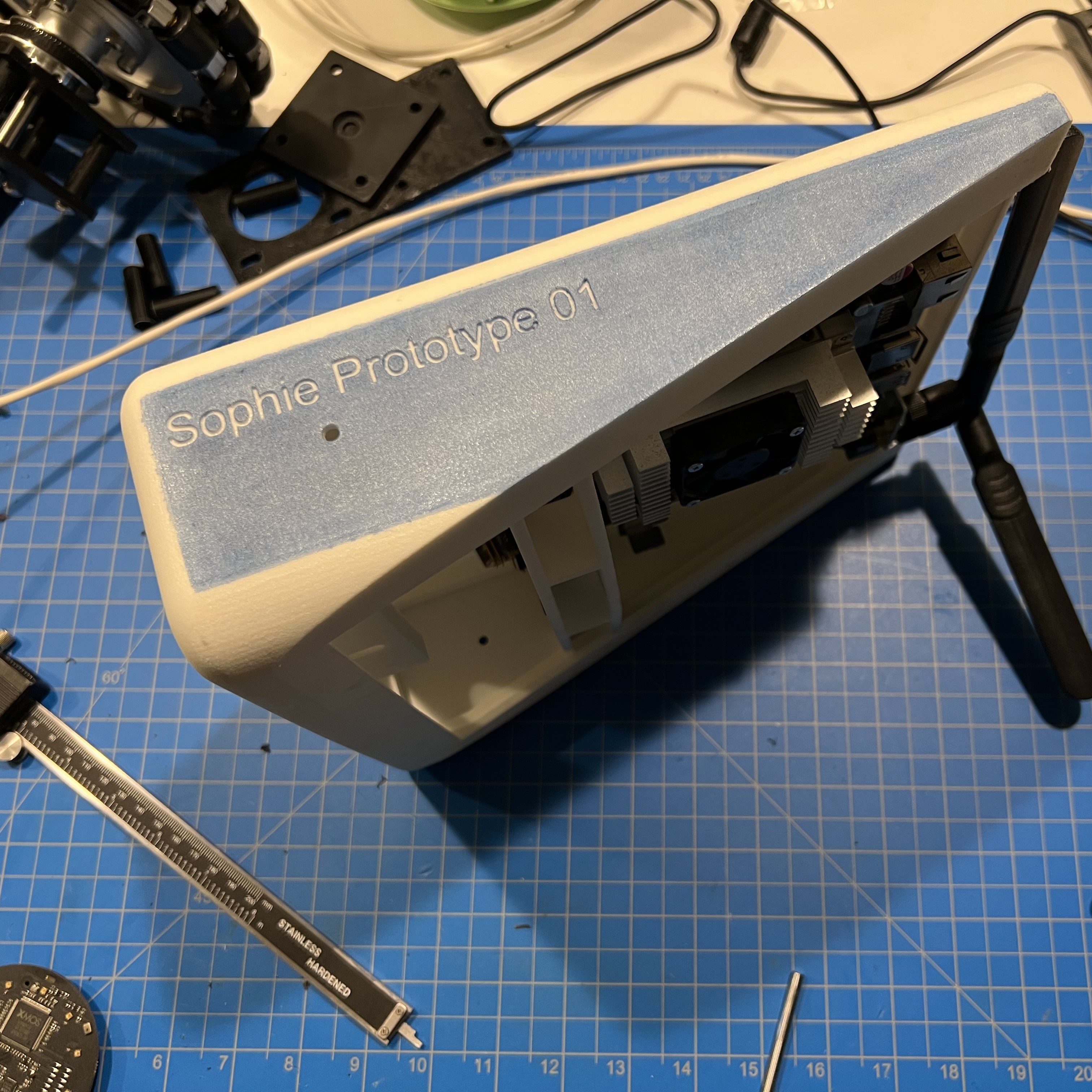

07/19/2022 at 02:22

The head got a bit of a dress-up to better match the original CAD design. I added the blue sides and then applied a clear coat to them.


The head will contain quite a lot of sensors.

Currently the head uses the original ZED, which provides only a stereo camera. When I upgrade to the ZED 2 (a very cool camera/sensor package), I should gain the following sensors. This should already take care of several to-do items, such as the IMU needed for localization, and allow indoor environment sensing using the barometer and temperature sensors.

Built into the ZED 2 -

- IMU

- barometer

- magnetometer

- temperature sensors

Additional sensors -

- ReSpeaker Mic Array v2.0 - far-field (4 mics)

- Knock sensor

- Mini encoder (will connect directly to the neck shaft and provide positional feedback for the soft actuators that power the head tilt)
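To turn raw encoder counts into head-tilt angle feedback, the counts need to be scaled by the encoder resolution. This sketch assumes an incremental quadrature encoder with a hypothetical 600 pulses per revolution read with 4x decoding, mounted directly on the neck shaft (gear ratio 1); the real part's resolution and any gearing would replace these placeholder values.

```python
def counts_to_degrees(counts, ppr=600, decoding=4, gear_ratio=1.0):
    """Convert encoder counts to neck-shaft angle in degrees.

    ppr (pulses per revolution), decoding and gear_ratio are hypothetical
    placeholder values, not the specs of a particular encoder.
    """
    counts_per_rev = ppr * decoding * gear_ratio
    return 360.0 * counts / counts_per_rev


def tilt_error_deg(target_deg, counts, **encoder):
    """Target minus measured angle; positive means tilt further in the + direction."""
    return target_deg - counts_to_degrees(counts, **encoder)
```

The error value is what a soft-actuator controller would act on, driving the head until it approaches zero.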

Long ago I bought a Seeed Studio ReSpeaker microphone array that should be possible to integrate, giving the robot the ability to hear far-field voices and sense the direction they come from. I played with it a bit on the Pi, and it was pretty easy to get set up initially. This should help the robot be more attentive to its environment and enable voice control. It could also turn toward the direction of a sound to respond better or to see/record whatever happened.
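If the array's direction-of-arrival estimate and the robot's heading are both expressed in degrees in the same frame (an assumption; reading the angle from the ReSpeaker is not shown here), turning toward a sound comes down to finding the shortest signed rotation between the two angles:

```python
def turn_toward(sound_deg, heading_deg):
    """Shortest signed rotation in degrees, in (-180, 180], from heading to sound.

    Positive means rotate in the direction of increasing angle.
    """
    delta = (sound_deg - heading_deg) % 360.0  # wrap into [0, 360)
    if delta > 180.0:                          # going the other way is shorter
        delta -= 360.0
    return delta
```

Wrapping the difference this way avoids the robot spinning 340 degrees one way when a 20-degree turn the other way reaches the same direction.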

I had another thought for a rather fun sensor: a cheap knock sensor (SW-2802 vibration switching element) could be mounted to the head so you could "wake up the robot" by knocking on its head. I'm not sure what it would do when you wake it up, but initially it could just say a random phrase from a programmed list (Hello, Did you need something?, I'm awake, I see you...). That would start giving it a basic personality.
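A vibration switch tends to chatter, producing several pulses per knock, so a short cooldown keeps one knock from triggering several phrases. The sketch below picks a random phrase from the list above; how the sensor pin is read (interrupt or polling) is left out, `on_knock()` is a hypothetical hook called on each pulse, and the 2-second cooldown is an assumption.

```python
import random
import time

# Phrases taken from the list above
PHRASES = ["Hello", "Did you need something?", "I'm awake", "I see you"]


class KnockResponder:
    """Return a random wake-up phrase per knock, ignoring pulses within `cooldown_s`."""

    def __init__(self, phrases=PHRASES, cooldown_s=2.0, clock=time.monotonic, rng=None):
        self.phrases = list(phrases)
        self.cooldown_s = cooldown_s
        self.clock = clock                      # injectable for testing
        self.rng = rng or random.Random()
        self._last = None                       # time of the last accepted knock

    def on_knock(self):
        now = self.clock()
        if self._last is not None and now - self._last < self.cooldown_s:
            return None                         # same knock still chattering: ignore
        self._last = now
        return self.rng.choice(self.phrases)
```

The returned string could then be handed to whichever text-to-speech engine the robot ends up using.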

-

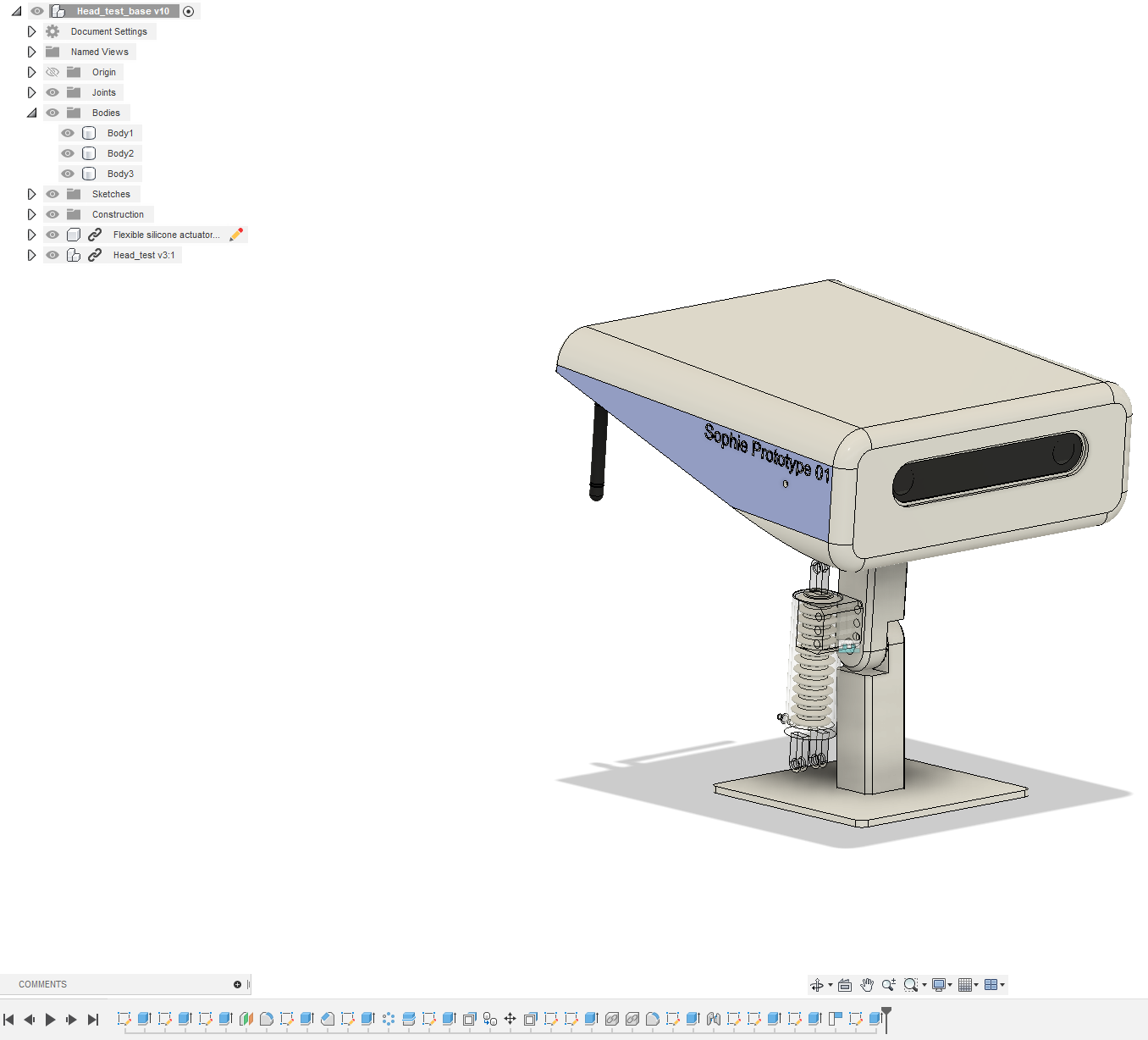

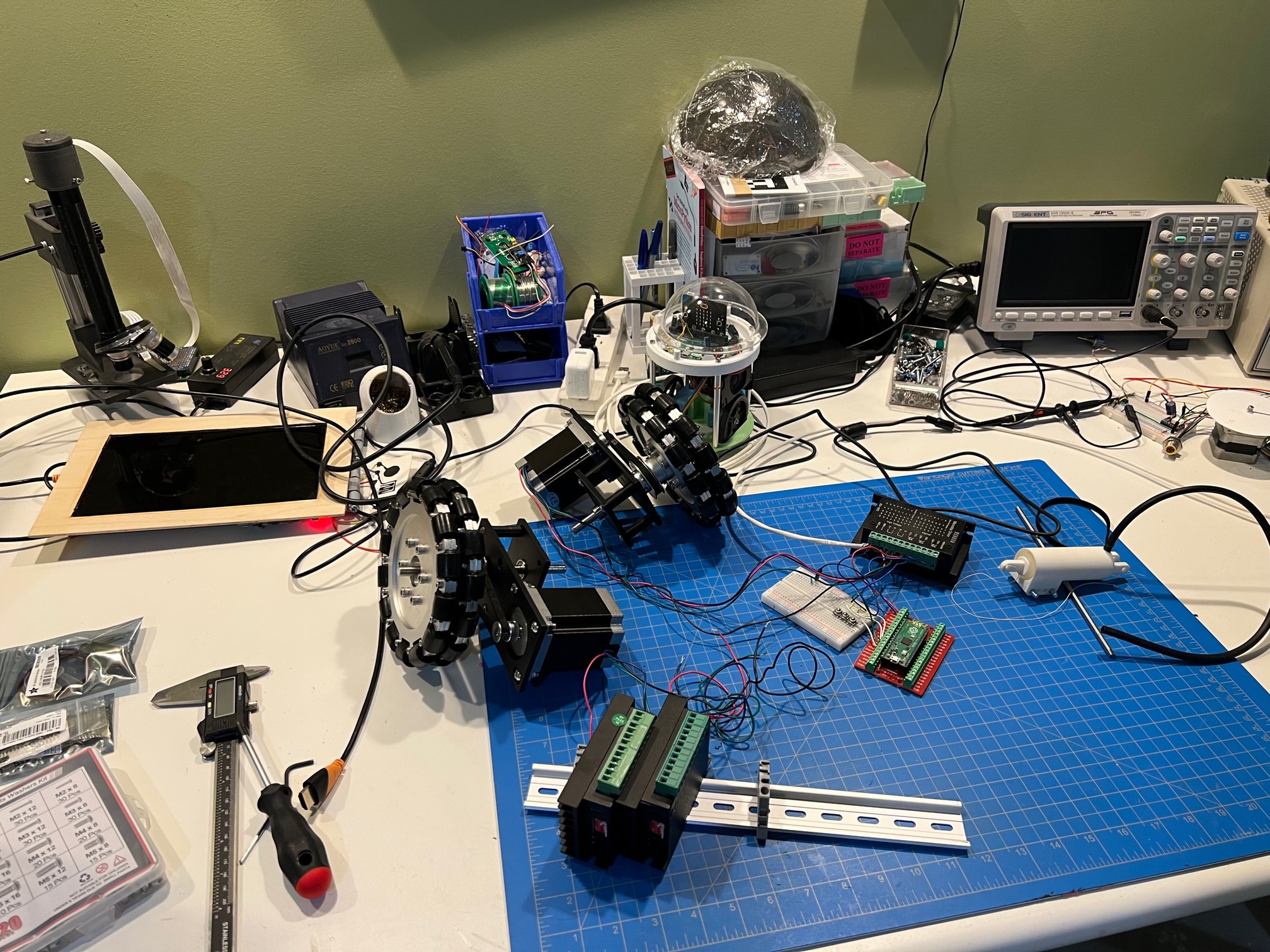

The Lab

07/17/2022 at 15:51

I cleaned up my lab a bit, well... mostly. I have a few ongoing and finished projects around, and thought I'd post some pictures of it. I was also able to get another drive unit going today, design the mounting solution for the stepper controllers, and finish the test bench head mount design. The mount will hold the head in the correct orientation so I can start hooking up the main computer to the control subsystem and begin programming. Next I need to make some progress here, likely by setting up a rudimentary vision pipeline and a text-to-speech program. (Then the robot should be able to respond to external stimuli by reporting information via speech.) See Fig 1.

Currently, I plan to 3D print the stepper mounts and then slide them onto a section of DIN rail. I also purchased some end stops to ensure everything stays in place. The DIN rail will be populated with the stepper controllers, an industrial USB 3 hub, and the Pi Pico, and will be mounted to the central extruded aluminum V-rail. I'm keeping an eye out for some nicer copper wire, as I will likely make a wire bundle to connect them to the Pi. Nice wire = expensive... :(

Lab photos Fig 2. and 3.

Fig 1.

Fig 2. and 3.


-

The build continues...

07/16/2022 at 17:21

I have been working on getting the 3 base drive units up and running. More on that soon. In the meantime, here is a bit of a progress update.

What has been done-

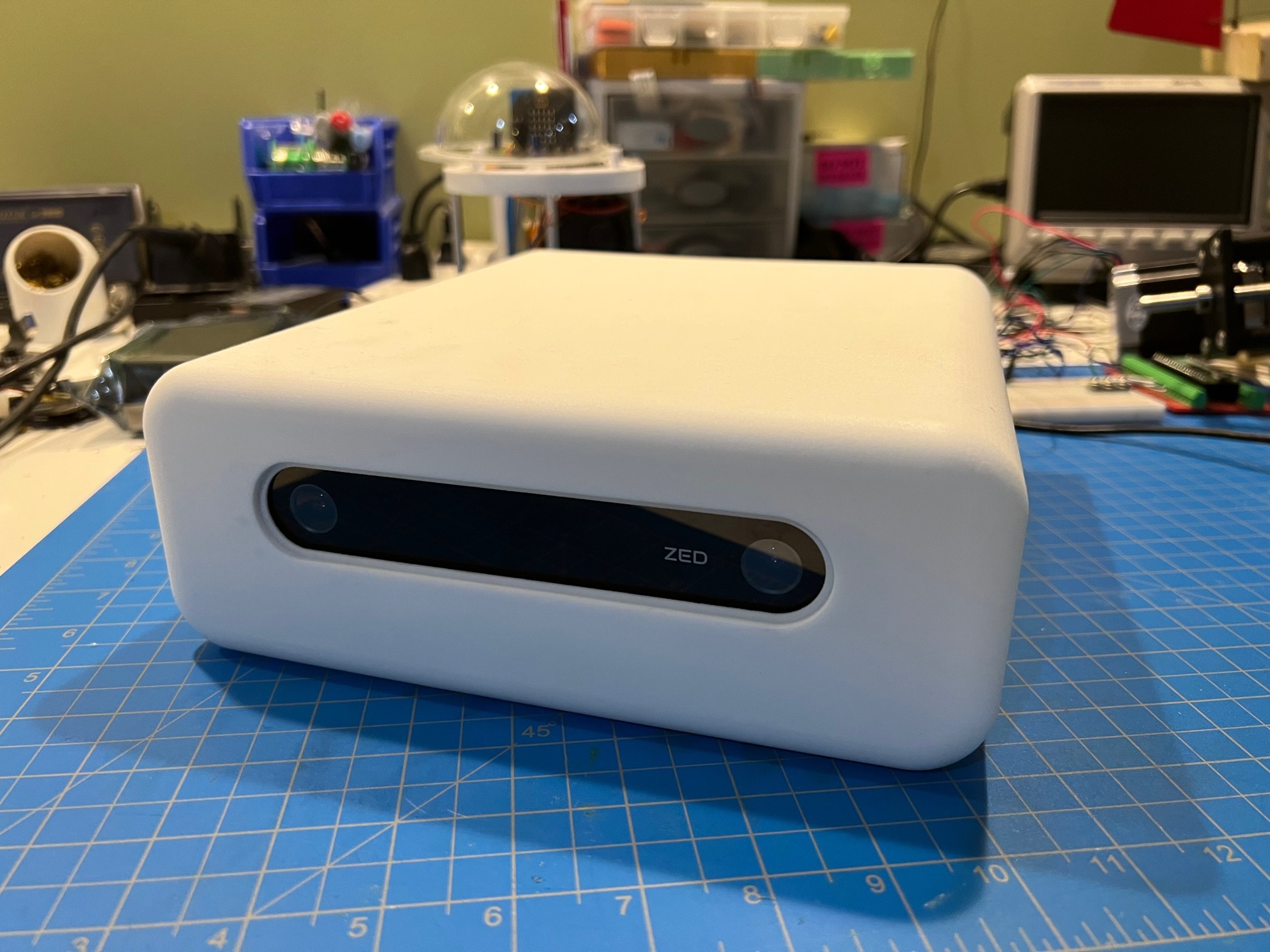

- The 3D-printed head is back from the third-party printing service

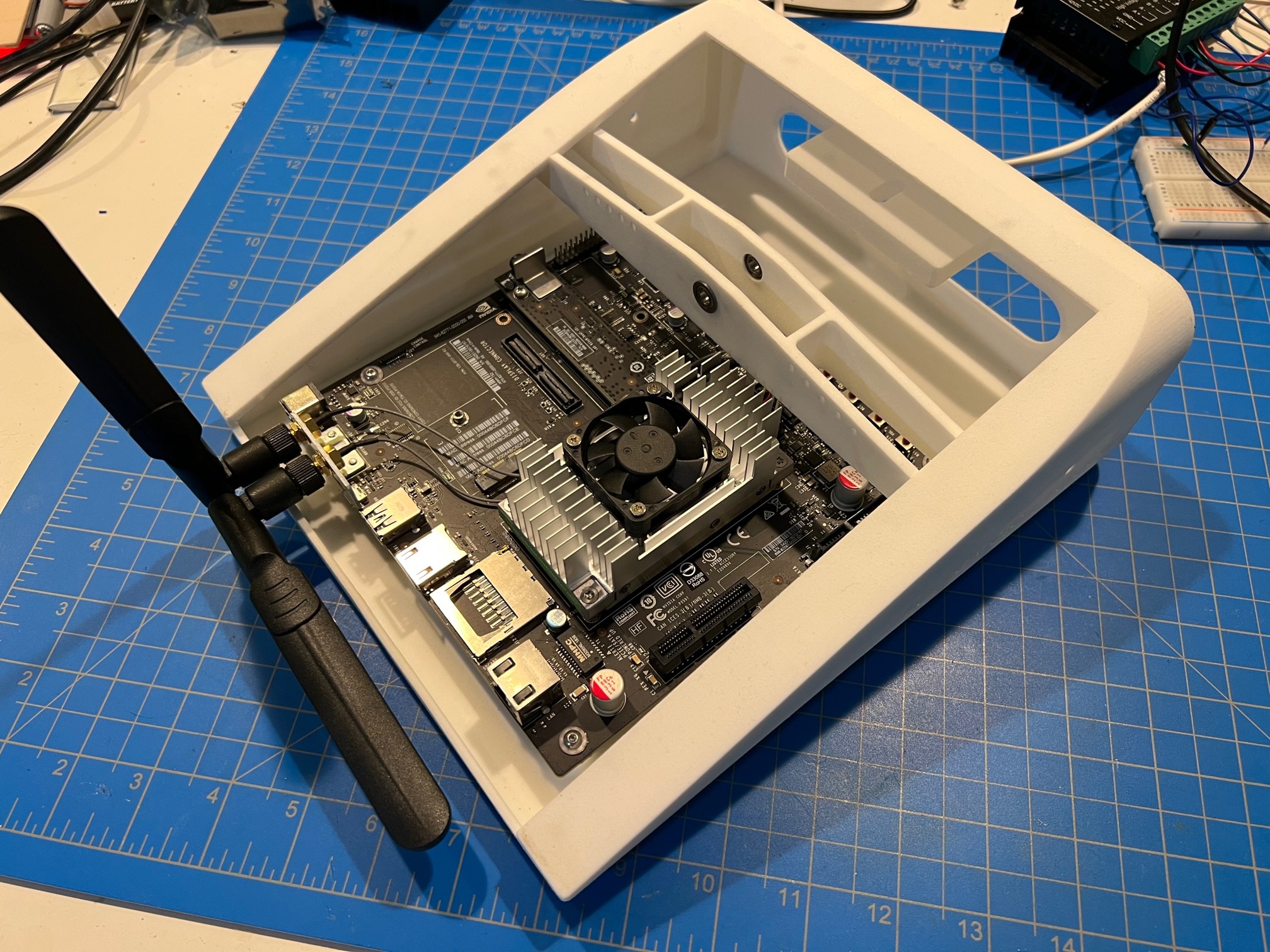

- I mounted the Jetson (main computer) in the head (I installed metal Helicoil inserts so it mounts with metal machine screws)

- Went to a local hackerspace and got the wheel hubs turned down on a lathe. This removed weight and let them sit closer to the drive unit. I think they made the version/size I needed, so this was making up for either a purchasing mistake on my part or that size being out of stock.

- Finished 1 drive unit prototype, with 2 others on the way (once the design is verified, the parts will be made out of metal)

- Ordered and received a medical-grade vacuum pump for the soft actuators (neck actuators). I will need to do more work to build out the soft actuator control circuitry.

- Got the ZED stereo vision system working with the Jetson and created a fresh calibration file for it.
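For the soft-actuator control circuitry still to be built, one common starting point is on/off pump control with a hysteresis band, so the pump does not rapidly cycle around a single setpoint. This sketch assumes a vacuum sensor reporting kPa below ambient; the setpoint, band, and sensor are hypothetical, and valve control for the individual actuators is not covered.

```python
class VacuumHysteresis:
    """On/off vacuum pump control with a hysteresis band.

    Vacuum is expressed as kPa below ambient (larger = stronger vacuum).
    Setpoint and band values are hypothetical.
    """

    def __init__(self, target_kpa, band_kpa=2.0):
        self.target = target_kpa
        self.band = band_kpa
        self.pump_on = False

    def update(self, vacuum_kpa):
        """Feed one sensor reading; return whether the pump should run."""
        if vacuum_kpa < self.target - self.band:
            self.pump_on = True     # vacuum too weak: start pumping
        elif vacuum_kpa > self.target + self.band:
            self.pump_on = False    # overshoot past the band: stop
        # inside the band: keep the previous state to avoid chattering
        return self.pump_on
```

The band width trades holding accuracy against how often the pump switches.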

Overall, the project is now in full swing. I'm currently racing to finish the prototype base that will contain the three drive units, batteries, and stepper controllers (a rolling chassis, so to speak). The 6-axis arm is still being produced, though I hope to have it in hand soon. I need to purchase a DC-DC converter for it so that I can supply it with clean power at the correct voltage and current.

Finishing the base will allow me to start programming the low-level controller to handle all three drive units and to make a better plan for mounting certain components. I'm currently looking for a good source of lithium iron phosphate (LiFePO4) batteries, as I have used them in my robots in the past and they have held up very well (plus there is less risk of fire, bonus!).
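A quick sizing check for a LiFePO4 pack, using typical per-cell figures of 3.2 V nominal and 3.65 V at full charge (general LiFePO4 values, not a specific cell's datasheet): matching the arm's 24 V supply takes 8 cells in series, and the pack voltage varies with state of charge up to about 29.2 V, which is one more reason to feed the arm through a regulated DC-DC converter.

```python
import math

# Typical LiFePO4 cell voltages (general values; check the actual cell datasheet)
CELL_NOMINAL_V = 3.2
CELL_FULL_V = 3.65


def series_cells_for(target_v, cell_nominal_v=CELL_NOMINAL_V):
    """Smallest number of series cells whose nominal voltage reaches target_v."""
    return math.ceil(target_v / cell_nominal_v)


def pack_voltages(cells):
    """Return (nominal, fully charged) pack voltage for `cells` in series."""
    return cells * CELL_NOMINAL_V, cells * CELL_FULL_V
```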

Here are some in progress build photos of different "modules" coming together.


-

Motor Drive Units

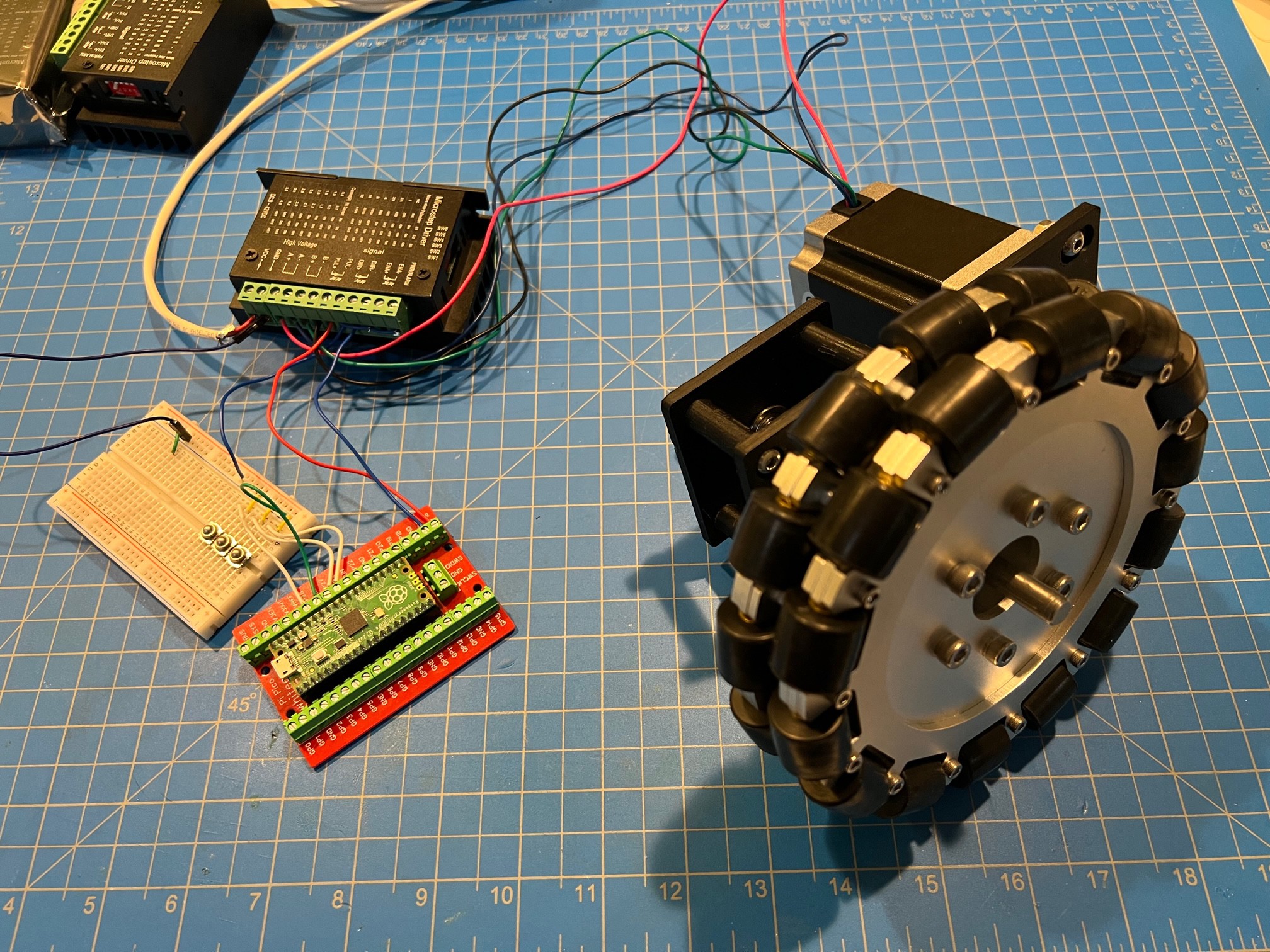

01/22/2022 at 17:10

I completed some work on the design and a prototype of a critical piece needed for the robot. I was able to get the stepper motor running and test the design. Currently these are built from 3D-printed ABS parts, though once the design is nailed down I will get them machined out of metal. The belt is a hacked-together one at the moment; the correct ones are on order. The driver is controlled by a Raspberry Pi Pico running a C++ test program. Credit for the test code goes to KushagraK7; here is a link to their page ---> https://www.instructables.com/member/KushagraK7/
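When tuning a stepper test program like this, it helps to know the step pulse rate a given motor speed requires. This sketch assumes 1.8 degree motors (200 full steps per revolution) and 16x microstepping, neither of which is stated above, and ignores the belt reduction; the same arithmetic applies in the Pico's C++ code.

```python
def step_frequency_hz(rpm, full_steps_per_rev=200, microsteps=16):
    """Step pulses per second needed to turn the motor shaft at `rpm`.

    200 full steps/rev (1.8 degree motor) and 16x microstepping are assumptions.
    """
    return rpm * full_steps_per_rev * microsteps / 60.0


def step_interval_us(rpm, full_steps_per_rev=200, microsteps=16):
    """Time between step pulses in microseconds at `rpm`."""
    return 1e6 / step_frequency_hz(rpm, full_steps_per_rev, microsteps)
```

For example, 60 RPM at 16x microstepping needs 3200 pulses per second, one every 312.5 microseconds, which shows how quickly microstepping raises the pulse rate the Pico must generate.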

-

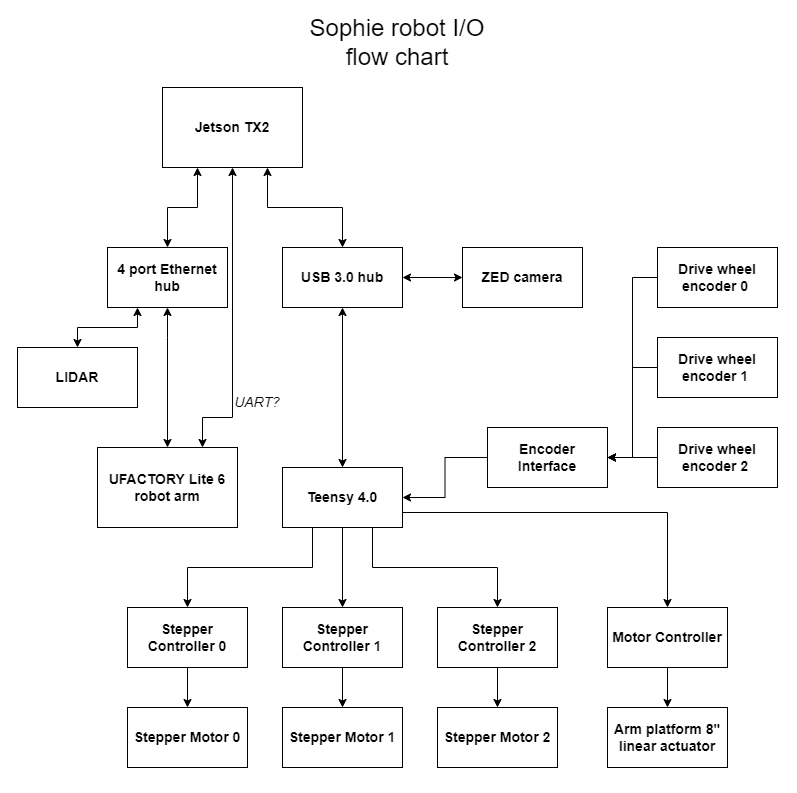

Flow Charts are fun!

11/22/2021 at 23:41

My goal for today was to at least create the I/O flow chart for the robot. As this is a prototype, please remember that it is experimental; use at your own risk. That said, I have built a Jetson-powered robot before, and I'm a bit more comfortable with the architecture.

A power flow chart will also need to be created since, as with any robot, things start to get complicated. Having the charts makes it easier to build and troubleshoot the robot later on.

As for progress, I have also printed some test pieces for the main drive units' bearings and will post a bit about the drive units in the next project update.


-

Beginnings

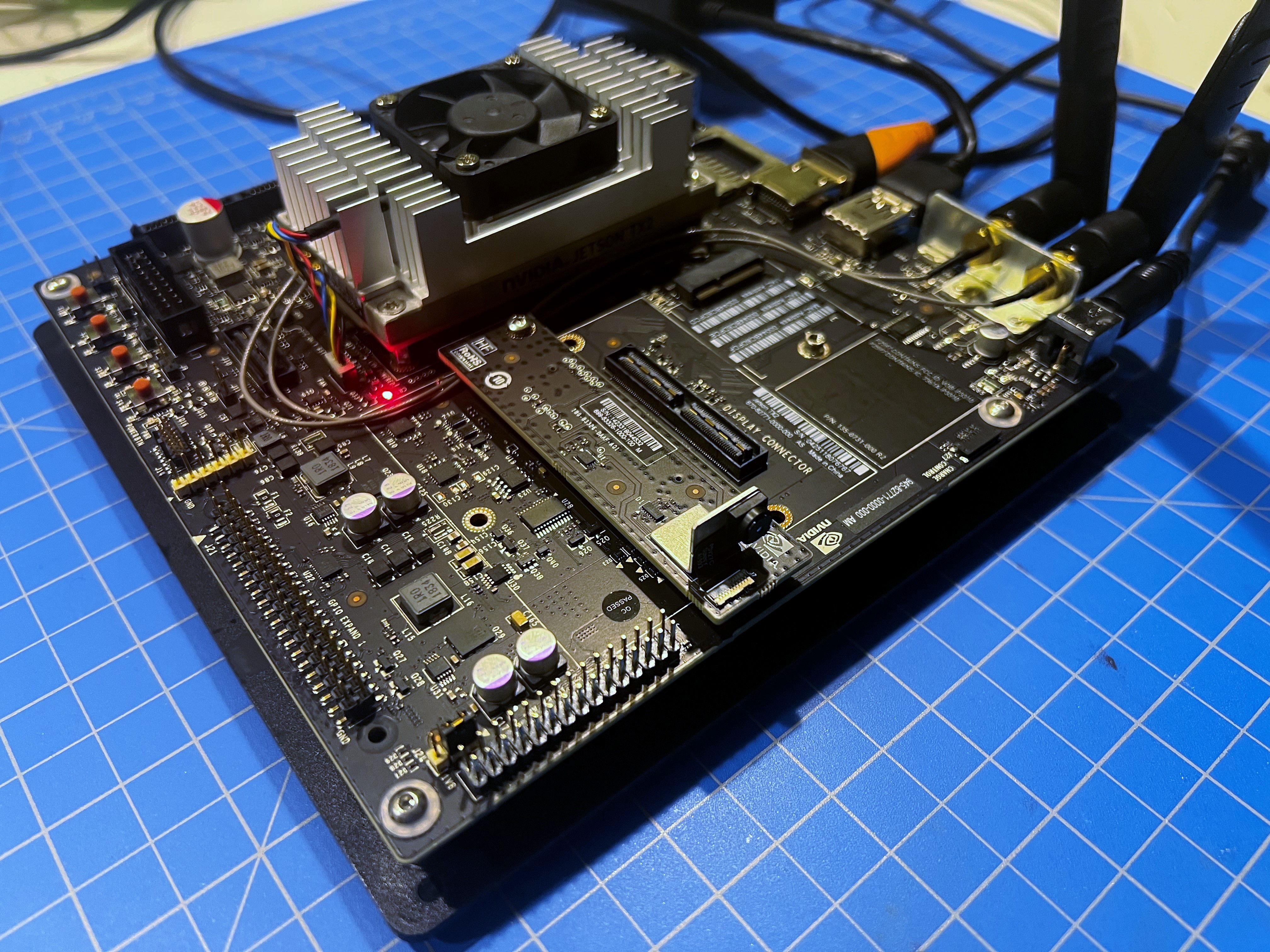

11/20/2021 at 20:49

So far I have purchased the Jetson TX2 and a collaborative robot arm from UFACTORY (https://www.kickstarter.com/projects/ufactory/ufactory-lite-6-most-affordable-collaborative-robot-arm). This arm was the perfect choice for its price and weight capacity (1 kg). It also features a built-in controller and runs on 24 volts.

I have run benchmarks on the Jetson TX2, and I'm attempting to pipe the onboard camera into an OpenCV program. I also have an older version 1 ZED camera for the main stereo vision.
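On the Jetson, the onboard CSI camera is usually opened in OpenCV through a GStreamer pipeline rather than a device index. This sketch assumes a JetPack release that provides the `nvarguscamerasrc` element (older L4T releases used `nvcamerasrc` instead) and an OpenCV build with GStreamer support; the resolution and frame-rate defaults are assumptions.

```python
def jetson_csi_pipeline(width=1280, height=720, fps=30, flip_method=0):
    """Build a GStreamer pipeline string that delivers BGR frames to OpenCV.

    nvarguscamerasrc captures from the CSI camera into NVMM memory, nvvidconv
    converts (and optionally flips) the frames, and videoconvert produces the
    BGR layout OpenCV expects before appsink hands frames to VideoCapture.
    """
    return (
        "nvarguscamerasrc ! "
        f"video/x-raw(memory:NVMM), width={width}, height={height}, "
        f"format=NV12, framerate={fps}/1 ! "
        f"nvvidconv flip-method={flip_method} ! "
        "video/x-raw, format=BGRx ! "
        "videoconvert ! video/x-raw, format=BGR ! appsink"
    )
```

The string is then passed to OpenCV as `cap = cv2.VideoCapture(jetson_csi_pipeline(), cv2.CAP_GSTREAMER)`, after which `ok, frame = cap.read()` works as with any other camera.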

Home Robot Named Sophie

A next-generation home robot with an omnidirectional drive unit and a 6-axis collaborative robot arm

Apollo Timbers
