-

90's LED Matrix -- Weird Decisions

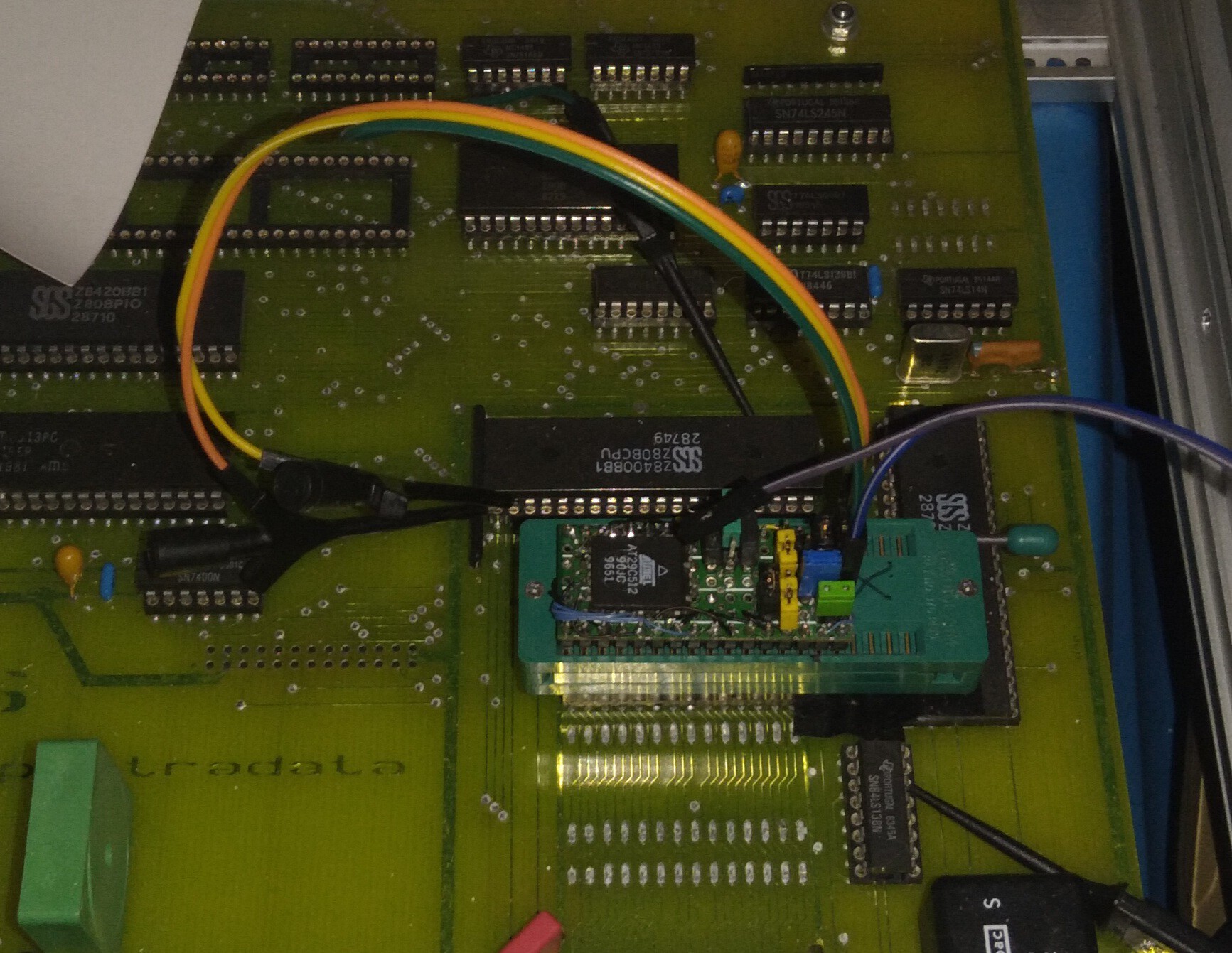

08/30/2023 at 12:51

I've acquired and am rebraining/repurposing a red/green LED matrix sign, made in the early-to-mid 1990s with a densely-peripheralled Z80 processor board (which, later, might be fun to repurpose too? Sorry @ziggurat29, I haven't gotten you those ROMs yet!)

The matrix boards are pretty simple: 74HC164 serial-in, parallel-out shift registers drive the columns via NPN Darlington arrays. A couple of simple 3-to-8 demultiplexers choose the row and drive some big ol' discrete PNPs. Easy to interface.

Each board is a matrix of 45x16 bicolor LEDs, and as I understand it, the sign had four of these boards plus one 45x16 red-only board at the end. So we're talking some 400+ columns that need to be shifted in before a row gets enabled. Their software design (which I didn't test before designing mine near-identically; I guess the hardware is a huge determining factor) drives it at 60Hz. It loads all the pixels at about a 4MHz shift clock, then stops shifting and enables a row, briefly. Each row, then, is lit for about 0.7ms, while shifting takes about 0.1ms.
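A quick sanity check of those figures, as a minimal C sketch. The geometry (four 45-column bicolor boards at two bits per column, plus one 45-column red-only board, 16 rows) and the helper names are assumptions taken from the description above, not the original firmware. It works out to 405 column bits, a row slot of about 1.04ms at 60Hz, and about 0.1ms to shift a row at 4MHz, so the ~0.7ms on-time sits under the ~0.94ms that shifting leaves over.

```c
#include <stdint.h>

/* Assumed sign geometry, taken from the description above. */
#define BICOLOR_BOARDS 4u
#define RED_BOARDS     1u
#define BOARD_COLS     45u
#define ROWS           16u

/* Every bicolor column needs two bits (red + green); red-only columns need one. */
static uint32_t column_bits(void) {
    return BICOLOR_BOARDS * BOARD_COLS * 2u + RED_BOARDS * BOARD_COLS;
}

/* Time available to each row, in nanoseconds, at a given frame rate. */
static uint32_t row_slot_ns(uint32_t refresh_hz, uint32_t rows) {
    return 1000000000u / (refresh_hz * rows);
}

/* Time needed to clock `bits` through the shift-register chain at `clock_hz`. */
static uint32_t shift_ns(uint32_t bits, uint32_t clock_hz) {
    return (uint32_t)((uint64_t)bits * 1000000000u / clock_hz);
}
```

For example, `row_slot_ns(60, ROWS)` gives 1041666ns and `shift_ns(column_bits(), 4000000)` gives 101250ns.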

Note that shifting that much data that quickly is no easy task for a Z80, so the CPU board looks like it has dedicated shift registers (and associated clock timers) as well as RAM-addressing circuitry. Really, quite a lot of circuitry.

Interestingly, they used one 74HC4094 in place of the last '164 on each board, apparently for the sole purpose of resynchronizing the clock and data signals over such long distances(?).

An interesting feature of the 4094 is that it has a second set of latches on the shift-register outputs, so if they'd used 4094s instead of all the 164s, they could've shifted in data *while* displaying the previous row.

I found this intriguing enough to consider desoldering the 164s and replacing them all with 4094s... and, to be honest, right now I can't exactly recall *why*, because I came across a much bigger discovery while running through this mental exercise...

(Oh, I think it had something to do with the annoying flicker at 60Hz... I thought maybe if I latched while shifting, then bumped it up to 120Hz, maybe 240, by decreasing each row's on-time, the 4094s' built-in latches would keep it from dimming dramatically, since the fixed load time, when the LEDs are off, would no longer eat a growing share of each shorter row slot.)
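To put rough numbers on that, here's a small sketch of the lit fraction of each row slot. The helper is hypothetical and idealized (it ignores strobe and row-switching overhead). With '164s the LEDs must be dark while shifting; with the 4094's output latches, shifting overlaps the previous row's display. Using a ~101us shift time for the whole sign, the unlatched duty falls from about 90% at 60Hz to about 80% at 120Hz and about 61% at 240Hz.

```c
#include <stdint.h>

/* Percentage of each row slot during which the LEDs can be lit.
   latched = 0: '164-style, columns must be blanked while shifting.
   latched = 1: 4094-style, the next row shifts in behind the output latches,
                which only works if loading fits within one slot. */
static unsigned duty_percent(uint32_t refresh_hz, uint32_t rows,
                             uint32_t shift_ns, int latched) {
    uint32_t slot_ns = 1000000000u / (refresh_hz * rows);
    if (latched)
        return shift_ns <= slot_ns ? 100u : 0u;
    if (shift_ns >= slot_ns)
        return 0u;
    return (unsigned)((uint64_t)(slot_ns - shift_ns) * 100u / slot_ns);
}
```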

But I discovered something far more dramatic, in terms of improvement, than just that...

I'd first looked into investing in 74HC4094s to handle the 4MHz clocking... They're not too bad, maybe 30 bucks to replace all the 164s. Would be worth it to get rid of the flicker!

So, keeping on with the redesign mental exercise, I pondered *how* to plug these new 16-pin chips into the densely-packed 14-pin spaces... Turns out the pinouts are *very* similar, requiring only two bodge wires, which also happen to carry the same signals, from the same sources, on all the chips (Latch-Strobe and Shift-Clock), so they'd be very easy to bodge half-deadbug-style by just bending up two pins. OE is active-high and lands in the 164's V+ position, so soldering the 4094's pin 15 to pin 16 takes care of the "overhang" where there are no PCB pads for the larger chip... Also, it turns out the 164's plastic cases are actually the same length as the 4094's, despite the two extra pins, so there's enough space between the chips for the extra pins! It's coming together quite smoothly.

...

But we still haven't gotten to the kicker...

I'd also thought it might be nice to add an extra data bit per color, making two bits each for red and green, in my design's framebuffer... Doing so at 60Hz would just make the flicker worse for the 33% and 66% shades, but a higher refresh rate would probably keep it pretty smooth.
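One way to get those 33% and 66% shades, sketched below under assumptions (hypothetical names; 2 bits per color channel, 3 sub-frames per full frame, columns shifted out MSB first): a pixel at level L (0 to 3) is lit in the first L of every three sub-frames. That's also why the flicker gets worse: at 60 sub-frames per second, a level-1 pixel only blinks at 20Hz. Bit-angle modulation (binary-weighted on-times) is a common alternative that needs only two sub-frames, at the cost of unequal sub-frame lengths.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Is a pixel with 2-bit brightness `level` (0..3) lit during sub-frame `sub` (0..2)?
   Level 0 = off, 1 = lit 1/3 of the time, 2 = 2/3, 3 = always on. */
static int pixel_lit(uint8_t level, uint8_t sub) {
    return sub < level;
}

/* Pack one color channel of one row into shift-register bytes for sub-frame `sub`,
   MSB first (column 0 goes out first). `out` must hold (n + 7) / 8 bytes. */
static void pack_subframe(const uint8_t *levels, size_t n, uint8_t sub, uint8_t *out) {
    memset(out, 0, (n + 7) / 8);
    for (size_t col = 0; col < n; col++)
        if (pixel_lit(levels[col], sub))
            out[col / 8] |= (uint8_t)(0x80u >> (col % 8));
}
```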

But we have another problem... with a 16MHz AVR and 4MHz SPI, we've basically got zero time to process anything other than loading the next SPI data byte while the previous one is shifted out (especially since I bumped it up to 8MHz, despite being a bit uncomfortable with such speeds over several feet of unshielded wire). So, currently, while it's shifting in a row's worth of pixels, the AVR basically can't do /anything/ else... and that stretch is far longer than it takes two bytes to arrive from a PC via the UART (about all the UART's receive buffer can hold), which means allowing interrupts *during* row-shifting is necessary, even though doing so would make the flicker even worse (and, now, inconsistent, which might be a problem for folks with epilepsy?). But /where/ such delays are allowed is also rather important, because they *really* went out of spec with overdriving these LEDs... I can't recall exactly, but I think it was something like an absolute max of 90mA at some tiny-percentage duty cycle, and they're doing 140mA at 1/16th. So I don't want a slew of UART interrupts extending that any further! These are just tiny little LEDs, after all!

So, then, if I allowed interrupts when loading row data, the row would dim and/or the timing would shift... Not ideal. But if I don't, then UART data would be lost. Not an option.

So another aspect of using the 4094s' latches would be that I could just set some arbitrary amount of time to display each row, via a timer peripheral, and then the actual row-loading could be interrupted at any time, as long as the loading process is much shorter than the display time.
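The shape of that scheme, sketched as a host-side simulation. Everything here is hypothetical: the names, the 12-bytes-per-row size for one 90-column panel, and the "hardware" functions, which just record what a real SPI data register and the 4094 STROBE line would see. The row timer makes the already-loaded row visible and starts loading the next one; the SPI-complete handler feeds bytes one at a time and can be delayed by other interrupts without disturbing the lit row, as long as loading finishes before the next timer tick.

```c
#include <stdint.h>
#include <string.h>

#define ROWS          16
#define BYTES_PER_ROW 12   /* assumed: one 90-column panel, padded to 96 bits */

static uint8_t framebuf[ROWS][BYTES_PER_ROW];

/* Simulated hardware state. */
static uint8_t shift_chain[BYTES_PER_ROW];  /* bits sitting in the 4094 shift stages */
static uint8_t latched[BYTES_PER_ROW];      /* what the 4094 outputs are driving */
static int     chain_count;                 /* bytes shifted in since the load began */
static int     displayed_row = -1;          /* row currently enabled via the demux */

static int loading_row;  /* row whose bytes are being shifted in */
static int next_byte;    /* index of the next byte to send */

/* Hypothetical stand-ins for writing the SPI data register and pulsing STROBE. */
static void hw_spi_write(uint8_t b) { shift_chain[chain_count++] = b; }
static void hw_strobe_and_enable_row(int row) {
    memcpy(latched, shift_chain, sizeof latched);  /* outputs change only here */
    displayed_row = row;
}

/* Start shifting a row: send its first byte; the rest follow from spi_done_isr(). */
static void begin_row_load(int row) {
    loading_row = row;
    chain_count = 0;  /* simulation only: a full row of new bytes displaces the old ones */
    next_byte = 1;
    hw_spi_write(framebuf[row][0]);
}

/* SPI "transfer complete" interrupt: queue the next byte, if any. */
static void spi_done_isr(void) {
    if (next_byte < BYTES_PER_ROW)
        hw_spi_write(framebuf[loading_row][next_byte++]);
}

/* Row timer interrupt: latch the fully loaded row, light it, start the next. */
static void row_timer_isr(void) {
    hw_strobe_and_enable_row(loading_row);
    begin_row_load((loading_row + 1) % ROWS);
}
```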

Great!

No, I mean, it gets even better...

I planned to use 74HC4094s, which are rated for 20MHz... but then I looked in my stock to see if I could experiment before (or without) placing an order... and... a whole board's worth of CD4094s... which are only rated to 1.25MHz (4000-series is SLOW!)...

But then it occurred to me to do the math... and it turns out that the 4094's latch means I could do 200Hz /no problem/, even at 1MHz SPI. In fact, if I only do one panel (90 columns) per AVR, I can do nearly 200Hz with an SPI divisor of 64?! That means each bit takes 64 CPU clocks, so each byte is 512 CPU clocks, meaning I can use an SPI interrupt to load the next byte, no problem, and still improve the refresh rate dramatically, use regular interrupts [UART, etc.] relatively freely, *and* even process main loops *while* loading row data! All this by LOWERING the SPI row-upload rate and using *slower* components, which were surely available cheaper than those used when this was designed. And, I mean... AVRs are significantly faster than Z80s, and have significantly more built-in peripherals than this CPU board has custom-designed on it... But surely, if they'd used 4094s instead of '164s, they could've cut out quite a bit of the precision timing and custom circuitry needed on the CPU board... And reduced flickering, and increased the duty cycle (thus reducing how far they exceed abs-max for the same brightness)... Heh. And they even *did* use the chip that would've enabled all this, just in a weird way that didn't take advantage of its best feature. Heh. Weird.
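The arithmetic behind that, as a hedged sketch with hypothetical helper names. It assumes a 16MHz F_CPU, 16 rows, one 90-column panel padded to 12 bytes, and that each row must finish loading within one row slot, ignoring ISR overhead. It confirms 512 CPU clocks per byte at /64, versus 32 at /4 (the 4MHz case) and 16 at /2. Shifting 96 bits at 250kHz takes 384us, which caps the refresh at about 162Hz under these assumptions; without the padding (90 bits, 360us) the cap is about 173Hz.

```c
#include <stdint.h>

/* CPU clocks spent per SPI byte when SCK = F_CPU / divisor. */
static uint32_t cycles_per_spi_byte(uint32_t divisor) {
    return divisor * 8u;
}

/* Nanoseconds to shift `bits` out at SCK = F_CPU / divisor. */
static uint32_t row_load_ns(uint32_t f_cpu_hz, uint32_t divisor, uint32_t bits) {
    return (uint32_t)((uint64_t)bits * divisor * 1000000000u / f_cpu_hz);
}

/* Highest whole-number refresh rate at which each row can be loaded
   within a single row slot. */
static uint32_t max_refresh_hz(uint32_t load_ns, uint32_t rows) {
    return (uint32_t)(1000000000ull / ((uint64_t)load_ns * rows));
}
```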

Sometimes, I guess, slower is faster? (Oh, I just saw them say this on NASA's livestream of the launch. Hah!)

I think I'll put in sockets and leave the bodgery to deadbugged, bent-up pins; then the 164s can be reinserted, for posterity.

-

"The Linker does just that!"

09/04/2022 at 17:43

Back in the early 2000s I had a project which went quite smoothly until, suddenly, one part of it eluded the heck out of me for months, and that bit still haunts me to this day.

Prior to that project, I'd been doing stuff with AVRs for years and fancied myself pretty good at it. This new project was just a minor extension from there, right? An ARM7; the only difference, really, was the external FLASH/RAM, right? No Big Deal. Right?

So I designed the whole PCB, 4 layers. Two static RAM chips, FLASH, a high-speed DAC and an ADC... even USB via one of those stupid flat chips with no pins. I'd basically done all this with barely the semblance of a prior prototype... The ARM7 evaluation board was used for little more than a quick tutorial, and then for its onboard JTAG dongle for flash programming.

Aside from one stupid oversight (I used tiny vias coupled to the power planes through thermal reliefs, without realizing I needed to dig deeper into the via options to consider the "trace" size used to create the copperless spacing, and the traces' overlapping round ends wound up cutting the connection)... Aside from that (mind you, at the time the cheap PCB fabs offered 4-layer 4in x 4in boards for $60 apiece if you bought three, as I recall, and free shipping was not a thing)...

So, aside from that $200 mistake, the board worked perfectly. I even figured out how to solder that stupid USB chip without a reflow oven.

Even my wacky idea about a strange use of DMA (which I'd never messed with, prior), enabling the system to sample both the DAC and ADC simultaneously at breakneck speeds and precision timing... even that all worked without a hitch. As-planned.

Amazing!

What *didn't* work, then?

The friggin FLASH chip was, of course, slowing the system. I hadn't considered instruction cache (who, coming from AVRs, would?), and, frankly, I don't even recall its mention in any of the devkit's docs, aside from maybe a bullet point in the ARM's datasheet.

As far as I could tell, this thing was running every single instruction from the ROM, just like an AVR would... But, that meant accessing the *external* chip with a multiple-clock-cycle process (load address, strobe read, wait for data, unstrobe read), made worse by the number of wait-states necessary for the slow read-access of the Flash.

So, every single instruction took easily a half-dozen clock cycles, rendering my [was it 66MHz?] ARM *far slower* than the 16MHz AVRs I was used to.

.

Whew, I wasn't planning on this becoming a long story.

Long-story-short, I needed to move my code to RAM, and even after several months near full-time I never did figure it out. And, now, (well, a few weeks ago) I ran into the same problem again with this project.

This time it's not about speed, it's about the "ihexFlasher", which allows me to reprogram the firmware in the [now] Flash-ROM in-system. (Pretty sure I explained it in a previous log). Basically: set a jumper to boot into the ihexFlasher, upload an ihex file via serial, change the jumper back, reset, and you're in the new firmware.
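For reference, each Intel HEX line is `:LLAAAATT` followed by LL data bytes and a checksum byte, all as hex digits; the checksum makes the sum of every byte on the line come out to zero modulo 256. A minimal parser sketch, with hypothetical names, might look like this. It only decodes and verifies one record; acting on the type (00 = data, 01 = end of file) and writing the bytes to flash are left to the caller.

```c
#include <stdint.h>
#include <string.h>

typedef struct {
    uint8_t  len;        /* number of data bytes */
    uint16_t addr;       /* 16-bit load offset */
    uint8_t  type;       /* 00 = data, 01 = end of file, others exist */
    uint8_t  data[255];
} ihex_record;

static int hex_nibble(char c) {
    if (c >= '0' && c <= '9') return c - '0';
    if (c >= 'A' && c <= 'F') return c - 'A' + 10;
    if (c >= 'a' && c <= 'f') return c - 'a' + 10;
    return -1;
}

/* Decode two hex digits at s; returns -1 on a bad digit. */
static int hex_byte(const char *s) {
    int hi = hex_nibble(s[0]), lo = hex_nibble(s[1]);
    return (hi < 0 || lo < 0) ? -1 : (hi << 4) | lo;
}

/* Parse ":LLAAAATT<data>CC". Returns 0 on success, -1 if malformed or the checksum fails. */
static int ihex_parse(const char *line, ihex_record *rec) {
    size_t n = strlen(line);
    if (n < 11 || line[0] != ':') return -1;
    int len = hex_byte(line + 1);
    if (len < 0 || n < 11 + 2 * (size_t)len) return -1;
    uint8_t sum = 0;
    /* Byte order on the line: len, addr hi, addr lo, type, data..., checksum. */
    for (int i = 0; i < len + 5; i++) {
        int b = hex_byte(line + 1 + 2 * i);
        if (b < 0) return -1;
        sum = (uint8_t)(sum + b);
        if (i == 1) rec->addr = (uint16_t)(b << 8);
        else if (i == 2) rec->addr |= (uint16_t)b;
        else if (i == 3) rec->type = (uint8_t)b;
        else if (i >= 4 && i < 4 + len) rec->data[i - 4] = (uint8_t)b;
    }
    rec->len = (uint8_t)len;
    return sum == 0 ? 0 : -1;
}
```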

Problem is, the flash-chip can't be read while it's being written, so where's the Z80 gonna get the instructions from that tell it how to write the new firmware *while* it's writing the new firmware? RAM, of course.

Somehow I need[ed] to burn the flash-writing-function into flash, then boot from flash, then load the flash-writing function into RAM, then run it from there.

Basically the same ordeal I never did figure out with what was probably my most ambitious project ever, and with the most weight riding on it, back in the early 2000's.

...

Well, I figured out A way to hack it, this time, but I still can't believe how much of an ordeal it was.

Back Then the internet was nothing like it is today, the likes of StackExchange were barely existent, and certainly not as informative, nor the answers as-scrutinized. Forums were the place to go... And the resounding sentiment from folk was that I needed to learn/use "The Linker."

So, I tried. For months, nearly fulltime. And I never did figure it out. After all these years, and countless similar projects under my belt, I still don't get it.

...

But I found some ideas on one of those Stack pages, this time, and worked-out a hack that works for this one function on this one system...

The key, in this case, was to just forget the linker and TryAndSee whether this particular combination of C compiler [version!], optimization settings, and machine architecture worked as I needed. Oh, and a bit of the Assembly-reading ability I've picked up since Back in the day (much thanks to @ziggurat29). And, thankfully, with this particular setup, it seems it worked like I needed, with only a couple hiccups.

The basic gist is to write a simple function, in C, that copies byte-by-byte starting from the function pointer (in ROM) to a uint8_t array in RAM, then cast the array pointer to a function pointer, and call that.

Simple-enough, yeah?

Many hurdles:

First: NO, according to The Stacks, this is not at all portable, and not at all within the standards. TryAndSee in *every* situation, even if all you did was change optimization settings, or upgrade your C compiler to the next version. "Functions are not objects."

Second: Apparently there's no way to tell my C compiler to Not use absolute jumps. So, even though I got it running, initially, from RAM, as soon as it entered a loop, it jumped not to the top of the loop, but to the top of the loop *in ROM*.

There were a few other gotchas that required hand-editing of the assembly output. Which is fine for this.
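The copy-then-cast idea, as a minimal sketch with hypothetical names. `len` is an assumption you'd have to get from the map file or a marker symbol, since C has no way to ask a function's size. Converting between function and object pointers is not standard C but is a common compiler extension, and the copy is only safe to *call* if its machine code contains no absolute jumps or calls back into ROM, which is exactly the second hurdle above.

```c
#include <stddef.h>
#include <stdint.h>

typedef void (*code_fn)(void);

/* Copy `len` bytes of machine code starting at `fn` into `ram`, byte by byte,
   then hand back `ram` reinterpreted as a function pointer.
   Implementation-defined at best: function<->object pointer conversion is an
   extension, and the result only runs correctly if the code is position-independent. */
static code_fn copy_function_to_ram(code_fn fn, uint8_t *ram, size_t len) {
    const uint8_t *src = (const uint8_t *)(uintptr_t)fn;
    for (size_t i = 0; i < len; i++)
        ram[i] = src[i];
    return (code_fn)(uintptr_t)ram;
}

/* Stand-in for the real flash-writing routine (hypothetical). */
static void flash_write_routine(void) { }
```

On the Z80 board the returned pointer would then be called; on a desktop OS, data memory is usually non-executable, so only the copy itself is safe to try there.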

...

But, it really gets me wondering how this can possibly not be a well-established standardized thing. I mean, when you run a program off a hard disk, it gets copied to and run from RAM... And the same was true for all those programs run from cassette tapes... Is it darn-near *always* the job of the OS, even in embedded systems?

Linux wasn't yet ported to ARM, as I recall, when I worked on that devkit... So, then, how would folks even use that thing if they were expected to hand-code an OS to those extents? And how would they even ensure their code *could* be run from RAM (i.e. no absolute jumps)?

SURELY, there must be some "normal" way of doing this, but here still, some twenty years later, I'm not finding anything other than "code it in assembly," or TryAndSees like mine, or the magickal handwavy "use The Linker!"

So, herein, as far as I understand The Linker, all it does is *link*... So even if I was smart enough to understand its syntax, it still would only result in my telling the linker to put the function at that [RAM] address in the hex-file. And when I burn that hexfile to flash, the portions that are addressed in RAM will, well, be outside the flash chip. [If I use my ihexFlasher it might get written to the RAM, but of course get lost after reboot. Though this gives me an interesting idea about loading test code without flashing... hmmm] But, The Linker, surely, isn't inserting code into my file, before my code, to do the actual copying of the code from ROM, to RAM. How could it? My boot code is in handwritten assembly, it's got .orgs, as necessary, for things like interrupt vectors, a jump at 0x0000 around those to the actual boot code, and so-forth. So, even if I informed "The Linker" about all those, in its crazy-ass syntax, it'd somehow have to not only modify those to inject a function-call to copy my function to RAM, but then it would also have to add code to actually do the copying. That code's gotta come from *somewhere*... So, now, again, intuitively, it doesn't seem like the sort of thing "The *linker*" would do (generating code).

So, now, twenty years later I have a vague idea that code might be something well-standardized, in a library, of sorts, not unlike printf, or stdio... (Oooh, maybe I should dig out my K&R). Maybe it's *so* standardized that we usually just pretend it doesn't exist... like... how the appropriate integer-multiplication *function* is *called* when we type the '*' character.

Similarly: I've a *vague* understanding, now, especially after having seen the assembly output so many times, that initialized global variables (e.g. int globalA = 5) require some external routine, called *before* main(), to actually load those RAM locations from a table of values stored in ROM (and zero-initialized ones typically get cleared by a similar loop). Similar idea. But *Something* has to actually *do* that copying... Something, code I didn't write, has to inject itself into my code *before* my code. And so, I take it, the linker merely tells everything where to *find* my RAM function (e.g. in calls), and maybe even adds that function to a list of bytes that need to be copied to RAM locations... But, still, I *don't* gather that the linker actually *does* the copying. Something else, some library most folks seem to barely even acknowledge exists, must do that.

OK, I'll buy it. Though, it's a bit unnerving to think about... it means all *my* initializations, in main(), before the while(1) loop come *long* after boot. Things like initializing Chip-Select pins that keep two devices from trying to write the bus at the same time. Or the watchdog timer, etc. Sure, the former can be handled by pull resistors, and should be, anyhow, during resets... But I've little doubt there's plenty of potential for unexpected consequences when one thinks main() is *the first* thing that runs in an embedded system. Heh.

Anyhow, Somewhere there must be a resource that covers the entire boot process step-by-step, and similarly the build-process... The tools involved, the purpose of each step we ignore in the background, the functions called, and where they're supplied, and what to write and how to name them, if you're working with a system that doesn't already have them... etc.

But as it stands, I've yet to find the proper "M" to "RTF." So, this is the process, thus far, for me... Piecing stuff like this together gradually over 25 years.

I gather, thus far, that a "C-Runtime" must be responsible for e.g. initializing global variables, and eventually, later, calling main().

But, I can't imagine that to be anything but *highly* architecture-specific... It has to know the memory-map, at the very least. Maybe that's where the linker comes in. And *something* has to be responsible for things like the ISR tables, and jumping to the actual ISR functions, and, upon reset, jumping *around* that table to the *actual* boot-code... "The Compiler" fine. But, then, "The Compiler" must also know when to do all that, or when to output code that's compatible with an already-running OS, which, surely, would require an entirely different "C Runtime" (or whatever's responsible).
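That's roughly how it works in common toolchains: the linker only *places* things and exports addresses (GNU ld, for example, lets a section have a RAM run address and a separate ROM load address via `> RAM AT> FLASH`), and a small startup routine, often called crt0 and usually written in assembly, walks those addresses before calling main(). A host-side simulation of that copy-down step, with a hypothetical table format standing in for the linker-provided symbols:

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* One block the linker placed in ROM but addressed in RAM (hypothetical format;
   real startup code usually gets these as linker-script symbols instead). */
typedef struct {
    uint16_t load;  /* offset of the bytes in the ROM image */
    uint16_t run;   /* offset in RAM where the code expects them */
    uint16_t size;
} copy_entry;

/* What crt0 does before main(), in spirit: copy initialized data (and any
   RAM-resident functions) from ROM to RAM, then zero the .bss region. */
static void startup_init(const uint8_t *rom, uint8_t *ram,
                         const copy_entry *table, size_t count,
                         uint16_t bss_start, uint16_t bss_size) {
    for (size_t i = 0; i < count; i++)
        memcpy(ram + table[i].run, rom + table[i].load, table[i].size);
    memset(ram + bss_start, 0, bss_size);
}
```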

So, so far, this is where I'm at. Some weird intermediate state between having been "pretty good at" "low-level" embedded programming, which, apparently, was quite a bit higher-level than I gathered, and, now, having gotten somewhat-familiar with "the lowest level" assembly, ISR tables, jumping from the boot vector to actual boot code... And finding that this intermediate step between those seems to have a comparatively *huge* learning-curve of even higher-level languages and APIs and even more cryptic than raw assembly syntaxes, and things so deep behind the scenes that finding info about them is akin to looking up a word in the dictionary only to find that you have to look up another, only to find that *that* definition uses the word you were looking up in the first place. Hah!

...

I don't think this is where I intended on going with this... but, suffice it to say, for me learning to code in C was easier. Which could make sense, since it's apparently pretty high level (even if you only consider, again, function calls injected in place of things like "*", as opposed to the even higher level of hiding things like the boot process injecting things like global initializations! Almost like a BIOS injected in your hexfile).

Learning to code in assembly, too, was easier... at least, for me... And, I suppose that could make some sense for the opposite reason. There's *very little* hidden there.

But, now, that hidden part, between the two... That's where things get weird. At least, from my perspective. Considerations like whether a C compiler even *can* be told *not* to use absolute jumps in a particular function... And how to do so (#pragmas?)... Or how to tell it to put a function at a specific memory location. Or whether a string can be stored in ROM, or whether it needs to be initialized into RAM, and whether it needs to be accessed differently. Whole new and separate languages for such things that, frankly, are *easy* to do in raw assembly, and surely should've been concerns even way back in the K&R days, maybe even more so then, that seemingly *aren't* standardized from one C compiler to the next(?!), nor from one architecture to the next(?!).

An aside: did you know that two's complement was *not* guaranteed by the C standard (until C23 finally required it)? That even SCHAR_MIN may have different values on different architectures (-127 or -128)? (The exact-width int8_t, where it exists, has always been required to be two's complement, so INT8_MIN is always -128.) And that testing bits, or bit-shifting negative numbers, might give entirely different results on different hardware, or even different compilers for the same hardware?

Hah!
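On the shifting point specifically: in C99, right-shifting a negative signed value is implementation-defined, and left-shifting one is undefined. If you need an arithmetic (sign-extending) right shift that doesn't depend on the compiler, one sketch (hypothetical helper name) is to only ever shift non-negative values:

```c
#include <stdint.h>

/* Arithmetic right shift (rounds toward negative infinity) using only
   well-defined operations. Requires 0 <= n < 32. */
static int32_t asr32(int32_t x, unsigned n) {
    if (x >= 0)
        return x >> n;              /* defined for non-negative values */
    return -1 - ((-1 - x) >> n);    /* -1 - x is non-negative and cannot overflow */
}
```

Note that this differs from division: since C99, `-7 / 2` truncates toward zero to -3, while `asr32(-7, 1)` gives -4.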

Anyhow, again, I think I've gotten *way* off-topic.

Presently (off topic again) my technique of merging the Assembly boot-code with my C code is to basically compile my C code into assembly and remove nearly everything related to the linker, and hand-code, or otherwise not use, everything the C compiler tries to call from whatever it is (C-Runtime?) that handles things like global inits or integer-multiplication functions.

It's not *particularly* hard to do, and is surely easier for me than doing the same in raw assembly. It also has the benefit of portability (i.e., again, the integer-multiplication function), at, of course, the cost of not being hand-optimized...

Except, of course, in the odd case where things apparently *can't* be done in standard C, like inserting a "jp main" at a specific address, which has to be added to the assembly output by hand. Or copying a function to RAM. Or telling the compiler not to use absolute addressed jumps in loops. Or telling the RAM usage to start *after* that used by the assembly boot code.

Again, I've no doubt there *is* a way to do much of this ("The Linker!" "C-Runtime!" "#pragmas!") but my head just can't wrap around all that any time soon.

And, maybe that's OK? I mean, I got my start a couple years before Arduinos came onto the scene... Arduino is even higher-level than avr-gcc... And then, the "retro"/"8-bit" (and even homebrew CPU) trend seems to be stemming from former Arduinites trying to grasp the lower level... I'm betting there are many folks, now, finding themselves similarly "in the middle", trying to piece together the hidden details that seem so easy from their newly-learned assembly perspective, but are far more complicated than one might guess... Plausibly leaving many feeling like Bare-Metal Assembly and C are two separate worlds. Maybe this hurdle can be overcome... Or maybe it's just like so many things in my life: I've fallen into some crack that even most experts aren't aware exists; the one weird mind that can understand both C and Assembly, but can't grasp the linker? I dunno.

...

Sheesh, I think I went way off topic.

I have found *some* resources that at least confirm some of my assumptions...

One specifically states that my C Compiler *does not* have the ability to output relocatable code (can't tell it not to use absolute jumps), which, frankly, is a bit of a surprise.

Another, which I think I linked a few logs back, goes through the entire compilation process, from the compiler to the assembler to the linker, and surely more. It took *years* to stumble on one like that, and yes, I am planning to read it at some point. But it also appears to be somewhat specific to one particular toolchain, which I again find a bit odd, as it seems like this process should be well-established. OTOH, if it *weren't* specific to a toolchain, it might be too high-level for me to try out.

Who knows.

I am, however, still of the mindset, reinforced by much of this latest experience, that there needs to be an intermediate step between Assembly and what most folks think of as "C"...

C itself, or at least its syntax, would do nicely; it just means whittling it down to those things that don't require libraries without our knowingly/explicitly including/calling them (again, "*" comes to mind, and global inits). Oh, and some sort of standard for things like .org.

Assembly, too, would do, if there was a standard syntax across architectures. I've discussed this in other logs, and it's a bit more difficult than the C-stripdown idea, since different architectures have different numbers of registers, etc. But, I think, not impossible.

Neither, of course, would be nearly as optimized as hand-written architecture-specific Assembly... But that's not the point.

"What is the point?"

Heh, frankly, I dunno anymore. It had something to do with promoting good programming techniques through awareness of underlying functionality... Something to do with a core set of universal libraries which *aren't* mere autogenerated binary (or assembly) blobs, which are heavily-documented inside and out, portable to all systems, can be learned-from.

Another idea included the potential for what I'mma call "2D-Programming", wherein... Code itself is 1D: lines of text read left-to-right, line by line, executed pretty much the same... The 2nd dimension, then, being Time (or learning process), wherein, say, a function evolved over time. Its first iteration might've been "brute force." E.g. integer multiplication might've first been implemented simply as a loop adding A to a running total, N times. So, using/viewing the integer-multiplication library would show this to a newb, in all its well-explained glory. Content with that, they use it, sure, why not... But later they're curious about what's next, or need some speed-up, so they can dig into their used libraries to learn more about whichever topic/library interests them, and select version 2.

The second version might be the use of left-shifts... So forth...

It's a reference-manual/text *within* the *usable* code itself. History, links, links to other libraries/versions that inspired those progressions, etc... In order of the way these things progressed. Being that, frankly, most of these things *have* progressed in that order for a reason...
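Those first two "versions" might look something like this (hypothetical names, unsigned 8-bit operands). Version 1 does b additions; version 2 does at most one add and one shift per bit of b, so at most 8 iterations.

```c
#include <stdint.h>

/* Version 1, brute force: add a to a running total, b times. */
static uint16_t mul_v1(uint8_t a, uint8_t b) {
    uint16_t acc = 0;
    while (b--)
        acc += a;
    return acc;
}

/* Version 2, shift-and-add: for each set bit of b, add a shifted
   left by that bit's position. */
static uint16_t mul_v2(uint8_t a, uint8_t b) {
    uint16_t acc = 0;
    uint16_t shifted = a;
    while (b) {
        if (b & 1)
            acc += shifted;
        shifted <<= 1;
        b >>= 1;
    }
    return acc;
}
```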

It doesn't make sense to throw kids into calculus before they know algebra, but I feel that's *very much* an analog for how computing goes these days. And, frankly, I think there are consequences...

Granted, no one could wrap their head around *every* library and all its versions... even those merely at this low-level. But all the information is there, then, for whoever might take on a bugfix or see some obvious improvement along the way...

I dunno, it's probably crazy, never mind the potential for near-infinite branches...

But, this sorta thinking is very much where my brain's been at, in this realm, since I started coding some 25 years ago. Which, frankly, is a bit ridiculous, because I tend to reinvent the wheel rather than learn from others' examples... So why would it make sense a person like that would in any way know how to reach folk of an entirely different learning-style?

Maybe I should go eat breakfast, then it'll all be clear.

-

Character-LCD graphing, more

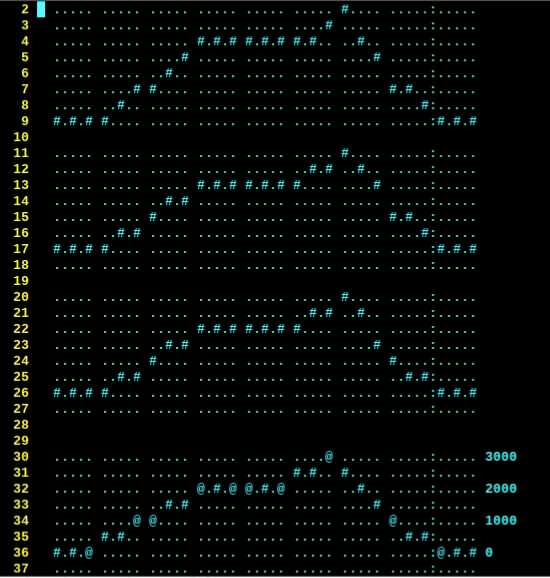

09/03/2022 at 03:52

Trying to graph, say, y=mx+b in so few pixels is turning into more art than science. Heh!

The goal is we have 8 values, which are each connected with a three-pixel line-segment. So, y=mx+b is actually used twice for each segment. First to interpolate the three steps from one point to the next. Then, again, to scale from the input range (0-3000, in my case) to the number of pixel rows (8).

It looks great, but not excellent.


The most notable funkiness is the discontinuity between the sixth and seventh line-segments. (ignore the eighth, it doesn't belong there). I found that this is a result of the weird scaling necessary: 0-3 scaled to 0-7. If I don't use all 8 rows, and instead use 7, it looks far better.

(Yes, BTW, I did take integer math and rounding into account... Surprisingly, that discontinuity isn't rounding/truncating error.)

Another example is the fifth character/line-segment. Sure, it looks nice, but it's not really representative of what's happening.

The horizontal section to the left is at 2000. The line-segment goes from 2000 to 3000. Thus, ideally, the three dots should be at 2250, 2500, and 2750. But, because there are only two rows to fit them in, of course there's loss of resolution. But it gets weirder, because the third row from the top isn't 2000, it's something like 2333 (from memory of a lot of experiments yesterday). So the 2250 step gets lost. And so does the 2500 step, because 500 is closer to 333 than 667, or something. So, instead of looking like a ramp from 2000 to 3000, it looks like it's staying at 2000 until halfway through, then ramps to 3000 (at a far steeper angle than is real).

So, A quick fix was scaling across 7 instead of 8 rows, then 0 is at the bottom row, 1000 is two rows up from there, 2000 is the fifth row, and 3000 is the seventh.

This looks pertydurngood, But, these are fake values... (and, still, it's not without misleading visual artifacts).

(also note the first and second segments, these start at 0, the first goes to 1000, the second goes from 1000 to 2000, so they should look the same, only shifted vertically).
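Both uses of y=mx+b, as a small integer-only sketch. The names are hypothetical, and it assumes an input range of 0 to 3000, three intermediate dots per segment at quarter steps, and round-to-nearest. With 7 rows, the points 0, 1000, 2000 and 3000 land on rows 0, 2, 4 and 6; with 8 rows they land on 0, 2, 5 and 7, so equal input steps come out 2, 3 and 2 rows tall.

```c
/* Round-to-nearest integer division for den > 0, symmetric for negative num. */
static int div_round(int num, int den) {
    return num >= 0 ? (num + den / 2) / den : -((-num + den / 2) / den);
}

/* Use 1 of y = m*x + b: value of intermediate dot `i` of `steps` between a and b. */
static int lerp_point(int a, int b, int i, int steps) {
    return a + div_round((b - a) * i, steps);
}

/* Use 2 of y = m*x + b: map 0..max_value onto pixel rows 0..rows-1 (0 = bottom). */
static int value_to_row(int value, int max_value, int rows) {
    return div_round(value * (rows - 1), max_value);
}
```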

So, this raises the question... How much "fudging" should be done to carry across the right meaning, visually?

Mathematically, the above graph is actually "right"... but obviously it doesn't look right, at all. So, then, it's not really right, is it?

If this were spread across 100 pixels instead of 8, it wouldn't be nearly as visually-wrong, but technically, would still contain such glitches. they'd be more hidden by factors like multiple-pixel thick lines, and maybe antialiasing... Boy howdy them kiddos used to 320x480 on a 4in screen have it so easy.

(Actually, my first homebrew function-grapher was in the late 90's with Visual Basic and 1024x768, so I had no idea the difference a pixel makes, either).

So, I'm debating how to go about this, we're not talking "visually-appealing" here, we're talking visually-representative, maybe, wherein the mathematical approach is actually very misleading.

What a weird thought.

I've drawn out, by hand, on paper, all the possible "straightest line" representations between two values; between one row to another. I think they can work-out. BUT, then there's a bit of visual misleading going-on when switching from one slope to the next (e.g. making some abrupt slope-changes appear smoothed).

So then I tried a by-hand for this specific example and came up with a sort of "algorithm" for choosing a line-segment pattern that is *very* visually-representative, *but* requires that some points be at the last column in one character, while other points may be at the first column of the next.

In this particular system, that would, actually, be less misleading. Even though, in a sense, I'd be stretching and shrinking the "time" (horizontal) axis willy-nilly. And, the two extremes are pretty extreme: In one case, one single character (three pixels) might represent two points, whereas in another extreme, the first point might be at the end of the previous character, and at the beginning of the next (five pixels!)

Heh! What a weird conundrum. /time/, however, is not really what we need to know. Nor is *value*. I think what really needs to be gleaned from this system is whether it's increasing or decreasing (or constant), and a rough idea of the rate in comparison to other points. A cursor sliding left to right will show where we're at presently...


I'm still debating whether to do this the math-way (which is ugly but done) or to try coding-up the handwavy-lookup-table artsy-way and accept its crazy time-stretching.

-

That doesn't look half bad!

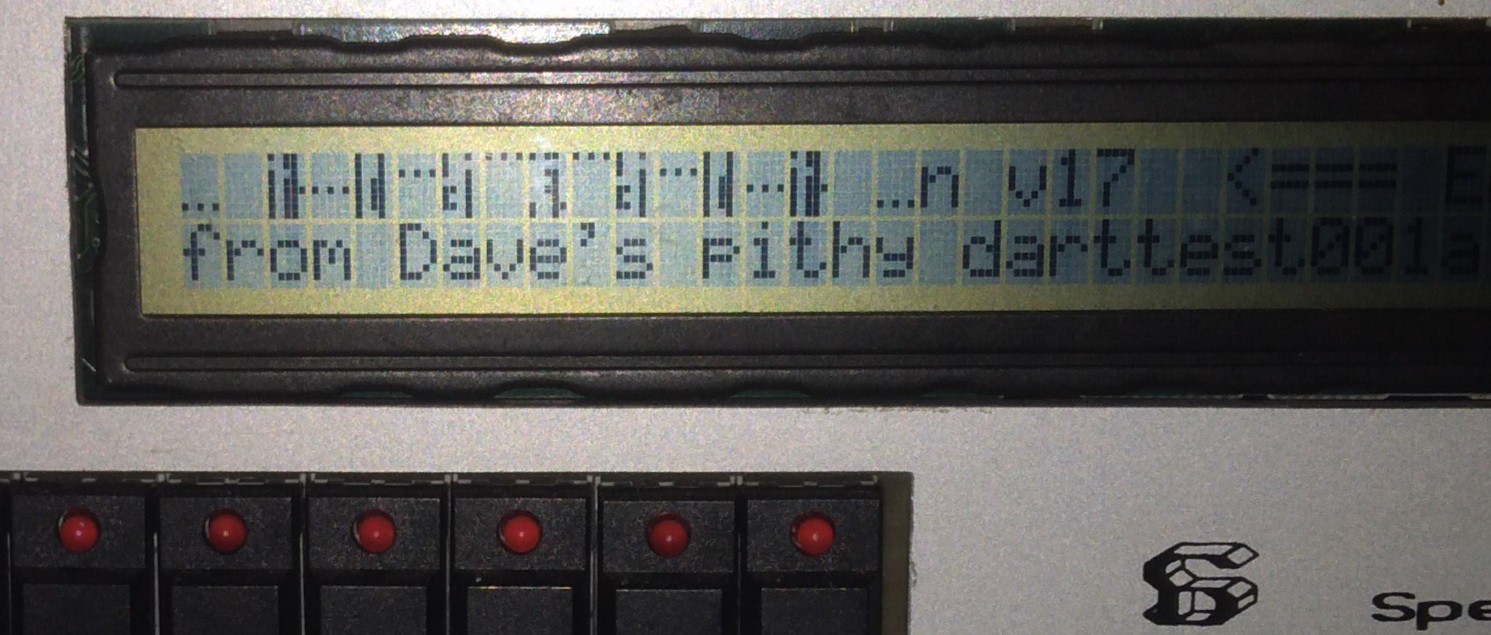

09/01/2022 at 03:33

Using a text LCD to draw a graph...

The first discontinuity is maybe a math bug... The second is just random leftover from old code. In all, this might could work.

Of course, the HD44780 only has 8 custom characters, but A) technically, that's all I need to plot 8 data-points, and B) I've a few ideas to squeeze more outta it if I need to.

For this proof-of-concept, though, I'm quite pleased with how it looks. I was somewhat-concerned it would be too sparse to recognize as a graph, or that it would be hard to see. Not bad at all!
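For anyone trying the same trick, a sketch of building one HD44780 custom character for a plotted segment. Each of the 8 CGRAM bytes is one pixel row, top row first, and only the low 5 bits are used, with bit 4 as the leftmost column. Placing the three dots in columns 0, 2 and 4 (every other pixel) is an assumption here, as are the helper names. To load character n, send the Set CGRAM Address instruction (0x40 | n << 3 in 5x8 mode), then the 8 row bytes as data; the address auto-increments after each write, which is why busy-flag timing matters.

```c
#include <stdint.h>
#include <string.h>

/* Build an 8-byte 5x8 glyph with one dot in each of columns 0, 2 and 4.
   rows_from_bottom[k] is the dot height for column 2*k (0 = bottom, 7 = top). */
static void make_graph_glyph(const uint8_t rows_from_bottom[3], uint8_t glyph[8]) {
    memset(glyph, 0, 8);
    for (int k = 0; k < 3; k++) {
        uint8_t y = rows_from_bottom[k] & 7;
        glyph[7 - y] |= (uint8_t)(0x10 >> (2 * k));  /* col 0 -> 0x10, 2 -> 0x04, 4 -> 0x01 */
    }
}

/* Set CGRAM Address instruction byte for custom character n (0..7). */
static uint8_t cgram_addr_cmd(uint8_t n) {
    return (uint8_t)(0x40 | ((n & 7) << 3));
}
```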

-

Well, that didn't quite go as planned...

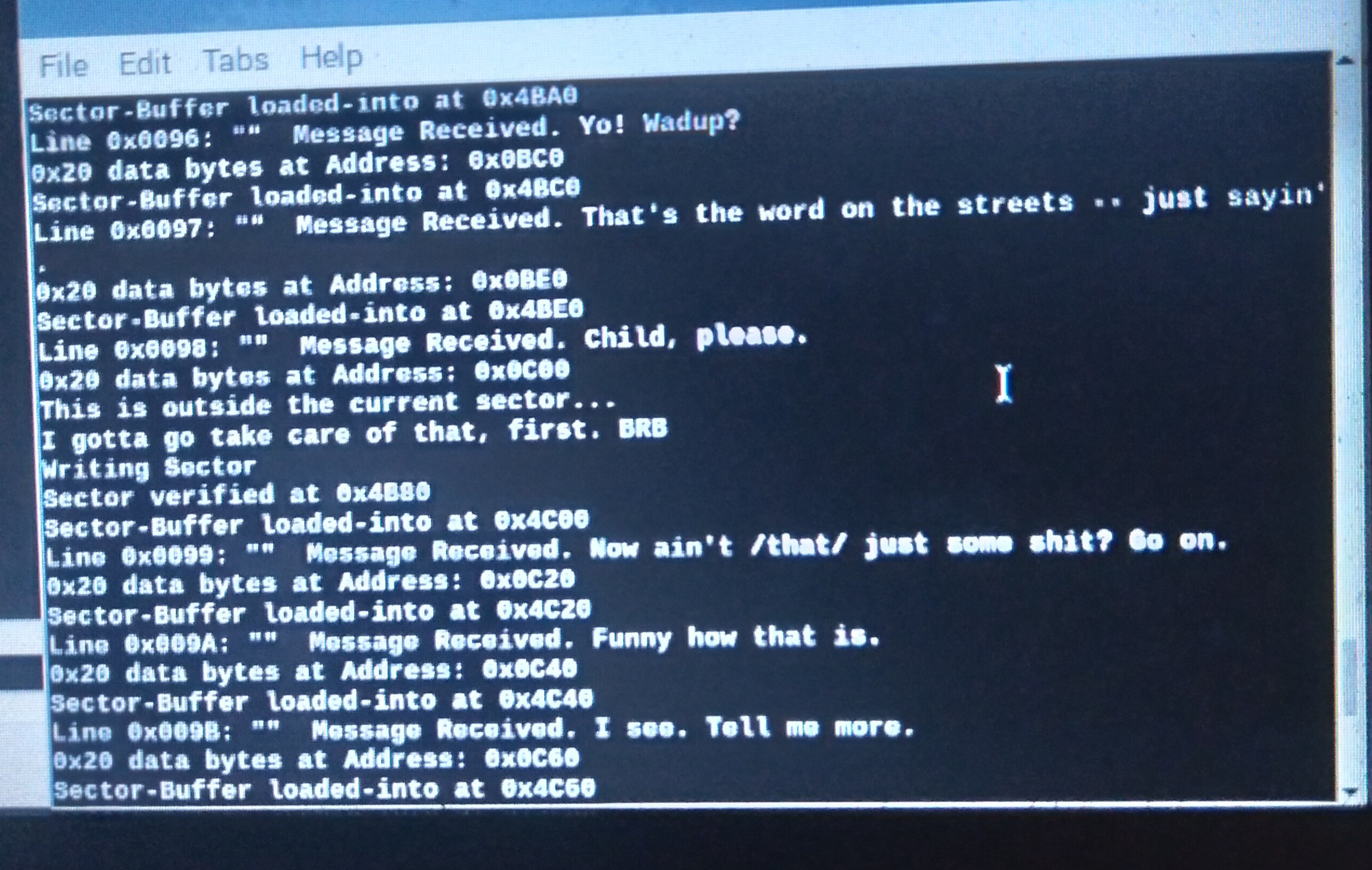

08/31/2022 at 10:49 • 0 comments

Dave's Pithy DART test program is now a bootloader/BIOS of sorts...

With a little work, it loads a main() function compiled in sdcc.

Previously, it was the ihexFlasher, which has had a little improvement and is now being used to test my LCD-Grapher (shown above).

It's supposed to display 8 custom characters: the first is "OK", followed by seven steps up and seven back down.

Since OK is missing, I think somehow the second custom character overwrote the first.

Interestingly, just before I coded this up, I happened upon something about these displays that I don't recall ever seeing before, nor ever having trouble with... Allegedly the BUSY flag actually deactivates after data is written, but 4us *before* the address increment occurs(?!)
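If that's what's going on, one workaround is to keep polling the busy flag as usual but add a short extra delay after it clears, before the next write. A minimal sketch, assuming the standard HD44780 status byte (bit 7 = BF, bits 6..0 = address counter); the hardware hooks `read_status` and `delay_us` are hypothetical callbacks, and the 6us margin is an assumed round-up of the ~4us mentioned above, so check the datasheet against your module's oscillator frequency.

```c
#include <stdint.h>

#define LCD_BUSY_FLAG    0x80u  /* status read: bit 7 = BF, bits 6..0 = address counter */
#define LCD_AC_SETTLE_US 6u     /* assumed margin for the address-counter update */

/* Hypothetical hardware hooks; on the real machine these would be bus accesses. */
typedef uint8_t (*lcd_read_status_fn)(void);
typedef void (*delay_us_fn)(uint16_t us);

/* Spin until BF clears, then wait a little longer so the address counter
 * has (allegedly) finished incrementing before the next access.
 * Returns the last status byte read. */
static uint8_t lcd_wait_ready(lcd_read_status_fn read_status, delay_us_fn delay_us)
{
    uint8_t status;
    do {
        status = read_status();
    } while (status & LCD_BUSY_FLAG);
    delay_us(LCD_AC_SETTLE_US);
    return status;
}
```

Passing the hooks in as function pointers keeps the timing logic testable off-target; on the Z80 they could just as well be direct port reads and a calibrated loop.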

I suppose this could explain it... combined with maybe a few timer-interrupts slowing it down sometimes, and not others...?

Too tired to look into it right now.

Really, I mainly just wanted to see if a graph would look OK if I skipped every other pixel, so it wouldn't have solid lines broken up every five pixels. Which, I think, would look especially bad if, say, the graph needs to be four pixels per step. I think skipping pixels should work. It'd be two pixels per step, then, and no breaks.
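The skip-every-other-pixel idea amounts to a simple mapping from a logical graph column to a character cell and a pixel column within it. A minimal sketch, assuming columns 0, 2 and 4 of each 5-pixel-wide cell are used and the blank gap between cells (roughly one pixel wide) stands in for the skipped pixel, so the pitch stays at two pixels; `cell_pos_t` and `logical_to_cell` are hypothetical names.

```c
#include <stdint.h>

#define POINTS_PER_CELL 3u   /* columns 0, 2 and 4 of each 5-pixel-wide cell */

typedef struct {
    uint8_t cell;   /* which custom character (0..7) */
    uint8_t col;    /* pixel column inside that character: 0, 2 or 4 */
} cell_pos_t;

/* Map logical graph column x to (character, pixel column). The inter-character
 * gap is assumed to act as the skipped pixel between column 4 and the next 0. */
static cell_pos_t logical_to_cell(uint8_t x)
{
    cell_pos_t p;
    p.cell = (uint8_t)(x / POINTS_PER_CELL);
    p.col  = (uint8_t)((x % POINTS_PER_CELL) * 2u);
    return p;
}
```

With all 8 custom characters, that gives 24 evenly spaced logical columns (0 through 23).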

...

I kinda dig the weird characters that resulted from the glitch... Looks like some ancient script. It always amazes me there can be patterns in a measly 5x7 grid that I haven't seen before.

...

Oh, the now-pithy ihexFlasher...

-

Beat...

08/17/2022 at 04:52 • 2 comments

I wrote up a draft what seems like quite some time ago, basically about this same thing... Why not just post it? Dunno, maybe my brain's in a slightly different place now, and I can go back and compare...

...

I guess it boils down to "I'm beat."

I mean, it seems fair, considering this project was pretty much the only thing I was doing for months, aside from... well, let's just call it a full-time nonpaying job, with a lot of overtime.

I'm not exactly incapable, now, of seeing the project-ideas I had for this, the ideas which the ihex-flasher was to enable... the ideas I'd built-up all last year while working on #Vintage Z80 palmtop compy hackery (TI-86) ... but I sorta can't see them, either.

I guess the question, somewhere, was what was the goal...? And, well, I really dunno.

Flashy doodads were supposed to be the icing on the cake after the ihex-flasher, but then my flashy doodads turned out to be a huge amount of work; 3.3V level-shifters aren't so bad, unless they need to be wired to FFC connectors, and so forth. I've unquestionably done these sorts of things with point-to-point wiring before, and it was worth it then... but right now it just doesn't seem that way.

Oh, I remember... One idea was printing highlighter-ink in red and green in a grid on a transparency, and backlighting that with blue-LEDs... Turning a B/W display into color... Actually, that's kinda intriguing just to see how it looks. But, yeah, gotta either get a color inkjet that has highlighter cartridges, or hack mine...

I had actually looked into that a little, way-back... the oldschool B/W bubblejet I've got has such a standard cartridge that they can still be bought, and the service manuals actually show the pinouts and timing(!). Turns out the color cartridge is nearly identical, they basically just rewired a couple pins and divided up the 64 B/W nozzles into 48 total for CMY. It'd probably be an easy hack.

Top that off, when I worked on #The Artist--Printze , one of the important factors was modifying the driver because, at the time, I thought the cartridges were surely too old to still be available... Printze's cartridge was empty and easily hadn't been touched in twenty years. After refilling it with ink from the cheapest cartridge I could find locally ($5), it turned out that the first 16 nozzles were non-functioning. So, I modified the driver to print with the 48 remaining.

HAH!

So, I guess, it'd be pertydurnsimple to go from there to use that color cartridge with its 48 nozzles.

Heck, I wouldn't even need to make the driver color, knowing which nozzles to use. Hmmm....

Oh, wait... no... dropping different colors in the same row means printing that row three times... heh. Well, I also have a pretty thorough understanding, now, buried in there somewhere, of how to control it manually (via my own software)... Which might actually be better, as I have no idea whether the fluorescent ink drops would be thick or dense enough to convert all the blue.

Heh. Well, this is *sorta* a welcome aside from the weeks of "beat."

I dunno, it seems a bit crazy.

And, well, the idea of a fluorescent display with a blue backlight, it turns out, was actually patented, many moons ago.

And, dagnabbit, look at those mofos on the youtubes... I didn't even choose to make a "short" of the next vid, wherein I had three colors; I even tried everything I could to *not* make it a "short"... Now analytics says most of its views were from "the shorts feed" (youtube *seriously* shared *that* with *everyone*?!), and I got friggin downvotes! I feel kinda bad right now, TBH. Sheesh! Youtube is a friggin' bully, trying to draw in other bullies! Good thing I've got 30-some years of slightly thickened skin; I wonder what the next generation gets out of this!

.

Anyhow, I know it'd be very different from "the real thing". Real color LCDs put the filter *inside* the glass panel, next to the liquid crystal, so that the viewing angle doesn't have to be *perfectly* head-on; otherwise you'd see unintended colors from, say, a green pixel's light going through a red filter when the glass is tilted.
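A rough way to see why the filter's distance from the liquid crystal matters: light leaving a pixel at viewing angle $\theta$ bends to $\theta_g$ inside glass of index $n$, and after crossing a thickness $t$ it has shifted sideways by $d$. The numbers below are assumed, illustrative values, not measurements of this display:

$$\sin\theta_g = \frac{\sin\theta}{n}, \qquad d = t\,\tan\theta_g$$

$$\theta = 30^\circ,\; n \approx 1.5,\; t = 1\,\text{mm}: \quad \sin\theta_g = \tfrac{1}{3},\; \theta_g \approx 19.5^\circ,\; d \approx 0.35\,\text{mm}$$

That is not small next to the dot size of a typical character LCD, so a filter on the outside of the glass would likely show exactly the color-bleed effect described above at quite modest angles.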

So... I'm *expecting* that effect, and actually thought it might be kinda interesting to see what it looks like. A "filter" on the back might be best, and could plausibly allow for an interesting look with solid-color pictures, like cartoons... A filter on the front might be similarly interesting, but maybe with a "sharkskin" effect, which could be pretty neat for, e.g., my idea of using *several* of these displays, each displaying a single large letter.

Heh.

None of this, by any means, requires a z80 machine... I just figured since it's already running, I might-as-well use it for experimenting with ideas like these.

I guess I'd kinda contemplated coding such experiments on the machine itself, but I guess there's really no reason for that.

...

Well, it's an idea, anyhow.

In the "draft" log from a while back I started a list of such ideas. Most were far more specific to the z80 and the machine, itself. I even looked into interfacing a Virtual-Memory chip meant for the Motorola 68K series with it, as well as ISA cards. I dunno...

Another idea is streamlining the "bring-up" process a bit, so it could be used for other devices, which was actually a major driving factor in this project... The fact that output from C can be hand-tuned means that, as long as your chip has a C compiler, you could bare-metal it from the get-go in C, without a pre-provided CRT, headers, etc.... Which would mean that pretty much the exact same learning process could apply to most chips/systems basically immediately after one's first toggle-switch experiments.

I've gone on about that one several times (maybe limited to drafts?)... And, well, it gets a bit lofty; suggesting a sort of "universal assembly language" and a C compiler that compiles to it.

But, since the ihex-flasher, I guess, it started snapping me back to reality. I mean, sure, such a thing might be doable, but what's the use? E.G. with the ihex-flasher, it *requires* a Von Neumann architecture, because the flash chip can't be both read and programmed at the same time, so the flash-programming code has to be run from RAM, unless you've got two chips... which... is kinda the point. The hardware attached to the device, and how it's attached, has a tremendous impact on how it gets programmed. So... Even with some sort of universal *language*, there's still a need for *very specific* coding....

...And... Here I'm beat.

At that point, yah mightaswell have a C-Runtime, and write drivers for linux. Or Arduino. Arduino on a Z80? 6502? I guess, now that I think about it, I was sorta going for something like that. Hah! I'm really not even particularly fond of what arduinos have brought to the table... as far as inspiring good coding practices and low-level understanding.

I dunno.

Maybe that's why I'm beat. I dunno.

-

? Spectradata SD-70 in the wild ?

08/05/2022 at 20:07 • 0 comments

Did You Buy It?

As far as I've been able to gather from the interwebs, only two of these machines may've ever existed...

I'd been contemplating buying the second to keep them together, and also maybe to find out a few unknowns. (E.G. What is that AMD chip's part number? Did the other unit have the GPIB chips installed? Did they hand-etch the front-panel board on both of them? Were they built at the same time, e.g. for the same customer, or...?)

Anyhow, if you bought the second one (or just happen to have another, or know anything about it) it would be great to hear from you!

eric -> wa -> zhu -> ng

Via:

gm -> ail

Of the dotcoms

(Why can't I unbold that?!)

I'm curious what others' interests may be, or what they may do with it... Reverse-engineering endeavors of their own? Actually use it as intended? (Do you know anything about the equipment it attaches to?) Figured it was a great price for a project-box, a bit like I did at first? (Wanna sell its guts?) Did you find it through the logs here? If you put anything on the net about it, I'd be happy to put some links in here. So forth.

For the search engines:

spectra data SD-70

Spectradata SD70

-

The Rominator: Overkill maybe.

08/01/2022 at 09:11 • 0 commentsIt works!

As I recall (shyeah right... like I'mma go back and read all that!) the ordeals of the last log basically amounted to really weird luck prompting me to realize some variable initializations never occurred.

After I fixed that, it all seemed to work as tested on a PC, up to the point of actually performing the Flash-writing, which couldn't be tested yet because the SD70 has separate chip-selects for each of its four 16K memory sockets, and the Flash I've been using in the first socket requires an "unlock" procedure that accesses 32K of its address space.
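Why 32K? The Flash chip isn't named here, but many parallel Flash parts use a JEDEC-style command sequence. A minimal sketch, assuming a part like the SST39SF010A, whose byte-program sequence is AA at 0x5555, 55 at 0x2AAA, A0 at 0x5555, then the data byte at its address; the struct and function names are hypothetical. Address 0x5555 has A14 set, which a 16K socket (A0..A13 only) can't reach on its own.

```c
#include <stdint.h>
#include <stddef.h>

/* One bus write: address as the Flash chip sees it, plus the data byte. */
typedef struct { uint16_t addr; uint8_t data; } flash_write_t;

static void set_write(flash_write_t *w, uint16_t addr, uint8_t data)
{
    w->addr = addr;
    w->data = data;
}

/* Assumed JEDEC-style byte-program sequence (e.g. SST39SF0x0 parts):
 * three unlock/command writes, then the byte itself. Fills out[0..3]. */
static size_t flash_program_seq(uint16_t addr, uint8_t data, flash_write_t out[4])
{
    set_write(&out[0], 0x5555u, 0xAAu);
    set_write(&out[1], 0x2AAAu, 0x55u);
    set_write(&out[2], 0x5555u, 0xA0u);
    set_write(&out[3], addr, data);
    return 4;
}

/* True if a chip address needs A14 driven high, i.e. it lies beyond
 * the first 16K window that a single socket decodes. */
static int needs_a14(uint16_t chip_addr)
{
    return (chip_addr & 0x4000u) != 0;
}
```

Under that assumption, the programming code has to toggle the chip's A14 between the first and second command writes, which is exactly the "actually control it" requirement for A14.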

The plan since I started this flash-endeavor has been to have a jumper on "The Rominator" which selects one of two 16k pages to boot from. Simply switch the jumper to boot into the firmware-uploader, switch it back to boot into the new firmware.

I went a little overboard because, well, the simplicity of the idea grew in complexity quite quickly.

E.G. the SD70's ROM sockets obviously don't have WriteEnable... So I need a wire and test-clip for that.

The A14 line is pulled high, as for smaller ROMs that pin has another purpose, so I already needed/had a jumper to disconnect it and a resistor to pull the chip's A14 low... But now I need a way to actually control it (for unlocking the flash-write) AND a way to invert it (for selecting which image to boot from). OK, three jumpers.

The Output-Enable is connected to the Chip-Enable (maybe it decreases access time?), but the flashing procedure requires /OE to be high. I ran into the same with #Vintage Z80 palmtop compy hackery (TI-86), so if I want to use The Rominator in another project, it might benefit there as well from yet another jumper and another test-lead.

The Chip-Enable, now, has to have *two* inputs ORed together, and another test-lead.

Surely I'm forgetting something.

While I was at it, I decided to give A15 similar treatment as A14... Now the original "stock" firmware can be booted via jumper, as well. Oh, right, and A15 is also pulled high at the socket, so had to have a jumper and pull-down.
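The image selection can be modeled as the CPU supplying only A0..A13 to the socket while the chip's A14 and A15 come from jumpers. A minimal sketch under that assumption (it ignores the test-lead path used while unlocking); `chip_address` is a hypothetical name. With the layout this log ends up using (flasher at 0x0000, a test program at 0x4000, stock firmware at 0x8000), each jumper combination selects one image.

```c
#include <stdint.h>

/* Hypothetical model of The Rominator's boot-image selection: the socket
 * decodes only A0..A13 from the CPU; A14 and A15 come from jumpers. */
static uint32_t chip_address(uint16_t cpu_addr, int a14_jumper, int a15_jumper)
{
    return ((uint32_t)(a15_jumper ? 1u : 0u) << 15)
         | ((uint32_t)(a14_jumper ? 1u : 0u) << 14)
         | (uint32_t)(cpu_addr & 0x3FFFu);
}
```

So a reset vector fetch at CPU address 0x0000 reads chip address 0x0000, 0x4000 or 0x8000 depending on the jumpers.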

OK...

Now, I didn't have much space left on the board, so I was quite pleased to find an SOIC 74F00 in my collection... until I realized 100k pull-down resistors would not be anywhere near spec (600uA out of the input × 100kohm = 60V?!). Alright, well, at that point I was beat (how long has this been, a week?!), so I decided I'll just require that A14 and A15 are always driven by either an output or by the power rails. A14 already has a header, now, for a test-lead, but I didn't do that for A15, so I fudged another header-pin next to the jumper for grounding.
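The arithmetic behind that "?!", assuming typical 74F input figures (a LOW input sources up to about $I_{IL} = 0.6\,\text{mA}$ and must stay below $V_{IL,max} = 0.8\,\text{V}$):

$$V = I_{IL}\,R = 0.6\,\text{mA} \times 100\,\text{k}\Omega = 60\,\text{V}$$

$$R_{max} = \frac{V_{IL,max}}{I_{IL}} = \frac{0.8\,\text{V}}{0.6\,\text{mA}} \approx 1.33\,\text{k}\Omega$$

So a pull-down that reliably holds a 74F input LOW would need to be around 1k or less, nearly two orders of magnitude below the 100k on hand.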

Heh. This has gotten crazy. BUT: The previously-unnamed Rominator was of great use in #Improbable AVR -> 8088 substitution for PC/XT , and now again in this project, so I guess its new versatility could be useful in another project down the line.

(Did I mention I spent Hours fighting my printer several weeks back so I could print its schematic small-enough to strap to it? I guess I'll have to do that again. Thankfully this time I think I know why it was so difficult last time. Though, this time there's quite a bit more information to fit in there).


Oh, right... So I finally put it in the socket for the first time in what seems like forever (I seriously expected this to take an afternoon, not DAYS) and it still booted the old firmware just as I expected... But, it didn't boot all the way... What?

Took me a while to remember that ordeal of the last log... right, trying to hunt down incredible odds led me to load an earlier version. So, while flashing the latest version, I also flashed the stock firmware at 0x8000... Tried the latter first. No problemo. Tried the firmware-flasher next at 0x0000, burnt an old test-program to 0x4000 (over the serial port!). Not a hiccup. Booted from that for some pithy nostalgia from who can remember how many weeks ago...
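The log doesn't show how the ihexFlasher decodes what comes over the serial port; its name suggests Intel HEX, which is also sdcc's usual output format. As a hedged sketch (not the flasher's actual code), this parses one `:LLAAAATT<data>CC` record and checks that all bytes, checksum included, sum to zero mod 256. The struct and function names are hypothetical.

```c
#include <stdint.h>
#include <stddef.h>

/* Parsed Intel HEX record (hypothetical layout). */
typedef struct {
    uint8_t  len;        /* number of data bytes */
    uint16_t addr;       /* 16-bit load offset */
    uint8_t  type;       /* 00 = data, 01 = end of file, ... */
    uint8_t  data[255];
} ihex_rec_t;

static int hex_nibble(char c)
{
    if (c >= '0' && c <= '9') return c - '0';
    if (c >= 'A' && c <= 'F') return c - 'A' + 10;
    if (c >= 'a' && c <= 'f') return c - 'a' + 10;
    return -1;
}

/* Two hex digits at s -> 0..255, or -1 (stops early at a bad digit or '\0'). */
static int hex_byte(const char *s)
{
    int hi = hex_nibble(s[0]), lo;
    if (hi < 0) return -1;
    lo = hex_nibble(s[1]);
    if (lo < 0) return -1;
    return (hi << 4) | lo;
}

/* Returns 0 on success, -1 on a malformed line, -2 on checksum mismatch. */
static int ihex_parse(const char *line, ihex_rec_t *r)
{
    uint8_t sum = 0;
    int hdr[4];
    int b, i;

    if (*line++ != ':') return -1;
    for (i = 0; i < 4; i++) {              /* LL, address high, address low, TT */
        if ((hdr[i] = hex_byte(line)) < 0) return -1;
        sum = (uint8_t)(sum + hdr[i]);
        line += 2;
    }
    r->len  = (uint8_t)hdr[0];
    r->addr = (uint16_t)((hdr[1] << 8) | hdr[2]);
    r->type = (uint8_t)hdr[3];
    for (i = 0; i < r->len; i++) {
        if ((b = hex_byte(line)) < 0) return -1;
        r->data[i] = (uint8_t)b;
        sum = (uint8_t)(sum + b);
        line += 2;
    }
    if ((b = hex_byte(line)) < 0) return -1;   /* checksum byte CC */
    sum = (uint8_t)(sum + b);
    return sum == 0 ? 0 : -2;
}
```

For example, `:0300300002337A1E` parses as three data bytes (02 33 7A) at offset 0x0030, and `:00000001FF` is the end-of-file record.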

Holy Moly, I can In-System-Program, now! And I can program in C!

...only... It's been so long, I can't remember what I wanted to program...

Eric Hertz
