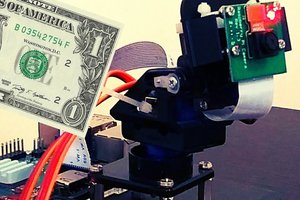

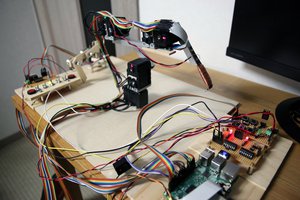

This project builds a functional guardian using a servo, a camera, some LEDs, and Viam's ML Model service and Vision Service.

Guardian Robot that tracks Humans and Dogs

Print, Paint, and Program a Guardian to Track Humans and Dogs Using a Pi, Camera, and Servo

Naomi Pentrel

Richard Sand

Norbert Zare

Afreez Gan

Tobias Kuhn
