-

Portable Demonstration Device for Supercon 2023

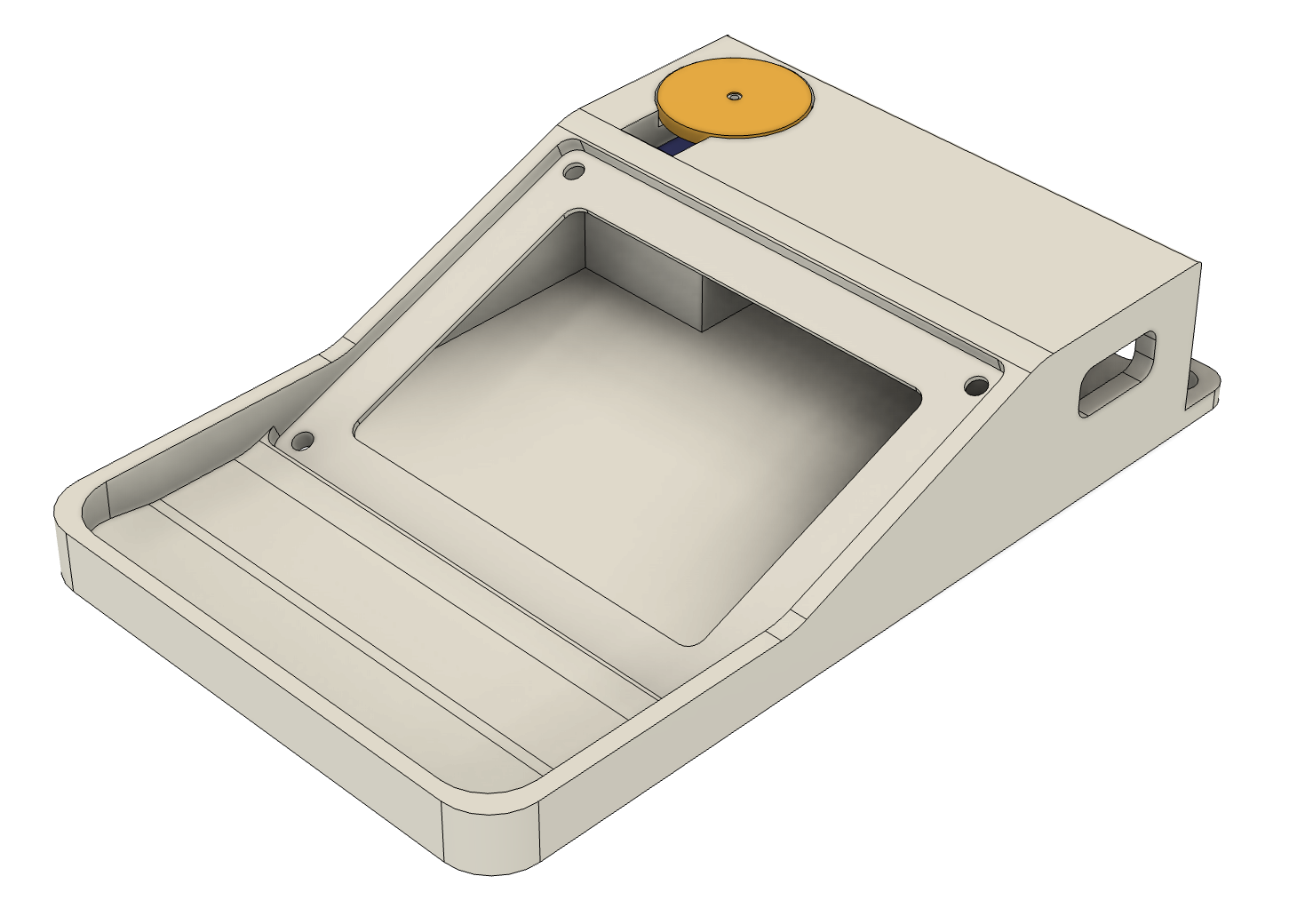

11/03/2023 at 15:02

I'll be attending Hackaday's Superconference this year! As much as I wanted to bring the AI Audio Classifier Recycle Bin to Supercon, it is very impractical to do so. Instead, I have prepared a small device that demonstrates how the AI Recycle Bin works. This way I don't have to carry the bin with me on a 9,000+ mile trip from Surabaya to Pasadena.

By the way, the two holes can be used to attach a lanyard, so does this device count as a Supercon badge?

-

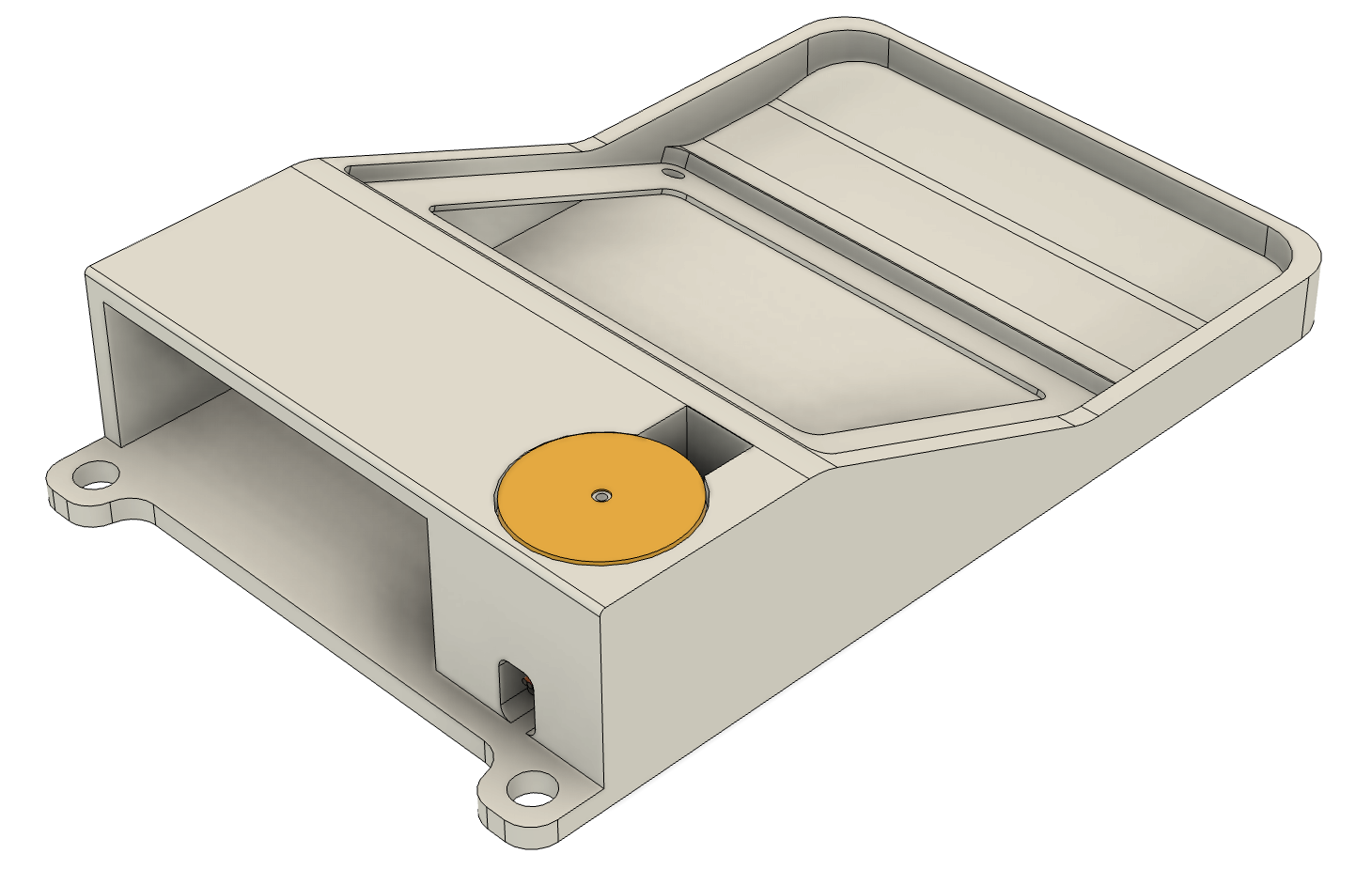

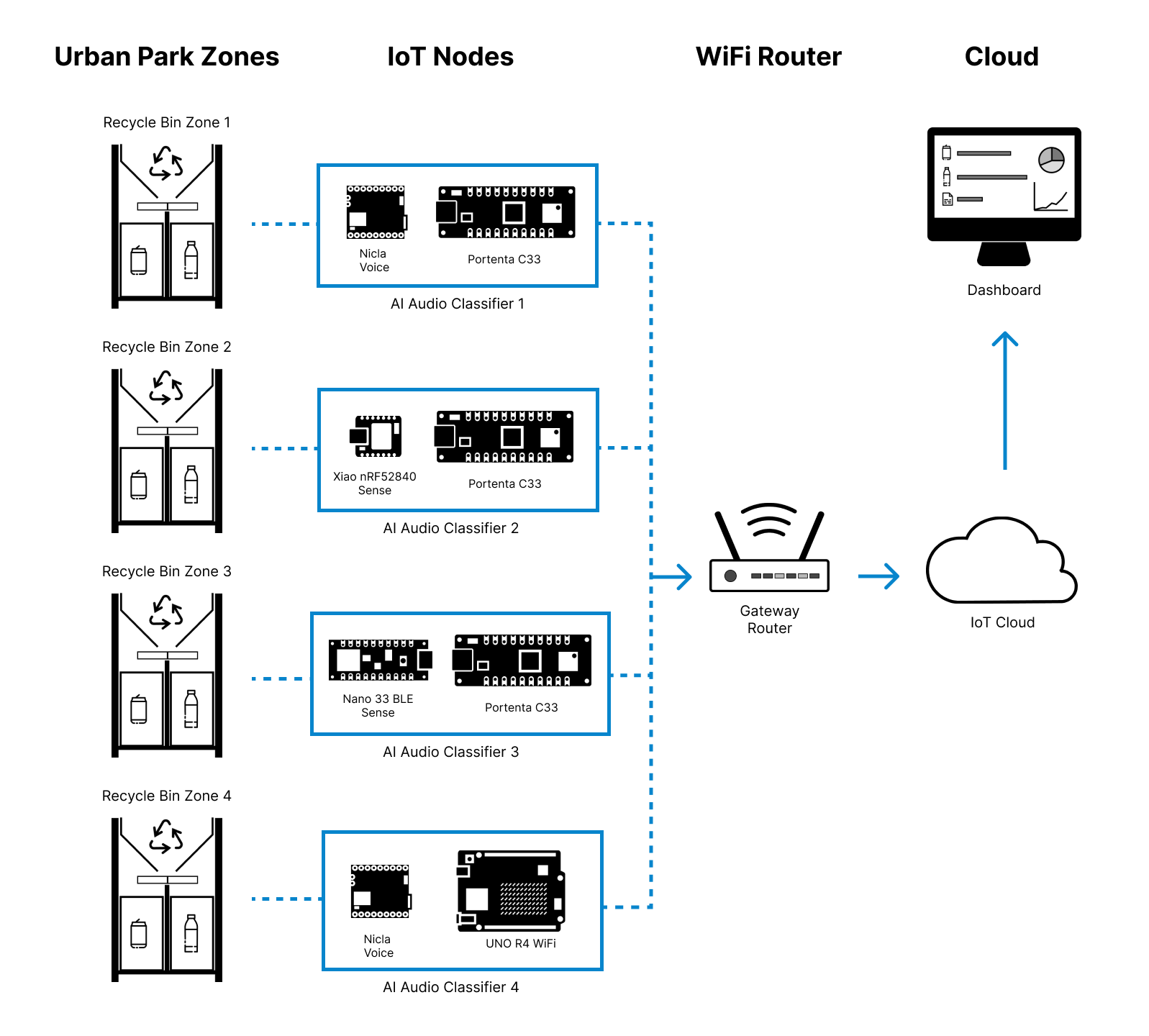

Arduino Cloud Integration (AI + IoT for Smart & Sustainable City)

10/10/2023 at 08:33

The Arduino Portenta C33 is compatible with Arduino Cloud. By using Arduino Cloud we can make the AI Audio Classifier Recycle Bin IoT-enabled. The benefit is that we can create a network of Smart Bins deployed in an urban park or another public open space. We can monitor the number of items in each compartment by counting the collision sounds the items make, and once a compartment is full we can send an alert so that the items can be collected.
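The counting idea can be sketched in plain C++, independent of the cloud connection. This is a minimal illustration, assuming that each detected collision sound corresponds to exactly one item and that each compartment has a fixed, hypothetical capacity. The `Item` order, `FillTracker` class, and capacity value are all made up for the example, and syncing the values to Arduino Cloud variables is left out.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Hypothetical class order; the real order comes from the trained model's labels.
enum class Item : std::size_t { Bottle = 0, Can = 1, Paper = 2, Pong = 3 };

constexpr std::size_t kCompartments = 4;

// Counts detected collision sounds per compartment and reports when a
// compartment reaches an assumed capacity.
class FillTracker {
 public:
  explicit FillTracker(unsigned capacity) : capacity_(capacity) {
    assert(capacity_ > 0);
  }

  // Call once per detected item. Returns true only on the detection that makes
  // the compartment reach capacity, so the caller raises a single alert.
  bool recordItem(Item item) {
    unsigned& c = counts_[index(item)];
    ++c;
    return c == capacity_;
  }

  unsigned count(Item item) const { return counts_[index(item)]; }

  // Estimated fill level in percent, capped at 100.
  unsigned fillPercent(Item item) const {
    const unsigned c = count(item);
    return c >= capacity_ ? 100u : c * 100u / capacity_;
  }

  // Reset after the compartment has been emptied.
  void empty(Item item) { counts_[index(item)] = 0; }

 private:
  static std::size_t index(Item item) { return static_cast<std::size_t>(item); }

  unsigned capacity_;
  std::array<unsigned, kCompartments> counts_{};
};
```

Returning `true` only on the transition to "full" keeps the alert from firing again for every extra item, which would otherwise spam notifications until the bin is emptied.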

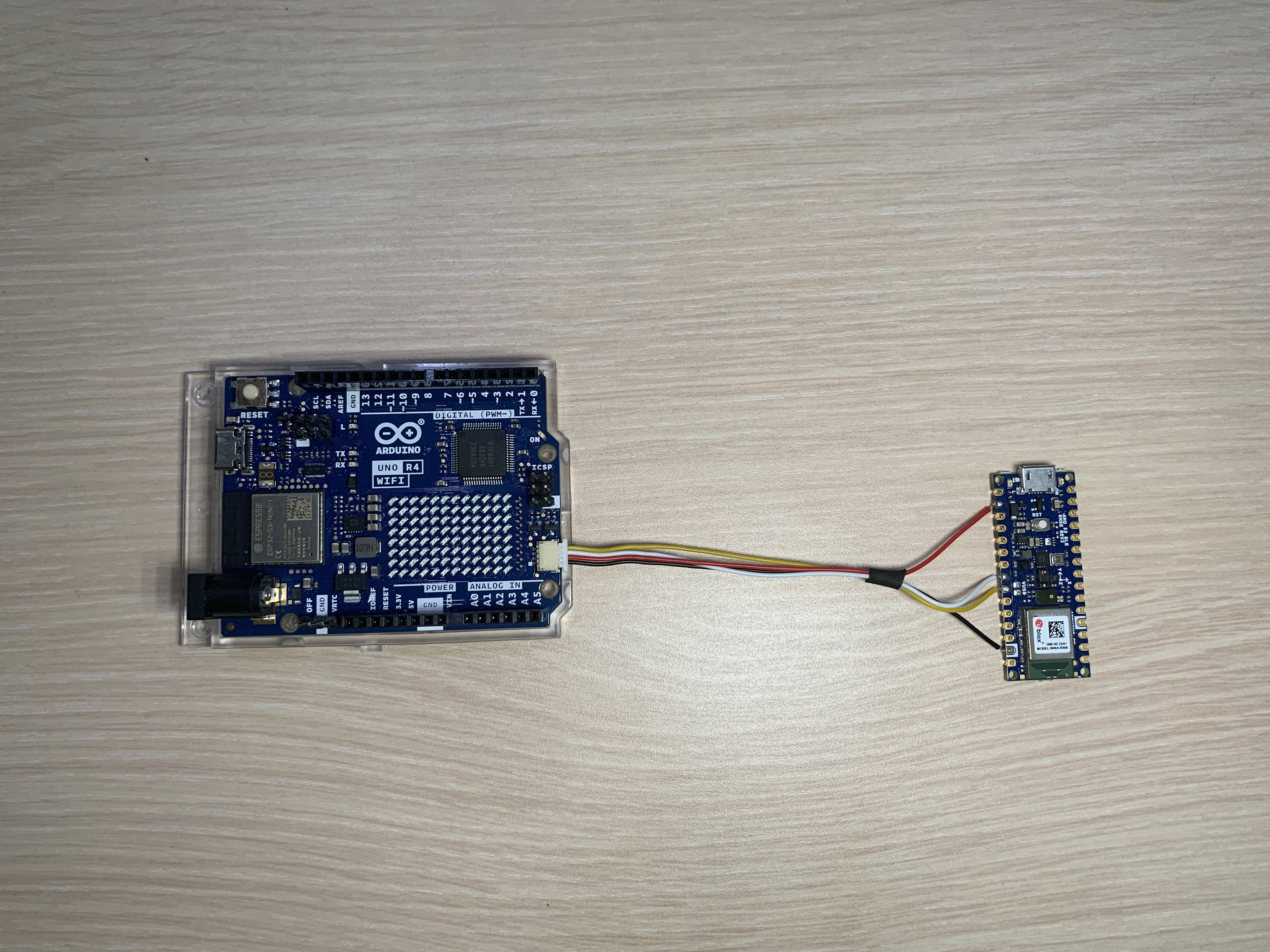

To test Arduino IoT Cloud, I'm first using an Arduino UNO R4 WiFi before deploying the code to the Portenta C33. This lets me check how everything works before moving the code to the main hardware for this project.

Once the code is ready to be deployed to the Portenta C33, we can connect the WiFi antenna for a stronger, more stable WiFi connection.

-

Tuning DSP Parameters (a simple trick to improve accuracy, decrease inference time, and reduce memory usage)

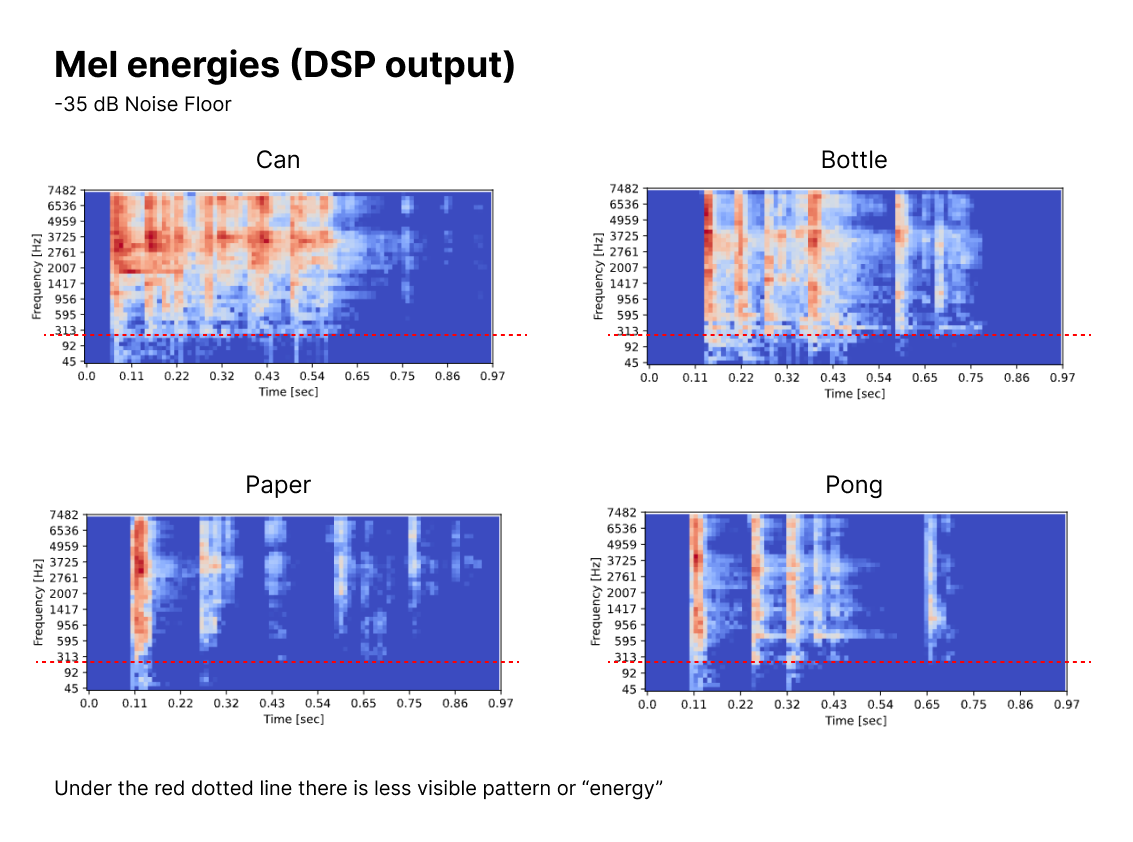

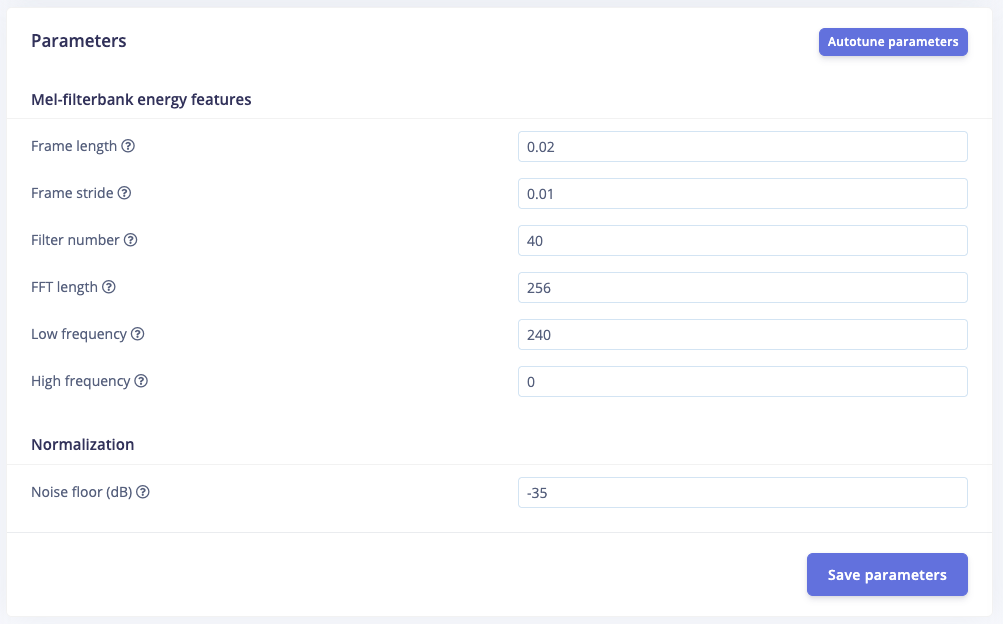

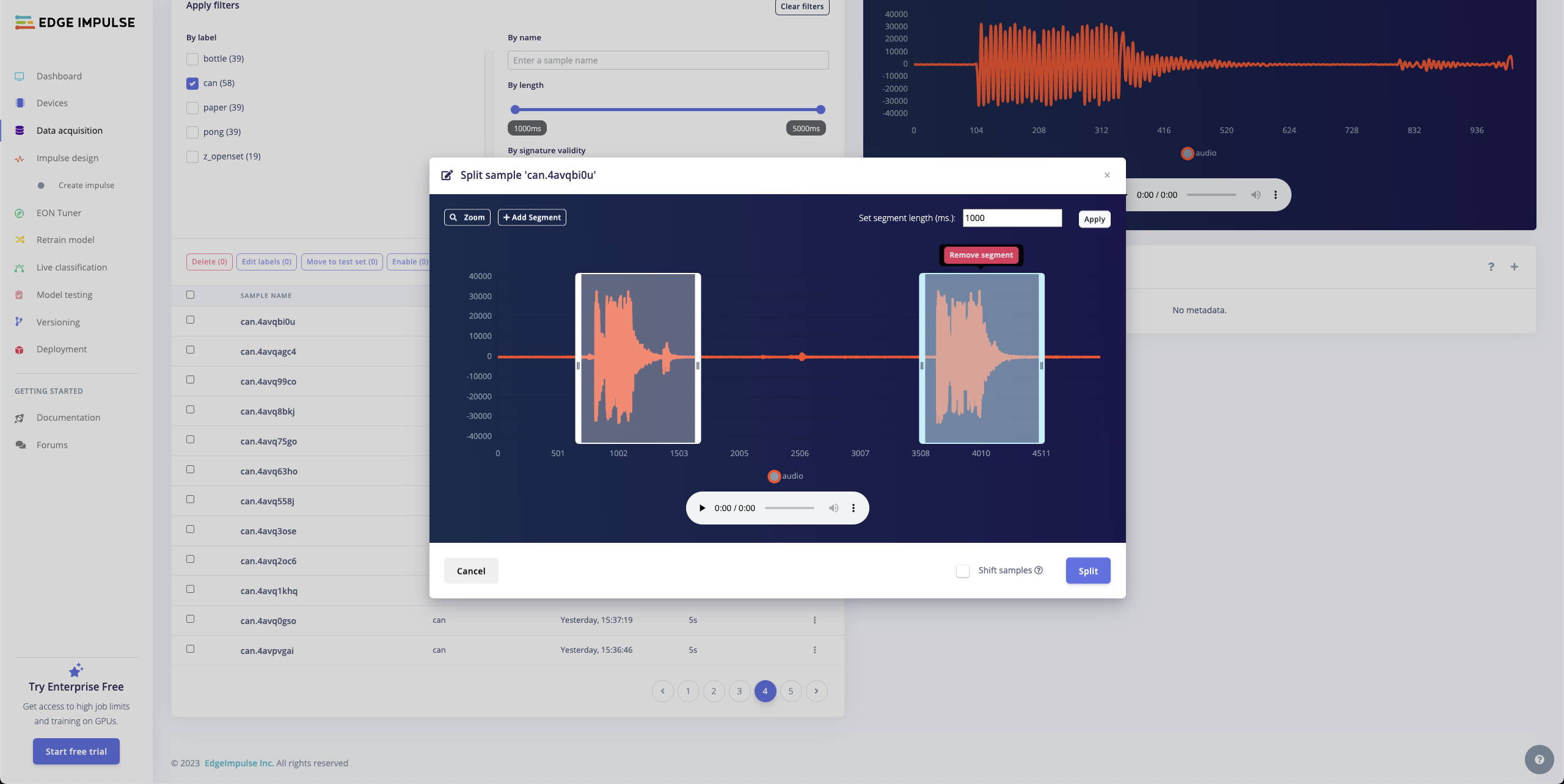

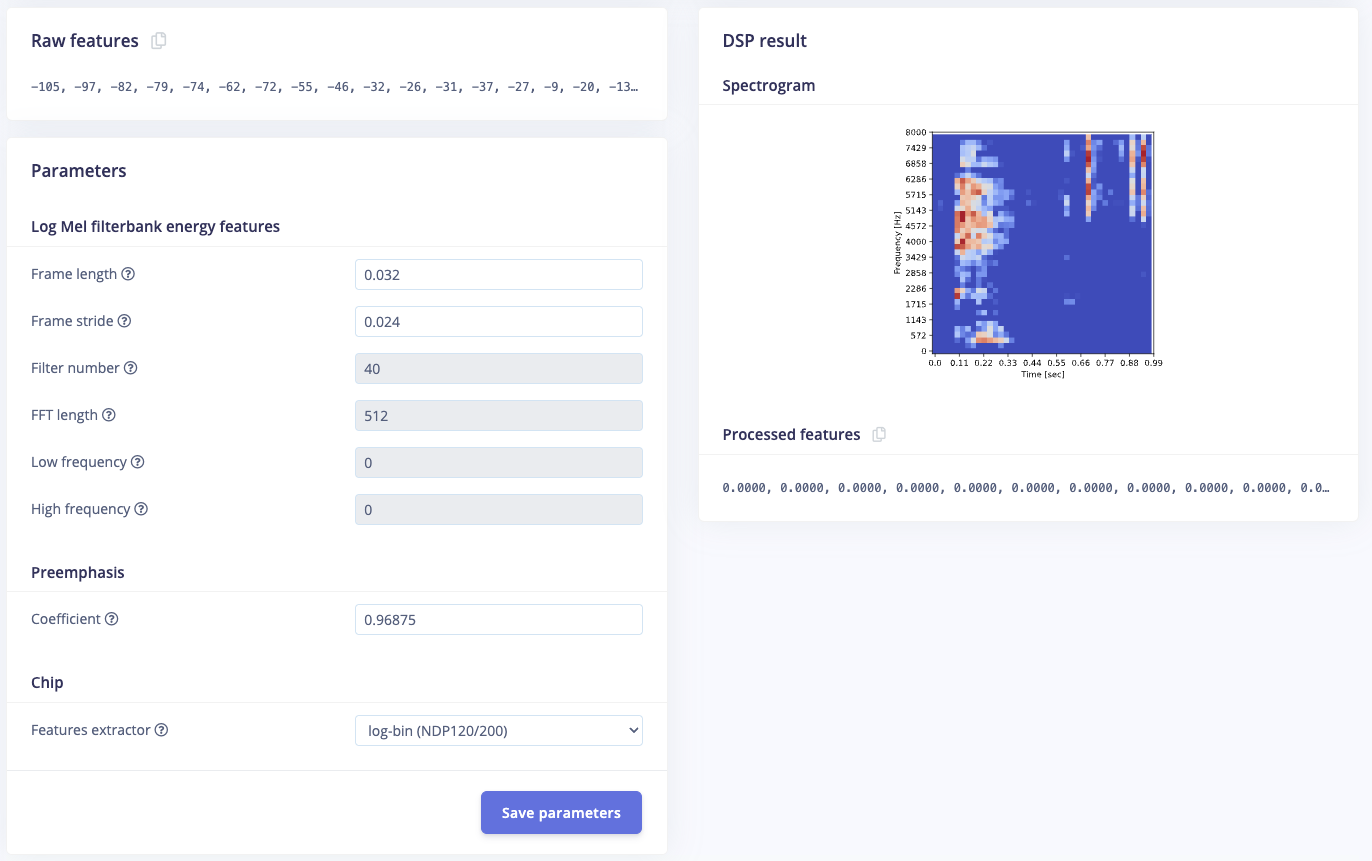

10/09/2023 at 13:10

In the Edge Impulse DSP (Digital Signal Processing) parameters, we can see the Mel energy levels and the FFT bin weighting. From here we can adjust and optimize the parameters to lower memory usage and processing time (DSP + inference time).

The first step is to adjust the noise floor until both the highest Mel energy (red) and the lowest Mel energy (blue) become visible. I settled on -35 dB (it was initially set to -55 dB).
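To show what a noise floor does, here is a generic sketch, not Edge Impulse's actual code: linear Mel energies are converted to dB, anything below the floor is clamped to it, and the result is rescaled to 0..1. It assumes the energies are already normalized so that 0 dB is the maximum; the function name `applyNoiseFloor` is hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Converts linear Mel-band energies (assumed normalized so 1.0 == 0 dB) to dB,
// clamps everything below noiseFloorDb, and maps [noiseFloorDb, 0] dB to [0, 1].
std::vector<float> applyNoiseFloor(const std::vector<float>& melEnergies,
                                   float noiseFloorDb) {
  assert(noiseFloorDb < 0.0f);
  std::vector<float> out;
  out.reserve(melEnergies.size());
  for (float e : melEnergies) {
    // Small epsilon avoids log10(0).
    float db = 10.0f * std::log10(std::max(e, 1e-12f));
    db = std::max(noiseFloorDb, std::min(db, 0.0f));
    out.push_back((db - noiseFloorDb) / -noiseFloorDb);
  }
  return out;
}
```

With a -35 dB floor, a band at -60 dB maps to exactly 0, while with -55 dB it would still be clamped but a -40 dB band would survive. Raising the floor therefore zeroes out quiet background bands and leaves more contrast for the collision sounds.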

From the diagram above we can see that there is little distinguishing pattern below 240 Hz for any of the four object types, so we can set the lowest band edge to 240 Hz.

The benefit of doing this is that we remove most of the wind noise, because the energy of wind noise is typically concentrated at low frequencies (largely below 300 Hz). Setting the lowest band edge to 240 Hz also filters out low-frequency hum before the data is used for inference.
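The effect of the lowest band edge can be made concrete by computing where the Mel filter edges fall. This sketch uses the common `2595 * log10(1 + f / 700)` Mel formula and assumes, purely for illustration, a 16 kHz sample rate, a 256-point FFT, and 32 filters; Edge Impulse's MFE block may place its edges slightly differently.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

double hzToMel(double hz) { return 2595.0 * std::log10(1.0 + hz / 700.0); }
double melToHz(double mel) { return 700.0 * (std::pow(10.0, mel / 2595.0) - 1.0); }

// Returns numFilters + 2 band-edge frequencies, evenly spaced on the Mel scale
// between lowHz and highHz. Triangular filter i spans edges[i]..edges[i + 2].
std::vector<double> melBandEdges(int numFilters, double lowHz, double highHz) {
  assert(numFilters > 0 && lowHz >= 0.0 && highHz > lowHz);
  const double lowMel = hzToMel(lowHz);
  const double highMel = hzToMel(highHz);
  std::vector<double> edges;
  for (int i = 0; i < numFilters + 2; ++i) {
    const double mel = lowMel + (highMel - lowMel) * i / (numFilters + 1);
    edges.push_back(melToHz(mel));
  }
  return edges;
}

// FFT bin index containing a frequency (bin width = sampleRate / fftLength).
int hzToFftBin(double hz, int fftLength, double sampleRate) {
  return static_cast<int>(std::floor(hz * fftLength / sampleRate));
}
```

Under these assumed settings each FFT bin is 62.5 Hz wide, so a 240 Hz lower edge falls in bin 3 and bins 0 to 2 (0 to 187.5 Hz), where most wind rumble and hum live, never contribute to any Mel band.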

-

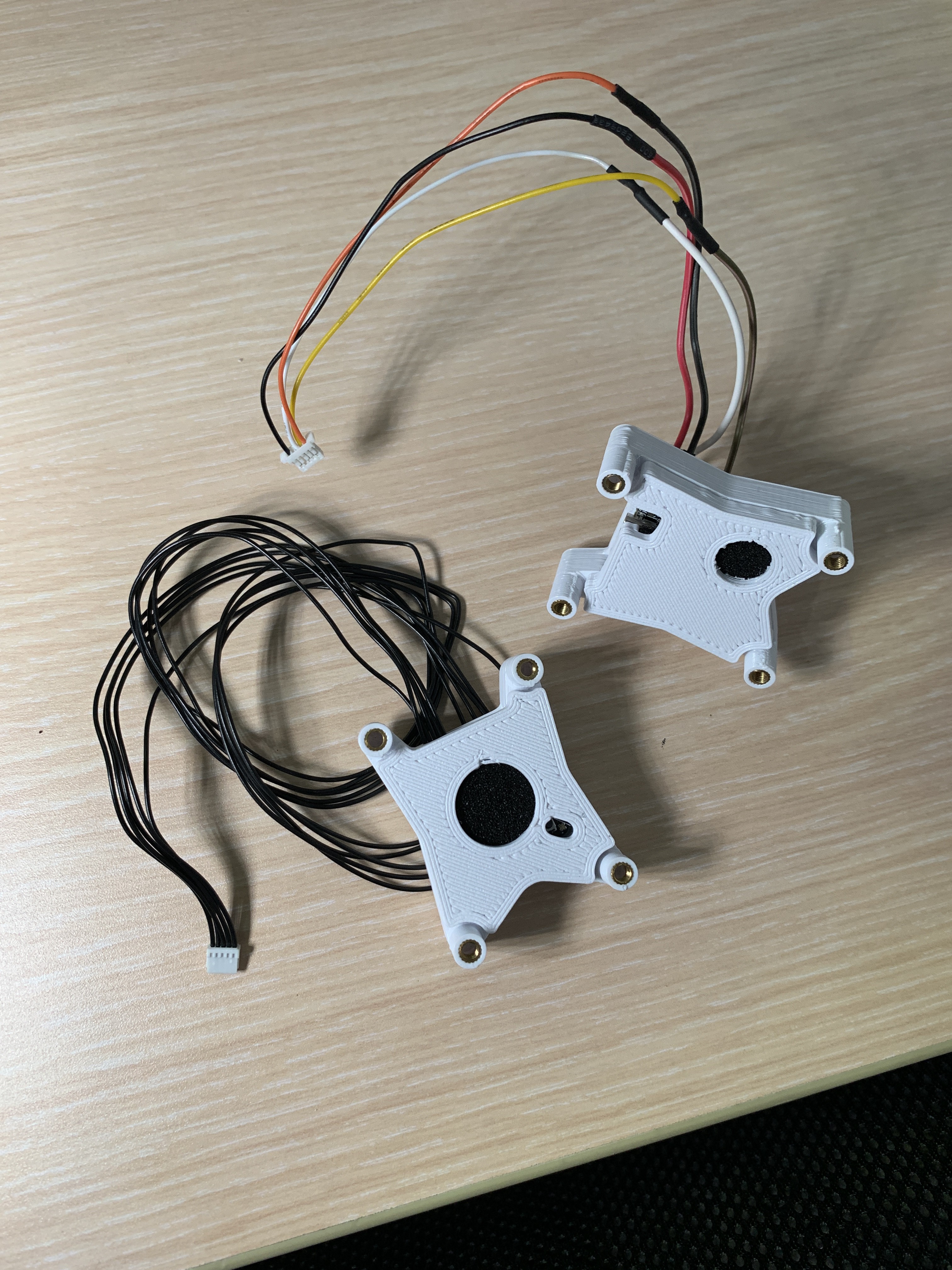

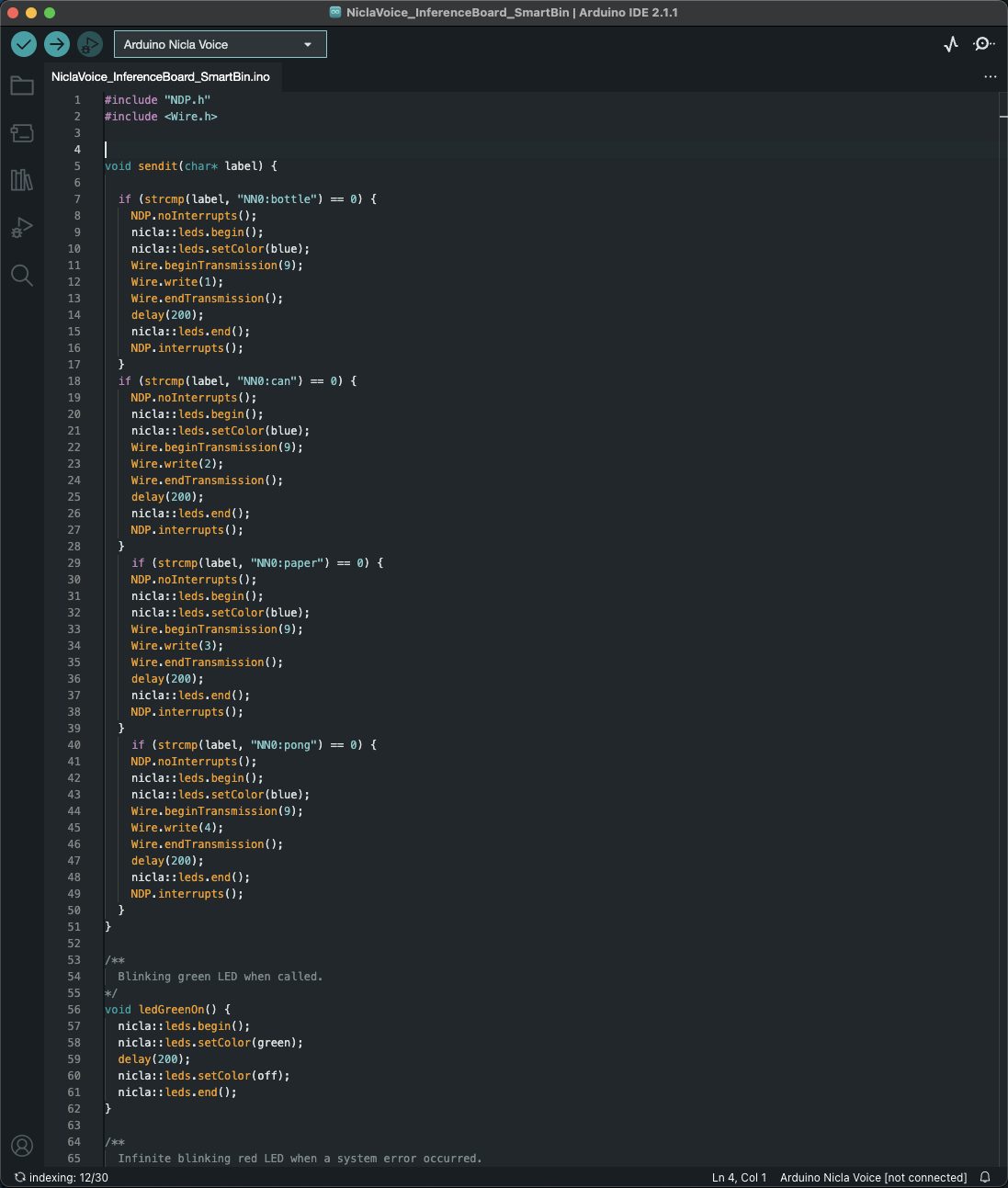

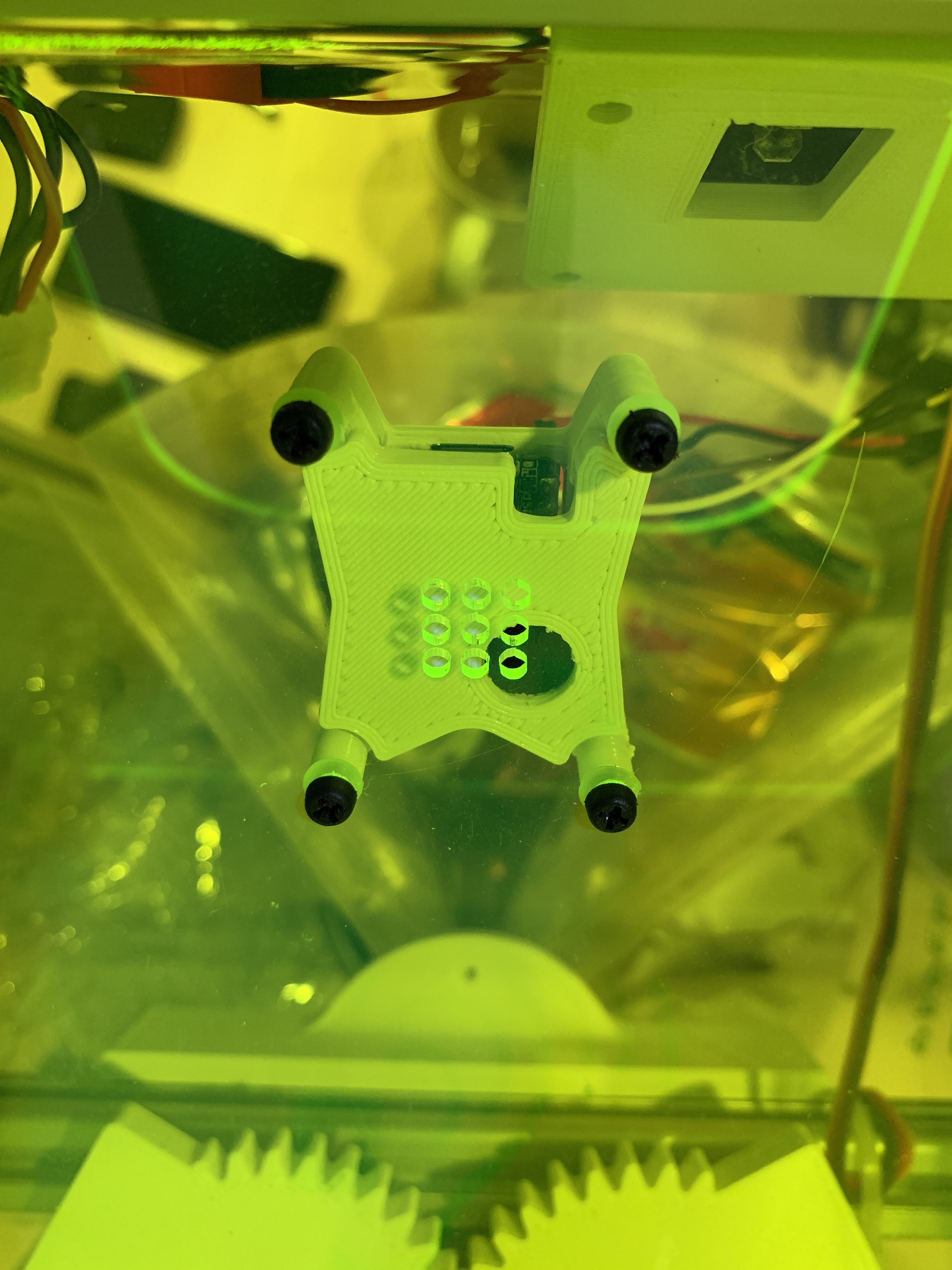

Inference on the Arduino Nicla Voice

10/09/2023 at 07:42

This log discusses how to use the Arduino Nicla Voice as the inference microcontroller board. The Nicla Voice features the NDP120™, an ultra-low-power, special-purpose deep-learning processor. Since this project is battery powered and requires the device to be always on, this board is a very good fit. The board also features a Nordic nRF52832, which we program via the Arduino IDE to send the inference results to the actuator controller board (Portenta C33) over I2C using the ESLOV cable.

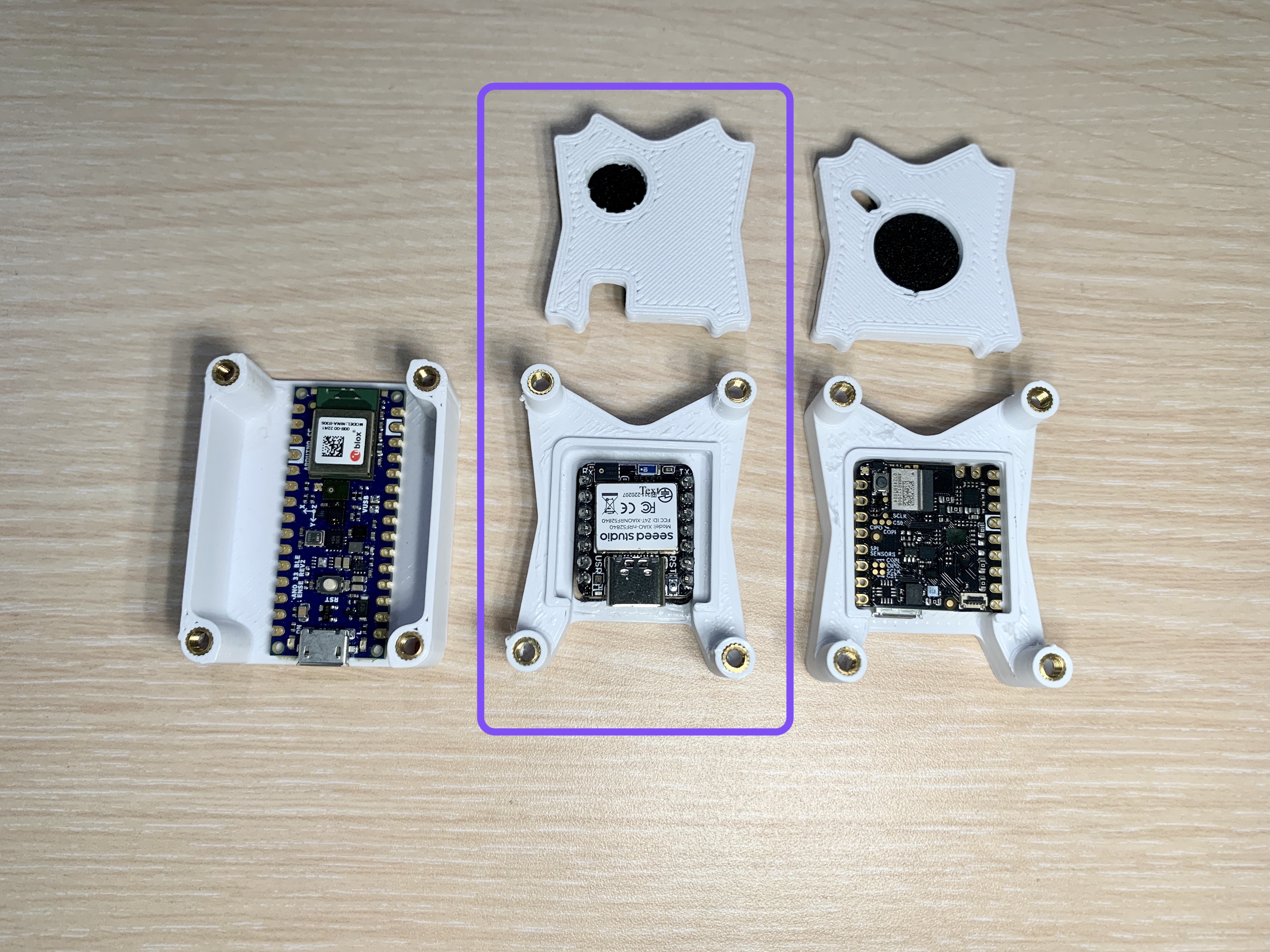

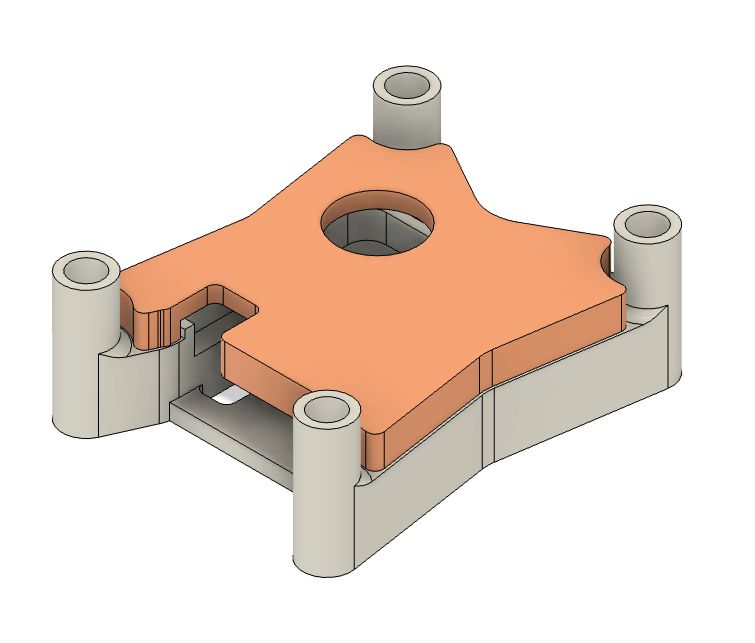

The .stl and editable CAD project files for the case can be downloaded from the "Files" section of this project; the .ino code is there as well.

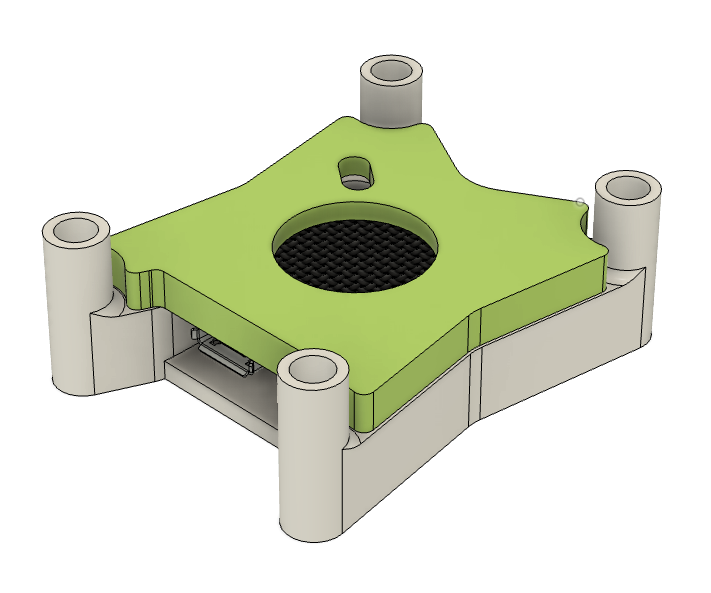

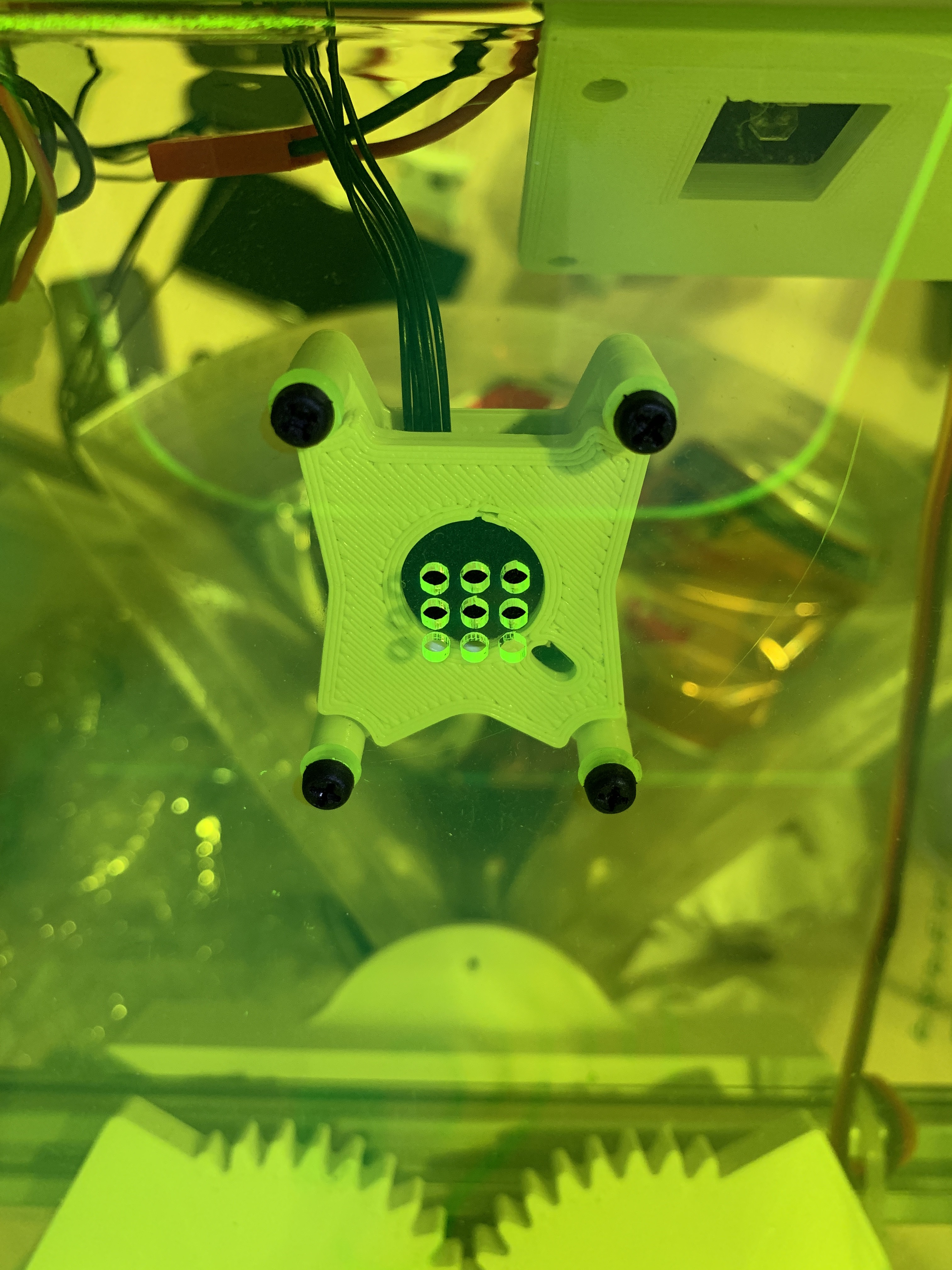

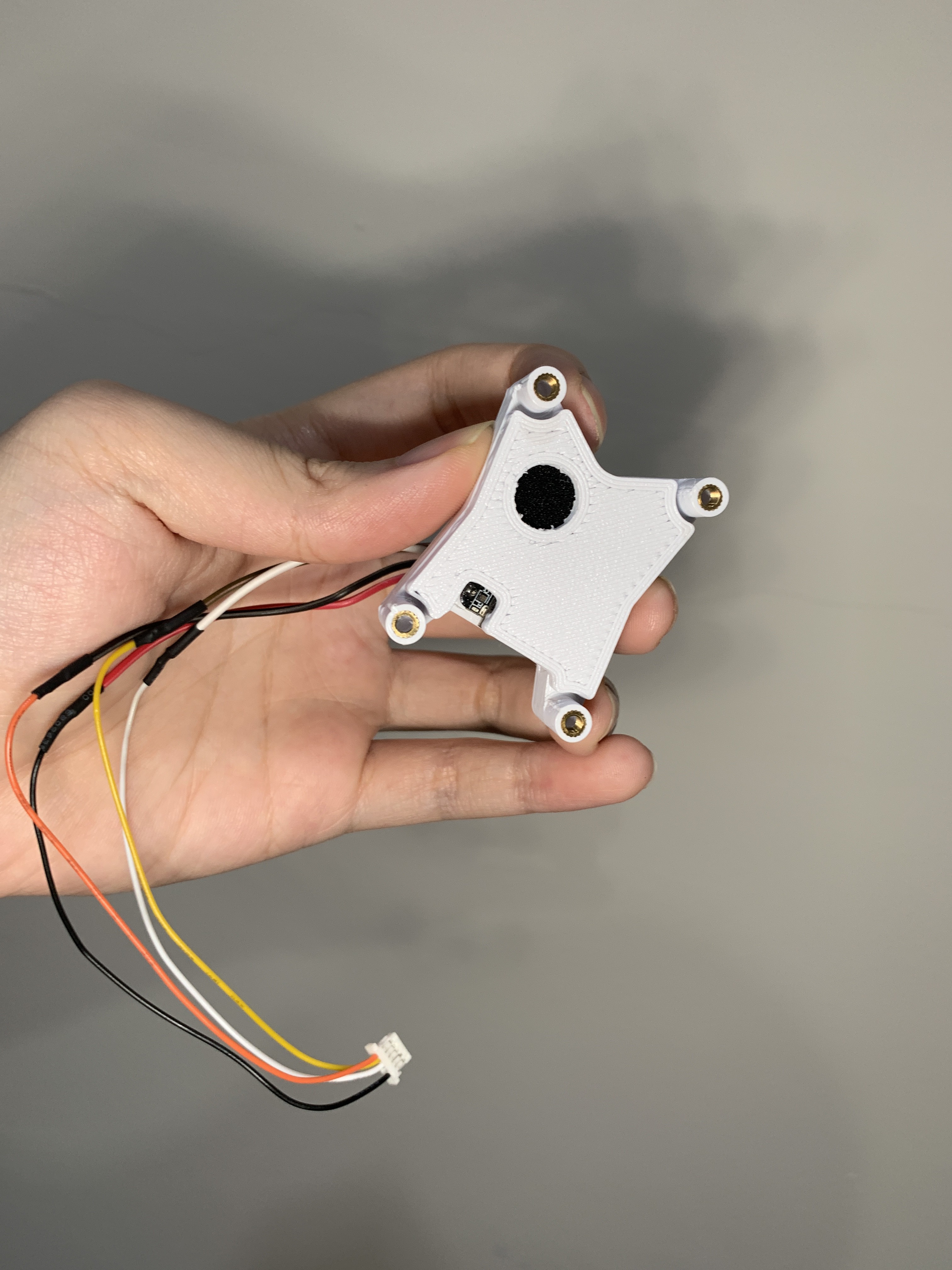

Just like the Seeed Studio XIAO nRF52840 Sense, the Nicla Voice has a snap-fit case that lets us mount the device to the acrylic "pyramid sink". Between the board and the top case there is a thin sheet of foam that filters unwanted wind noise and also protects the Nicla Voice from dirt and moisture.

My Edge Impulse Project for the Nicla Voice: https://studio.edgeimpulse.com/studio/286429

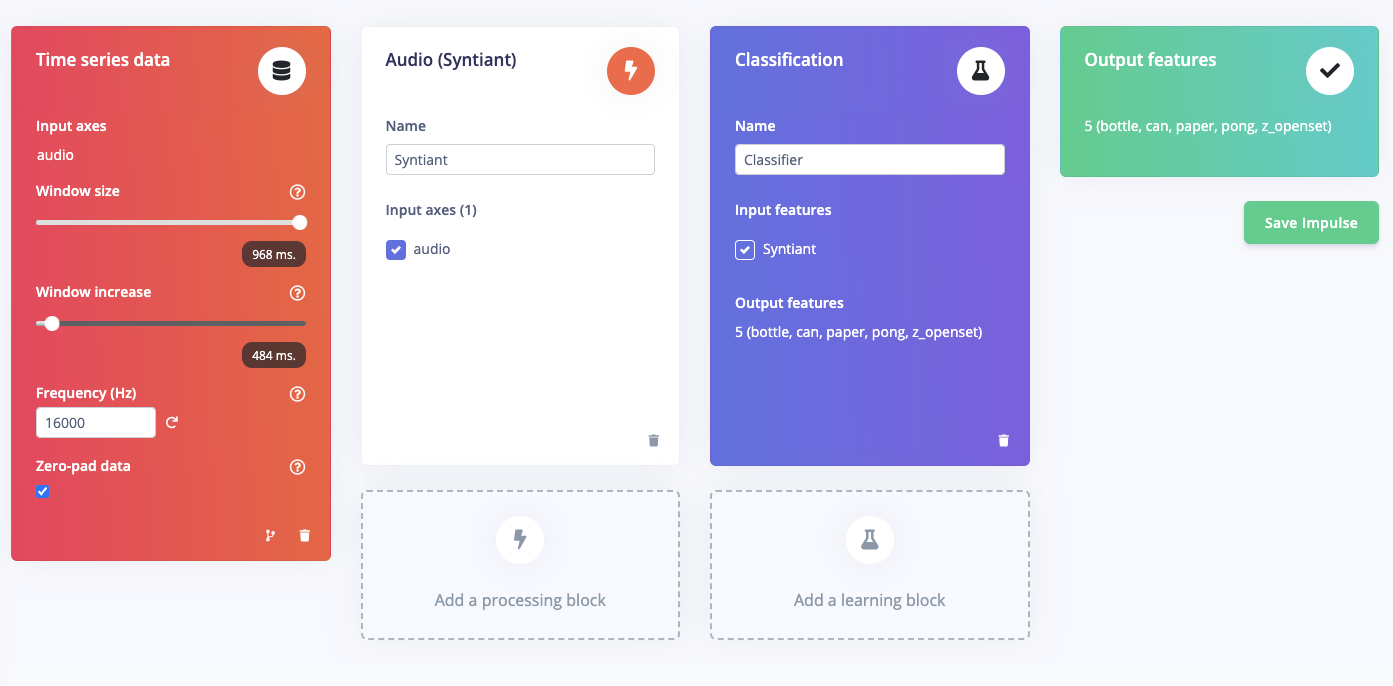

The steps for creating the AI audio classifier model for the Nicla Voice in Edge Impulse differ slightly from those for the Nano 33 BLE Sense and XIAO nRF52840 Sense, because the Nicla Voice must use Syntiant-compatible pre-processing blocks.

In the "Syntiant" parameters tab, change the features extractor to log-bin (NDP120/200).

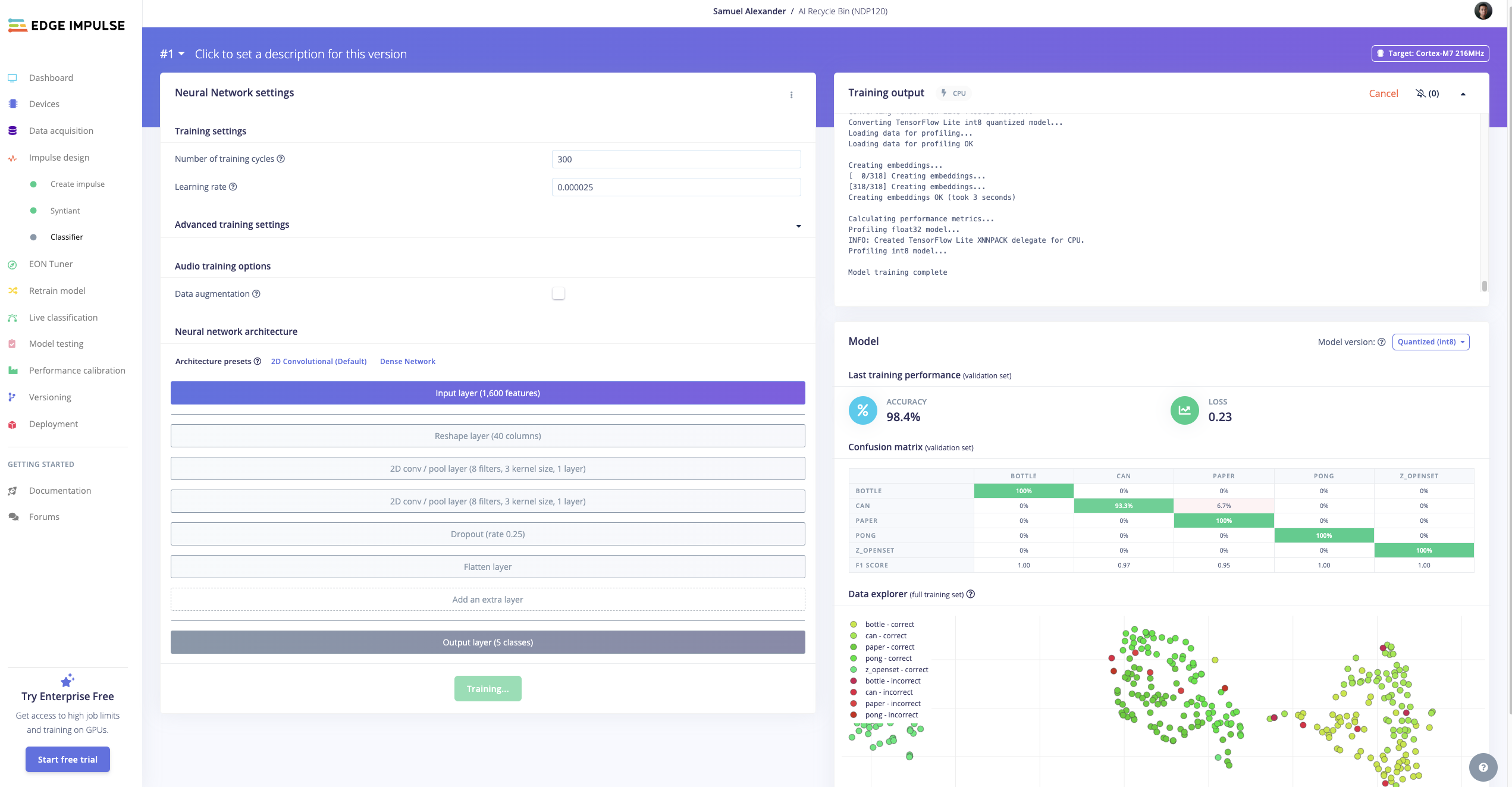

For the model parameters, I found that 300 training cycles with a learning rate of 0.000025 gave the best accuracy and the lowest loss value.

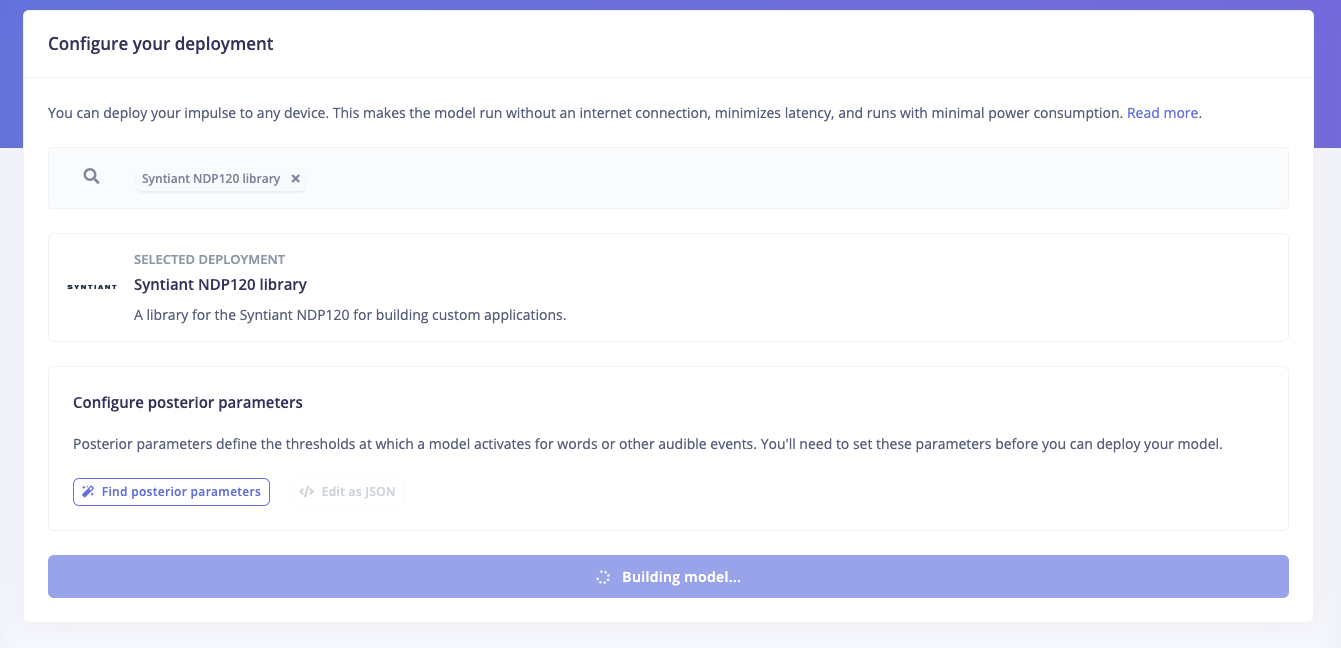

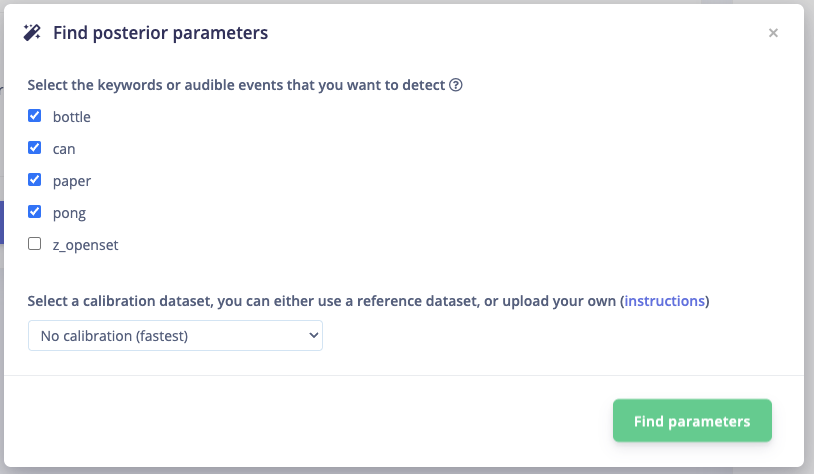

In the deployment tab, click "Find posterior parameters"; this step is specific to the Nicla Voice. Since we want to ignore unknown sounds and noise, we can uncheck "z_openset". It works much like wake-word detection in the virtual assistants on our smartphones or smart-home speakers. The difference is that instead of "Siri", "OK Google", or "Alexa", the event is triggered when the collision sound of one of the classes (bottle, can, paper, or pong) is detected.
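The wake-word analogy can be illustrated with a generic posterior filter: average each class's probability over the last few frames, fire only if the average crosses a threshold, never fire on the open-set class, and then wait out a short cooldown. This is a hypothetical sketch of the general idea; the real posterior handling is configured by Edge Impulse for the Syntiant chip, and the class order, window, threshold, and cooldown here are assumptions.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <deque>

constexpr std::size_t kClasses = 5;  // bottle, can, paper, pong, z_openset (assumed order)
constexpr std::size_t kOpenSet = 4;  // index of the unknown/noise class (assumed)

class PosteriorFilter {
 public:
  PosteriorFilter(std::size_t window, float threshold, int cooldownFrames)
      : window_(window), threshold_(threshold), cooldown_(cooldownFrames) {
    assert(window_ > 0);
  }

  // Feed one frame of class probabilities. Returns the detected class index,
  // or -1 if nothing fired on this frame.
  int push(const std::array<float, kClasses>& probs) {
    history_.push_back(probs);
    if (history_.size() > window_) history_.pop_front();
    if (remaining_ > 0) { --remaining_; return -1; }  // still in cooldown
    if (history_.size() < window_) return -1;         // not enough frames yet

    int best = -1;
    float bestAvg = threshold_;
    for (std::size_t c = 0; c < kClasses; ++c) {
      if (c == kOpenSet) continue;  // never trigger on unknown/noise
      float sum = 0.0f;
      for (const auto& frame : history_) sum += frame[c];
      const float avg = sum / static_cast<float>(history_.size());
      if (avg >= bestAvg) { bestAvg = avg; best = static_cast<int>(c); }
    }
    if (best >= 0) remaining_ = cooldown_;  // suppress repeats of the same impact
    return best;
  }

 private:
  std::size_t window_;
  float threshold_;
  int cooldown_;
  int remaining_ = 0;
  std::deque<std::array<float, kClasses>> history_;
};
```

Averaging over a window trades a few frames of latency for fewer false triggers from a single noisy frame, and the cooldown keeps one bounce of a can from being counted several times.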

After the posterior parameters have been configured, click build model. The model will start building and will be downloaded automatically when it is done. Then flash the firmware, upload the Arduino code, and mount the device on the AI Audio Recycle Bin, and everything is ready to go!

-

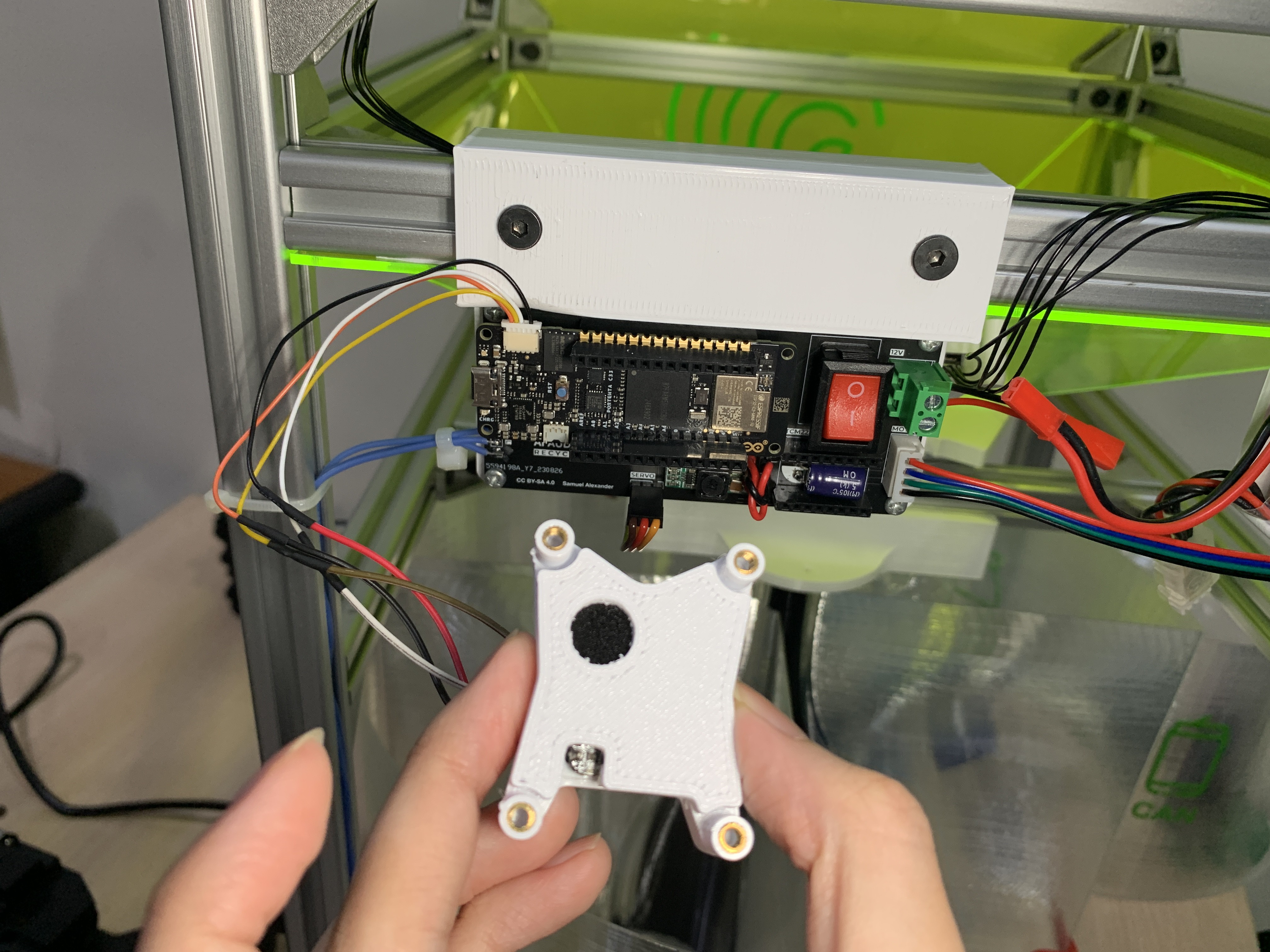

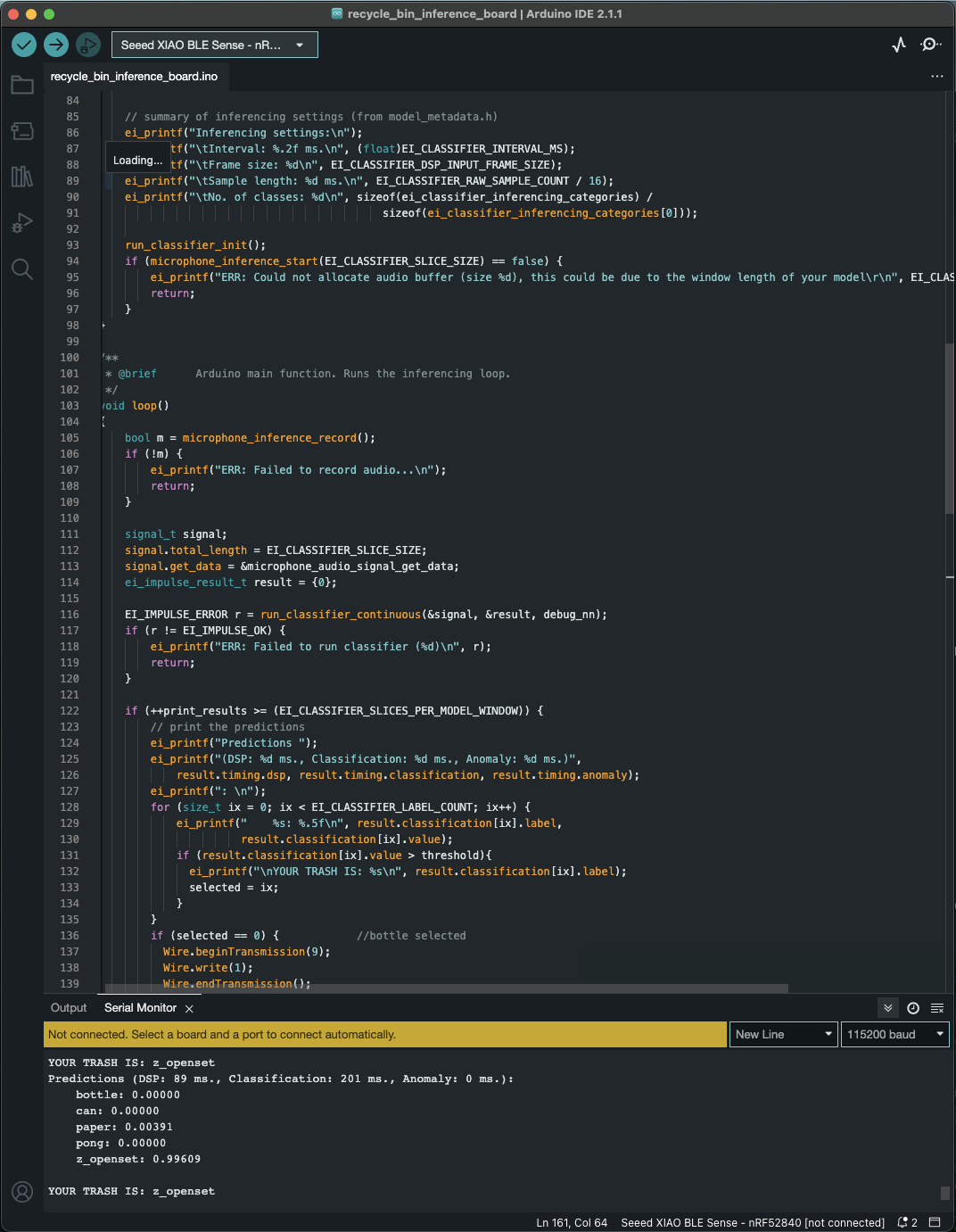

Inference on the Seeed Studio XIAO nRF52840 Sense

10/08/2023 at 15:47

I've tested different microcontrollers for running the audio classifier inference (and DSP). The project initially used the Arduino Nano 33 BLE Sense for both inference and actuator control. Now that I have upgraded the PCB to include an additional microcontroller for driving the actuators, there is more flexibility to switch between different microcontrollers for running inference.

In this log I will discuss using the Seeed Studio XIAO nRF52840 Sense (the next log focuses on the Arduino Nicla Voice). Since the XIAO nRF52840 Sense dev board uses the same nRF52840 as the Arduino Nano 33 BLE Sense, the same Arduino code can be reused without any changes.

My Edge Impulse Project for the Seeed Studio Xiao nRF52840 Sense: https://studio.edgeimpulse.com/public/289422/latest

The difference between this Edge Impulse project and the original one is that the data for this one was acquired with the XIAO nRF52840 Sense. Technically the original one should work, but I redid the process because I'm now using the filter foam and case, so the audio samples can be slightly different.

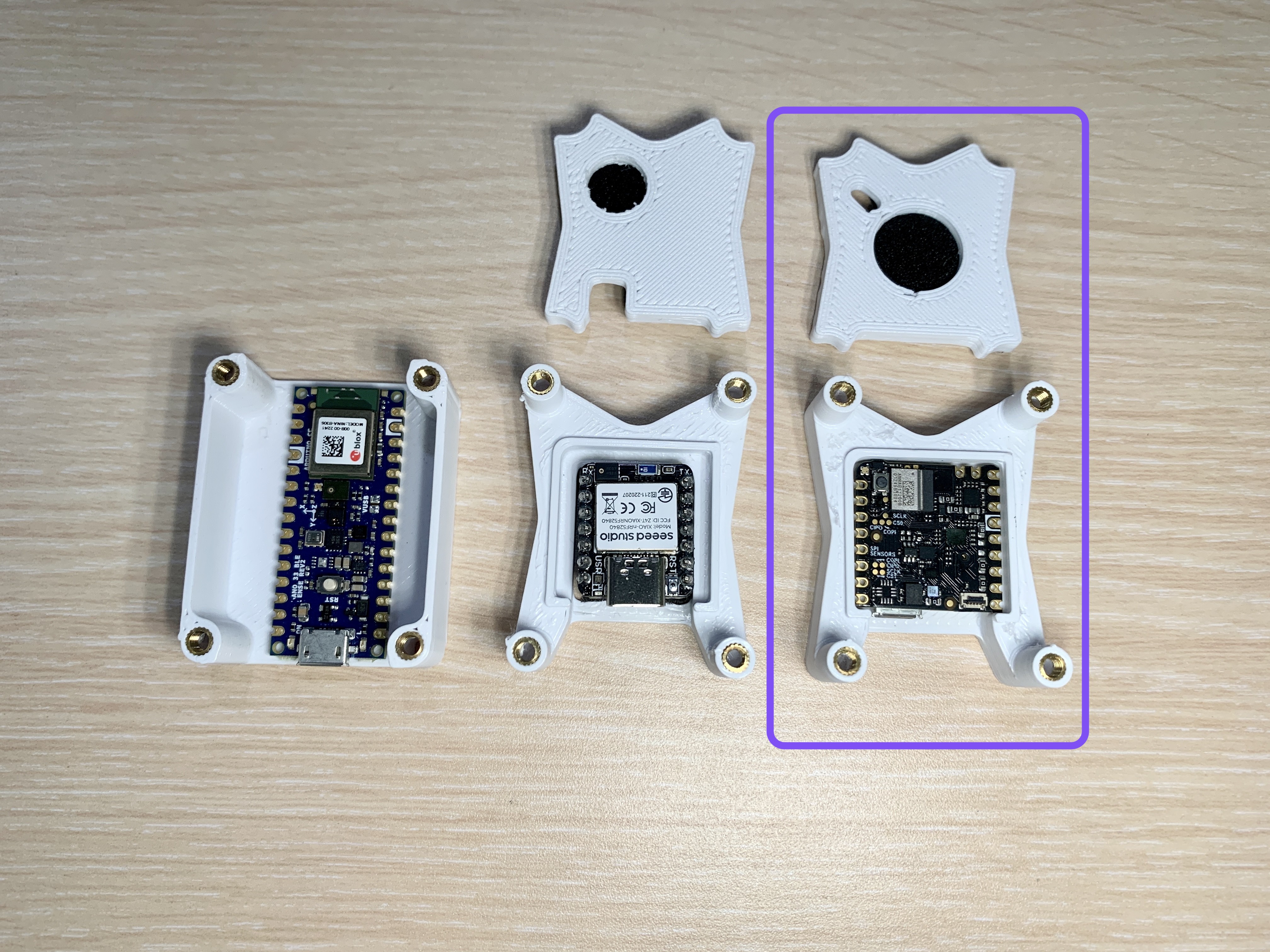

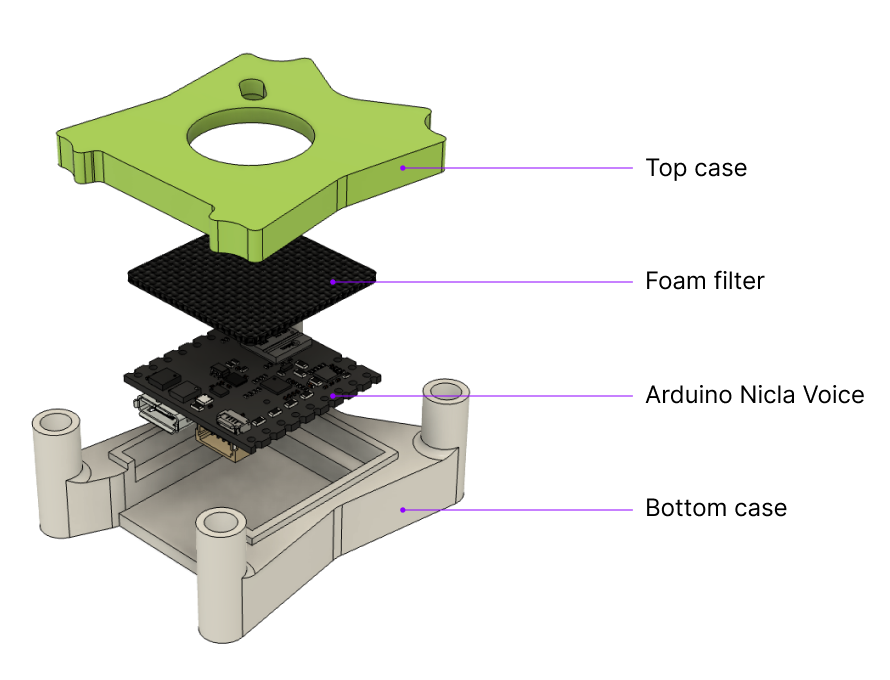

The 3D printed case is very similar to the case for the Nicla Voice; the differences are the hole placement for the microphone, the cutout for the built-in LED indicator, and the cutout on the bottom for the header pin layout. Between the top case and the XIAO nRF52840 Sense I placed a small sheet of foam to filter wind noise and other unwanted noise.

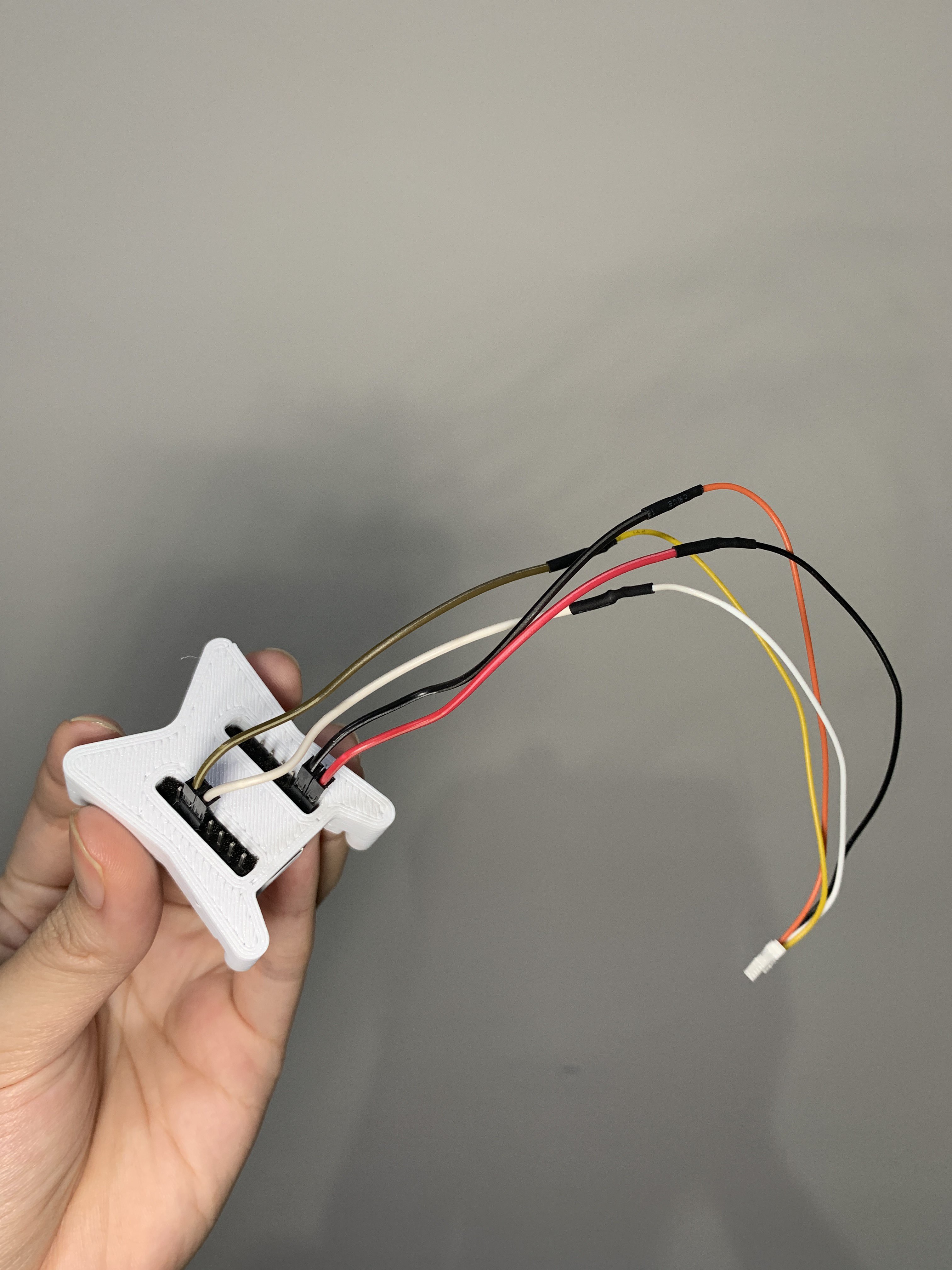

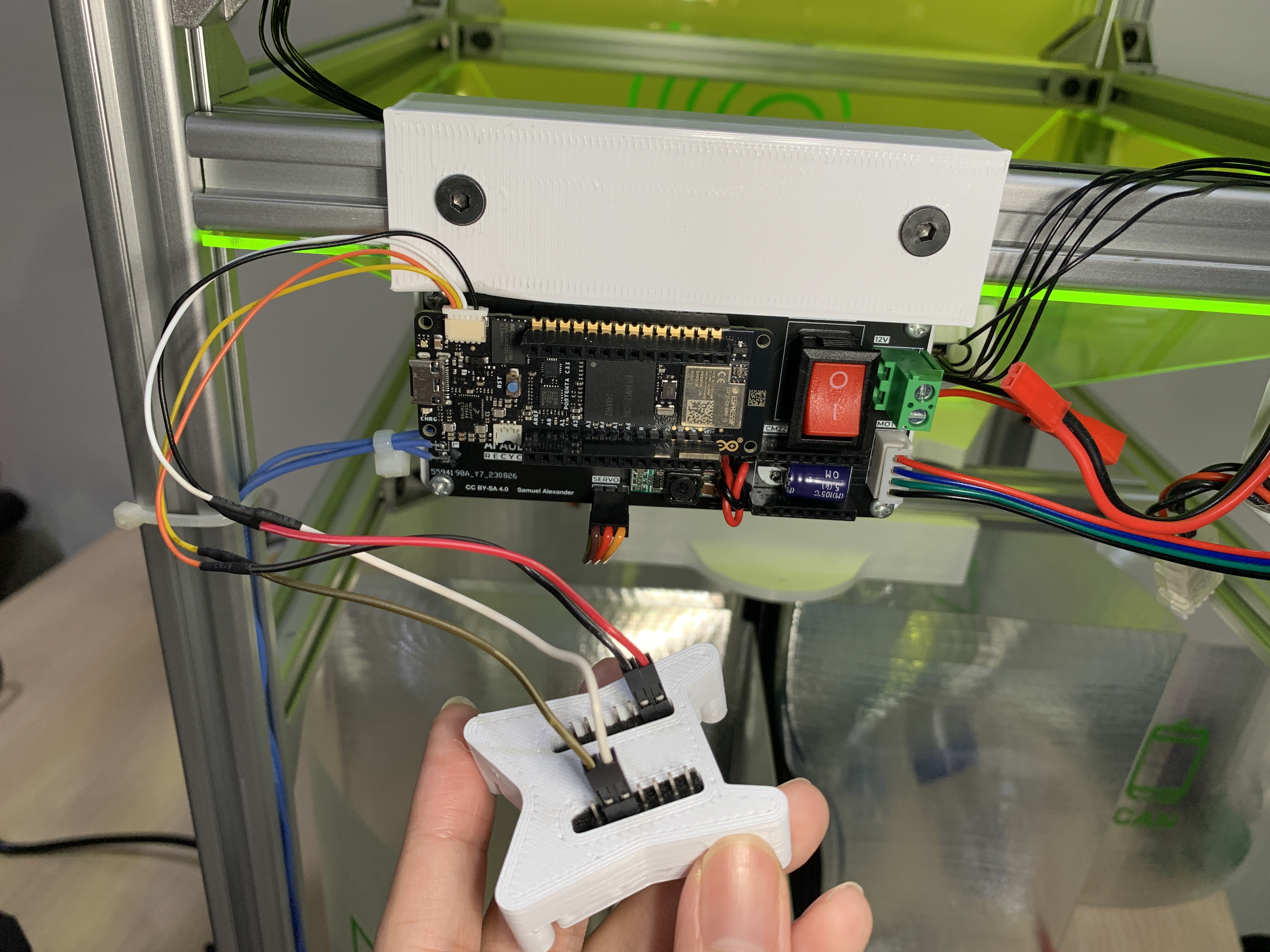

Female header pins soldered to a female ESLOV connector are used to connect the XIAO nRF52840 Sense to the actuator microcontroller. The two microcontrollers communicate via I2C.
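One way to make the I2C link robust is to wrap each inference result in a small frame with a header byte and a checksum. The 4-byte layout, the `0xA5` header, the XOR checksum, and the function names below are all hypothetical; the project's actual .ino files may send a different payload. On the Arduino side, such a frame could be sent with the Wire library's `Wire.beginTransmission()`, `Wire.write()`, and `Wire.endTransmission()`, and read on the actuator board in a `Wire.onReceive()` handler.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

// Hypothetical 4-byte frame: [header, classId, confidencePct, checksum]
constexpr std::uint8_t kMagic = 0xA5;
using Frame = std::array<std::uint8_t, 4>;

std::uint8_t checksum(std::uint8_t a, std::uint8_t b, std::uint8_t c) {
  return static_cast<std::uint8_t>(a ^ b ^ c);
}

// Built on the inference board after each detection.
Frame encodeResult(std::uint8_t classId, std::uint8_t confidencePct) {
  assert(confidencePct <= 100);
  return Frame{{kMagic, classId, confidencePct, checksum(kMagic, classId, confidencePct)}};
}

// Run on the actuator board. Returns false for frames with a wrong header or
// checksum, e.g. a glitch while the inference board is being swapped.
bool decodeResult(const Frame& f, std::uint8_t& classId, std::uint8_t& confidencePct) {
  if (f[0] != kMagic || f[3] != checksum(f[0], f[1], f[2])) return false;
  classId = f[1];
  confidencePct = f[2];
  return true;
}
```

Rejecting malformed frames means the actuators simply ignore a corrupted message instead of moving to the wrong compartment.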

The inference microcontroller is hot-swappable; here I'm swapping the inference microcontroller from the previously connected Nicla Voice to the XIAO nRF52840 Sense.

-

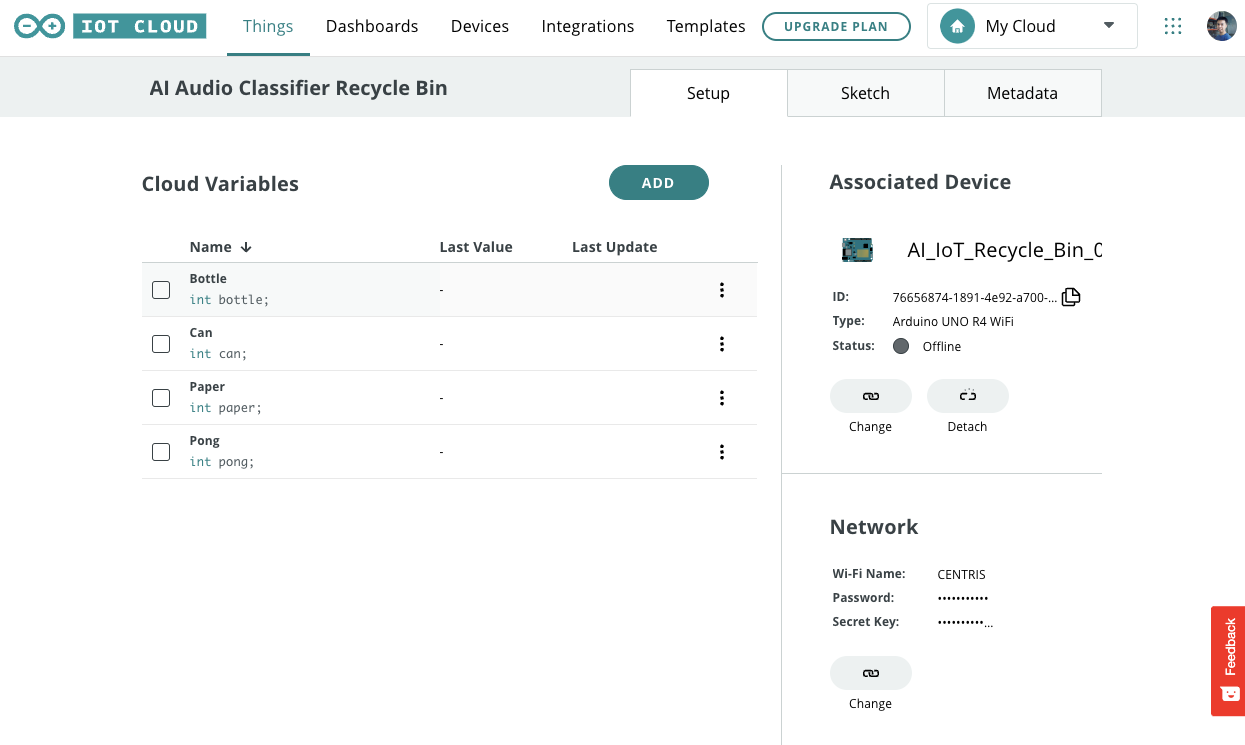

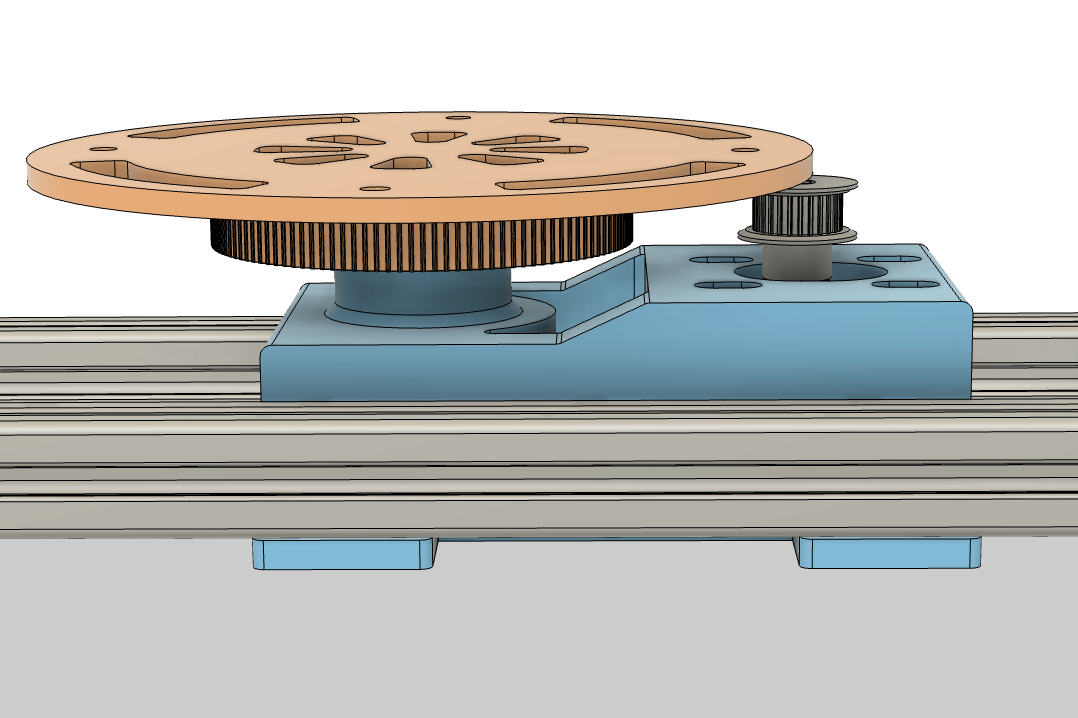

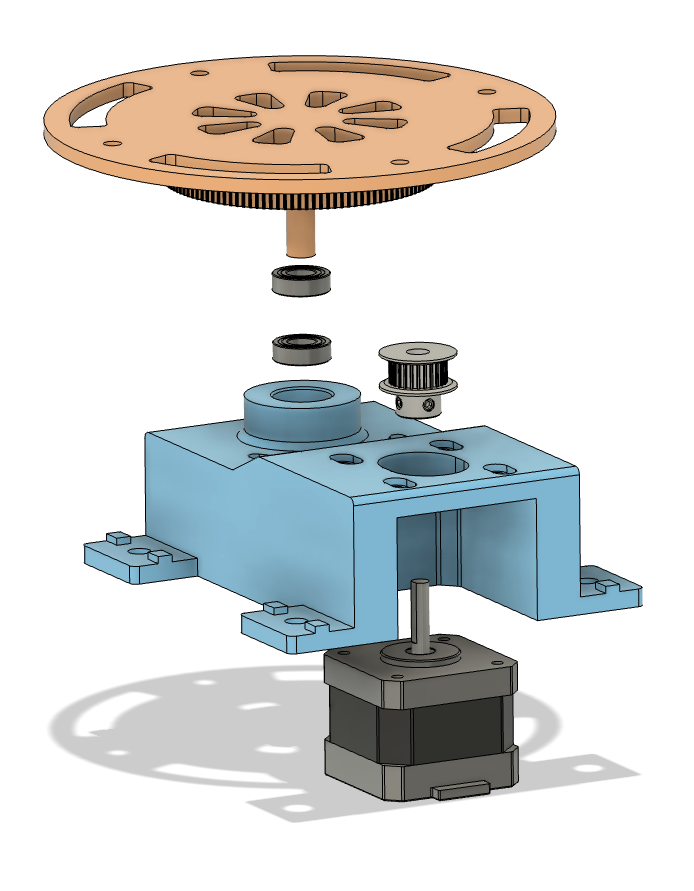

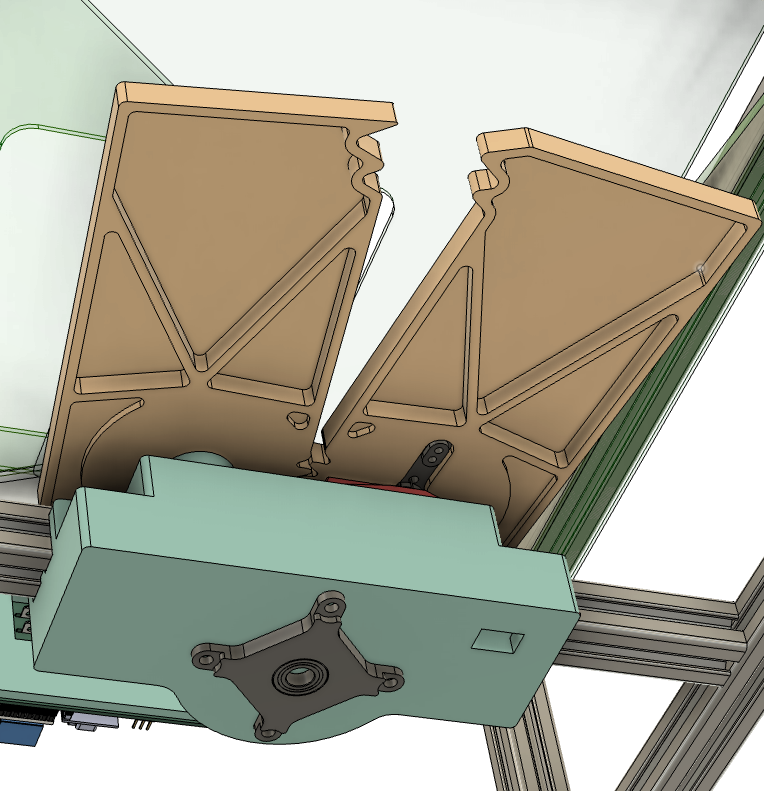

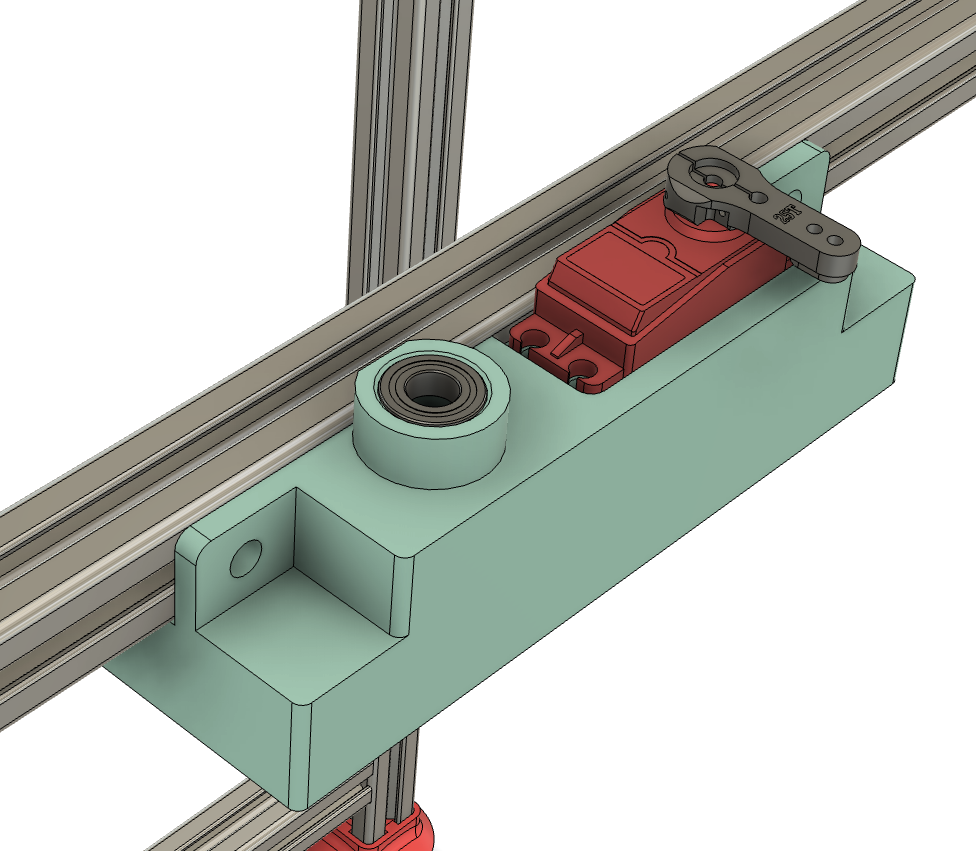

CAD 3D Model using Fusion 360

10/08/2023 at 09:28

Just like the PCB and code, all the 3D files are available in the "Files" section, both as .STL files ready to be 3D printed and as Fusion 360 project files, so you can modify or extend the design to suit your needs.

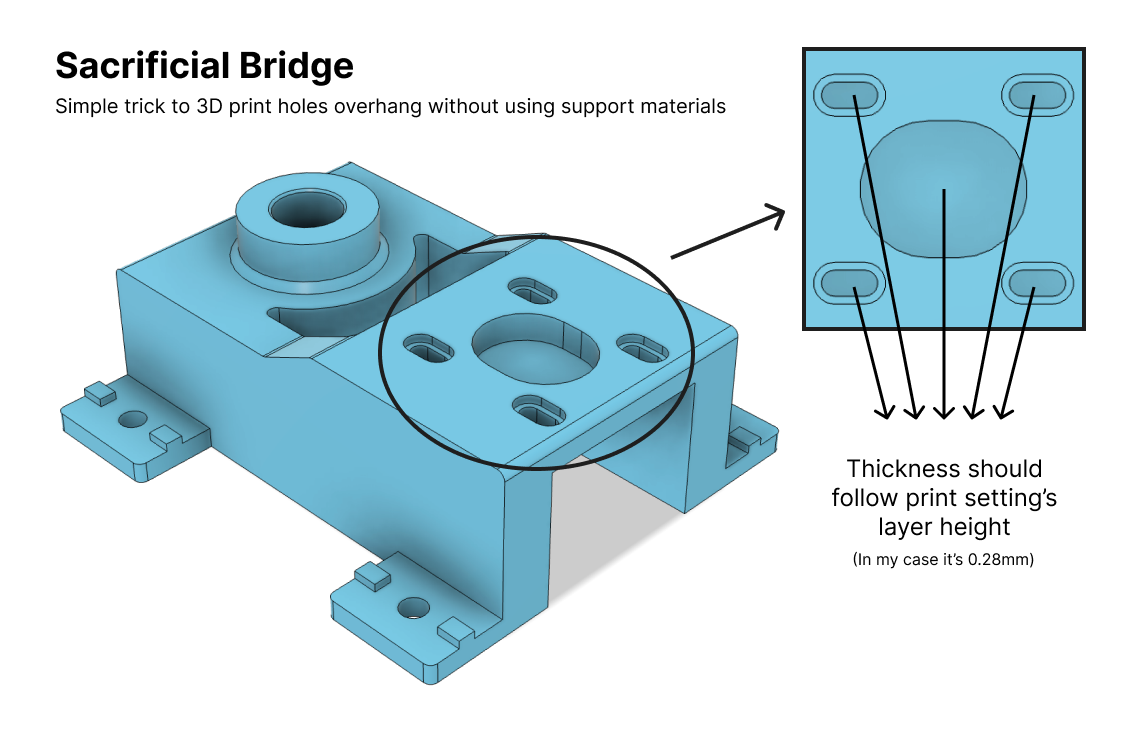

This log will focus on discussing some of the design decisions that I've made and some manufacturing techniques that I've used throughout this build.

By adding a single-layer extrusion, we can complete the print without any support material; this technique is called a sacrificial bridge. After printing, the "bridge" can be trimmed using scissors or cutting pliers.

All of the 3D printed parts are mounted to the aluminium extrusion frame using M5 bolts and T-nuts.

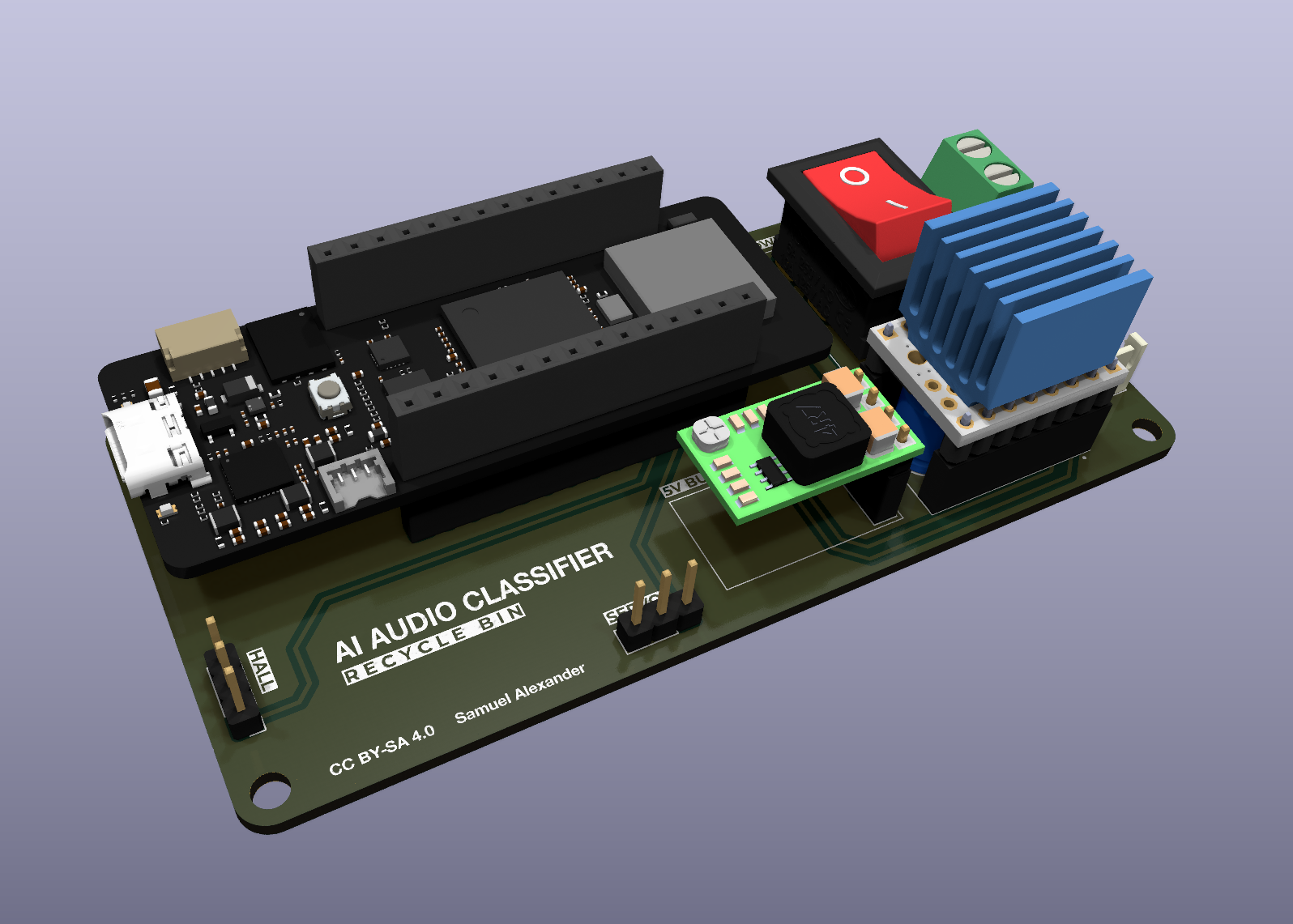

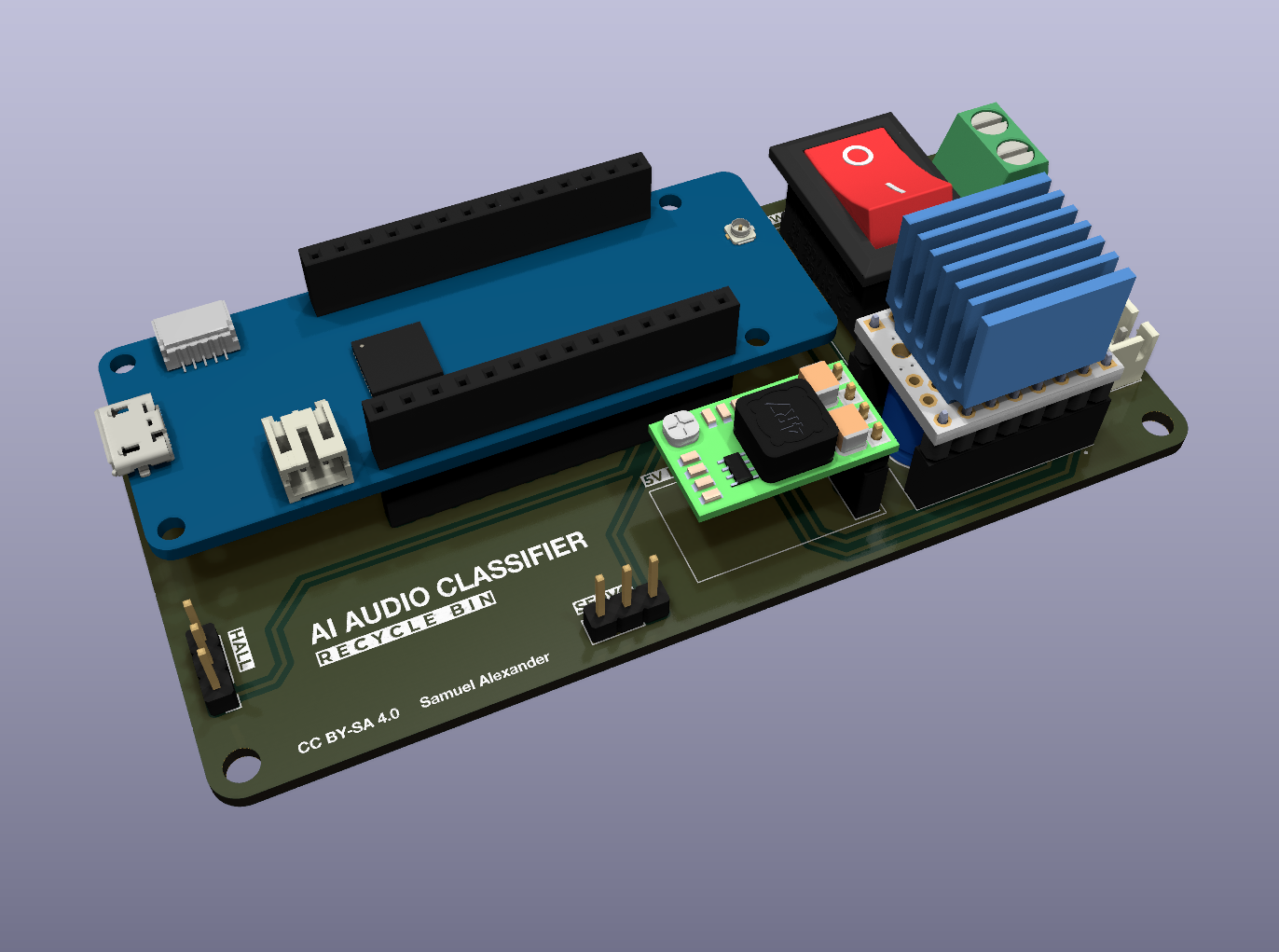

The PCB design from KiCad can be exported as a .STEP file and imported into the Fusion 360 project. This makes it easier to design the plate that mounts the PCB to the aluminium extrusion frame.

-

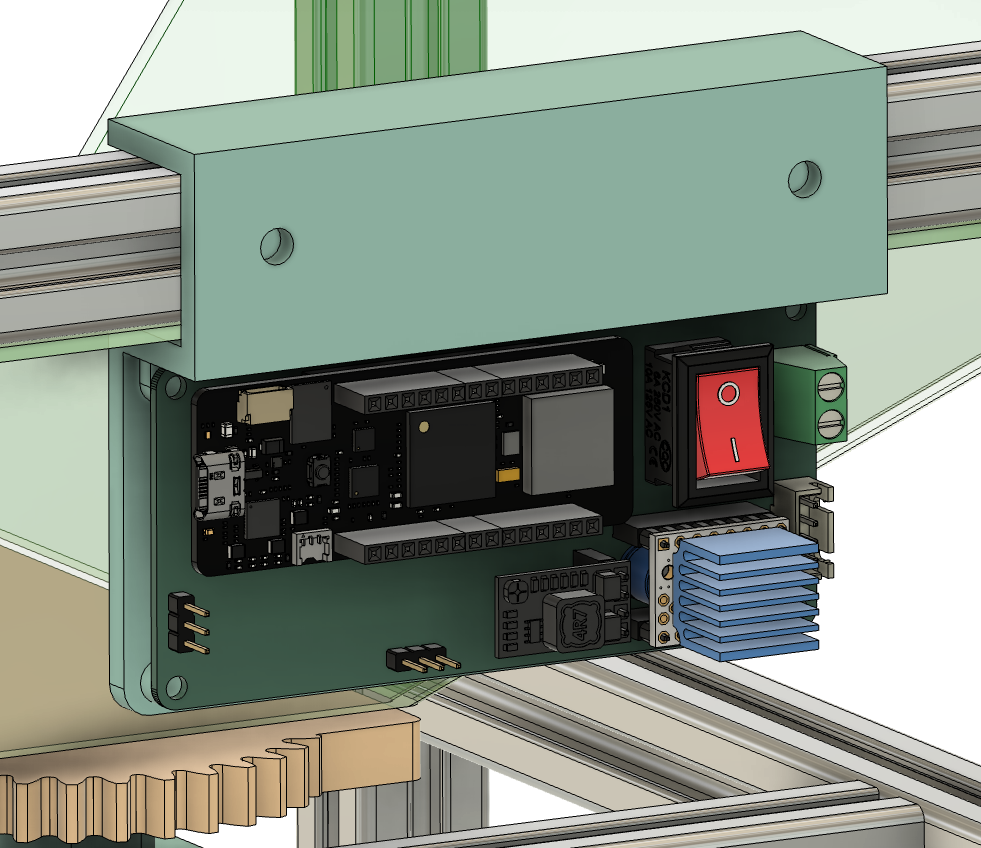

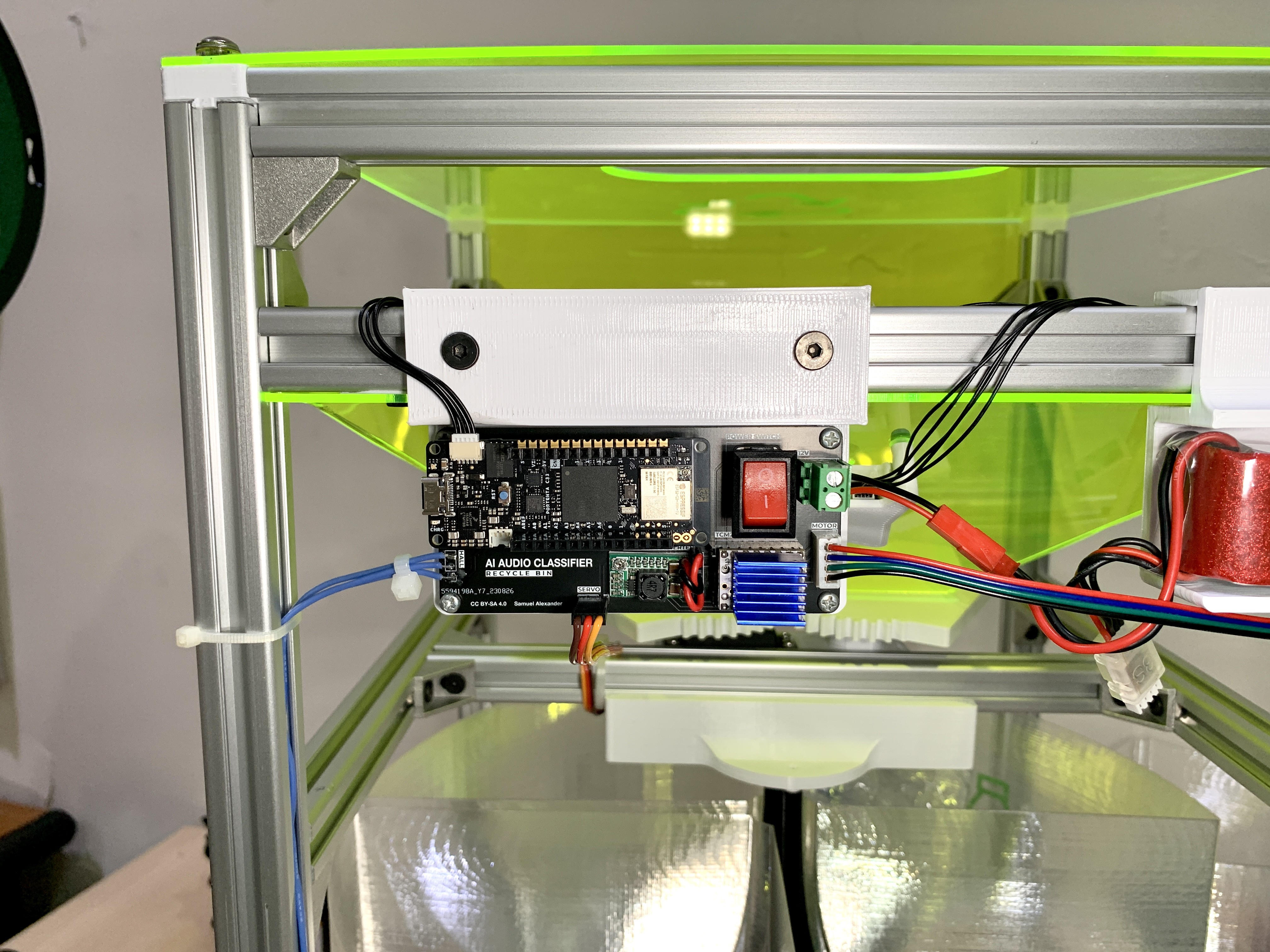

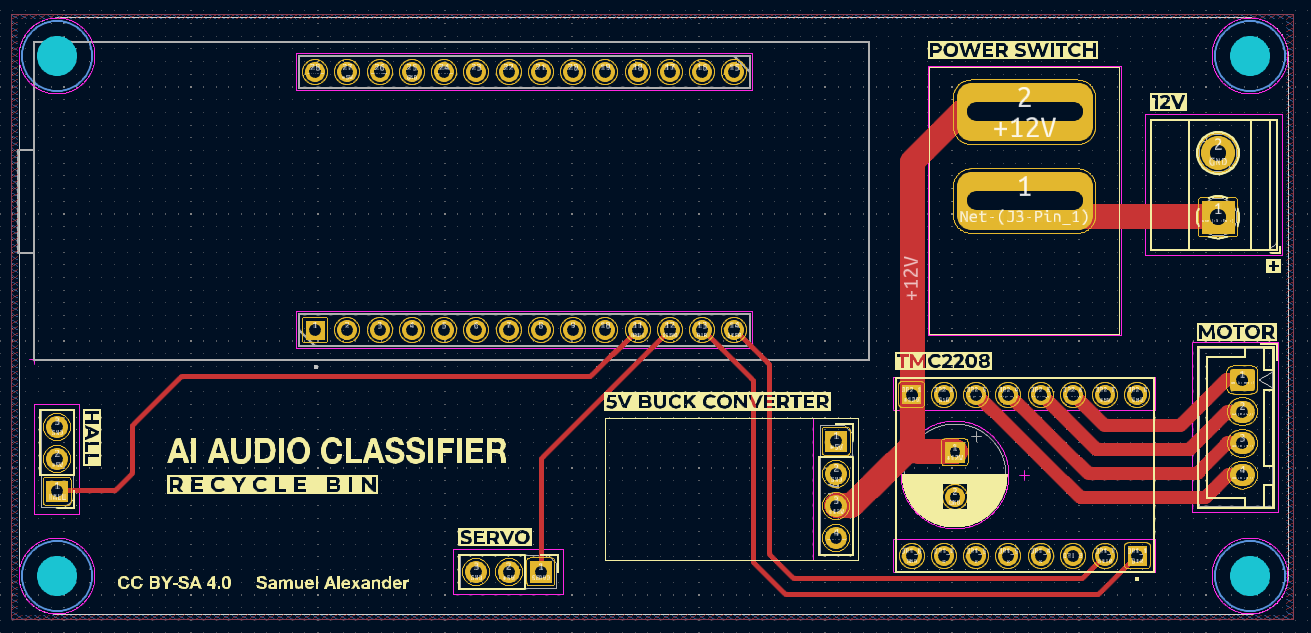

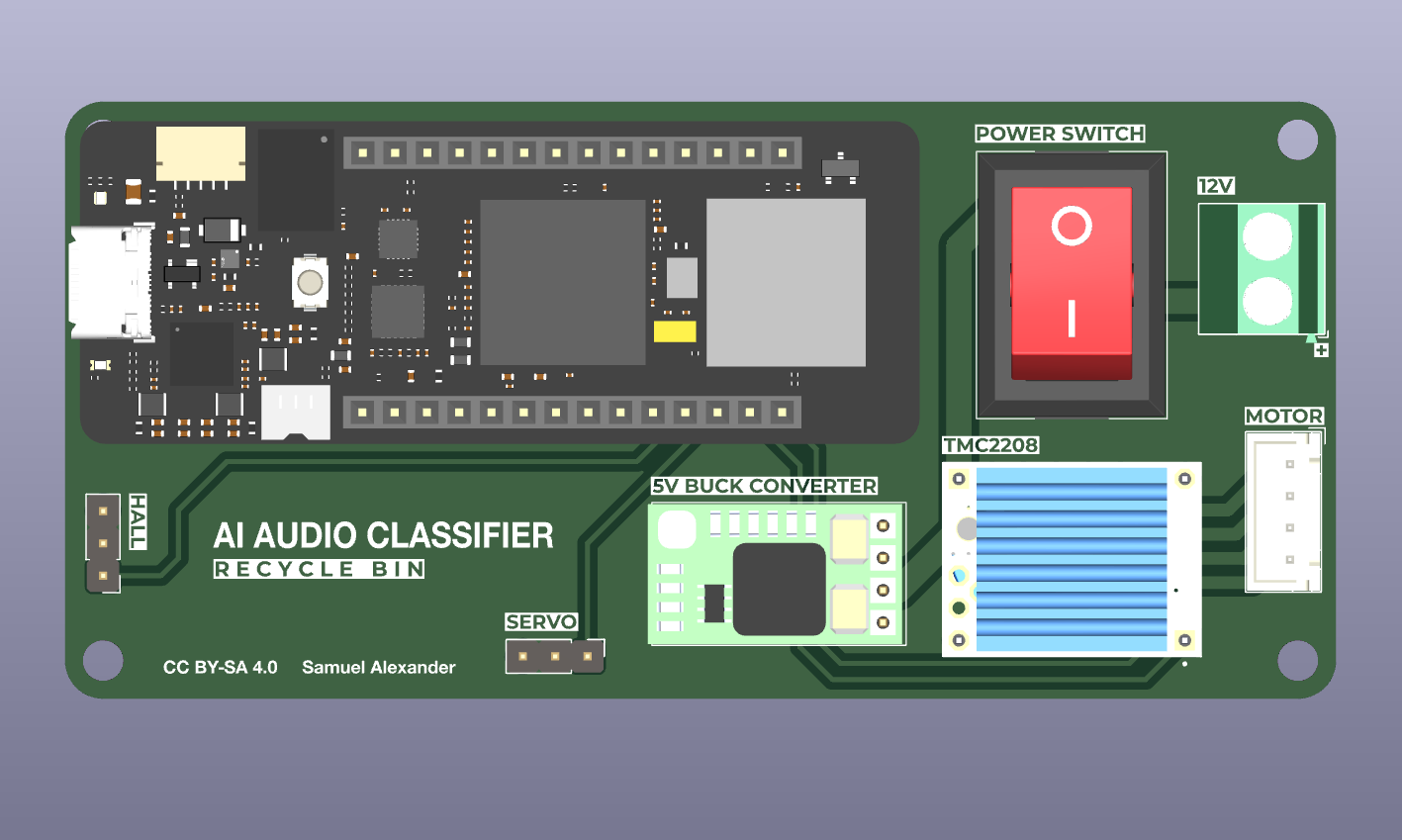

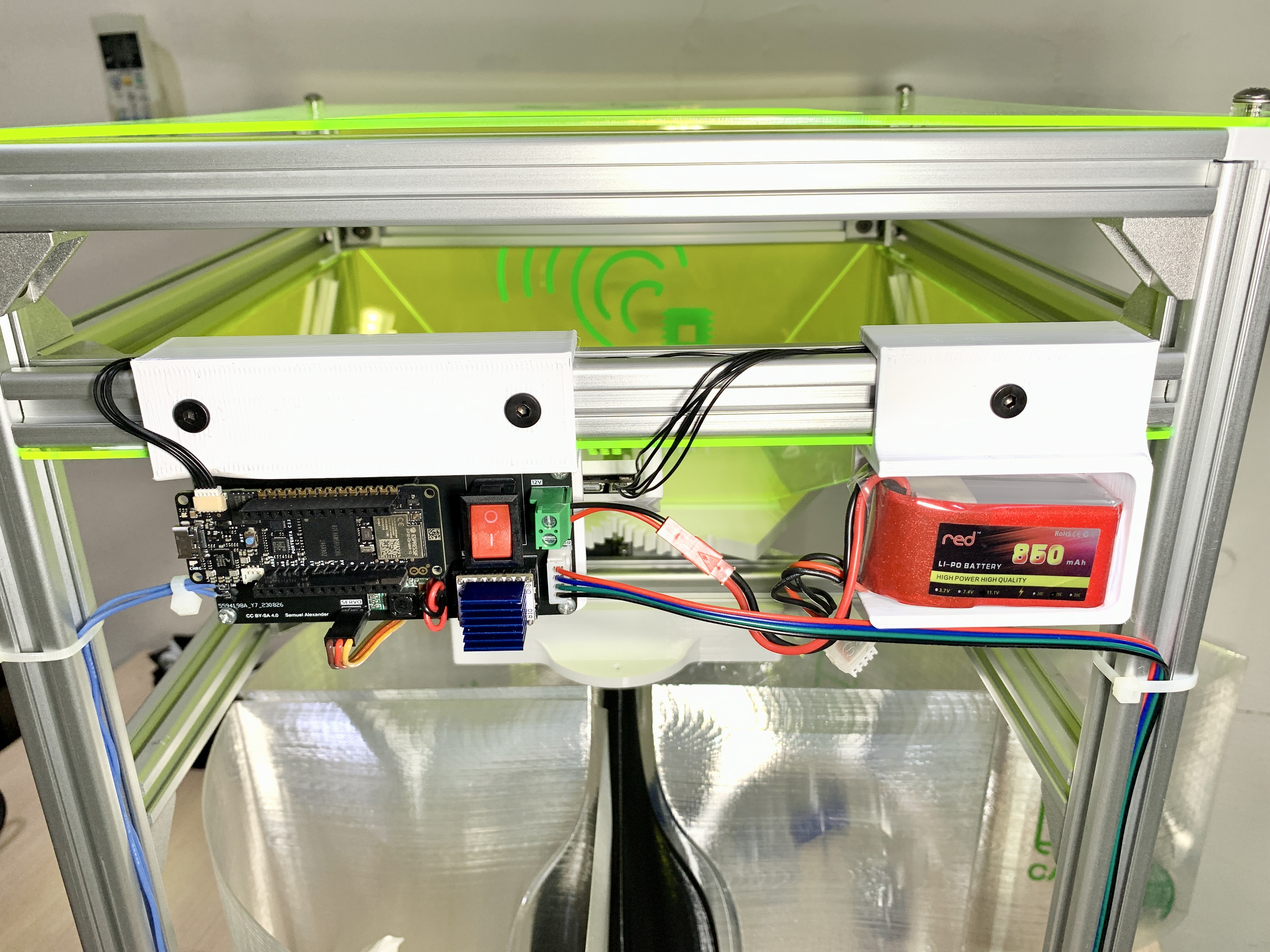

PCB Upgrades

10/08/2023 at 07:14

The initial version of the AI Audio Classifier Recycle Bin project used only a stepper driver breakout board. The motor driver control and the audio classifier inference both ran on a single microcontroller (Arduino Nano 33 BLE Sense).

This upgrade offloads stepper motor control, servo motor control, and IoT connectivity to a second microcontroller onboard the PCB, so the main microcontroller only runs the audio classifier inference and sends the result to it. All the manufacturing (Gerber) files and KiCad design files are included in the "Files" section of the project page.
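As an illustration of the kind of work the actuator microcontroller takes over, the sketch below converts a target position into a signed step count for a stepper driver. It assumes, purely for illustration, a single stepper that rotates a selector to four evenly spaced positions, a 200-step (1.8°) motor, and 1/16 microstepping; none of these values or names come from the project's actual mechanism or firmware.

```cpp
#include <cassert>

// Illustrative assumptions, not the project's actual values:
constexpr int kFullStepsPerRev = 200;  // typical 1.8 degree stepper
constexpr int kMicrosteps = 16;        // 1/16 microstepping on the driver
constexpr int kPositions = 4;          // one position per waste class
constexpr int kStepsPerRev = kFullStepsPerRev * kMicrosteps;

// Signed number of microsteps to move from position `from` to `to`, taking the
// shorter way around (positive = one direction, negative = the other).
int stepsBetween(int from, int to) {
  assert(from >= 0 && from < kPositions && to >= 0 && to < kPositions);
  int delta = ((to - from) % kPositions + kPositions) % kPositions;  // 0..3
  if (delta > kPositions / 2) delta -= kPositions;                   // e.g. 3 -> -1
  return delta * (kStepsPerRev / kPositions);
}
```

The sign would map to the driver's DIR pin and the magnitude to the number of STEP pulses; taking the shorter direction roughly halves the worst-case travel time compared with always rotating one way.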

This new PCB also includes a buck converter that supplies 5 V to both microcontrollers from a 3S LiPo battery. The battery voltage (roughly 12 V: 11.1 V nominal, up to 12.6 V when fully charged) powers the stepper motors through the TMC2208 stepper driver.
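As a rough worked example of why a regulator is needed between the battery and the 5 V rail, assume an ideal (lossless) buck converter in continuous conduction, where the output voltage is the duty cycle $D$ times the input voltage. A 3S LiPo pack is about 12.6 V fully charged and 11.1 V nominal; 9.9 V (3.3 V per cell) is used below as an assumed discharge cutoff.

$$
V_{\text{out}} = D\,V_{\text{in}} \quad\Rightarrow\quad D = \frac{V_{\text{out}}}{V_{\text{in}}}
$$

$$
D_{12.6\,\text{V}} = \frac{5}{12.6} \approx 0.40, \qquad D_{11.1\,\text{V}} = \frac{5}{11.1} \approx 0.45, \qquad D_{9.9\,\text{V}} = \frac{5}{9.9} \approx 0.51
$$

The converter raises its duty cycle as the pack drains, so the 5 V logic rail stays steady while the stepper supply, taken straight from the battery, varies with the state of charge.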

The design allows switching between different microcontroller dev boards in the Arduino MKR and Portenta families, because they share a similar pinout style. In this case, I'm using the Arduino Portenta C33 because it provides internet connectivity through WiFi. Here are some other options you can choose from:

Arduino MKR WiFi 1010 or Arduino Portenta C33 for WiFi

Arduino MKR WAN 1310 for LoRaWAN

Arduino MKR GSM 1400 for GSM Cellular

-

Potential Implementation for Industrial Scale Waste Management Facilities

10/07/2023 at 17:19

Waste management in industrial-scale facilities is a complex task because of the diverse materials and large volumes involved. The AI Audio Classifier can be implemented as a sustainable solution to this problem.

Given waste made of materials that produce distinct sounds, the AI Audio Classifier can sort it efficiently for recycling. It identifies materials based on the unique sounds they produce when disposed of, optimizing recycling efforts and diverting waste from landfills.

Since each waste sorting facility handles different types of waste, the Audio Classifier can be tuned and trained specifically for them. For example, if it is trained to recognize the sounds of hazardous waste, the system can improve safety and help ensure compliance with disposal regulations, reducing the risk of accidents and contamination. Precise sorting also minimizes contamination, making disposal cleaner and safer, and helps ensure that waste materials are disposed of in accordance with legal requirements.

The bin can provide real-time fill-level data for efficient waste collection scheduling, reducing operational disruptions. This can be done by counting the detected sound windows for each object type or by adding a dedicated fill-level sensor.

If implemented properly at an industrial scale, the AI Audio Classifier Recycle Bin could be a useful solution to waste management problems thanks to its automation, precision, and efficiency. It promotes responsible waste management and sustainability and could also reduce operational costs.

-

Comparing Audio Classification vs. Image Classification Models for Waste Sorting

10/02/2023 at 17:44

The challenges posed by the ever-increasing volume of waste require innovative solutions that are not only efficient but also cost-effective and environmentally friendly. Modern industrial sorting facilities commonly use AI image classification models, but this approach has drawbacks for waste management: high computing requirements, expensive hardware, and an energy-intensive process. One alternative is the AI Audio Classifier Recycle Bin. This log explores the benefits of using an edge AI audio classification model instead of a more traditional image classification model for waste management, highlighting how this technology contributes to sustainability and a cleaner urban environment.

1. Cost effectiveness

Edge AI audio classification offers a cost-effective solution for waste sorting. Compared to image-based systems that require high-resolution cameras and extensive computational power, audio classification systems are often more affordable to implement and maintain.

For example, a PDM MEMS microphone breakout board costs less than $5 (the MP34DT06JTR microphone itself can cost less than $1 when bought in bulk on an SMD reel), whereas even a low-cost camera sensor like the 2MP GC2145 costs more than $7. If a higher-resolution camera is required, the cost increases even further.

Since image classification inference requires more clock speed and memory, the supporting hardware will also be more expensive.

2. Compute requirements and energy efficiency

Edge AI audio classification offers a faster and more efficient waste sorting process than image classification. The technology can process and classify items in real time as they are thrown into the bin, without additional time-consuming steps like capturing and processing images. This efficiency reduces wait times for users and helps ensure that waste is sorted quickly and accurately. Digital signal processing and inference times are also shorter for audio than for images.

3. Accuracy and reliability

One of the primary advantages of using edge AI audio classification for waste sorting is its precision. Unlike image classification, which relies on visual cues, audio classification can discern subtle differences in the sounds made when objects are thrown into the bin. This precision results in a more accurate sorting process. For instance, it can differentiate between a glass bottle and a plastic bottle, even if they look similar, by analyzing the distinct sounds they produce when landing in the bin.

Image classification relies heavily on the appearance of objects, making it susceptible to errors when items are damaged, obscured, or have ambiguous visual features. In contrast, edge AI audio classification is not affected by such limitations. It can accurately identify and sort objects based solely on the sounds they generate upon impact. This non-reliance on visual appearance ensures a reliable and consistent sorting process, regardless of the condition of the waste items.

The AI Audio Classifier Recycle Bin, powered by edge AI audio classification, represents a significant advancement in waste management technology. Its precision, non-reliance on visual appearance, increased efficiency, and cost-effectiveness make it a compelling alternative to image-based sorting systems.

-

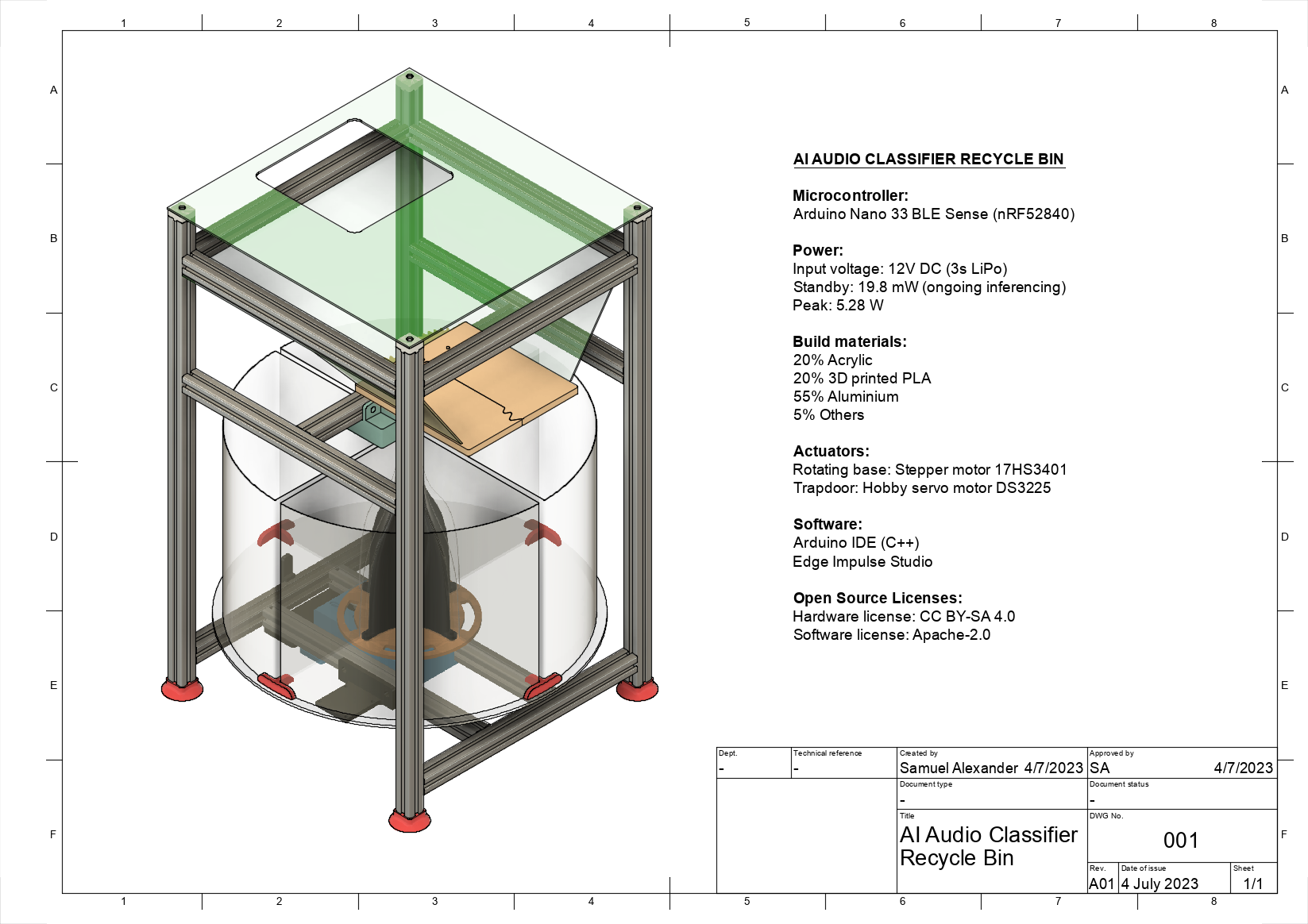

Engineering drawing and project specifications

07/04/2023 at 12:07

Added engineering drawing and project specifications.

AI Audio Classifier Recycle Bin

Recycle Bin that sorts rubbish based on the sound of collision using Edge AI audio classification.

Samuel Alexander

Samuel Alexander