-

Avatar and AI Video Generator

10/09/2023 at 09:36 • 0 comments

Alright, I reckon it'd be nice to look back a bit and explain how you can create AI generated video for free.

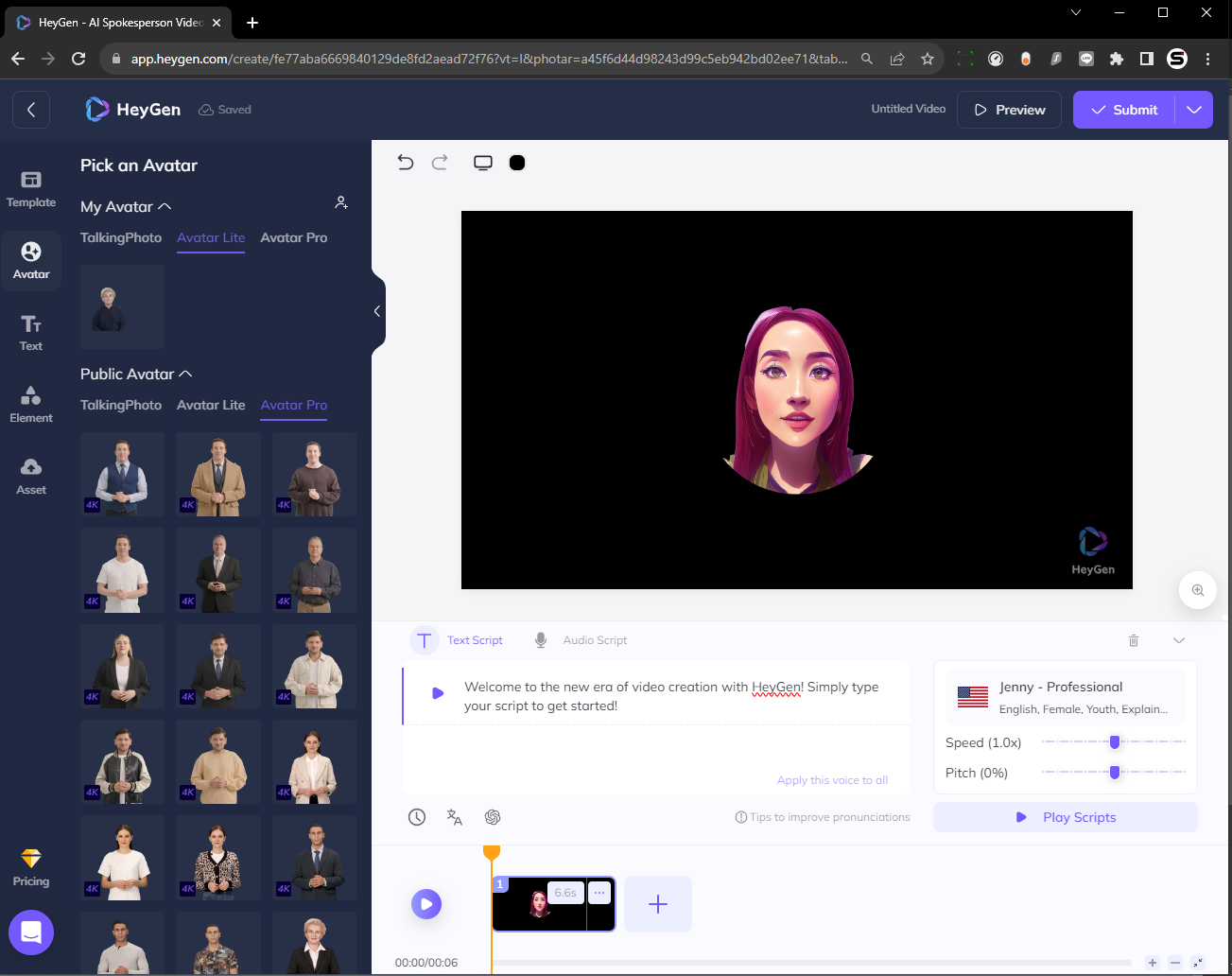

As you can see in my earlier videos and photos, I've made a cool virtual assistant with its own custom avatar. To make it, I used HeyGen, an AI video tool that turns a picture and some text into an animated video. It was my first time trying HeyGen, and I was seriously impressed by the videos it churned out. The voice sounded super natural, and the avatar's facial expressions and lip-syncing were surprisingly lifelike. I ended up creating a bunch of videos where the avatar said different things for my project and saved them as video files. Of course, not everything is free: for starters, at the time of making them, I received 2 minutes of free credits.


In essence, all you need is a static photo plus some text. HeyGen renders a preview for you, which you can download later on. You can use an avatar photo like I did or a normal human face, either from their free options or one you upload yourself. The UI is very straightforward and easy to use. Usage of the 2 minutes of free credits is rounded up in 30-second steps, so I highly recommend writing a text that fills a full 30 seconds and using another video editing tool to cut it into shorter clips. This way you'll get the full 2 minutes out of the free credits :)
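To see why the rounding matters, here is a small worked example in Python. It is only a sketch: the 30-second billing step is my reading of the description above, and the function names are made up for illustration, not anything from HeyGen.

```python
import math

FREE_CREDIT_SECONDS = 120   # the 2 minutes of free credits mentioned above
BILLING_STEP_SECONDS = 30   # assumed rounding step per rendered video


def billed_seconds(video_seconds: float) -> int:
    """Credit charged for one video, rounded up to the next billing step."""
    steps = max(1, math.ceil(video_seconds / BILLING_STEP_SECONDS))
    return steps * BILLING_STEP_SECONDS


def videos_affordable(video_seconds: float) -> int:
    """How many videos of this length fit into the free credits."""
    return FREE_CREDIT_SECONDS // billed_seconds(video_seconds)


# Six separate 10-second clips would be charged as 6 * 30 seconds of credit.
print(sum(billed_seconds(10) for _ in range(6)))   # 180, more than the 120 available
# Four full 30-second renders fit exactly into the free credits.
print(videos_affordable(30))                       # 4
```

Under that assumption, a 10-second render costs as much as a 30-second one, which is why filling each render and cutting it up afterwards goes further.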

![]()

So from the static avatar above, you can create a narrated video within minutes, such as this one from my GitHub.

-

October SW Release and Finals Here We Go

10/08/2023 at 23:22 • 0 comments

Hey there! It's been a crazy few weeks for me, with all the traveling and work stuff. I spent a whole week in the US, and when I got back, I had a ton of work-related projects to handle. But hey, I'm back now, and I'm super excited to share that I'm one of the finalists in this year's Hackaday prize wildcard challenge!

To celebrate, I wanted to give you a heads up about what's coming. In honor of the HaD Prize final round, I'll be sharing all the cool new features I've rolled out and what's on the horizon for our October software release. Stay tuned!

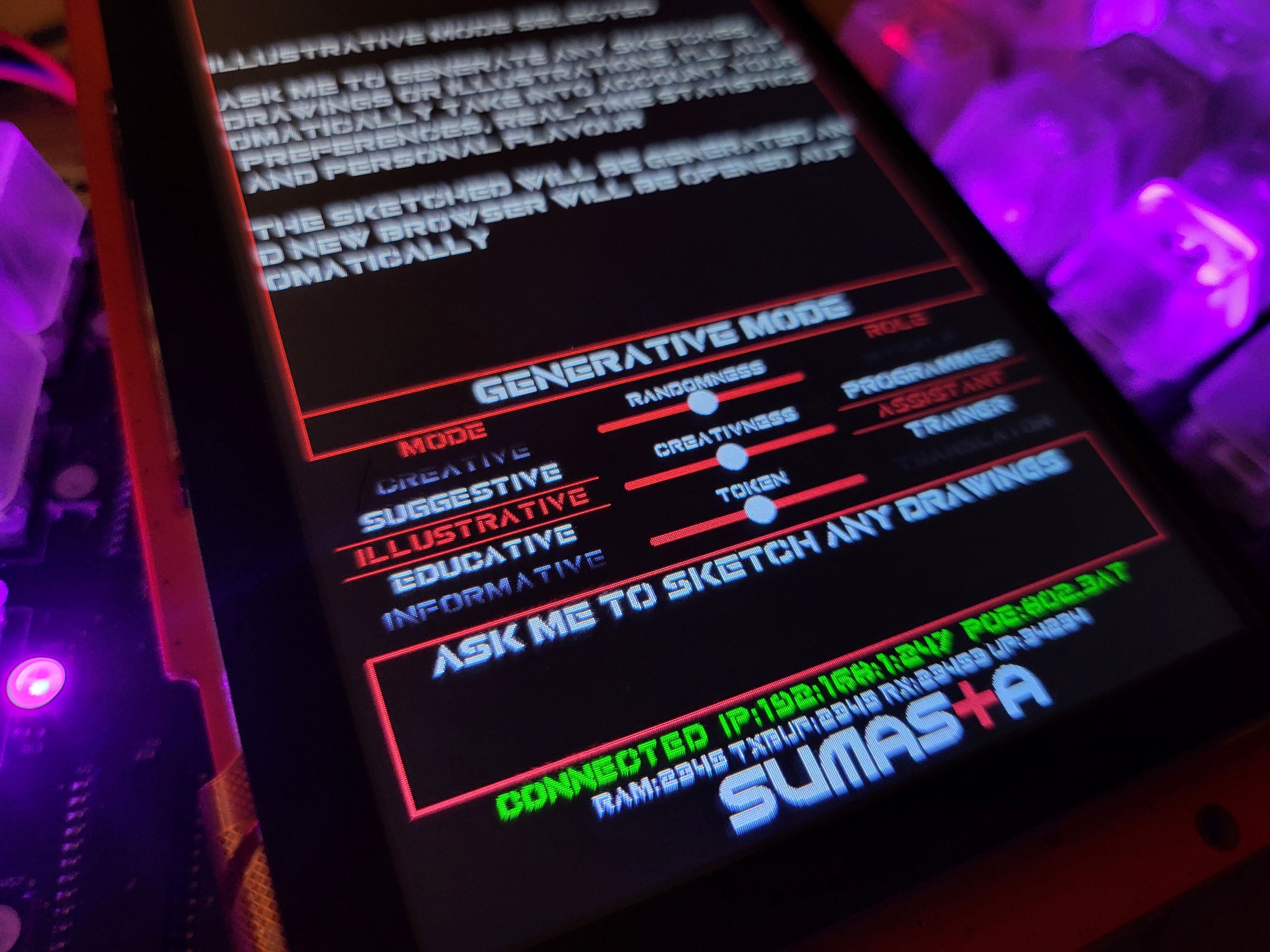

5 New Generative Modes


You got it, I've decided to organize the functionality of the Generative kAiboard into clear categories. On a high level, as you might have seen in my previous release post and video, it's basically split into two parts:

- Passive Keyboard Mode: This mode acts like a regular keyboard but of course with some extra cool features.

- Generative Mode: This is where the keyboard truly shines. It's the opposite of passive – an active mode where the keyboard generates content based on your prompts.

But here's the exciting part: I've expanded the generative functionality into 5 distinct categories:

- Informative Mode.

- Creative Mode.

- Suggestive Mode.

- Illustrative Mode.

- Educative Mode.

I bet you can already guess what each mode does from its name, but let's see how close you are! I'll go into more detail in the next project logs, but in a nutshell here's what each mode does.

Informative mode: this is the simplest form of generative mode, as it essentially provides you with a simple answer, pretty much like how you'd use ChatGPT to ask simple (factual) questions. However, because you are prompting it via the Generative kAiboard, under the hood it automatically embeds real-time information, user statistics and much more that is relevant to what you are asking at that particular moment.

Creative mode: this is an extension of the informative mode, aimed more at content generation, e.g. generating a paragraph or a story. This time, though, it tries to mimic your writing style, your word selection and so on, essentially learning from your own style of writing while you are in passive keyboard mode.

Suggestive mode: you can call this mode a seamless hybrid, so to speak. It suggests better, improved or alternative sentences as you type with the keyboard. So when you are typing on a computer, the on-board screen will show you possible alternative sentences as you go, cool right?! Of course, in the end it is up to you whether to incorporate the suggested output or not.

Illustrative mode: if you've heard of DALL-E then you can probably guess where I am going with this. Yes, this mode converts your text prompt into an illustration, a drawing or a sketch. It of course tries to incorporate some of your personality parameters as you type along and use the Generative kAiboard. The result is streamed automatically to your browser.

Educative mode: last but not least, and one of my favorite features, is the educative mode. I call it educative because it gives you a quiz, with of course the correct answer and some context. You only need to provide the topic and perhaps some optional specifics you'd like to incorporate into the quiz. This is fun!

That's it for the short update from me for now. See you in another log soon!

-

New Features and Adaptive Prompting

09/12/2023 at 14:16 • 0 comments

Since I posted my initial video on YouTube, I've added a few new features:

- OpenAI stream feature. This allows the reply from ChatGPT to be shown in real time instead of all at once.

- NTP clock. This enables the real-time clock to be pulled from an NTP server.

- New Clock UI.

- Finalized keyboard layout and functionalities.

- Improved logic and animation features.

- Improved network interface and buffer robustness.

- More importantly, I have started implementing the adaptive real-time hardware prompting.
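As a rough illustration of the stream feature in the list above, here is a minimal Python sketch of how a streamed chat reply can be pieced together as it arrives. It assumes the usual server-sent-event shape of a streamed chat completion (lines starting with `data:` carrying a JSON chunk, ending with `data: [DONE]`). The function name and the sample lines are hypothetical, and the actual firmware may well handle this differently.

```python
import json
from typing import Iterable, Iterator


def iter_stream_text(lines: Iterable[str]) -> Iterator[str]:
    """Yield text fragments from the "data: ..." lines of a streamed reply."""
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and anything else
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break  # end-of-stream marker
        chunk = json.loads(payload)
        choices = chunk.get("choices") or []
        if not choices:
            continue
        text = choices[0].get("delta", {}).get("content")
        if text:
            yield text


# Hand-written sample lines in the shape of a streamed chat completion.
sample = [
    'data: {"choices": [{"delta": {"role": "assistant"}}]}',
    "",
    'data: {"choices": [{"delta": {"content": "Hello"}}]}',
    'data: {"choices": [{"delta": {"content": ", world"}}]}',
    "data: [DONE]",
]

for piece in iter_stream_text(sample):
    print(piece, end="", flush=True)  # a display would be updated here instead
print()
```

Each fragment can be shown the moment it is parsed, which is what makes the reply appear word by word rather than all at once.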

Adaptive Hardware Prompting

Well, if you still haven't grasped what I mean by adaptive hardware prompting, I can give you an example below. But first, if you have ChatGPT open, try asking it what time it is right now, or where you live, or where you work. I guarantee it cannot give you an answer, because the LLM inherently has no access to real-time or personal information.

In the example below, however, you can see that if I ask how long I have worked in my current employment, it gives me a clear answer directly. Essentially, this is what's happening under the hood:

- The user enters the prompt/query.

- The Generative kAiboard checks and validates the prompt. In this case it "understood" that the context is about time and work.

- The Generative kAiboard captures the relevant information from its local knowledge server: the current time and details about my current occupation.

- The Generative kAiboard combines the original user prompt from the first step with the information collected in the third step.

- Finally, the combined prompt is sent to OpenAI and the appropriate reply is returned.
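The steps above can be sketched in a few lines of Python. This is only an illustration of the idea, not the actual kAiboard code: the keyword triggers, the `LOCAL_KNOWLEDGE` entries (including the made-up start date) and all function names are assumptions, and the last step of sending the combined prompt to OpenAI is left out.

```python
from datetime import datetime
from typing import List

# Hypothetical local knowledge store with made-up facts.
LOCAL_KNOWLEDGE = {
    "work": "The user started their current job on 2020-03-01.",
    "home": "The user lives in Exampletown.",
}

# Hypothetical keyword triggers for each kind of context.
TRIGGERS = {
    "time": ("time", "today", "date", "how long"),
    "work": ("work", "job", "employ"),
    "home": ("live", "home", "address"),
}


def detect_contexts(prompt: str) -> List[str]:
    """Step 2: work out which kinds of context the prompt touches on."""
    lowered = prompt.lower()
    return [name for name, words in TRIGGERS.items()
            if any(word in lowered for word in words)]


def collect_facts(contexts: List[str], now: datetime) -> List[str]:
    """Step 3: gather the matching real-time and local facts."""
    facts = []
    for name in contexts:
        if name == "time":
            facts.append(f"The current date and time is {now:%Y-%m-%d %H:%M}.")
        elif name in LOCAL_KNOWLEDGE:
            facts.append(LOCAL_KNOWLEDGE[name])
    return facts


def build_prompt(user_prompt: str, now: datetime) -> str:
    """Step 4: combine the original prompt with the collected facts."""
    facts = collect_facts(detect_contexts(user_prompt), now)
    if not facts:
        return user_prompt  # nothing to add, send the prompt as typed
    context = "\n".join(f"- {fact}" for fact in facts)
    return f"Context:\n{context}\n\nQuestion: {user_prompt}"


print(build_prompt("How long have I worked in my current employment?",
                   datetime(2023, 9, 12, 14, 16)))
```

With the current time and the start date both in the prompt, the model has what it needs to work out the duration, even though it knows neither on its own.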

![]()

And as you've perhaps seen in the video, the answer can then be transmitted directly to your computer as though you were typing it yourself.

![]()
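For the curious, "typing it for you" on a USB keyboard boils down to sending key press and release reports. Below is a minimal Python sketch of that mapping, assuming a US layout and the standard 8-byte boot keyboard report. The function names are hypothetical, only a handful of characters are covered, and the real firmware may do this differently.

```python
from typing import List, Tuple

SHIFT = 0x02  # left-shift bit in the HID modifier byte

# A few non-letter keys from the HID keyboard usage page (US layout).
SPECIAL_KEYS = {"0": 0x27, "\n": 0x28, " ": 0x2C, ",": 0x36, ".": 0x37}


def char_to_hid(ch: str) -> Tuple[int, int]:
    """Return (modifier, usage_id) for one character."""
    if "a" <= ch <= "z":
        return 0, 0x04 + ord(ch) - ord("a")
    if "A" <= ch <= "Z":
        return SHIFT, 0x04 + ord(ch) - ord("A")
    if "1" <= ch <= "9":
        return 0, 0x1E + ord(ch) - ord("1")
    if ch in SPECIAL_KEYS:
        return 0, SPECIAL_KEYS[ch]
    raise ValueError(f"no mapping for {ch!r} in this sketch")


def text_to_reports(text: str) -> List[bytes]:
    """Turn text into key-down / key-up boot keyboard reports."""
    reports = []
    for ch in text:
        modifier, usage = char_to_hid(ch)
        # Byte 0: modifiers, byte 1: reserved, bytes 2-7: up to six keys.
        reports.append(bytes([modifier, 0, usage, 0, 0, 0, 0, 0]))  # key down
        reports.append(bytes(8))                                    # key up
    return reports


for report in text_to_reports("Hi"):
    print(report.hex(" "))
```

Sending the all-zero report after every key is what lets repeated letters register as separate presses.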

I'll keep you posted on more updates. I'm committing to my GitHub repo almost every day, so stay tuned.

Generative kAiboard

Beyond Typing: An Adaptive Hardware Prompter for the Age of Generative-AI. Internet-connected. Built-in ChatGPT.

Pamungkas Sumasta
