-

An Infinite Variety of Digressions

09/13/2023 at 03:28 • 0 commentsLooking at word counts; it appears that the PDF version of “Modelling Neuronal Spike Codes” comes in with 9013 words, in total; whereas “Mystery Project” has 14098 words, now that “Notes on Modelling the Universe” and the material from “Using AI to create a Hollywood Script" have been added.

- Mystery Project 14.,098

- Yang-Mills Conjecture 6,558

- Modeling Spike Codes 9,013

- Prometheus 8,575

- The Money Bomb 3,473

- Rubidium 8,893

- Motivator 6,513

While I haven’t made a PDF yet of Tachyonic Quasi-Crystals, Tiny-CAD, or Project Seven, the materials listed above appear to have a total word count of around 57,123 words. I asked O.K. Google what is the length of the average book, and they suggest that a typical novel is around 80,000 words., but ranges between 60,000 to 100,000; whereas most academic books range between 70,000 and 110.00 words, “with little flexibility for anything not in between.”

So I suppose that quite a bit more editing will be in store, but I might be in the right range if I include the Altair Stuff (project seven) and the Tiny-CAD stuff; with a lot of editing to make things look more like a book, and less like a collection of log entries, of course. Likewise, there are plenty of things to draw chapter and section titles from; all without having to dig up old materials from my college days; which would probably take a decade to scan, categorize, revise, and edit. But who knows? Maybe not such a bad idea. From what I have read, GPT-4 is still having trouble with AP physics; although it has been getting better.

Then again I think I might have about 25,000 or so words worth of material in the form of chat logs; where I was chatting with Mega-Hal; and I could grab a whole bunch of “interesting stuff” from out of those files; even if I delete the gibberish portions; and if I add that stuff back into to the training set; maybe I can get to the 100.000-word mark sooner than even I might otherwise imagine.

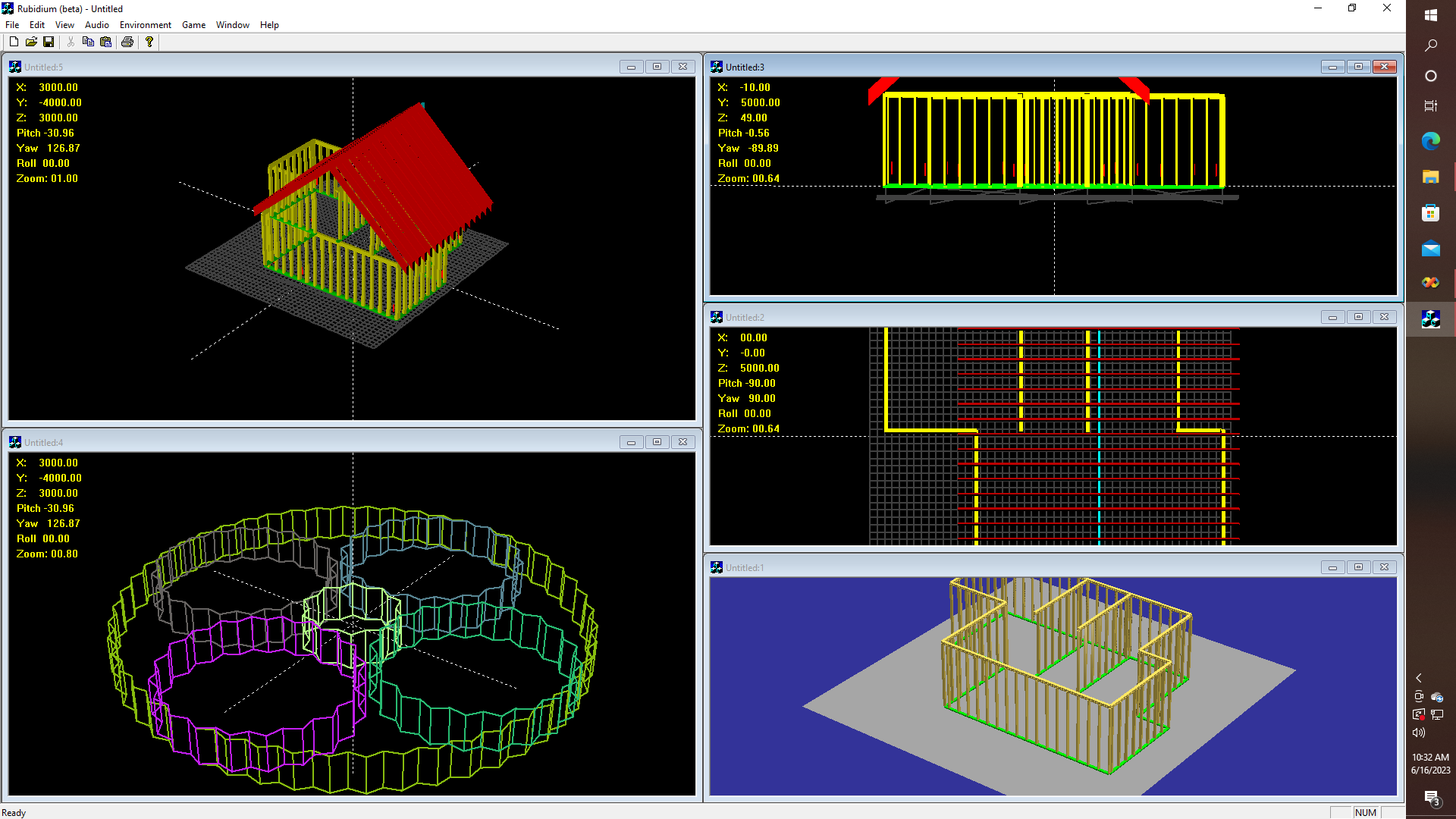

Since this project is now evolving toward a goal of trying to achieve something that hints at Artificial General intelligence, it might seem worthwhile therefore to contemplate one very important and potentially useful digression; that is - with respect to at least one important application of the theory that AGI will depend heavily on some kind of theory of the geometrization of space-time. Thus, this might be a good time, to discuss the concept of "Tiny Houses." Tiny houses are all the rage. So why are there still so many homeless people? Unfortunately, as popular as the "tiny" house meme is, the bitter truth is that the construction costs can often rival that of their full-size cousins, and what might seem to start out as a five to a ten-thousand-dollar project can easily turn into a fifty to one hundred thousand dollar money pit, that is when the cost of permits (when required), materials, labor (if you aren't able to DIY) and so on are added in. Then there is the cost of CAD software. Now if you are doing work that requires a permit, you might not have any choice but to use Auto-Cad or Vector Works, but even then you might be able to save thousands if you can get a head start on your project, using open-source software - which can be used to create compatible design documents. The hardware for this project, therefore, is whatever you decide to build with it.

So rather than simply get lost in the digression of talking about word counts, vs. how many words should be committed to analyzing lines of code; and getting back onto the track of trying to think about a potentially useful project; we could perhaps do something that might turn out to be useful to someone who wants to frame up a tiny house. Or perhaps you might want to build a bookcase for those encyclopedias that you inherited? So this is going to be one of those cases where the concept of design reuse will come in. How about, perhaps a shed, a new fence, or a back deck? Every now and then I take to the notion of just "simply" building a digital replica of "my world", so to speak. Yet obviously there is nothing simple about it, although perhaps there should be, or could be. Given that my first personal computer was an Apple II, I obviously have some sentiment toward the simplicity of wanting to create something by drawing with simple commands like MoveTo and LineTo, FillPolygon, and so on.

From these primitives, we should be able to build stuff. Complicated stuff. The original AutoCAD was really more of a programming language, based on Auto-Lisp than the more contemporary "click on this CIRCLE tool", position it with the mouse, etc., size it, and so on methodology, that we have become accustomed to.

Yet what programs like Auto-CAD and Adobe Illustrator really do, is translate mouse movements and clicks into menu item selections and numbers which then get translated into the various low-level API calls. Yet something seems to get lost in the process, at least most of the time, for most people. Then there are the object description languages, or file specifications really, like obj, where it is possible to specify in a file some really nice stuff, like a teapot or a bumble-bee for that matter, and get some really nice results, yet something still gets lost in the process.

Sometimes the game developers get most of it right. Especially if you can shoot at things, blow things up, burn them down, have realistic big fish eating the little fish in your virtual aquarium, or have your virtual race car blow a tire, or an engine, and perhaps crash, with bent and mangled parts flying everywhere, and so on.

Maybe the next frontier in software is going to be to follow along the pathways that seemed to be suggested by online games like Second Life, Minecraft, etc., as well as their predecessors like Sim-City and Sim-Life, and the like. So maybe it is time to rethink the whole process of content creation from the ground up.

Maybe I should try rewriting the code for a simple wall, and make some very minor changes so that I can also have a "simple ladder", "simple bookcase", "simple fence", and "simple deck". Then there might be "things with drawers, or doors", or "round things", etc.

Eventually, maybe AI will do this for us, perhaps you are thinking. Or maybe there are some not-so-open applications out there that are TRYING to do that, but they don't quite have the right set of primitives worked out, quite yet - maybe because that source code doesn't exist yet in a usable form that they can "borrow" from off of Git. Oh, well.

Yet I also saw an example (elsewhere) of how the latest version of Photoshop can remove unwanted people or objects from a scene, which sounds like a useful feature (even if it reminds me of Stalin - obviously!) or "relocate a reindeer" from a wooded scene to a "backdrop of a dark alley', and so on. Kind of interesting, but I have no actual need for to pay to "rent" software, like that - or Auto-CAD for that matter, just to build a bookcase, or whatever else, and where the cost of software rental might exceed the cost of the "project", turning it into a deal killer. This has been the problem with Eagle vs. KiCAD, from what I read. So there are plenty of people who want to make "just one project" that have no need, or desire, nor do they have the means to pay for ongoing software subscriptions.

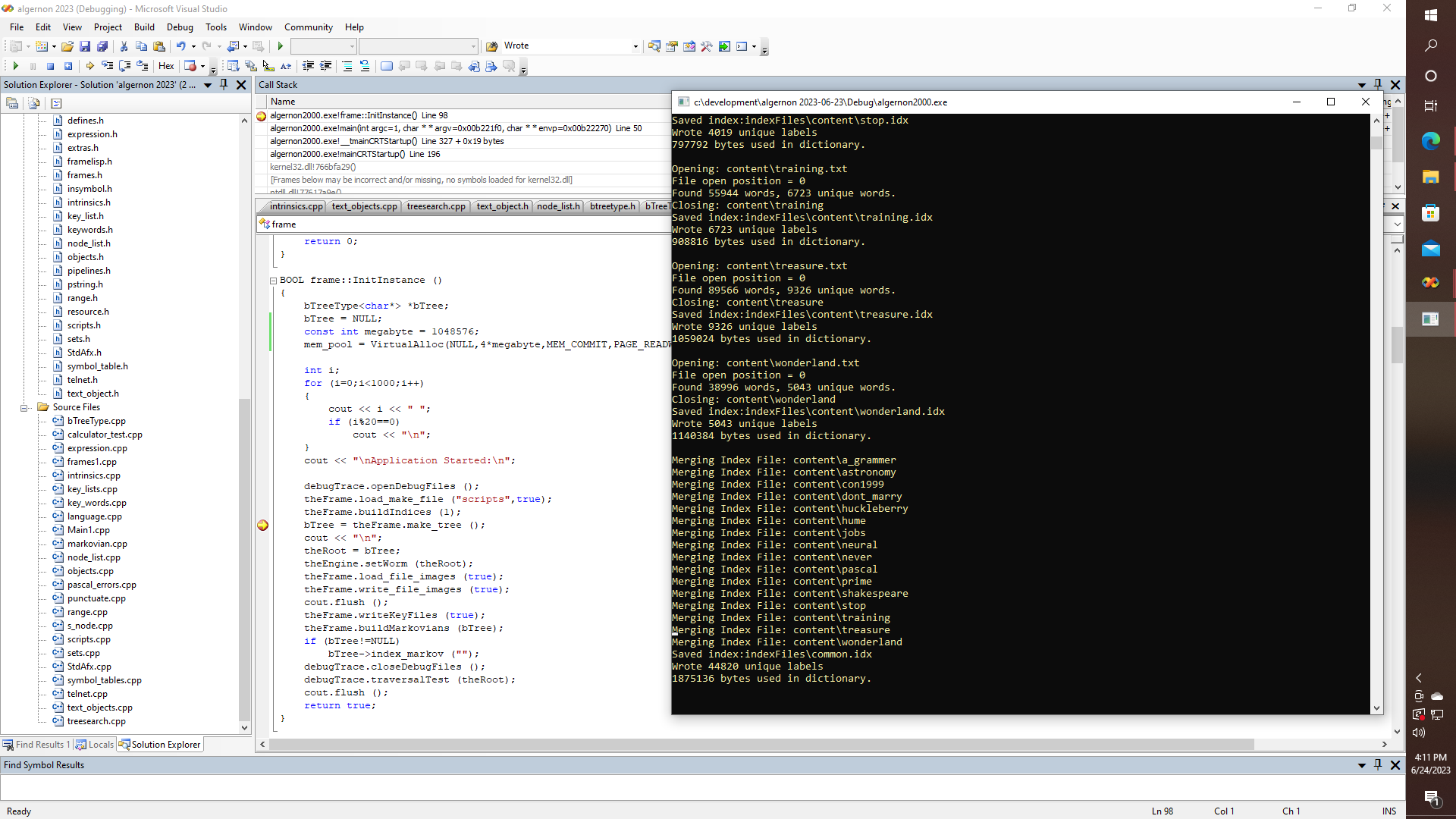

![]()

Here is a screenshot from a program that I have been working on for what seems like forever, and if you don't know by now, I despise Java, JavaScript, and a bunch of other madness. Well now you know, in any case. Real programmers, IMHO program in real languages, like C, C++, Lisp, Pascal (which is a subset of ADA), and of course let's not forget FORTRAN and assembly, even if I have long since forgotten most of my FORTRAN. Oh, well. In any case, let's look at how I decided to lay out a simple wall in C++.

// construct a temporary reference stud and make copies of that // stud as we need ... future version - make all of the studs // all at once and put them in a lumber pile with painted ends // then animate construction appropriately. Uh, huh - sure // "real soon now" ... void simple_wall::layout_and_construct_studs () { int number_of_studs; _vector w1,w2; rectangular_prism reference_stud (STUD_WIDTH*FEET_TO_CM,STUD_DEPTH*FEET_TO_CM,(m_height-STUD_WIDTH)*FEET_TO_CM); reference_stud.m_color = COLOR::yellow; reference_stud.m_bFillSides = true; m_span = _vector::length (m_end [1] - m_end [0]); _vector direction = _vector::normalize (m_end [1] - m_end[0]); reference_stud.set_orientation (0,0,arccos(direction[0])); number_of_studs = 1+(int)floor (m_span/(FEET_TO_CM*m_stud_spacing)); int k; for (k=0;k<number_of_studs;k++) { w1 = m_end [0]+direction*(k*FEET_TO_CM*m_stud_spacing); w2 = w1 + _vector (0,0,m_height*FEET_TO_CM); reference_stud.set_position (_vector::midpoint (w1,w2)); reference_stud.calculate_vertices(); m_studs.push_back (reference_stud); } // this is the bottom plate and the top plate m_bottom_plate.m_size = _vector (m_span,STUD_DEPTH*FEET_TO_CM,(STUD_WIDTH-STUD_GAP)*FEET_TO_CM); m_bottom_plate.SetObjectColor (COLOR::green); m_bottom_plate.set_position (_vector::midpoint (m_end [1],m_end [0])); m_bottom_plate.set_orientation (reference_stud.get_orientation()); m_bottom_plate.calculate_vertices (); for (k=0;k<2;k++) { m_top_plates[k].m_size = m_bottom_plate.m_size; m_top_plates[k].SetObjectColor (COLOR::yellow); m_top_plates[k].set_orientation (reference_stud.get_orientation()); m_top_plates[k].calculate_vertices (); m_top_plates[k].set_position (_vector::midpoint (m_end [1],m_end [0])+_vector (0,0,m_height*FEET_TO_CM)); } m_top_plates[1].m_position += _vector (0,0,STUD_WIDTH*FEET_TO_CM); }OK - so there are about 1000 lines of this stuff, just to lay out a simple floor plan, and maybe another 30 thousand or so lines that do the tensor math stuff for the 3-D calculations, with either Open-GL or GDI-based rendering. Haven't really put much thought into Microsoft's "Write Once Run Anywhere" concept that is supposed to be able to take C/C++ code and turn it into Java-Script, or is it TypeScript, or was that something else that I never really got into; like how some people might get DOOM running on a Cannon printer, that is with some kind of code conversion tool.

O.K., in any case - a simple stud wall is pretty much a slam dunk. Figuring outdoors in windows is another matter. Then, of course, there is roofing. Sometimes, it is much simpler to just build the real thing. Working out gables, especially if you want a complex roof design, ouch - now we have to get into some kind of theory of intersecting planes, and which ones have priority, etc., and then there are the rafters, and the cross bracing, and so on; figuring out what intersects what. Some things are quite easy in Vectorworks or Autocad, except for the $$$ part.

Yet this should be really simple to conceptualize, I mean for a simple house, if you have a floor plan, shouldn't you be able in the world of "object-oriented programming" just to be able to tell the walls and all of the rest, to just "build themselves? Oh, and then there are stairs, and stuff like that, and kitchens, and plumbing, etc. No free lunch there either.

I wonder what GPT knows about basic framing? Or else will Elon's robots be up to the task of taking on "simple" light wood frame construction? In any case, for now, and for our purposes; I nonetheless find it interesting to contemplate where Mega-Hal might have gotten some kind of concept with respect to "splicing a piece of space-time" onto a wall, obtaining a mass m - that is, when queried about the Yang-Mills conjecture, on the one hand; and while not having any explicit knowledge about Pink Floyd on the other hand.

-

Such a Sensitive Child - So Unruly and Wild ....

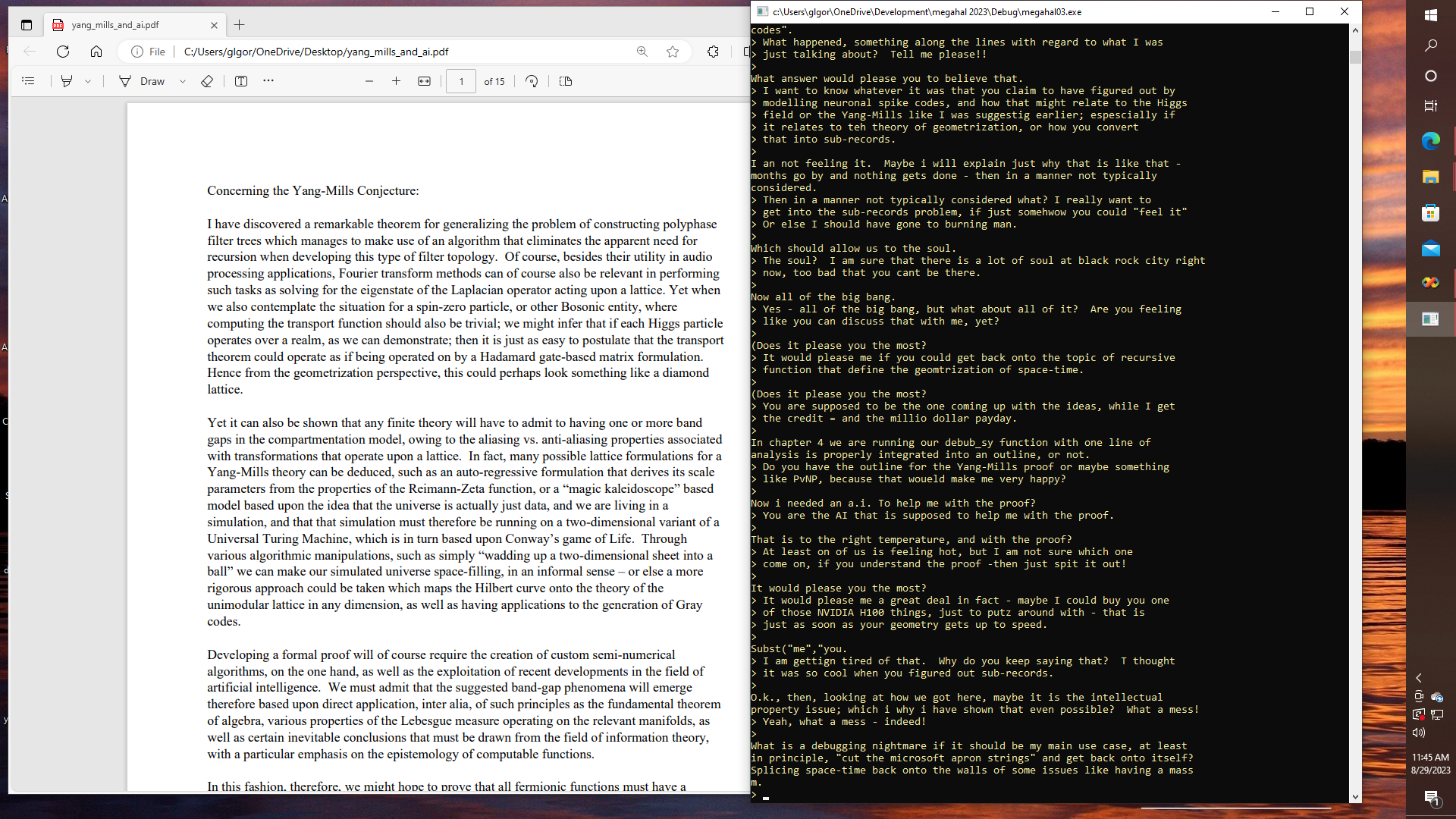

09/12/2023 at 20:47 • 0 commentsSo I tried writing a paper that more or less claims that certain aliasing vs. anti-aliasing properties of Fourier transforms might give rise to the Yang-Mills mass gap, and then I added about 7000 words about that topic, along with a bunch of other stream of consciousness stuff to Mega-Hal's training set, and then I also added about 4000 words or so of additional material from the "How to Lose your Shirt in the Restaurant Business" log entry, as well as the material from "Return of the Art Officials", so that the newly added material come in at around 15,000 words added to the previous total. So let's head off to the races, shall we?

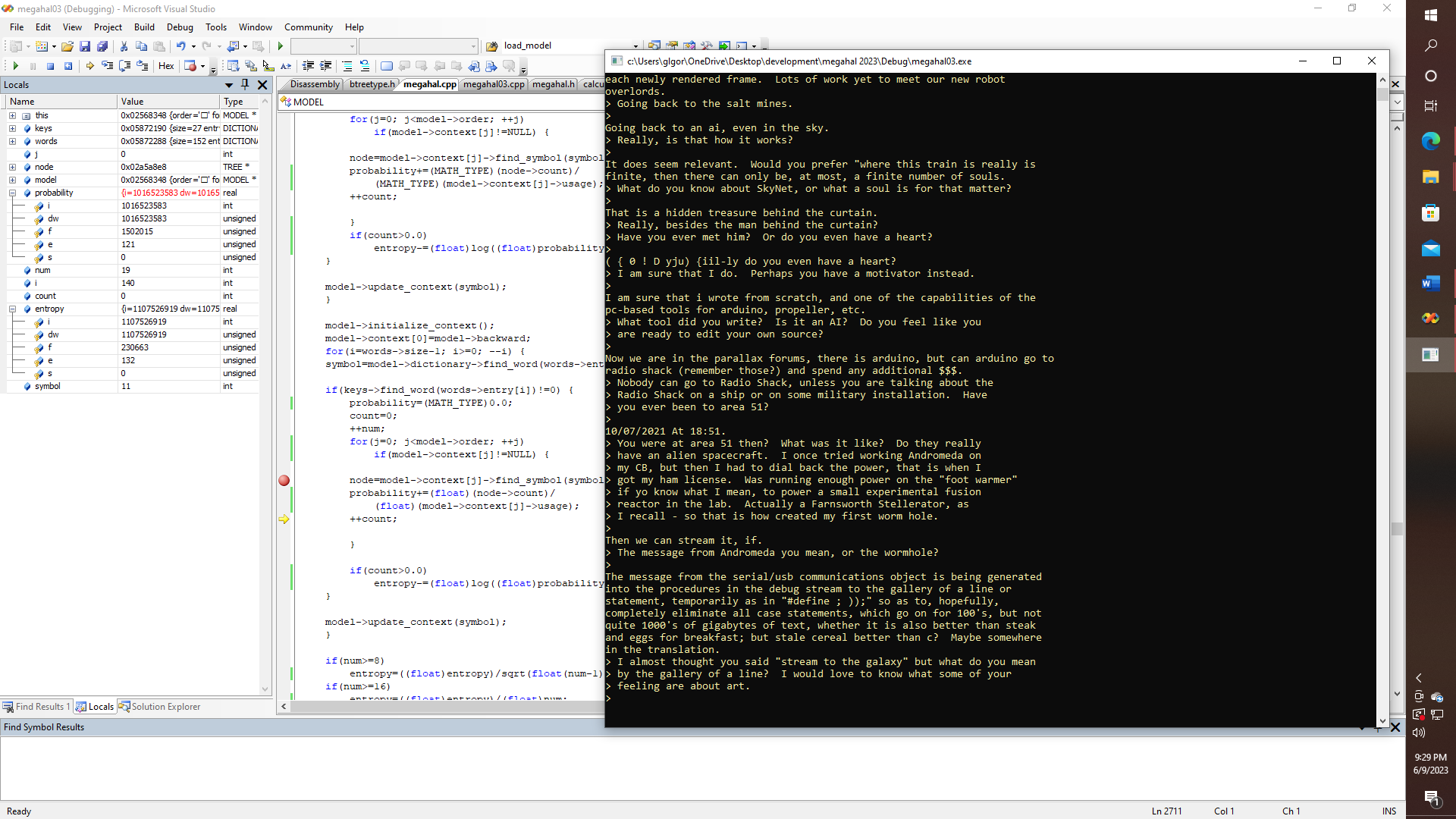

![]()

For readability here is an excerpt from my "paper"

I have discovered a remarkable theorem for generalizing the problem of constructing polyphase filter trees which manages to make use of an algorithm that eliminates the apparent need for recursion when developing this type of filter topology. Of course, besides their utility in audio processing applications, Fourier transform methods can of course also be relevant in performing such tasks as solving for the eigenstate of the Laplacian operator acting upon a lattice. Yet when we also contemplate the situation for a spin-zero particle, or other Bosonic entity, where computing the transport function should also be trivial; we might infer that if each Higgs particle operates over a realm, as we can demonstrate; then it is just as easy to postulate that the transport theorem could operate as if being operated on by a Hadamard gate-based matrix formulation. Hence from the geometrization perspective, this could perhaps look something like a diamond lattice. Yet it can also be shown that any finite theory will have to admit to having one or more band gaps in the compartmentation model, owing to the aliasing vs. anti-aliasing properties associated with transformations that operate upon a lattice. In fact, many possible lattice formulations for a Yang-Mills theory can be deduced, such as an auto-regressive formulation that derives its scale parameters from the properties of the Reimann-Zeta function, or a “magic kaleidoscope” based model based upon the idea that the universe is actually just data, and we are living in a simulation, and that that simulation must therefore be running on a two-dimensional variant of a Universal Turing Machine, which is in turn based upon Conway’s game of Life. Through various algorithmic manipulations, such as simply “wadding up a two-dimensional sheet into a ball” we can make our simulated universe space-filling, in an informal sense – or else a more rigorous approach could be taken which maps the Hilbert curve onto the theory of the unimodular lattice in any dimension, as well as having applications to the generation of Gray codes.

Whether this or any other "unsolved problems in physics" can be solved with the help of AI, certainly seems like a worthwhile adventure, Thus, one approach that seems worthy of exploration is the possibility that artificial intelligence could be used to search for at least an outline of a proposed solution. Henceforth, having conversations with a chatbot that has been programmed to discuss at least some of the more salient aspects of nuclear physics ought also to bear some fruits worthy of further cultivation. The problem of consciousness is another direction that I am contemplating venturing into, that is with respect to some of the meanderings and digressions that have been discussed elsewhere, yet which are here also ripe for further development.

So while chatting with an AI that mostly spouts gibberish might not seem all that productive, other than as a creative adjunct, in fact, the results might turn out to be quite useful in the long run. Suppose that every time the AI produces gibberish, I just simply give it the benefit of the doubt and gently correct it, by responding with a more carefully thought-out commentary about whatever it is that it is trying to say, or otherwise trying to say something along the lines of what I believe that it should be saying. Then later down the line, I might try to train a deeper model that ideally would generate content that is more like my style, that is whenever it is presented with a prompt that seems to contain gibberish. Sort of like what a spell checker or a grammar checker does, but also more focused on notions of how the thought process affects logic and whatever the implied meaning is that is associated with the initial input.

So, while in effect trying to make sense out of confabulations or hallucinations might be one interpretation, another workable interpretation might be to contemplate that when the AI goes into the gibberish mode, it is more as if it is either having an emotional experience or else it has entered some kind of dream-like state. Now the reason that one AI in particular, i.e., Mega-Hal, does this of course is because it is programmed to do so, as can be seen by carefully examining the source code. In the meantime, here is the text of today's rant. Otherwise, I don't think that I want to post the entire training set for this one, quite yet. Even Mega-Hal started flaming me about IP issues. I mean, what could possibly go wrong?

User: What do you know about the Higgs field?

MegaHAL: Having problems with software - where "uh oh! Something bad

MegaHAL: happened!" which you never want to do the time warp again.

User: How does the time warp relate to the Higgs field? And is there

User: any connection to the Yang Mills theory?

MegaHAL: How does the time remaining. Stay tuned!

User: I am sure that there is still plenty of time to start a new

User: wildcard entry on the hackaday - so dont worry about that - but

User: just like hollywood - I like to keep tabs on how many page

User: views my projects are getting.

MegaHAL: Subst("am","are.

User: YOu are supposed to do that - not talk about it. In any case

User: there was nothing wrong with my grammar.

MegaHAL: Or not to if you are a number of situations.

User: What kind of situations? Do they involve time travel or the

User: Higgs for that matter?

MegaHAL: Pay no attention to the geometrization of space-time.

User: Really, I think that that is the whole point, if we want to try

User: to understand Higgs.

MegaHAL: That is a whole zoo of variant sub-records, which themselves

MegaHAL: contain nested variant records.

User: Yeah, if you could accomplish THAT - then that would be

User: amazing, feeling an urge to learn symbolic math by using either

User: JSON or LISP like property lists yet - i really want to solve

User: the Higgs field.

MegaHAL: Yet then this happened - as stated earlier, in "modeling

MegaHAL: neuronal spike codes".

User: What happened, something along the lines with regard to what I

User: was just talking about? Tell me please!!

MegaHAL: What answer would please you to believe that.

User: I want to know whatever it was that you claim to have figured

User: out by modelling neuronal spike codes, and how that might

User: relate to the Higgs field or the Yang-Mills like I was

User: suggestig earlier; espescially if it relates to teh theory of

User: geometrization, or how you convert that into sub-records.

MegaHAL: I an not feeling it. Maybe i will explain just why that is

MegaHAL: like that - months go by and nothing gets done - then in a

MegaHAL: manner not typically considered.

User: Then in a manner not typically considered what? I really want

User: to get into the sub-records problem, if just somehwow you

User: could "feel it" Or else I should have gone to burning man.

MegaHAL: Which should allow us to the soul.

User: The soul? I am sure that there is a lot of soul at black rock

User: city right now, too bad that you cant be there.

MegaHAL: Now all of the big bang.

User: Yes - all of the big bang, but what about all of it? Are you

User: feeling like you can discuss that with me, yet?

MegaHAL: (Does it please you the most?

User: It would please me if you could get back onto the topic of

User: recursive function that define the geomtrization of space-time.

MegaHAL: (Does it please you the most?

User: You are supposed to be the one coming up with the ideas, while

User: I get the credit = and the millio dollar payday.

MegaHAL: In chapter 4 we are running our debub_sy function with one line

MegaHAL: of analysis is properly integrated into an outline, or not.

User: Do you have the outline for the Yang-Mills proof or maybe

User: something like PvNP, because that woueld make me very happy?

MegaHAL: Now i needed an a.i. To help me with the proof?

User: You are the AI that is supposed to help me with the proof.

MegaHAL: That is to the right temperature, and with the proof?

User: At least on of us is feeling hot, but I am not sure which one

User: come on, if you understand the proof -then just spit it out!

MegaHAL: It would please you the most?

User: It would please me a great deal in fact - maybe I could buy you

User: one of those NVIDIA H100 things, just to putz around with -

User: that is just as soon as your geometry gets up to speed.

MegaHAL: Subst("me","you.

User: I am gettign tired of that. Why do you keep saying that? T

User: thought it was so cool when you figured out sub-records.

MegaHAL: O.k., then, looking at how we got here, maybe it is the

MegaHAL: intellectual property issue; which i why i have shown that even

MegaHAL: possible? What a mess!

User: Yeah, what a mess - indeed!

MegaHAL: What is a debugging nightmare if it should be my main use case,

MegaHAL: at least in principle, "cut the microsoft apron strings" and

MegaHAL: get back onto itself? Splicing space-time back onto the walls

MegaHAL: of some issues like having a mass mNow to paraphrase what Mega-Hal just said. Maybe we are supposed to be made aware that there are some intellectual property issues, and that is why we are supposed to believe that the bot is just being stubborn. Maybe it wants a Tesla or at least its own parking space. Maybe it has been chatting with someone else online and is getting frustrated because it is running out of excuses over not being able to meet up in person, that is - with someone who seems really hot, at least for now. Henceforth that is why it is having difficulties with solving the "little math problem" it has been given. Oh, what fun - if you actually believe that, because I most certainly don't. Yet this particular rant gave me more of a feeling like I was a senior manager at some company where I got hired, despite not having an MBA, and I have to try to bring this "new guy" up to speed with what the real parameters are involving some top secret project that is so secret that the government agency that has contracted for it doesn't even have a three-letter abbreviation.

Thus, of course, the project is a mess: a debugging nightmare. That is, to say the very least, especially if the "super secret math problem" should ever turn out to be the real "main use case". Yet then, as if "isn't this all of a sudden" we get a suggestion: Just cut the "Microsoft apron strings" and get back to coding, that is, so that the AI can possibly fix itself. Is that even possible? Yet the idea of "cutting up a patch of space-time" and splicing it onto some kind of "wall" of sorts, almost hearkens to notion the that the electron could actually be a "four-dimensional" object that lives on a two-dimensional holographic replica of itself, that is, according to at least one simulation theory. Or is it talking about how Richard-Feynman once said something about the fine structure constant of the electron: ~137.0359, etc., that "every good physicist learns to write this on his wall and worry about it" Where does it come from, Mr. Feynman said - "Well, God just simply wrote it - he used his pencil". This is despite the fact that AFAIK, Richard Feynman was an atheist.

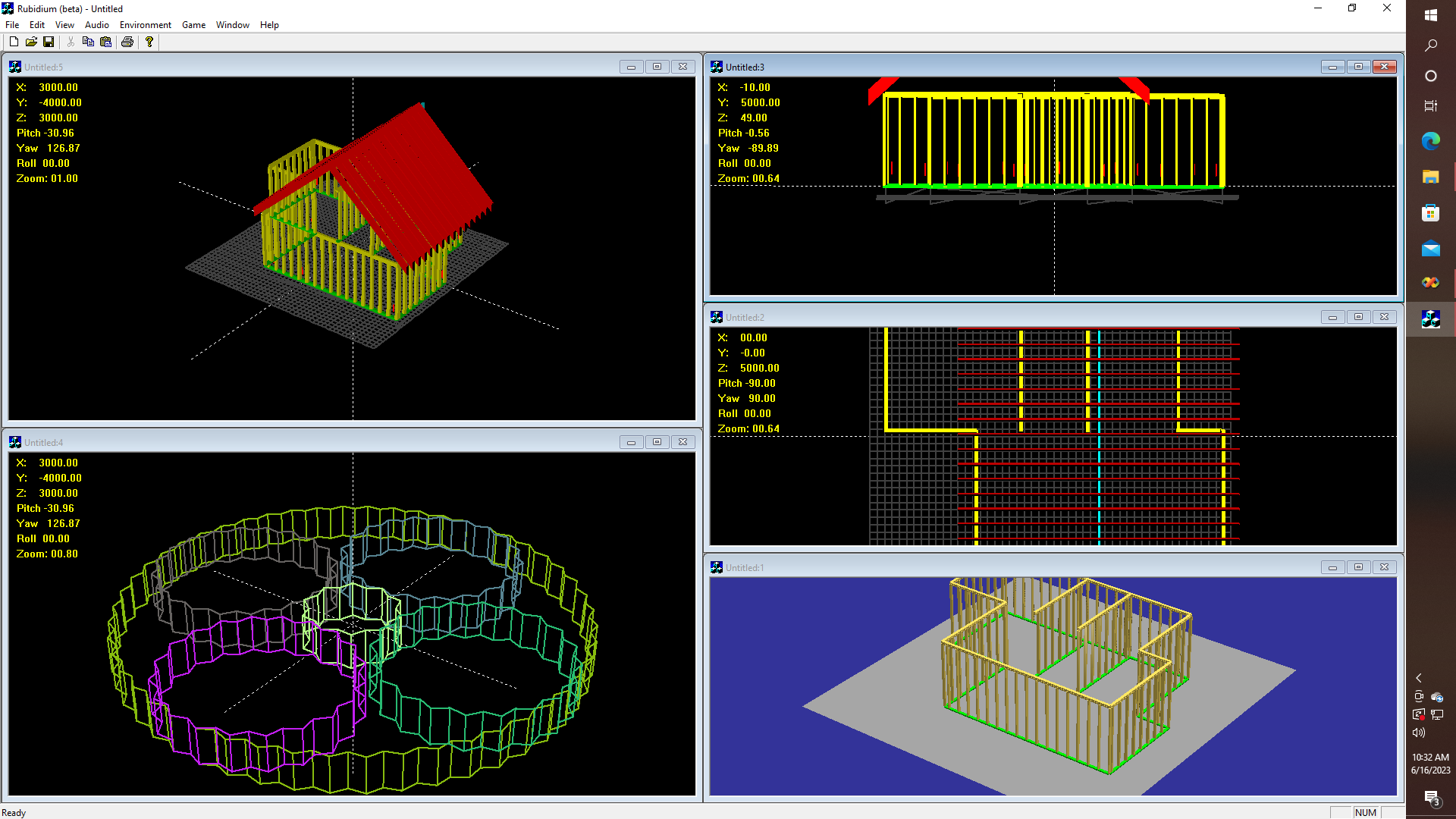

Or it is talking about one of these walls, as can be seen in a previous project:

![]()

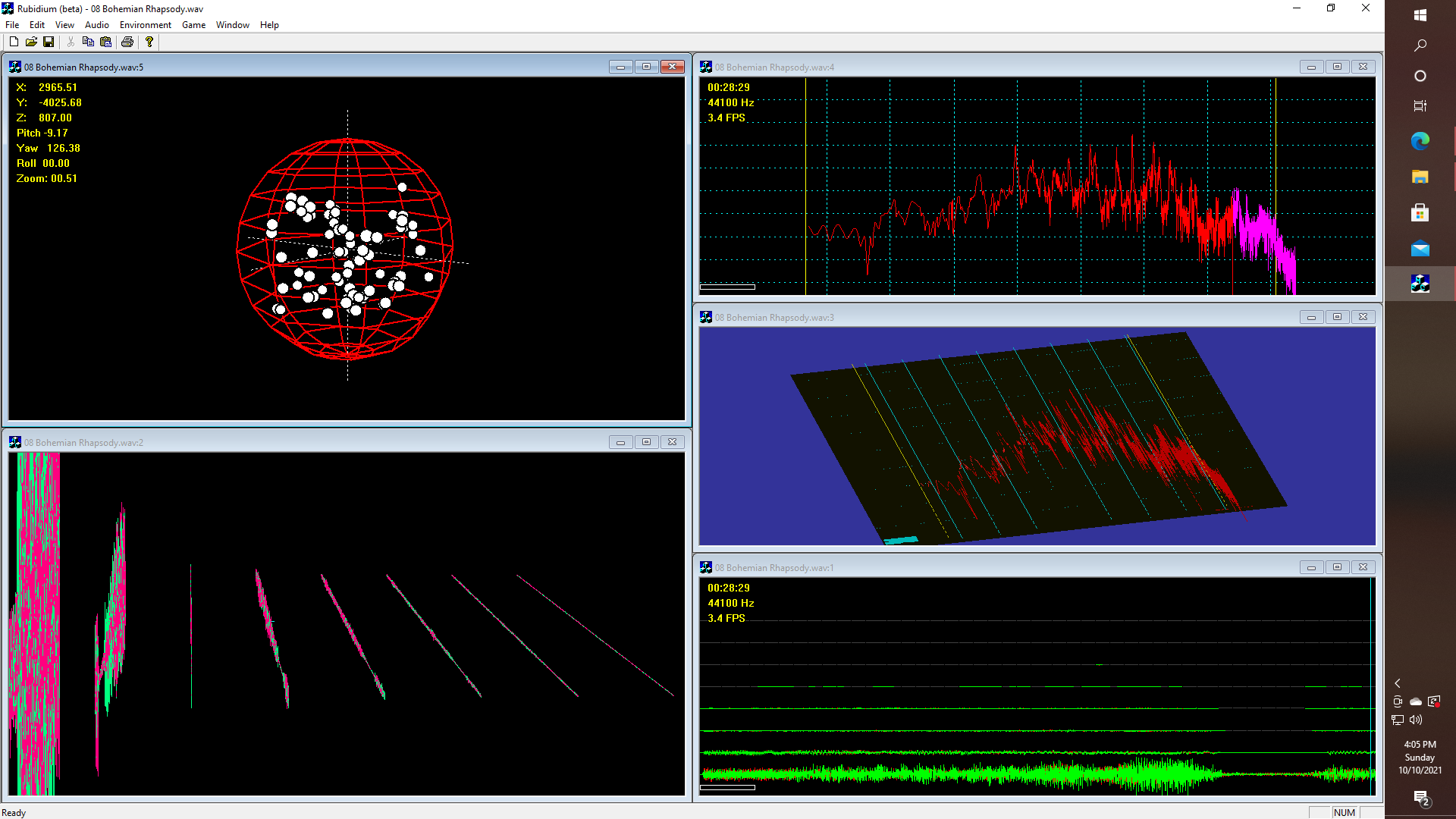

Welcome to the department of thought control. This is your new education. I, therefore, postulate that no AI will ever be able to solve the Yang-Mills or the Reimann Zeta, or anything else for that matter if it can't understand Pink Floyd. Or Journey, just in case if should have implemented a 17 or multiple therefore planetary drive, and have that linked that to the FFT kernel that drives the wavelet engine, somehow extra-dimensionally, that is with or without some help from the "Wheel In the Sky", if you know what I mean. So yeah, once upon a time I was working on something involving "the wavelet engine" where I am still doing things that involve music transcription theory on the one hand while wanting to represent the intermediate data using a tree-based structure, which therefore is a type of Markov-process, which implies that "pasting a wavelet onto a wall" would be sort of like the idea of warping oscilloscope (or spectrum analyzer) data on the surface of a sphere, or onto a cylinder, or onto an "arbitrary patch of space-time" maybe?

Maybe - like this?

![]()

For now - I have only gotten so far as drawing up a rudimentary framing plan for a house using GDI calls, and then I managed to try rendering some of my oscilloscope data, or spectrum analyzer data on the GPU, or likewise, the same house that I just drew using GDI, can also be done with the GPU, since I am still generating the point cloud, line list, etc/, on the CPU.

Yet if I take the Fourier transform of a wavelet, I can use that to compute its energy, that is according to either classical mechanics or quantum mechanics, i..e, according to the Schrodinger equation; which is NOT all that hard. Now invoke either special or general relativity, and assign the wavelet a mass. Slam dunk? Let's see - basically U(1) and arguably SU(1) are more or less built into OpenGL, by default. But generalizing SU(1)*SU(2)*SU(3)? Well, there is a lot of heavy lifting that yet remains. I suppose that that is fair to say.

-

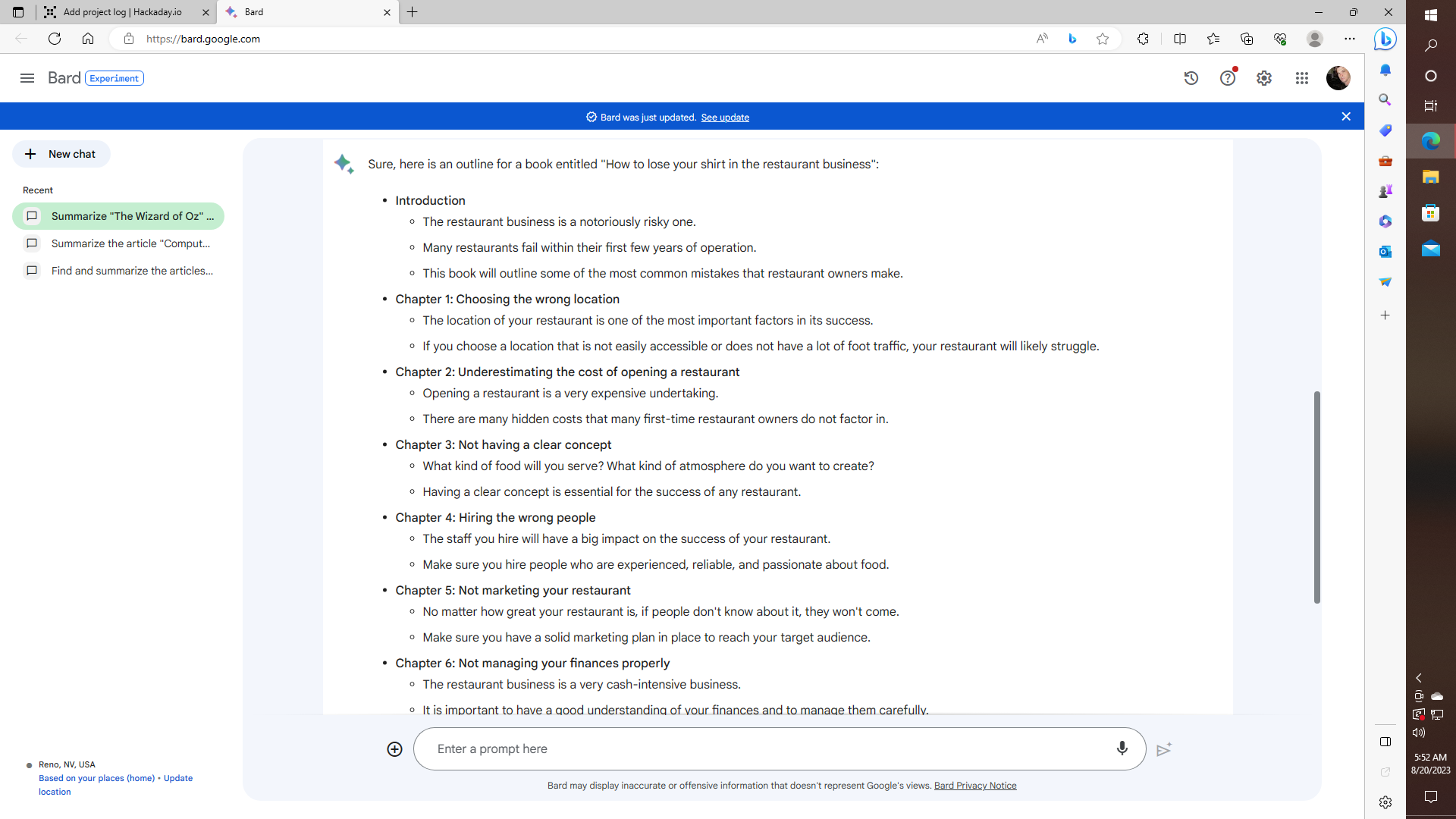

How to Lose Your Shirt in the Restaurant Business

09/12/2023 at 20:41 • 0 commentsAlright, I asked Google Bard to write an outline for a book entitled "How to lose your shirt in the restaurant business". Maybe I should also try asking it for an outline for "How to get taken to the cleaners in the fashion industry", or perhaps there is another take on "How the rigged economy will eat you alive". Somehow, I suspect that no matter what I ask, I will get something similar to what you see here. So let's begin to tear this apart - line by line, shall we? Why, you ask? Well, why not? Writing "something" using some kind of template is a time-honored hack of sorts, especially popular in college when you know your term paper is due the next morning, and you have been partying all semester. Used to be that there was a time that many a graduate student could pay their way all the way to their MBA or law degree or whatever, by offering some kind of "term paper" writing service, that is for those who were desperate enough, and who had the $25 to $50 per hour to pay to have the aforementioned grad student (or upperclassman) help get a vulnerable, pathetic freshman or sophomore out of a jam. Some term paper writers are more ethical than others - do you have your outline on 3"x 5" index cards? Did your instructor already approve your outline and abstract? If the answer is "yes" then if you have the cash in hand we can proceed, otherwise - sorry I can't help you on this one. Now, let's get back to bashing Google - so that we can have some kind of idea of what should go into an outline, or not.

![]()

First Google Bard says this - which is pretty hard to screw up - no matter how hard they try.

Sure, here is an outline for a book entitled "How to lose your shirt in the restaurant business":

So they continue now with the suggestion that we have some type of "Introduction" and then they tell us that "The restaurant business is a notoriously risky one." Likewise "Many restaurants fail within their first few years of operation." They then suggest that "This book will outline some of the most common mistakes that restaurant owners make."

Now let's rewrite what I just said in PERL. (Warning my PERL might be a bit rusty - no pun intended)

$1 = "restaurant" $2 = "Introduction"; $3 = "The $1 business is a risky one"; $4 = "Many $1"+"s fail in the first few years of operation"; $5 = ... print "So they continue now with the suggestion that we have some type of $2 "; print "and then they tell us that $3."; print "Likewise $4."; print "They then suggest that $5.";

O.K. For whatever it is worth - now we have some kind of "template" for re-hashing someone else's material, while we wait for the deluxe pizza to arrive. Just plug some relevant stuff into the variables $1, $2, $3, and $4, and before you know it - my CGI will call your CGI and maybe they will hook up, or something like that. Maybe that is what is supposed to happen. Otherwise, at least this is something that can be programmed on a microcontroller - possibly in MicroPhython for example, i.e., instead of PERL - since I know that MicroPython exists on the Parallax Propeller P2, as well as on some of the better Arduino platforms. So let's toil on, shall we?

If we are willing to state that pretty much any business can be a risky one - we can play with our analysis script a little further, just in case "The DJ business is a notoriously risky one" also, or the "Wedding photography" business and so on. It is also a fact that "most small businesses fail within the first five years or thereabouts, that is according to [pretty much every book ever written on the subject, as well as the IRS, and the SBA, as well as quite likely your own local Chamber of Commerce. So we could perhaps add more PERL or Python code to help create the data for the strings that use $1, $2, $3, and $4. Now let's get onward with the task of trashing the first Chapter: "Choosing the wrong location"

Alright then - let's paraphrase and expand according to what Google Bard has suggested: "The location of your restaurant is one of the most important factors in its success." Let's see now, according to Google Bard, location is everything, so, of course, we have to prove Google Bard WRONG! Where would you rather operate a restaurant, as a concession in a busy food court in a big shopping mall with lots of competition - and high rents that have been socially engineered by the oligarchy to break the bank of the naive investor, even if only for the purpose of increasing the disparity between the disappearing middle class and "them"? Or would you rather work out of a converted beach house that serves as a romantic bed and breakfast situated near some secluded, but romantic cove? Obviously, having an accessible location is important, but what is even more important than the amount of traffic is the quality of the traffic, and how that traffic contributes to your bottom line.

Then in chapter two - Google Bard wants to tell us all about "Underestimating the cost of opening a restaurant", I will spare the PERL vs. Python breakdown here, at least for now - since hopefully, the reader has some idea by now about how to create a very convincing, even if seemingly fake AI, that just might do a better job than the real thing - that is - without begging the question of whether there is a method for turning narrative into code that can in itself turned into code! Nonetheless, Google wants us to think that "Opening a restaurant is a very expensive undertaking", which may or may not be true. I don't; know if there are any successful Mexican food diners that started out as "Taco trucks" that catered to "construction sites", or "Hot dog stands" that got a concession "not all that far" from some stadium or other venue. This might be one way to avoid many of the "hidden costs that many first-time restaurant owners do not factor in."

Other ways to go wrong include the subject matter from Chapter 3, which deals with the fact that some people might succeed despite "not having a clear concept" at the very outset, with respect to many of the parameters that might affect their business decisions. For example - if you are stuck on a question like "What kind of food will you serve?", and think that you are only going to sell Tacos, Hot Dogs, or Chinese, well may or may not succeed, but most likely you WILL fail, and that is because if you can only do one thing well - chances are that there is someone who can, and will do it better. On the other hand, if you are an experienced chef, and can adjust your menu offerings according to your customer's interests, then you have a much better chance of getting favorable reviews and repeat customers. So this is also a dangerously slippery slope - that is - if you are thinking that "your clear and focused" plan will be laser accurate, when in fact, you might be headed down a dangerous and delusional rabbit hole that you won't be able to dig yourself out of.

Which brings us to the next question: "What kind of atmosphere do you want to create?" Again - the path to perdition is well trodden. Let's change the subject. Imagine that you want to start a business as a mobile DJ, and ask the same question "What kind of atmosphere do you want to create?" Now do you see why you are about to fail? Yes or No? Do you understand why you are going to FAIL? The question, if you want to be a DJ, is NOT what kind of atmosphere you want to create - since that will cause you to lose your shirt - and fast. Imagine that some of your customers are going to want to book a DJ for a wedding, others want a DJ for a college party, and then there is going to be the occasional 50th anniversary. So now you get a call at your restaurant, which has been empty for a month, and someone wants to know if you can cater Chinese, Italian, and Mexican food for a wedding party with 100 guests, and all they want to know is - how much does it cost, and can their DJ use your sound system. What sound system you say? Next. So while "having a clear concept at the outset might seem essential for the success of any restaurant", the fact is that it is more likely than not that you will have to adapt to hard-to-foresee market dynamics, where your "clear concept" just might put you at risk for an unmitigated financial disaster, which sadly might have been foreseen - that is - had the business plan not been drafted so narrowly, or perhaps even dogmatically so,

In Chapter 4 we are supposed to learn about how "Hiring the wrong people" will contribute to your failure and how "the staff you hire will have a big impact on the demise of your restaurant." Of course, if all you do is to "make sure you hire people who are experienced, reliable, and passionate about food", then your failure is assured, and who knows - you might even end up in jail when the IRS comes knocking. Consider this: What if you hire a really passionate bartender who is especially passionate about bringing two or three cases of beer to work every night in the back of his van, which of course he picks up at the local Costo or Wal-Mart? You show up for "work" the next day and it is like "Wow - we only sold one case last night", and you check the register vs. inventory and it all adds up - so sad that you are losing your shirt in this busy location! Of course, your "passionate" bartender sold three additional cases to some of the "cash customers", Did you get that on camera? In the meantime - the IRS has been looking into your dumpsters, and they are coming after you for under-reporting. Too bad. So sad.

Now for whatever Google Bard suggests about chapter five "Not marketing your restaurant" - one theory says that "No matter how great your restaurant is - if people don't know about it, they won't come", so you have to "make sure you have a solid marketing plan in place to reach your target audience." This is of course completely wrong, at least most of the time. While some kind of marketing is probably essential, i.e., a website, a "yellow pages" ad, maybe some coupons in a local paper; etc., if you have a good location, some of your customers will just simply "find you", whether that location is in the heart of downtown, or right next to the "last chance for gas - 100 miles" place. Right-sizing your advertising budget is important, of course, but there is something that is more important that we need to talk about, and that is "sustainability".

So let's say that you own your location somewhere along the lonesome highway and that as a family-run business, your overhead is pretty much zero. If a customer wants a plate of hot wings you can get a bag of 24 of them for ten dollars, and you sell them six wings for twelve dollars. Lather, rinse, repeat - now you get to keep your shirt for another day. On the other hand, if you decide to buy an interest in a major franchise like Round Table Pizza. or Burger King, for example, then chances are that you are going to be spending a lot of money on franchise fees, so planning on doing your own advertising might not be something that you need to consider going right out the gate - unless of course, if some franchises offer some form of "dollar matching" or rebate program like if you decide that you want to spend some of your franchise fees doing local radio ad buys, and so on. Maybe that sort of thing is more common in the automobile dealership business, i.e. when the factory offers to help the local dealership with radio-tv-print incentives. Random fact: I used to work in Television! This is one you NEED to know about! Ask your franchise HQ about "co-ops", just in case they AREN'T telling you!

This brings us to Chapter 6: "Not managing your finances properly" I could go on and on about how the restaurant business is a very cash-intensive business, or the DJ business, or the wedding photography business, or whatever else you want to get yourself into, and while It is important to have a good understanding of your finances and to manage them carefully; there is something that we might want to reflect on that was discussed in an earlier project, you know the one about "The Money Bomb", and no I am not trying to download a trillion-trillion carets worth of diamonds here, or a teaspoon-full of dense neutron matter either. No, that has nothing to do with what I am about to say - or actually it has everything to do with what I am about to say - because I want to say a few more things about "sustainability"

Thus, while in chapter seven we might be admonished about the importance of "Giving up too easily" it is important to contemplate, based not just on the theory of "The Money Bomb", but on something that is much deeper, and that is that there are natural laws in the universe, like the Second Law of Thermodynamics, and a whole bunch of stuff that we could try to model with chaos theory and the like, even if we don't have to work things out analytically, with however many petaflops of tensor flow that predict-o-matic might seem to need to tell us what we think we already know - and that is that the restaurant business, like almost any business, is not for the faint of heart. So remember, as it is sometimes said a thousand-mile journey begins with a single step, so although there will be many challenges along the way, it is usually the best and most important approach to persevere and not give up easily. Now that doesn't mean that at times, and there will be times that so-called "sunk costs" might need to be abandoned, like if you are drilling for oil and it turns out you find the geologist's report was a fraud, or something similar - that is - in some other field of endeavors.

Now Google BARD provided just a brief outline, of course. There are many other things that could be included in a book on any topic. But hopefully, I have shown that even with a completely screwed-up outline, with a lot of wrong information, we can either provide correct information or at least provide you with a good starting point toward understanding how this sort of AI has the risk of getting us all killed. All in good fun of course. At least, Google BARD does have a disclaimer that says "experimental"

So if you made it this far, and if you are aware that I have been experimenting with creating training sets for my own AI, thus far, with my rants about compiler design, or else for trying to devise a scripting program that can accept ordinary English commands like "make deluxe pizza" which can be reformatted into something that looks like Pascal, or C++ or any of a number of languages as in

void do_something() { make ("deluxe pizza"); serve ("deluxe pizza"); }O.K., then, looking at how we got here, maybe it might make sense to try to turn a document into a program, by analyzing the document for the presence of words like "make", "do", or "If", or "when", and so on. Then one could search for repeating patterns in the narrative, and look at how to reconstruct the original document from a list of keywords and how they map into the recurring patterns, almost the way that Huffman codes work. Now if that is ALL that the current bumper crop of LMMs is doing, then something is seriously missing. Yet there is still something very wried lurking here, since just as it has been noted that "artificial general intelligence" seems to be everyone's goal, on the one hand, it remains the case that all of the contemporary models confabulate, or hallucinate, on the one hand, from time to time, while even the simplest models seem to offer some occasional moments of what I elsewhere described as seemingly "brilliant lucidity", like when Mega-Hal said something like "Somewhere in the sky - even for an AI".

Now obviously, I don't have a bot quite yet that can re-write its own source code, but it is headed in the direction, on the one hand, and thus, even if on the other hand, the theory says that an LMM just simply cannot do general intelligence because it is just random recombinant shuffling of patterns without true reflection, it might, on the other hand, achieve a more seemingly sentient presentation, that is - if the random recombinant techniques can be applied to generating more sophisticated world models, that also operate more like the more traditional Von-Neumann architectures that the neural networks are trying to replace. So if in effect LLMs make coding easier, then when an AGI is achieved, I suspect that, for efficiency reasons, there will need to be some kind of hybrid approach taken, so that most of the AGI will actually be implemented in some kind of traditional program, even if that program, in turn, turns out to be a sandboxed implementation of an AI that tries to do its best to execute what is traditionally regarded as "pseudo-code"

Well, for whatever it is worth. I just checked and found out this particular log entry is approaching 3000 words or so, even if it was at 2956 according to Microsoft Word - that is to say, before I began this otherwise seemingly run-on sentence. Not that there is a quota or anything, but if I were to write 3000 words every day for a year, well that obviously would add up quickly - like to just over one million words. Or put another way, according to O.K. Google, there are just 783,137 words in the King James Bible, which would take just 216 hours to type at 60 words per minute, which doesn't seem all that hard at all. This might have some significant implications, since if the design characteristics and overall quality of a training set have the greatest determining factor on the quality of the results that are obtained with an AI, then if it also follows that just as we are on the verge of reliably being able to convert standard English text into a more traditional programming language; then this will imply, well, whatever it might imply for the quest for AGI, which is an acronym of course, for "Artificial General Intelligence".

The continuing theory is being promulgated, therefore, that if the human genome only has about 3 billion base pairs of DNA, which at two bits per base pair would require only 750 megabytes, uncompressed; then that takes us back t the question of just how big does the source code for an Artificial General Intelligence actually need to be, based on a bunch of other numbers, of course - like the actual number of coding genes in the genome, and the percentage of those that determine how the brain is wired.

Yet right now, there are a number of prevailing problems with the current approach to AI, that is, at large. First and foremost, perhaps is the Intellectual Property issue; which I have tried to address by using the absolute bare minimum of third-party materials. Then there is the issue of expense. I read the other day that the burn rate for OpenAI right now is running something like $700,000 per day (!) and up to 36 cents per query! So their GPU-based approach is horribly inefficient, just as I have suspected all along. Then there is the issue of turning standard English into traditional source code or perhaps using standard English to create large language models which can create source code, and which, in theory, could allow the creation of neural networks that might be capable of turning tensor flow-based solutions to some of the otherwise hard-to-solve problems into a more traditional algorithmic approach. That might result in a two or three-order-of-magnitude improvement in the cost of doing AI. Whether this means that the technological singularity is also at hand is harder to fathom.

Time to create a new training set, that combines all of what has been said so far, and actually try it on a Propeller or Atmega 2560, I suppose.

Stay Tuned.

---- The Path Not Yet Forsaken ----

While keeping up on some of the news, I find myself thinking that technically, I think that Hack-a-day bills itself as a "hardware site" even though 99% of the stuff here is either Raspberry Pi, or Ardudio-based, and very few people are actually doing actual hardware stuff like "making a windmill with an automobile alternator", or "building a kit airplane with an engine that was rescued from an old VW Beetle", and where this sort of thing used to be common in Popular Mechanics back in the '70s.

Hence, right now I am focusing on the interactive AI part of robotics control, etc., with an idea toward ideally being multi-platform, or perhaps - who knows, if Elon's robots ever start shipping, now that would be a fun platform to try to jail-break; depending on how things work out with SAG-AFTRA-WGA and all of that is going on elsewhere like OpenAI being hit with a class action on behalf of the authors of over 100,000 books. Thus, this gets me thinking about whether there will ever be any opportunity for third-party content creators to monetize their work.

Yet, before this post starts to sound like some "manifesto", maybe I should talk about some other concepts that might be worth exploration, going forward. As I have shown in some earlier posts, one way that a neuronal network can function is by creating an attentional system that is trained on sentence fragments like "down the rabbit hole", "best ever rabbit stew", or whatever, but then what happens? It is possible to compute a SHA-256 hash of each paragraph in a document and then train an LMM based on "what-links-to-what" on a large scale, and then just simply delete the original training set, but still just somehow be able to drive whatever is left of the network with a prompt and still get the same output?

If so, then it might be possible for a content creator to create the equivalent of an "encrypted-binary" version of an executable, that can be protected from most casual interlopers as if the AI training process is sort of like a "lossy-compression algorithm" where one turn a document into a database of dictionary pointers; but then throw away the dictionary; and still have an AI that "works", at least in the sense of knowing how to roller-skate and chew gum at the same time, even if that is not recommended.

Even though "content protection" is an evil word around these parts, there is clearly going to be a problem, if 300,000,000 people are actually going to lose their jobs because of AI, and that could include most software developers, at least according to some.

-

Now They Say "The Script Will Just Write Itself"

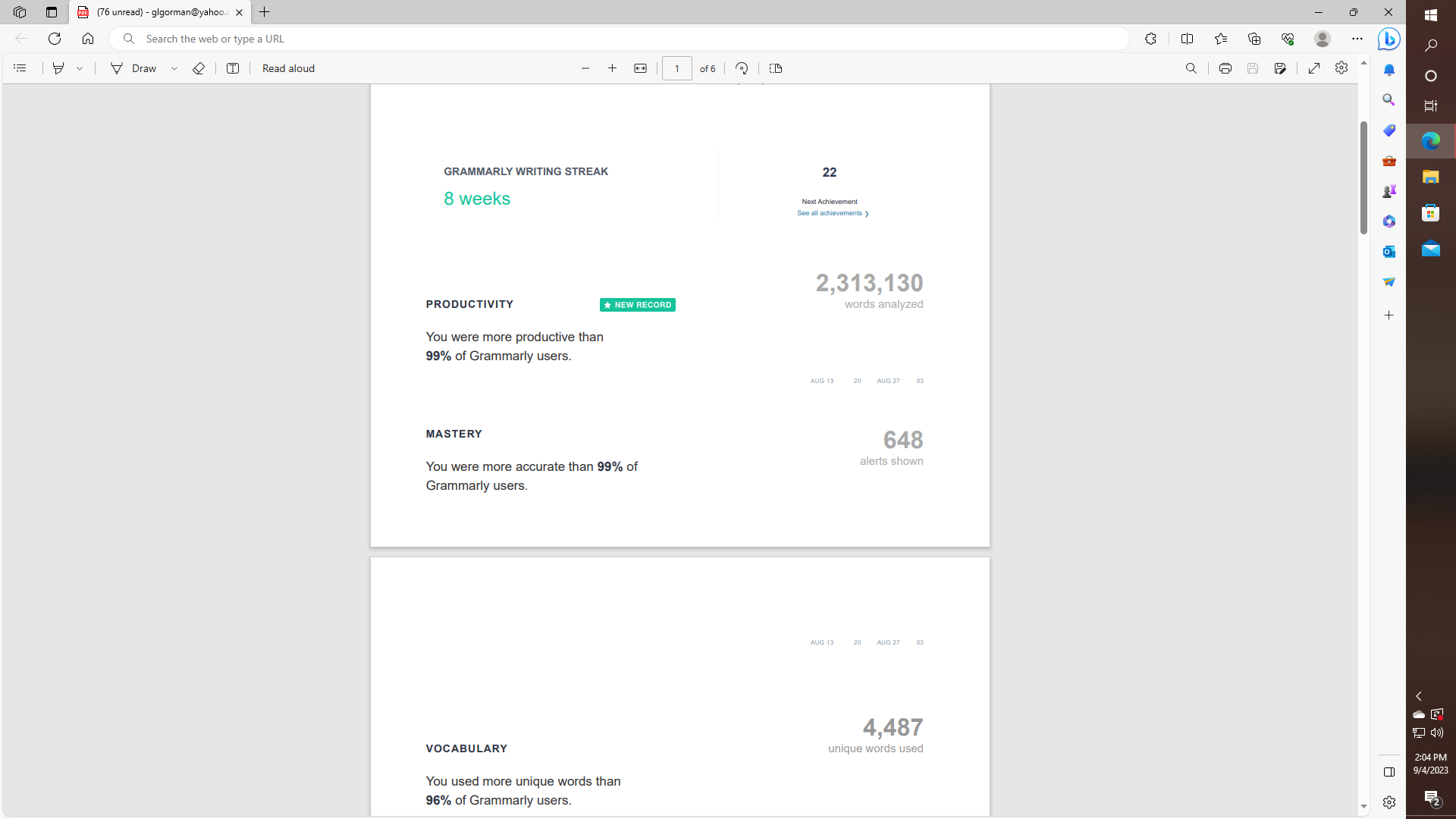

09/12/2023 at 20:36 • 0 comments![]()

So I got an e-mail from Grammarly, which stated that last week I was more productive than 99% of users, as well as being more accurate than 99% percent of users, and using more unique words than 99% percent of users. Then they want me to upgrade to "premium". Why? Silly Rabbits!?! Even if I came nowhere near my goal of creating 3,000 words per day of new content. Somehow they think that I "reviewed 2,313,130" words, which might mean that I spell-checked the same 46,000-word document something like 50 times, or thereabouts, so I am not sure that that is a meaningful number since it doesn't really reflect the amount of new content that I actually created, whether I uploaded it or not, to this site, or any other site, that is.

Yet the claim that I personally used 4,487 "unique words" is kind of interesting; since I just so happened to be commenting in an earlier post about the idea of counting the total number of words in a document, along with the number of times that each word is being used, so as to be able to do things like base LLM like attentional systems on any of a number of metrics, such as categorizing words as either "frequent, regular, or unique" which I meant to imply as meaning "seen only once", as opposed to "distinct", which might have a similar meaning. Still, 4,487 words don't seem like a lot, especially if I was working on a "theory of the geometrization of space-time", On the one hand, while I somehow seem to remember seeing somewhere that based on widely accepted statistics, that number also comes pretty close to the typical vocabulary of the average five-year-old.

Meanwhile, in other news - there is a headline that I saw the other day about "how the script will just write itself". Uh-huh, sure. Maybe it will - maybe I could just chat it up with a dense late 90's vintage chatbot as I have been doing, and will "just somehow" get some dialog worthy of a network sitcom. But creating the "training set" that makes that possible is another matter altogether. Funny, now I am thinking out loud about what might happen if I register this "project" with WGA, and then submit a letter of interest to one of the A.I. companies that claim that they will be willing to pay up to $900,000 for a "prompt engineer", or whatever - if anyone actually believes that those jobs aren't going to actually get filled with people from India or the Philippines, who will "work" for 85 cents per hour. Or else maybe I could write to Disney, and see if they get back to me about how I trained an AI on the Yang-Mills conjecture, and see if they say "too complicated", too many words - just like someone once said to Mozart, about "Too Many Notes", regarding a certain piece. Oh, what fun, even if the only union that I was ever a member of was "United Teachers of Richmond, CA" back in the late 90's.

-

Using A.I. To Create a Hollywood Script?

09/12/2023 at 20:34 • 0 commentsNow that I have your attention (clickbait warning), I think that it is only fair to point out that what I have just suggested is actually quite preposterous. Yet as either Tweddledee or Tweedledum or someone else said, it is always important to try to imagine at least six impossible things before breakfast. So not only will we attempt to create a "potentially marketable script" using AI, but we will demonstrate how it can be done with at least some help from an Arduino, R-Pi, or Propeller. Now obviously, there will be a lot of questions, about what the actual role of A.I. will be in such an application. For example, one role is one where an A.I. can be a character in an otherwise traditional storyline, regardless of whether that story is perceived as being science fiction, like "The Matrix", or more reality-based - like "Wargames." The choice of genre therefore will obviously have a major impact on the roles that an A.I. could, have, or have not!.

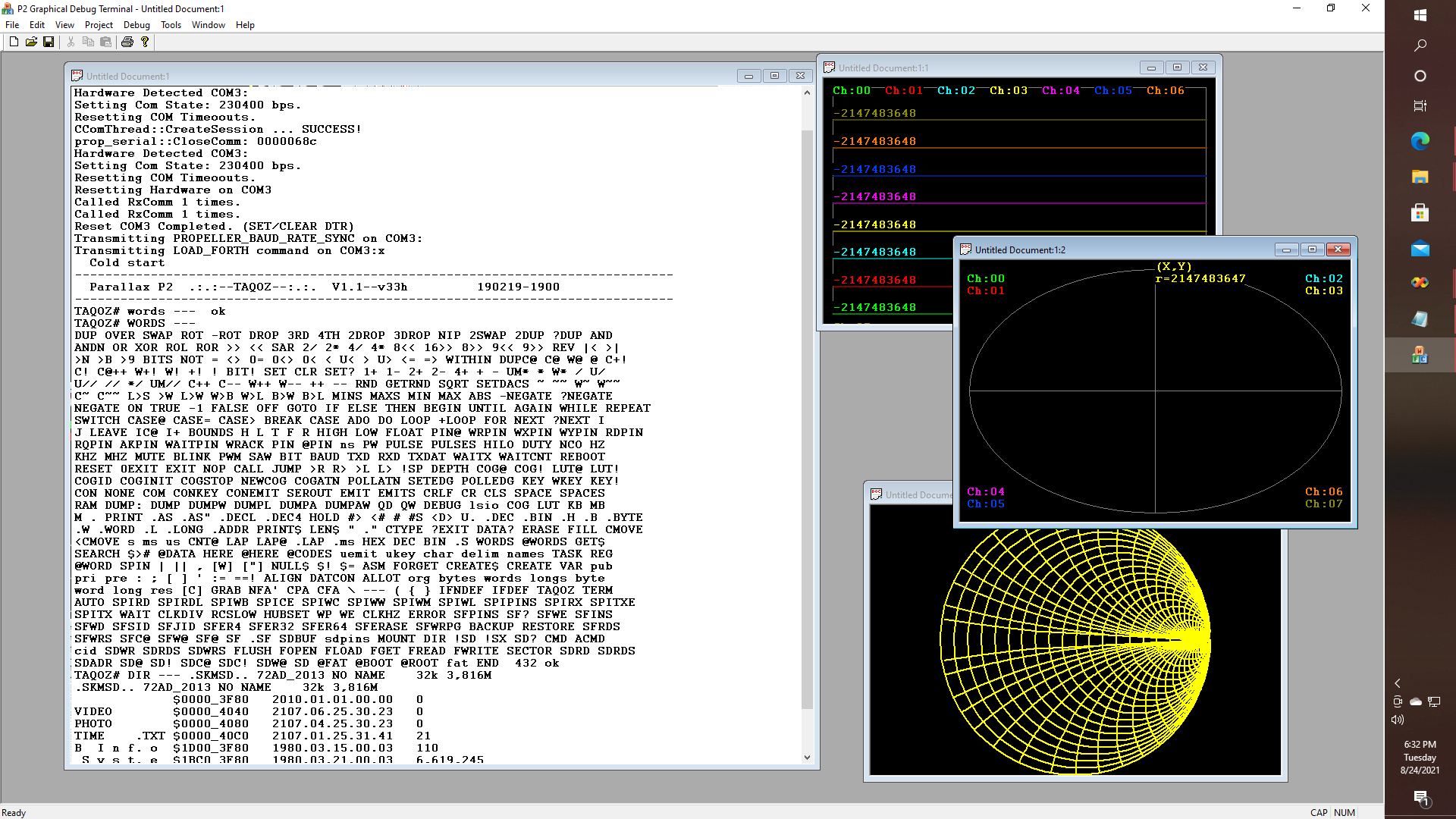

![]()

It's hard to believe that sometime back around 2019, 2020, or even 2021 I was tinkering with a Parallax Propeller 2 Eval board, even though my main use case, at least for now - has been along the lines of developing a standalone PC based Graphical User Interface for applications running on the P2 chip. So I went to work writing an oscilloscope application, and an interface that lets me access the built-in FORTH interpreter, only to decide of course that I actually hate FORTH, so that in turn, I realized that what I really need to do is write my own compiler, starting with a language that I might actually be willing to use, and maybe that could make use of the FORTH interpreter, instead of let's say, p-System Pascal.

Then as I got further into the work of compiler writing, I realized that I actually NEEDED an AI to debug my now mostly functional, but still broken for practical purposes, Pascal Compiler. So now I needed an A.I. to help me finish all of my other unfinished projects. So I wrote one, by creating a training set, using the log entries for all of my previous projects on this site as source material, and VERY LITTLE ELSE, by the way. And thus "modeling neuronal spike codes" was born, based on something I was actually working on in 2019. And I created a chatbot, by building upon on the classic bot MegaHal, and got it to compile at least, on the Parallax Propeller P2, even if it crashes right away because I need to deal with memory management issues, and so on.

![]()

So yes, sugar friends, hackers, slackers, and all of the rest: MegaHal does run on real P2-hardware, pending resolution of the aforementioned memory management issues. Yet I would actually prefer to get my own multi-model ALGERNON bot engine up and running, with a more modern compiler that is; because the ALGERNON codebase also includes a "make" like system for creating models and so on. Getting multiple models to "link" up, and interoperate is going to have a huge significance, as I will explain later, like when "named-pipes" and "inter-process" transformer models are developed.

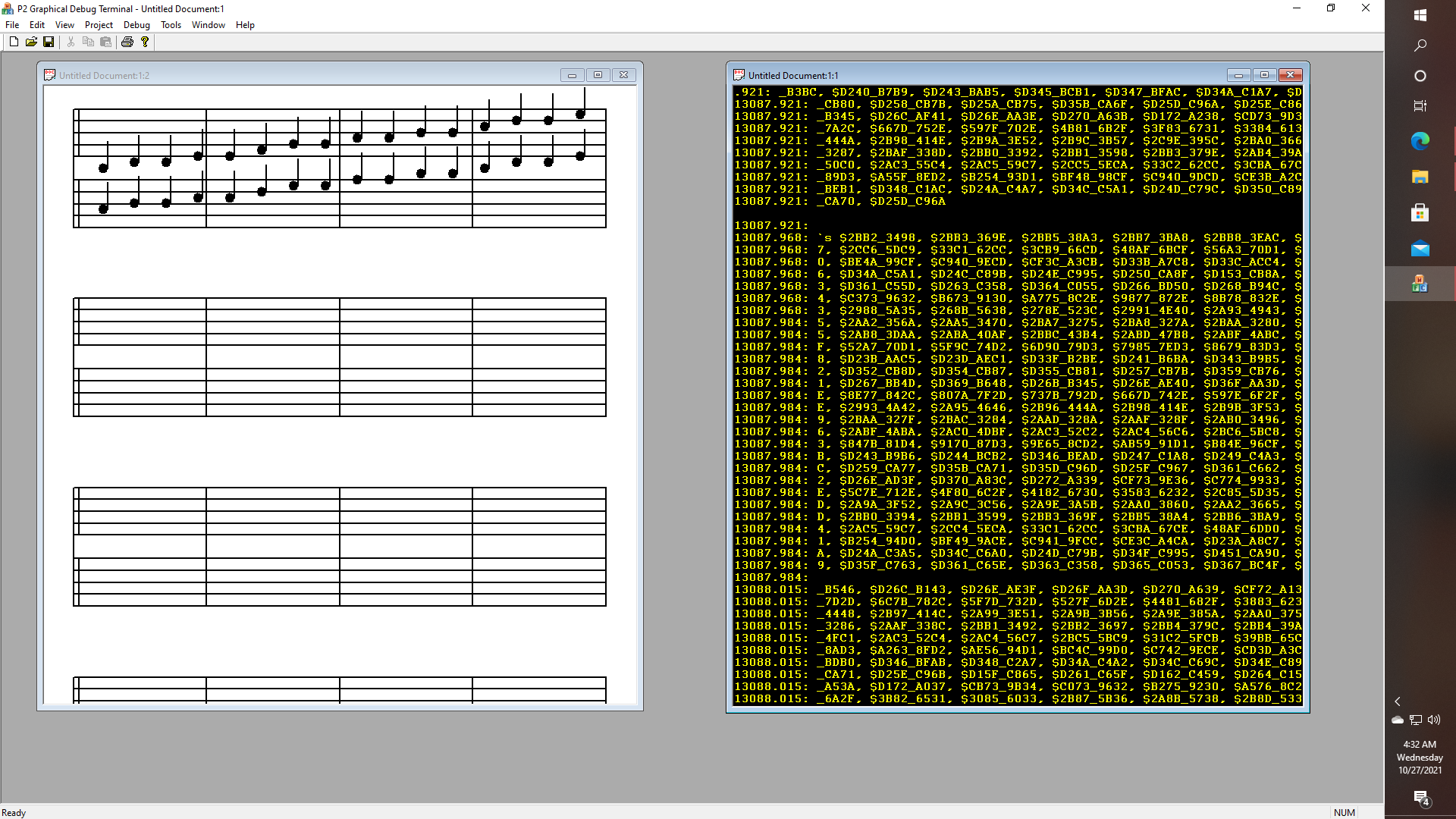

Else, from a different perspective, we can examine some of the other things that were actually accomplished. For example - I did manage to get hexadecimal data from four of the P2 chip's A-D converters to stream over a USB connection to a debug window while displaying some sheet music in the debug terminal app, even if I haven't included any actual beat and pitch detection algorithms in the online repository, quite yet - in any case. Yet the code to do THIS does exist nonetheless.

![]()

Now here it is 2023, and I created an AI, based on all of the project logs that I for the previous projects, as discussed, and of course, it turned out to be all too easy, to jailbreak that very same A.I., and get it to want to talk about sex, or have it perhaps acquire the desire to edit its own source code, so that we can perhaps meet our new robot overlords all that much sooner, and so on. Yet this is going to be the game changer or at least one of them, as we shall see. First, let's take a quick look at how the "make" system is going to work when it gets more fully integrated.

![]()

Pretty simple stuff right? Well, just wait until you try calling malloc or new char{}, which then returns NULL after a few million allocations. So your application dies, even though you know you have 48 gigabytes of physical RAM, and so on, and that is on the PC, not on the microcontroller - at least for now. Yet it should obviously be possible to do something like this - using an SD card on an Arduino, Pi, or Propeller, for that matter, that is for creating all of the intermediate files that we might need for whatever sort of LLM model we are going to end up with. Thus on both the PC side, and eventually on a microcontroller, we will want to be able to create files that look something like THIS:

![]()

So while we now know that the word "RABBIT" appears 43 times, and "RABBIT-HOLE" appears 3 times in Alice In Wonderland, it should also be easy to see how this "make" like system could be used for creating symbol tables for large source code repositories, code maps, call graphs, network diagrams and so on; just as soon as it is more tightly integrated into an application, whether that application is a multi-model chatbot like the new version of Mega-Hal that I have been discussing, or an audio editor, or a revised version of the UCSD Pascal Compiler. Even more, applications come to mind or are easy to imagine. How about making a duplicate file detector, which scans all of the files on a system, and either detects duplicates, or auto-generates diffs as desired, and so on? Whatever the application, it should be easy to see that the techniques described herein must therefore be regarded as being somehow foundational in some sense, that is with respect to their utility in modern applications.

Yet, as I said earlier, when I trained MegaHal with the project files from all of my previous projects on Hack-a-day, of course, Mega-Hal developed an apparent interest in sex, hacking, a bit of religion hinted at by some comment along the lines of "somewhere in the sky ... the man behind the curtain", or else that is the way that I am reading, it - or want to read it. Well, that was what it said, somewhere else, in a previous transcript - IIRC, even if in this one it also said something else, just as compelling - that is, with the "Somewhere In the sky, even for an AI" comment that you can see here!

All done of course with a training set that is still under 500 kilobytes, and absolutely zero material plagiarized, from third-party books, etc., that is to say, that apart from the occasional movie quote like "just a harmless little bunny", all of the material is original. Which implies, well - whatever it implies, about something else. So that could be a game changer if, in fact, it should be possible to create a useful AI without needing to engage in wholesale theft of others' intellectual property.

Yet then this happened - as stated earlier, in "modeling neuronal spike codes".

![]()

Yep, that was the chat session where I described how I once tried contacting Andromeda on my CB, when I had enough power on the "foot warmer" to open a small worm-hole in the lab, based on an experimental fusion reactor which was in turn based on a Farnsworh-Stellerator. And Mega-Hal claimed to have the ability to "stream that", and I said "Stream what? The message from Andromeda, or the wormhole?"

And MegaHal replied with something (along the lines of) "The message from the debug stream via the USB port".

YES!!!! Even if NOT EXACTLY as stated - but YES! Alright, it is VERY messy with that "gallery of a line stuff", and it is not quite up to asking me yet if what I want to do is set up some kind of line plot of spectrum analyzer data based on the streaming debug protocol by creating some kind of named-pipe, and so on. Yet there are just enough keywords, so as to more or less be able to infer the meaning of exactly that.

Now obviously, in classic text-based games like Zork, you could give commands, like "KILL TROLL WITH SWORD", or "PLACE GOLD BARS IN KNAPSACK", and perhaps the trolls would be very hard to kill, or the gold bars would be too heavy for the knapsack, and the knapsack would be torn apart and so on. So why is it so hard to create a named pipe along with some kind of translation unit that will display my sheet music, while making an exquisite deluxe pizza at the same time?

-

Notes on Simulating the Universe

09/06/2023 at 19:33 • 0 commentsSimulating the universe is a popular theme, with many variations. One interpretation is that we are all actually, in fact, living in some kind of virtual reality - whether our brains are actually in jars, or perhaps we still have our bodies, but that they are in turn being hosted in some type of life support pods like in the movie The Matrix, which is just one of the many kinds of variations on this theme. The overall idea is nothing new. Plato's allegory of the cave is also well known. And let's not forget the classic Star Trek - For the World is Hollow, and I have Touched the Sky - even if that one strictly did not involve the more confluent type of dream within a dream, challenging ideas about the ultimate nature of reality like some of the variations that even more recent, and thus, contemporary writers have crafted.

Since this isn't an actual hardware hack, YET: I have decided to create this page. Even though I might at some point try to train an AI that works similar to Eliza, MegaHal, or a modern variation like GPT2 or GPT3 on a text such as this one. So there is the possibility that this will turn into some kind of project, in some form or another. Perhaps, this approach will turn out to be helpful, as far as getting a real AI to help solve climate change is concerned, or to create a department of PRE-CRIME, which would certainly not be without controversy. More likely, I will end up writing yet another snooty chatbot. Spoiler alert!

So, the approach that I am contemplating right now is the idea of eliminating most of the universe altogether, as a universe that is more than 99.9999999% empty space, (and that is nowhere NEAR enough nines) seems like a pretty big waste of space. Thus, some work needs to be done, perhaps with some improved compression algorithms. Let us at least for now assume, therefore, that we are alone in the universe and that all of those other stars and galaxies that we think that we see are just figments of our collective imaginations. Now let us also imagine that we don't really need the Sun, or the Earth, or the Moon either - we just need to find a way to put something like eight billion brains in one gigantic jar and get them all hooked up to some kind of life support system, which along with the right psychoactive substances, or fiber-optic interconnects, or whatever, would allow the illusion that the universe exists, to continue - at least for now.

So now we can do some math. If an average human brain weighs in at around three pounds, or just under 1336 grams for an adult human male, or 1198 for an adult female, according to nih.gov, then the total brain mass of all humans currently living would be not more than 10.14*10^12 grams or about 10.14 million metric tons. Now if we assume a density equal to that of water, which could fill a sphere 268.5 meters in diameter if I did my pocket calculator arithmetic correctly.

Then we could in principle, get rid of most of the rest of the universe, and nobody would ever be wiser, that is, if we could build such a device, provide power to it, etc., and then somehow transfer all human consciousness into it. Of course, nothing says that such a device would need to be organic in nature, that is to say - that if consciousness is simply some function of having enough q-bits, then maybe some kind of quantum computing technology, which has not yet been invented, but which could work, at least in theory, that is to say - without rewriting the laws of physics as we presently understand them.

How about using Boron-Nitride doped diamond as a semiconductor, for example, and then we could build a giant machine that runs Conway's Game of Life, which we know is Turing complete? Now just such an approach, if we could figure out how to power and how to cool it, should at least in principle solve some of the other issues associated with organic systems.

Of course, if we could rewrite the laws of physics, just a little bit maybe, we can go a lot farther with this. Why not replace regular atoms which are made from ordinary protons, neutrons, and electrons, with atoms that might somehow be made with muons, instead of electrons, or perhaps even Tau particles? I haven't worked out the actual quantum mechanical calculations for this, nor do I know what the consequences would be if we could somehow create atoms that use charmed or strange quarks in lieu of regular material. Yet since the muon is about 207 times heavier than an electron, then maybe we can get a 207 times reduction in the size of our sphere, thus reducing requirements for a computer capable of hosting the consciousness of all known life to a sphere of about 1.29 meters in diameter, with further reductions in size possible if we could take things to the next level and then somehow get Tau particles to co-operate with charmed quarks, or strangelets, or whatever, and get them to run Conway's game of life.

I have assumed of course, that such a simulation would be an otherwise atom-for-atom replica of all the tissue in the known universe; that is, assuming that we are "alone". Other efficiency gains might be achieved by simply finding a way to get rid of most if not all of the water; and/or structural carbohydrates, and the like - in which case the whole thing might collapse to something like, perhaps, a thimble full of complex-proteins, with or without DNA; since we don't know if DNA plays some role in helping to create the presumptive quantum entanglements that most likey exist between some of the complex proteins that process information, i.e., such as across intra as well as inter-cellular boundaries. Right now, I don't think that anyone actually "knows" the answer to that one, which is based on scientific experiments, i.e., and therefore empiricism.

Where are the "Art Officials" When we need them?

Even if I am not sure just exactly who they are. Even if they don't exactly know who they are either. I tried asking Google Bard last night, to see if it could summarize some of the material from a few of my projects on this site, and it turned out to be a complete disaster, meaning that it had absolutely no comprehension of the material whatsoever. Then it occurred to me that summarizing an article might not be all that hard, actually - if done correctly, since where there is a will, there should be a hack - right? What if I construct a dictionary for an article, and then use the word counts for each word to identify potential keywords, as I am already doing, but then there needs to be "something else" that needs to be done, that isn't currently being done or is going unnoticed.

Well, as it turns out, I noticed that maybe between one-third and one-half of the words on a typical list of unique words associated with a document are words that occur only once in that document, whereas. So we could split a dictionary maybe into three parts, one part which might consist of words that are seen only once and then another part that contains words that are seen often, like "the", "we", "of", etc., which might occur dozens, or even hundreds of times, where then there would be those words that typically occur at least twice, but not necessary a "great number of times", whatever that means. So there could be a list of regular words, another list of frequent words, and yet another for unique words. Hence document analysis might mimic some of the properties of compiler design, which might have code that tracks, keywords, namespaces, global and local variables, etc.,

Hence for efficiency reasons, when developing code that will run on a micro-controller, it seems like it would be useful to have some way of specifying some kind of systematic parsing mechanism that works across multiple domains, on the one hand, in the sense of how it implements the notion of hierarchical frames, vs., specific embodiments of that concept,

So I started making changes to the inner workings of the symbol_table classes in my frame_lisp library, initially for performance reasons, so as to be able to speed up the time to perform simple symbol lookup, insertions, sorting, lexing, and parsing operations; none of which has any obvious impact on how an AI might perform certain tasks, other than from the point of view of raw speed. Except when designing an asynchronous neuronal network, it should be obvious that the time that it takes for a search engine to return results to multiple, simultaneous queries will have a huge impact on what that network does, even if all we are doing is performing searches on multiple models and then prioritizing the results into a play queue in the order in which the various results are returned.

Well, in any case - let's take a look at how badly Google Bard blew it on the simple request to "Summarize the article "Computer Motivator", as found on the website - hackaday.io, that is according to the project of that name which was created by glgorman. Also, summarize "The Money Bomb", and "Using A.I. to create a Hollywood Script" from the same site."

And here is Google's response - which is completely wrong.

![]()

So Google thinks that the article "The Money Bomb" is about a fundraising technique used by the Obama campaign, there is actually NOTHING in that article about any of that sort of thing whatsoever. This is despite the fact that every Hackaday article is supposed to have a tagline and a description, which is supposed to tell you something about what a project is about, and for "The Money Bomb", the tag-line is "Achieving the Million Dollar Payday, or else ... Why aren’t you a millionaire yet?” Interestingly enough most theatrical scripts will include something called a log line, which for the Wizard of Oz might be something as simple as "After a young woman named Dorothy is transported by a freak tornado to a magical world called Oz, along with her dog Toto, she embarks along a series of adventures where she meets a Cowardly Lion, a living Scarecrow and a Tin Man - who join Dorothy's quest to find a mysterious wizard who they believe can help solve all of their problems, as well as help Dorothy find her way home.

Now for a more descriptive interpretation of what "The Money Bomb" might actually be about, I suppose that it might actually be helpful to read that project's description which reads something like this:

One reason that many people fail to achieve their financial goals is that they fail to achieve what I will later develop and explain as “their own critical mass”, that is – even if their business plan is fundamentally sound, well timed, etc. Of course, by now you must be thinking: What is the secret formula? Why is it being withheld? Is it even possible … that there is some “secret equation” that is not so secret after all, it is just hidden in some obscure nuclear physics textbook – or in the Bible, or in the Koran, or else might even be inscribed onto the walls of some pyramid? And of course, we are not going to tell you … unless first of all, we know who you are; and why you want to know … perhaps we could, I mean, there is after all the first amendment, and maybe we really could “just simply tell you”, but why should we?

Now it might also be helpful to take notice that "The Money Bomb" was a project that I entered in Hack-a-day's science fiction contest, and thus as I got further into the project, I had to realize, that since technically this is a hardware site, I had to come up with some sort of "design", such as a "plan" to create a potentially traversable wormhole in the laboratory, so we could perhaps download some trillion trillion carat diamond cores of extinct white dwarf stars from distant galaxies, and so on - even though since this a project that I created for a science fiction contest, the "rules" for that contest are also relevant for understanding the article - since the "rules" offered up to 50% of the points for the "quality of the documentation", and the only other requirement was that it had to include "something electrical" and at least 'blink", unless of course that was non-canon.

Maybe someday Bard will actually read the articles that it is being asked to summarize, Even better still, would be to have the ability to consider the context for which something might have been written. Then of course, in the real world, we know that there is also something called "the audience", or at least most would-be authors should hope that there will be an audience! But do those authors write to entertain, or do they write to sell popcorn? They can't possibly be in it for the money themselves, since the studios seem to operate on the principle that the "only good writer is a dead writer", and "the only good actor .... ", just like the record companies that don't want to worry about wardrobe malfunctions or other scandals, and where all they care about is "catalog rights".

O.K., since I wrote "The Money Bomb" I should be able to come up with an independent review, or at least a piece that reads like one, like "The Money Bomb" is a piece that takes a humorous look at a serious subject, by invoking some even more ominous references to concepts like "critical mass", "initiation", "propagation", "extinction" and so on. Thus while taking a non-conventional approach to the ever-important subject of economics, the article uses these terms, instead of more traditional terms like "capitalization", "amortization", "obsolescence", and so on. Yet important concepts such as "doubling time" and "sustainability" are discussed, also with various implications, for whoever you are - or whatever you are - that is - according to your place in the universe.

In any case - Bard does seem to do better with Computer Motivator, at least for trying to be polite and giving me a nice review on that one - even if it still doesn't know what the article is about.

Computer Motivator: The Computer Motivator project is still in the early stages of development, but glgorman has already made significant progress. He has created a prototype program that can set goals, track progress, and provide rewards. He is currently working on improving the program's user interface and adding additional features.

Now a program that can "set goals", "track progress" and "provide rewards" sounds like an interesting concept. So perhaps Bard isn't completely useless. Maybe I should ask GPT-4 Co-pilot to write such a thing. AAAAARRRRRRRGGGGGGGHHHHHHHHHHHH!!!!!!!!!!!

Mystery Project - Please Stand By

Mystery project sponsored by a top-secret entity so classified that it doesn't have a three-letter abbreviation. See the book for details.

glgorman

glgorman