-

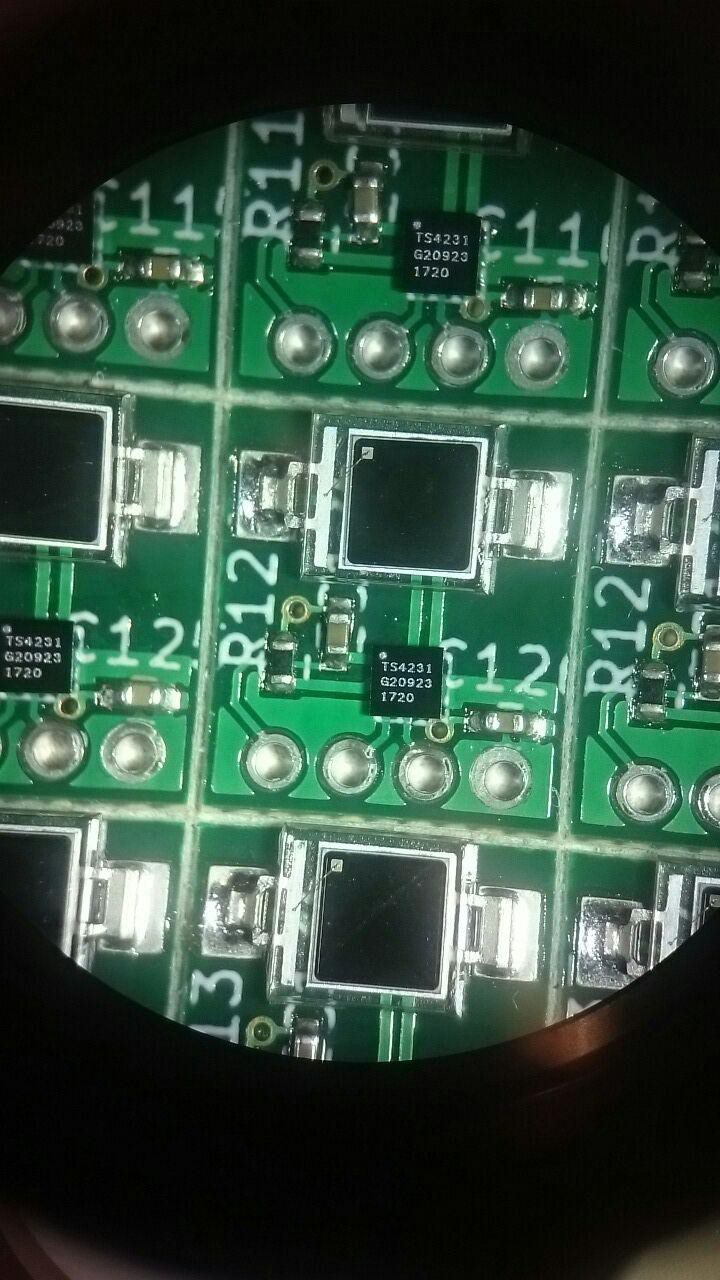

TS4231

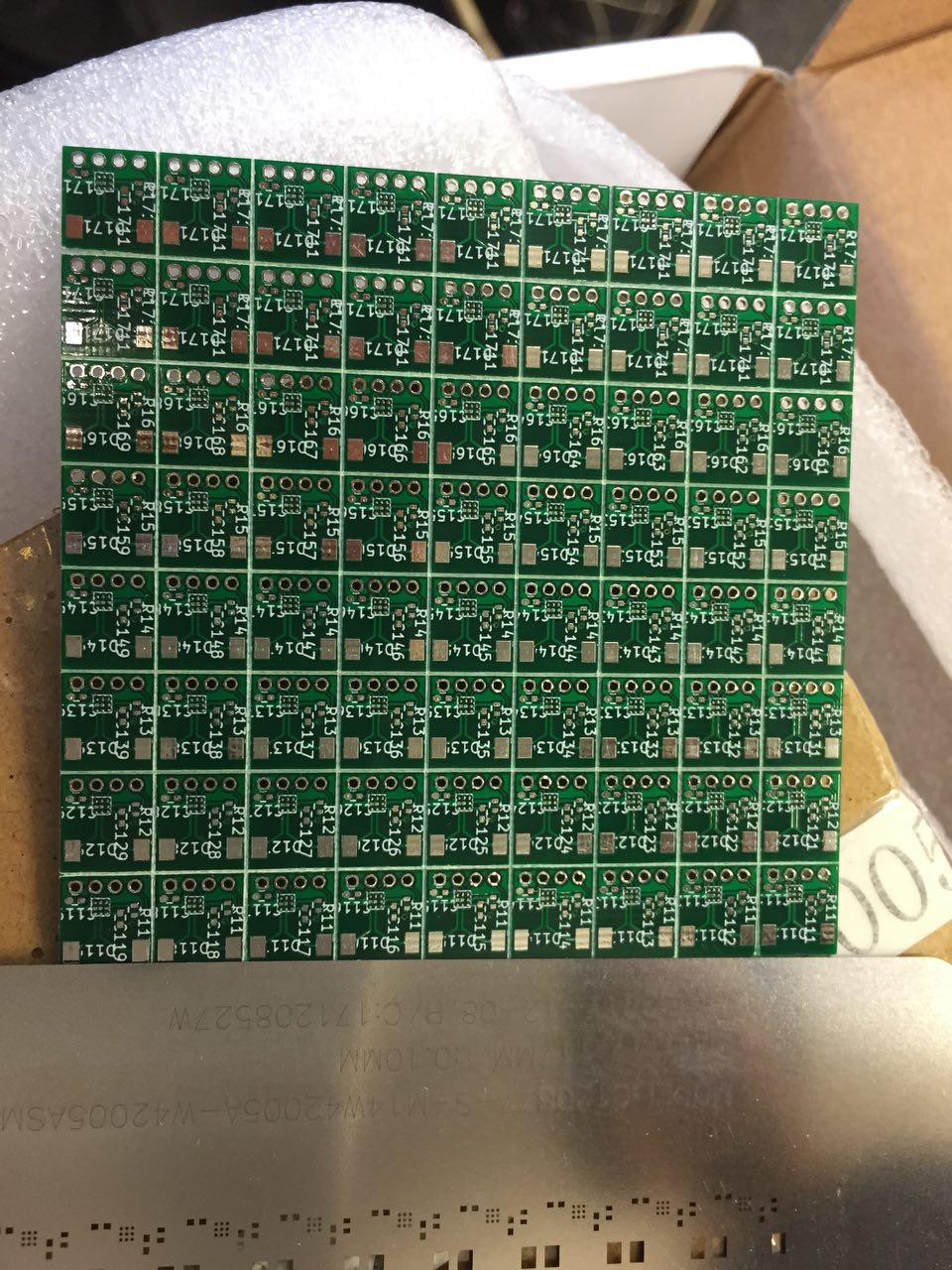

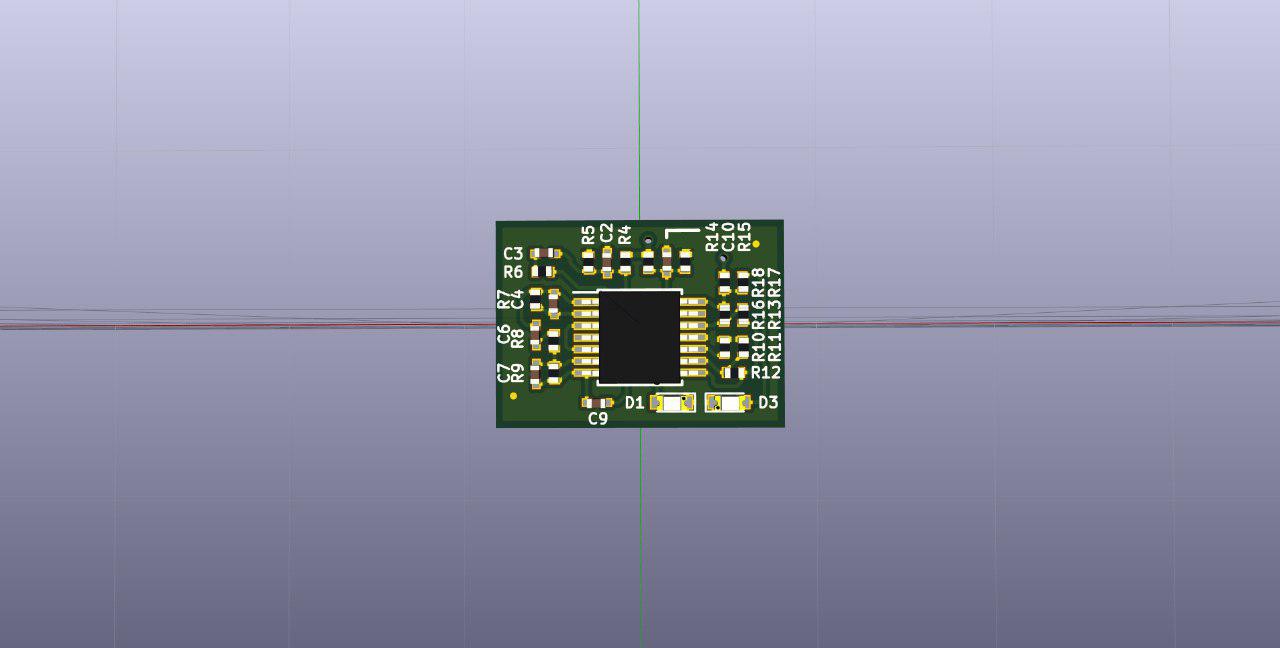

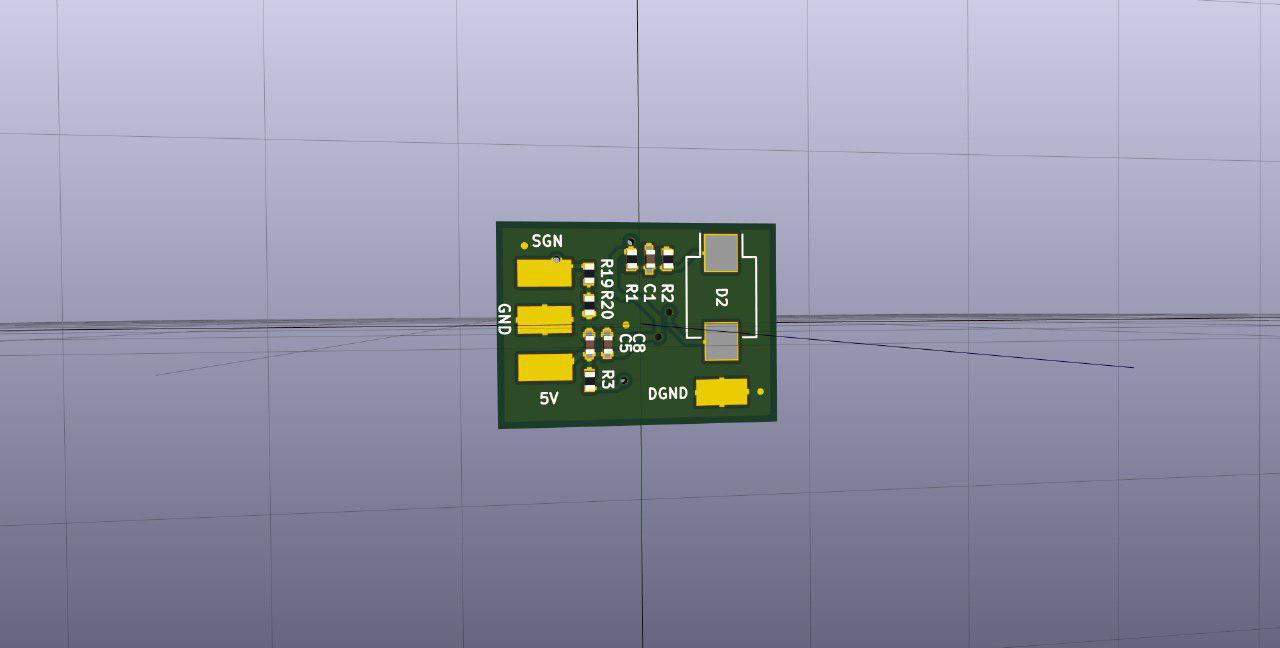

05/14/2018 at 23:18 • 0 comments

We have now moved to the much more integrated TS4231 from Triad. The reasons are size, price and assembly time. While Luis' chip works like a charm, assembling the two-sided PCB with 30+ components has been very annoying. The TS4231 only requires two capacitors, a resistor and the photodiode. We assembled 63 of the new sensors in approx. 2 hours. You can find our KiCad layout here.

![]()

The sensors are cheaper now as well, about 1.50 euro per sensor. If you make more, it will get even cheaper.

The new sensor is approx. 10x9 mm, so it is smaller as well. If you are crazy enough to go for double-sided assembly, you might be able to halve that size.

One downside of the TS4231 is that it needs to be configured via its two wires. On power-up it goes into a state where the envelope signal is output on the data line (which caused major confusion when we started using them). It does that for about a minute or so, then goes to SLEEP. We have written a small Verilog module called ts4231.v that automatically configures a connected sensor to go to the WATCH state, whatever state it is currently in. This module essentially does the same as the Arduino sample uploaded by Triad. I don't know why Triad decided to design the sensors like this. It would have been cool if the sensor just output the envelope signal on a single line without going to the SLEEP state. But the way it is designed, you will definitely need two wires for each sensor. Which sucks of course, but I guess that's the price for this pretty little fellow, which also gives you the modulated infrared signal on the data line (lighthouse v2, we are coming).

So far, we are very happy with the new sensors. They seem to be a lot more robust against light at shallow angles of incidence and in general less noisy than Luis' design.

Currently, we are designing tracking sleeves with an integrated fpga+esp8266 solution, so stay tuned...

-

Master's thesis

03/16/2018 at 14:09 • 0 comments

So I finished my thesis yesterday. It covers the motor control and communication infrastructure of Roboy 2.0, but also describes in more detail how our custom lighthouse tracking works. I directly compared our system with the HTC Vive by mounting a Vive controller onto our calibration object. We are pretty close and it will get even better for sure.

You can find the thesis in the files section.

-

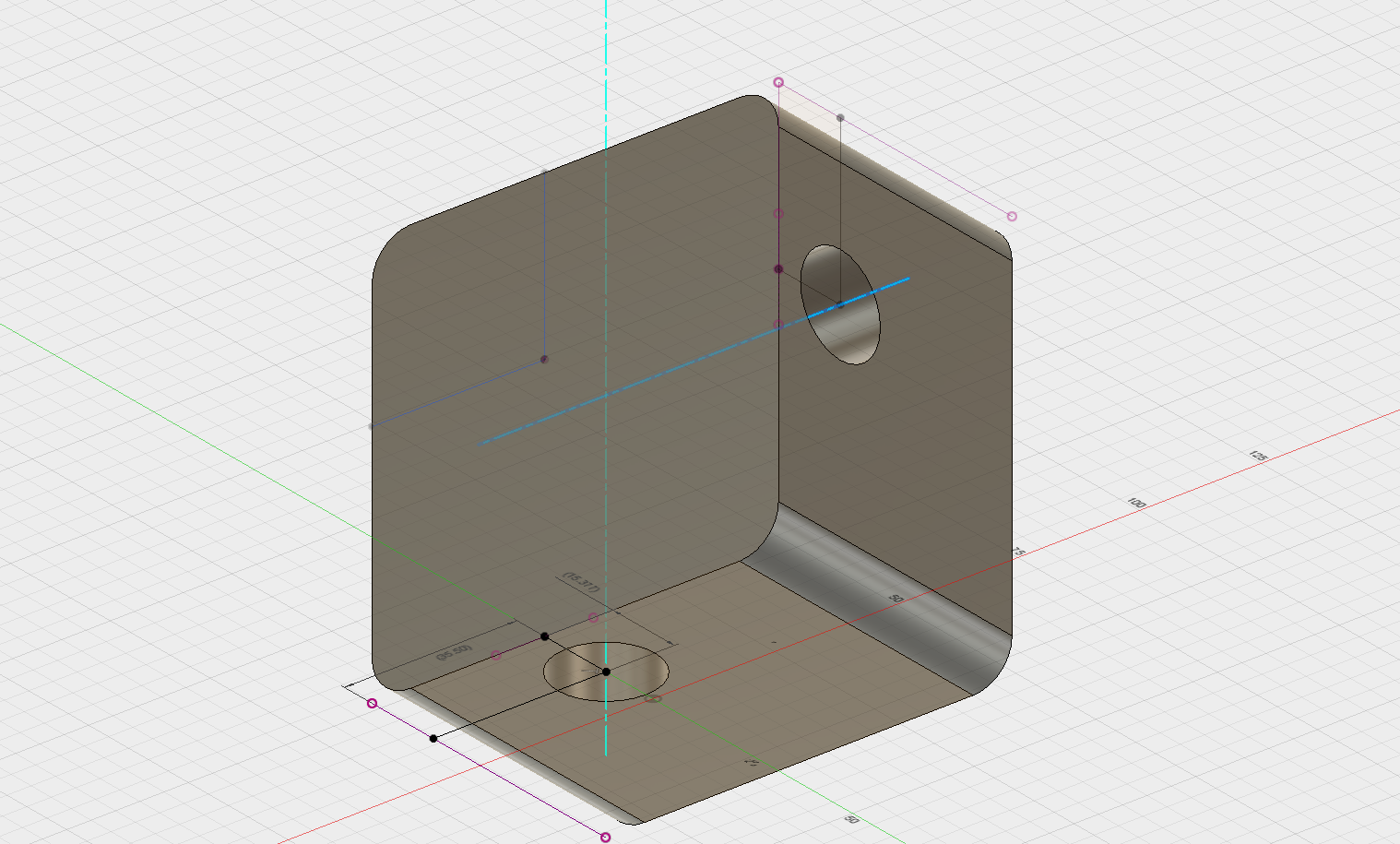

lighthouse coordinate frame

01/11/2018 at 22:02 • 0 comments

Opening the lighthouse reveals the motor rotation axis

![]()

![side dimensions]()

![bottom dimensions]()

![front dimensions]()

We have to take this into account for the calibration...

-

in your gibbous phase

01/10/2018 at 23:55 • 2 comments

The sensors are probably held up in customs...

So far, we haven't seen anyone actually using the mysterious factory calibration values. It might be very handy to just use the ootx values, but in the end, calibrating your 'camera' is something one has to do all the time. Unfortunately, there is no nice opencv example for the lighthouses.

The plan is to calibrate them with our own correction function (might be close to the original or not, but should do the job).

The function we are using is

    elevation += phase[lighthouse][VERTICAL];
    elevation += curve[lighthouse][VERTICAL]*pow(sin(elevation)*cos(azimuth),2.0) + gibmag[lighthouse][VERTICAL]*cos(elevation+gibphase[lighthouse][VERTICAL]);
    azimuth += phase[lighthouse][HORIZONTAL];
    azimuth += curve[lighthouse][HORIZONTAL]*pow(cos(elevation),2.0) + gibmag[lighthouse][HORIZONTAL]*cos(azimuth+gibphase[lighthouse][HORIZONTAL]);

The phase is a constant angle offset. The curve scales a quadratic function that curves the lighthouse plane. The gibbous magnitude and phase describe a distortion with respect to the motor angle.

We are using a least squares optimizer that minimizes the error between the corrected measured angles and a ground truth over the above parameters.
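To make the optimization target concrete, here is a minimal Python sketch of the objective such an optimizer would minimize. It mirrors the correction function quoted above for a single lighthouse. The names `correct`, `sum_squared_error` and the parameter dictionary layout are hypothetical, and the choice of optimizer is left open.

```python
import math

VERTICAL, HORIZONTAL = 0, 1  # hypothetical axis indices

# Parameter set with no correction at all (useful as an optimizer start value).
ZERO = {k: [0.0, 0.0] for k in ("phase", "curve", "gibmag", "gibphase")}


def correct(elevation, azimuth, p):
    """Apply the correction function from the text to one measured angle pair.

    p maps 'phase', 'curve', 'gibmag' and 'gibphase' to [vertical, horizontal].
    """
    elevation += p["phase"][VERTICAL]
    elevation += (p["curve"][VERTICAL] * (math.sin(elevation) * math.cos(azimuth)) ** 2
                  + p["gibmag"][VERTICAL] * math.cos(elevation + p["gibphase"][VERTICAL]))
    azimuth += p["phase"][HORIZONTAL]
    azimuth += (p["curve"][HORIZONTAL] * math.cos(elevation) ** 2
                + p["gibmag"][HORIZONTAL] * math.cos(azimuth + p["gibphase"][HORIZONTAL]))
    return elevation, azimuth


def sum_squared_error(p, samples):
    """Least squares cost over samples of ((meas_el, meas_az), (true_el, true_az))."""
    total = 0.0
    for (m_el, m_az), (t_el, t_az) in samples:
        c_el, c_az = correct(m_el, m_az, p)
        total += (c_el - t_el) ** 2 + (c_az - t_az) ** 2
    return total
```

A generic minimizer would then search for the parameter set `p` with the smallest `sum_squared_error(p, samples)`, starting for example from `ZERO`.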

The result looks good...in simulation ;P

Now we gotta build a real life calibration platform with known angles...

UPDATE:

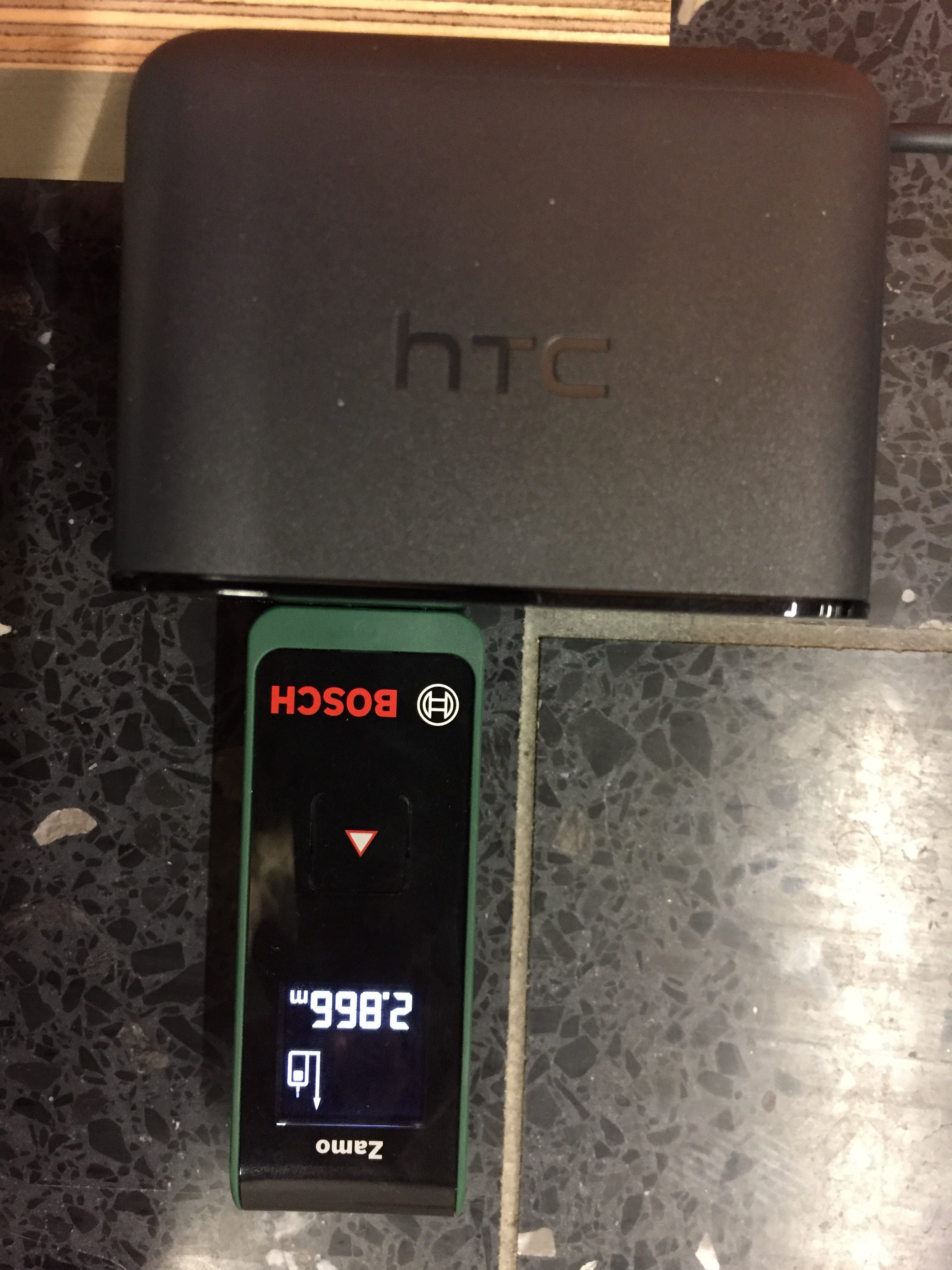

We decided to laser cut a plate, such that the photodiodes are at known distances.

![]()

Using the seams between the tiles and a laser distance measurer, we tried to place the board as precisely as possible with respect to the lighthouses. We calibrated each lighthouse individually using the minimizer mentioned above. However, we adapted the optical model to be closer to reality. Instead of using spherical coordinates (i.e. a pinhole camera), each motor is modelled with its own offset and as sweeping a plane. This is important because with the pinhole model any estimated curve parameter would be wrong for sure. So far we have left tilt aside.
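The difference to the pinhole model can be sketched in a few lines of Python: each rotor defines a plane through its own origin, and a sensor hit by both sweeps lies on the line where the two planes intersect. Everything about the frame is an assumption made for illustration (z pointing out of the lighthouse, horizontal rotor spinning about the y axis, vertical rotor about the x axis, angles measured from the optical axis), and `sweep_line`, `origin_h` and `origin_v` are hypothetical names.

```python
import math


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def sweep_line(azimuth, elevation, origin_h, origin_v):
    """Return (point, direction) of the line where both sweep planes meet.

    origin_h / origin_v are the assumed rotor positions in the lighthouse frame.
    """
    # Normal of the plane swept by the horizontal rotor (contains the y axis).
    n_h = (math.cos(azimuth), 0.0, -math.sin(azimuth))
    # Normal of the plane swept by the vertical rotor (contains the x axis).
    n_v = (0.0, -math.cos(elevation), math.sin(elevation))
    u = cross(n_v, n_h)            # line direction, +z for small angles
    d_h = dot(n_h, origin_h)       # plane equations: n . p = d
    d_v = dot(n_v, origin_v)
    a = cross(n_h, u)
    b = cross(u, n_v)
    uu = dot(u, u)
    point = tuple((d_v * a[i] + d_h * b[i]) / uu for i in range(3))
    return point, u
```

With both origins set to zero this reduces to a single ray through the lighthouse center, which is the pinhole case. Non-zero offsets shift the line, which is the effect the adapted model is meant to capture.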

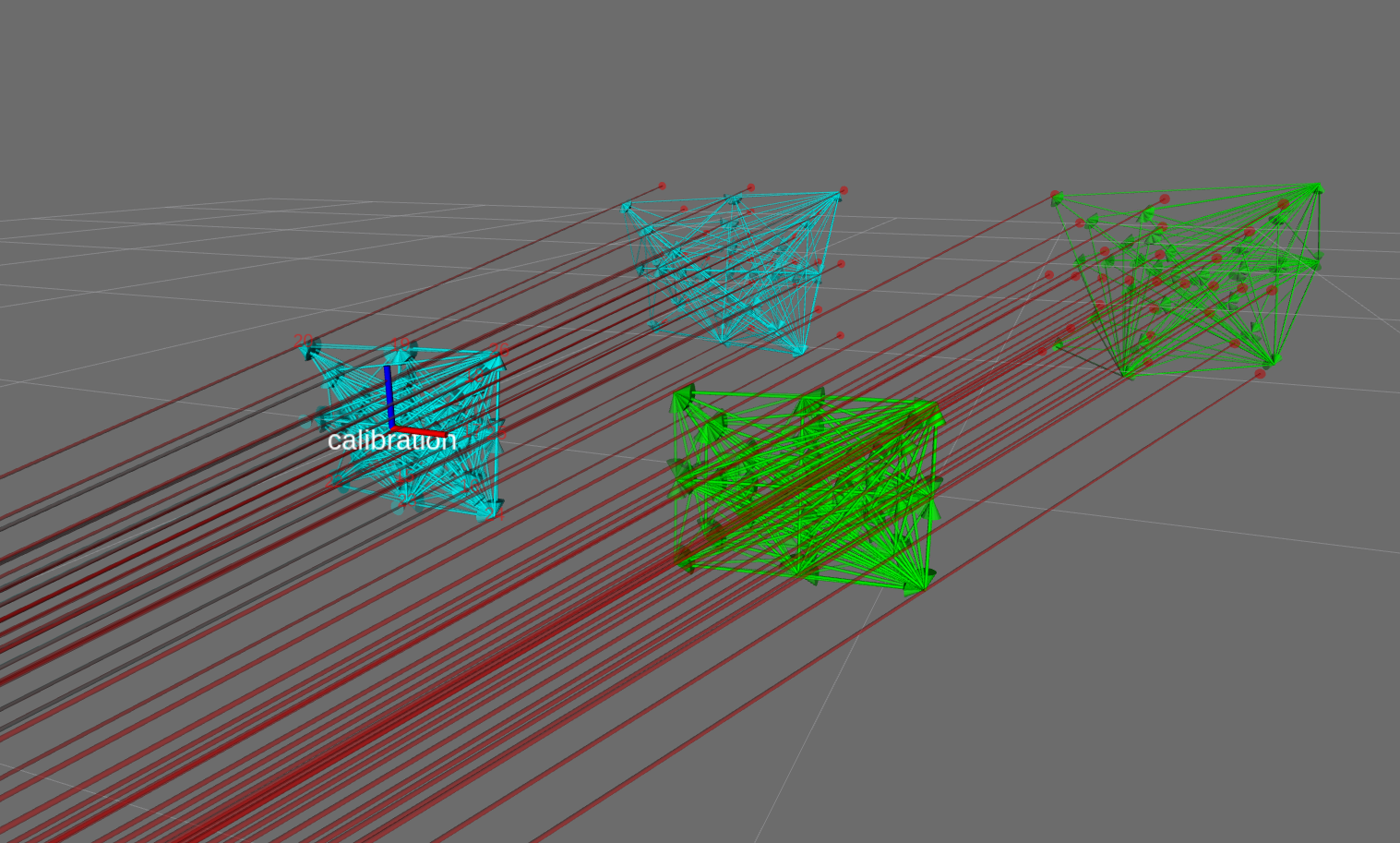

After the calibration the relative pose estimator also finally started to produce reasonable results

![]()

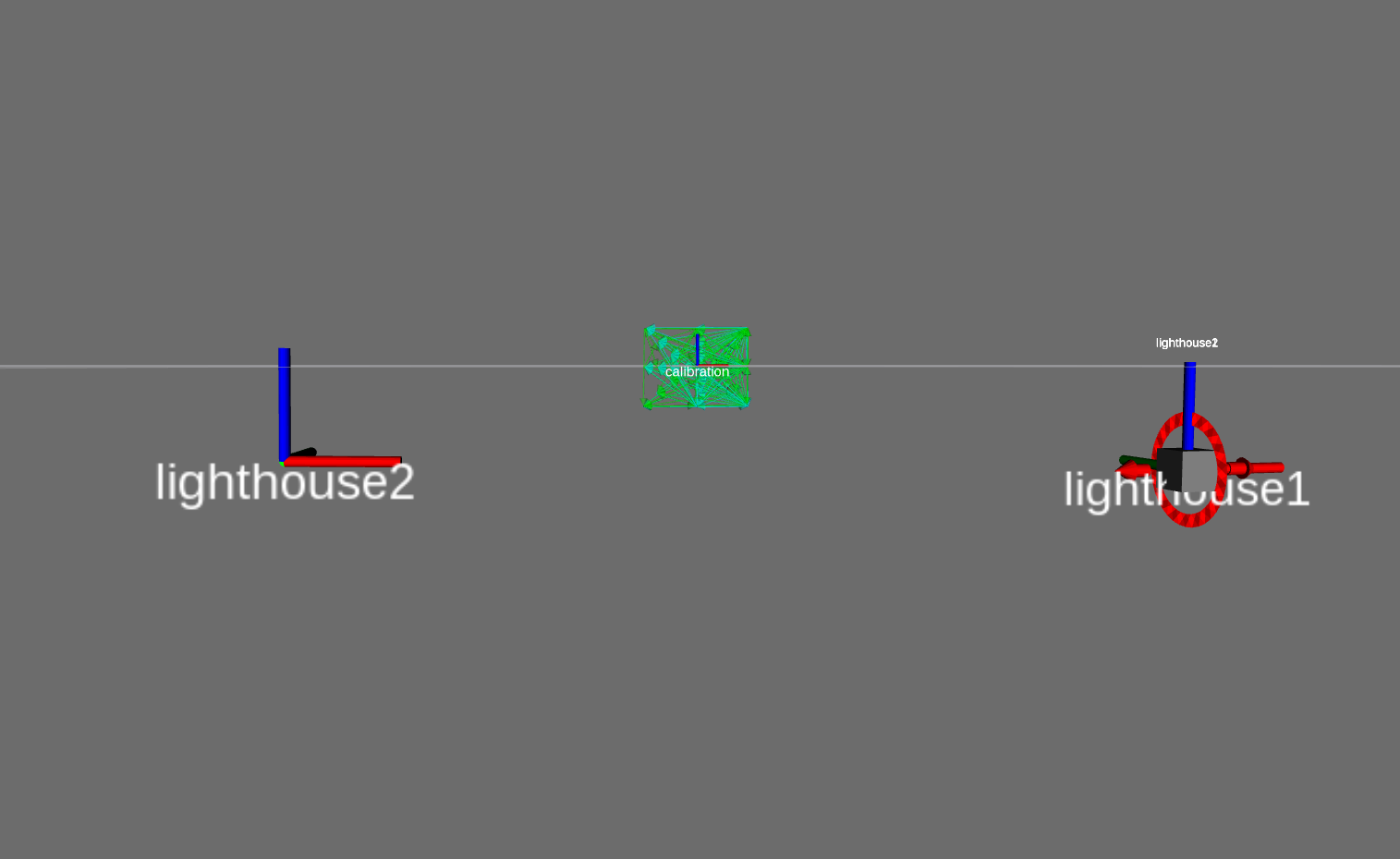

Which in turn results in a relative lighthouse pose correction that is not so bad

![]()

The pose estimator for the calibration object produces ok results too.

This truly feels like some light at the end of the tunnel. The pose estimation has never worked properly with real sensors. So the calibration seems to be crucial for any tracking application. The calibration is rather annoying, since it highly depends on how accurately you are able to place the calibration object relative to the lighthouse. One of the institutes at TUM has a Vicon system. So the next step might be to use that to get a lot of data and very accurate calibration values. With a lot of data, it might also be possible to figure out which combination of sin/cos/+/-, and in which order, the original HTC factory values are used with.

-

Pushing simulation while waiting for sensors

01/02/2018 at 01:00 • 0 comments

So now we are at a stage where we want object pose estimation from our sensor data. This is kinda problematic, because the lighthouses are not perfect. They are optical systems with certain manufacturing tolerances. There is a reddit discussion concerning the factory calibration values and their meaning. This video explains the values to some extent, while this video describes some of the strategies to cope with those values.

First off, we had to implement an ootx decoder in the fpga that continuously decodes the frames from both lighthouses. That was fun, and the decoded values are sent via a ROS message to our host.

So far, we have been using exclusively our own custom sensors, and are pretty confident that those produce signals comparable to the original HTC ones. But you never know until you try. Also, Valve has announced their second generation of the lighthouses, which will do something called sync-on-beam. We are not sure what exactly that is, but we strongly believe that it is related to a modulation of the MHz infrared light to convey information while the beam sweeps each sensor. This would allow for an almost arbitrary number of lighthouses. Our sensors are not able to decode such a signal. Triad's second generation sensor amplifier, the TS4231, is. So we ordered those and had the pcbs manufactured.
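The first job of any ootx decoder is to find the start of a frame in the continuous bit stream. The sketch below is a host-side Python illustration only, not the fpga implementation. It assumes the frame layout documented by the community reverse engineering effort (a preamble of 17 zero bits followed by a single one bit), and `find_ootx_preamble` is a hypothetical name.

```python
def find_ootx_preamble(bits):
    """Return the index of the first bit after the preamble, or -1 if none.

    bits is any iterable of 0/1 values, one per lighthouse sync pulse.
    Assumed preamble: at least 17 zero bits followed by a one bit.
    """
    zeros = 0
    for i, bit in enumerate(bits):
        if bit == 0:
            zeros += 1
        else:
            if zeros >= 17:
                return i + 1   # frame content starts right after the one bit
            zeros = 0
    return -1
```

For example, `find_ootx_preamble([1, 0, 1] + [0] * 17 + [1, 1, 0])` skips the three leading bits and the preamble and points at the first bit after it.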

![]()

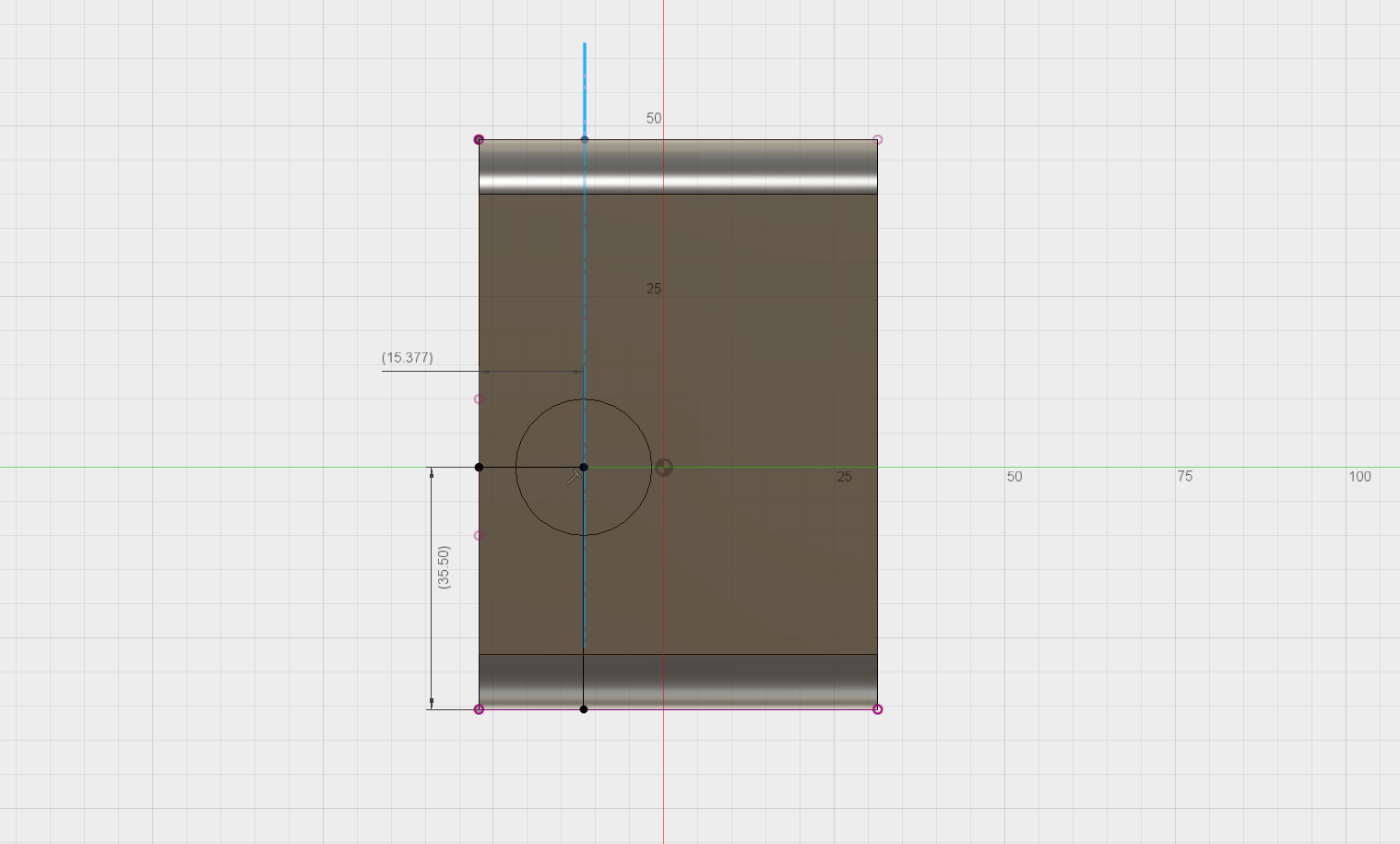

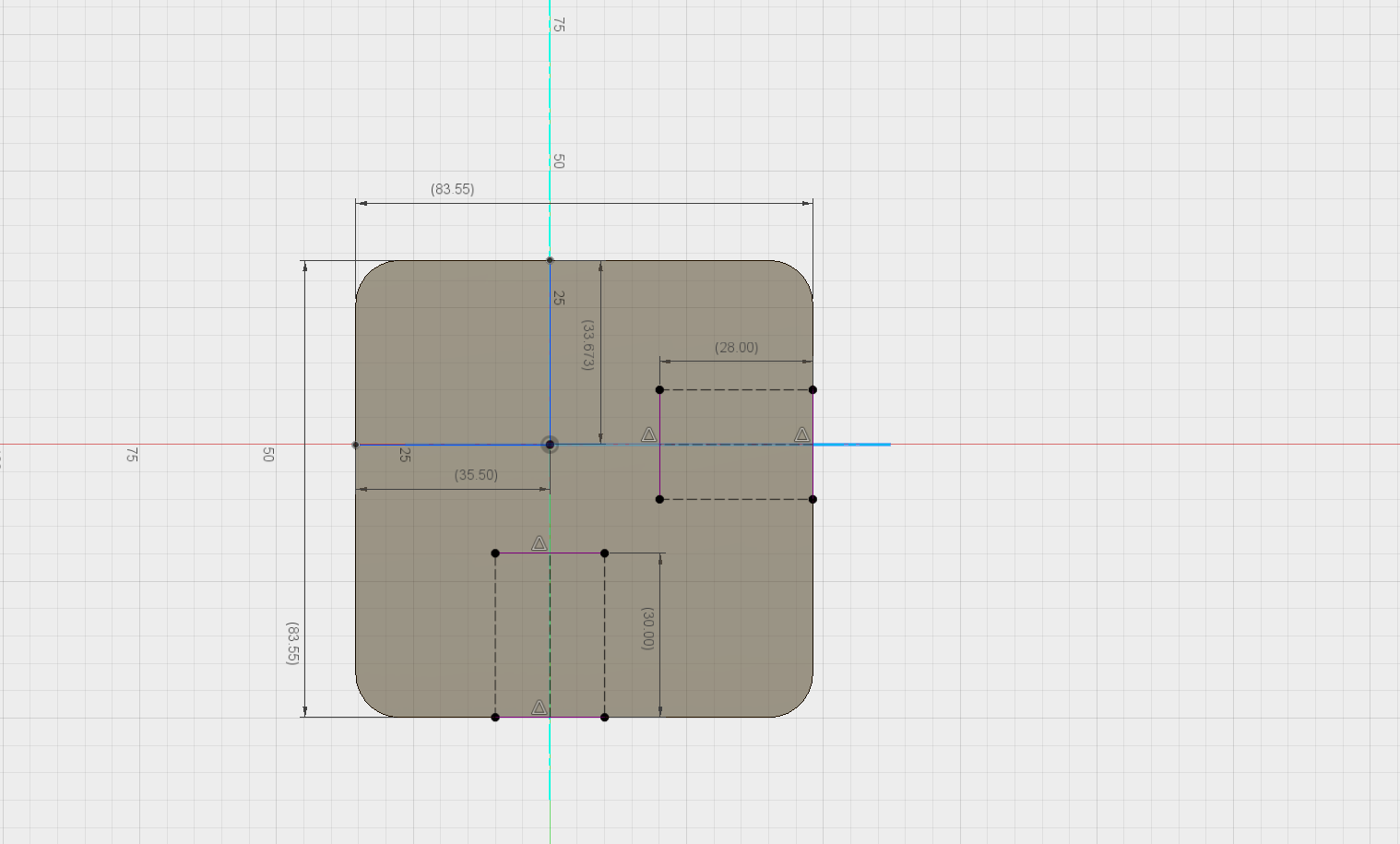

Over the holidays our pick-and-place machine is resting. Which is boring of course, but we decided to push simulation further and extend the overall system to be able to track multiple objects. We are using Autodesk's Fusion 360 for CAD design, because it is free for students and it has an extensive Python API. The API allows you to write your own plugins for Fusion. We are working on tendon robots, so those plugins come in very handy, for example when defining the attachment points of the tendons.

So the first thing we did, was to implement a plugin for the sensor positions on our robots. When you run the plugin, it lets you choose for which link you want to define the sensor positions and then you simply click on your model where you want those sensors. I might upload a video later. You can find the plugin here: https://github.com/Roboy/DarkRoomGenerator

Another plugin we have written is an SDF exporter. It lets you export your robot from Fusion to SDF. Then you can load that model into the Gazebo simulator. This plugin can also export the lighthouse sensor positions. These can then be loaded by our system and used for pose estimation. You can find the plugin here: https://github.com/Roboy/SDFusion

In the following video you can see a simplified model of the upper body of Roboy 2.0. The green spheres are the simulated sensor positions. Currently our pose estimator tries to match the relative sensor positions (as exported from Fusion) to the triangulated 3D positions. The resulting pose is quite ok, although it fails for some orientations. This could be because of ambiguity, but needs further investigation. (EDIT: the pose estimator works fine, it was just my crappy pc that couldn't keep up with the computation. This caused the simulated sensor values to be updated asynchronously.)

Because the visual tracking alone is not good enough, we extended our system to also include IMU data. This is done via the extended Kalman filter of the ROS robot_localization package. So now, everything is prepared for the real data. Once the new sensors are assembled, we will try to figure out how to use those factory calibration values to get good lighthouse angles.
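For readers wondering where the triangulated 3D positions come from: with two lighthouses, each sensor is seen along one ray per lighthouse, and the position can be estimated as the midpoint of the shortest segment between the two rays. The following Python sketch shows that midpoint method. It is an illustration under that assumption, not necessarily the method used in this project, and `triangulate` is a hypothetical name.

```python
def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def triangulate(p1, d1, p2, d2):
    """Midpoint of the closest approach of rays p1 + s*d1 and p2 + t*d2.

    Returns None if the rays are (nearly) parallel.
    """
    w = tuple(p1[i] - p2[i] for i in range(3))
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        return None
    s = (b * e - c * d) / denom   # parameter along the first ray
    t = (a * e - b * d) / denom   # parameter along the second ray
    q1 = tuple(p1[i] + s * d1[i] for i in range(3))
    q2 = tuple(p2[i] + t * d2[i] for i in range(3))
    return tuple((q1[i] + q2[i]) / 2.0 for i in range(3))
```

With noisy angles the two rays no longer intersect, and the distance between `q1` and `q2` gives a rough quality measure for the triangulated point.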

-

custom sensors

09/01/2017 at 22:58 • 0 comments

The circuit of the original HTC sensor is out there. You can also buy those sensors from the US. We decided to build our own sensor anyway. As it turned out, our sensor has a pretty cool property. More on that later...

The HTC sensor works by measuring the envelope signal of modulated light pulses. Luis' sensor is based on cascaded amplification stages and signal conditioning filters.

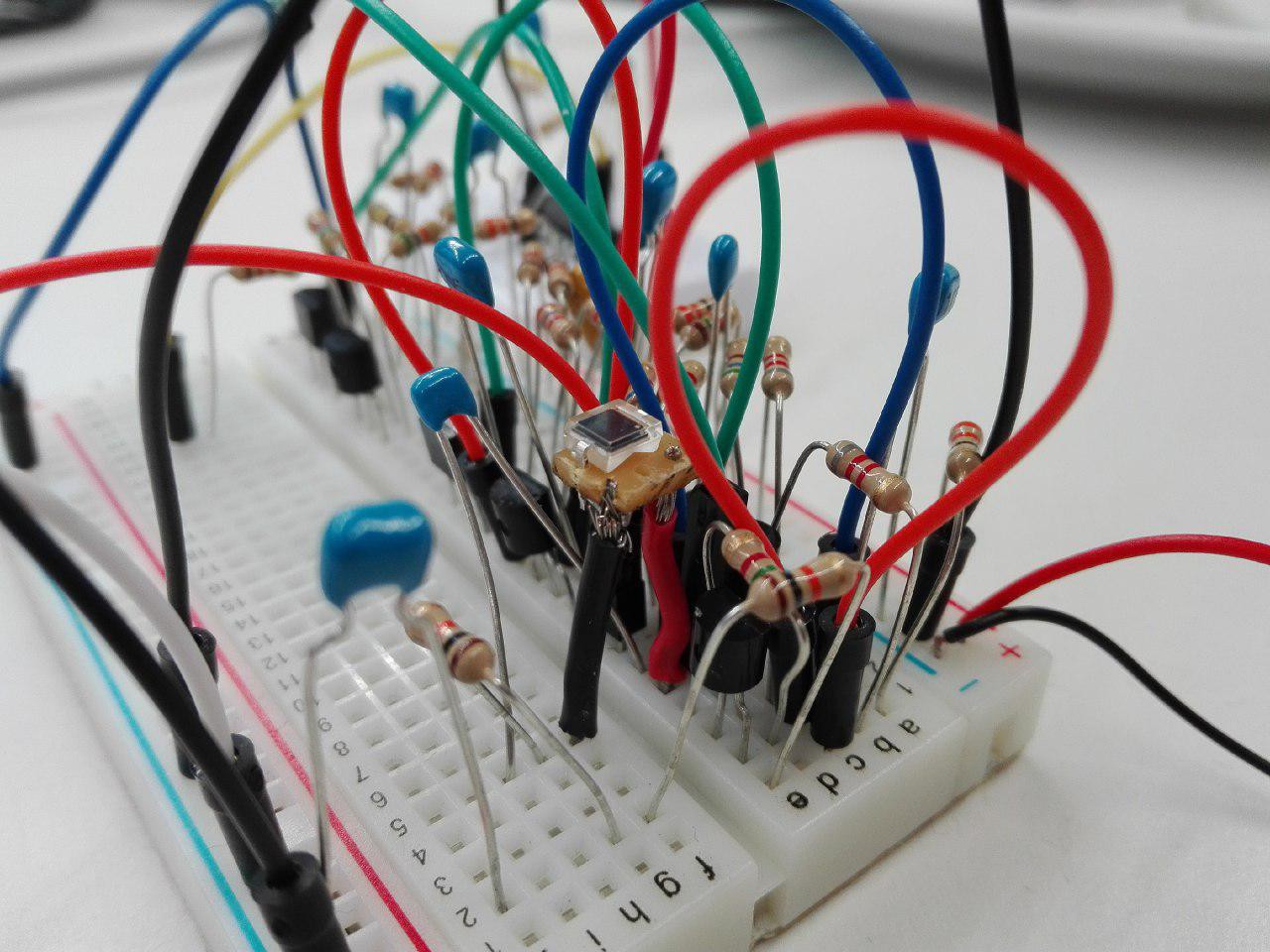

After some breadboard prototyping, Luis tweaked the different amplification levels.

![]()

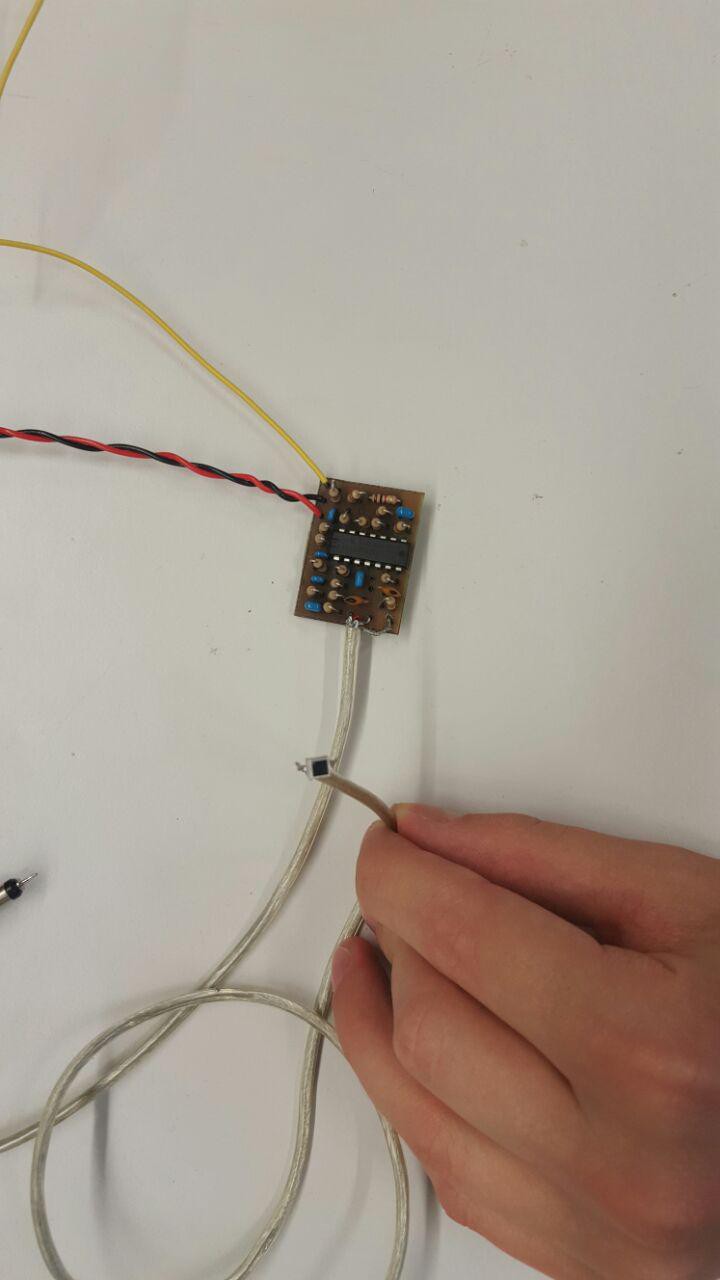

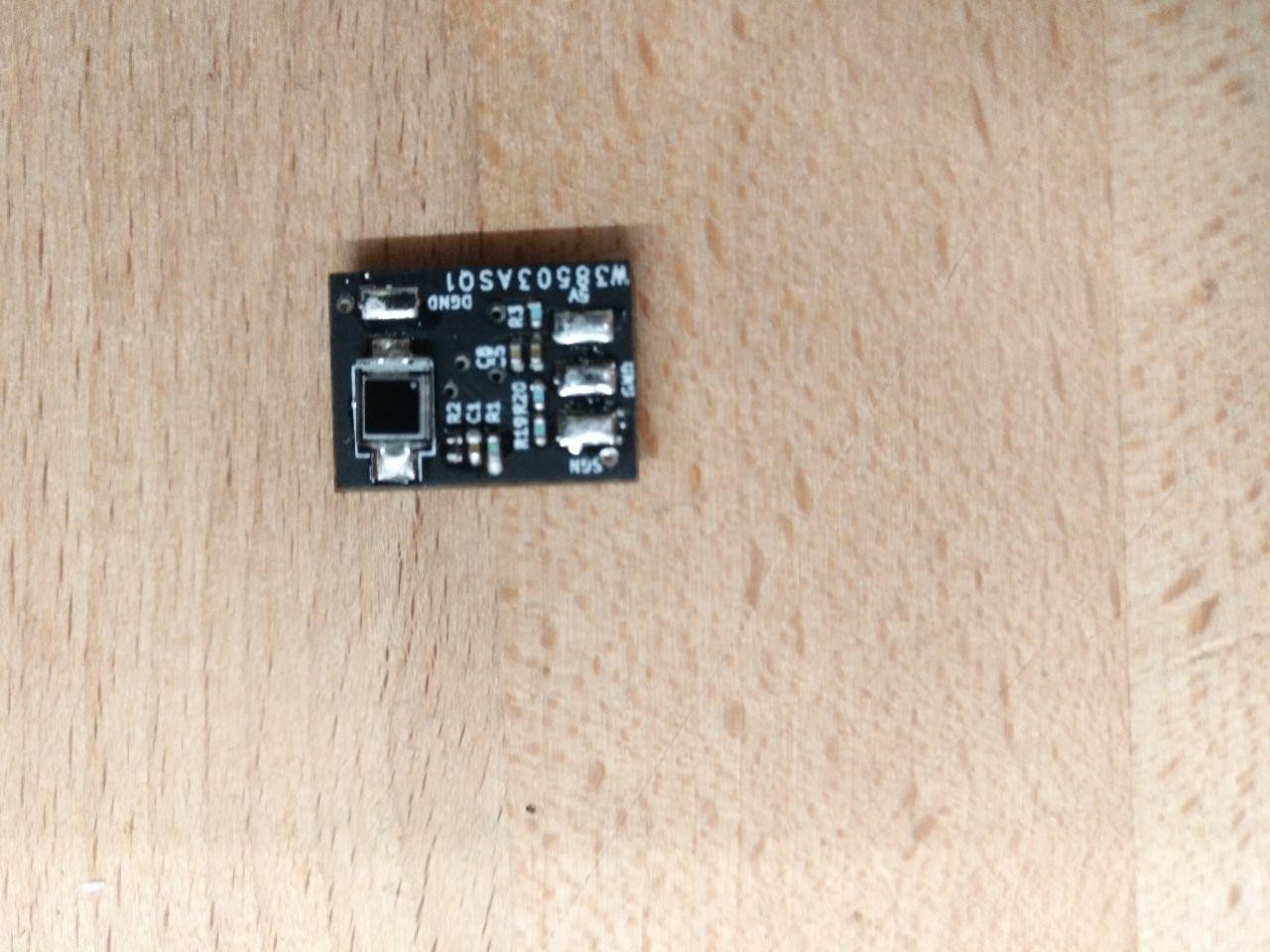

We etched the circuit and built a more compact prototype.

![]()

In the picture above you can see the cool feature of our sensor. The photodiode can be placed up to 1 meter away from the amplification circuit using coax cable. We are planning on building a full body morph suit.

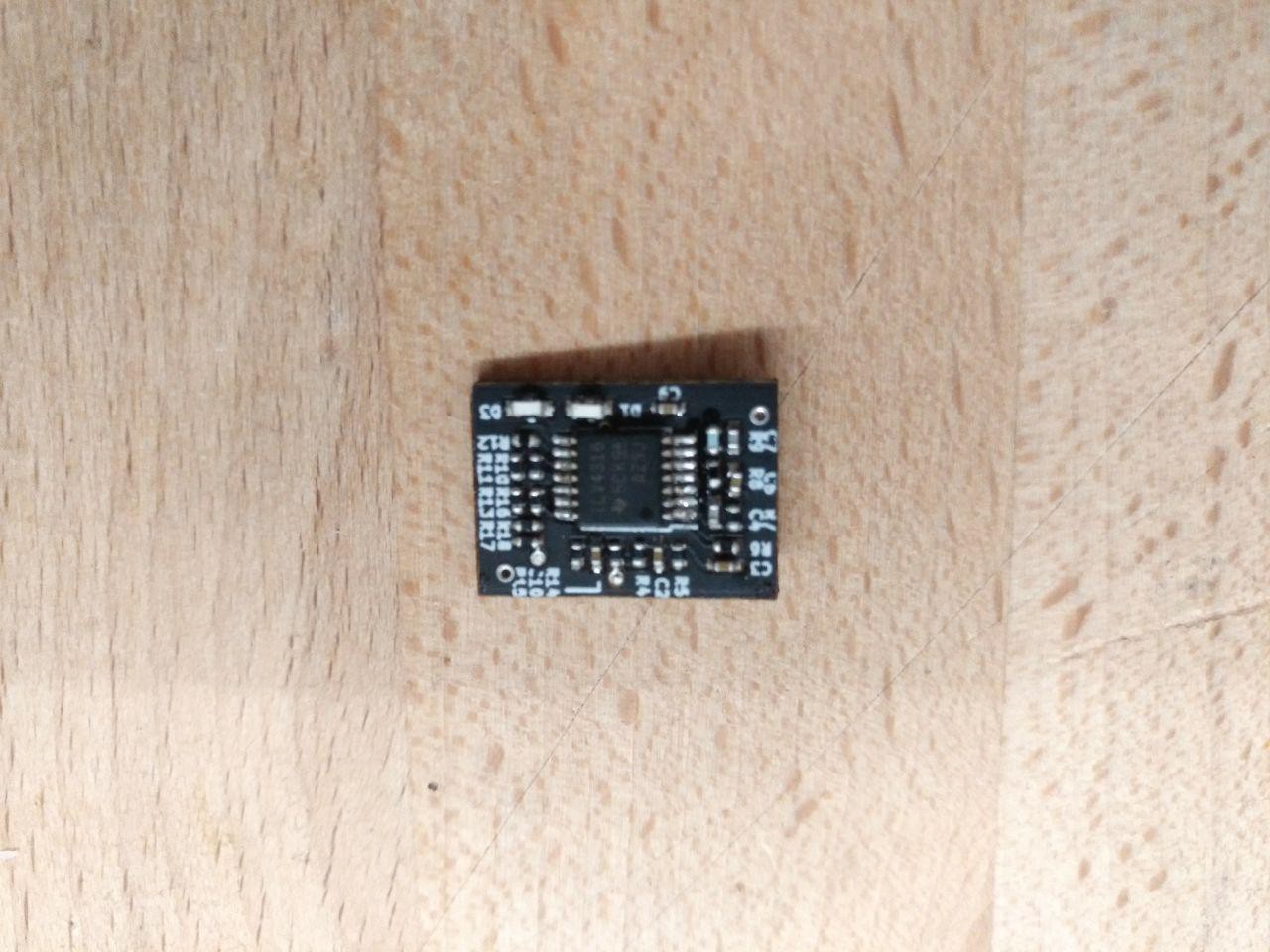

Then we miniaturized the sensor further. Check out the design here.

![]()

![]()

![]()

![]()

We ordered the pcbs in China and assembled them in the makerspace. The result is a 16x12 mm sensor. We moved from the de0-nano to the de10-nano-soc. The sensor data is retrieved from the fpga via altera master/slave interface and sent to the host via a ROS message.

-

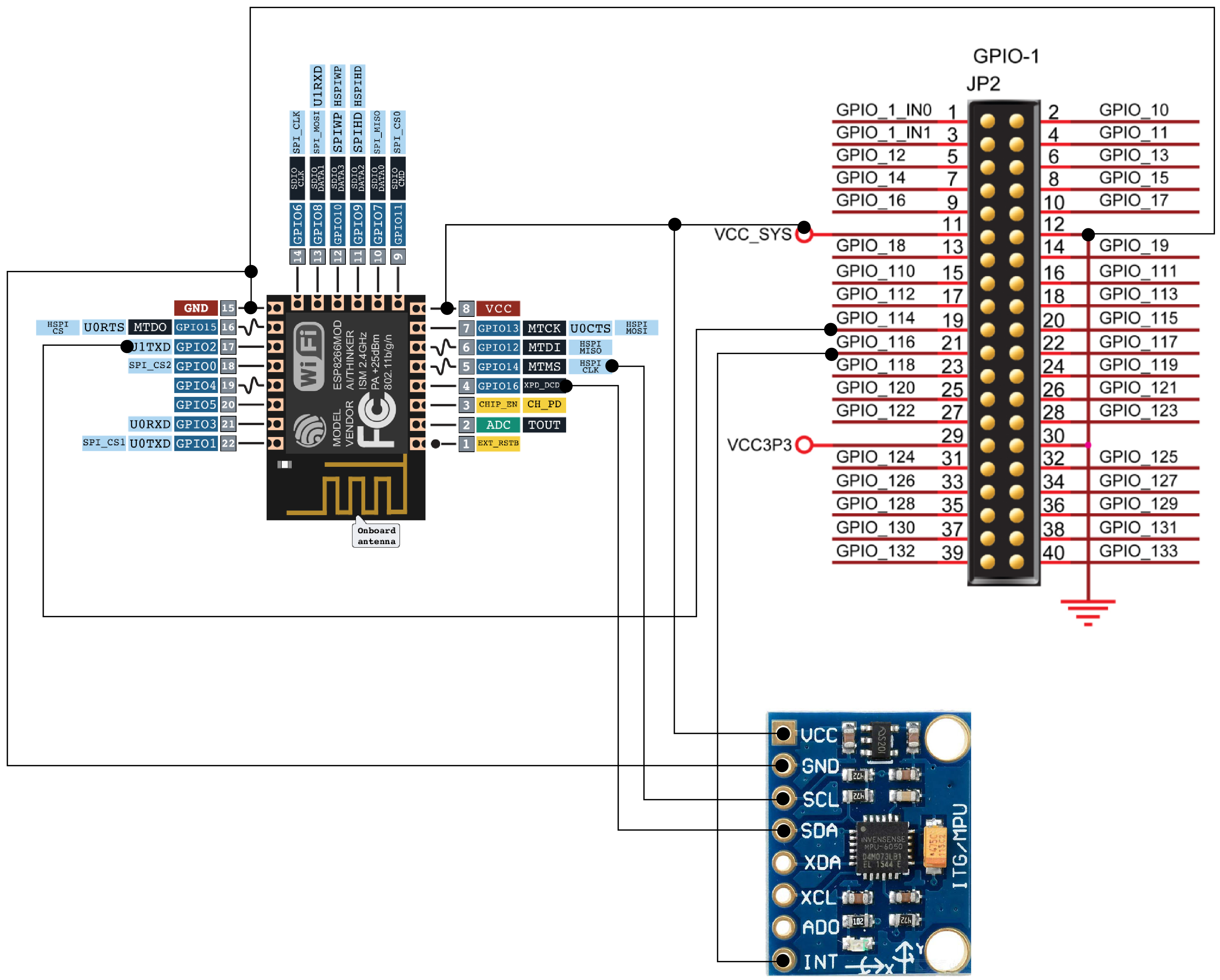

IMU MPU6050

03/08/2017 at 23:04 • 1 comment

The next step was to retrieve IMU data from our tracked objects. Even though the de0-nano comes with an accelerometer, we are using an external MPU6050. They are quite cheap, about 3 euro, and come with an accelerometer, a gyroscope and the Digital Motion Processor (DMP), which fuses the sensor values into useful quantities such as a quaternion or the gravity vector. The data is retrieved on the ESP8266, which uses I2C to communicate with the MPU6050. The MPU6050 has an extra interrupt pin, which signals data availability. This interrupt pin is routed into the fpga and the connection is controlled there. This is necessary because we are using gpio2 on the esp, which is one of the pins that select the boot mode: it has to be high while the esp boots from its flash.

We also progressed on the command socket infrastructure, which allows rudimentary control of the hardware from the GUI (we can now reboot the esp, for example, or toggle IMU streaming). In the video below you see the quaternion from the MPU6050 DMP estimation streamed into our rviz plugin and visualized with the red cube.
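The gravity direction mentioned above can also be recomputed on the host from the streamed quaternion. A minimal Python sketch, assuming a unit quaternion in (w, x, y, z) order. The function name is hypothetical and this is the standard rotation formula, not code taken from the project's firmware.

```python
def gravity_from_quaternion(w, x, y, z):
    """Direction of gravity in the sensor frame for a unit quaternion (w, x, y, z)."""
    gx = 2.0 * (x * z - w * y)
    gy = 2.0 * (w * x + y * z)
    gz = w * w - x * x - y * y + z * z
    return gx, gy, gz
```

For the identity quaternion `(1, 0, 0, 0)` this returns `(0.0, 0.0, 1.0)`, i.e. gravity along the sensor's z axis.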

The orientation looks very stable, so we hope to skip the bloody epnp stuff...we will see.

Below you can see how the MPU6050 is wired up to the esp and the de0-nano.

![]()

-

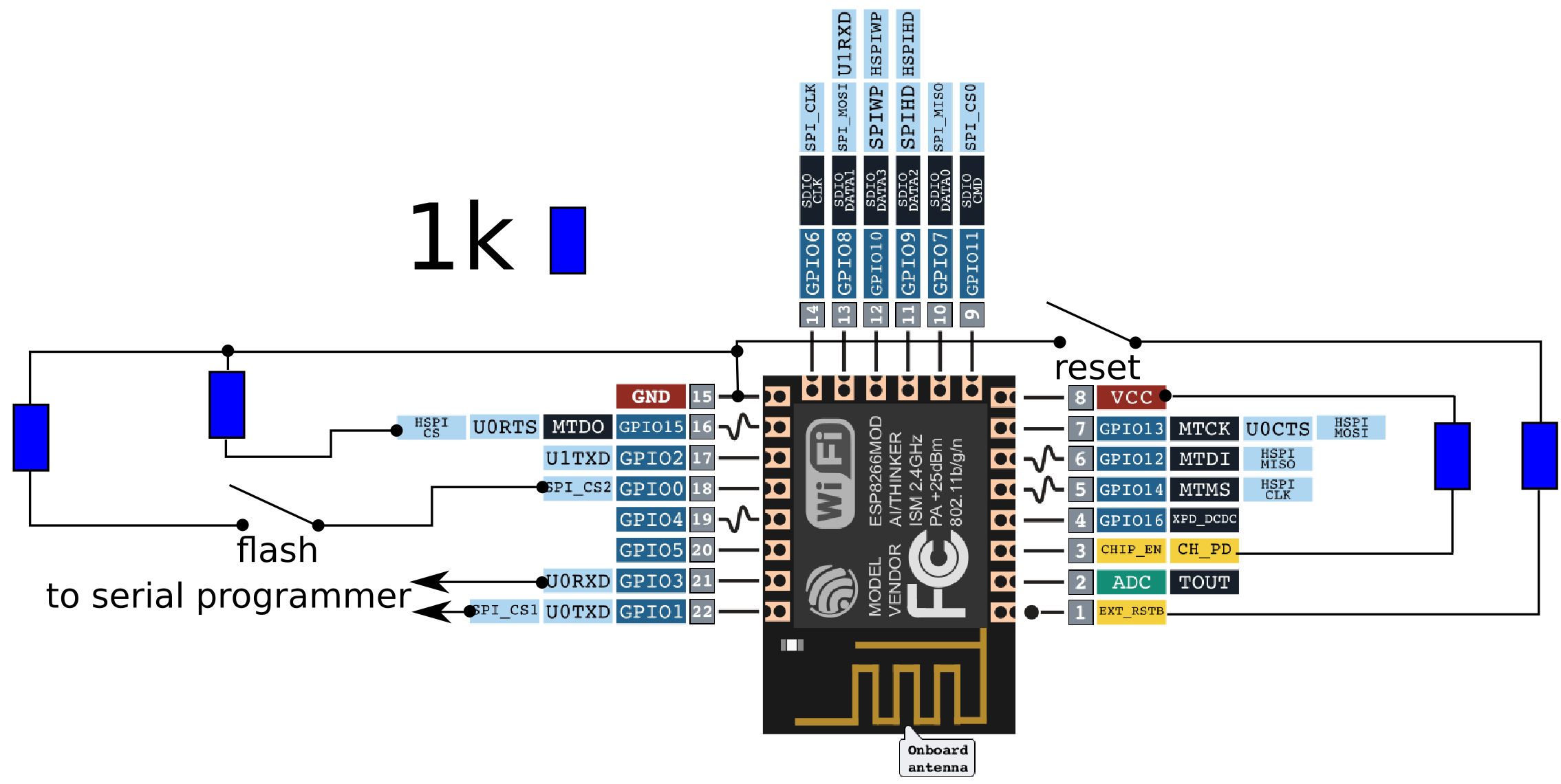

using esp8266 instead of mkr

02/28/2017 at 23:49 • 0 comments

The MKR1000 has always been an intermediate solution. Even though it is quite small, there is an even smaller wifi chip, the esp8266. It has a programmable microcontroller and just needs some resistors, two buttons and a serial programmer to be programmed.

NOTE: there seems to be a lot of confusion out there about how to correctly wire the little guy in order to program it. Therefore the following should help you get started:

- do not use the 3.3V vcc of your serial programmer (usb does not seem to provide enough current for the esp, which can peak at 200 mA). Instead use a voltage supply with enough current. The esp seems to be very sensitive to incorrect voltage, which leads to undefined behaviour causing major headaches

- the slave_select pin is GPIO15 which needs to be low on boot

- use the following wiring scheme:

![]()

When programming the esp (be it from the arduino ide or the command line), hold both buttons pressed, start the download, release reset, then flash. Your bin should start downloading; if not, try again (and make sure you pay attention to the NOTE above).

We removed the SPI core we were using so far, simply because we didn't trust it. We noticed some glitches in our sensor values and one suspicion was the SPI core. Anyway, here is how it looks now:

![]()

The esp is acting as the SPI slave (which makes a lot more sense, because the fpga should control when stuff is sent out). One SPI frame for the esp consists of 32 bytes = 256 bits = 8 sensor values of 32 bits each. In each lighthouse cycle, a frame with up to eight sensor values is transmitted to the esp via SPI. The code for the esp hasn't changed much compared to the previous MKR code, except for the SPI slave and that we send out 8 values via UDP in one go, instead of each sensor individually.
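On the receiving side, such a 32-byte frame can be split back into its eight 32-bit values with a few lines of Python. This is a sketch only: the little-endian byte order is an assumption, the meaning of the bits inside each value is not covered, and `unpack_frame` is a hypothetical name.

```python
import struct

FRAME_BYTES = 32          # one SPI frame / UDP payload as described above
VALUES_PER_FRAME = 8      # eight 32-bit sensor values


def unpack_frame(frame):
    """Split a 32-byte frame into eight unsigned 32-bit sensor values.

    Assumes little-endian byte order, which may differ from the real firmware.
    """
    if len(frame) != FRAME_BYTES:
        raise ValueError("expected %d bytes, got %d" % (FRAME_BYTES, len(frame)))
    return struct.unpack("<%dI" % VALUES_PER_FRAME, frame)
```

The same function works on a UDP payload received from the esp, as long as the payload is exactly one frame.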

![]()

HTC vive lighthouse custom tracking

3D position tracking using our own sensor design, an Altera fpga for decoding the signals and custom code for triangulation and pose estimation

Simon Trendel
