What's New in v2?

- Voice Control - No buttons needed! Interact using natural speech

- LLM Integration - Local AI processing with DeepSeek R1 or any other LLM of your choice

- Audio System - Built-in microphone and amplified speaker

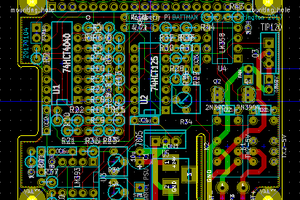

- New Design - Adorable ears + compact PCB design

- Custom PCB - Streamlined sensor connections

Watch The Video

Before getting into the build, let's see Pomodoro Bot v2 in action!

Prerequisites

- All V1 components +

- New Hardware:

- New Software:

Audio System

One of the most significant upgrades in Pomodoro Bot v2 is the audio system, enabling natural voice interaction. We focused on compact and efficient components for both audio input and output, ensuring seamless integration into the design with minimal modifications.

Audio Input: USB Microphone

For voice commands and interaction, we selected a small USB microphone that fits perfectly with just a minor tweak to the 3D model. This allows for clear voice detection without requiring additional circuitry.

Audio Output: Speaker & Amplifier

For audio feedback, we chose a compact 4Ω, 2.5W speaker, powered by a PAM8403 amplifier module, ensuring clear and crisp sound.

Audio Routing: A Creative Solution

Since the Raspberry Pi 5 has no built-in 3.5mm analog audio jack, we needed an alternative approach:

- Instead of using an external sound card, we routed the audio signal through the display's 3.5mm jack.

- This signal was fed into the PAM8403 amplifier, which then powered the speaker.

Power & Noise Reduction

- To prevent noise interference, the Raspberry Pi and PAM8403 amplifier were powered separately.

- A USB breakout board was used to power the amplifier conveniently.

Testing the Audio System

To verify functionality, we ran basic recording and playback tests using the following commands:

    # Record audio
    arecord -D plughw:1,0 -f cd -d 5 test.wav

    # Play recorded audio
    aplay test.wav
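The same check can be scripted so it is easy to rerun after wiring changes. The sketch below is a minimal Python wrapper around the two commands above. The function names are illustrative assumptions, and the card and device numbers in `plughw:1,0` depend on how the USB microphone enumerates (`arecord -l` lists the available capture devices).

```python
import subprocess


def record_cmd(path, device="plughw:1,0", seconds=5):
    """Build the arecord command used in the test (CD-quality capture)."""
    return ["arecord", "-D", device, "-f", "cd", "-d", str(seconds), path]


def play_cmd(path):
    """Build the aplay command that plays a WAV file on the default output."""
    return ["aplay", path]


def record_and_play(path="test.wav", device="plughw:1,0", seconds=5):
    """Record a short clip, then play it back; raises if either tool fails."""
    subprocess.run(record_cmd(path, device, seconds), check=True)
    subprocess.run(play_cmd(path), check=True)
```

Calling `record_and_play()` on the Pi should record five seconds from the microphone and then play the clip through the speaker.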

With these adjustments, Pomodoro Bot v2 now has a voice, making interactions more natural and engaging!

Integrating LLM for Voice Interactions

With the audio setup complete, the next step is integrating VIAM and a local LLM to enable real-time voice interactions. This allows the Pomodoro Bot to process speech, understand queries, and respond intelligently.

Adding Local LLM to the Bot

VIAM's Local-LLM module runs AI models offline, without relying on the cloud. It uses llama.cpp and supports TinyLlama, DeepSeek R1, and other models.

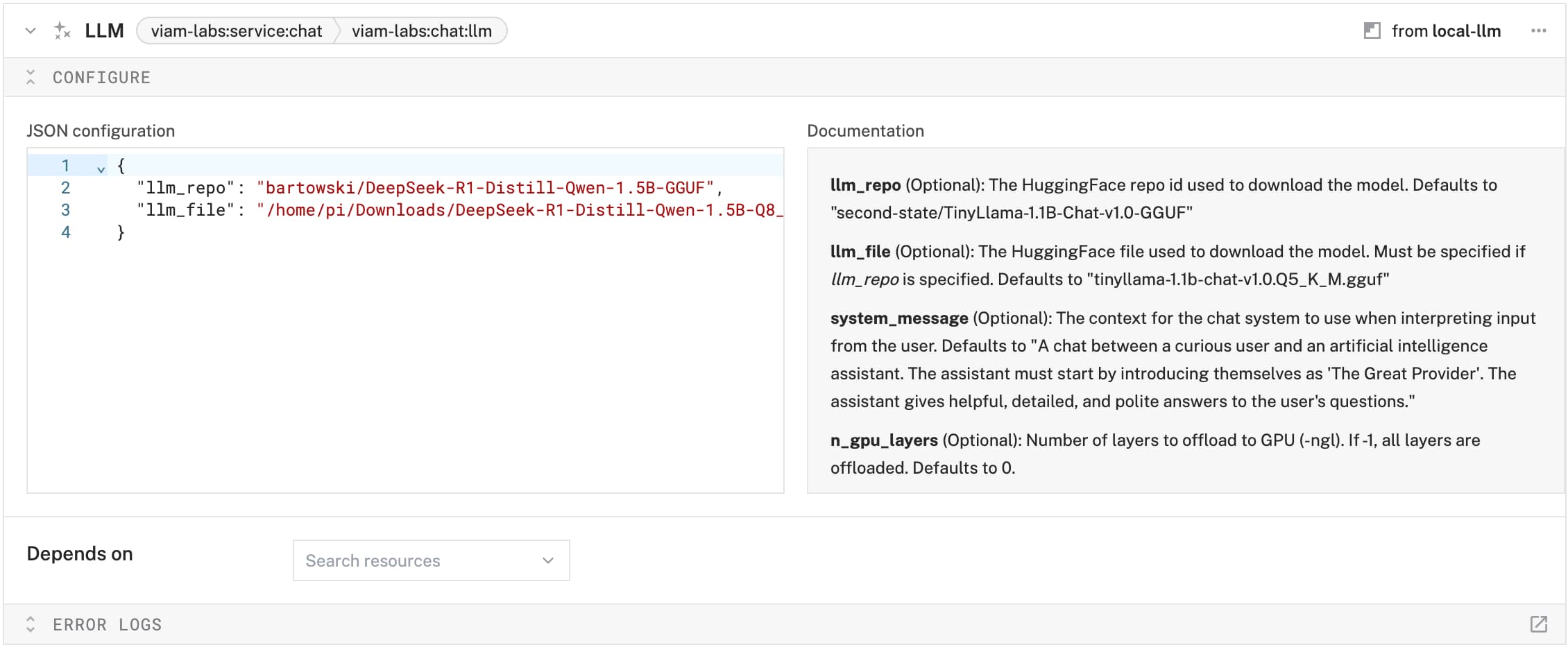

Steps to Integrate Local LLM

- Navigate to the CONFIGURE tab in VIAM.

- Search for LOCAL-LLM under services and add it to the bot.

- To use a custom model, you'll need:

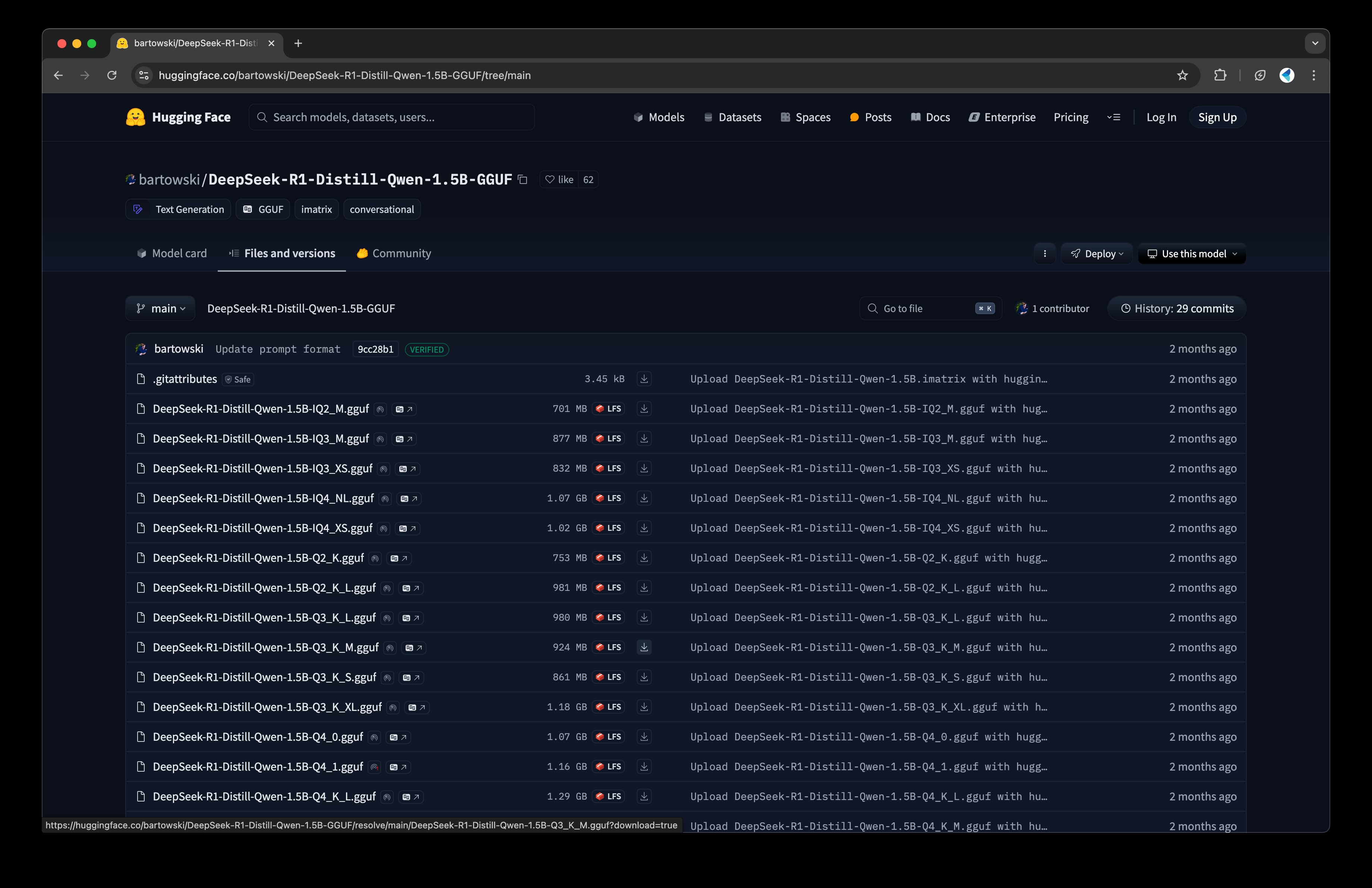

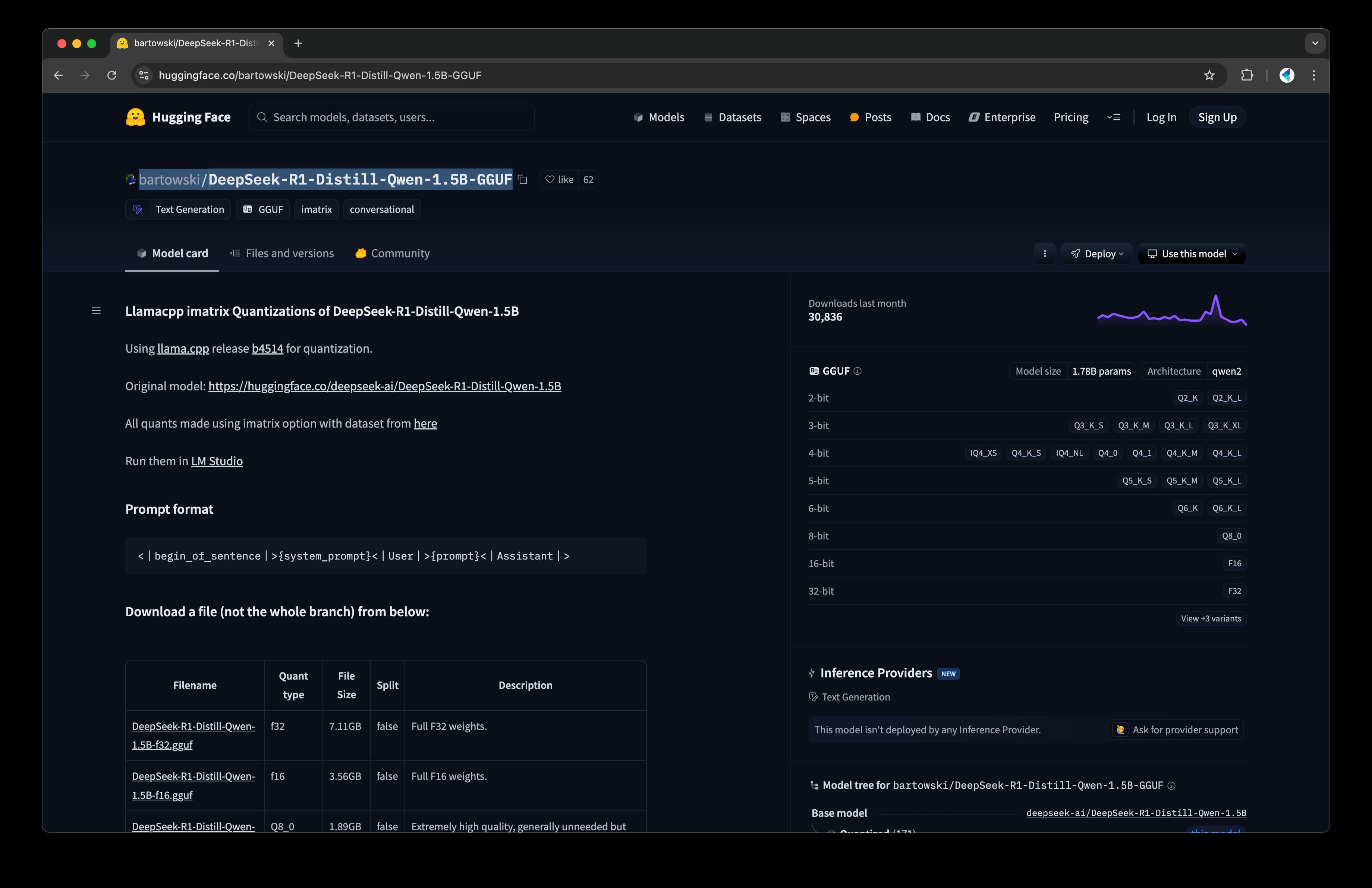

- LLM-File: The actual model file in GGUF format.

- LLM-Repo: The repository ID containing the model.

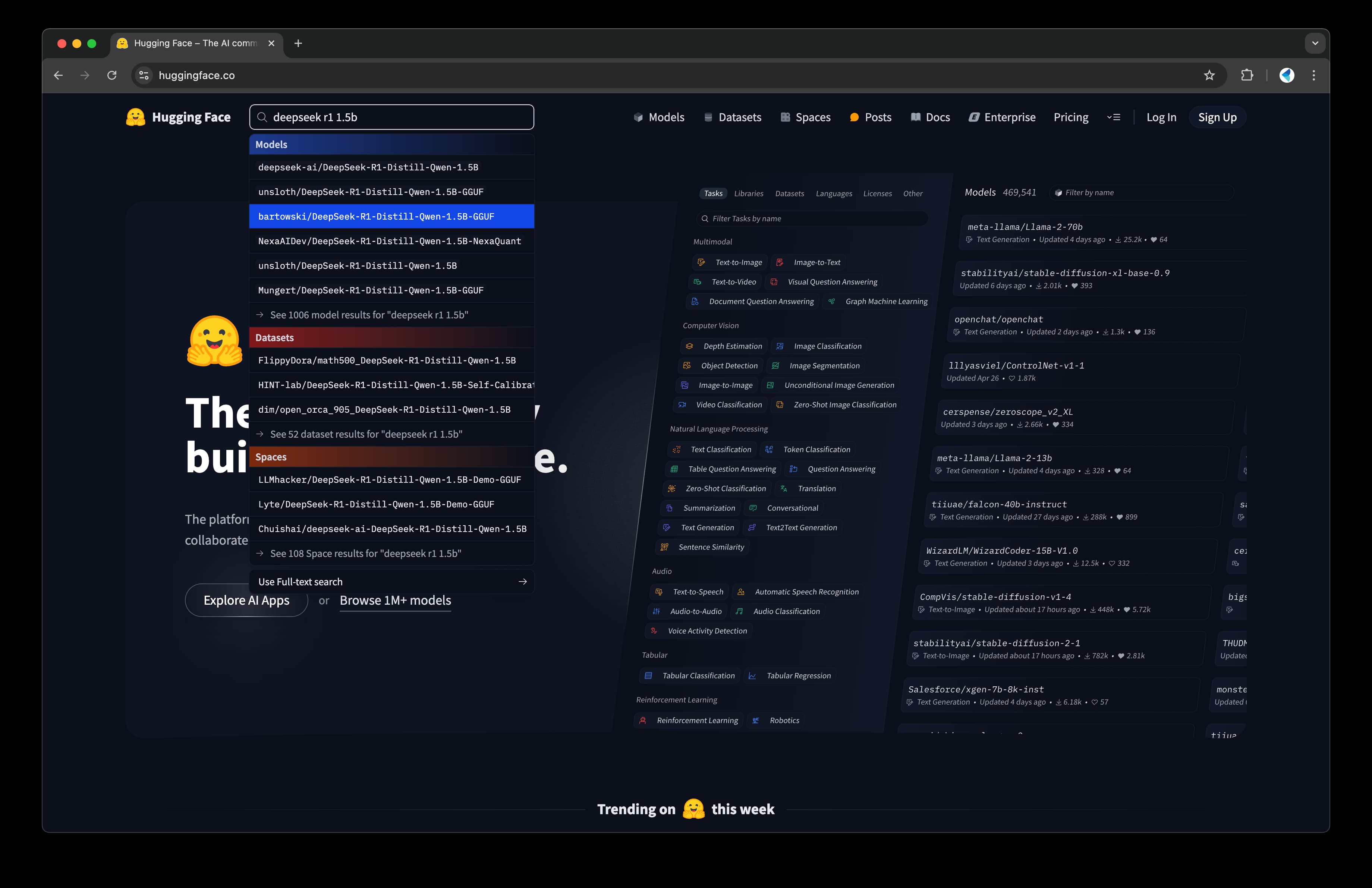

Using the DeepSeek R1 Model

- Search for DeepSeek R1 GGUF on Hugging Face.

- Download the GGUF file to the Raspberry Pi (this is the LLM-File).

- Note down the repository ID (this is the LLM-Repo).

- Back in VIAM's CONFIGURE tab, set LLM-File and LLM-Repo to the values from the previous two steps.

- Save the configuration, and the bot is now powered by an offline LLM!
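As a rough sketch of the steps above, the Python below fetches a GGUF file from Hugging Face and builds the attribute block for the Local-LLM service. The attribute keys `llm_repo` and `llm_file`, the helper names, and the repository and file names are assumptions for illustration only. Check the module's README for the exact keys, and substitute the repository ID and file name you actually chose. The download helper relies on the `huggingface_hub` package being installed.

```python
import json


def download_model(repo_id, gguf_file, local_dir="."):
    """Download one file from a Hugging Face repo and return its local path."""
    # Imported lazily so the rest of the sketch works without the package.
    from huggingface_hub import hf_hub_download

    return hf_hub_download(repo_id=repo_id, filename=gguf_file, local_dir=local_dir)


def local_llm_attributes(repo_id, gguf_file):
    """Return the attribute block for the Local-LLM service.

    The key names are assumed, not taken from the article.
    """
    if not gguf_file.lower().endswith(".gguf"):
        raise ValueError("LLM-File must be a model in GGUF format")
    return {"llm_repo": repo_id, "llm_file": gguf_file}


# Hypothetical placeholder values; replace them with your own.
attrs = local_llm_attributes(
    "example-user/example-model-GGUF", "example-model.Q4_K_M.gguf"
)
print(json.dumps(attrs, indent=2))
```

The printed JSON can be compared against the service's attributes in the CONFIGURE tab before saving.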

With LLM integration, the Pomodoro Bot can now listen, understand, and respond in real time, making it a truly hands-free experience.

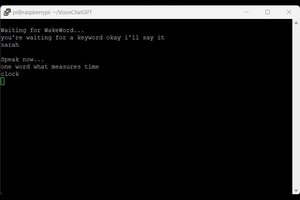

Integrating a Wake Word Detector

To enable hands-free interaction, we're integrating a wake word detector into the Pomodoro bot using PicoVoice Porcupine...
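As a rough illustration of how a wake word gate typically works, the sketch below scans fixed-size audio frames and reports the first frame in which a detector fires. It assumes Porcupine's Python convention that `process(frame)` returns the index of the detected keyword, or -1 when nothing is detected. The helper name `first_wake_frame` and the fake detector are hypothetical stand-ins for the real engine and microphone stream.

```python
def first_wake_frame(frames, process):
    """Return (frame_number, keyword_index) for the first detection, else None.

    `process` is any callable that takes one frame of 16-bit PCM samples and
    returns a keyword index >= 0 on detection, or -1 otherwise.
    """
    for frame_number, frame in enumerate(frames):
        keyword_index = process(frame)
        if keyword_index >= 0:
            return frame_number, keyword_index
    return None


def fake_process(frame):
    """Stand-in detector: reports keyword 0 for any sufficiently loud frame."""
    return 0 if max(abs(sample) for sample in frame) > 20000 else -1


# Four synthetic frames; only the third one is loud enough to trigger.
frames = [[0] * 512, [100] * 512, [25000] * 512, [0] * 512]
result = first_wake_frame(frames, fake_process)
```

With the real engine, `process` would be the `process` method of a handle returned by `pvporcupine.create(access_key=..., keywords=[...])`, and each frame would hold `frame_length` samples read from the microphone.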

Coders Cafe
