Forgotten history in your chips

Un[der]documented long-forgotten features rediscovered in more modern devices

Advance warning: this refers to STN, not TFT displays... It *might* apply to "bare" TFTs lacking deserializer chips (LVDS, HDMI), as in "parallel interface" displays, I dunno... Experiment!

STN displays don't usually have inputs called "hsync" nor "vsync" nor "pixel clock" which are common in raster-drawn displays like TV, VGA, HDMI, and FPD-Link displays...

Instead they usually have weirdly similar yet weirdly-specific names like "latch pixel data" (what I usually call "pixel-clock"), "latch/load row" ("hsync"), and "start frame" ("vsync").

Some of the weird naming becomes obvious pretty quickly...

E.G. These displays don't have inbuilt grayscale ability, so instead of loading one bit at a time with each pixel clock, you usually load four or eight consecutive pixels simultaneously.

(This gets weird with CSTN displays which often consider R G and B pixels just like they're B/W... So the first "latch pixel data" in a 4bit-interfaced panel loads R0,G0,B0,R1, then the second loads G1,B1,R2,G2, and the third loads B2,R3,G3,B3... )
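Here's a minimal sketch of that CSTN packing, just to make the ordering concrete. The function name and the choice to put the first subpixel on the most significant data line are my assumptions; a real panel's datasheet decides which data pin gets which subpixel.

```python
def pack_cstn_nibbles(pixels):
    """Flatten one-bit (r, g, b) pixels and group the subpixels four at a time."""
    # Treat every R, G and B subpixel as if it were its own on/off "pixel".
    subpixels = [bit for rgb in pixels for bit in rgb]
    if len(subpixels) % 4:
        raise ValueError("subpixel count must be a multiple of 4")
    nibbles = []
    for i in range(0, len(subpixels), 4):
        d3, d2, d1, d0 = subpixels[i:i + 4]
        # Assumption: the first subpixel rides on the most significant data line.
        nibbles.append((d3 << 3) | (d2 << 2) | (d1 << 1) | d0)
    return nibbles


# Four pixels (red, green, blue, white) become three 4-bit loads:
# R0 G0 B0 R1 | G1 B1 R2 G2 | B2 R3 G3 B3
example = pack_cstn_nibbles([(1, 0, 0), (0, 1, 0), (0, 0, 1), (1, 1, 1)])
```

Note how no load lines up with a pixel boundary, which is exactly why the "pixel clock" name stops making sense here.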

Thus, not "pixel clock" but "load pixel data".

(And, yes. it could be *very* difficult to convert normal VGA-style (or hdmi) timings to work with that!)

....

But... here's where it gets interesting, some 25 years since my first STN graphics controller design (640x400 B/W).

...

"Latch Pixel Data" (*not* "pixel clock") merely stores the data into a row-register.

"Load Row" (*not* "hsync") then writes the entire row of pixels to the display *simultaneously*.

(Thus, NES's Duck Hunt and Light-Pens don't work with LCDs, because they look for individual pixels' lighting in realtime... not an entire *row*. (Nevermind, of course, the slow response))

...

NOW, why is this intriguing to me, as a "hacker"?

....

Because, when I made that first STN controller (my first large timing-sensitive project), I thought that pixel-data had to be precisely-timed, as it would on a VGA display...

But Why?

All it does is load 4 or 8 bits at a time into a 640-bit register!

AND that register's contents aren't read, at all, until the "load row" pulse!

...

So, it's *entirely likely* the most difficult aspect of that project... the most timing-critical... wasn't timing-critical *at all*.

It's entirely plausible I could've sent that pixel-data at any speed/times I wanted, as long as it was fast enough to latch the entire row's-worth of data before the load-row pulse (which is *somewhat* timing-sensitive).
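To convince myself, here's a toy model of that idea: a row register that only becomes visible at "load row". The class and method names are made up for illustration, and it ignores real-world details like minimum pulse widths and the fact that a real shift register would just push old bits out the far end instead of complaining.

```python
class RowRegisterModel:
    """Toy model of an STN column driver: a row register plus an output latch.

    Assumption: names and sizes are illustrative, not from any datasheet.
    """

    def __init__(self, columns=640, bus_width=4):
        self.columns = columns
        self.bus_width = bus_width
        self.row_register = []          # filled by "latch pixel data"
        self.outputs = [0] * columns    # what the glass is actually driven with

    def latch_pixel_data(self, bits):
        # There is deliberately no clock or timestamp here: only order matters.
        if len(bits) != self.bus_width:
            raise ValueError("expected %d bits per latch" % self.bus_width)
        if len(self.row_register) + len(bits) > self.columns:
            raise RuntimeError("more pixel data than the row can hold")
        self.row_register.extend(bits)

    def load_row(self):
        # The only moment the row register's contents become visible.
        if len(self.row_register) != self.columns:
            raise RuntimeError("row not fully latched before load-row")
        self.outputs = self.row_register
        self.row_register = []


driver = RowRegisterModel()
for _ in range(640 // 4):                # 160 latches fill a 640-pixel row
    driver.latch_pixel_data([1, 0, 1, 0])
untouched = driver.outputs == [0] * 640  # outputs still show the old row
driver.load_row()                        # now the new row appears, all at once
```

Nothing in the model cares *when* those 160 latches happen, only that they all land before `load_row()`.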

...

E.G. in the old CGA/VGA days, it coulda been like loading new data to the framebuffer during the "h-blanking" period at the end of the pixel data.

Or even easier and more versatile, I coulda just *stopped* loading new pixel-data whenever I wanted, to load new data into the frame buffer, then resume loading new pixel data (as long as all the pixel data got loaded in time for our misnicknamed "hsync").

....

That would've reduced circuit and software-complexity *tremendously*.

And, frankly, now it seems like there was no reason it couldn't have been done that way.

....

So, on to "hsync"... does the "load row" signal have to be precisely-timed at regular intervals in the same way "load pixel data" apparently doesn't?

I think row-timing is a bit more specific because it corresponds to when the pixels receive their voltages...

And, well, most specs say that DC does gnarly stuff to LCDs.

So, the row-timings *also* control the weird AC-ish voltage waveforms that actually drive the pixels...

So, sure, in the simple sense "they're just shift-registers, clock 'em whenever you want!"... in the technical sense, well, that may be hard on the panel.

(In my admittedly plausibly cruel-to-TFT experiments, I never had any visible degradation, even with a panel refreshed only once per second 24/7 for well over a year... but I didn't yet think to mess with timings the way I have, now, with *at least* the "load pixel data" input)

...

...

...

Now... These sorts of experiments...

Look in the last logs for more info on these weird(?) LCD column-drivers which contain an internal framebuffer...

Weird Thought:

This display [most likely] has 120 rows (and 160 cols)... (one row-driver chip, one col-driver chip)

The column-driver chip can handle 160 columns, and its internal framebuffer can handle up to 240 rows (two row driver chips).

OK, since this only drives 120 rows, that means its row-driver chip is not wired for daisy-chaining...

Which very well *could* mean that if you sent the timing signals for a 160x240 display (instead of the 160x120 it likely actually is), it'd show the first 120 rows, then automatically recycle to the first row, showing rows 120-239 on the original 120 rows...

Essentially getting *two* refreshes for every Vsync.
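If that guess is right (and it is only a guess), the mapping is just a modulo. A quick sketch, with hypothetical function names, counting how often each physical row gets driven when the controller believes it has 240 rows:

```python
def physical_row_for(sent_row, physical_rows=120):
    # Assumption: the row driver wraps back to its first output after the last.
    return sent_row % physical_rows


def refreshes_per_vsync(timing_rows=240, physical_rows=120):
    """Count how many times each physical row is driven in one 240-row frame."""
    counts = [0] * physical_rows
    for sent_row in range(timing_rows):
        counts[physical_row_for(sent_row, physical_rows)] += 1
    return counts


counts = refreshes_per_vsync()
```

Sent rows 120 through 239 land back on physical rows 0 through 119, so every physical row is driven twice per vsync.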

But Wait!

It'd be *easy* to hack a normal 160x120 driver for this setup (if it's true), by simply putting a divide-by-two counter on the VSync...

So, the graphics-controller doesn't have to do anything special to accommodate this double-refresh-per-vsync "mode".

NOW, IF such were implemented....

Let's pretend the graphics-controller chip, designed for B/W (on/off) pixels, has inbuilt frame-by-frame dithering to accommodate 3 shades of grayscale over two consecutive frames (at 60Hz, maybe 120, up to 240!).

first frame: the pixel can be on or off

second frame, same.

So, off off = 0%

off on (or on off) = 50%

on on = 100%

That's actually pretty common for graphics drivers designed for LCDs at the time...
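The two-frame scheme above, sketched as code. The level numbering (0, 1, 2) and the choice of "off then on" for 50% are my own; a real controller might alternate or stagger the pattern to hide flicker.

```python
# Gray level -> (bit shown in first frame, bit shown in second frame)
FRAME_BITS = {
    0: (0, 0),  # off off = 0%
    1: (0, 1),  # off on  = 50% (on off would average out the same)
    2: (1, 1),  # on on   = 100%
}


def split_into_frames(gray_rows):
    """Turn rows of 3-level pixels into two 1-bit frames."""
    first = [[FRAME_BITS[p][0] for p in row] for row in gray_rows]
    second = [[FRAME_BITS[p][1] for p in row] for row in gray_rows]
    return first, second


def perceived_percent(first_bit, second_bit):
    # Time-average of the two frames, as the eye (roughly) sees it.
    return 50 * (first_bit + second_bit)


first, second = split_into_frames([[0, 1, 2]])
```

With the wrap-around idea, `first` would be the first 120 rows sent and `second` the next 120.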

Now, if I'm right about the automatic cycling of the row-driver at row 120, that means the col driver's internal framebuffer (which can handle twice as many rows as we have) can store the actual grayscale information, and automatically refresh it, when new frame-data isn't being sent!

Coool!!!

If it *wasn't* done that way, I don't think it'd be a huge hack to make it that way...

Maybe a pull-resistor tied to the daisy-chain-in input on the row driver, tied also to its daisy-chain output...

As I understand, it's basically just the "load TRUE into the row shift-register for one hsync clock" input.... so daisychaining it *back* to itself would cause it to loop (if it doesn't already) for that second "frame"...
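A tiny model of what I mean, shrunk to 4 rows so it's readable. This assumes the row driver really is a plain one-hot shift register whose last stage drives the daisy-chain output; the function names are hypothetical and no real chip was consulted.

```python
def clock_row_driver(rows, start_pulse=0, looped=True):
    """One row clock: shift the one-hot 'active row' token down by one."""
    # Daisy-chain output is the last stage; looped means it feeds the input.
    feedback = rows[-1] if looped else 0
    return [start_pulse | feedback] + rows[:-1]


def active_rows(num_rows, clocks, looped):
    """Return which row is active after each clock (None if the token is gone)."""
    rows = [0] * num_rows
    seen = []
    for n in range(clocks):
        # The "start frame" pulse is only present for the very first row clock.
        rows = clock_row_driver(rows, start_pulse=1 if n == 0 else 0, looped=looped)
        seen.append(rows.index(1) if 1 in rows else None)
    return seen


looped_sequence = active_rows(4, 8, looped=True)
unlooped_sequence = active_rows(4, 8, looped=False)
```

Looped, the token walks rows 0 to 3 and starts over without a second start pulse; unlooped, it falls off the end and nothing is driven for the second "frame".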

Could be groovy!

(I should clarify that we're talking about STN displays which don't actually call their inputs "hsync" and "vsync", though they're very similar in concept... So I usually just call them such. Especially since different manufacturers call them different things.

BUT this isn't a great habit, because it implies timings similar to TVs... and LCDs don't *have* to be so strictly-timed since they have shiftregisters to store the pixel data whenever it's available... which is for the next log).

In both cases mentioned in the previous logs (SDRAM and LCDs' column-drivers), it seems newer devices sometimes carry-over features once thought worthy of designing-in to the early products, and basically forgotten and undocumented in the later products.

In the case of SDRAM's full-row burst mode, it seems it was a design-idea early-on, amongst the brands involved in such designs/standards (maybe related to JEDEC?). But by the time SDRAM was common-enough for other brands to start making it, too, some weren't mentioning it in their datasheets. As I gather, by then Intel had written a reduced standard for desktop PCs... and that's what most manufacturers put in their docs. Yet, why, then, would they still have full-row burst-mode at all?

At this point in my archaeological dig, I begin wondering... Obviously SDRAM DIMMs were *very* common in consumer products. And so many "no-name"/just-starting chip-makers wanted to appeal to them... BUT, SDRAM chips were somewhat standard, before PCs standardized their needs. So, then... marketing? Why would they go to the trouble to make their chips compatible with a bigger standard that they weren't marketing to in the first place? High hopes? I dunno.

But this is the "history" aspect of it all that gets me wondering.

I think I explained similar in the last log about LCDs' column drivers pretty well... But I could take it further...

In my experience, column-drivers were pretty simple. I'd've doubted there's a JEDEC-style standard for them, but all the ones I'd encountered before were very similar. Basically a huge shift-register capable of outputting numerous different voltages for "1" depending on the timing (LCDs prefer AC, not DC).

So... what weird unique features would a column-driver designer try to throw into something so otherwise well-defined? How could something like that be revolutionized?

That's why finding this new-to-me design is so interesting... Obviously, it didn't "catch on" in the mainstream. Yet, it actually might have.... MIPI displays, for instance (many *many* generations later), have a similar ability, to stop streaming data and instead enter self-refresh. That *seems* like a feature of the MIPI-receiver/controller chip, no? A built-in framebuffer would be a fair amount of RAM if it were centralized. But, maybe not, if we're talking about the comparatively small/few rows each of the numerous column-driver chips handles.

Maybe they finally just tapped-in to the feature already in column-drivers mostly-unused for so long?

(Whoa, and that too could help explain those single-chip VGA-to-LCD doodads for $15 from ebay.. Vertical scaling is a big ask from such a tiny chip from the era those came out; a chip that *also* has 3 100+MS/s ADCs, and presumably a dual-port framebuffer, and has to *change* the input-pixel/line-rates (and even framerate) to work with a different-resolution panel... hmmm... You know what wouldn't be so hard? alternating which row gets the data during each refresh... or telling the column-driver shift-register to hold the data from the previous row and repeat it to the next. And, now that I think of it, I had seen that effect in many panels I experimented with... Definitely not in the panels' datasheet! Maybe the col-drivers', if we could even figure out their partnumbers!)

So, maybe this isn't so much a dig through history as a quest to find data on epoxy-blobs and unmarked silicon...

The history portion may be more about thinking about how these things are/were used in less-well-known ways. E.G. many later SDRAM manufacturers were probably aiming at the biggest market (PCs), but also didn't want to be left-out in other markets (maybe servers, or Macs, or GPUs? Medical equipment?...).

Maybe those column-drivers in that 64inch gaming LCD with 120Hz refresh are the same ones, or slightly improved, once used in a highly power-conscious PDA...

Maybe these "undocumented" features are actually well-documented...

In my experiments with TFT LCDs I did a LOT of taking-advantage of the fact that *by-design* (and by name) Thin-Film-Transistor LCDs have a transistor (and dynamic RAM in the form of a capacitor) at every pixel.

Essentially a write-only DRAM framebuffer, which is why they are so much less prone to flicker than STN LCDs... each pixel *holds* its value until the next time it's refreshed.

Or at most, until the capacitor discharges, which I've found to be on the order of one second... (as low as 1/4, as high as 5!)

Yes, I have several projects whose refresh-rate is about 1/2second per frame, or 2Hz (And you need 120?!).

(Handy for slow microcontrollers to make use of an old 1024x768 laptop LCD)

....

But, frankly, that wasn't really unexpected. That was the whole reason I started those experiments.

I did, however, run into some *very* unexpected oddities which I really wanted to be able to take advantage of, if they were reliable...

One such oddity occurred when I messed-up the number of Hsyncs; too few hsyncs resulted, as one would expect, in the top portion of the display being refreshed. The unexpected part was that the bottom retained its image, without fading.

Only one of the 4 displays I was working with had this "feature". The others eventually faded where they weren't refreshed.

But it got even weirder, because several times it would refresh in the middle of the display, and as if that wasn't weird enough, both the upper and lower unrefreshed portions retained their images without fading.

Almost like it would be somehow possible to *intentionally* refresh any arbitrary row[s] at-will...

...which, of course, would be perfect for a slow microcontroller...

say one had a graph in the upper half, showing power-usage throughout the day... or maybe a weather-display... It only needs to be updated once a minute. Below that there could be a user-interface, or textual information changing/scrolling regularly.
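If arbitrary-row refresh were reliable (a big if, since only one of my four panels did it), the microcontroller's job would shrink to working out which rows changed. A sketch of that bookkeeping, with a made-up function name and frames held as plain lists of rows:

```python
def dirty_row_span(old_frame, new_frame):
    """Return (first, last) indices of rows that differ, or None if none do."""
    changed = [i for i, (old, new) in enumerate(zip(old_frame, new_frame))
               if old != new]
    if not changed:
        return None
    return changed[0], changed[-1]


# An 8-row toy frame: graph in the top half, text in the bottom half.
before = [[0, 0, 0, 0] for _ in range(8)]
after = [row[:] for row in before]
after[5] = [1, 1, 0, 0]   # only the text area changed
after[6] = [0, 1, 1, 0]
span = dirty_row_span(before, after)
```

Only rows 5 through 6 would need resending here; the graph rows above them could sit untouched until their once-a-minute update.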

...

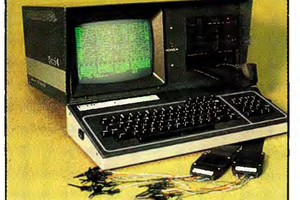

The other day I came across a really cheap source of displays used in PDAs, like the B/W Palm series. These are STN, whose look and daylight-readability I actually like... I got so excited I completely forgot that TFT is what made all my prior projects possible, and these aren't TFT. Heh!

But I ordered a lot, so I started thinking about how to drive them with my slow microcontroller-of-choice...

Yeah, well, we're not talking 1024x768, nor 18bits per pixel, we're talking 1bpp at maybe 160x160. Even my slow-uC-of-choice could surely handle that and other tasks as well.

So I started looking into the undocumented pinout... trying to derive it from things on-board. Looks like it's a typical dot-matrix STN, row drivers, col drivers... essentially continuously raster-drawn... The row/col drivers are silicon on ribbon cables, no chip numbers I can see.

But there is a separate chip, which I gather is responsible for the weird voltages LCDs require....

And it, apparently, is somewhat specialized in some way I don't quite understand, but apparently some of that specialty carries-over to the row/col drivers. So, I gather, it's *very* likely the unmarked row/col drivers are the ones recommended in the marked chip's datasheet.

So, I dug up their datasheets, and sure-enough they look pretty typical.

Wait, What did I almost scroll over?!

"160x240bit RAM"

RAM in a column-driver?!

What a read followed thereafter!

This otherwise normal-looking column-driver has a full-on [write-only] framebuffer.

You treat the display like any other, continuously sending raster-info at 60Hz... Timing is important, yahknow... not easy for slow uC's doing other things...

But these column-drivers *remember* the rows you last sent... so if you decide to stop sending, it refreshes automatically from the internal framebuffer!
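Roughly how I picture it, as a toy model. The 160x240 size is from the datasheet line quoted above; everything else (class and method names, row-at-a-time writes) is my guess at the behaviour, not the chip's actual interface.

```python
RAM_COLUMNS, RAM_ROWS = 160, 240
RAM_BYTES = RAM_COLUMNS * RAM_ROWS // 8   # 38400 bits is only 4800 bytes


class BufferedColumnDriver:
    """Toy column driver that remembers every row the host has sent."""

    def __init__(self):
        self.ram = [[0] * RAM_COLUMNS for _ in range(RAM_ROWS)]

    def host_sends_row(self, row, bits):
        # Normal raster operation: the row is displayed AND stored.
        if len(bits) != RAM_COLUMNS:
            raise ValueError("expected %d column bits" % RAM_COLUMNS)
        self.ram[row] = list(bits)
        return self.ram[row]

    def self_refresh_row(self, row):
        # Host asleep: the same row is re-driven from the internal RAM.
        return self.ram[row]


panel = BufferedColumnDriver()
panel.host_sends_row(5, [1] * RAM_COLUMNS)
remembered = panel.self_refresh_row(5)
```

The write-only part matters: the host can't read `ram` back, it can only overwrite it by sending rows again.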

The idea makes sense... It's advertised for power-savings. The display-driver can go to sleep without affecting the display.

Alright, still, even one frame in...

A perfect example is the burst-length option in SDRAM (which, stupidly, I hadn't really thought could apply to DDR, and others thereafter, until just now).

PC-66/100/133 SDRAM officially has burst lengths of 4 or 8 bytes/columns. But I have experimented with *numerous* chips from *numerous* manufacturers and have found that they *all* support bursting of the entire row (often 512 or 1024 bytes, but I've encountered as low as 128 and as high as 2048).
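For reference, the burst length is picked with the low bits of the mode register. Here's a sketch of building that value, assuming the field layout commonly shown in SDR SDRAM datasheets (burst length in bits 2..0, burst type in bit 3, CAS latency in bits 6..4, with 0b111 meaning full page); check your own part's datasheet, since the whole point here is that some don't mention the full-page code. The function name is mine.

```python
# Burst-length field (mode register bits 2..0), per the commonly published layout.
BURST_LENGTH_CODES = {1: 0b000, 2: 0b001, 4: 0b010, 8: 0b011, "full_page": 0b111}


def mode_register(burst_length, cas_latency=2, interleaved=False):
    """Build the value driven on the address pins for Load Mode Register."""
    if burst_length not in BURST_LENGTH_CODES:
        raise ValueError("unsupported burst length: %r" % (burst_length,))
    if cas_latency not in (2, 3):
        raise ValueError("this sketch only handles CAS latency 2 or 3")
    if burst_length == "full_page" and interleaved:
        # Full-page bursts are only defined for the sequential burst type.
        raise ValueError("full-page burst must be sequential")
    value = BURST_LENGTH_CODES[burst_length]   # bits 2..0
    value |= (1 if interleaved else 0) << 3    # bit 3: burst type
    value |= cas_latency << 4                  # bits 6..4: CAS latency
    return value                               # bits 9..7 left at 0


pc_style = mode_register(8, cas_latency=3)
whole_row = mode_register("full_page", cas_latency=2)
```

Only the three low bits differ between a "PC-approved" burst of 8 and a whole-row burst.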

I used this technique extensively in projects like #sdramThingZero - 133MS/s 32-bit Logic Analyzer

....

The key, here, is that many of the datasheets for the chips *don't* list row-burst as an option.

Many brands seem to write their datasheets to match generic specs, rather than actually documenting what their product is capable of. Weird.

...

There are many other tangents I could go on, regarding how it seems SDRAM was somewhat intentionally designed with *backwards*-compatibility with *non*-synchronous DRAM e.g. that from 72-pin SIMMs... that never really caught-on since dedicated controllers quickly became commonplace...

But one example is that all the instructions (except the Load Mode Register, ironically) are explicitly-allowed to occur repeatedly back-to-back. The effect of which is akin to holding the Read-pin on normal DRAM... it just keeps outputting the same data until it's released; *asynchronously*. Except, of course, in the case of SDRAM, it just waits to release until the appropriate number of clock-cycles later.

But, you can imagine, if the SDRAM is running at 133MHz and it's replacing a DRAM in a PC/XT running at 4.77MHz, then for all intents and purposes, the SDRAM treated asynchronously would look asynchronous to the 8088... And... frankly...

I think there was intent behind that. Long forgotten intent.

I've documented a lot of my SDRAM findings elsewhere on this site... And right now I'm excited about a new finding regarding LCDs, so I'mma get to that next.

(Am also reminded of some great hackery I've seen elsewhere... was it EDO DRAM can be tricked to look like FPM (or vice-versa?) by simply repurposing one of the pins... So, the only thing keeping them from being drop-in compatible is the fact they're usually soldered onto a carrier-board for one specific mode... Great discovery that opens a lot of doors for retrocomputing. But part of me thinks it was actually by-design, and was probably even documented that way in early datasheets, but long forgotten thereafter as the newer tech replaced the older)

Eric Hertz
