-

It's been a long strange trip

12/14/2018 at 00:35

However, V3 is now operational: stereo vision, LIDAR, integrated PID control of yaw and pitch, yadda, yadda, yadda. I have decided to open source the entire system, in spite of my own reservations and frantic advice to the contrary. After all, if you want to pry open Pandora's box, you might as well not pussyfoot around.
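For readers new to PID control, here is a minimal sketch of one discrete PID loop per axis, since yaw and pitch each need their own. It is an illustration under stated assumptions, not the project's controller. The `PID` class, the `simulate_axis` helper, the gains, and the toy plant are all hypothetical. In the toy plant, the controller output is taken directly as the axis's angular rate.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    """Textbook discrete PID controller (hypothetical gains, not the project's code)."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, setpoint: float, measured: float, dt: float) -> float:
        """Return the control output for one time step of length dt (seconds)."""
        error = setpoint - measured
        self.integral += error * dt
        # No derivative term on the very first step: there is no previous error yet.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate_axis(pid: PID, target_deg: float, steps: int, dt: float) -> float:
    """Drive an idealized axis whose angular rate equals the controller output (assumed plant)."""
    angle = 0.0
    for _ in range(steps):
        rate = pid.update(target_deg, angle, dt)
        angle += rate * dt
    return angle


# One independent controller per axis.
yaw = PID(kp=4.0, ki=4.0, kd=0.1)
pitch = PID(kp=4.0, ki=4.0, kd=0.1)
final_yaw = simulate_axis(yaw, 30.0, steps=1000, dt=0.01)
final_pitch = simulate_axis(pitch, -15.0, steps=1000, dt=0.01)
```

Keeping yaw and pitch as separate controller instances means each axis keeps its own integral and derivative state.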

Here's a teaser: this is a vertical LIDAR/imaging scan cycle of the full camera head. Images, GitHub links, etc. will follow over the holiday season.

OK, we've got results, and they were good!

05/28/2018 at 18:26

So of course the first two write-ups went to the old Google Tango community, for old friends, and to LinkedIn, because this is business, at least to some degree.

Over the coming weeks I'll be updating the project information here. I'll provide both general architectural details of the system as a whole and more specific details for those who wish to take advantage of the fundamental camera management system that supports image rectification. The former depends on some work I'm not disclosing publicly. The latter is completely backed by the open source Dantalion and Procell projects and should be directly replicable, or I will have a very stern talk with them.

Tango Board (vaguely more technical, and much less formal)

Final Output Vis

-

At the end, you're always trying to pound the back side of an elephant, through a knothole, in 5 minutes.....

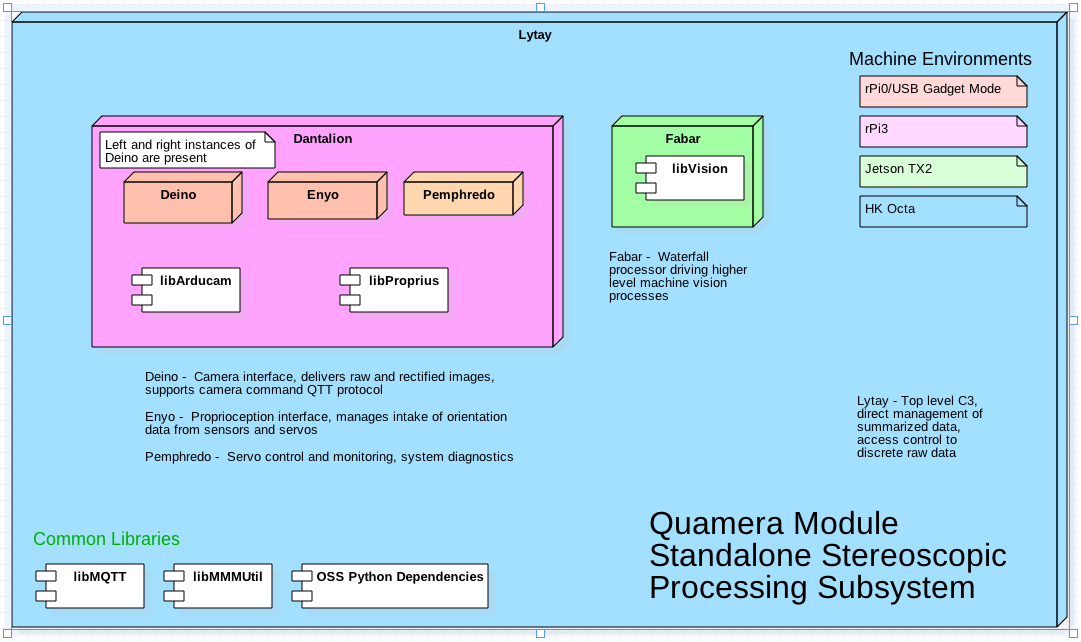

05/03/2018 at 23:10

Generation 2 is much smarter than Gen 1. Also meaner and more stubborn. For the moment, here's a pic of the working system architecture.


-

A *lot* has been going on behind the scenes

03/20/2018 at 00:14

So, we're going to do machine vision with low-cost imagers, and by machine vision we mean integrated stereo depth. Hmmmm. Houston, there's a lot of noise in this data :-( Hence the long hiatus whilst a genetic algorithm was used to solve the problem of exactly which images would make good calibration images. Soon, a description of the new stereo camera assembly will be arriving, and that one should (hopefully) spin up quicker. And it's a hell of a lot smarter than G1.
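As a rough illustration of the idea, and not the algorithm actually used here, the sketch below evolves bitmasks over a set of candidate images, where 1 means "keep this image for calibration". It uses tournament selection, uniform crossover, bit-flip mutation, and elitism. The function name `evolve_selection`, its parameters, and the toy per-image scores are all hypothetical.

In practice the fitness would presumably run a calibration on the chosen subset (for example with OpenCV's `cv2.stereoCalibrate`) and return the negated RMS reprojection error. That step is left out so the example runs on its own.

```python
import random
from typing import Callable, List, Sequence, Tuple

Mask = List[int]  # 1 = image kept for calibration, 0 = image discarded


def evolve_selection(
    n_images: int,
    fitness: Callable[[Sequence[int]], float],
    pop_size: int = 40,
    generations: int = 60,
    mutation_rate: float = 0.05,
    seed: int = 0,
) -> Tuple[Mask, float]:
    """Hypothetical GA: return the best image-selection mask found and its fitness."""
    rng = random.Random(seed)
    pop = [[rng.randint(0, 1) for _ in range(n_images)] for _ in range(pop_size)]
    scored = [(fitness(m), m) for m in pop]
    best_score, best = max(scored, key=lambda s: s[0])

    def tournament(candidates):
        # Pick two random individuals and keep the fitter one.
        a, b = rng.sample(candidates, 2)
        return a[1] if a[0] >= b[0] else b[1]

    for _ in range(generations):
        children = [best[:]]  # elitism: the best mask so far survives unchanged
        while len(children) < pop_size:
            p1, p2 = tournament(scored), tournament(scored)
            # Uniform crossover: each gene comes from either parent with equal odds.
            child = [g1 if rng.random() < 0.5 else g2 for g1, g2 in zip(p1, p2)]
            # Bit-flip mutation.
            child = [1 - g if rng.random() < mutation_rate else g for g in child]
            children.append(child)
        scored = [(fitness(m), m) for m in children]
        gen_score, gen_best = max(scored, key=lambda s: s[0])
        if gen_score > best_score:
            best_score, best = gen_score, gen_best
    return best, best_score


# Toy stand-in for a real calibration score: negative values mimic blurry or noisy images.
scores = [3.0, -2.0, 1.5, -0.5, 2.0, -4.0, 0.8, 1.2, -1.0, 2.5, -3.0, 0.3]


def toy_fitness(mask: Sequence[int]) -> float:
    return sum(s for s, keep in zip(scores, mask) if keep)


best, score = evolve_selection(len(scores), toy_fitness)
```

A GA earns its keep when the fitness of a subset isn't simply the sum of per-image scores, as with real calibration error. The toy fitness here is only there so the search can be checked.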

Here's a poster showing the results of a successful stereo-fusion-to-depth integration.

Here's a movie showing the genetic algorithm solving image selection for calibration.

-

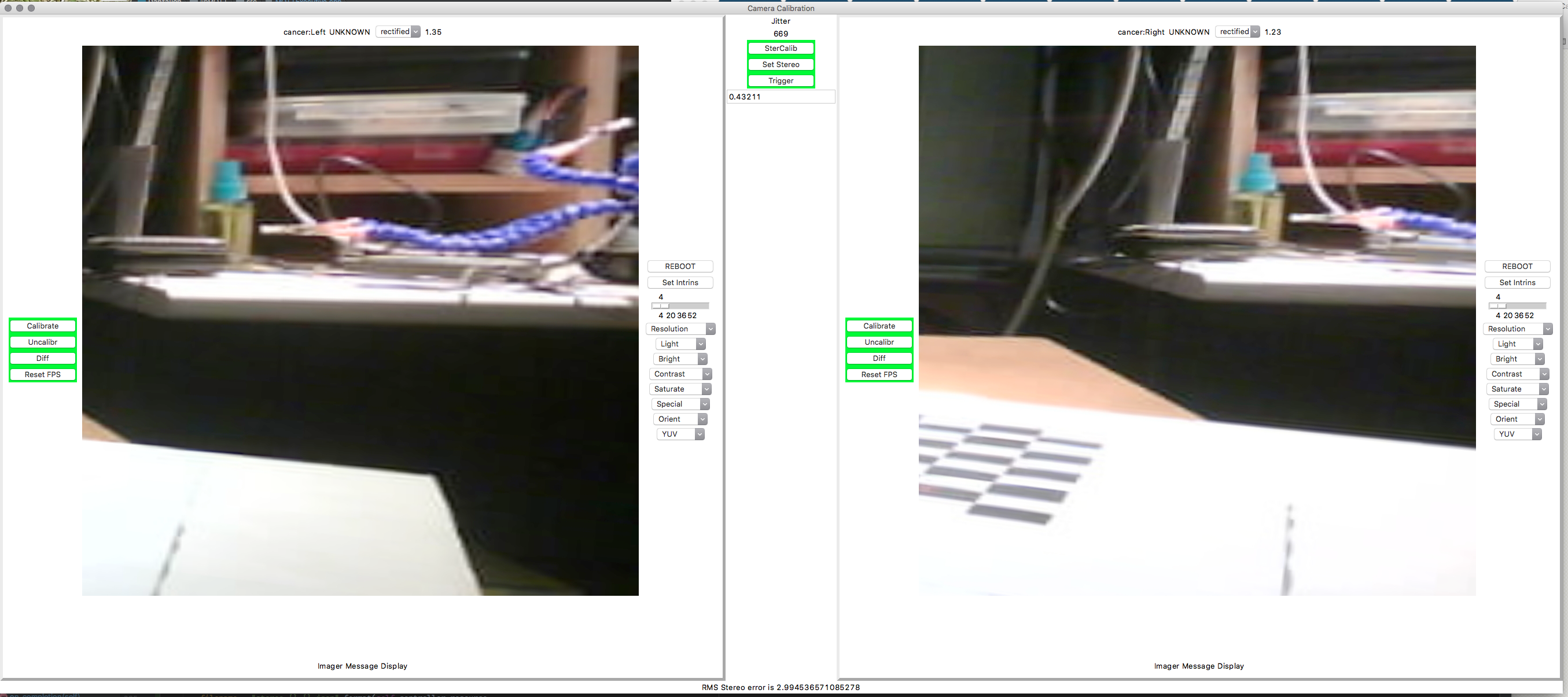

Stereo rectification trying to exit development

01/28/2018 at 01:28

I may have been quiet here, but much has been going on on the GitHub side of the house. Refining my previous claims: the second generation will consist of an as-yet undetermined number of stereo camera pairs on elephant trunks. Before worrying about grander things, though, one needs a working stereo camera.

The patient is still on the table, wires dangle out everywhere, and organs keep getting swapped in and out. That said, here's a zoomed picture of the system's current ability to rectify a stereo image pair so that depth can be measured successfully.


This shows a high-delta visual region AFTER (mostly) successful stereo rectification (sliding the images around so everything aligns just right). This is the sweet spot where the system has its strongest depth-measurement ability. Because the images have been aligned vertically, equivalent points sit on the same row in both images, so distance can be measured from the horizontal offset between them.
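To make "measuring the horizontal offset" concrete, here is a minimal sum-of-absolute-differences (SAD) block match along one rectified scanline. It assumes the rows are already rectified and uses synthetic pixel values. The function `scanline_disparity` is hypothetical. Production code would more likely use OpenCV's `cv2.StereoBM_create` or `cv2.StereoSGBM_create` over the whole image.

```python
from typing import Sequence


def scanline_disparity(left_row: Sequence[int], right_row: Sequence[int],
                       x: int, max_disp: int, half_win: int = 2) -> int:
    """Return the disparity d (pixels) whose window in right_row best matches
    the window centred on x in left_row, using sum of absolute differences."""
    if x - half_win < 0 or x + half_win >= len(left_row):
        raise ValueError("window around x falls outside the row")
    best_d, best_cost = 0, float("inf")
    for d in range(max_disp + 1):
        if x - d - half_win < 0:
            break  # candidate window would run off the left edge of the right row
        cost = sum(abs(left_row[x + i] - right_row[x - d + i])
                   for i in range(-half_win, half_win + 1))
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


# Synthetic rectified rows: the right camera sees the same feature 4 pixels further left.
left_row = [0] * 40
left_row[20:25] = [10, 50, 90, 50, 10]
right_row = left_row[4:] + [0] * 4
print(scanline_disparity(left_row, right_row, x=22, max_disp=8))  # 4
```

Rectification is what makes this cheap: the search only has to slide along one row instead of over the whole image.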

In short, the depth map derived from these pictures is how you keep your robot from bumping into things. :-)
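Turning disparity into distance uses the standard relation for a rectified pair, Z = f * B / d:

- f is the focal length in pixels.
- B is the baseline between the cameras in meters.
- d is the disparity in pixels.

The sketch below shows that conversion plus a simple "is anything too close?" check a robot might use. The function names and camera parameters (700 px focal length, 6 cm baseline) are hypothetical and made up for illustration.

```python
import math
from typing import Iterable


def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Depth in meters for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        return math.inf  # no measurable offset: unmatched or effectively at infinity
    return focal_px * baseline_m / disparity_px


def too_close(disparities: Iterable[float], focal_px: float,
              baseline_m: float, stop_distance_m: float) -> bool:
    """True if any measured point is nearer than stop_distance_m (hypothetical safety check)."""
    return any(depth_from_disparity(d, focal_px, baseline_m) < stop_distance_m
               for d in disparities)


# Hypothetical camera: 700 px focal length, 6 cm baseline.
print(depth_from_disparity(42, 700, 0.06))         # about 1.0 m
print(too_close([2, 5, 84], 700, 0.06, 0.75))      # True: 84 px of disparity is 0.5 m away
```

Because depth is inversely proportional to disparity, large disparities (near objects) are measured most precisely, which suits obstacle avoidance.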

All the unique code to do this is on GitHub, or will be soon. I'm working on documenting the plethora of library and external system dependencies, and on making an OS image available in case I missed anything. None of this is a secret, and much that is good comes from others, so if I can help light the way, it's in my interests too.

-

Baseline G2 Beaglebone Control Library

11/18/2017 at 14:54

OK, Generation 2 has officially commenced. A number of the prior libraries on GitHub have been archived, as they are now superseded by Dantalion, the integrated first-level executive that runs on the BeagleBones and directly controls the cameras. The most significant change is that each BeagleBone now controls a single pair of cameras, and OpenCV is now part of the operating environment at this level. You can find Dantalion here.

-

Noise, noise, and damn noise

10/14/2017 at 13:56

After a month and a half of solid misery, I'm having to change the design. The original design had 4 cameras operating on a shared SPI bus, meaning each Beagle controlled 8 cameras over two buses. Unfortunately, this led to an analog nightmare of noisy circuits and corrupted data. I'm a software guy. I did what I could and decided a strategy change was better than falling down the rabbit hole of analog EMI and other such mental anguish. Right now, I am firmly of the opinion that Kirchhoff can go straight to hell. :-)

So, the design is being reworked to drive a single camera off each SPI bus, i.e. there will be 12 BeagleBones driving 24 cameras. This is actually a good thing. Indications from the downstream processors were that they'd be a lot happier if more and better work was done upstream on the images, and we now have enough spare cycles to dump OpenCV onto the bones.

So stay tuned: next-generation rings are being ordered and built out. Indications are that real-time video will be available at lower resolutions, and that the maximum resolution of 1600x1200 still comes in at a solid 3 frames/sec.
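A quick back-of-the-envelope check of the camera and frame-rate numbers in this entry, as a sketch with stated assumptions:

- The helper names are hypothetical.
- The comparison assumes the old 4-cameras-per-bus layout would have driven the same 24 cameras.
- Rates are in raw pixels, since the transfer format and any compression aren't stated here.

```python
import math


def bones_needed(total_cameras: int, cameras_per_bus: int, buses_per_bone: int = 2) -> int:
    """Boards required when each board has buses_per_bone SPI buses."""
    return math.ceil(total_cameras / (cameras_per_bus * buses_per_bone))


def pixel_rate(width: int, height: int, fps: float) -> float:
    """Raw pixels per second for one camera stream (format/compression not modeled)."""
    return width * height * fps


old_bones = bones_needed(24, cameras_per_bus=4)  # 8 cameras per board -> 3 boards
new_bones = bones_needed(24, cameras_per_bus=1)  # 2 cameras per board -> 12 boards
per_camera = pixel_rate(1600, 1200, 3)           # 5,760,000 pixels/s at max resolution
per_bone = per_camera * 2                        # 11,520,000 pixels/s for a camera pair
print(old_bones, new_bones, per_camera, per_bone)
```

The trade is four times as many boards in exchange for each board handling only a single camera pair, which is what frees up the cycles for OpenCV.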

Oh, and I would have tried Raspberry Pi Zeros, but apparently you can only order one at a time. I elected to completely remove Raspberry Pis from all aspects of the project, because I think the vendors are playing games. I can get bones, I trust bones, and that leads to a nice uniform environment.

-

I'll be damned -- it's running

08/24/2017 at 19:19

Meet B.O.B., the robotic computer eyeball.

-

A bit of history....

08/24/2017 at 16:15

In getting ready for the exhibition at the Dover Mini Maker Faire, I rounded up the various suspicious ancestors in the Quamera project, along with Bad Old Bob (B.O.B.). Here's a short video on how an afternoon's fun turned into a complete monster.

-

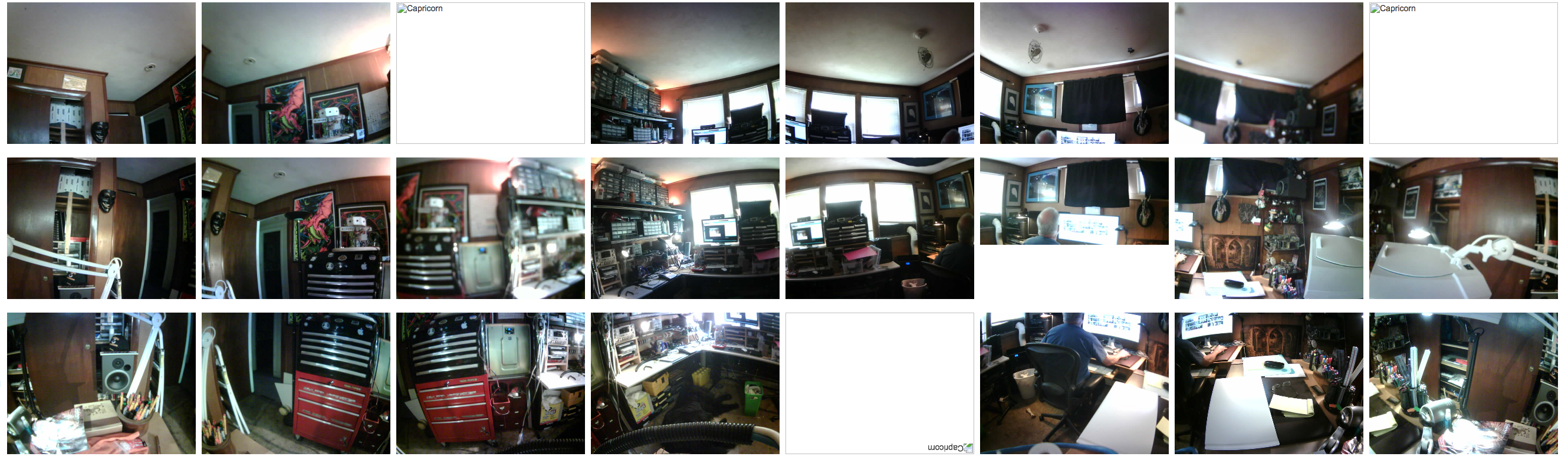

We're up, sorta, barely...... fingers crossed

08/23/2017 at 19:37

OK, we have 3 problem-child cameras, and focusing everything is quite the pain, but we've got a monitoring grid up. Of course, if that's all I wanted to do, I'd just buy a spherical lens.


Mark Mullin
