-

cie_2000 color diff

08/19/2015 at 17:55

Color difference (CIE2000) is now implemented and gives a nice, easy number for how close two "objects" are in color. No more rough RGB approximation.

Tonight I will put the code on GitHub so anyone can look at it. It is still rough and needs lots of tweaking (formatting, comments, faster routines, etc.).
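For anyone curious what a CIE2000 color difference involves, here is a minimal sketch of the standard CIEDE2000 formula (ΔE00). This is not the project's code; it assumes both colors have already been converted from camera RGB to CIELAB (L*, a*, b*), and the weighting factors `k_l`, `k_c`, `k_h` default to 1 as in the standard.

```python
import math


def ciede2000(lab1, lab2, k_l=1.0, k_c=1.0, k_h=1.0):
    """CIEDE2000 color difference between two CIELAB colors (L*, a*, b*)."""
    L1, a1, b1 = lab1
    L2, a2, b2 = lab2

    # Step 1: stretch a* for low-chroma colors (the G factor), then recompute chroma and hue.
    c_bar = (math.hypot(a1, b1) + math.hypot(a2, b2)) / 2.0
    g = 0.5 * (1.0 - math.sqrt(c_bar**7 / (c_bar**7 + 25.0**7)))
    a1p, a2p = (1.0 + g) * a1, (1.0 + g) * a2
    c1p, c2p = math.hypot(a1p, b1), math.hypot(a2p, b2)
    # Hue angles in degrees in [0, 360); 0 for achromatic colors.
    h1p = math.degrees(math.atan2(b1, a1p)) % 360.0 if c1p else 0.0
    h2p = math.degrees(math.atan2(b2, a2p)) % 360.0 if c2p else 0.0

    # Step 2: lightness, chroma and hue differences.
    delta_l = L2 - L1
    delta_c = c2p - c1p
    if c1p * c2p == 0.0:
        delta_h_angle = 0.0
    else:
        delta_h_angle = h2p - h1p
        if delta_h_angle > 180.0:
            delta_h_angle -= 360.0
        elif delta_h_angle < -180.0:
            delta_h_angle += 360.0
    delta_hue = 2.0 * math.sqrt(c1p * c2p) * math.sin(math.radians(delta_h_angle) / 2.0)

    # Step 3: mean values, weighting functions and the blue-region rotation term.
    l_bar = (L1 + L2) / 2.0
    c_bar_p = (c1p + c2p) / 2.0
    if c1p * c2p == 0.0:
        h_bar = h1p + h2p
    elif abs(h1p - h2p) <= 180.0:
        h_bar = (h1p + h2p) / 2.0
    elif h1p + h2p < 360.0:
        h_bar = (h1p + h2p + 360.0) / 2.0
    else:
        h_bar = (h1p + h2p - 360.0) / 2.0

    t = (1.0
         - 0.17 * math.cos(math.radians(h_bar - 30.0))
         + 0.24 * math.cos(math.radians(2.0 * h_bar))
         + 0.32 * math.cos(math.radians(3.0 * h_bar + 6.0))
         - 0.20 * math.cos(math.radians(4.0 * h_bar - 63.0)))
    d_theta = 30.0 * math.exp(-(((h_bar - 275.0) / 25.0) ** 2))
    r_c = 2.0 * math.sqrt(c_bar_p**7 / (c_bar_p**7 + 25.0**7))
    s_l = 1.0 + 0.015 * (l_bar - 50.0) ** 2 / math.sqrt(20.0 + (l_bar - 50.0) ** 2)
    s_c = 1.0 + 0.045 * c_bar_p
    s_h = 1.0 + 0.015 * c_bar_p * t
    r_t = -math.sin(math.radians(2.0 * d_theta)) * r_c

    term_l = delta_l / (k_l * s_l)
    term_c = delta_c / (k_c * s_c)
    term_h = delta_hue / (k_h * s_h)
    return math.sqrt(term_l**2 + term_c**2 + term_h**2 + r_t * term_c * term_h)
```

As a sanity check, the first pair in the published CIEDE2000 test data, (50, 2.6772, -79.7751) vs (50, 0, -82.7485), is listed at ΔE00 ≈ 2.0425, and this function should land on that value. Smaller numbers mean closer colors; a ΔE00 around 1 is roughly the smallest difference a person can notice.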

-

Entry complete! (I hope)

08/17/2015 at 18:04

LICENSING:

OFFICIAL 2 MIN VIDEO:

HIGH LEVEL DOC:

https://github.com/TheOriginalBDM/Lazy-Cleaner-9000/blob/master/docs/clean_sweep_diagrams.pdf

-

Found what I was after

08/14/2015 at 15:05

Another quick search for inverse kinematics on the Raspberry Pi turned up a project for the meArm that does exactly what I was thinking about. I have forked a copy to my account in case I need to make changes. Once this is implemented, I can assign X, Y, Z (fake Z) coordinates to the trash and have the arm navigate to the specified location. That will complete Phase 1 of the project: detecting trash and picking it up. The next milestone will be getting the robot to drive to trash that is out of reach, pick it up, and navigate to the next piece until the area looks clean.
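To show the idea behind turning an X, Y, Z target into joint angles, here is a minimal inverse kinematics sketch. It is not the forked meArm code: it assumes a simplified arm with a base that rotates about the vertical axis and a two-link (upper arm + forearm) chain whose shoulder pivot sits at the origin, with hypothetical 80 mm links and no joint offsets or servo limits.

```python
import math


def solve_ik(x, y, z, upper=80.0, fore=80.0):
    """Return (base, shoulder, elbow) angles in radians for a target (x, y, z).

    base: rotation about the vertical axis.
    shoulder: upper-arm angle above horizontal.
    elbow: forearm angle relative to the upper arm (0 = straight).
    Link lengths (mm) are hypothetical placeholders.
    """
    base = math.atan2(y, x)
    r = math.hypot(x, y)        # horizontal reach from the base axis
    d2 = r * r + z * z          # squared distance from shoulder to target
    cos_elbow = (d2 - upper**2 - fore**2) / (2.0 * upper * fore)  # law of cosines
    if not -1.0 <= cos_elbow <= 1.0:
        raise ValueError("target out of reach")
    elbow = -math.acos(cos_elbow)  # negative angle picks the "elbow up" solution
    shoulder = math.atan2(z, r) - math.atan2(fore * math.sin(elbow),
                                             upper + fore * math.cos(elbow))
    return base, shoulder, elbow


def forward(base, shoulder, elbow, upper=80.0, fore=80.0):
    """Forward kinematics, used to check that solve_ik lands on the target."""
    r = upper * math.cos(shoulder) + fore * math.cos(shoulder + elbow)
    z = upper * math.sin(shoulder) + fore * math.sin(shoulder + elbow)
    return r * math.cos(base), r * math.sin(base), z
```

Feeding the angles back through `forward` should reproduce the target, which is a handy test before sending anything to real servos.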

-

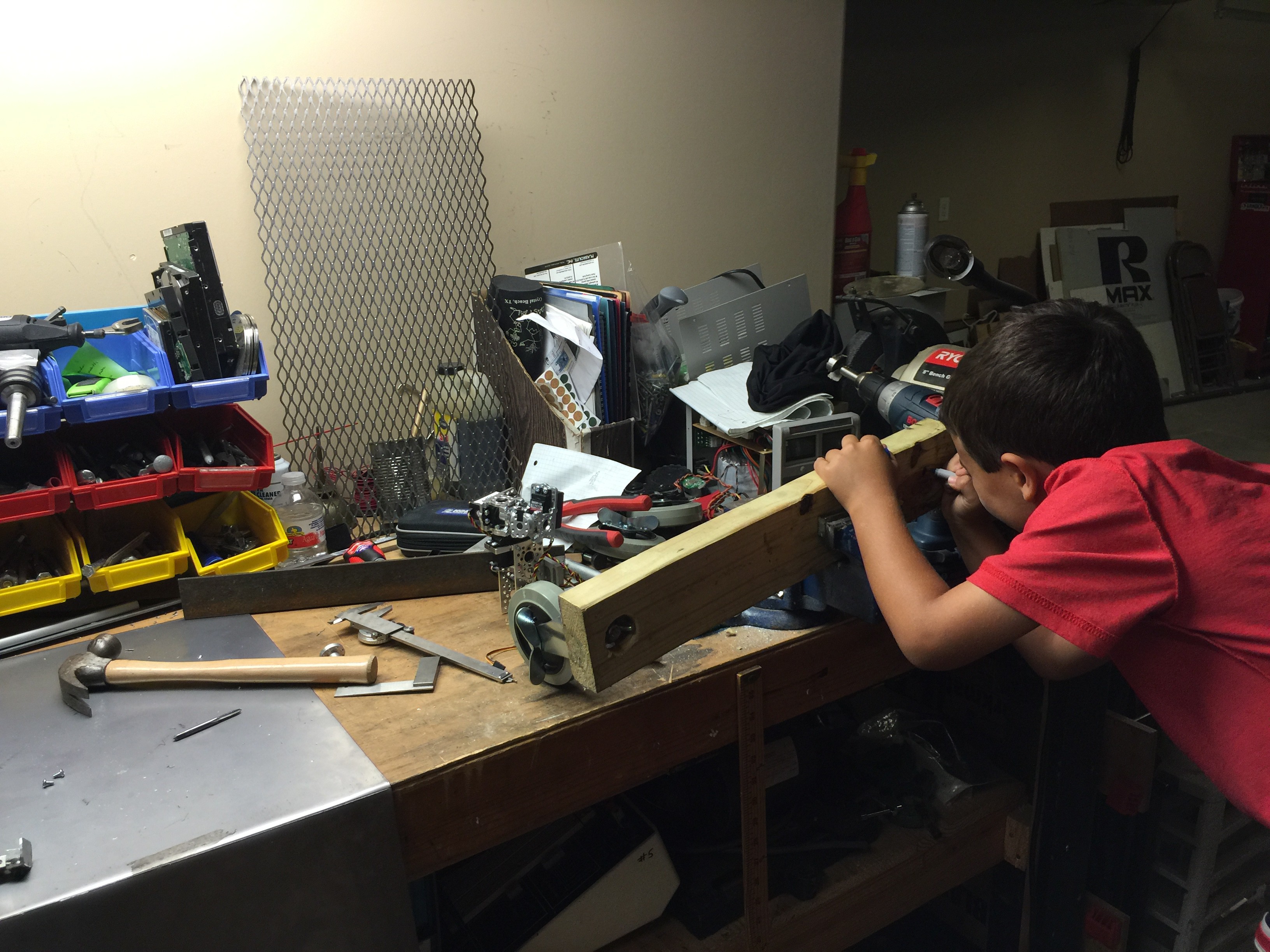

Back at it again

08/02/2015 at 03:38

Big dog and I were finally able to get into the garage and finish building the arm. It went smoother than I expected, even though it was at least 100 degrees in the garage. Enjoy the pics. Yes, big dog left me 10 minutes into the build to do his own thing.

-

Machine learning arm?

06/27/2015 at 15:16

I did a little research yesterday on the best way to have the robot pick up objects and place them into "the mouth". I'll look into this more this weekend at night, but I may need to install PyBrain on the Pi to help; it seems like one way to do this. I'll also post more details on the mouth: I have some Roomba wheels that will pull objects in :).

I am new to robotics, so I have a theory (which is probably wrong). Do I really need depth? Can it be simulated with a single camera? Is everything just a coordinate, since all distances are limited by the arm's reach (move the arm to the approximate coordinate, measure the distance to the object with a sensor on the arm, finish the grasp)?
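One way the single-camera theory can work: if every piece of trash is assumed to lie on the floor (Z = 0), a pixel can be mapped straight to floor coordinates without any depth sensor. The sketch below assumes a pinhole camera pointing straight down from a known height; the height, focal length (in pixels) and image center are hypothetical values for a 640x480 image, and a tilted camera would need a full homography instead.

```python
def pixel_to_floor(u, v, cam_height_mm=300.0, focal_px=500.0, cx=320.0, cy=240.0):
    """Map pixel (u, v) to floor coordinates in mm for a downward-facing pinhole camera.

    Assumes the object lies on the floor plane. By similar triangles, one pixel
    at the floor covers cam_height_mm / focal_px millimetres.
    The returned y follows the image axis (increasing downward in the image).
    """
    x = (u - cx) * cam_height_mm / focal_px
    y = (v - cy) * cam_height_mm / focal_px
    return x, y
```

With these numbers, a pixel 100 px right of center maps to 60 mm right of the point under the camera. Those floor coordinates could then go to the arm as the "approximate coordinate", with the on-arm distance sensor handling the final approach.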

Just in case, I'm keeping these links here for later.

Kinect stuff:

http://openkinect.org/wiki/Main_Page

Machine Learning Links:

http://www.cs.ubc.ca/research/flann/

-

Claw in action.

06/17/2015 at 01:18

Tomorrow I will add the elbow, and hopefully by Friday I will have a shoulder working. That would complete the arms, since the other side is just built in reverse.

-

Name change and greenlets

06/15/2015 at 18:11

The family said to change the name back to my Lazy 9000 series. Done! Moving on: I roughly mapped out the flow of data, and much remains to be done. I am looking for a Python actor framework to use, since so many moving parts and checks are involved. For now I am going to use gevent to keep moving ahead. The servo controller is also out for delivery today.
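Here is a rough idea of the actor pattern, where each part of the robot owns a mailbox and handles one message at a time. This sketch swaps in the standard library's `asyncio` (cooperative tasks, similar in spirit to gevent greenlets) so it runs without extra installs; the `VisionActor` and `ArmActor` classes are hypothetical stand-ins, and the arm only records targets instead of moving servos.

```python
import asyncio


class Actor:
    """Minimal actor: owns a mailbox queue and processes one message at a time."""

    def __init__(self, name):
        self.name = name
        self.mailbox = asyncio.Queue()

    async def send(self, msg):
        await self.mailbox.put(msg)

    async def run(self):
        while True:
            msg = await self.mailbox.get()
            if msg is None:  # sentinel: shut this actor down
                break
            await self.handle(msg)

    async def handle(self, msg):
        raise NotImplementedError


class ArmActor(Actor):
    def __init__(self):
        super().__init__("arm")
        self.picked = []

    async def handle(self, target):
        await asyncio.sleep(0)  # yield control, as a real servo move would
        self.picked.append(target)  # placeholder for "move to target and grasp"


class VisionActor(Actor):
    def __init__(self, arm):
        super().__init__("vision")
        self.arm = arm

    async def handle(self, detections):
        for target in detections:  # forward each detected object to the arm
            await self.arm.send(target)


async def main(frames):
    arm = ArmActor()
    vision = VisionActor(arm)
    vision_task = asyncio.create_task(vision.run())
    arm_task = asyncio.create_task(arm.run())
    for detections in frames:
        await vision.send(detections)
    await vision.send(None)
    await vision_task  # every target is now queued for the arm
    await arm.send(None)
    await arm_task
    return arm.picked
```

For example, `asyncio.run(main([[(10, 20)], [(30, 40), (50, 60)]]))` returns the three targets in the order vision reported them. Because each actor only talks through its queue, swapping the placeholder for real camera or servo code does not touch the other actors.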

-

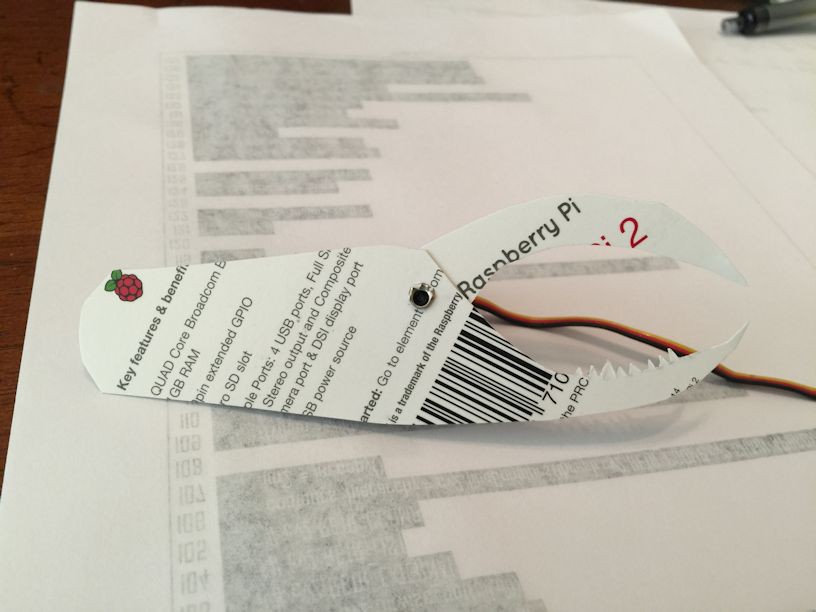

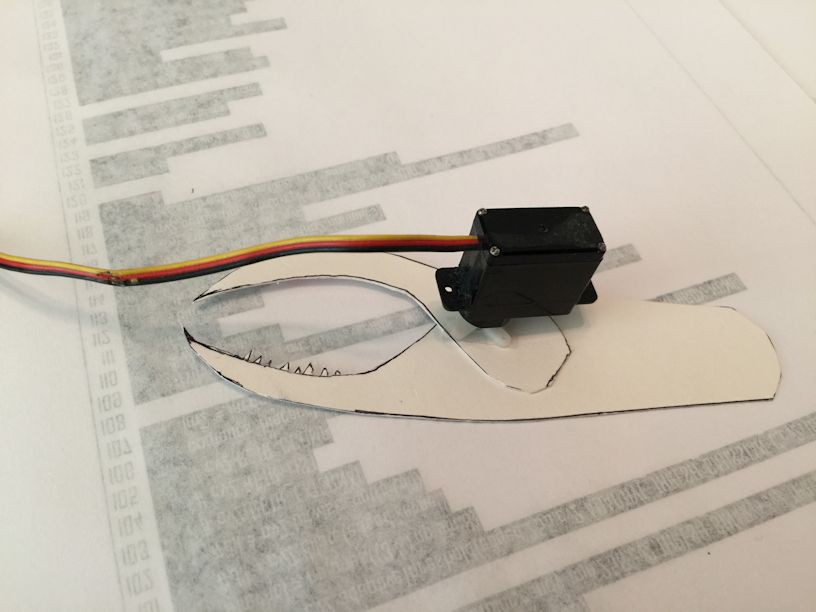

Paper arm test

06/12/2015 at 19:43

Built a quick prototype of the claw that will grasp objects. Once I have a working demo, I will move to aluminum. PWM code is coming this weekend.
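Until the real PWM code is posted, here is a sketch of the usual hobby-servo math: the angle sets a pulse width within a 50 Hz frame, and a PWM controller needs that width as a tick count. It assumes a 1000 to 2000 µs pulse range over 0 to 180 degrees and a 12-bit counter (as on a PCA9685-style board); the servo and controller used here are not confirmed, so calibrate the endpoints against the actual hardware.

```python
def angle_to_pulse_us(angle_deg, min_us=1000.0, max_us=2000.0, max_angle=180.0):
    """Linearly map a servo angle to a pulse width in microseconds (assumed range)."""
    angle_deg = max(0.0, min(max_angle, angle_deg))  # clamp to the servo's travel
    return min_us + (max_us - min_us) * angle_deg / max_angle


def pulse_us_to_ticks(pulse_us, freq_hz=50.0, resolution_bits=12):
    """Convert a pulse width to the 'on' tick count of an N-bit PWM counter."""
    period_us = 1_000_000.0 / freq_hz        # 20 000 us per frame at 50 Hz
    ticks_per_period = 2 ** resolution_bits  # 4096 ticks for a 12-bit counter
    return round(pulse_us / period_us * ticks_per_period)
```

So 90 degrees becomes a 1500 µs pulse, which is about 307 of the 4096 ticks in each 20 ms frame.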


-

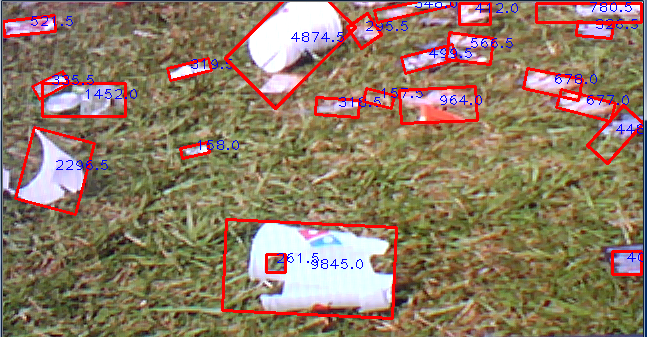

trashbot or poopbot, you decide...

06/06/2015 at 02:15

Uploaded a few pics for Mike, but the garbage-eating beast is still in hiding. Just think of a crab without legs and some other features; that is my prototype bot. The images show easy identification of objects in non-earth tones, with the area shown as the number on each rectangle. Per my wife's suggestion, if this does not make it to the next round, it will become a pooper scooper for our 3 dogs, which crap about 50 lbs (or so it seems) a day in the backyard.
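The detection described above boils down to: mask the pixels that are not earth tones, group touching pixels into blobs, and report a bounding rectangle plus an area for each. Below is a dependency-free sketch of that idea; the "non-earth tone" rule (saturation at least 0.5 and brightness at least 0.4) is a hypothetical stand-in, and on the Pi this would more likely be done with OpenCV (`cv2.inRange`, `cv2.findContours`, `cv2.boundingRect`).

```python
import colorsys
from collections import deque


def is_vivid(rgb, min_sat=0.5, min_val=0.4):
    """Hypothetical 'non-earth tone' test: bright, saturated pixels pass."""
    r, g, b = (c / 255.0 for c in rgb)
    _, s, v = colorsys.rgb_to_hsv(r, g, b)
    return s >= min_sat and v >= min_val


def find_blobs(image, min_area=1):
    """Return (x, y, w, h, area) for each 4-connected region of vivid pixels.

    image is a list of rows, each row a list of (R, G, B) tuples in 0-255.
    area counts the pixels in the region, not the rectangle.
    """
    height, width = len(image), len(image[0])
    mask = [[is_vivid(px) for px in row] for row in image]
    seen = [[False] * width for _ in range(height)]
    blobs = []
    for y0 in range(height):
        for x0 in range(width):
            if not mask[y0][x0] or seen[y0][x0]:
                continue
            # Breadth-first flood fill from this seed pixel.
            queue = deque([(x0, y0)])
            seen[y0][x0] = True
            x_min, x_max, y_min, y_max, area = x0, x0, y0, y0, 0
            while queue:
                x, y = queue.popleft()
                area += 1
                x_min, x_max = min(x_min, x), max(x_max, x)
                y_min, y_max = min(y_min, y), max(y_max, y)
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if 0 <= nx < width and 0 <= ny < height and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            if area >= min_area:
                blobs.append((x_min, y_min, x_max - x_min + 1, y_max - y_min + 1, area))
    return blobs
```

Raising `min_area` is a cheap way to ignore specks of noise before any rectangle gets drawn.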


-

Pi time

05/29/2015 at 00:31

Hardware has arrived. Time to upload what I have worked on and see what happens.

Lazy Cleaner 9000

A robot that autonomously cleans an area of human litter for easier disposal.

BDM