If anyone is able and willing to help with this, I'm happy to take advice, even if it's about totally different approaches. No aspect of this (apart from the overall goal) is carved in stone.

About the HDEV experiment

Here's a link to the live stream and some info:

https://eol.jsc.nasa.gov/ESRS/HDEV/

It's supposed to include some tracking as well, but that doesn't show up in my browser. I like to open

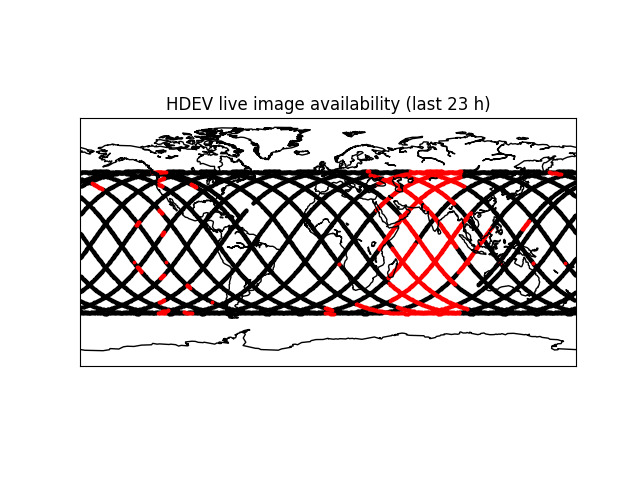

along with the video to get an overall feeling for the station's orbit and where live video is available.

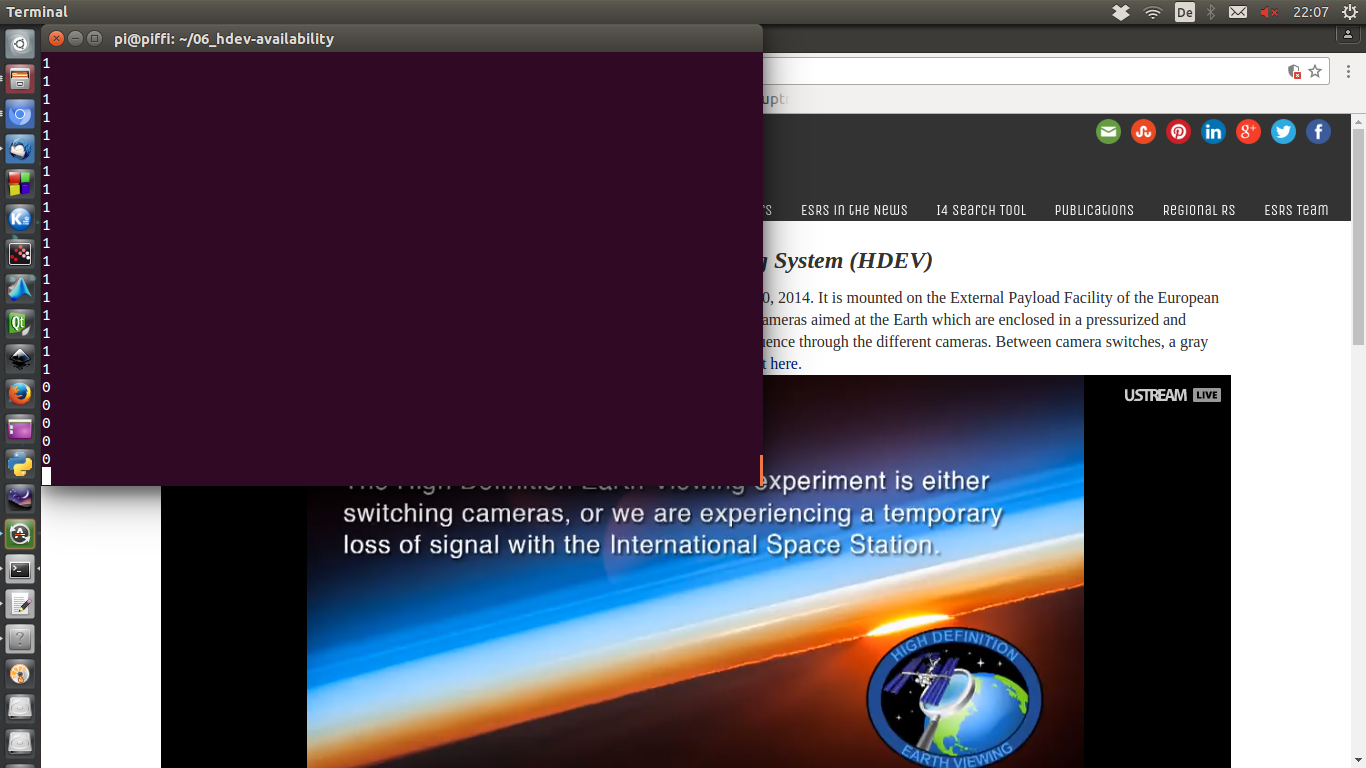

If you watch the live stream for a while you'll notice that it doesn't always show live images. Sometimes a still image is shown, stating that there's currently no live video available:

That's boring, and a wall-mounted display could, for example, show something else during that time. That's the initial thought behind this project.

What I'm aiming for

Well, my long-term goal is to have a small screen in my living room that shows either the HDEV stream, when an actual live image is available, or some other interesting stuff that I haven't specified yet.

Current state

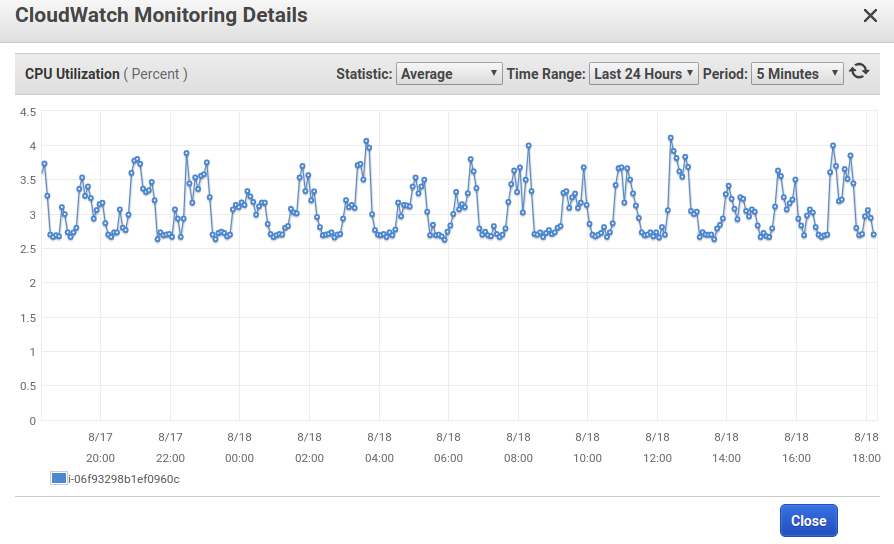

I created an Amazon Web Services account and use one of the free plans to run a Linux system. The first step is to grab an image periodically, which is done by the following command:

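    streamlink -O http://ustream.tv/channel/iss-hdev-payload worst | ffmpeg -i - -r $fps -f image2 -update 1 out.jpg

- streamlink receives the stream in the lowest available quality ("worst"), writes it to stdout (-O) and thereby passes it on to ffmpeg

- ffmpeg converts it to JPEG frames at $fps frames per second (that variable is set in a script; it is 0.2, i.e. one frame every 5 seconds) and keeps overwriting out.jpg (-update 1)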

The whole command is wrapped in an endless loop, so it automatically restarts whenever a problem causes streamlink or ffmpeg to abort, crash, or otherwise exit.
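For illustration, here is a Python sketch of the same restart loop. It is not the script used here. The fps value of 0.2 comes from the test output further down, while the 5-second restart delay, the `max_runs` parameter (there only so the loop can be tested) and the function names are hypothetical.

```python
from __future__ import annotations

import subprocess
import time

STREAM_URL = "http://ustream.tv/channel/iss-hdev-payload"


def build_pipeline(fps: float, out_path: str = "out.jpg") -> tuple[list[str], list[str]]:
    """Return the argv lists for the streamlink | ffmpeg pipeline shown above."""
    streamlink = ["streamlink", "-O", STREAM_URL, "worst"]
    ffmpeg = ["ffmpeg", "-i", "-", "-r", str(fps),
              "-f", "image2", "-update", "1", out_path]
    return streamlink, ffmpeg


def run_pipeline_once(fps: float) -> int:
    """Run streamlink piped into ffmpeg until ffmpeg exits; return its exit code."""
    sl_cmd, ff_cmd = build_pipeline(fps)
    sl = subprocess.Popen(sl_cmd, stdout=subprocess.PIPE)
    try:
        ff = subprocess.Popen(ff_cmd, stdin=sl.stdout)
        sl.stdout.close()  # so streamlink gets SIGPIPE if ffmpeg dies first
        return ff.wait()
    finally:
        sl.kill()  # make sure no orphaned streamlink process is left behind
        sl.wait()


def run_forever(run_once, restart_delay: float = 5.0, max_runs: int | None = None) -> int:
    """Call run_once over and over, like an endless shell loop."""
    runs = 0
    while max_runs is None or runs < max_runs:
        try:
            run_once()
        except OSError as exc:  # e.g. streamlink or ffmpeg not installed
            print(f"pipeline failed to start: {exc}")
        runs += 1
        time.sleep(restart_delay)  # avoid hammering the stream server
    return runs
```

Usage would be `run_forever(lambda: run_pipeline_once(0.2))`. Building argv lists instead of a shell string avoids quoting issues, and killing streamlink in `finally` keeps a crashed ffmpeg from leaving it running.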

The second step is to analyze out.jpg whenever a new image has been grabbed and written. This revolves around inotify: a Python script uses pyinotify to get a notification whenever out.jpg is modified. The image is then read and compared against the "no live image available" still image using structural similarity (SSIM). I use this algorithm because I found a simple example here: https://www.pyimagesearch.com/2017/06/19/image-difference-with-opencv-and-python/ and it seems to work OK!
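To show what the comparison boils down to, here is a simplified, dependency-free sketch. Note that it computes SSIM once over the whole image (a "global" SSIM), whereas the example linked above uses scikit-image, which averages SSIM over small sliding windows. The 0.9 threshold and the function names are hypothetical; in practice the pixels would come from something like `cv2.imread(path, cv2.IMREAD_GRAYSCALE)`, flattened to a list.

```python
from __future__ import annotations

from typing import Sequence

# Hypothetical threshold: frames at least this similar to the reference
# "no live video" still image are treated as "not live".
STILL_IMAGE_THRESHOLD = 0.9


def global_ssim(x: Sequence[float], y: Sequence[float], data_range: float = 255.0) -> float:
    """SSIM computed once over the whole image (no sliding window).

    x and y are the flattened grayscale pixel values of two equally sized images.
    """
    if len(x) != len(y) or len(x) == 0:
        raise ValueError("images must be non-empty and the same size")
    n = len(x)
    # Stabilising constants from the SSIM definition (K1 = 0.01, K2 = 0.03).
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    dev_x = [v - mean_x for v in x]
    dev_y = [v - mean_y for v in y]
    var_x = sum(d * d for d in dev_x) / n
    var_y = sum(d * d for d in dev_y) / n
    cov_xy = sum(a * b for a, b in zip(dev_x, dev_y)) / n
    numerator = (2 * mean_x * mean_y + c1) * (2 * cov_xy + c2)
    denominator = (mean_x * mean_x + mean_y * mean_y + c1) * (var_x + var_y + c2)
    return numerator / denominator


def is_live(frame: Sequence[float], still_image: Sequence[float],
            threshold: float = STILL_IMAGE_THRESHOLD) -> bool:
    """True if the grabbed frame does NOT look like the reference still image."""
    return global_ssim(frame, still_image) < threshold
```

A score close to 1 means "this is the still image again"; anything clearly below the threshold is taken as live video. SSIM is more tolerant of compression noise than a plain pixel difference, which matters for a low-quality stream.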

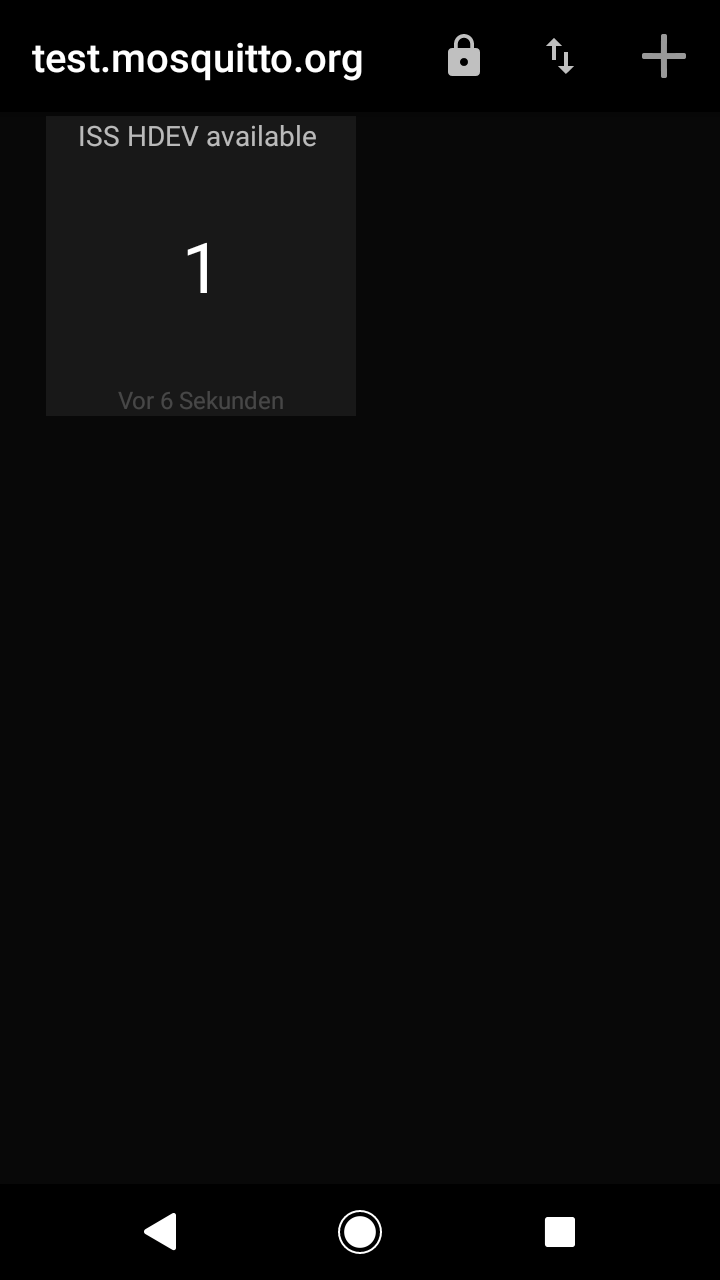

The result of this comparison is a bool value that is published via MQTT:

- server: test.mosquitto.org

- topic: iss-hdev-availability/available-bool

- message: a single character, either "0" or "1"
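The payload format above is easy to produce and consume. The sketch below assumes the third-party paho-mqtt package and its `publish.single` / `subscribe.simple` helpers, which this log does not name, so treat the library choice and the function names as assumptions. The paho imports are done inside the functions so the encoding helpers work without it.

```python
from __future__ import annotations

BROKER = "test.mosquitto.org"
TOPIC = "iss-hdev-availability/available-bool"


def encode_availability(available: bool) -> str:
    """Single-character payload: "1" = live video, "0" = still image."""
    return "1" if available else "0"


def decode_availability(payload: bytes) -> bool:
    """Parse a received payload back into a bool, rejecting anything else."""
    text = payload.decode("ascii").strip()
    if text not in ("0", "1"):
        raise ValueError(f"unexpected payload: {payload!r}")
    return text == "1"


def publish_availability(available: bool) -> None:
    # Assumes paho-mqtt is installed (pip install paho-mqtt).
    import paho.mqtt.publish as publish
    publish.single(TOPIC, encode_availability(available), hostname=BROKER)


def wait_for_availability() -> bool:
    # Blocks until one message arrives on the topic, then decodes it.
    import paho.mqtt.subscribe as subscribe
    msg = subscribe.simple(TOPIC, hostname=BROKER)
    return decode_availability(msg.payload)
```

A display client would loop over `wait_for_availability()` and switch between the stream and other content. Since test.mosquitto.org is a public test broker, anyone can publish to the same topic, so the strict decoding is a cheap safeguard.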

Making use of it

The first vaguely useful thing I did was to combine live image availability data with the ISS's current location to draw an availability map. The corresponding project log is here:
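The linked log describes how the map was actually made. As a rough, hypothetical illustration of the idea, availability samples tagged with the station's latitude and longitude can be binned into a coarse grid. The 10° cell size, the sample tuple layout and the function name are all assumptions, and where the ISS position comes from is left open here.

```python
from __future__ import annotations

import math
from collections import defaultdict
from typing import Iterable


def bin_availability(samples: Iterable[tuple[float, float, bool]],
                     cell_deg: float = 10.0) -> dict[tuple[int, int], float]:
    """Map (lat_cell, lon_cell) -> fraction of samples in that cell with live video.

    Each sample is (latitude, longitude, available) in degrees.
    """
    counts: dict[tuple[int, int], list[int]] = defaultdict(lambda: [0, 0])
    for lat, lon, available in samples:
        # floor() keeps negative coordinates in the correct cell (e.g. -0.1 -> -1).
        cell = (math.floor(lat / cell_deg), math.floor(lon / cell_deg))
        counts[cell][1] += 1  # total samples in this cell
        if available:
            counts[cell][0] += 1  # live samples in this cell
    return {cell: live / total for cell, (live, total) in counts.items()}
```

Each cell's fraction can then be drawn as a colour on a world map; with enough orbits the regions without live video should stand out.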

https://hackaday.io/project/14729-iss-hdev-image-availability/log/151304-availability-map

Christoph


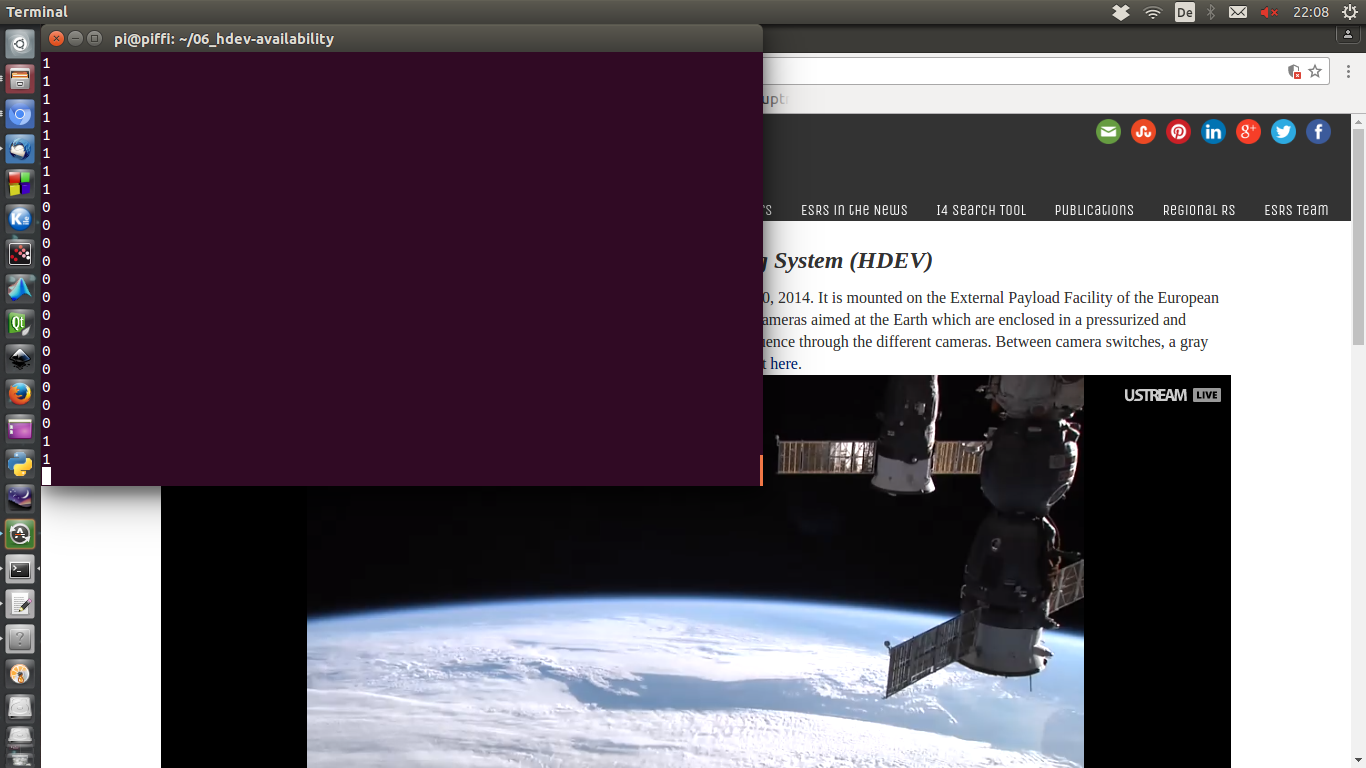

The output shows five zeros in a row, meaning that, at 0.2 fps, the still image has already been there for about 25 seconds. After a while the signal is back and we get this:


The script spits out 1s again after about 13 x 5 = 65 seconds. Great! In the meantime, the ISS passed by somewhere over Argentina or the South Atlantic.
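The arithmetic above (number of consecutive "0" samples times 5 seconds per sample) can be automated. Here is a small sketch, assuming the published payloads have been collected into a string such as "1110000011". The function name is hypothetical, and the result is approximate, since each sample only says what the stream showed at that moment.

```python
from __future__ import annotations


def outage_durations(samples: str, fps: float) -> list[float]:
    """Approximate length in seconds of each run of consecutive "0" samples."""
    seconds_per_sample = 1.0 / fps
    durations: list[float] = []
    run = 0
    for sample in samples:
        if sample == "0":
            run += 1
            continue
        if run:  # a run of zeros just ended
            durations.append(run * seconds_per_sample)
        run = 0
    if run:  # the stream may still be "not live" at the end of the log
        durations.append(run * seconds_per_sample)
    return durations
```

With the values from this log, five zeros at 0.2 fps give about 25 seconds, and a run of 13 zeros gives about 65 seconds.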