-

Escam Q8 notes

01/31/2019 at 21:01

The answer is no. It doesn't provide a USB webcam interface as sometimes claimed by the internet. It has no USB device port & doesn't even allow access to the SD card over USB. We can assume the venerable USB webcam is dead & everything is now using TCP/IP.

It only provides a delayed H.264 stream over wifi. It claims to support a standardized protocol for security cams called ONVIF. More confusingly, IP cams have shifted to being marketed only as security cams, rather than a replacement for the venerable USB webcam.

Source code for a Linux viewer is on https://gitlab.com/caspermeijn/onvifviewer but it's a hideous nest of dependencies just to extract a simple URL.

Neither could iSpy for Windows or Onvifer for Android access it.

After thousands of worthless answers on stackoverflow, it finally streamed to ffmpeg using

ffmpeg -i rtsp://admin:password@10.0.2.78/onvif1 -vcodec copy /tmp/test.mp4
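For scripting the same capture, a minimal Python sketch could assemble the command above. The helper names `rtsp_url` & `ffmpeg_copy_command` are hypothetical. The host, credentials, & `onvif1` path are the ones from these notes & will differ on another camera.

```python
import shlex
from urllib.parse import quote


def rtsp_url(host, user, password, path="onvif1"):
    """Build the RTSP URL form that worked in these notes."""
    # Percent-encode the credentials in case they contain '@' or ':'.
    return f"rtsp://{quote(user, safe='')}:{quote(password, safe='')}@{host}/{path}"


def ffmpeg_copy_command(url, output):
    """Argument list equivalent to: ffmpeg -i URL -vcodec copy OUTPUT"""
    return ["ffmpeg", "-i", url, "-vcodec", "copy", output]


if __name__ == "__main__":
    cmd = ffmpeg_copy_command(rtsp_url("10.0.2.78", "admin", "password"), "/tmp/test.mp4")
    # Print the shell form. To record, pass cmd to subprocess.run(cmd, check=True).
    print(shlex.join(cmd))
```

Passing the arguments as a list avoids shell quoting problems if the password contains special characters.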

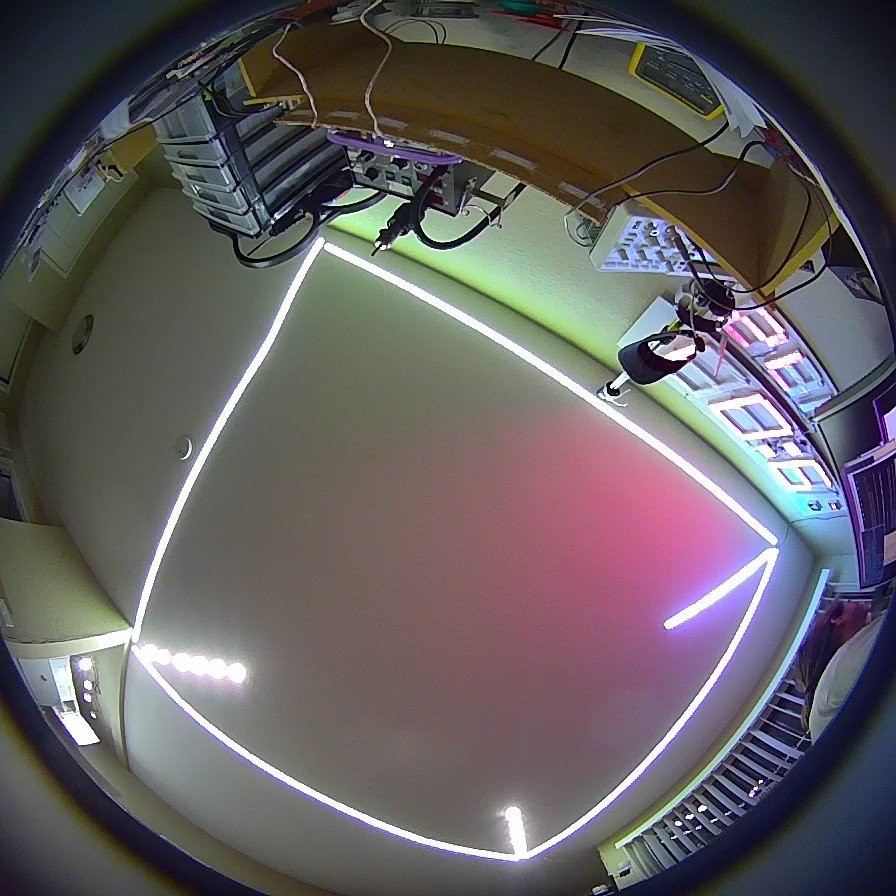

The resolution was 896x896.

It requires an access point to stream video. It initializes as an access point only to allow the user to configure it to use an external access point. Then, it converts to a station for the rest of its life.

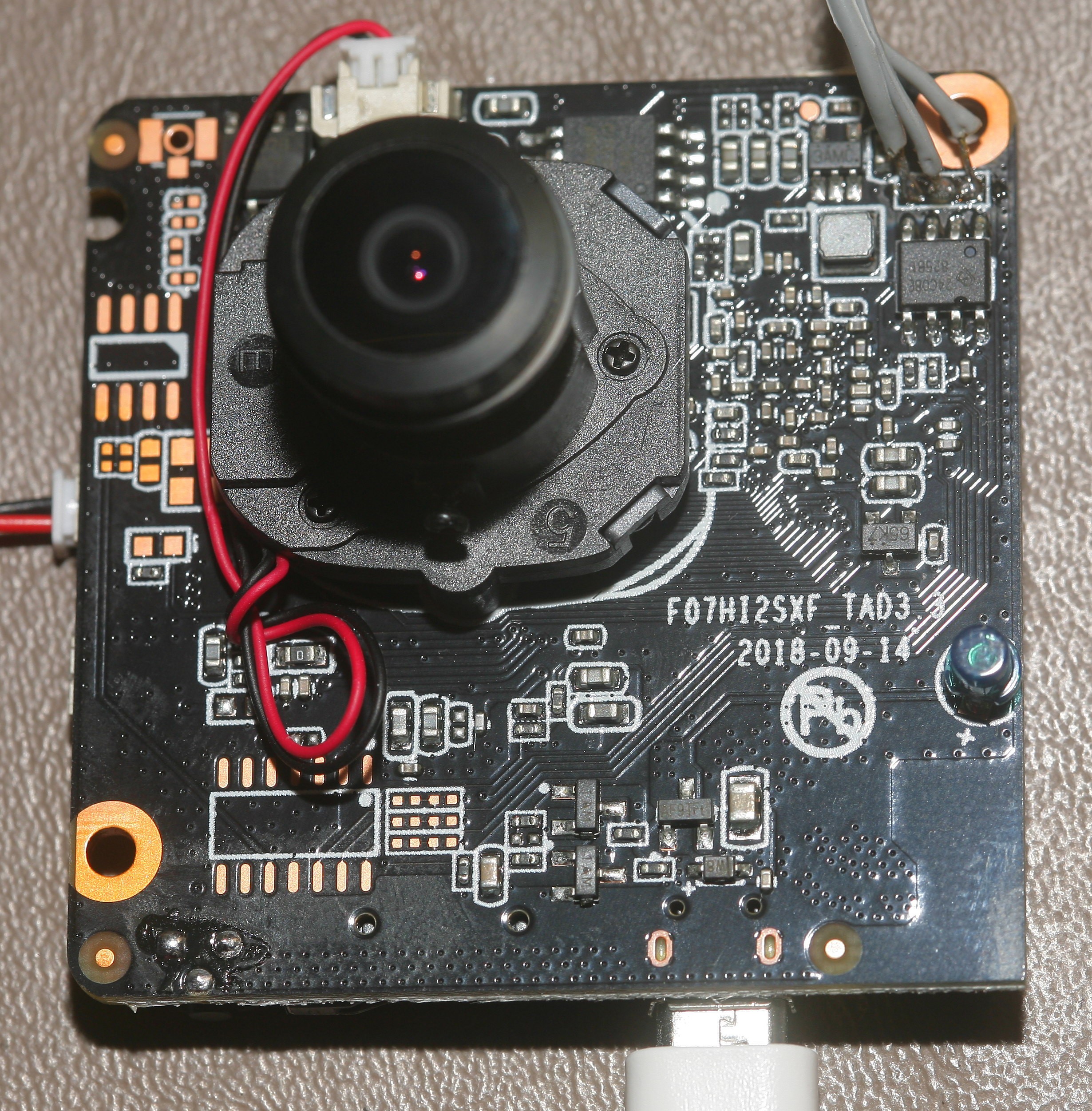

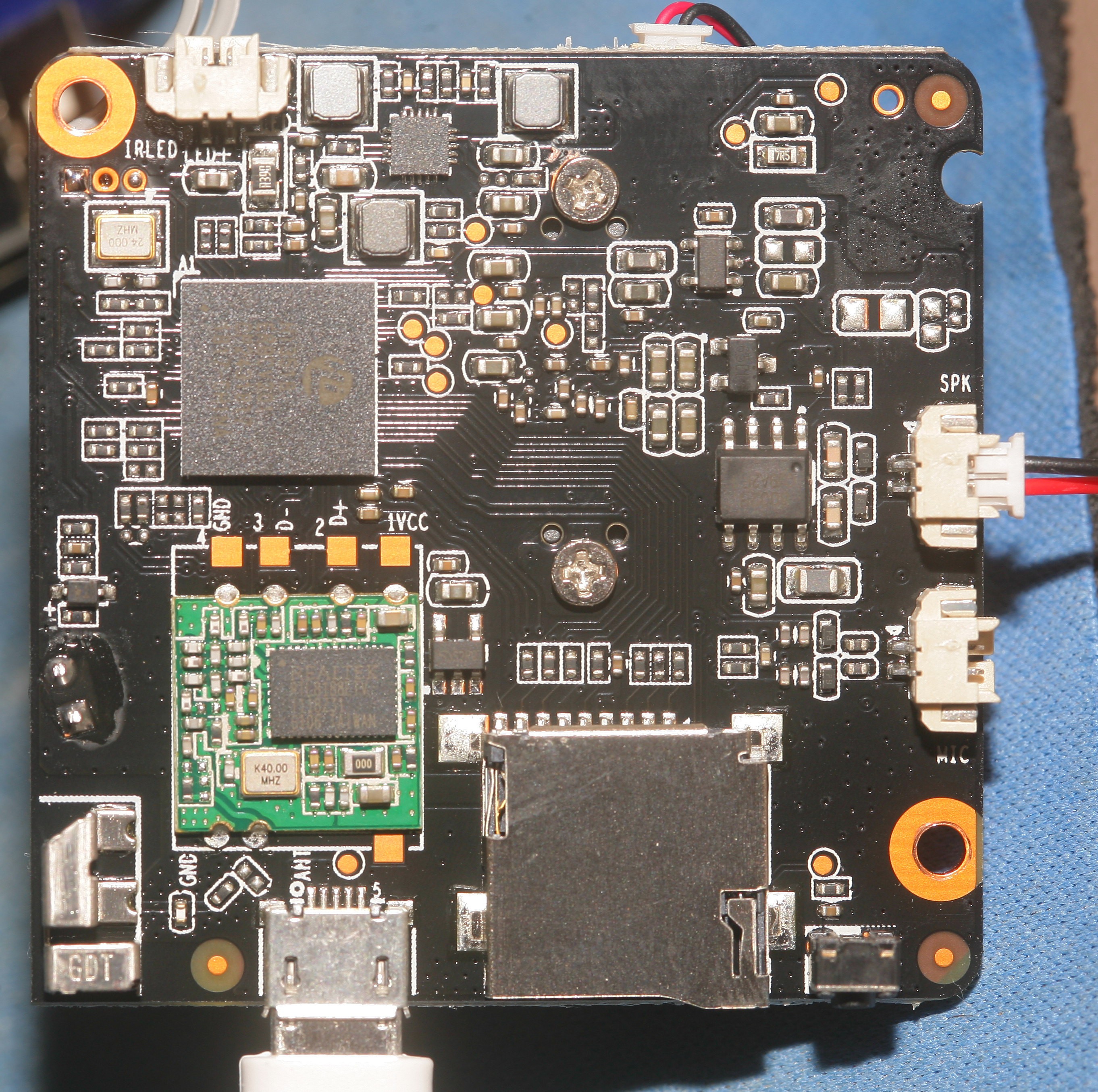

Once the ugly case was removed, a much smaller Hi3518 board was revealed, with RTL8188 USB wifi dongle.

The serial port for debugging was easily spotted. Alas, the internet doesn't actually develop with it. They only use the debugging console to run ifconfig or list directories. The debugging console outputs diagnostics for RTSP requests, the IP address, & the MAC address, which are very useful for troubleshooting.

There are some notes on using the debugging console.

https://felipe.astroza.cl/hacking-hi3518-based-ip-camera/

https://www.gadgetvictims.com/2014/05/outdoor-ip-camera-amovision-am-q6320.html

http://mark4h.blogspot.com/2017/07/hi3518-camera-module-part-1-replacing.html

The datasheet for the SOC:

https://cdn.hackaday.io/files/19356828127104/Hi3518%20DataSheet.pdf

The latency with H.264 & the app varies from 1-10 seconds. The chip physically supports JPEG for no latency, but it's not exposed on the app. The macbook can't provide a wifi access point unless it's hard wired to the internet. A raspberry pi would have to be used as a portable access point. It's not as bad as it sounds, considering the only alternative would require connecting the USB host on the Hi3518 to a USB bridge before connecting it to the macbook.

-

Lion core workout with automated counting

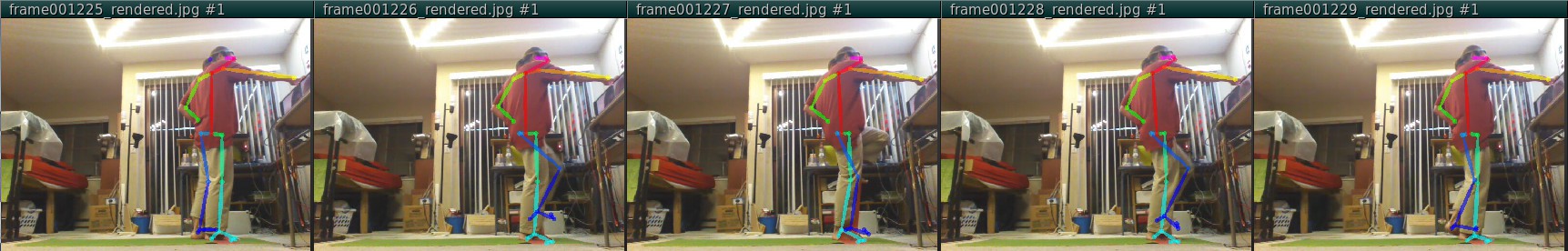

01/19/2019 at 09:46

The complete workout with all the body positions shows how reliable that counter eventually became. No more lost counts. More attention spent on the TV. Just can't use wifi for anything else.

Made the GPU server just send coordinates for the tablet to overlay on the video. This was within the bandwidth limitations, but it still occasionally got behind by a few reps. It might be transient wifi usage. The next step would be reducing the JPEG quality from 90. Greyscale doesn't save any bandwidth. There's also omitting the quantization tables, but by then, you're better off using pipes with a native x264 on the tablet.
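To show why coordinates fit in the bandwidth where JPEG readbacks did not, here is a minimal sketch of a compact coordinate message. The wire format (a 2 byte point count followed by x,y as unsigned 16 bit pixels) & the function names are assumptions for illustration, not the format countreps actually uses.

```python
import struct


def pack_keypoints(points):
    """Pack [(x, y), ...] pixel coordinates into a little-endian message.

    Hypothetical format: uint16 count, then count pairs of uint16 x, y.
    Coordinates must be in 0..65535 or struct.pack raises struct.error.
    """
    flat = [int(round(v)) for xy in points for v in xy]
    return struct.pack(f"<H{len(flat)}H", len(points), *flat)


def unpack_keypoints(data):
    """Inverse of pack_keypoints, for the tablet side."""
    (count,) = struct.unpack_from("<H", data, 0)
    flat = struct.unpack_from(f"<{2 * count}H", data, 2)
    return list(zip(flat[0::2], flat[1::2]))


if __name__ == "__main__":
    # 25 points per body is an assumption. The size is 2 + 4 bytes per point.
    message = pack_keypoints([(i, i) for i in range(25)])
    print(len(message), "bytes per frame")
```

A message like this is about a hundred bytes per body, so the remaining wifi load is the JPEG frames going to the server.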

It never ceases to amaze lions how that algorithm tracks body poses with all the extra noise in the image, even though it has many errors. It's like a favorite toy as a kid, but a toy consisting of an intelligence.

-

Countreps connectivity

01/13/2019 at 21:30

The pose classifier eventually was bulletproof. This system had already dramatically improved the workout despite all its glitches. You know it's a game changer because no-one watches the video. Just like marriage & politics, the most mundane gadgets no-one cares about are the most revolutionary while the most exciting gadgets everyone wants are the least revolutionary.

The mane problem became connectivity. Lockups lasting several minutes & periods of many dropped frames continued. It seemed surmountable compared to the machine vision. Finally did the long awaited router upgrade.

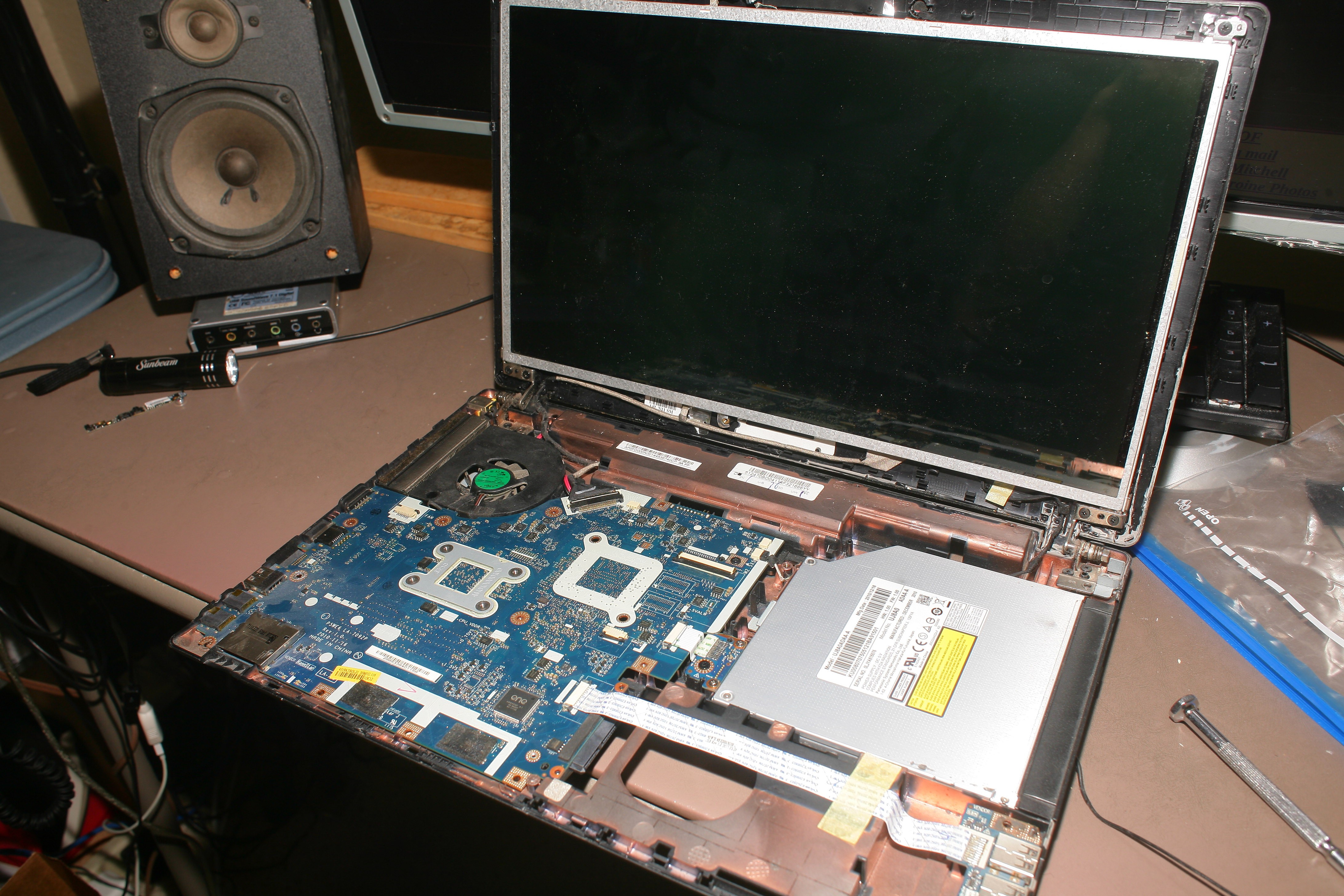

Its days of computing ended 6 years ago. It is now the apartment complex's most powerful router. Seem to recall this was the laptop lions used while dating ... the single women. Lions wrote firmware on it while the single women watched TV or ate their doritos.

The other problem was for the pose classifier to work, it can't drop any frames. The tablet needs to capture only as many frames as the GPU can handle & the GPU needs to process all of them. Relying on the GPU to drop frames caused it to drop a lot of poses when a large number of frames got buffered & received in the time span of processing a single frame.
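One way to keep the tablet from capturing more than the GPU can handle is to allow only a fixed number of frames in flight. The sketch below is an assumption about how such a gate could look, not the protocol countreps implements. `FrameGate` & its methods are hypothetical names.

```python
class FrameGate:
    """Allow at most max_in_flight frames to be awaiting results."""

    def __init__(self, max_in_flight=1):
        self.max_in_flight = max_in_flight
        self.in_flight = 0
        self.skipped = 0

    def try_send(self):
        """Call when the camera has a new frame. True means send it."""
        if self.in_flight >= self.max_in_flight:
            # Skipped at the source, so nothing piles up in network buffers.
            self.skipped += 1
            return False
        self.in_flight += 1
        return True

    def on_result(self):
        """Call when the server reports a frame as processed."""
        if self.in_flight > 0:
            self.in_flight -= 1


if __name__ == "__main__":
    gate = FrameGate()
    print(gate.try_send(), gate.try_send())  # second frame waits for a result
    gate.on_result()
    print(gate.try_send())
```

With this arrangement every frame that reaches the GPU gets processed, & the capture rate follows the processing rate instead of outrunning it.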

The hunt for connectivity eventually led to wifi not being fast enough to send JPEG frames both ways, at 6fps. The reason skype can send video 2 ways is H.264, which entails a native build of x264 so isn't worth it for this program. Wifi is also bad at multiplexing 2 way traffic. Slightly better results came from synchronizing the frame readbacks, but only after eliminating them completely did the rep counts have no delay & the lost connections go away. It counted instantaneously, even on the ancient broken tablet, but it didn't look as good without the openpose outlines. There's also bluetooth for frame readbacks or drawing vectors & statistics on the tablet, if H.264 is that bad.

-

Using spherecams with openpose

01/12/2019 at 00:41

The trick with the tracker is to use a spherecam on USB, but opencv can't deterministically select the same camera, scale the input, & display in fullscreen mode on a mac.

There is running a network client on virtualbox to handle all the I/O or fixing opencv. Running a network client in a virtual machine to fix the real machine is ridiculous, so the decision was made to fix opencv, uninstall the brew version of opencv & recompile it, caffe, & openpose again from scratch.

brew remove opencv

Openpose on the mac can't link to a custom opencv. You have to hack some cmake files or just link it manually.

Discovered openpose depends heavily on the aspect ratio & lens projection. It works much faster but detects fewer poses on a 1x1 aspect ratio. It works much slower & detects more poses on a 16x9 crop of the center. It works much slower on 512x512 & much faster on 480x480. Pillarboxing & stretching to fit the entire projection in 16x9 don't work.
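The 16x9 crop of the center is simple arithmetic. A minimal sketch follows, with a hypothetical helper name & an 896x896 frame used purely as example numbers. The returned rectangle would be applied to the frame before handing it to openpose.

```python
def center_crop(width, height, aspect_w, aspect_h):
    """Return (x, y, w, h) of the largest centered crop with the given aspect ratio."""
    if width * aspect_h > height * aspect_w:
        # Source is wider than the target: keep the full height, trim the sides.
        crop_h = height
        crop_w = height * aspect_w // aspect_h
    else:
        # Source is taller than or equal to the target: keep the full width.
        crop_w = width
        crop_h = width * aspect_h // aspect_w
    return ((width - crop_w) // 2, (height - crop_h) // 2, crop_w, crop_h)


if __name__ == "__main__":
    print(center_crop(896, 896, 16, 9))
```

The rows discarded above & below the crop are the parts of the projection that go undetected.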

Spherical projections are as good as equirectangular projections. The trick is detecting directly above & below with the 16x9 cropping. It's not going to detect someone looking down on it like the Facebook portal, but the current application doesn't need to see directly above & below. There's also scanning each frame in 2 passes.

Despite paying a fortune to ship an ESCAM Q8 from China in 7 days, it was put on the same boat as the free shipping, 2 weeks ago.

-

GTX 1050 returns

01/11/2019 at 09:01

An edited video provided the 1st documentation of a computer counting reps. The reality was it only made 3.5fps instead of 4.5 & this wasn't fast enough to detect hip flexes.

It detected mane hair instead of arms.

Then it froze for several minutes before briefly hitting 4.5, then dropping back to 3.5. The only explanation was thermal throttling.

If only obsolete high end graphics cards could drop to $30 like they did 20 years ago. Tried running it again on the GTX 1050 with the framerate at 5fps. This actually allowed it to play 1920x1080 video with an acceptable amount of stuttering, while also processing machine vision. They actually have some form of task switching on the GPU, but PCI express is a terrible way to transfer data.

As it has always been with GPU computing, we have 32GB of mane memory on the fastest bus being used for nothing while all the computations are done on 2GB of graphics memory on the PCI bus.

-

Tracker issues

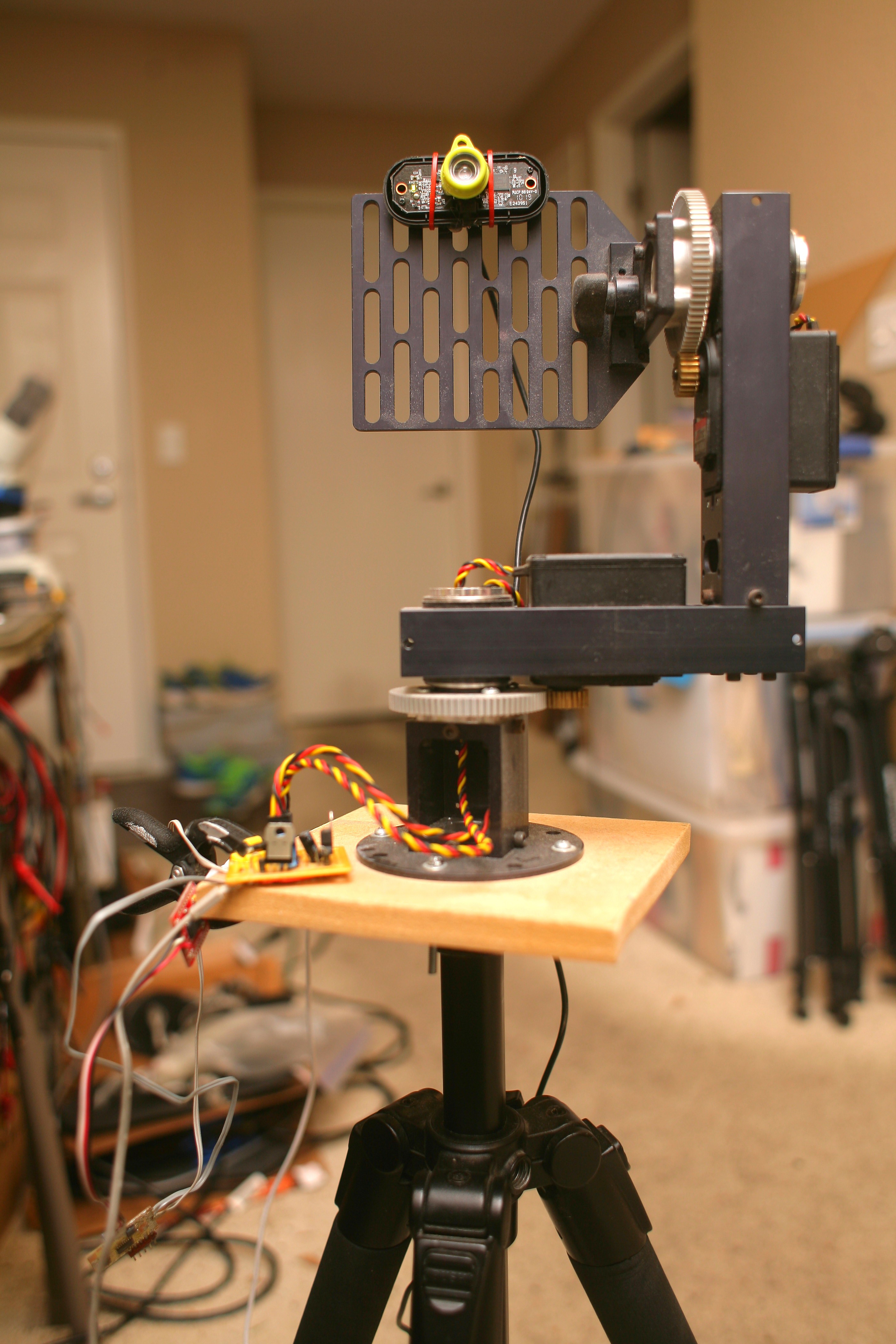

01/08/2019 at 06:39

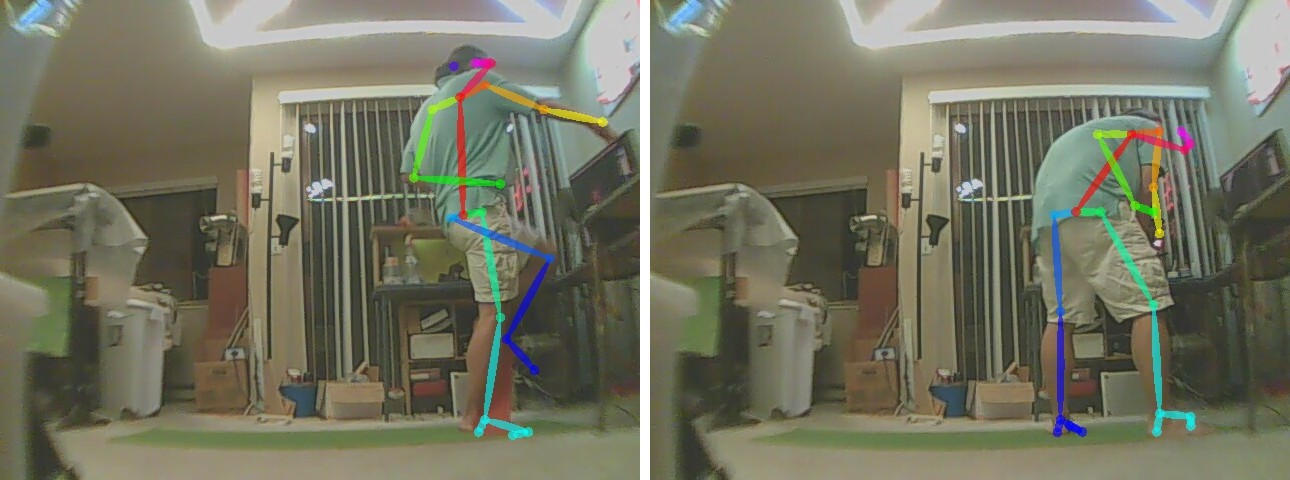

It began again with a webcam on the pan/tilt module. A wide angle lens was required for any tracking at 4fps to have a chance. 6 years on, the lion kingdom was still determined to find a use for that pan/tilt mount.

Tracking seemed easy: define a bounding box around the lion & point the camera at the bounding box. What really happened was body parts always came in & out of view because of lighting, glitches, movement out of frame, & movement too close to the camera. The system could not keep the head in view, even with a higher framerate, & would just center on a paw or a leg. This quickly showed tracking based only on the photographed field of view to be an unsolvable problem.
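The naive tracker described above can be sketched in a few lines, which also shows why it fails. It assumes keypoints arrive as (x, y, confidence) tuples with undetected points at zero confidence. The function names & the confidence threshold are hypothetical.

```python
def bounding_box(keypoints, min_confidence=0.1):
    """Bounding box (x1, y1, x2, y2) of the detected keypoints, or None."""
    pts = [(x, y) for x, y, c in keypoints if c >= min_confidence]
    if not pts:
        return None
    xs, ys = zip(*pts)
    return (min(xs), min(ys), max(xs), max(ys))


def pan_tilt_error(box, frame_w, frame_h):
    """Offset of the box center from the frame center, each axis in -1..1."""
    cx = (box[0] + box[2]) / 2
    cy = (box[1] + box[3]) / 2
    return ((cx - frame_w / 2) / (frame_w / 2),
            (cy - frame_h / 2) / (frame_h / 2))


if __name__ == "__main__":
    # Only a paw is detected: the box collapses onto it & the camera chases it.
    paw_only = [(350, 280, 0.9), (0, 0, 0.0), (0, 0, 0.0)]
    print(pan_tilt_error(bounding_box(paw_only), 400, 300))
```

When only one limb is detected, the error points at that limb rather than the body, which is the behavior described above.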

The Facebook Portal uses a spherical cam to track a subject & zooms in based on a bounding box of the entire body. It uses face detection to optionally track individual humans while using YOLO to detect the entire body. It probably doesn't have enough clockcycles for full pose detection.

Amazingly, for a corporation which once promoted live streaming & vlogging, it has no support for content creation or recording video locally. It just makes phone calls. It's either a new height of corporate dysfunction or only private phone calls are worth monetizing.

Any tracking camera needs a spherical cam as the tracker & to just live with the parallax error. Live video from a spherical cam is a premium feature. The Gear 360 requires you to use a Samsung phone to get live video. Newer cameras charge $300 for live output. There is exactly 1 hemisphere webcam which streams over USB, the ESCAM Q8 on a 1 month boat ride from China.

-

Lighting errors from 8 years ago

01/08/2019 at 06:39

With the sun facing the camera, the lion kingdom remembered abandoning quad copters flown by machine vision 6 years ago because of the extremely perfect lighting conditions required. Today's 12 figure valuations are based on the exact same level of errors. There hasn't been any improvement at all in the lighting requirements.

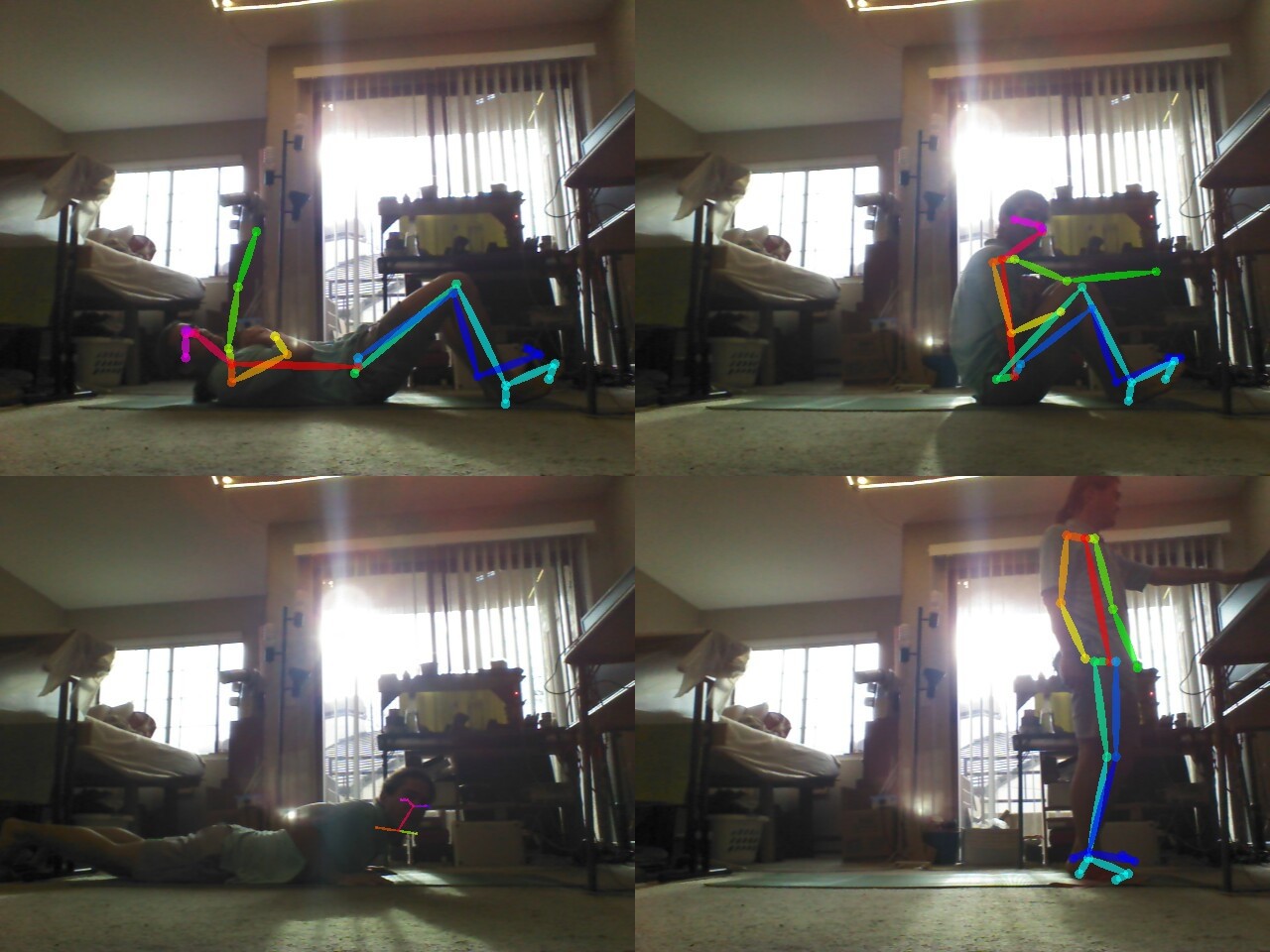

Finding corner cases would continue once a night, when the lighting was suitable & the lion was fresh. Detecting hip flexes with the rear leg remaned the hardest problem. There was requiring the ankles to be different heights, requiring the longest leg to be longer than the back, debouncing reps. The framerate was never high enough to throw out false images, but it could throw out reps which happened too close together. The profile camera view was never solved. You always have to face the camera. Squats, situps, & pushups were all bulletproof, however.
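Throwing out reps which happen too close together amounts to a debounce on the rep events. A minimal sketch, assuming the classifier reports each candidate rep with a timestamp in seconds. The class name & the minimum interval are hypothetical & would need tuning per exercise.

```python
class RepDebouncer:
    """Reject candidate reps that follow an accepted rep too closely."""

    def __init__(self, min_interval):
        self.min_interval = min_interval  # seconds between accepted reps
        self.last_time = None
        self.count = 0

    def report(self, timestamp):
        """Return True & bump the count if the rep is accepted."""
        if (self.last_time is not None
                and timestamp - self.last_time < self.min_interval):
            return False
        self.last_time = timestamp
        self.count += 1
        return True


if __name__ == "__main__":
    debouncer = RepDebouncer(min_interval=1.0)
    for t in (0.0, 0.3, 1.2, 1.5, 2.5):
        print(t, debouncer.report(t))
```

The interval is measured from the last accepted rep, so a burst of false detections only ever counts once.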

As much as openpose accomplishes with video alone, 3D pose estimation is really needed. It's such a practical need, it's hard to believe Kinects never became ubiquitous rep counters & camera trackers, except for the cost.

As long as you manually select the exercise, it can be quite scalable. One could imagine ordinary humans setting it up in a gym & using it to count a wide variety of exercises.

-

Machine vision blues

01/06/2019 at 00:32

The machine vision blues continue just like 2013, but at 10,000,000x the valuations. It has trouble with the occluded leg & rubber band. Another problem is double counting.

Another $130 GTX 1050 would help immensely. A debouncing algorithm can help with double counting, but the lion just has to play with the workout to get it to register. There's also just facing the camera during this exercise.

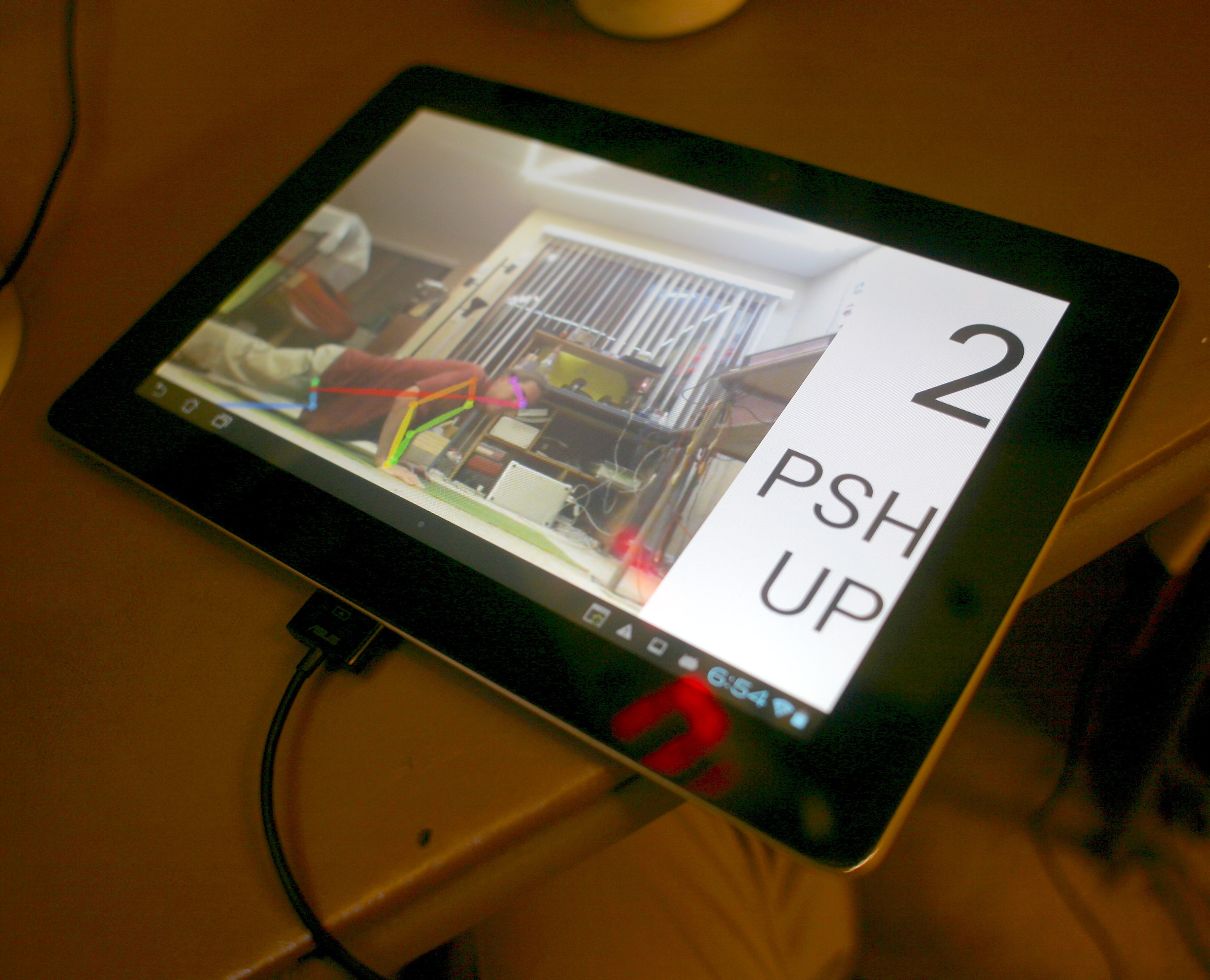

After much loathing, finally caved in to the idea of a tablet serving as the camera & user interface, while still offloading the pose tracking over the network. Tablets have been utterly hopeless at finding any practical use for the last 10 years, but have finally come into their own for computer vision.

Computer vision platforms need a subject facing camera, viewfinder which can be seen from far away, & always have to be on. That's making tablets perfect & why Facebook, Goog, & Amazon have finally started recycling their failed tablet inventory as the latest round of intelligent assistants.

The 6 year old Asus with a dead camera, dead GPS, marginal wifi, & marginal battery still has a better front facing camera than the webcam. After much implementation of a network protocol & fixing openpose bugs, the tablet was counting reps with the macbook as a remote compute server.

False positives abounded. If it detected a relevant pose anywhere in the 5 minutes before starting the workout, it falsely counted it as part of a rep. It also sagged into the carpet over time, causing the lion to drift out of frame. There is a long delay in counting, but it's bearable.

The Asus is still the best looking of all the tablets. The knife edge somehow works better than the fat edge of the ipad pro, although the ipad pro is overall the best gadget ever made in all history. The Asus looked utterly gigantic when it was new. It's tiny, now. The ipad might even be better for the rep counter, if lions weren't keen on finding uses for everything old.

-

Optimizing openpose on the macbook

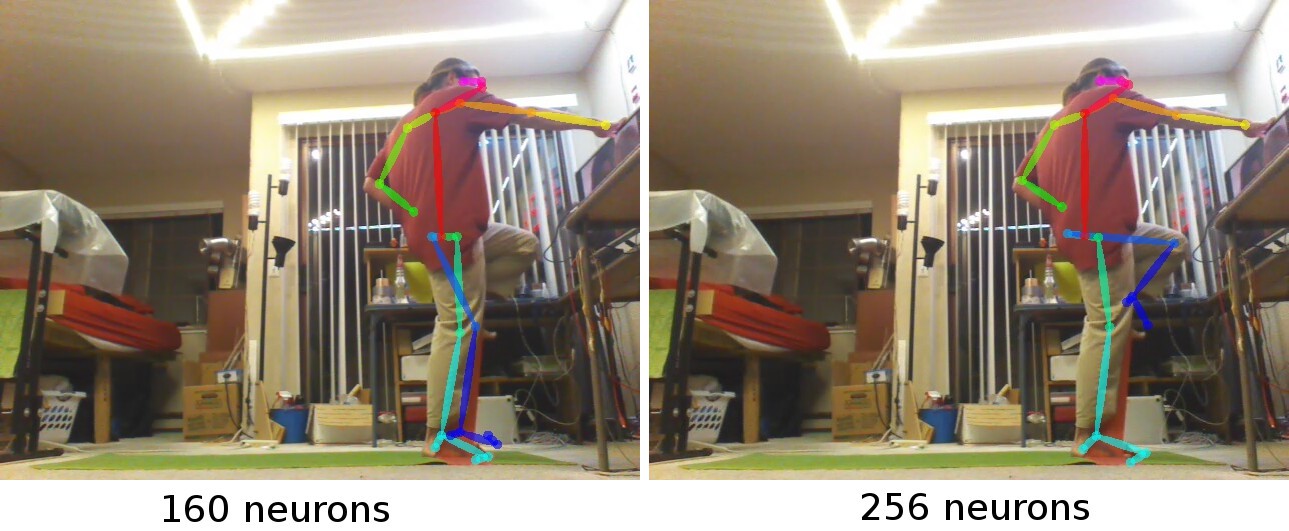

01/04/2019 at 19:32

The mane way to get more fps is decreasing netInputSize. There are diminishing returns with higher neuron count & higher noise with lower neuron count.

-1x368 was the default & too big for 2GB of RAM.

-1x256 gives 2fps & might be fast enough to track old people

-1x160 gives 4fps & noisy positions, but the lowest framerate needed to get all the reps on video

-1x128 gives 6fps & much noisier positions
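The tradeoff above can be written as a lookup: take the largest net height that still meets the framerate needed. The table holds only the framerates measured in these notes on the macbook. The function names are hypothetical.

```python
# Frame rates measured in these notes, keyed by the height in "-1xHEIGHT".
MEASURED_FPS = {256: 2, 160: 4, 128: 6}


def largest_net_height(min_fps, measured=MEASURED_FPS):
    """Largest (least noisy) net height that reaches min_fps, or None."""
    candidates = [height for height, fps in measured.items() if fps >= min_fps]
    return max(candidates) if candidates else None


def net_input_size(height):
    """Format the height the way these notes write netInputSize."""
    return f"-1x{height}"


if __name__ == "__main__":
    height = largest_net_height(4)
    print(net_input_size(height))
```

On different hardware the table would have to be measured again before the lookup means anything.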

The next task was classifying exercises based on noisy pose data. With the -1x160, openpose presented a few problems.

Falsely detecting humans.

Dropping lions of nearly the same pose as detected lions.

Differentiating between squats & a hip flex proved difficult, since the arms can either be horizontal or vertical in a squat & the knee angles are within the error bounds.

Situps & squats were also within the same error bounds.

The problem would only get harder if more exercises were added. Noise in the pose estimation & lack of 3D information reduced the angle precision.
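The knee angles mentioned above come from 3 keypoints per leg. A minimal sketch of the 2D angle calculation, assuming hip, knee, & ankle are given as (x, y) pixel positions. The function name is hypothetical, & the classifier in countreps may compute its angles differently.

```python
import math


def joint_angle(a, b, c):
    """Angle at b in degrees between segments b->a & b->c, or None if degenerate."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    n1 = math.hypot(*v1)
    n2 = math.hypot(*v2)
    if n1 == 0 or n2 == 0:
        return None  # two keypoints landed on the same pixel
    cos_angle = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    # Clamp against rounding error before acos.
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))


if __name__ == "__main__":
    hip, knee, ankle = (100, 100), (100, 200), (200, 200)
    print(joint_angle(hip, knee, ankle))
```

Because the image is a 2D projection, a leg bent toward or away from the camera foreshortens both segments & skews this angle, which is part of why 3D information would help.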

To get the 100% accuracy of a manual counter, it needed prior knowledge of the exercise being performed. Manually setting the exercise on 1 device while setting up another device as a camera is a real pain, so the easiest solution was hard coding the total number of reps & exercises to be performed. The lion wouldn't be able to throw in a few extra if it was a good day.
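Hard coding the workout means the counter always knows which exercise to look for. A minimal sketch of such a plan, with a hypothetical class name & made up rep counts. The exercise names are the ones used in these notes.

```python
class WorkoutPlan:
    """Step through a fixed list of (exercise, reps) pairs."""

    def __init__(self, plan):
        self.plan = list(plan)
        self.index = 0  # which exercise is active
        self.done = 0   # reps counted in the active exercise

    def current(self):
        """Name of the exercise to classify against, or None when finished."""
        if self.index >= len(self.plan):
            return None
        return self.plan[self.index][0]

    def add_rep(self):
        """Count 1 rep & return the exercise expected next."""
        if self.index >= len(self.plan):
            return None  # extra reps after the plan ends are ignored
        self.done += 1
        if self.done >= self.plan[self.index][1]:
            self.index += 1
            self.done = 0
        return self.current()


if __name__ == "__main__":
    plan = WorkoutPlan([("squats", 2), ("situps", 1)])
    print(plan.current(), plan.add_rep(), plan.add_rep(), plan.add_rep())
```

The last branch is the limitation noted above: once the plan is finished, extra reps are not counted.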

A better camera might improve results, in any case.

There's also making a neural network to classify exercises & using YOLO instead of pose detection. Pose detection is the most general purpose algorithm & eventually the only one anyone is going to use. A neural network classifier would definitely not be reliable enough.

Despite its limitations, it's amazing how what are essentially miniaturized vacuum tubes & copper can identify high level biological movements in photos.

-

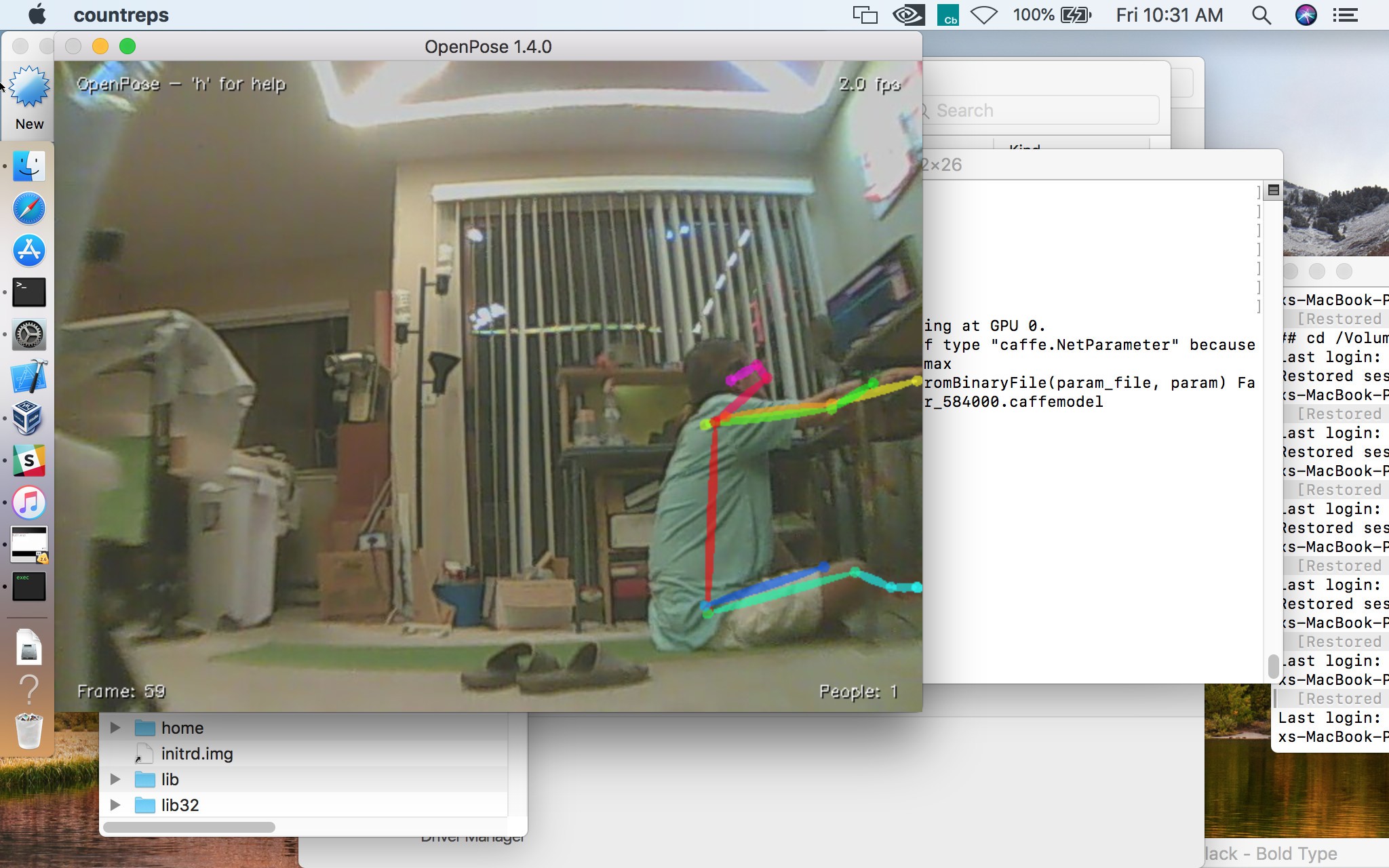

Openpose on a Macbook with CUDA

01/03/2019 at 05:18

The search for the cheapest portable clockcycles led to the ancient macbook. It has a GT 750M with 2Gig of RAM. It could support rep counter & camera tracker with the same openpose library, but it meant giving up on Linux. CUDA is another thing Virtualbox doesn't support & forget about running Linux natively on a macbook since 2013.

Development for Macos was always voodoo magic for someone who grew up with only commercial operating systems & no internet. It's now no different than Linux, ios, & android. The mane differences are the package manager on mac is brew, the compiler is clang instead of gcc, libraries end in .dylib instead of .so. The compiler takes a goofy -framework command which is a wrapper for multiple libraries. Then of course, there's the dreaded xcode-select command.

The notes for mac:

Mac notes:

Openpose officially doesn't support CUDA on MAC, but hope springs eternal.

https://maelfabien.github.io/myblog//dl/open-pose/

https://github.com/CMU-Perceptual-Computing-Lab/openpose/blob/master/doc/installation.md#operating-systems

All the dependencies are system wide except caffe.

The mac drivers are a rats nest of dependencies: Download CUDA, CUDNN, CUDA driver, & GPU driver for the current Macos version. The drivers are not accessible from nvidia.com.

https://www.nvidia.com/object/mac-driver-archive.html

http://www.macvidcards.com/drivers.html

Obsolete caffe instructions:

http://caffe.berkeleyvision.org/installation.html#compilation

"brew tap homebrew/science" fails but isn't necessary.

Some necessary packages:

brew install wget

brew install pkg-config

brew install cmake

To compile, clone caffe from microsoft/github.

edit caffe/Makefile.config

uncomment USE_CUDNN

comment out CPU_ONLY

comment out the required lines on the CUDA_ARCH line

comment out the Q ?= @ line

ERRORS:

Can't compile "using xyz = std::xyz" or no nullptr? In the Makefile, add -std=c++11 to CXXFLAGS += and to NVCCFLAGS without an -Xcompiler flag. It must go directly to nvcc. Nvcc is some kind of shitty wrapper for the host compiler that takes some options but requires other options to be wrapped in -Xcompiler flags.

nvcc doesn't work with every clang++ version. The version of clang++ required by nvcc is given on https://docs.nvidia.com/cuda/cuda-installation-guide-mac-os-x/index.html It requires installing an obsolete XCode & running

sudo xcode-select -s /Applications/Obsolete XCode

to select it.

cannot link directly with ...vecLib...: in Makefile, comment out LDFLAGS += -framework vecLib

Undefined symbol: cv::imread in Makefile, add LDFLAGS += `pkg-config --libs opencv`

BUILDING IT:

make

builds it

make distribute

installs it in the distribute directory, but also attempts to build python modules.

make -i distribute

ignores the python modules.

Then install it in this directory:

cp -a bin/* /Volumes/192.168.56.101/root/countreps.mac/bin/

cp -a include/* /Volumes/192.168.56.101/root/countreps.mac/include/

cp -a lib/* /Volumes/192.168.56.101/root/countreps.mac/lib/

cp -a proto/* /Volumes/192.168.56.101/root/countreps.mac/proto/

cp -a python/* /Volumes/192.168.56.101/root/countreps.mac/python/

The openpose compilation:

mkdir build

cd build

cmake \
-DGPU_MODE=CUDA \
-DUSE_MKL=n \
-DCaffe_INCLUDE_DIRS=/Volumes/192.168.56.101/root/countreps.mac/include \
-DCaffe_LIBS=/Volumes/192.168.56.101/root/countreps.mac/lib/libcaffe.so \
-DBUILD_CAFFE=OFF \
-DCMAKE_INSTALL_PREFIX=/Volumes/192.168.56.101/root/countreps.mac/ \
..

make

make install

Errors:

Unknown CMake command "op_detect_darwin_version". comment out the Cuda.cmake line op_detect_darwin_version(OSX_VERSION)

To build countreps:

make

To run it, specify the library path:

LD_LIBRARY_PATH=lib/ ./countreps

ERROR:

Can't parse message of type "caffe.NetParameter" because it is missing required fields: layer[0].clip_param.min, layer[0].clip_param.max

The latest Caffe is officially broken. Use revision f019d0dfe86f49d1140961f8c7dec22130c83154 of caffe.

Bits of openpose & caffe that were changed for mac:

https://cdn.hackaday.io/files/1629446971396096/openpose.mac.tar.xz

Important files from the simplest demo for mac:

https://cdn.hackaday.io/files/1629446971396096/countreps.mac.tar.xz

After several days, it finally ran & yielded 2 frames per second.

Still 4x faster than CPU mode on the Ryzen, maybe enough to track a subject, but not enough to count reps.

The lion kingdom still wants something that costs too much.

lion mclionhead
