A video summarizing the journey of this project:

An update on the current state of the prosthesis: a demonstration of all the currently supported features, including all mechanical movements, the brain-computer interface and the active vision system.

An affordable 3D-printed prosthesis that uses computer vision to track objects and choose grip patterns for the user. It supports BCI and EMG as well.



| File | Description | Type | Size | Uploaded |
| --- | --- | --- | --- | --- |
| EMG2.6x5.zip | The PCB Gerber file for EMG sensor, size 50x26 (mm). Use this for easy soldering and as a starting point. This design has been utilized for components available on the market. | x-zip-compressed | 36.69 kB | 09/23/2019 at 15:12 |
| EMG2.2x2.2.zip | The PCB Gerber file for EMG sensor, size 22x22 (mm). WARNING: This design has not been utilized for components available on the market. | x-zip-compressed | 75.08 kB | 09/23/2019 at 15:10 |
| EMG3x1.6.zip | The PCB Gerber file for EMG sensor, size 31x16 (mm). WARNING: This design has not been utilized for components available on the market. | x-zip-compressed | 71.40 kB | 09/23/2019 at 15:10 |
| finger_mechanism.linkage2 | The Linkage file to design the trajectory of the finger module. | XML - Extensible Markup Language | 1.52 kB | 09/23/2019 at 15:09 |
| STL KONOSUBA.rar | ALL THE STL FILES NECESSARY TO CREATE THIS PROSTHESIS. Have fun :D And if you successfully made one, please send me an image! If you have any difficulty while printing and building the prosthesis, also send me a message. | RAR Archive | 4.19 MB | 09/23/2019 at 14:50 |

The GitHub repository of the project is now usable, and I have updated all the source code that I have written, including:

I am not that good at documenting source code, but I will try to write instructions for each piece of software/firmware.

On the other hand, here are two videos showing you how the oscilloscope works:

Single Channel:

Dual Channels:

The project is my capstone project for my undergraduate study, and I have logged the final report to this project directory.

Within the report you will find all the research I drew on, as well as everything you need to know before viewing the project. I have also included all the schematics and software designs within the report.

The report offers a complete study of the brain-computer interface used within the scope of this project, as well as the mechanical design and how it evolved throughout the project. There are also parts that give more in-depth information on the EMG schematic, the EMG design, and the computer vision system.

I have logged the Gerber files for the EMG sensor PCB. There are three versions: 26x50 (mm), 31x16 (mm) and 22x22 (mm). Choose the one that fits your usage. I am currently building an EMG bracelet (yes, similar to the Myo armband available on the market; I just want to build one for myself from scratch), so I am working with the 31x16 design.

Here are some pictures:

Just a recap of what I used to design the prosthesis.

Since I am an electrical engineering student, I do not have much experience with mechanism design and simulation, so I looked for an easy and intuitive tool for the job. The trajectory of the finger module is designed using Linkage, an amazing and easy-to-use simulator. You can find more information about the software here: www.linkagesimulator.com

Here is a video of the Linkage project used to create the finger trajectory:

The file is also included in this project's files on Hackaday.

I have logged all the STL files necessary to create this prosthesis. I have also included two .3mf files, one for the elbow module and one for the wrist module, to guide everyone through the assembly process. Have a look at the video below to see how to use the .3mf files to find where each part goes:

I use 3D Builder on Windows to view .3mf files.

Currently, the system simply uses the change in the total area of the object's detected blob to estimate how close the object is to the palm. I am planning to use stereo vision instead, so that the distance is properly calculated and the automated object handling is improved. Here is a demo video of the stereo vision currently being developed. More work needs to be done before this is actually realized, but for now, you can more or less tell which object is closer to the camera from the shade of the pixels.

I have been wanting to increase the number of objects supported by the prosthesis's active vision system, since more supported objects means more supported grip patterns. Here is my weak attempt at creating a banana classifier.
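As a rough illustration of the area-based proximity cue, the sketch below assumes a pinhole camera, where the apparent area of an object scales with the inverse square of its distance. The function names and the `reach_ratio` threshold are hypothetical and are not taken from the project's code.

```python
import math


def relative_distance(area_ref: float, area_now: float) -> float:
    """Return the current distance as a fraction of the reference distance.

    Assumption: pinhole camera, so blob area ~ 1 / distance**2 and
    d_now / d_ref = sqrt(area_ref / area_now).
    """
    if area_ref <= 0 or area_now <= 0:
        raise ValueError("blob areas must be positive")
    return math.sqrt(area_ref / area_now)


def is_within_reach(area_ref: float, area_now: float,
                    reach_ratio: float = 0.5) -> bool:
    """True once the object is at or below `reach_ratio` of the reference distance.

    `reach_ratio` is a hypothetical tuning value, not a project constant.
    """
    return relative_distance(area_ref, area_now) <= reach_ratio
```

This only gives a relative estimate: without knowing the real size of the object, the blob area cannot be turned into an absolute distance, which is one reason stereo vision is the more principled option.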

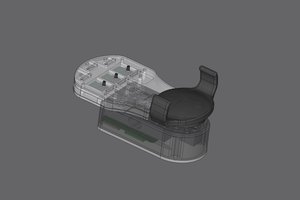
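To illustrate why more recognized objects means more grip patterns, here is a minimal sketch of a lookup from a classifier label to a grip pattern. The labels, grip names and confidence threshold are hypothetical placeholders, not values from the project's source code.

```python
from typing import Optional

# Hypothetical mapping from detected object label to grip pattern.
GRIP_FOR_OBJECT = {
    "banana": "cylindrical",
    "ball": "spherical",
    "card": "lateral_pinch",
}


def choose_grip(label: str, confidence: float,
                min_confidence: float = 0.6) -> Optional[str]:
    """Return a grip pattern for a detection, or None if no grip should be proposed.

    A detection is ignored when the classifier is not confident enough
    or when the object has no grip pattern assigned yet.
    """
    if confidence < min_confidence:
        return None
    return GRIP_FOR_OBJECT.get(label)
```

With a table like this, supporting a new object comes down to training a classifier for it and adding one entry.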

To make the acquisition of EMG signals easier and more convenient, and to increase the number of channels acquired while keeping the prosthesis portable, here is a much smaller PCB design for the EMG sensor:

Current size is 30x16 mm.

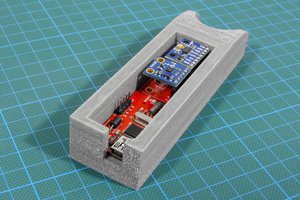

The PCB after soldering:

The myoelectric sensor is field tested. The following videos and figures show the signal output and working of the sensor.

The sensor PCB:

The signal captured on the oscilloscope:

The working demo:

Putting the active vision system into the prosthesis. Demo videos:

Vertical movements:

Horizontal movements:

Putting everything together:

Rotated viewpoint for grip action:

Here only the active vision system is working; there is no consent signal from the user (BCI or EMG). Once everything is put together, the system should be more robust and accurate.
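One way to picture the combined system is as a gate: the vision system proposes a grip, and the hand only moves when the user confirms it through BCI or EMG. The sketch below is an assumption about how such gating could look; the class and function names are hypothetical and do not come from the project's code.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VisionProposal:
    """What the active vision system suggests for the current frame (hypothetical)."""
    grip: Optional[str]      # proposed grip pattern, or None if nothing recognized
    object_in_reach: bool    # True when the object is close enough to the palm


def should_execute_grip(proposal: VisionProposal, user_confirms: bool) -> bool:
    """Execute a grip only when vision has a proposal AND the user consents.

    `user_confirms` stands in for a thresholded EMG or BCI signal.
    """
    return (proposal.grip is not None
            and proposal.object_in_reach
            and user_confirms)
```

Requiring both conditions means a false detection from the camera alone cannot close the hand.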


Awesome project! From the Bobblebot team, Pleasure meeting you at the ISMCR conference at UHCL. Best of luck!

Wow! This is really something! Good job! I believe projects like yours will change our future and our ideas about limits, both physical and mental. Thanks for sharing!

I am currently researching how modern technologies can improve our lives (you can click to read a review about the writing service I work on), and your invention really amazed me! Thanks for your hard work and imagination!

Hi Nguyễn

This is a very good project, congratulations on being a finalist of the Hackaday Prize!

I would appreciate a citation for the prosthetic design you have used for your project development, which is one of my designs, Grasp 0.

https://www.thingiverse.com/thing:2122144

Thanks.

Xavier.

Hi Xavier, thank you for your endorsement. I did cite your design in my project history, which can be seen here under the update named "First working hand module":

https://cocooncommunity.net/projects/2

It is true that I used your design in the previous version of the prosthesis. Thank you for the wonderful design, which you also made public.

On the other hand, I just want to confirm that the current hand design with modular fingers is my own design. During the first stage of the project, I used yours as a prototype for testing. I then redesigned it to fit my use and to overcome some weaknesses I found. Thank you for reaching out.

Yes, I know the modular design is yours; I only asked because I saw pictures of mine here. I had seen your project steps on this page: https://cocooncommunity.net/projects/2 and I did not realize that Grasp was cited there.

Thanks for the citation, and congratulations again on the project. The first time I saw it, I thought it was amazing.

hi Nguyễn!

What a great project, congratulations on all the great work. We have a question: do you have any type of encoder or homing sensors for the fingers?

Hi Jonathan, there is a potentiometer at the shaft of each finger to feed back the position of the respective joint. The resolution is currently low, but it is usable.


Hi Nguyen (sorry for the spelling, Hungarian keyboard),

Consider a time-of-flight camera (like in the Kinect) or sensor in order to get depth data along with the visuals. There must be a model for shorter distances. For a start, look at the REAL3™ image sensor family from Infineon; they have evaluation kits, as far as I know. I guess someone from Hackaday may even have a better model in mind. Also (as Jonathan Diaz asked about "homing" of the fingers), each fingertip could have its own homing sensor reporting the distance from the object.