-

Technical Log: Computer Vision and Machine Learning

08/25/2019 at 06:43

Since we are using the Jetson Nano as our primary board, we wanted to explore what could be done with the GPU. We started our explorations with OpenCV and tried a little bit of machine learning with TensorFlow.

Though we knew that our needs would likely be best served with a neural network to classify different food items, we started with the OpenCV fundamentals. We wrote a basic image segmentation algorithm using a background subtraction technique included in OpenCV. This algorithm is sufficient to isolate objects placed on a shelf or in a fruit basket.
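The idea behind background subtraction can be sketched in a few lines of plain Python. This is only an illustration of the principle, not the code we ran: OpenCV ships ready-made subtractors (for example `cv2.createBackgroundSubtractorMOG2`), and the log does not record which one was used. The function names, the tiny 4x5 "image", and the threshold of 30 are all assumptions made for the example.

```python
def foreground_mask(background, frame, threshold=30):
    """Return a binary mask: 1 where the frame differs from the background."""
    return [
        [1 if abs(f - b) > threshold else 0 for f, b in zip(frow, brow)]
        for frow, brow in zip(frame, background)
    ]


def bounding_box(mask):
    """Return (top, left, bottom, right) of the foreground pixels, or None."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for row in mask for c, v in enumerate(row) if v]
    if not rows:
        return None
    return (min(rows), min(cols), max(rows), max(cols))


# Hypothetical grayscale frames: an empty shelf, then a bright object appears.
background = [[10] * 5 for _ in range(4)]
frame = [row[:] for row in background]
frame[1][2] = frame[1][3] = frame[2][2] = frame[2][3] = 200

mask = foreground_mask(background, frame)
box = bounding_box(mask)  # region that would be passed on to a classifier
```

A real subtractor also models lighting changes and noise over many frames, which is why a library implementation is the better choice on the device.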

OSK-SegmentationTest from Mepix Bellcamp on Vimeo.

Next, we began exploring ways to improve food identification using TensorFlow. TensorFlow is an open-source framework developed by Google for numerical computation and for building deep learning models. It has a Python API, which made it easy for us to get started.

TensorFlow lets you build graphs, which are structures that describe how data moves through a series of processing nodes. We used the Fruit 360 dataset to train a TensorFlow model to identify fruit. The code was based on a file found here. The code that creates the model and graph, and the code that freezes the model into .pb and .pbtxt formats, is on GitHub.

While we successfully trained the model on our x86 computers, we had some difficulty getting TensorRT to work on the Nano. Our future work will close this gap.

-

Technical Log: Swift Webserver on the Nano

08/25/2019 at 06:37

A key part of the OSK infrastructure is the embedded webservice, which allows users to check the status of the device and provides an API for gadget-to-gadget communication. The webservice lets an end user view GUI-based web pages on either a computer or a mobile device. Different appliances can communicate using device-to-device JSON data transfer via RESTful APIs.
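To make the device-to-device JSON exchange concrete, here is a minimal sketch of encoding and validating a status message. It is written in Python purely for illustration; the actual service is Swift/Vapor, and the field names (`gadget`, `weight_grams`, `items`) are hypothetical because this log does not define the OSK message schema.

```python
import json

# Hypothetical field names; the log does not define the OSK message schema.
REQUIRED_FIELDS = {"gadget", "weight_grams", "items"}


def encode_status(gadget, weight_grams, items):
    """Serialize a gadget status report as a JSON string."""
    return json.dumps(
        {"gadget": gadget, "weight_grams": weight_grams, "items": items},
        sort_keys=True,
    )


def decode_status(body):
    """Parse a status report and reject payloads with missing fields."""
    data = json.loads(body)
    missing = REQUIRED_FIELDS - data.keys()
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    return data


# One gadget builds the body of a response; another gadget parses it.
body = encode_status("fruit_basket", 312.5, ["apple", "banana"])
status = decode_status(body)
```

Validating on the receiving side keeps a malformed message from one gadget from silently corrupting the state of another.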

The selected Swift-based webservice, Vapor, provides the following features:

- supports the ARM aarch64 architecture used by the NVIDIA Jetson Nano and other embedded computers (see Swift-Arm.com, buildSwiftOnArm, swift-arm64)

- links directly with C/C++ via the LLVM toolchain

- is designed to be memory and CPU resource efficient

A standalone example and test for Swift/C/C++ integration is provided in OSK-Bridge and OSK-Bridge-Mock. OSK-Bridge provides integration with the OSK Gadget. Our current implementation of a characteristic OSK Gadget is our squirrel-shaped fruit basket, which includes both a strain gauge weight sensor and a video camera sensor. OSK-Bridge-Mock provides a minimal API interface to support development and testing.

The OSK-Bridge includes the device software which is independently developed in the OSK-Core. OSK-Core provides a C/C++ testbed for working directly with the sensor devices.

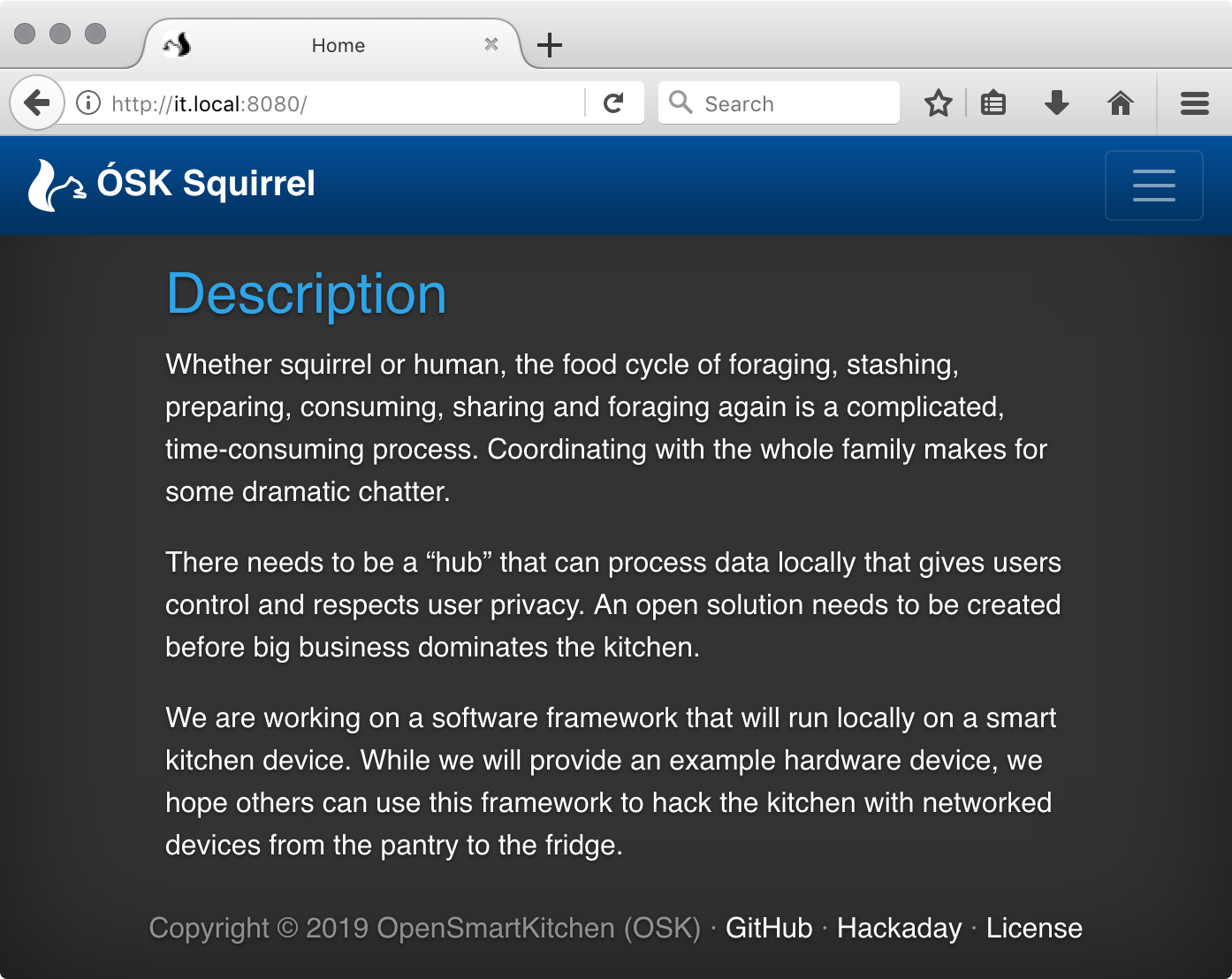

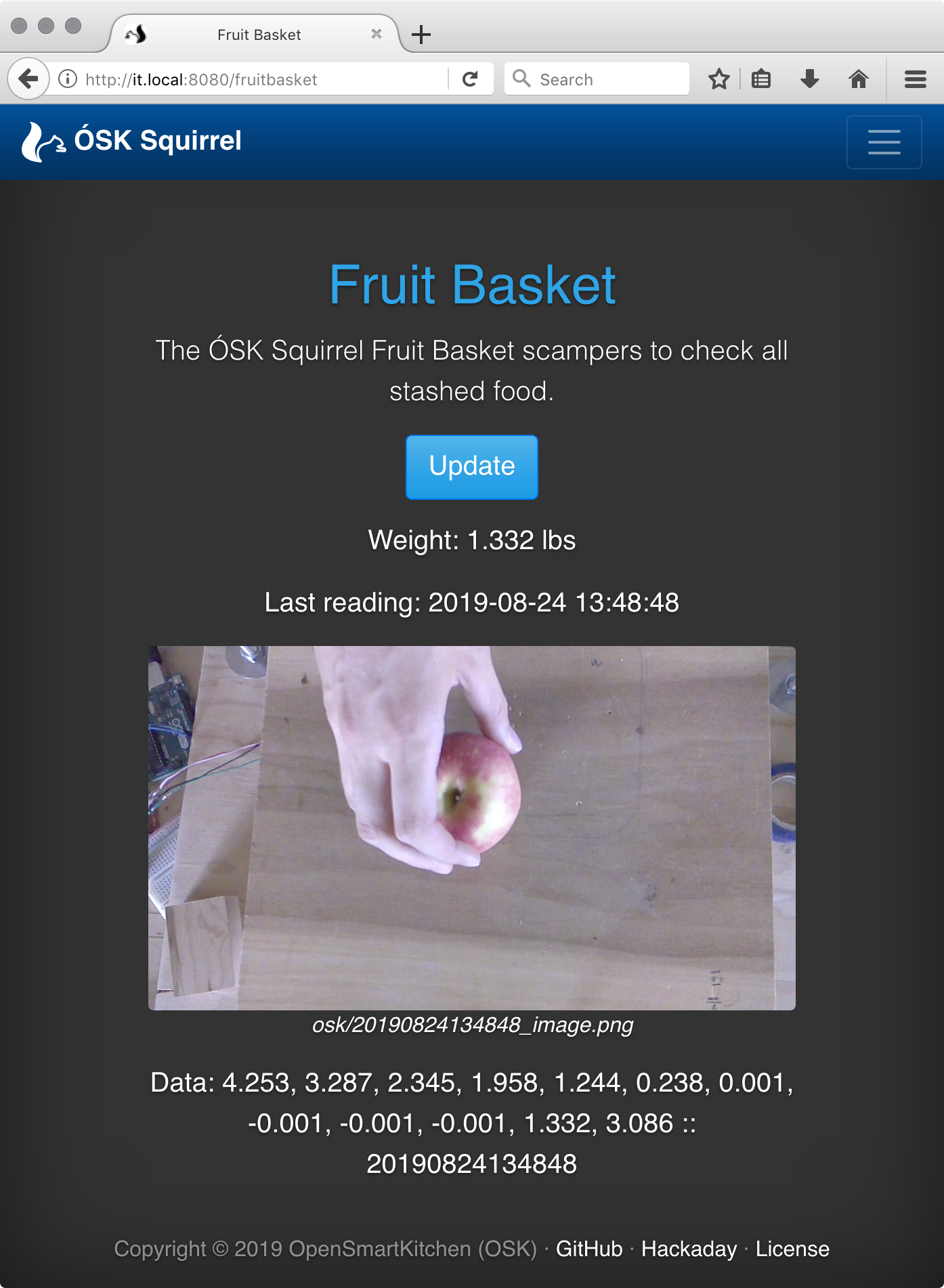

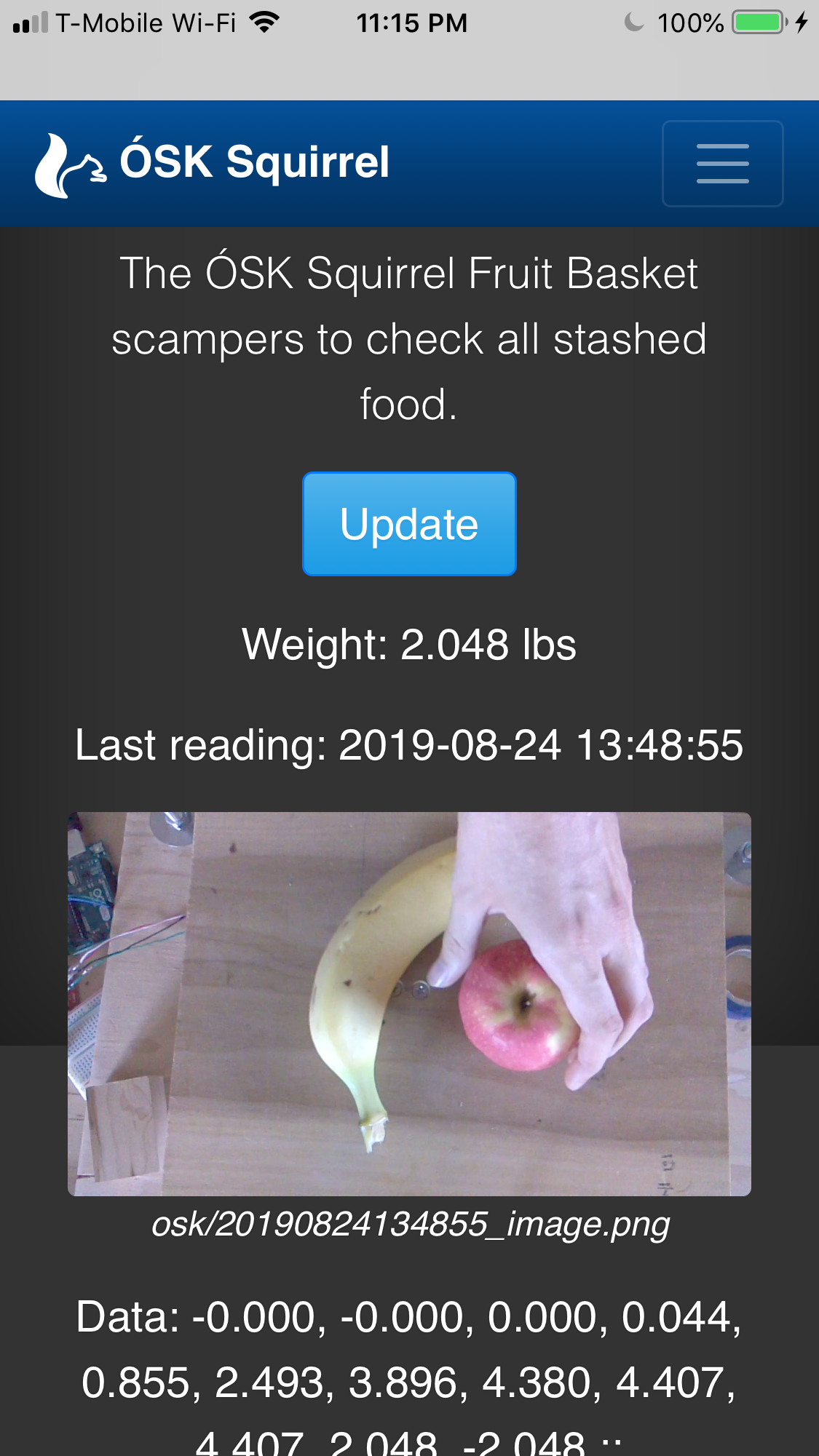

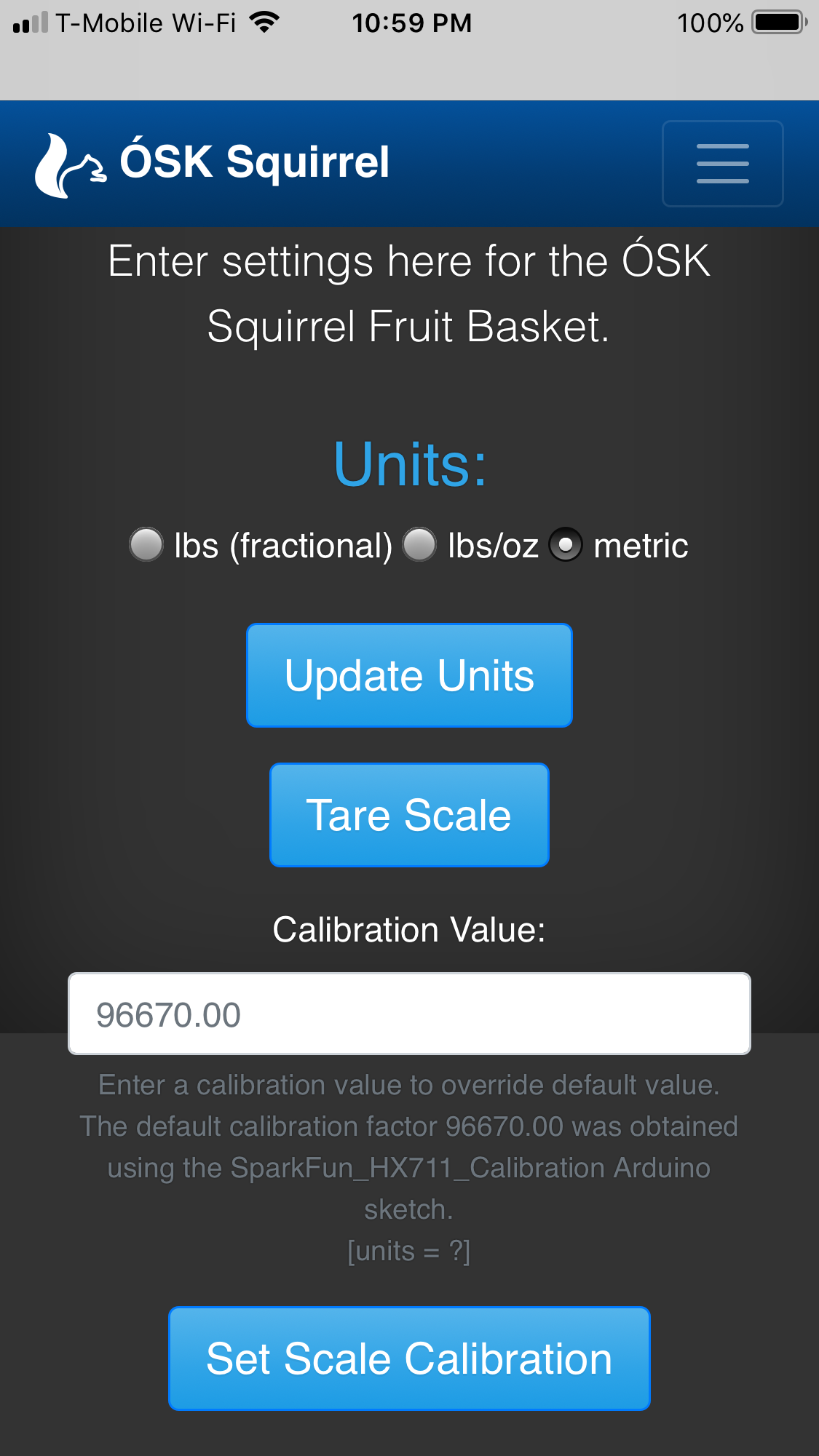

Below are some screen shots of the OSK-WebUI which is hosted on the NVIDIA Jetson Nano. (Note: the software environment is cross platform and code can be tested and validated on multiple operating systems before deployment on an embedded system.) The web pages shown below use the Bootstrap CSS/JS toolkit to provide responsive pages which dynamically scale for both mobile devices and desktop computers. Pages can be easily added and customized.

HOME PAGE

Provides a description and a placeholder where additional information can be provided to the user.

![]()

Fruit Basket

Displays the current contents of the fruit basket. This could be expanded to show additional data beyond our prototype or adapted to another gadget.

![]()

Since we are using Bootstrap for the template, the webpage easily adapts to mobile platforms.

![]()

Settings Page

The Settings Page allows a user to change their device preferences.

![]()

-

Technical Log: Manufacturing

08/25/2019 at 05:57

After the CAD was completed, we moved forward with the construction of our second prototype since we wanted to show something a little more "thematic" for the Hackaday Prize entry!

We used the CAM tool in Fusion 360 to carve the squirrel to hold the bracket. During the CNC operation, we left 1mm of material at the bottom of the cut to save the waste board on the machine. This extra material was removed with a Dremel and then sanded. (Here is a fun tutorial for those who want to get started with CNC and CAM using Fusion 360)

![]()

After the squirrel was carved, we had to mount it vertically on a test board. We chose some typical pine stock from Home Depot and some angle brackets. We used bolts to hold it together since we thought we might have to change or move some components around as we built the second prototype.

![]()

-

Technical Log: Squirreling Around with CAD

08/19/2019 at 04:46

We've done rough testing setups, so now it's time to work towards making something that actually looks like... well... looks nice and isn't just scrap slapped together. We decided to go with a hanging fruit basket design, which makes it easier to incorporate a camera and lets the weight center itself to help guarantee accurate readings.

After squirreling around in Fusion 360, we decided to go with the design pictured below. CAD rendering is here and the file is in the project files.

![]()

-

Design Thinking 4: Prototype

08/18/2019 at 22:49

The fourth step in the design thinking process is Prototype. In this stage, the team developed scaled-down versions and experimented with specific features of a potential product. We decided to focus initially on a fruit basket that could sense when fruit, like an apple or banana, is added to or removed from the basket and provide feedback to a server that could interact with a larger software ecosystem.

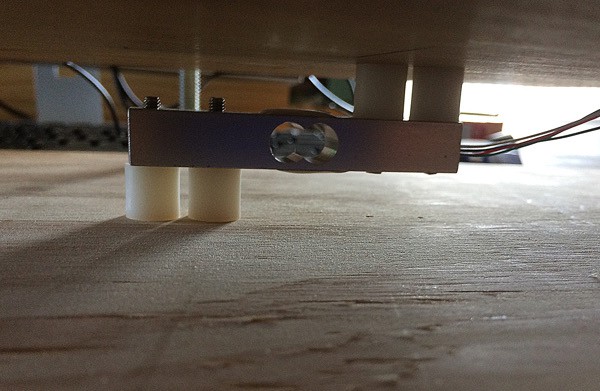

We first tested how software interacted with the different sensors to determine which might work with the features we were considering. Then we played around with the load cell and developed a "scale" to determine whether the load cell could detect weight differentials.

Following the load cell tutorial at SparkFun, we assembled a testing rig out of scrap wood. To simulate a fruit basket's use and to test the calibration and data collection process, we used an apple and kitchen weights.

We changed the code to calibrate the scale and added a ring buffer so that data is only returned when the weight changes over a fixed window of samples.
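A minimal sketch of that ring-buffer idea follows. It is not the code from our repository: the class name, the window size, and both thresholds are assumptions chosen for illustration. A fixed-length `deque` acts as the ring buffer, and a reading is only reported once the window has settled and its mean differs from the last reported value.

```python
from collections import deque


class ChangeDetector:
    """Report a weight only when it has settled at a new value."""

    def __init__(self, size=3, min_delta=20.0, max_spread=5.0):
        self.window = deque(maxlen=size)  # ring buffer of recent readings
        self.min_delta = min_delta        # smallest change worth reporting (grams)
        self.max_spread = max_spread      # window must be this stable (grams)
        self.last_reported = None

    def add(self, grams):
        """Add a reading; return the settled mean if it changed, else None."""
        self.window.append(grams)
        if len(self.window) < self.window.maxlen:
            return None  # buffer not full yet
        if max(self.window) - min(self.window) > self.max_spread:
            return None  # still settling, e.g. fruit is being placed
        mean = sum(self.window) / len(self.window)
        if self.last_reported is None or abs(mean - self.last_reported) >= self.min_delta:
            self.last_reported = mean
            return mean
        return None


# Hypothetical readings in grams: empty basket, then an apple is added.
detector = ChangeDetector()
readings = [0.0, 1.0, 0.5, 180.0, 181.0, 180.5, 180.0]
events = [m for m in (detector.add(r) for r in readings) if m is not None]
```

With these made-up readings the detector reports twice: once for the empty basket and once after the apple settles. The noisy samples in between are dropped.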

Next we built on that initial prototype. We used threaded rods to provide a breadboard area to mount the camera and the NVIDIA® Jetson Nano™ above the scale. The camera is placed to identify items below in the fruit basket.

![]()

Scale

![]()

Load Balancer

Scale with rods added

-

Design Thinking 3: Ideate

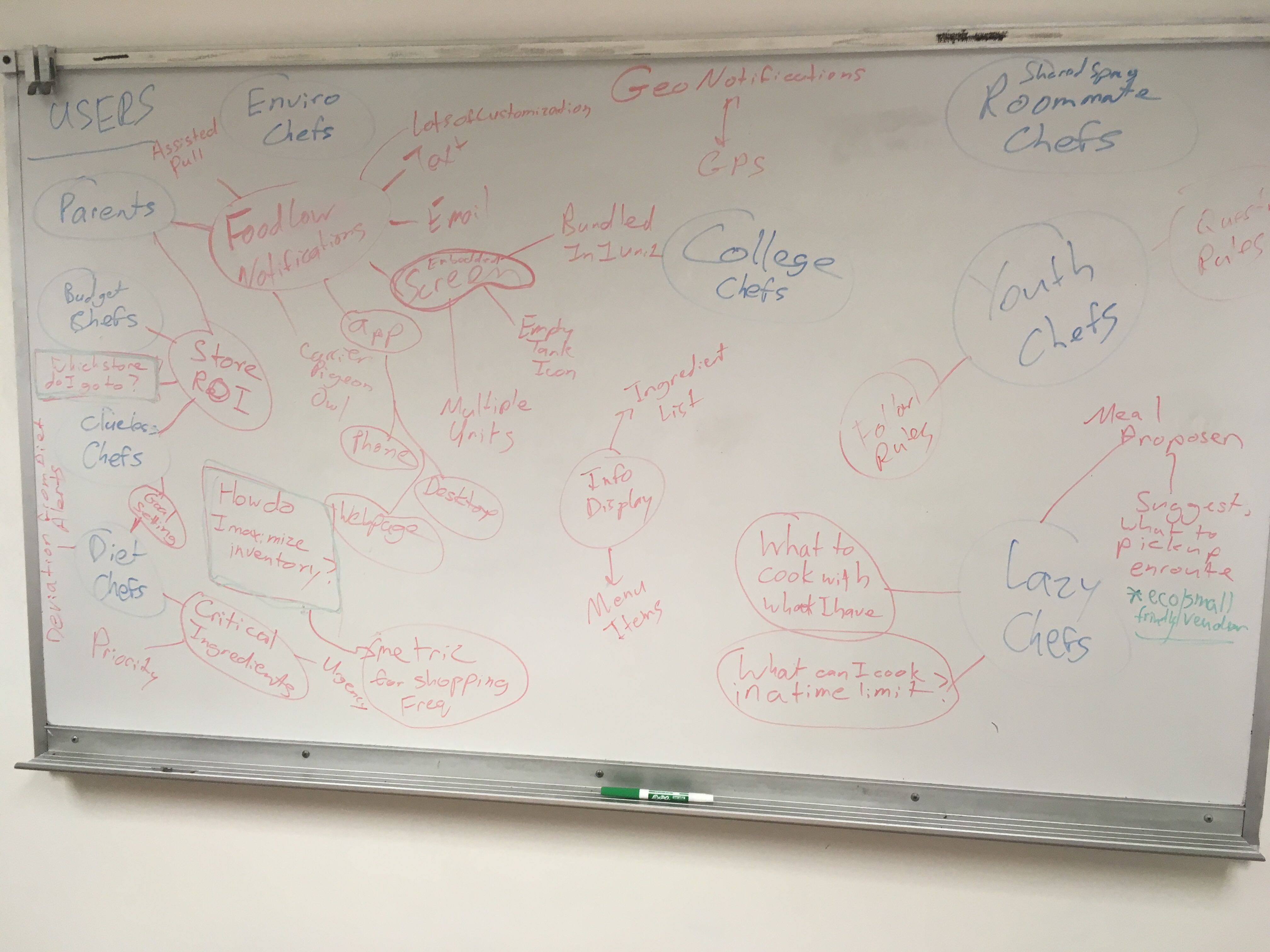

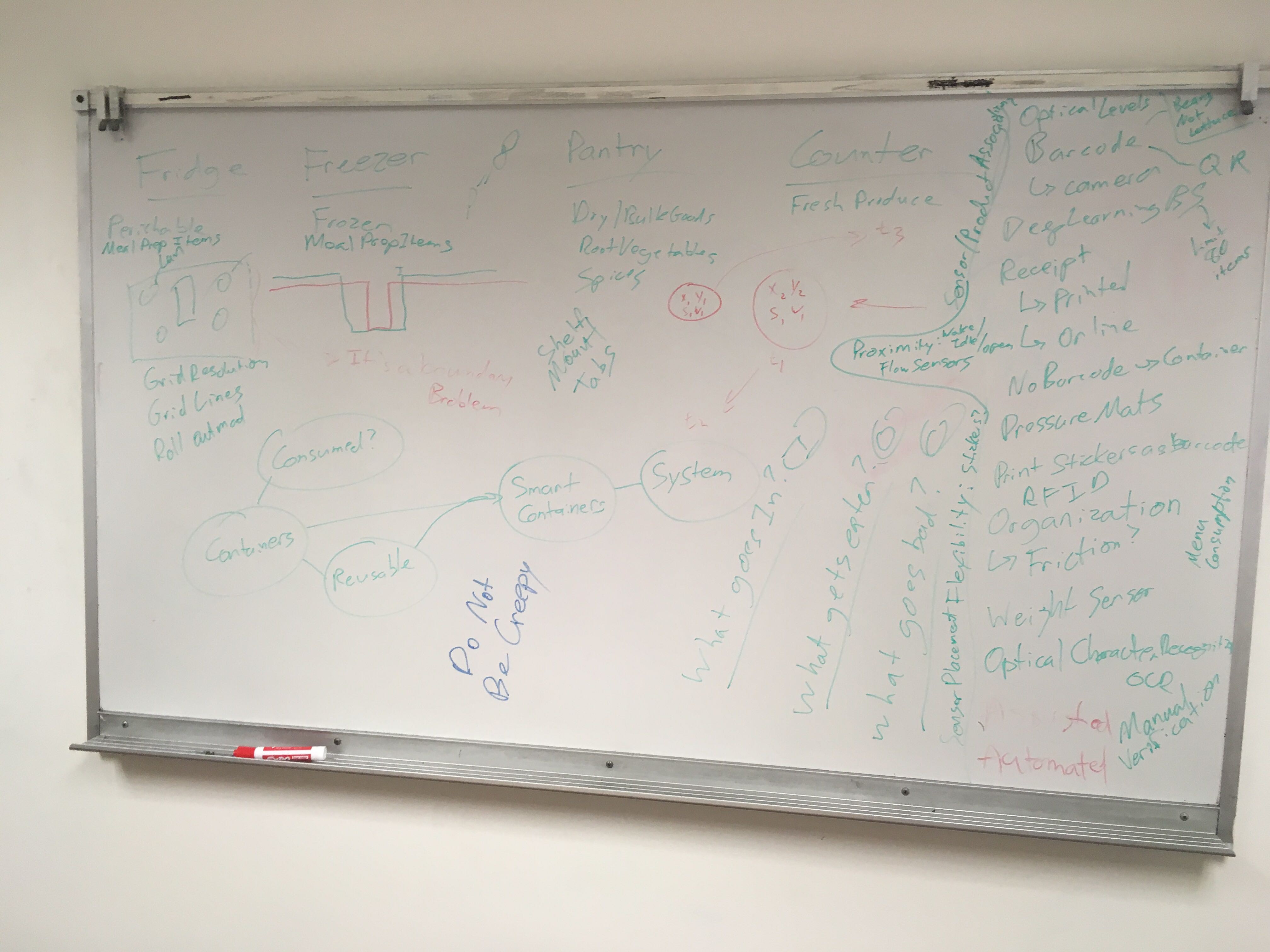

07/29/2019 at 21:45

The next step in design thinking is Ideation, or idea generation. We came up with several ideas to solve the problems we identified in the Define phase. Some of our ideas are depicted in these photos.

![]()

and

![]()

After this session, we came up with a list of sensors to try. Some of the sensors are pictured here:

Some of the sensors and supplies include:

• Raspberry Pi Camera Module V2

Can be used to take photos of food as it leaves a pantry for AI or to scan bar codes.

• SparkFun Qwiic HAT for Raspberry Pi

Can be used to stack as many sensors as you’d like to create a tower of sensing power!

• SparkFun Qwiic Adapter

Can be used to make an I2C board into a Qwiic-enabled board.

• SparkFun Qwiic Cable Kit

Who doesn't need cable supplies for hacking?!

• SparkFun Load Cell - 10kg, Straight Bar (TAL220)

This bar load cell (sometimes called a strain gauge) can translate up to 10kg of pressure into an electrical signal. SparkFun had what seemed to be a great tutorial to help learn about this load cell.

• SparkFun Load Cell Amplifier - HX711

This amplifier is a small breakout board that allows the user to easily read load cells to measure weight. It also helps improve accuracy.

• SparkFun Qwiic Scale - NAU7802

This scale allows the user to easily read load cells to accurately measure the weight of an object. By connecting the board to a microcontroller, users can read the changes in the resistance of a load cell.

• Square Force-Sensitive Resistor

This is a sensor that allows you to detect physical pressure, squeezing, and weight.

• Magnetic Contact Switch (Door sensor)

This is a sensor that is used to detect when a door or drawer is opened.

-

Design Thinking 2: Define

07/29/2019 at 21:37

The next step in design thinking is Define. We spent time trying to further define the problems in the kitchen based on the Empathize step. Define means determining users' needs, their problems, and related insights.

Some of the things we came up with:

• Overworked and tight-budget users need an assistant to help save money and time because they want to take advantage of sales but don't have enough time to run all over the place to a bunch of different stores.

• Environmentally conscious chefs want ways to prevent waste of food and waste of food packaging to help them feel like they are contributing to saving the environment.

• Parents need help making quick cooking decisions when they're hungry so they can avoid the pitfalls of making decisions that don't meet their goals.

• People want group collaboration/input in meal planning and help in making menu decisions so that the family can enjoy what they eat and actually be able to sit down together and eat.

• Parents need fast and easy shopping assistance because bringing the kids to the store is difficult. Speeding up the food shopping and delivery processes is important.

• Shoppers need notification when products are used up by others. They are not the only one consuming the product and don't necessarily know when items are consumed/emptied.

• Cooks with restricted diets need an assistant to help stick with their diet so they can be healthy and live longer.

• Cooks with small space need to remember what is in the pantry so they have enough of the ingredients to cook specific recipes.

• Cooks want fast and easy meals to save money by eating out less and purchasing fewer prepared foods.

• Cooks need some way to track what is consumed (eaten or spoiled) so that they have up-to-date information on what's available in the pantry.

-

Technical Log: Software Structure

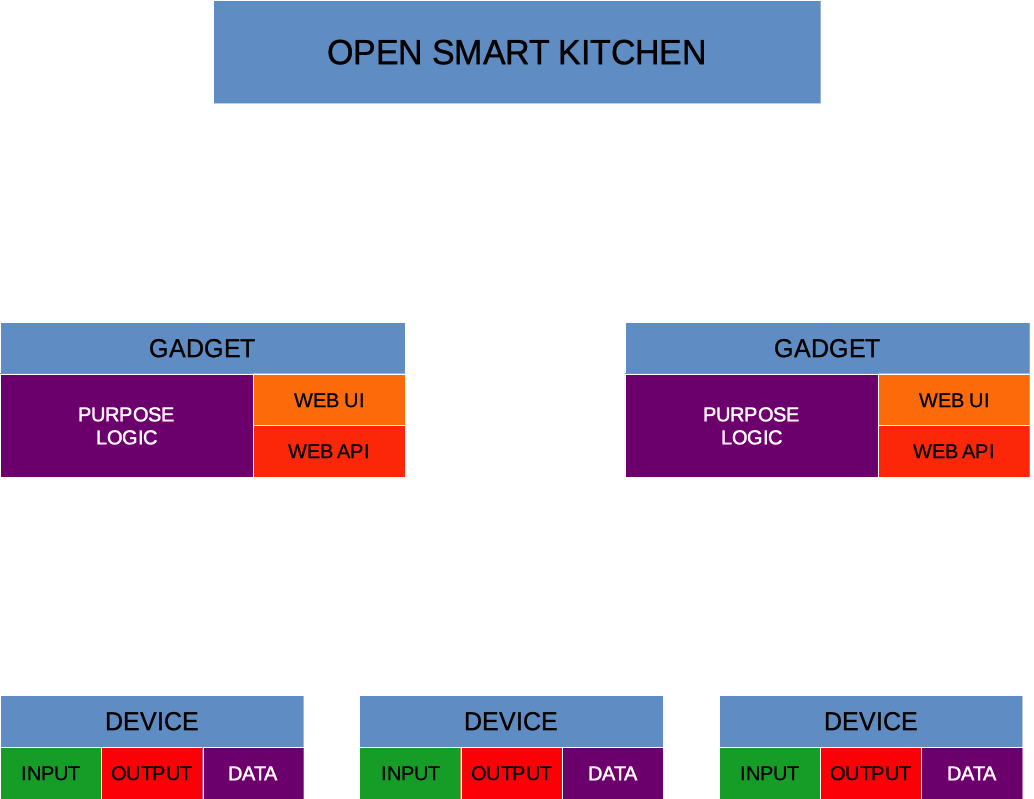

07/28/2019 at 20:28

As we began prototyping, we wanted to create a scalable software architecture.

Each smart kitchen contains smart gadgets (appliances, pantries, etc.). Each smart gadget is composed of one or more devices (cameras, weight sensors, humidity sensors, etc.). These devices all store some data and can act either as an input and/or output for the gadget.

Different gadgets in the OSK ecosystem can talk to each other, but they cannot directly access another gadget's device. If data needs to be shared, the appropriate API should be incorporated into the host device's logic.
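The kitchen, gadget, and device layering described above could be modeled roughly as follows. This is a sketch under assumptions, not the OSK code base: the class and method names (`Device`, `Gadget`, `Kitchen`, `api_status`, `query`) are hypothetical, and Python is used only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Device:
    """A single sensor or actuator, e.g. a weight sensor or camera."""
    name: str
    value: float = 0.0


@dataclass
class Gadget:
    """A smart gadget that owns its devices and exposes them only via an API."""
    name: str
    _devices: dict = field(default_factory=dict)

    def add_device(self, device):
        self._devices[device.name] = device

    def api_status(self):
        """Public API: the only view other gadgets get of this gadget's data."""
        return {
            "gadget": self.name,
            "readings": {n: d.value for n, d in self._devices.items()},
        }


@dataclass
class Kitchen:
    """A smart kitchen: a registry of gadgets that talk through their APIs."""
    gadgets: dict = field(default_factory=dict)

    def add_gadget(self, gadget):
        self.gadgets[gadget.name] = gadget

    def query(self, gadget_name):
        # Gadget-to-gadget access goes through the API, never the devices.
        return self.gadgets[gadget_name].api_status()


kitchen = Kitchen()
basket = Gadget("fruit_basket")
basket.add_device(Device("scale", 182.0))
kitchen.add_gadget(basket)
status = kitchen.query("fruit_basket")
```

Keeping devices private to their gadget means a sensor can be swapped out without changing anything that other gadgets depend on.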

This is our diagram for the gadgets. Software development has started, but it is still very minimal because we want to keep the framework flexible at this stage. More information can be found on our GitHub.

![]()

-

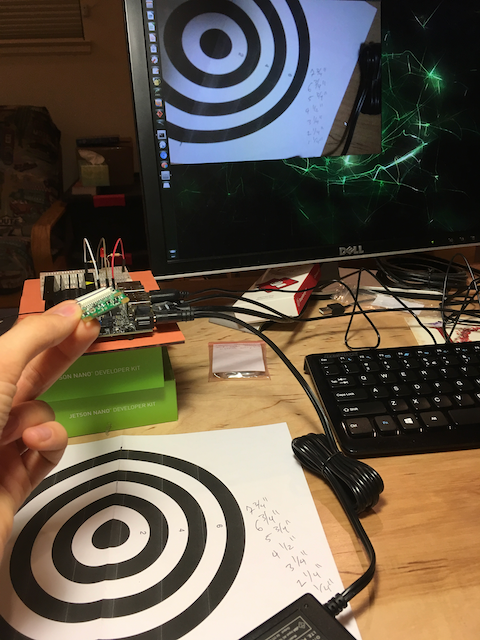

Technical Log: Pi Camera on the Jetson Nano

07/28/2019 at 20:21

A quick test to verify the view angle for the Raspberry Pi camera.

We need a camera in our project to perform computer vision and other typical AI functionality. GitHub link with full information here.

Technical Parameters

The eLinux Wiki provides the technical information about the camera, including the Angle of View: 62.2 x 48.8 degrees.

Empirical Results

By measuring the distance between the camera and the calibrated target, we determined that the camera has a view angle of ~45 degrees, which is close enough to the 48.8 degrees in the spec for our purposes.
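For reference, the geometry behind this kind of measurement is simple: if a target of known extent just fills the frame at a known distance, the view angle is 2·atan((extent / 2) / distance). The sketch below uses made-up numbers (a 250 mm extent at 300 mm), not our actual measurements, and the function names are hypothetical.

```python
import math


def view_angle_deg(visible_extent, distance):
    """Angle of view for a target that just fills the frame at a distance."""
    return math.degrees(2 * math.atan((visible_extent / 2) / distance))


def visible_extent(angle_deg, distance):
    """Inverse: how much of the scene is visible at a given distance."""
    return 2 * distance * math.tan(math.radians(angle_deg) / 2)


# Hypothetical measurement: 250 mm of the target visible from 300 mm away.
measured = view_angle_deg(250, 300)

# What the spec value of 48.8 degrees would predict at the same distance.
expected_extent = visible_extent(48.8, 300)
```

With these example numbers the measured angle comes out near 45 degrees, and the second function shows how much extent the spec angle would cover at the same distance, which makes the gap between the two easy to reason about.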

![]()

-

Design Thinking 1: Empathy

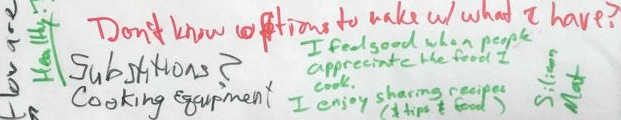

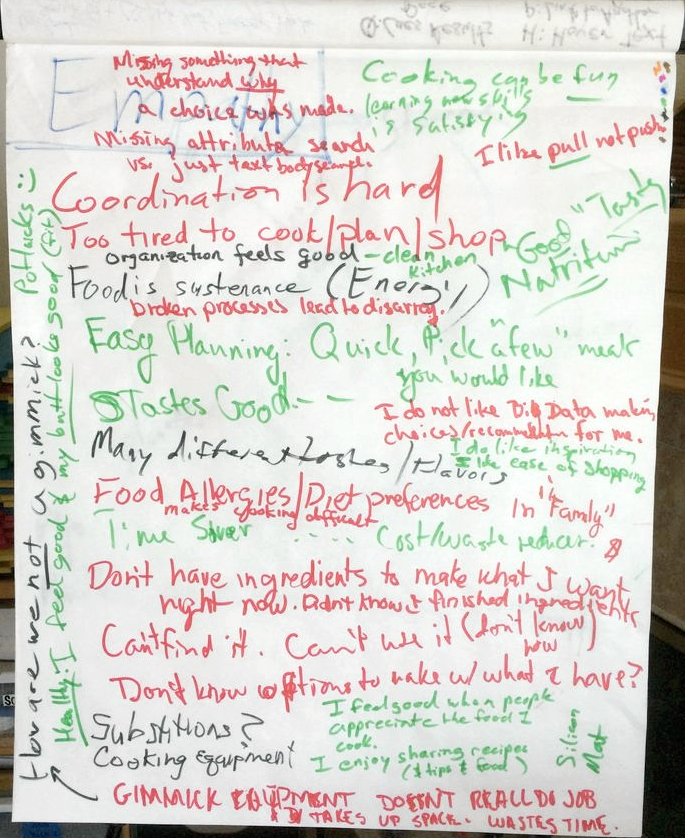

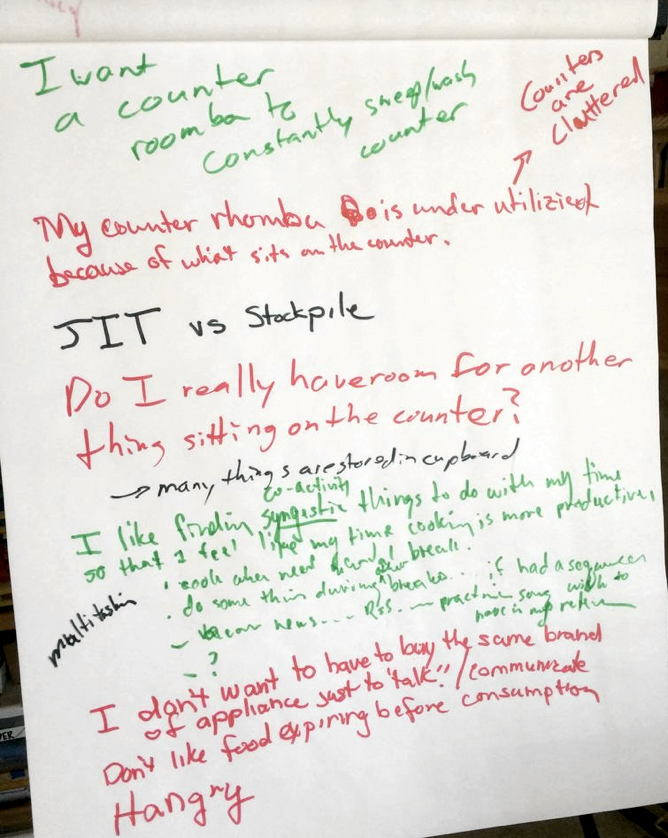

05/21/2019 at 00:39

As we start playing with and testing the Jetson Nano, we are also trying to understand how this technology fits in the kitchen by conducting a mini design thinking exercise. The first step in design thinking is Empathy. We spent time understanding the different habits, attitudes, and problems experienced in the kitchen. Common themes were: variety, health, inspiration/recommendation, time, budget, waste, privacy, and social.

Our three key takeaways are:

1. While food underpins everything we do (we need energy to work, play, and create), people often dread spending excessive amounts of time in the kitchen.

2. People strive to eat and live healthy lives, but often trade a healthy diet for convenience.

3. Though people feel bad creating extra waste (excess packaging, forgotten leftovers, and rotten fruits), chefs make choices that save time and money in the short term in an effort to reduce stress in the kitchen.

We've included photographs from our brainstorming sessions and a text summary of our findings. Click the Read More link below if you're interested.

Is there anything else you think we should add?

We would love your input.

![]()

![]()

![]()

Points of Pain:

- I feel cooking apps/recipe recommenders are missing something that understands why a choice was made.

- I feel that many cooking apps are missing attribute search vs just text body search

- I like pull, not push.

- Coordination is hard

- I'm too tired to cook, plan, and shop

- I feel broken processes lead to disarray

- I do not like big data making choices/recommendations for me.

- I feel that food allergies, taste preferences, and diets make cooking difficult.

- I feel I often don't have ingredients to make what I want right now.

- I don't always know ingredients are finished.

- I want to find my ingredients in my pantry. If I can't find it, I can't use it (Don't know where it is)

- I don't know what to cook with what I have.

- I feel my counters are cluttered

- I feel my counter Roomba is underutilized because of what sits on the counter

- Do I really have room for another thing sitting on the counter?

- I feel that when I'm hangry I can make poor decisions

- I don't want to have to buy the same brand of appliance just to "talk"/ communicate with appliances.

- I don't like food expiring before consumption.

- I don't know how to cook.

- I don't like to decide what to cook for dinner.

- I get stressed trying to determine what to cook.

- I feel guilty when throwing away food.

- I feel rushed when in the kitchen

- I dislike cleaning a lot of dishes

- I don't like eating the same thing again and again and again and again...

Points of ... well, just some data points:

- Food is sustenance (energy)

- Many different tastes, flavors.

- Acceptable substitutions?

- Cooking equipment impacts my decisions on what to cook and storage, etc.

- How are we not a gimmick?

- JIT (Just in time) ordering vs Stockpile vs always out of stock. What's the right level?

- Many things are stored in cupboards

Points of Happiness:

- I feel cooking can be fun.

- I feel satisfaction when I learn new skills

- I feel good when my kitchen is clean and organized

- I want good, tasty, nutritious food.

- I want easy planning, quick pick a few meals you like.

- I do like inspiration for cooking.

- I like ease of shopping

- I want something to help me save time in cooking, planning, shopping, etc.

- I want something to help reduce my food cost and reduce food waste.

- I feel good when people appreciate the food I cook.

- I enjoy sharing recipes (and tips and food). Example: silicone mat.

- I want to feel healthy.

- I feel good and my butt looks good (fit)

- I enjoy potlucks and socializing with food.

- I don't want gimmick equipment that doesn't really do the job, takes up space, wastes time.

- I want a counter Roomba to constantly sweep/wash counter.

- I want to sit down with my family for a proper meal.

- I like to try new foods when I have time.

- I want food recommendations based on what's in my pantry.

- I want to spend less time grocery shopping.

- I like synergistic (non-distracting) activities that I do while cooking. (e.g. relaxing music)

- I like fresh food/produce.

- I like to stock up during sales.

- I would like to know what other people want to eat.

ÓSK Squirrel

An Open Smart Kitchen (OSK) assistant for saving resources and meeting dietary goals by better utilizing the food you squirrel away
