-

First star on the right, then straight on 'till Morning.

10/07/2021 at 03:56 • 0 comments

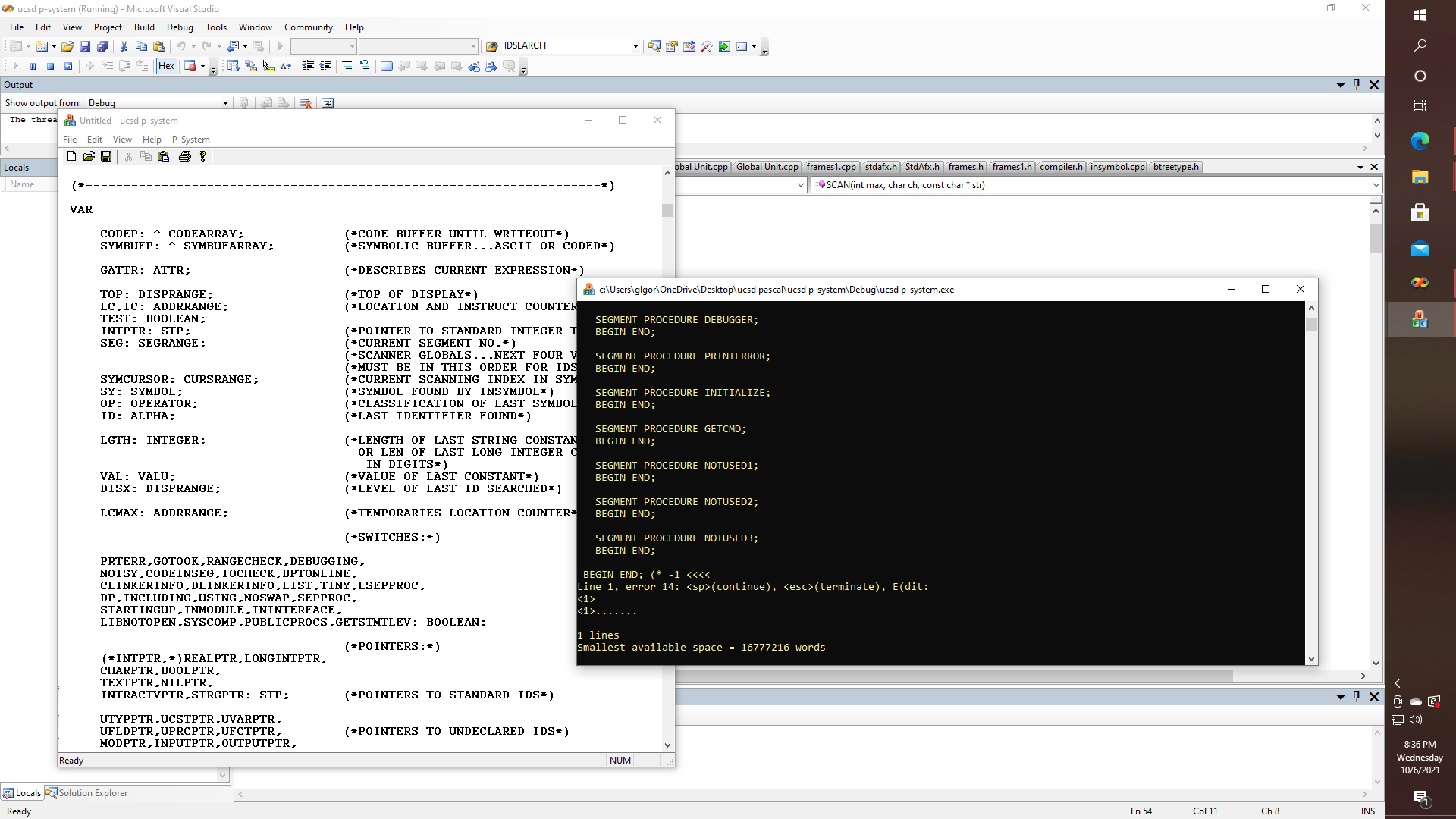

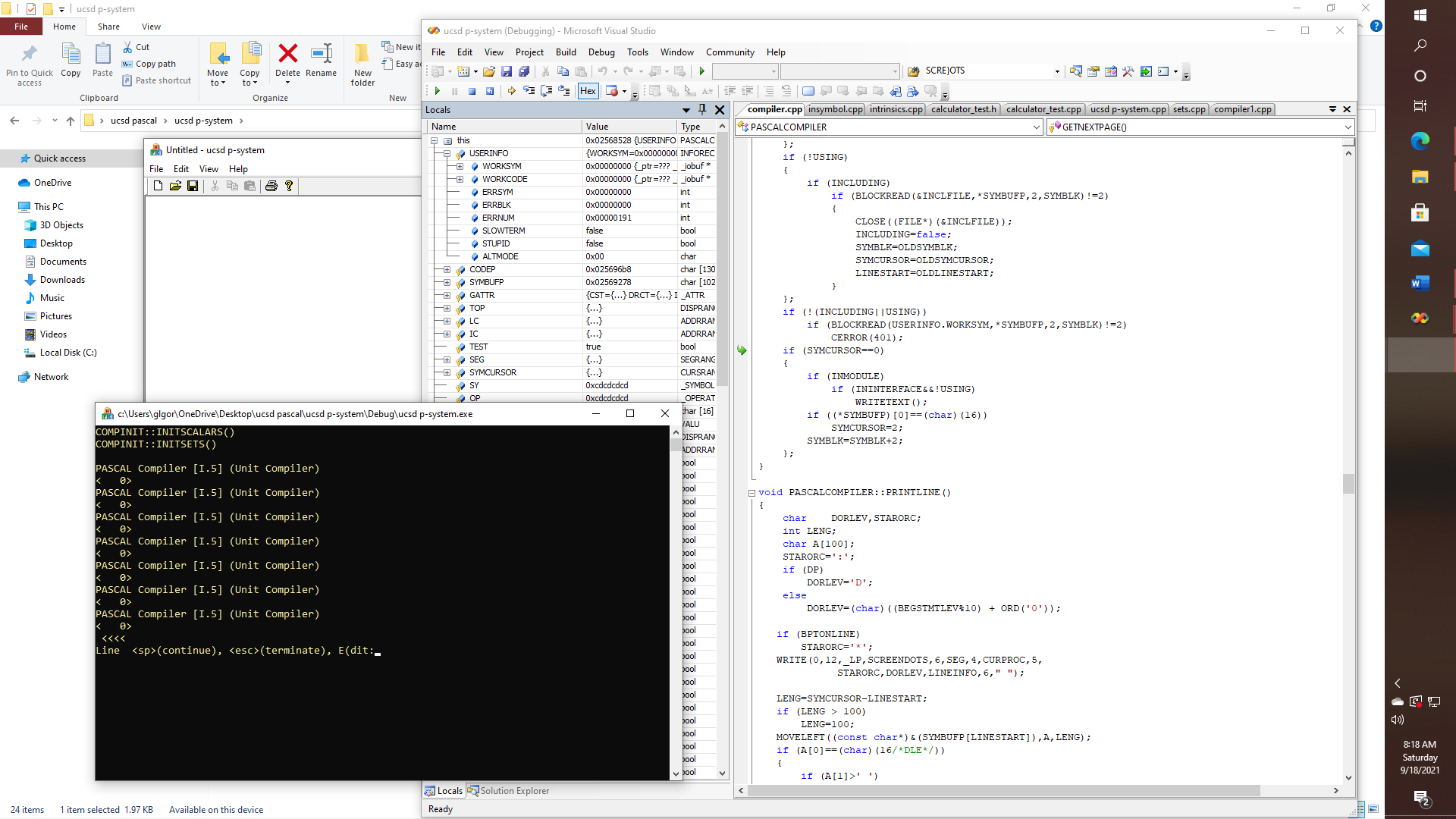

O.K., my C++ conversion of the UCSD p-system Pascal compiler now builds under Visual Studio. It works to the point that I can load a Pascal file into a Microsoft MFC CEditView-derived class and then attempt to compile it, even though I only have about 2500 lines converted so far. Most of that was done with find and replace in a word processor, followed by manually fixing the VAR statements and the WITH statements.

I had to write my own versions of BLOCKREAD, IDSEARCH, and TREESEARCH, among other things, since these were nowhere to be found in the UCSD distribution. I already had a TREESEARCH in my bTreeType class, but that earlier version uses recursion, so I redid it. IDSEARCH is going to be the more interesting one, because the whole concept of checking whether a token is on a list of keywords and then calling an appropriate function is also something that I am doing with Rubidium. My C++ version of the Pascal IDSEARCH currently looks like this:

```cpp
#include "stdafx.h"
#include "afxmt.h"
#include <iostream>
#include <cstring>
#include <cctype>
#include "../Frame Lisp/defines.h"
#include "../Frame Lisp/symbol_table.h"
#include "../Frame Lisp/btreetype.h"
#include "../Frame Lisp/node_list.h"
#include "../Frame Lisp/text_object.h"
#include "../Frame Lisp/scripts.h"
#include "../Frame Lisp/frames.h"
#include "../Frame Lisp/frames1.h"
#include "../Frame Lisp/extras.h"
#include "compiler.h"

namespace SEARCH
{
    // NULL-terminated, like command_proc::debug_tokens, so that
    // frame::cons() can find the end of the list.
    char *keywords[] =
    {
        "DO","WITH","IN","TO","GOTO","SET","DOWNTO","LABEL",
        "PACKED","END","CONST","ARRAY","UNTIL","TYPE","RECORD",
        "OF","VAR","FILE","THEN","PROCSY","PROCEDURE","USES",
        "ELSE","FUNCTION","UNIT","BEGIN","PROGRAM","INTERFACE",
        "IF","SEGMENT","IMPLEMENTATION","CASE","FORWARD","EXTERNAL",
        "REPEAT","NOT","OTHERWISE","WHILE","AND","DIV","MOD",
        "FOR","OR",
        NULL
    };
    frame m_pFrame;
    symbol_table *m_keywords;   // NULL until RESET_SYMBOLS() builds it
    void RESET_SYMBOLS();
    void IDSEARCH(int &pos, char *&str);
};

void SEARCH::RESET_SYMBOLS()
{
    frame &f = SEARCH::m_pFrame;
    symbol_table *t=NULL;
    t = f.cons(keywords)->sort();
    m_keywords = t;
}

void SEARCH::IDSEARCH(int &pos, char *&str)
{
    // build the sorted keyword table once, not on every call
    if (SEARCH::m_keywords==NULL)
        SEARCH::RESET_SYMBOLS();
    symbol_table &T = *SEARCH::m_keywords;
    size_t i, len, sz;
    sz = T.size();
    token *t;
    pos = -1;
    for (i=0;i<sz;i++)
    {
        t = &T[(int)i];
        len = strlen(t->ascii);
        // strncmp stops at the end of str, so it cannot read past the input
        // the way memcpy into a fixed buffer could; the isalnum() test
        // rejects prefix matches such as "DO" at the start of "DOWNTO".
        if ((strncmp(str,t->ascii,len)==0)&&!isalnum((unsigned char)str[len]))
        {
            pos=(int)i;
            // PASCALCOMPILER::SY;
            // PASCALCOMPILER::OP;
            // PASCALCOMPILER::ID;
            break;
        }
    }
}
```

Now, as it turns out, we are told that on the Apple II this function was actually implemented in 6502 assembly, which is why it is not in the UCSD distribution. What it does, of course, is check whether the symbols extracted by INSYMBOL are in the list of Pascal keywords. It then sets a bunch of ordinal values, enumerated types, or whatever in the global variable space. That is exactly what I need to do here, but it is not what I want to do in Rubidium, where I don't want to use global variables. That would be TOO simple.
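
For comparison, here is a minimal standalone sketch of the same keyword lookup in the no-globals spirit described above for Rubidium. It returns its result instead of writing SY/OP into global state. It does not use the Frame Lisp symbol_table. The names idsearch and KeywordHit, the hand-sorted table, and the upper-casing (Pascal keywords are case-insensitive) are all assumptions for illustration. std::lower_bound replaces the linear scan.

```cpp
#include <algorithm>
#include <cctype>
#include <cstring>
#include <iterator>

// Hypothetical result type: the lookup reports what it found
// instead of modifying global compiler state.
struct KeywordHit {
    int index;      // position in kKeywords, or -1 if not a keyword
    size_t length;  // characters of the identifier that were scanned
};

// Must stay sorted in strcmp order for std::lower_bound to work.
static const char* const kKeywords[] = {
    "AND", "ARRAY", "BEGIN", "CASE", "CONST", "DIV", "DO", "DOWNTO",
    "ELSE", "END", "EXTERNAL", "FILE", "FOR", "FORWARD", "FUNCTION",
    "GOTO", "IF", "IMPLEMENTATION", "IN", "INTERFACE", "LABEL", "MOD",
    "NOT", "OF", "OR", "OTHERWISE", "PACKED", "PROCEDURE", "PROCSY",
    "PROGRAM", "RECORD", "REPEAT", "SEGMENT", "SET", "THEN", "TO",
    "TYPE", "UNIT", "UNTIL", "USES", "VAR", "WHILE", "WITH"};

// Scan one identifier (letters and digits) at str, then look it up.
KeywordHit idsearch(const char* str) {
    size_t len = 0;
    while (std::isalnum(static_cast<unsigned char>(str[len]))) ++len;

    char word[16] = {0};  // longest keyword, IMPLEMENTATION, has 14 chars
    if (len == 0 || len >= sizeof word) return {-1, len};
    for (size_t i = 0; i < len; ++i)
        word[i] = static_cast<char>(std::toupper(static_cast<unsigned char>(str[i])));

    auto first = std::begin(kKeywords), last = std::end(kKeywords);
    auto it = std::lower_bound(first, last, word,
        [](const char* a, const char* b) { return std::strcmp(a, b) < 0; });
    if (it != last && std::strcmp(*it, word) == 0)
        return {static_cast<int>(it - first), len};
    return {-1, len};
}
```

Because the whole identifier is scanned before the lookup, "DOWNTO" is found as DOWNTO rather than as a DO prefix.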
Even if this should turn out to be a refactoring job from hell, the payoff, in the end, will be well worth it - once I have clean C code that can be compiled, or transmogrified, or whatever to also run on the Propeller, or the Pi, or an Arduino, etc., such as by trans-compiling C to Forth, or C to SPIN, or C to Propeller assembly (PASM) or perhaps a mixture of all three. Oh, and let's not forget about LISP.
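
As for TREESEARCH, a non-recursive binary-tree search only needs a loop that follows one child pointer per step. The Node layout, the name tree_search, and the return convention are assumptions for illustration. The convention is: 0 means found; -1 or +1 says whether a new node would attach as the left or right child of `found`. These are modeled on the idea of the UCSD routine, not copied from it.

```cpp
#include <cstring>

// Hypothetical node layout; the real bTreeType and UCSD identifier records differ.
struct Node {
    const char* name;
    Node* left;
    Node* right;
};

// Iterative search: no recursion, so stack depth does not grow with tree height.
int tree_search(Node* root, Node*& found, const char* name) {
    found = root;
    while (found != nullptr) {
        int c = std::strcmp(name, found->name);
        if (c == 0) return 0;  // exact match
        Node* next = (c < 0) ? found->left : found->right;
        if (next == nullptr) return (c < 0) ? -1 : 1;  // insertion point
        found = next;
    }
    return -1;  // empty tree: found stays nullptr
}
```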

-

Pay no attention to the man behind the Curtain.

09/26/2021 at 22:37 • 0 comments

That is a whole subject unto itself. The "curtain" that separates the developers from the users is generally there for a good reason, for example when a full "debug version" of a program runs 50x slower than its release counterpart. But software also has the "Uh oh! Something bad happened!" kind of problem. You never want that to happen with your flight control system, your robotic surgery unit, your previously self-driving car, or your robotic whatever. That holds whether or not "it", whatever "it" is, tries to re-enact a scene from "The Lawnmower Man" or "Eagle Eye".

So let's talk about debugging, code portability, and code bloat. Here is a fun piece of code, but what does it do, what was it intended to do, and what can it really do?

```cpp
#include <cstddef>

// Tag recording which member of the union below is in use.
typedef enum { CHAR1, CHARPTR1, DOUBLE1, FLOAT1, INT1, SIZE1 } PTYPE;

class deug_param
{
public:
    PTYPE m_type;
    union
    {
        char ch;
        const char *str;   // const so that string literals bind in standard C++
        double d;
        float f;
        int i;
        size_t sz;
    };
    deug_param (char arg);
    deug_param (const char* arg);
    deug_param (double arg);
    deug_param (float arg);
    deug_param (int arg);
    deug_param (size_t arg);
};
```

Before we answer some of those questions in more detail, let's examine some of the implementation details.

```cpp
// Overload resolution picks the constructor; the constructor records the type.
deug_param::deug_param (int arg)
{
    m_type = INT1;
    i = arg;
}

deug_param::deug_param (size_t arg)
{
    m_type = SIZE1;
    sz = arg;
}
```

O.K., before you say "nothing to see here, these aren't the droids you are looking for", take a closer look. At compile time, overload resolution selects the constructor that matches the argument's type. That constructor stores the value and records its type in m_type. The type of any variable we pass in is therefore available at run time, which allows run-time type checking of floats, ints, strings, etc. This means that we can do THIS in C++!

```cpp
// EXCERPT from void PASCALCOMPILER::CERROR(int ERRORNUM)
....
    if (NOISY)
        WRITELN(OUTPUT);
    else if (LIST&&(ERRORNUM<=400))
        return;
    if (LINESTART==0)
        WRITE(OUTPUT,*SYMBUFP,SYMCURSOR.val);
    else
    {
        ERRSTART=SCAN(-(LINESTART.val-1),char(EOL),
            &(*SYMBUFP[LINESTART.val-2])+(size_t)(LINESTART.val)-1);
        MOVELEFT(&(*SYMBUFP[ERRSTART]),&A[0],SYMCURSOR-ERRSTART);
        WRITE(OUTPUT,A /*SYMCURSOR-ERRSTART*/ );
    };
    WRITELN(OUTPUT," <<<<");
    WRITE(OUTPUT,"Line ",SCREENDOTS,", error ",ERRORNUM,":");
    if (NOISY)
        WRITE(OUTPUT," <sp>(continue), <esc>(terminate), E(dit");
    char param[] = ":";
    WRITE(OUTPUT,param);
}
.... etc ....
```

Now, as I said earlier, with the right set of macros and some clever "glue", the C/C++ pre-processor and compiler can be made to chow down on Pascal programs, quite possibly without modification. There is some debate, of course, as to whether the C/C++ pre-processor is actually Turing complete. The C standard only requires compilers to honor modest translation limits, for example 63 significant characters in a macro name or internal identifier and 4095 characters in a logical source line. More fundamentally, a macro is never re-expanded inside its own expansion, so the pre-processor cannot recurse or loop on its own. Hence, "at best", some consider the pre-processor to be a kind of "pushdown automaton". Yet, as I said, with the right "persuasion", the grammar of any well-defined programming language should be able to be transmogrified into any other.
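
The rule that stops the pre-processor from recursing is easy to demonstrate. During rescanning, a macro's own name is not replaced again (C11 6.10.3.4). In the hypothetical sketch below, `depth` expands to `(depth + 1)` exactly once, so `probe()` returns 1 instead of expanding forever.

```cpp
// Ordinary variable, declared before the macro exists.
static int depth = 0;

#define depth (depth + 1)
// The body expands to: return (depth + 1);
// The inner `depth` is not re-expanded, so this refers to the variable.
static int probe() { return depth; }
#undef depth
```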

Oh, and by the way, here is how you get the C++ compiler to chow down on Pascal WRITE and WRITELN statements, at least in part. Here function overloading does the work rather than the pre-processor. I haven't fully implemented Pascal-style text formatting yet (it's not a high priority).

First, we use our "debug parameters class" to capture variable types during compilation.

```cpp
void _WRITE(int uid, size_t sz, ...);   // defined below

// One overload per argument count; each deug_param records its own type.
void WRITE(int uid, deug_param w1)
{
    _WRITE(uid,1,&w1);
}
void WRITE(int uid, deug_param w1, deug_param w2)
{
    _WRITE(uid,2,&w1,&w2);
}
void WRITE(int uid, deug_param w1, deug_param w2, deug_param w3)
{
    _WRITE(uid,3,&w1,&w2,&w3);
}
// etc ...
// NOTE that this can also be done with templates or macros.
```

Then we use another version of _WRITE or _WRITELN, which accepts C-style variadic arguments (va_list), and it WORKS. Variadic arguments don't otherwise carry type information, but here every argument is a deug_param*, and each one carries its own m_type tag.

```cpp
#include <cstdarg>
#include <cstdio>
#include <cstring>

void _WRITE(int uid, size_t sz, ...)
{
    char buffer1[256];
    char buffer2[256];
    deug_param *val;
    unsigned int i;
    va_list vl;
    va_start(vl,sz);
    i=0;
    memset(buffer1,0,256);
    memset(buffer2,0,256);
    while (i<sz)
    {
        // every variadic argument is a deug_param*, so the tag tells us
        // which union member to format
        val=va_arg(vl,deug_param*);
        switch (val->m_type)
        {
        case CHAR1:    sprintf_s(buffer1,256,"%c",val->ch); break;
        case CHARPTR1: sprintf_s(buffer1,256,"%s",val->str); break;
        case DOUBLE1:  sprintf_s(buffer1,256,"%lf",val->d); break;
        case FLOAT1:   sprintf_s(buffer1,256,"%f",val->f); break;
        case INT1:     sprintf_s(buffer1,256,"%d",val->i); break;
        case SIZE1:    sprintf_s(buffer1,256,"%zu",val->sz); break;
        default:       buffer1[0]=0; break;   // don't re-append the previous item
        }
        strcat_s(buffer2,256,buffer1);
        i++;
    }
    va_end(vl);
    SYSCOMM::OutputDebugString(buffer2);
}
```

Presto! Now a Pascal program that is being ported to C/C++ can run with far less modification. For example, we do NOT have to do this:

```cpp
#if 0
void PASCALCOMPILER::CERROR(int err)
{
    char buffer[64];
    sprintf_s(buffer,64,"Compiler Error: %d",err);
    WRITELN(OUTPUT,buffer);
}
#endif
```

Thus, our Pascal-to-C/C++ code can remain in a more pristine state. It is not cluttered with unwanted printf's, scanf's, and other things that might not even be available in a recent IDE targeting a modern OS. Such an OS might require UNICODE strings, for example, a change that has left millions, if not billions, of lines of broken or unmaintainable code. Sadly, this can mean that older "tried and true" applications, written a "long time ago" in some language like COBOL or Ada or who knows what, get replaced with code that is "completely rewritten" but also "completely broken." And then what? Do the planes fall out of the sky? Does the reactor melt down? Do buildings collapse because someone can't import the plans from the old CAD package into the new one, so they don't even know how serious the problem is?

I think that you can figure out the consequences of "inadequate" software tools.
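
The WRITE overloads earlier in this log note that the same thing can be done with templates. Here is a minimal sketch using a C++17 variadic template and fold expression. Each argument keeps its static type, so neither the deug_param tag nor va_list is needed. The names write_str and writeln_str are hypothetical. Output goes to a std::string rather than to OUTPUT, and Pascal field-width formatting (X:8:2) is not handled.

```cpp
#include <sstream>
#include <string>

// Any number of arguments of any streamable type; the compiler picks the
// right operator<< for each one, so no runtime type tag is required.
template <typename... Args>
std::string write_str(const Args&... args) {
    std::ostringstream out;
    (out << ... << args);  // C++17 left fold: ((out << a1) << a2) << ...
    return out.str();
}

// WRITELN is WRITE plus an end of line.
template <typename... Args>
std::string writeln_str(const Args&... args) {
    return write_str(args...) + "\n";
}
```

For example, `write_str("Line ", 12, ", error ", 400, ":")` yields `"Line 12, error 400:"`. The trade-off is one template instantiation per distinct argument-type list, instead of a single runtime switch.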

-

Introducing "General Concept"

09/23/2021 at 21:40 • 0 comments

Early on in this project I said that there would be robots, and by that I meant robots, plural, not singular. The focus of this project is primarily AI development, data acquisition, hardware interfacing, and control systems. Even so, every now and then, as Han Solo would say, "Sometimes I amaze even myself."

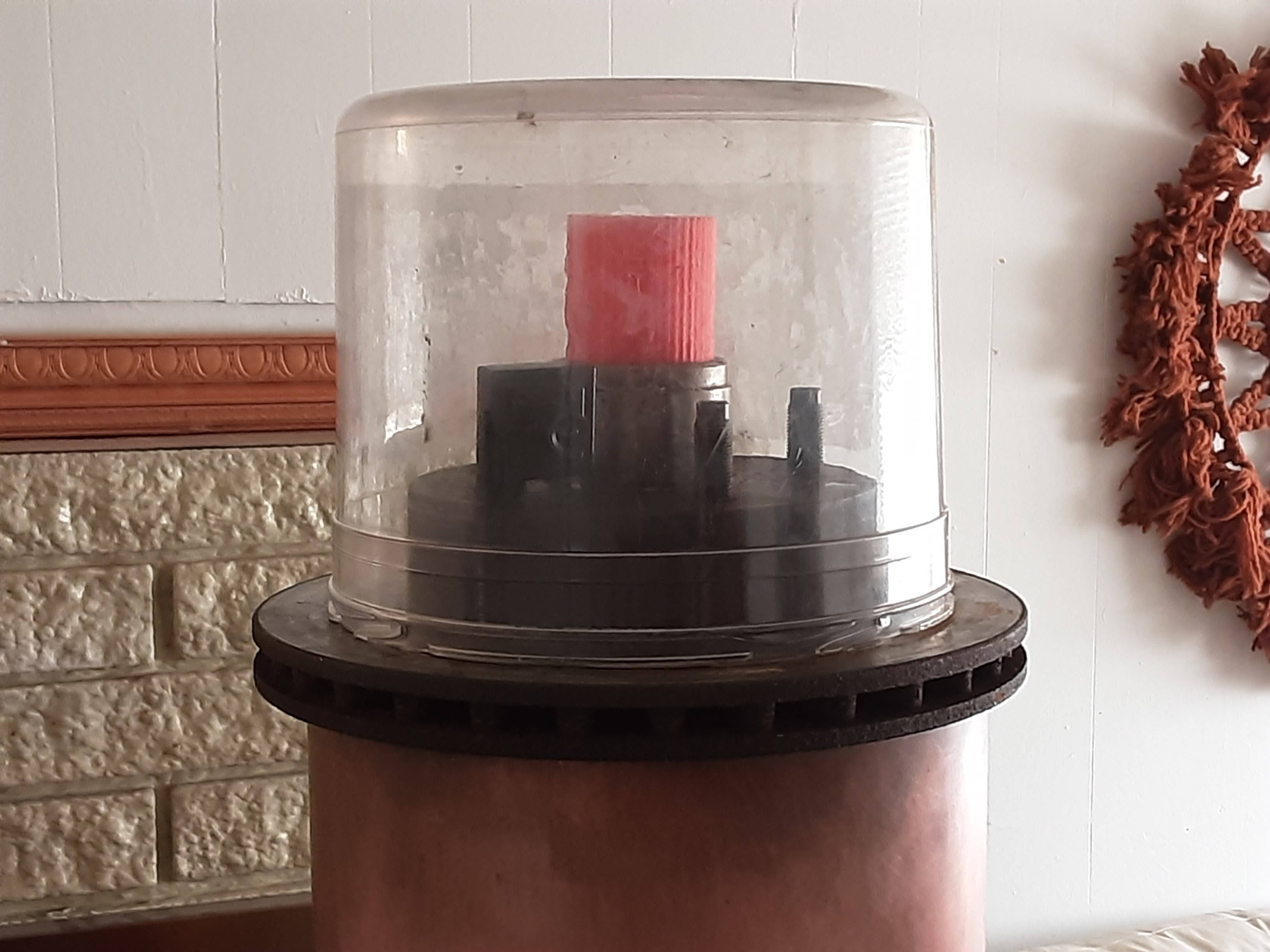

So here now we finally meet General Concept.

This could actually get very interesting.

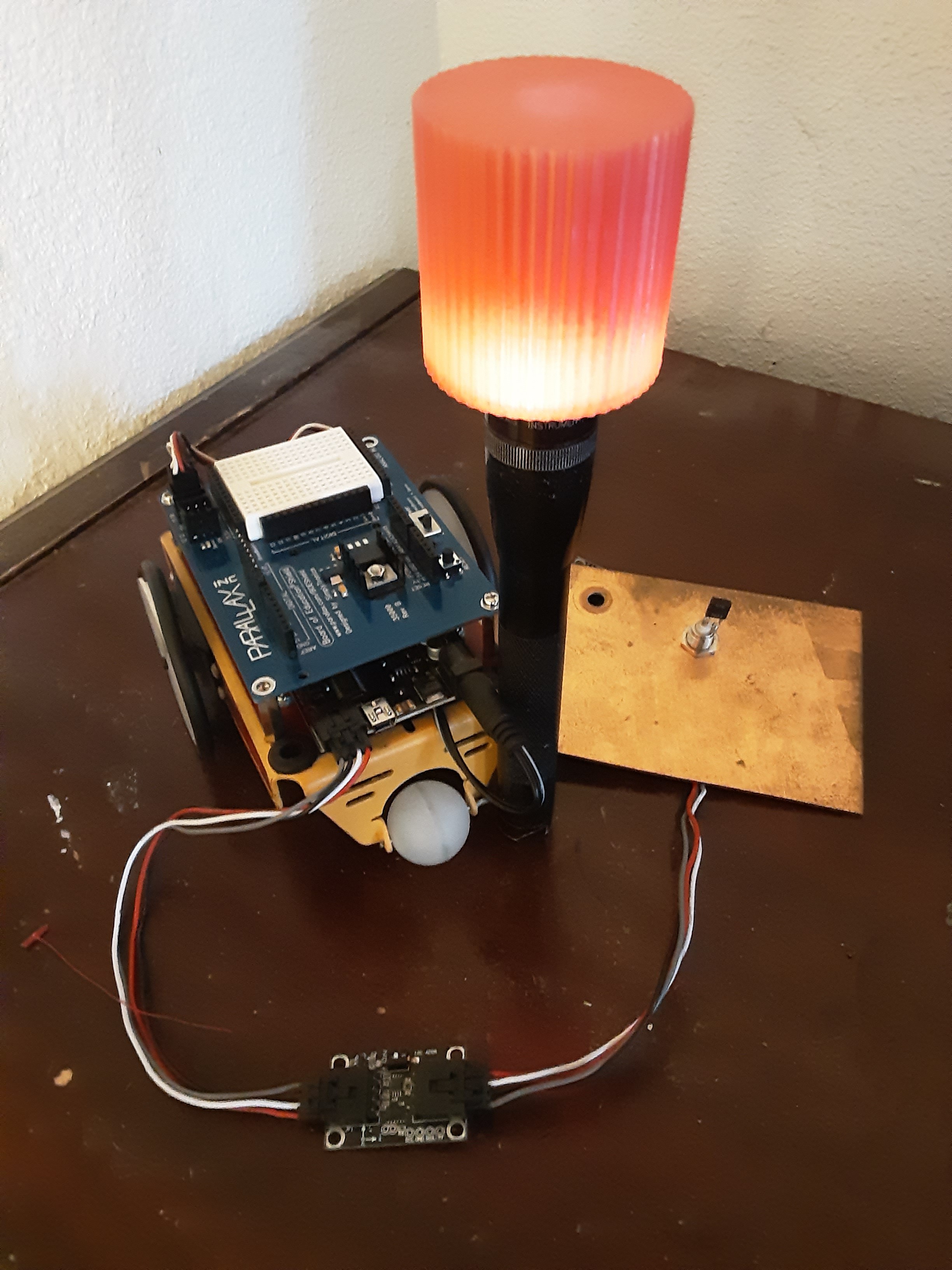

The 3D-printed gear might also work as an optical diffuser. That would be useful for things such as omnidirectional infrared, or just for lighting up really nicely when the robot is speaking or preparing to move.

That doesn't mean that I have abandoned the concept of the Boe-dozer, by any means. There are plenty of pathways worthy of exploration. Now getting back on track with the software side of things should be my main focus, in the time remaining. Stay tuned!

-

Where have all the Flowers Gone?

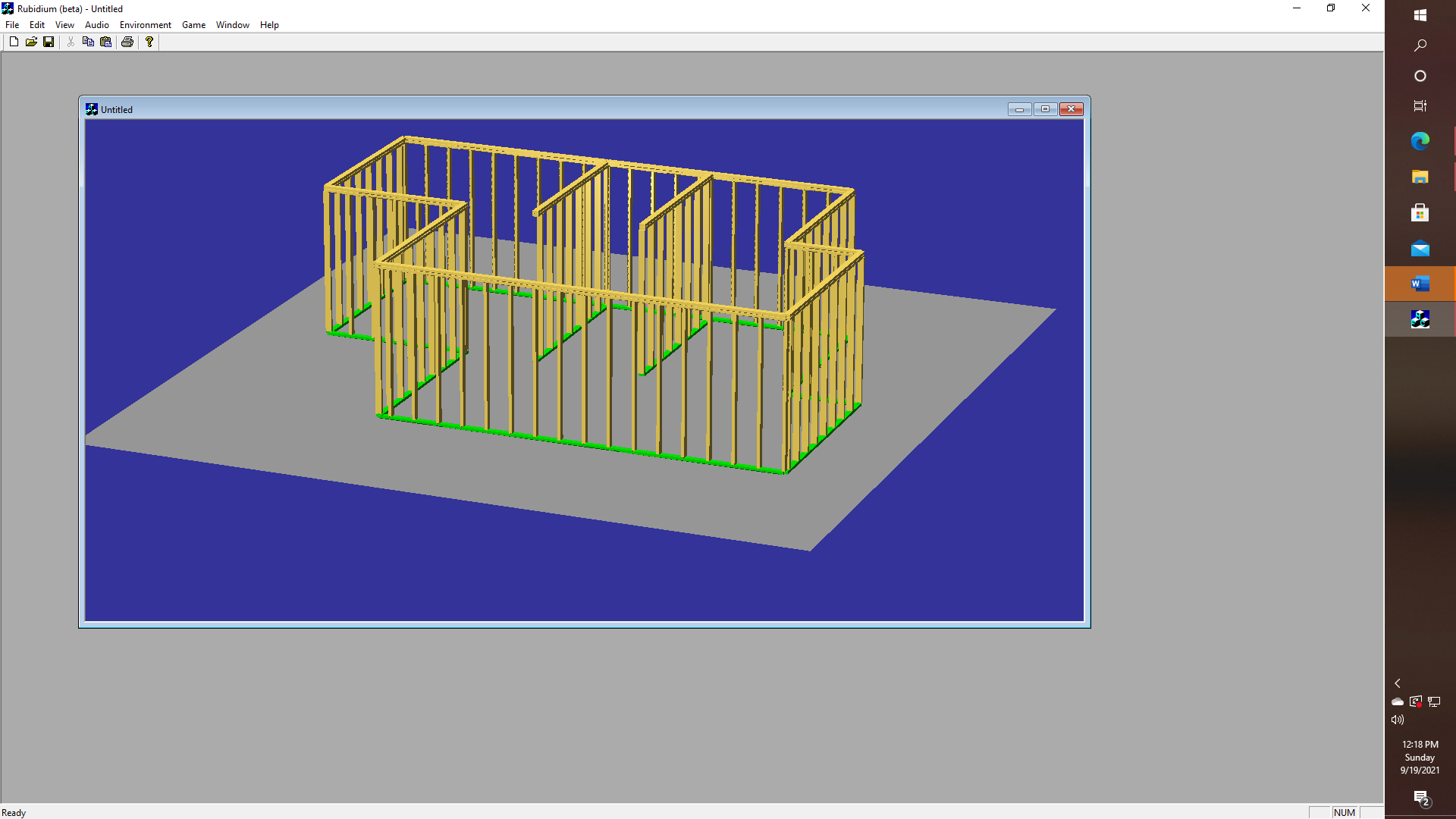

09/19/2021 at 18:22 • 0 comments

I still haven't decided whether I should try to go the "Boe-dozer" route like I was thinking about earlier. Or maybe I could mount a small NTSC-compatible video camera as if it were part of some kind of turret, like a miniature "tank." It would also be nice to have a "bigger barn". Then I would be better equipped to deal with issues of scale when planning and/or doing live modeling, instead of just talking about running things in "simulation".

This is the post that I was going to entitle "Go Ask Alice (when she is ten feet tall)", but I changed my mind after reading some of the news about Afghanistan. A relief worker and seven children were recently killed, and now the Generals have just admitted that "no explosives" were found, and that no link to ISIS existed. Maybe this isn't the place to be political. Otherwise I could have said something about being "Wasted away again in Margaritaville", since "I read the news today oh boy" about empty Tequila bottles being found strewn about in the new Air Force One. End of rant.

Back on topic: if robots become a part of our daily lives, and AI is going to be relied upon to make life-or-death decisions, well, are we ready yet to meet our new robot overlords?

O.K., off the rant now, and back on topic, I hope! Imagine a robot that we can give commands like "IDENTIFY THE ROOM WHICH CONTAINS THE CRYSTAL PYRAMID BUT DO NOT ATTEMPT TO ENTER ANY ROOM WHERE TROLLS MIGHT BE PRESENT." This sort of thing is very doable with game engines of the type used to implement classics like "Adventure", "Zork", or "The Wizard and the Princess". That is, provided we have a way of building an accurate model of the world that we want our robots to exist, or CO-EXIST, in.

Elon Musk's recent video about how Dojo will work was very interesting in several ways, such as how the model view for autonomous self-driving cars is supposed to work. It's one thing to talk about OpenGL and graphics. Yet there is so much fertile ground for exploration with programs that try to generate an accurate point cloud by actual exploration. From there they can move on to defining vertex information, line sets, and bounding planes, then to the convex hull and collision domains, with object recognition thrown in along the way.

The Boe-Bot is, of course, a bit too big to enter and leave, on its own, the various rooms of the model house that I built. That is just another Goldilocks problem, I suppose, just as it is a bit small to competently navigate a real-world labyrinth. And I could still use a bigger barn.
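
Of the steps listed above, the convex hull is the easiest to sketch. Below is Andrew's monotone chain algorithm for points in a plane, for instance a floor-level slice of a point cloud. A real point cloud is 3D and would need a 3D hull algorithm such as quickhull. The Pt type and the function names are assumptions for illustration.

```cpp
#include <algorithm>
#include <vector>

struct Pt { double x, y; };

// z-component of (a - o) x (b - o); positive means a counter-clockwise turn.
static double cross(const Pt& o, const Pt& a, const Pt& b) {
    return (a.x - o.x) * (b.y - o.y) - (a.y - o.y) * (b.x - o.x);
}

// Andrew's monotone chain: returns hull vertices in counter-clockwise order,
// dropping interior and collinear points. O(n log n) because of the sort.
std::vector<Pt> convex_hull(std::vector<Pt> pts) {
    std::sort(pts.begin(), pts.end(), [](const Pt& a, const Pt& b) {
        return a.x < b.x || (a.x == b.x && a.y < b.y);
    });
    if (pts.size() < 3) return pts;

    std::vector<Pt> hull(2 * pts.size());
    size_t k = 0;
    for (size_t i = 0; i < pts.size(); ++i) {              // lower chain
        while (k >= 2 && cross(hull[k - 2], hull[k - 1], pts[i]) <= 0) --k;
        hull[k++] = pts[i];
    }
    for (size_t i = pts.size() - 1, t = k + 1; i-- > 0;) {  // upper chain
        while (k >= t && cross(hull[k - 2], hull[k - 1], pts[i]) <= 0) --k;
        hull[k++] = pts[i];
    }
    hull.resize(k - 1);  // the last point repeats the first
    return hull;
}
```

For the corners of a 2 x 2 square plus its center point, the function returns the four corners, starting at (0, 0) and going counter-clockwise.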

Real-world programs, of course, might be variations of the type "IF TROLLS ARE FOUND YOU MAY FIRE MISSILES, AND CONTINUE FIRING MISSILES UNTIL ALL TROLLS ARE KILLED, BUT IF A CRYSTAL PYRAMID IS FOUND, WHICH IS BEING GUARDED BY THE TROLLS, OR OTHERWISE BEING HELD HOSTAGE, THEN CONTACT BASE COMMAND FOR FURTHER INSTRUCTIONS". Oops, I said that I wasn't going to get political, and I just did.

Somewhere, I have the source code for an old Eliza program that I converted to run under my Frame Lisp APIs. So I am fairly certain that I can get some SHRDLU-like functionality up, but honestly, this thing is huge, and the implications of this technology are even larger.

Consider, for example, the economic consequences if an elderly person falls and breaks a hip, and then isn't strong enough, even after hip replacement surgery, to use a walker or a wheelchair on their own. The cost of nursing home care in the United States can run anywhere from $5000 to over $25000 per month. Medicaid does not kick in until after you sell your stocks and other assets, and even then they can (or at least will try to) put a lien on your house (or your parent's house). Now, how much does that add up to if it goes on for three or four years? Or eight? How many people have an extra million or two to watch evaporate?

The whole concept of Elon's household robots has a certain appeal, at least where someone might be able to live semi-independently if "someone" were available to help. That help might be bringing in the mail, taking out the trash, putting things away, taking things down from high shelves, etc. In the meantime, the very rich will continue to get even richer, and the middle class will continue to vanish.

That, of course, wasn't the exact model that I actually built. Nonetheless, I think it should be possible to use a P2 chip as the "brain" for a simple robot. We might have to give it the model that we already have. Better yet, if it is possible (it should be), it could take video input and generate the point cloud, vertex list, wireframe, surface list, etc., so that it should BE ABLE TO DO SOMETHING!

-

Once Upon a Midnight Dreary.

09/18/2021 at 15:33 • 0 comments

What is a programming language, and how is it specified? The following set of macros is meant to give the user a head start on getting almost any C compiler (actually the pre-processor) to chow down on Pascal programs. As nearly always, or so it seems, there is still a lot more work to be done, even if the only purpose of this exercise (for now) is to prove a point. Indeed, before we can even think about what a programming language is, maybe we need to address the issue of "what is a computer program?"

```cpp
// Sketch only: a real C pre-processor accepts only identifiers as macro
// names, so the directives below that try to redefine punctuation
// ({, }, :=, =, ;, [, ]) would need a separate token-rewriting pass.
#define { /*
#define } */
#define PROCEDURE void
#define BEGIN {
#define END }
#define := ASSIGN_EQ
#define = COMPARE_EQ
#define IF if (
#define ASSIGN_EQ =
#define COMPARE_EQ ==
#define THEN )
#define REPEAT do {
#define UNTIL } UNTIL_CAPTURE
#define UNTIL_CAPTURE (!\
#define ; );\
#undef UNTIL_CAPTURE
#define ; );
#define = [ = SET(\
#define ] )\
#define ) \
#undef = [\
#undef ]
// so far so good ....
#define WITH ????????
```

But first, let us consider how some hypothetical Alice could now convert her Pascal programs from that class she took in high school to run on Bob's C compiler. On the one hand, that compiler is hopefully lint-compatible; on the other, it might also have a C-to-Spaghetti-BASIC runtime option. Or can she? Probably not with Visual Studio Code, at least in its present incarnation. Sadly, this sort of thing will turn into an even bigger nightmare with Microsoft's purported AI approach to managing (or, as I hinted at earlier, "mangling") code libraries on Git. It is as if we should be entering a new era of code renaissance. But to paraphrase Ted Nelson, who once said, "no matter how clever the hardware boys are, the software boys will piss it away" ... well, I will leave that as an exercise for the reader.
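
For contrast, here is a minimal sketch of the subset of those macros that a standard C/C++ pre-processor does accept, because every macro name is an identifier. Pascal's := and = still have to be rewritten by hand, and UNTIL takes its condition in parentheses. The function sum_down is a hypothetical example, not something from the UCSD sources.

```cpp
// Only identifier-named macros: this part is legal C and C++.
#define BEGIN {
#define END }
#define IF if (
#define THEN )
#define REPEAT do {
#define UNTIL(cond) } while (!(cond))

// Pascal original (for reference):
//   IF n <= 0 THEN sum_down := 0 ELSE ...
//   REPEAT sum := sum + n; n := n - 1 UNTIL n = 0
int sum_down(int n)
BEGIN
    int sum = 0;
    IF n <= 0 THEN return 0;
    REPEAT
        sum = sum + n;   // := rewritten as =
        n = n - 1;
    UNTIL(n == 0);       // = rewritten as ==
    return sum;
END

// Keep the Pascal keywords from leaking into later code.
#undef BEGIN
#undef END
#undef IF
#undef THEN
#undef REPEAT
#undef UNTIL
```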

You can get about a million hits on any search engine if you search for "context-free grammars". Despite that, it appears that a simple macro language based on the C pre-processor might indeed be Turing complete, at least in principle. That would require some improvements to the overall concept of what a context is, or of whether contexts should, in general, be understood in a dynamic sense, if that can be done in a well-defined way.

The Pascal WITH statement might give a simple macro-based translation scheme fits. The pre-processor would need a way of maintaining a restricted symbol table, so that it could match otherwise undeclared identifiers (the record fields named inside the WITH block) with the correct objects. This is deeper than the problem with sets, but it is related to another issue. Suppose we encounter a group of statements like SET S = [2,3,5]; S = S + [7,11,13];. We try to transform the latter statement into something like S = ((SET)S)+SET(7,11,13);, in effect enforcing a C-style cast on every operand everywhere in the translation.

Casting a variable to its own type has no effect on any subsequent C compilation. (Footnote: consider int a,b,c; a = (int)b + int(c); which compiles just fine as C++. The functional-style cast int(c) is C++ only, and since a cast yields a value rather than an lvalue, a cast on the left-hand side, as in (int)a = ..., is rejected by standard C and C++.) Yet it does give a lispy feel to the output, or maybe I should say Python?

That is to say, suppose an intermediate representation of a program, under some translation scheme, explicitly required that every occurrence of a parameter be tagged with type information. That would give the meta-language at least some of the properties of a reflective language. This in turn implies that even though C/C++ does not explicitly support reflection, we can add reflection via a suitable set of library routines. These would be invoked as appropriate when converting algorithms that can be specified in some meta-language.
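
Here is a minimal sketch of a Pascal-style SET type that makes the S + [7,11,13] translation work in C++. Several things are assumptions: members are limited to 0..255 (backed by std::bitset<256>), the class name SET follows the text, and brace syntax SET{7, 11, 13} stands in for the SET(7,11,13) spelling. As in Pascal, + is union, * is intersection, and - is difference. Casting a SET to SET is just a copy, so an operand cast such as ((SET)S) on the right-hand side is harmless.

```cpp
#include <bitset>
#include <cstddef>
#include <initializer_list>

// Hypothetical Pascal-style SET OF 0..255.
class SET {
public:
    SET() = default;
    // Members outside 0..255 make std::bitset::set throw std::out_of_range.
    SET(std::initializer_list<int> members) {
        for (int m : members) bits_.set(static_cast<std::size_t>(m));
    }
    // Pascal: m IN S  (also throws std::out_of_range outside 0..255)
    bool has(int m) const { return bits_.test(static_cast<std::size_t>(m)); }
    std::size_t count() const { return bits_.count(); }

    friend SET operator+(SET a, const SET& b) { a.bits_ |= b.bits_; return a; }   // union
    friend SET operator*(SET a, const SET& b) { a.bits_ &= b.bits_; return a; }   // intersection
    friend SET operator-(SET a, const SET& b) { a.bits_ &= ~b.bits_; return a; }  // difference

private:
    std::bitset<256> bits_;
};
```

Usage: `SET S{2, 3, 5}; S = S + SET{7, 11, 13};` leaves S holding {2, 3, 5, 7, 11, 13}.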

Nothing is better than steak and eggs for breakfast; but stale cereal is better than nothing, right? Does it, therefore, follow that if A is better than B and B is better than C that A is also better than C? Maybe somewhere in the land of GNU, hidden among an abundance of NILs, NOTs, NULLs, and NEVERMORES, there is some hidden treasure, or some deadly pitfalls, besides and among those things aforementioned.

Now, if that is so, then is stale cereal better than steak and eggs? Not if any "better than" operator must always be contextually aware. In the context of "does rock beat scissors?", we can suggest that the "beats" operator is like the "better than" operator, on the one hand. Yet with "steak and eggs", the error is that the meaning of the word "nothing" has changed from one context to another within an apparent frame. For those familiar with Galois fields, on the other hand, the rock vs. scissors vs. paper example could be understood as a limiting case of yet another overarching set type, where local order is preserved but circular dominance can also exist.
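
The circular dominance of rock, paper, and scissors can be sketched with arithmetic modulo 3, the additive group of GF(3). With the encoding below, which is an assumption, x beats y exactly when x - y is 1 mod 3. Each hand beats exactly one other hand, so "beats" is not transitive: Scissors beats Paper and Paper beats Rock, yet Rock beats Scissors.

```cpp
// Assumed encoding: Rock = 0, Paper = 1, Scissors = 2.
enum Hand { Rock = 0, Paper = 1, Scissors = 2 };

// x beats y exactly when x - y == 1 (mod 3).
// The "+ 3) % 3" keeps the result non-negative, since C++ % can return negatives.
bool beats(Hand x, Hand y) {
    return ((static_cast<int>(x) - static_cast<int>(y)) % 3 + 3) % 3 == 1;
}
```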

These types of problems can give rise to important legal challenges, such as the issue of liability for defective software. Is it better for the software to override the pilot when the pilot tries to push the yoke down, or pull up, contrary to the "opinion" of the software, even in the case of a sensor failure? This has actually happened, at least twice. Now, who is liable? Did it happen because someone didn't really read the code, or didn't try to work out all of the corner cases and edge cases?

Now, what if the Pascal WITH statement contains a bug, and that bug is inherent in the definition of the language itself, at least in principle? Backus-Naur forms and other efforts to formalize problems of the "my dear aunt sally" variety with context-free grammars ... lack concepts. Thus they are vulnerable to frame substitution errors, which may or may not be masked by some types of polymorphism. The code then appears to execute correctly "most of the time", and yet the PFN list keeps getting corrupted, the IRQL is not less or equal, and here we go again with another bad Patch Tuesday, and now they want us to write.

Somewhere, methinks, there is some symplectic manifold for making business decisions that got lost in the maze of twisty little passages. It was lost long after the big company with the small name could no longer tell one rabbit hole from another, somewhere in the hall of mirrors that connects to the maze of moving pathways. There is code for this, of course, somewhere on Git; it is just a matter of working out the compiler options and linker options.

Now, of course, Pascal is a descendant of Algol-60, and Ada is a descendant of Pascal, but then along came C++, which combined features of Simula with C, and so on. So, is Python just de-parenthesized Lisp in disguise? Or does it not matter? With a suitable grammar processor, one can input a C or Pascal program and translate statements like print((2+3)*(4+5)); into the equivalent print(mult(add(2,3),add(4,5)));, which really gives rise to the pre-translation:

```
2: ENTER: 3: ADD: 4: ENTER: 5: ADD: MULT: PRINT:
```

This is, of course, both beautiful and ugly: so simple and so elegant, all while being so classic. Or maybe I could draw a flow chart. Yet nobody uses flow charts anymore, unless it is part of a sales pitch for some motivational seminar or something like that. However, it is probably not a good idea to use the terms "flow chart" and "motivational seminar" in the same sentence, even though I just did. Cringe: some business methods are still patentable, and oh, how the drafts-people love flow charts! Even so, flowcharting is one of the easier rabbits to pull from almost any software hat, with the right tools of course.
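
The translation from print((2+3)*(4+5)) to a postfix sequence can be sketched with a small recursive-descent translator and a stack evaluator. The class Postfix, the token names (ADD, SUB, MULT, DIV), and the restriction to non-negative integer literals are assumptions for illustration. There is no error handling, so it assumes well-formed input.

```cpp
#include <cctype>
#include <string>
#include <vector>

// Hypothetical infix -> postfix translator (recursive descent).
class Postfix {
public:
    explicit Postfix(const std::string& s) : src_(s) {}
    std::vector<std::string> translate() { out_.clear(); pos_ = 0; expr(); return out_; }

private:
    const std::string src_;
    size_t pos_ = 0;
    std::vector<std::string> out_;

    char peek() {  // skip blanks, then look at the next character
        while (pos_ < src_.size() && src_[pos_] == ' ') ++pos_;
        return pos_ < src_.size() ? src_[pos_] : '\0';
    }
    void expr() {  // expr := term { (+|-) term }
        term();
        while (peek() == '+' || peek() == '-') {
            char op = src_[pos_++];
            term();
            out_.push_back(op == '+' ? "ADD" : "SUB");
        }
    }
    void term() {  // term := factor { (*|/) factor }
        factor();
        while (peek() == '*' || peek() == '/') {
            char op = src_[pos_++];
            factor();
            out_.push_back(op == '*' ? "MULT" : "DIV");
        }
    }
    void factor() {  // factor := number | '(' expr ')'
        if (peek() == '(') { ++pos_; expr(); if (peek() == ')') ++pos_; return; }
        std::string num;
        while (pos_ < src_.size() && std::isdigit(static_cast<unsigned char>(src_[pos_])))
            num += src_[pos_++];
        out_.push_back(num);
    }
};

// Evaluate the postfix list with an explicit stack, like a tiny stack machine.
long eval_postfix(const std::vector<std::string>& code) {
    std::vector<long> st;
    for (const auto& tok : code) {
        if (std::isdigit(static_cast<unsigned char>(tok[0]))) { st.push_back(std::stol(tok)); continue; }
        long b = st.back(); st.pop_back();
        long a = st.back(); st.pop_back();
        if (tok == "ADD") st.push_back(a + b);
        else if (tok == "SUB") st.push_back(a - b);
        else if (tok == "MULT") st.push_back(a * b);
        else st.push_back(a / b);
    }
    return st.back();
}
```

For "(2+3)*(4+5)" the translator produces 2 3 ADD 4 5 ADD MULT, and the evaluator returns 45.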

Maybe I should have entitled this log "Go Ask Alice (when she is ten feet tall)", but that will be the subject matter for a later post. So for now, and in other news, I have made some progress in converting the UCSD p-system from Pascal to C++. Eventually I can turn it into a native PASM compiler for the Parallax Propeller. Or I can transmogrify it into a Pascal-to-Lisp converter, or into a native-language-to-pseudo-code converter that then tries to find the right Lisp functions, etc.

Hence, even though I HATE Pascal, as I said earlier, the UCSD p-system had a VERY elegant segmentation model that was also very memory efficient. Meanwhile, there are many complaints that GCC for the Propeller (P1) has been abandoned for years. So there is also a desperate need for a working, more up-to-date large memory model. It could, for example, use SD cards or the now readily available (and low cost) 16 Megabyte serial flash memory as a kind of "virtual memory". Alternatively, we could write drivers so that the UCSD p-system thinks that the flash chip is a "hard disk". Then we would get a kind of virtual memory model as gravy, since the p-system had a very aggressive segmentation model that, as I also stated, could be made to work more like DLLs work in Windows.
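
One way to picture the flash-as-hard-disk idea is a thin block-device layer. The sketch assumes 512-byte blocks (the UCSD p-system's block size) and models the flash as RAM. The class FlashBlockDevice and its methods are hypothetical. A real driver would replace the memcpy calls with SPI transactions and handle erase-before-write, which is ignored here. A 16 MiB part yields 32768 blocks.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical shim: a serial-flash part presented as numbered 512-byte blocks.
class FlashBlockDevice {
public:
    static constexpr std::size_t kBlockSize = 512;

    // Erased NOR flash reads as 0xFF, so start there.
    explicit FlashBlockDevice(std::size_t bytes = 16u * 1024u * 1024u)
        : mem_(bytes, 0xFF) {}

    std::size_t block_count() const { return mem_.size() / kBlockSize; }

    // Returns false for an out-of-range block number instead of reading garbage.
    bool read_block(std::size_t blk, std::uint8_t* dst) const {
        if (blk >= block_count()) return false;
        std::memcpy(dst, &mem_[blk * kBlockSize], kBlockSize);
        return true;
    }

    bool write_block(std::size_t blk, const std::uint8_t* src) {
        if (blk >= block_count()) return false;
        std::memcpy(&mem_[blk * kBlockSize], src, kBlockSize);
        return true;
    }

private:
    std::vector<std::uint8_t> mem_;  // stand-in for the physical flash array
};
```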

That means, of course, that if we could run WINE, or a subset of WINE, on the P2, then the P2 architecture would be competitive with the Raspberry Pi world. Except that when you look at how the P2 chip works, there is no comparison: it can do isochronous interleaving of a plurality of tasks across an echelon of processors, with near-nanosecond-scale granularity.

-

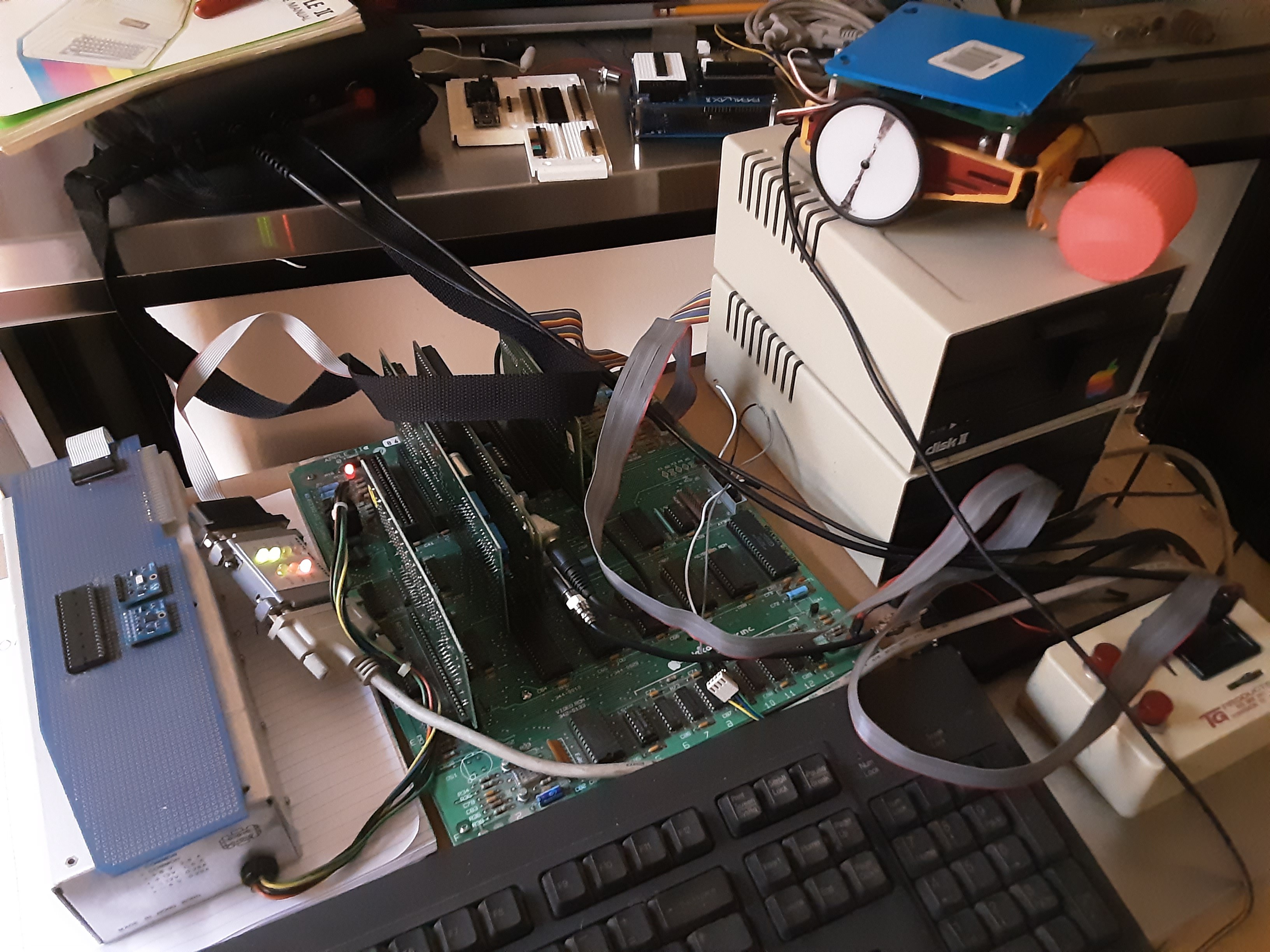

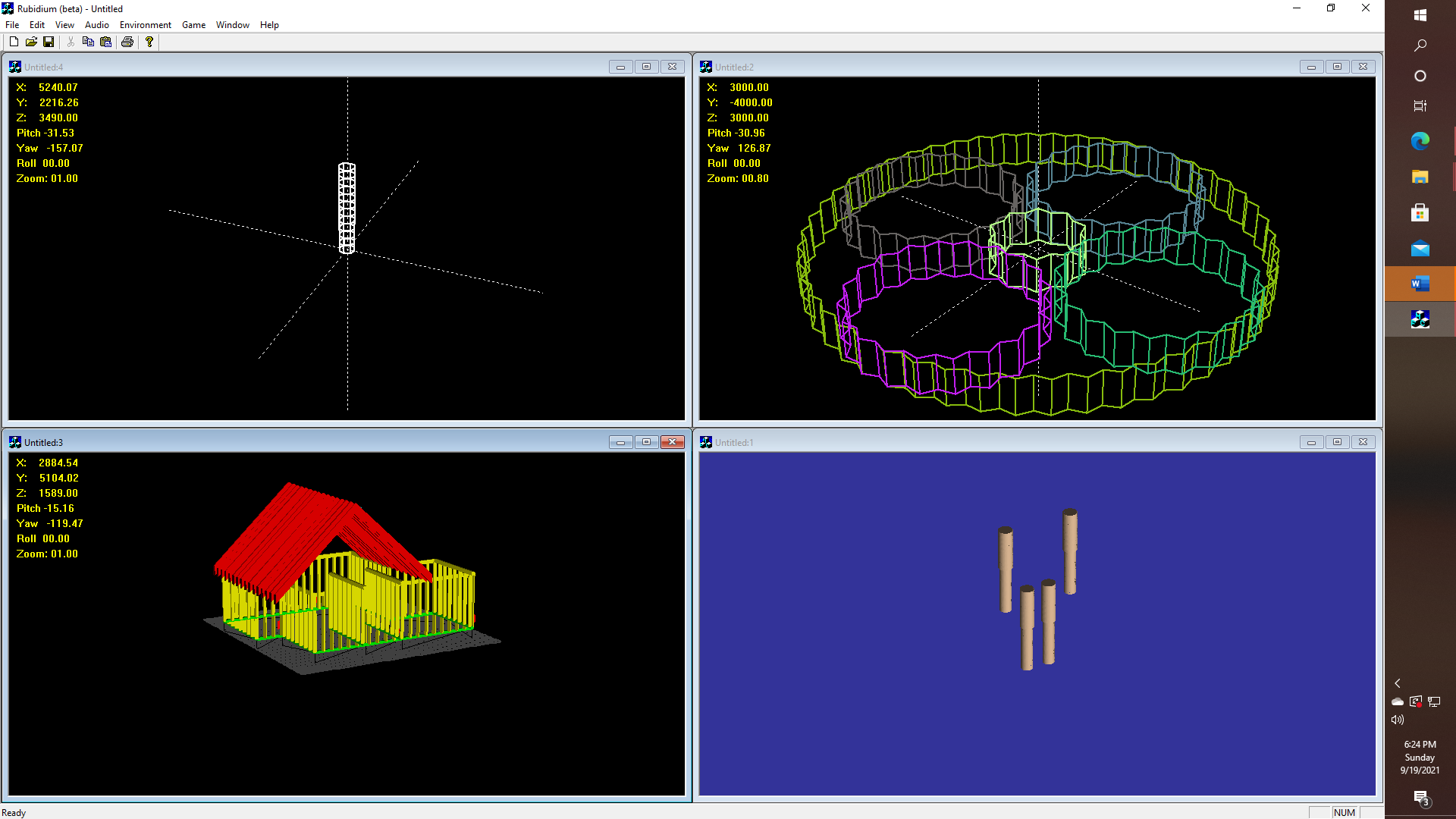

All Mimsy were the Borogoves

09/17/2021 at 18:51 • 0 comments

I am not sure if "mimsy" is quite the way to describe how I am feeling right now. I was trying to 3D print a planetary drive using a graphics package I wrote from scratch (I used a commercial 3D printer to do the actual print), and I ended up with a solid block of gear-ness. Maybe I should entitle this post "... and did gyre and gimble in the wabe." Hopefully, I will have better luck next time. That goes especially if I can conjure up a design for some kind of multi-axis gyroscopic platform. It would not be so much part of an IMU (inertial measurement unit), since we have GPS and MEMS (!!) accelerometers for that! Yet, what about giving the Boe-Bot a multi-axis stabilized camera platform? It could be based on the kind of gimballed stable platform that was typically used in the IMUs of the Apollo spacecraft era.

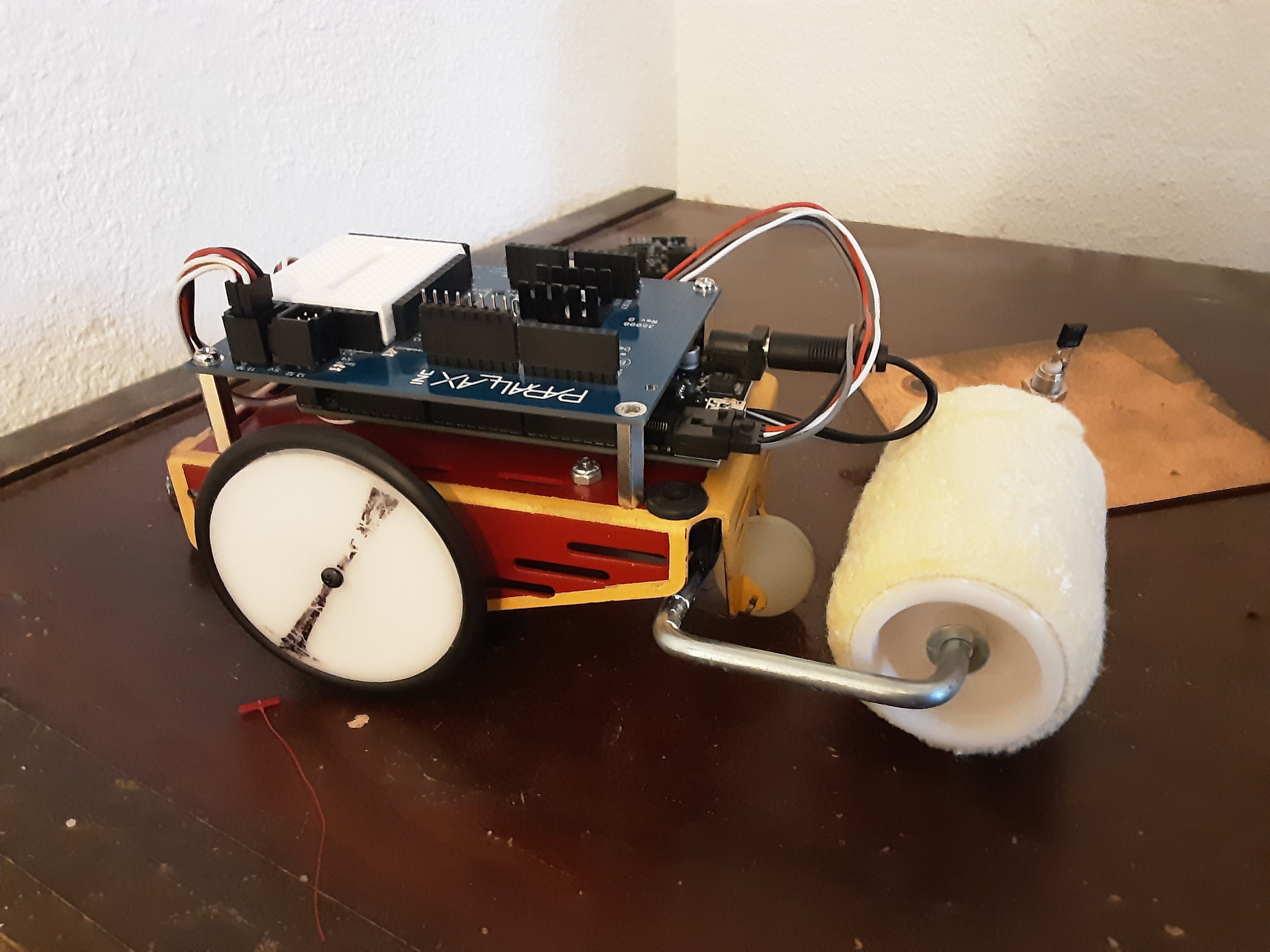

Right now, I am nowhere near doing that, at least as far as "implementation" is concerned, or perhaps it might be easier than I think. So where are we? Perhaps the Boe-Bot isn't very good at navigating rough terrain, such as brickwork or shag carpet. That makes me think of something out of the box, seemingly out of nowhere in this context, but which comes to mind anyway. How about a Boe-Dozer? It's not really a bulldozer at all; it is more like a steam roller. That is to say, if I put that big gear on the front, it should be an easy mod. Or I can try to finish the oscilloscope code and get a camera mounted so that I can actually control this thing over Bluetooth.

Maybe this is finally a good time to open up this page for comments. What does the Hackaday community really need right now? A C++ compiler for the P2? A LISP for the P2/Arduino that is "just enough Lisp" to run ELIZA/SHRDLU? Or a crystal skull that says "good evening" to passers-by on Halloween, and then engages them in conversation, sort of Eliza-like? I don't think I can quite pull that one off just yet; the crystal skull part, that is.

A SHRDLU-like "planner" with speech recognition and an Eliza-like interface is very doable with third-party open-source tools. I just need to finish Frame-Lisp and get the oscilloscope and spectrum analyzer interface running.

-

Here is another clue for you all ...

09/13/2021 at 16:45 • 0 comments

I promised a clue for those who are searching for the labyrinth, and here is your first one. I could have titled this post "Up, Up and Away", but I DIDN'T! The Reno Balloon Races were just this last weekend (read: yesterday and the day before, as of 9/13/2021). It is so easy to imagine so many possible uses for a simple robotics package, besides the overabundance of hexapod and wheeled bots. Now, of course, I am kicking myself for not thinking ahead, i.e., for not trying to get permission to fly a drone, or some kind of remote-controlled blimp, at the event. I don't know if they would allow that, with up to 100 manned balloons in the air all at once.

-

Imagine a Maze of Twisty Little Pathways.

09/13/2021 at 16:04 • 0 comments

In Reno, Nevada, if you know exactly where to look (stay tuned for a hint), you can find this lovely labyrinth. It would, of course, be an ideal maze for a small robot to solve. The terrain might be a little rough for a small robot like a Boe-Bot, but imagine the possibilities.

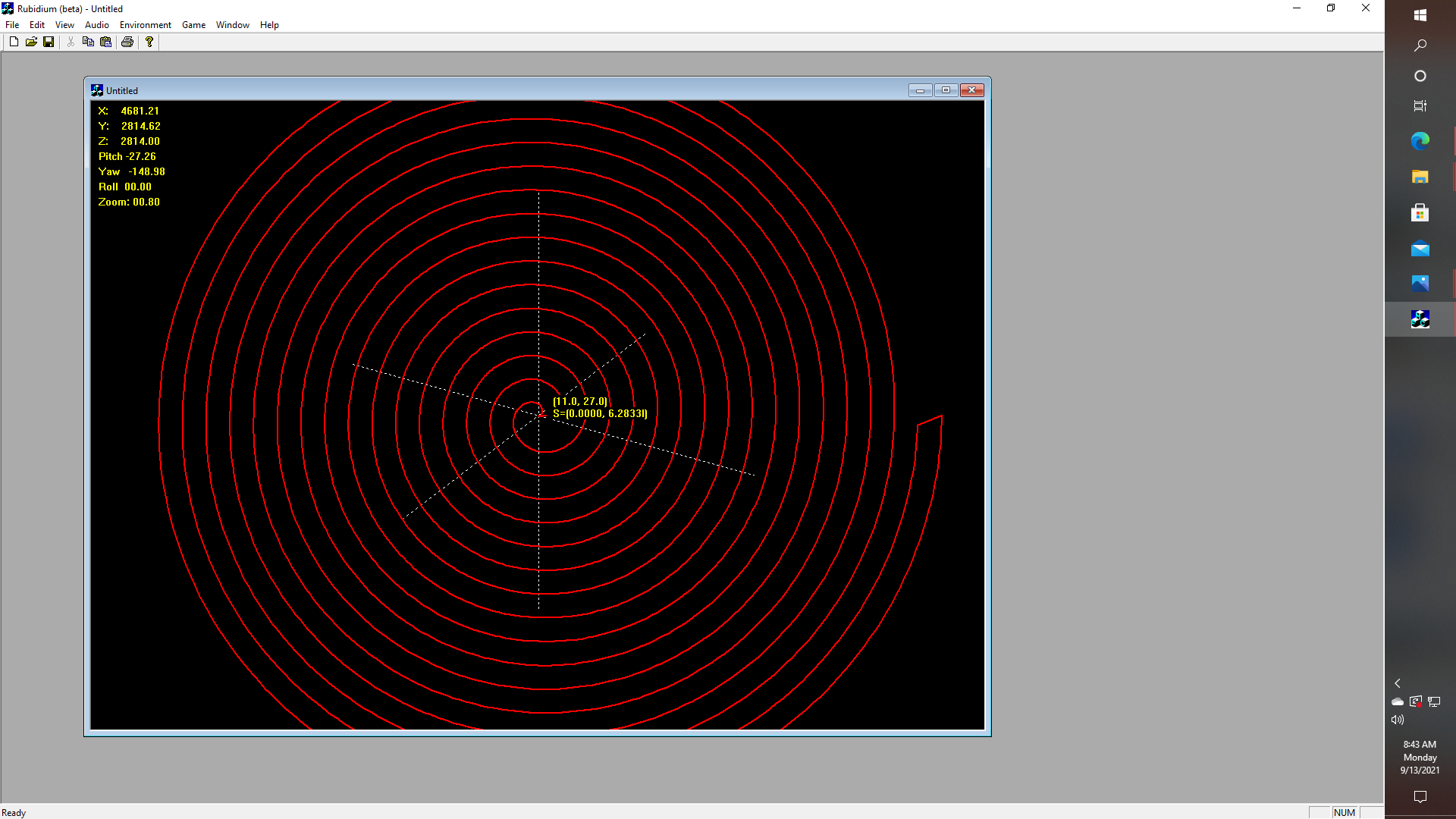

This is an epiphany of sorts. A very long time ago, I implemented my own OpenGL stack, or at least a subset of OpenGL, entirely from scratch. One of the things I then experimented with was calculating "Cauchy's Integral Formula", for those who are familiar with the theory of complex variables. Getting at least some hooks into some OpenGL routines appears to be very doable on the Parallax P2 chip, with its built-in HDMI, VGA, and standard NTSC/PAL capabilities. And of course there is the Propeller debug protocol. So a robot could be built that constructs a kind of OpenGL-like world view while it does the job of exploring its environment. Ah, the possibilities!

Shades of Westworld? "How do I find the center of the maze?" "What if the maze isn't for you?" What if "they" are supposed to be the ones who "think differently"? Oddly enough, imagine a game where there is a hidden treasure behind the front door on the right. But there also might be a bomb, or some hungry trolls with an appetite for human flesh, eagerly waiting to pick the flesh from the bones of any mere mortal who takes this game too seriously. Ouch. The only way to disarm the bomb, or be prepared for the trolls, is to find the center of the maze. This is easy for a simple spiral, but probably quite difficult nonetheless for an AI, even in simulation.

Interestingly enough, if you understand Cauchy's integral formula from complex analysis, then you know what happens when you integrate dz/(z - z0) around a closed path in the complex plane. The result is 2*pi*i (i being the square root of minus one) times the number of times that the path winds around the point z0. That is 2*pi*i for a point INSIDE a simple counter-clockwise loop, and zero for a point OUTSIDE it. This means that one can calculate whether a given point is INSIDE or OUTSIDE an arbitrary enclosed region! And yes, it does work for a really big, weird spiral, that is to say, if you have the map.
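
For a polygonal path, this integral can be evaluated exactly edge by edge. Along each straight edge, the imaginary part of the integral of dz/(z - z0) is the angle the edge sweeps out as seen from z0, and the real parts cancel around a closed loop. Summing those angles and dividing by 2*pi gives the winding number. The sketch assumes the path is a closed polygon (the last vertex connects back to the first) and that z0 does not lie on it. The names P2 and winding_number are hypothetical.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

struct P2 { double x, y; };

// Discrete form of (1 / (2*pi*i)) * closed-integral dz / (z - z0) for a polygon:
// 1 (or -1 for clockwise) when z0 is inside a simple loop, 0 when outside.
int winding_number(const std::vector<P2>& poly, P2 z0) {
    const double kPi = 3.14159265358979323846;
    double total = 0.0;
    for (std::size_t i = 0; i < poly.size(); ++i) {
        const P2& a = poly[i];
        const P2& b = poly[(i + 1) % poly.size()];  // wrap around to close the path
        double ax = a.x - z0.x, ay = a.y - z0.y;
        double bx = b.x - z0.x, by = b.y - z0.y;
        // signed angle from (a - z0) to (b - z0): atan2(cross, dot)
        total += std::atan2(ax * by - ay * bx, ax * bx + ay * by);
    }
    return static_cast<int>(std::lround(total / (2.0 * kPi)));
}
```

A counter-clockwise square around the origin gives 1, the same square traversed clockwise gives -1, and a point outside it gives 0.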

O.K., I admit that this is a HARD problem, probably very hard even for a Tesla robot.

-

Follow the Yellow Brick Road

08/28/2021 at 04:08 • 0 comments

Somewhere in the build instructions for the hardware part of this project, I was also discussing how I am working on fixing up a sub-dialect of the programming language LISP. I actually developed it something like 25 years ago, as part of a chat bot engine. Well, in any case, I did some updates today to the codebase, which is now (at least in part) on GitHub. So this seems like as good a time as any to discuss the role of LISP in the C++ port of the Propeller Debug Terminal.

Basically, the debug terminal responds to a set of commands that can be embedded in a text stream. Ideally that stream runs in a side channel alongside the regular printf/scanf I/O that a "normal" ANSI C program might use when running in terminal mode. Hence the command set looks something like this. And that is why LISP is so nice here, even if it is written in C++. At the end of the day, it would be so nice to simply CONS a symbol table and then MAPCAR it onto a list of GUI commands. Command processing could then be done by tokenizing the input stream and generating the appropriate callbacks or messages.

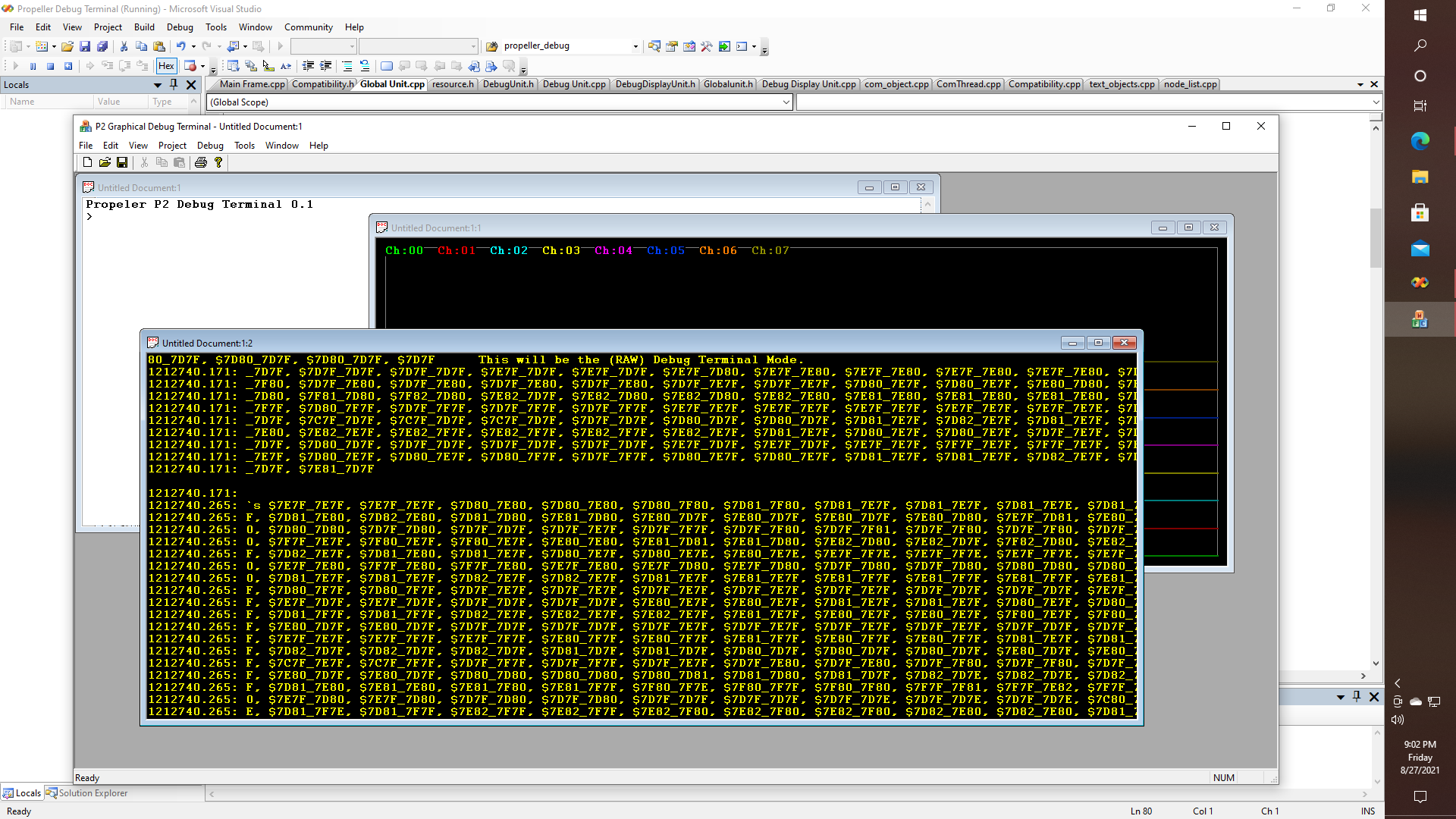

```cpp
char *command_proc::debug_tokens[] =
{
    "SCOPE","SCOPE_XY","SCOPE_RT","PLOT","TERM","TITLE","POS","SIZE",
    "RANGE","OFFSET","SAMPLES","TRIGGER","LABEL","COLOR","BACKCOLOR",
    "GRIDCOLOR","DOTSIZE","LOGSCALE","CLEAR","SAVE","UPDATE","LINESIZE",
    "LINECOLOR","FILLCOLOR","TO","RECT","RECTFILL","OVAL","OVALFILL",
    "POLY","POLYFILL","TEXTSIZE","TEXTCOLOR","TEXTSTYLE","TEXT",NULL
};

void command_proc::reset_debug_symbols()
{
    command_proc pdbg;
    frame &f = *(pFrame->get());
    symbol_table *t=NULL;
    t = f.cons(command_proc::debug_tokens)->sort();
    pdbg.exec(NULL1);
}
```

So this is a screenshot of the raw debugging stream coming from the P2 chip while running a four-channel oscilloscope program. The hexadecimal data is oscilloscope data being streamed live; it is just not being plotted yet. Real soon now.
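
The tokenize-and-dispatch idea can be sketched without the Frame Lisp machinery, using a std::map from keyword to callback. This is not the command_proc implementation. The Handler type, the dispatch function, and the rule that each keyword takes the words up to the next keyword are assumptions. The sample line in the usage note is only shaped like a P2 DEBUG display command.

```cpp
#include <cstddef>
#include <functional>
#include <map>
#include <sstream>
#include <string>
#include <vector>

// A handler receives the words that follow its keyword.
using Handler = std::function<void(const std::vector<std::string>&)>;

// Split the line on whitespace; each registered keyword collects the words
// up to the next registered keyword. Unknown leading words are skipped.
// Quoted strings and numeric parsing are not handled in this sketch.
void dispatch(const std::string& line, const std::map<std::string, Handler>& table) {
    std::istringstream in(line);
    std::vector<std::string> words;
    for (std::string w; in >> w;) words.push_back(w);

    for (std::size_t i = 0; i < words.size();) {
        auto it = table.find(words[i]);
        if (it == table.end()) { ++i; continue; }
        std::vector<std::string> args;
        std::size_t j = i + 1;
        while (j < words.size() && table.count(words[j]) == 0) args.push_back(words[j++]);
        it->second(args);  // the callback for this command
        i = j;
    }
}
```

With handlers registered for SCOPE and SAMPLES, the line "SCOPE MyScope SAMPLES 256" calls the SCOPE handler with {"MyScope"} and the SAMPLES handler with {"256"}.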

-

Having Problems with your Droid?

08/27/2021 at 00:13 • 0 comments

Uploaded some images to the gallery of a Boe-Bot getting ready for a P2 conversion, or maybe an alternative Arduino enhancement. So many possibilities. A good hack, of course, starts with whatever is on hand, without having to go to Radio Shack (remember those?) and spend any additional $$$.

Computer Motivator

If you don't know what a computer motivator is by now, then you have been reading too many textbooks and not watching enough TV.

glgorman
