-

all your (moving) base are belong to confusion

09/07/2021 at 14:53

When using the u-blox F9P GPS chip, there's a lot of terminology and overloaded technobabble. Add the documentation from ArduSimple, who seem to be based in Spain and are not native English speakers, and I think you can probably tell what's going to happen... yep, confusion. It takes time to get it, because the F9P has so many configuration settings, and there are multiple ways it can be integrated and multiple places where RTCM data can be piped and processed.

The documentation covers configurations such as:

Base – rover configuration

Base – multiple rovers configuration

RTK moving base configuration

Standalone with RTK/SSR corrections

So, we tried to come up with our own terminology:

The Nest -> A non-movable GPS unit which provides RTCM correction data.

The Dog -> The actual robot that moves around.

The Snout -> Another GPS unit on the Robot which can give us heading data.

When we started to use these names, we got our heads around the problem better and were able to visualise what we were trying to do: like an animal that has a body and a snout, and lives in a nest!! :-)
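The whole point of the Snout is heading: with two antennas on the robot, the direction of the baseline from the Dog's antenna to the Snout's antenna tells you which way the robot is facing, even when it is standing still. A minimal sketch, assuming the receiver reports the Snout's position relative to the Dog as north/east components (the ZED-F9P's UBX-NAV-RELPOSNED message carries these, and also reports a heading directly), and assuming the Snout antenna sits directly ahead of the Dog's. `mount_offset_deg` is a hypothetical parameter for antennas that are not mounted along the robot's forward axis.

```python
import math


def snout_heading_deg(rel_n: float, rel_e: float, mount_offset_deg: float = 0.0) -> float:
    """Robot heading in degrees clockwise from north, from the Dog->Snout baseline.

    rel_n, rel_e: north and east offsets of the Snout antenna from the Dog antenna
    (any consistent unit; assumed to come from a relative-position solution).
    mount_offset_deg: hypothetical angle of the baseline measured clockwise from the
    robot's forward axis (0 if the Snout antenna sits directly ahead of the Dog's).
    """
    if rel_n == 0 and rel_e == 0:
        raise ValueError("zero-length baseline: no heading available")
    # atan2(east, north) measures clockwise from north, unlike the usual maths convention.
    baseline_deg = math.degrees(math.atan2(rel_e, rel_n))
    return (baseline_deg - mount_offset_deg) % 360.0


def baseline_length(rel_n: float, rel_e: float) -> float:
    """Horizontal antenna separation, a handy sanity check against a tape-measured value."""
    return math.hypot(rel_n, rel_e)
```

For example, a Snout reported 50 cm east of the Dog gives a heading of roughly 90 degrees (facing east). If the computed baseline length drifts far from the measured antenna spacing, the heading should not be trusted.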

As the documentation rambles from moving base, to moving rovers, to the moving base rover, here is an example for your amusement.

--------------------------

'Hi weedinator,

If your rover is formed by a simpleRTK2B and a simpleRTK2Blite, your rover is actually a “rover + moving base” and “rover from moving base”. Don’t mix these with your static base.

The RTCM stream is as follows:

– Static base sends static RTCM to “rover + moving base”

– “rover + moving base” sends moving base RTCM to rover'

--------------------------
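The reply above boils down to a chain, Nest -> Dog -> Snout, where each receiver takes corrections from exactly one source. Writing it down as data helped us; the sketch below encodes that chain with our nicknames and flags the mistake the reply warns about (a receiver fed from two sources). The RTCM message numbers per link are our assumption of a typical ZED-F9P setup based on u-blox's moving base guidance: the static base sends its fixed position (1005) plus MSM observations and 1230, while the moving base sends u-blox's 4072.0 message instead of a fixed position. Check them against the u-blox documentation for your firmware.

```python
# Node names are this log's nicknames, not u-blox terms.
NEST = "Nest"    # static base
DOG = "Dog"      # "rover + moving base" on the robot
SNOUT = "Snout"  # "rover from moving base", used for heading

# Assumed RTCM 3 messages per link (MSM4 variants 1074/1084/1094/1124 can replace MSM7).
LINKS = {
    (NEST, DOG): {"1005", "1077", "1087", "1097", "1127", "1230"},
    (DOG, SNOUT): {"4072.0", "1077", "1087", "1097", "1127", "1230"},
}


def upstream_of(receiver, links=LINKS):
    """Return the single node feeding RTCM to receiver, or None for the static base."""
    sources = [src for (src, dst) in links if dst == receiver]
    if len(sources) > 1:
        # e.g. the Snout wired to both the Nest and the Dog: the streams would mix
        raise ValueError(f"{receiver} has more than one RTCM source: {sources}")
    return sources[0] if sources else None


def correction_chain(node, links=LINKS):
    """List the nodes RTCM passes through on its way to node, starting at the static base."""
    chain = [node]
    while (src := upstream_of(chain[-1], links)) is not None:
        if src in chain:
            raise ValueError("RTCM links form a loop")
        chain.append(src)
    return chain[::-1]
```

`correction_chain(SNOUT)` walks back to `["Nest", "Dog", "Snout"]`, which is exactly the "don't mix these with your static base" rule.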

You can read more nonsense from u-blox themselves:

https://www.u-blox.com/en/docs/UBX-19009093

Section 1.1 alone is enough to make your head spin.

So there's base, base station, moving base, moving base station, move base...

So in the end, you just have to accept that it's 'all your base are belong to us, somebody set up us the bomb'.


-

FPV (First Person View) Camera Rig

09/01/2021 at 16:15

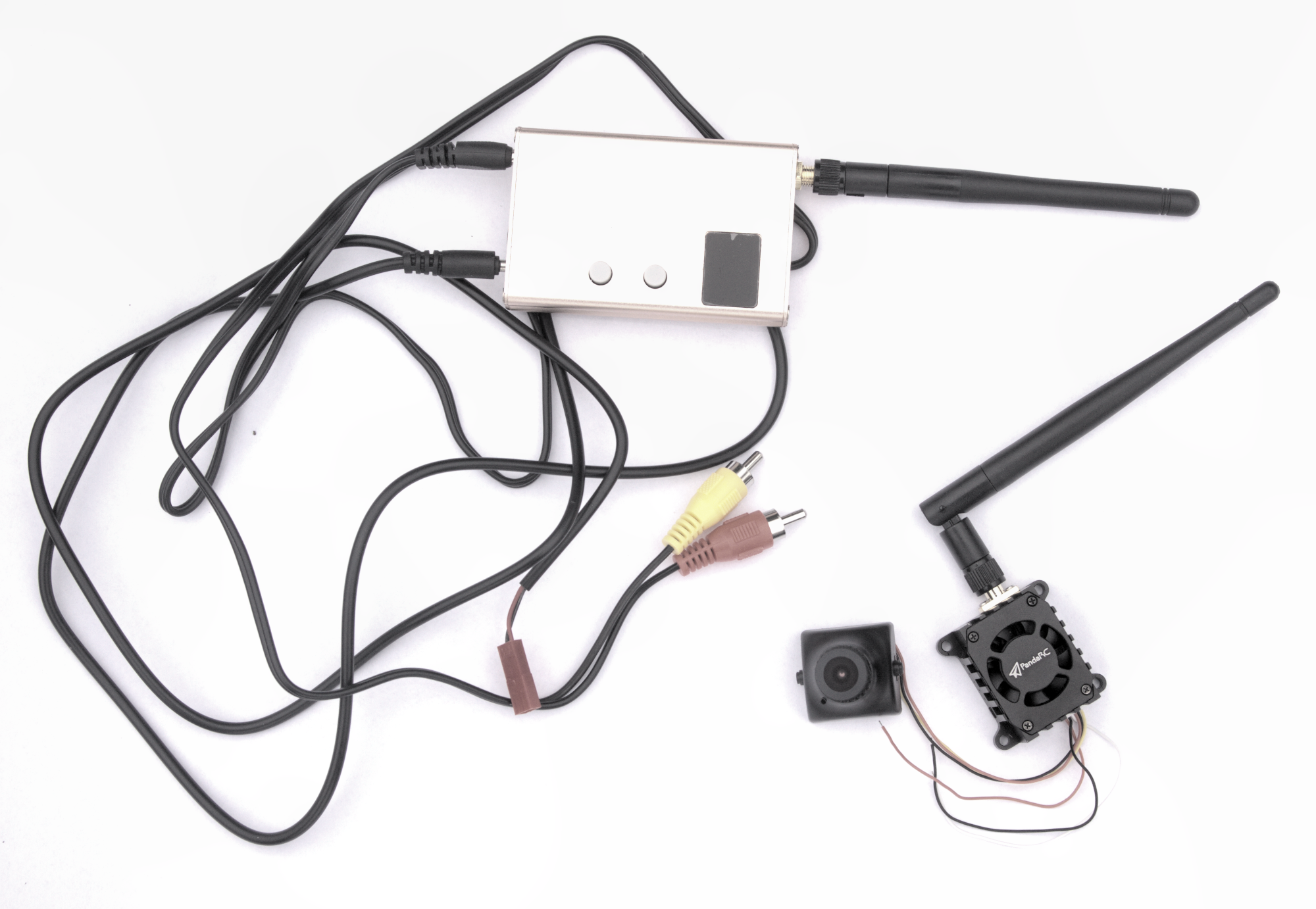

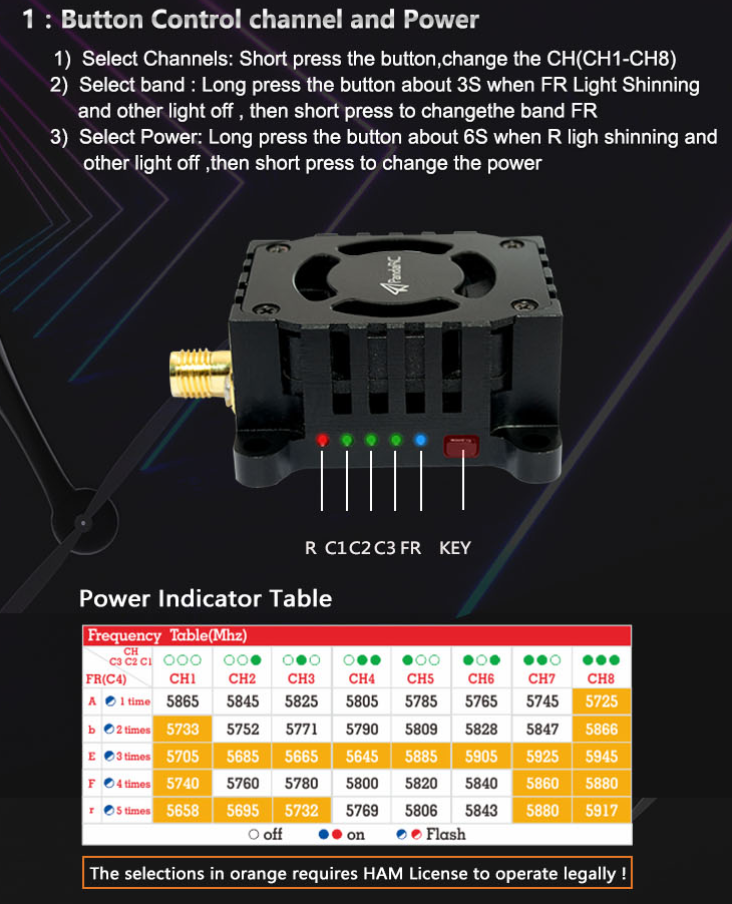

1 × 5GHz First Person View send/receive unit

1 × Skyzone RC832-HD 48CH 5.8Ghz FPV Video Receiver with HDMI Output, Item No FPV-RX RC832HD

1 × PandaRC - VT5804 V3 5.8G 1W Long Range Adjustable Power FPV Video Transmitter, Item No FPV-VTX-VT5804V3

1 × SONY 639 700TVL 1/3-Inch CCD Video Camera (PAL), Item No FPV-CAM-639

Receiver:


The receiver, as above, is a Skyzone RC832-HD 48CH 5.8Ghz FPV Video Receiver with HDMI Output (Item No FPV-RX RC832HD). It has an HDMI output as well as an analogue AV video output. Power input: 6-24V.

Transmitter:


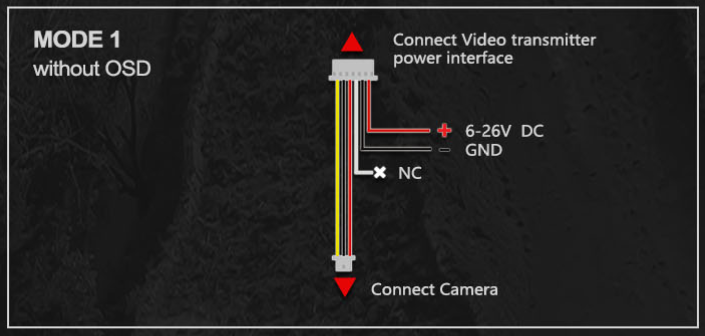

The transmitter is a PandaRC - VT5804 V3 5.8G 1W Long Range Adjustable Power FPV Video Transmitter (Item No FPV-VTX-VT5804V3). Its input voltage range is 7V-28V, and its output power is adjustable, so it can be turned up for long range use where appropriate.

1. Long press the button for 6 seconds: the power indicator LED flashes while the other indicator LEDs are off. Short presses then cycle the power through 25mW/200mW/400mW/800mW/1000mW.
2. Short press once to change the channel (CH1-CH8).
3. Long press for 3 seconds, then short press to change the frequency band (FR A-F).
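To get a feel for what the power settings above buy you, it helps to put them in decibels. A rough sketch, assuming ideal free-space propagation: free-space path loss grows with the square of distance, so for the same receiver, range scales with the square root of the power ratio. Real terrain, crops and antenna placement will do worse, so treat the numbers as an upper bound, not a prediction.

```python
import math

# Power settings from the VT5804 V3 button menu described above (mW).
POWER_LEVELS_MW = [25, 200, 400, 800, 1000]


def mw_to_dbm(power_mw: float) -> float:
    """Convert milliwatts to dBm (decibels relative to 1 mW)."""
    return 10 * math.log10(power_mw)


def free_space_range_ratio(from_mw: float, to_mw: float) -> float:
    """Ideal free-space range multiplier when changing transmit power.

    Received power falls off with distance squared in free space, so range
    scales with the square root of the transmit power ratio.
    """
    return math.sqrt(to_mw / from_mw)


if __name__ == "__main__":
    for p in POWER_LEVELS_MW:
        print(f"{p:>5} mW = {mw_to_dbm(p):5.1f} dBm, "
              f"~{free_space_range_ratio(POWER_LEVELS_MW[0], p):.1f}x the 25 mW range")
```

Going from 25 mW to 1000 mW is about 16 dB more power, which in ideal free space is only about 6.3x the range, not 40x.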

IMPORTANT: Do not power on the transmitter before attaching the antenna; doing so will permanently damage the board!


After testing the camera rig on various power supplies, we found it needed at least 12V; the manufacturers' power supply specs are wrong.

-

GPS correction data over WiFi

08/31/2021 at 21:03

The ArduSimple board we have been using provides a very accurate GPS system, which is essential for accurate localisation (positioning) of the robot.

The correction data is generated by the GPS base station, and this data then needs to be fed to the GPS chip on the robot. The correction messages (RTCM, named after the Radio Technical Commission for Maritime Services, which standardises them) are a binary format, but we don't need to decode them; we just need to forward them over the network and supply them to the ArduSimple board at the robot end. This data is time and latency sensitive, so the quicker you get it from your GPS base station to the robot, the better your GPS accuracy will be. There are many ways to do this; some are easier than others, and some are more expensive than others.
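Even though the payload never needs decoding, it helps when troubleshooting to know how RTCM 3 messages are framed on the wire: a 0xD3 preamble byte, 6 reserved bits and a 10-bit payload length, the payload itself (whose first 12 bits are the message number), then a 24-bit CRC-24Q checksum. The sketch below assumes RTCM 3.x framing and splits a raw byte stream into complete, checksum-valid frames, for example to confirm that corrections really are arriving at the robot end. The helper names are our own, not from any library.

```python
from typing import List, Tuple


def crc24q(data: bytes) -> int:
    """CRC-24Q as used by RTCM 3 (polynomial 0x1864CFB, initial value 0, no reflection)."""
    crc = 0
    for byte in data:
        crc ^= byte << 16
        for _ in range(8):
            crc <<= 1
            if crc & 0x1000000:
                crc ^= 0x1864CFB
    return crc & 0xFFFFFF


def build_frame(payload: bytes) -> bytes:
    """Wrap a payload as 0xD3, 6 reserved bits + 10-bit length, payload, 3-byte CRC."""
    if len(payload) > 1023:
        raise ValueError("RTCM 3 payloads are limited to 1023 bytes")
    body = bytes([0xD3, len(payload) >> 8, len(payload) & 0xFF]) + payload
    return body + crc24q(body).to_bytes(3, "big")


def split_frames(buf: bytes) -> Tuple[List[bytes], bytes]:
    """Return (complete CRC-valid frames, leftover bytes to prepend to the next read)."""
    frames = []
    i = 0
    while True:
        start = buf.find(b"\xd3", i)
        if start < 0:
            return frames, b""
        if len(buf) - start < 6:  # not even a header plus CRC yet
            return frames, buf[start:]
        length = ((buf[start + 1] & 0x03) << 8) | buf[start + 2]
        end = start + 3 + length + 3
        if end > len(buf):  # frame not fully received yet
            return frames, buf[start:]
        if crc24q(buf[start:end - 3]) == int.from_bytes(buf[end - 3:end], "big"):
            frames.append(buf[start:end])
            i = end
        else:
            i = start + 1  # false preamble or corrupted data: resync on the next 0xD3


def message_type(frame: bytes) -> int:
    """The first 12 bits of the payload hold the RTCM message number (e.g. 1005)."""
    return (frame[3] << 4) | (frame[4] >> 4)
```

Feed it whatever each socket or serial read returns, carrying the leftover into the next call. One limitation of this simple version: a stray 0xD3 with a large length field holds back data until enough bytes arrive for its CRC check to fail.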

Correction data can be sourced over a 4G mobile connection, but we wanted the robot to be able to function without 4G connectivity. ArduSimple offers other options, such as plug-in wireless cards using WiFi, Zigbee and other more exotic links. I think we chose the best solution for our needs: WiFi is very easy to troubleshoot with TCP/IP tools, unlike a mysterious black-box wireless card piggybacked on the ArduSimple board. Some of the long range options are eye-wateringly expensive too! https://www.ardusimple.com/radio-links/

So we decided to pipe the correction data from the base station to the robot over good old WiFi, for maximum flexibility and easy troubleshooting!

The excellent tutorial by TorxTecStuff was amazing. We could not have done it without you.

We used the str2str utility (part of RTKLIB) to pipe the RTCM data from our base station to the robot. It worked really well.
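str2str does the real work for us. To illustrate what that network hop involves, here is a minimal sketch of a TCP pass-through in plain Python. It is not str2str and not our configuration: the ports and host are hypothetical, it serves a single client, and it stops at the network. Getting the bytes onto the ArduSimple's UART at the robot end is a separate step (str2str can also write to a serial port).

```python
import socket


def forward(src: socket.socket, dst: socket.socket, chunk_size: int = 4096) -> int:
    """Copy bytes from src to dst until src closes; return the number of bytes forwarded.

    Bytes are passed through untouched (no RTCM decoding) and sent on as soon
    as they arrive, so corrections don't sit ageing in our own buffer.
    """
    total = 0
    while True:
        data = src.recv(chunk_size)
        if not data:  # empty read: the sender closed the connection
            return total
        dst.sendall(data)
        total += len(data)


def relay_once(listen_port: int, base_host: str, base_port: int) -> int:
    """Hypothetical one-client relay: wait for the robot, then pipe the base's stream to it."""
    with socket.create_server(("", listen_port)) as server:
        robot, _addr = server.accept()
        # Only connect to the base once the robot is listening.
        with robot, socket.create_connection((base_host, base_port)) as base:
            for s in (robot, base):
                # Disable Nagle's algorithm so small RTCM frames aren't held back for coalescing.
                s.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
            return forward(base, robot)
```

The design choice that matters for RTCM is forwarding each chunk immediately with Nagle disabled: the messages are small and latency sensitive, so batching them up to save packets works against you.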

You'll find the configuration for this on our GitHub, so you should be able to recreate this method if you don't want to go for ArduSimple's black-box piggyback cards.

We did have a few problems at first: we found that we had two serial devices connected to a single ArduSimple UART pin at the robot end. It was a time-costly mistake, compounded by contradictory wiring diagrams from u-blox and ArduSimple... which was very very very bad, a bit like crossing the streams in Ghostbusters. You can read about why this problem occurred, and how we worked around it, in another log.


-

Goodbye PI WIFI!

08/31/2021 at 20:12

We had upgraded our WiFi base station to a RUTX11, which was giving much better results. The robot still had 3x Pis on board, all connected via WiFi and using their stock internal antennas.

The range of these antennas was actually quite surprising, but as data rates increased and the robot roamed farther from the WiFi access point, we were seeing WiFi dropouts and degraded data rates. Sometimes the Raspberry Pis would refuse to reconnect; this was resolved somewhat by giving them static IP address assignments, rather than having them constantly request DHCP on every WiFi reconnect.

Even with those optimisations, sometimes the network stack would just die and not come back. Rebooting Pis becomes a chore, and not something you want to be doing on a production robot. The internal WiFi chips on Pis are not designed for realtime robot communications!!!

We also needed greater range, so we played with a few external USB WiFi cards that had high gain antennas.

We tried:

https://www.alfa.com.tw/products/awus1900?variant=36473966231624


It was kinda great? It had long range and great performance.

I spent a little time configuring the Pi as a wireless bridge, and all seemed fine.

After testing, however, we found that on boot the device would sometimes not be recognised; having to visit the robot to unplug and replug the USB was a bit of a pain in the ass.

The drivers seemed to be pretty low quality, sometimes even crashing the Pi with a core dump.

A classic case of good hardware let down by the poor software drivers that plague many of these USB devices.

When pumping through low latency video it would get hot, very hot; I think that's what killed it in the end. That, and the fact that the networking stack on Raspbian is a bit of a mess, and gets messier when you start adding extra USB wireless devices. To reduce complexity, I completely disabled the Pi's on-board WiFi with rfkill and in the boot configuration file. After a few days... the WiFi device came back from the grave!!! This caused a few scripts in which I'd hardcoded interface addresses to start mysteriously failing!! Arghh!!!

We decided to shelve this short-lived USB WiFi experiment and get serious.

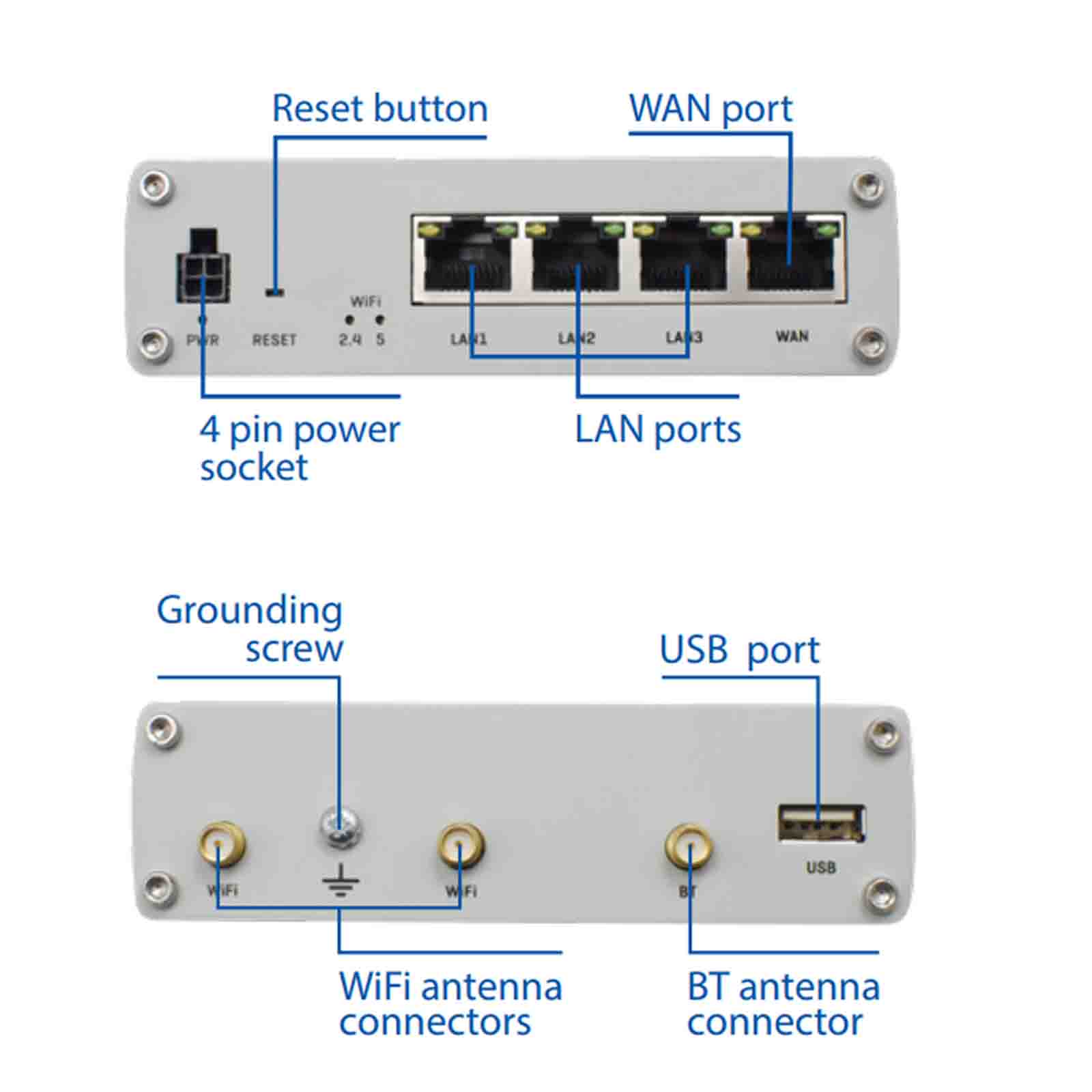

The solution was to add a dedicated RUTX10 router board to the robot. Not only did this give us good, stable WiFi connectivity with the flexibility of OpenWrt, but we could also add external high gain antennas, and it gave us physical Ethernet ports too. As the Pis were now just using Ethernet to communicate and all WiFi management was done by the RUTX10, the Pis ran cooler and used less power, and fewer things were connected to WiFi too... I'd call that a win. Networking was starting to work reliably, so we could get on with other things!


-

ROS VS ROS 2

08/31/2021 at 19:40

Robot Operating System 1 is awesome.

Robot Operating System 2 is also awesome.

When we started the project we were new to ROS; in our previous project, the Weedinator, we implemented all the network plumbing, serial communication and control systems with our own code!!!! You can see the result of that work here:

https://hackaday.io/project/53896-weedinator-2019

ROS has greatly simplified and standardised the communication between MCU control boards, various sensors, single board computers and all the other things a robot needs to function in the real world. A robot is essentially a distributed message passing system, and ROS makes developing one a breeze.

We first tried the latest and greatest, ROS 2. Although it seemed promising, there was a lack of coherent documentation and of previous forum and support posts. This is not a technical problem or a criticism of ROS 2; it is simply a victim of its own newness!! Many pieces of code and packages important to robots have yet to be ported to ROS 2. This is a monumental task, so don't expect it to happen overnight. Yes, we know we can run ROS 1 modules with ROS 2, but seeing as we were just learning, that seemed a bit too Star Trek for us back then.

We definitely think ROS 2 is the future, but all the learning material and books we had referenced ROS 1!

After hitting a few dead ends with ROS 2, we switched to ROS 1 and started to make much faster progress, simply because of the wealth of support, articles, how-tos, code and prebuilt packages that can be found on the internet and in book stores!!!

ROS 2 will surely get better as time goes on, but for now, we got what we wanted from ROS 1.

We would like to thank everyone who has contributed to ROS over the years; without the hard work of the ROS community, this project would not exist.

-

Realtime Low Latency Video

08/31/2021 at 19:06

Getting good low latency video from the robot to the control centre is always a bit of a conundrum. Using a webcam connected to a single board computer is usually the way forward. Picture latency can cause problems when remote piloting, leading to collisions and crashes (real crashes, not software ones!).

We needed good quality video so we could remotely pilot the robot in various environmental and lighting conditions, at a high enough definition to spot obstacles and hazards in our path.

https://www.logitech.com/en-gb/products/webcams/brio-4k-hdr-webcam.960-001106.html

After playing with a few streaming solutions, from ffmpeg to RTMP servers, we were seeing latency of 5-10 seconds. After tuning codec parameters and network/video buffers we could get it lower, but it just didn't feel real time enough for our robot. We decided to have a go with NDI for a laugh.
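Why buffers matter so much for remote piloting comes down to simple arithmetic. A rough sketch with hypothetical numbers: every frame held in a video buffer costs one frame interval, a network buffer drains at the stream's bitrate, and whatever the total latency is, the robot keeps moving for that long before the operator sees it. This ignores capture, encode and display time, so real latency will be higher.

```python
def buffering_latency_s(fps: float, buffered_frames: int,
                        network_buffer_bytes: int = 0, bitrate_bps: float = 0.0) -> float:
    """Rough latency added by buffering alone (ignores capture, encode and display time).

    Every frame held in a video buffer costs 1/fps seconds, and a network buffer
    of B bytes takes 8*B/bitrate seconds to drain at the stream's bitrate.
    """
    latency = buffered_frames / fps
    if network_buffer_bytes:
        if bitrate_bps <= 0:
            raise ValueError("need a positive bitrate to size a network buffer")
        latency += 8 * network_buffer_bytes / bitrate_bps
    return latency


def blind_distance_m(speed_mps: float, latency_s: float) -> float:
    """Distance the robot covers before the operator sees the result of a command."""
    return speed_mps * latency_s


if __name__ == "__main__":
    # Hypothetical: 150 frames queued at 30 fps, robot driving at 1 m/s.
    latency = buffering_latency_s(fps=30, buffered_frames=150)
    print(f"{latency:.1f} s of latency, {blind_distance_m(1.0, latency):.1f} m driven blind")
```

With those made-up numbers, 150 queued frames at 30 fps is 5 seconds, during which a robot at 1 m/s has covered 5 m that the operator hasn't seen yet.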

NDI is a proprietary codec for HD, low latency streaming over LANs; however, with the right networking equipment it will work over WiFi too (with a few hacks) :-).

We built, configured and compiled most of the software from scratch to get an NDI stack running on a Raspberry Pi... it was a long, arduous task until we found https://dicaffeine.com/about


This distribution turns your Pi into a dedicated NDI encoder device, with lots of options for resolution and frame rate.

We terminated the NDI stream on an Ubuntu machine running OBS Studio (Open Broadcaster Software), which can be configured to receive NDI streams.

After we fixed the network problems caused by the increased data rates of the NDI codec (detailed here https://hackaday.io/project/181449-basic-farm-robot-2021/log/197307-multitech-woes), we got good results: low latency (really insanely quick) HD video from the robot. Error recovery was also swift when the WiFi connection degraded. Impressive.

You can see all this in action in our YouTube video here

However, as with all things, the real world calls: we found that most of the WiFi link bandwidth was saturated with NDI packets. We could have added another 2.4GHz/5GHz router to the robot and another access point on different dedicated WiFi channels, but this would just have added extra expense and complexity to the robot and the control centre. Perfect design is reached not when there is nothing left to add, but when there is nothing left to take away.

In the end we decided to go for a dedicated 5.8GHz analogue video link. These usually have better range and better error recovery: a 'snowy' picture is better for the human operator than the complete break in the picture feed that many digital encoding systems suffer from. You can read more about our adventures with the FPV camera in another log!

One last thing to mention: https://dicaffeine.com/about really is great software for low latency video projects. The free version will run for 30 minutes before you need to restart it; it's certainly worth every penny if you plan to use NDI streaming on your network!!!!

-

Multitech woes

08/31/2021 at 17:52

WiFi network woes.

Our first task was to get a stable WiFi connection to the robot. The only thing we had available that was IP68 rated for outdoor use was a MultiTech MTCDTIP-LEU1 https://www.multitech.com/models/99999199LF combined WiFi/LoRaWAN/4G access point/gateway. It was quite flexible as it had Power over Ethernet, so we could mount it anywhere we could run an Ethernet cable.

![MTCDTIP-LEU1 pic]()

This seemed like a good choice, as we could mount it in the central field where the robot would be tested, keeping our WiFi transmission distance short. We made a makeshift 'mast' and mounted the MultiTech on it.

Our initial tests were good: we got good 2.4GHz WiFi connectivity to the Raspberry Pis on the robot platform, even *without* external antennas on the robot. We were also using the MultiTech to provide us with 4G internet access. Things were looking good!

As we started to run higher data rates, especially real time video, the WiFi chip could sustain those rates with ease, but the CPU was pegged at 100%, causing increased latency, data flow dropouts and constant WiFi retransmits and disconnections... all in all, not good for robot communications.

Its poor ARM9 processor at 400MHz could not keep up with the data we were shunting through it, and this was made worse because we were routing our main 4G internet WAN through it too. Apparently newer MultiTechs use much beefier CPUs! :-)

We decided to ditch the old tech in favour of RUTX industrial router boards and relegated the MultiTech to the storage shelf once again :-(.

We didn't go with upgraded MultiTechs because they use a Yocto-based Linux distribution, and although you get SSH access to the device for configuration/diagnostics, there was a distinct lack of packages and configuration options. We needed to do some special Ethernet/WiFi bridging, multicast proxy and DNS configuration. The RUTX boards are thankfully OpenWrt based, so we knew we would have the flexibility of OpenWrt itself, great extension packages and good business-quality support on top.

The moral of this log is that even though something looks cool and may have the features you need, it may not be cut out for robotic communication, which needs the low latency, high data rates and bulletproof reliability that are essential for remote robot control and telemetry tasks.

GOAT INDUSTRIES
