-

Log #16: Related work (4)

a day ago • 0 comments

Today, I found Torin Blankensmith's YouTube channel, where he shows how to connect MediaPipe's hand tracking solution to TouchDesigner, the software he uses to create sounds and visual displays controlled by hand movements. I find it very impressive and enjoyable to watch.

He even created a MediaPipe TouchDesigner plugin that uses GPU acceleration, so I expect it to have fairly low latency.

Secondly, I would like to reference this YouTube short that I found by coincidence:

I like it because its idea is similar to that of lalelu_drums: there is not necessarily a new, impressive kind of music involved. The attractive part is the performance that is connected to the creation of music. This video has 56 million views as of today, so it seems I am not the only one who finds this attractive.

-

Log #15: puredata

01/30/2025 at 19:15 • 1 comment

So, I linked it up to puredata...

At the end of last year, I was pointed to a software called puredata, which can be used to synthesize sounds, play sound files, serve as a music sequencer and so on. In this log entry, I show that the gesture detection backend of lalelu drums can control a puredata patch via Open Sound Control (OSC) messages.

The screencast shows the desktop of my Windows development PC. The live video on the left is rendered by the frontend running as a Python program on the PC. On the right, a VNC remote desktop connection shows the puredata user interface, running on a Raspberry Pi 4.

The backend sends two different types of OSC messages to the puredata patch via wired Ethernet. The first type of message encodes the vertical position of the player's left wrist. It is used by puredata to control the frequency of a theremin-like sound. The second type of message encodes triggers created from movements of the right wrist and elbow. The backend uses a 1D convolutional network to create this data, as shown in the last log entry. In puredata, these messages trigger a bass drum sound.
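To illustrate what such messages look like on the wire, here is a minimal sketch that encodes OSC 1.0 messages with float arguments using only the Python standard library. It is not the actual lalelu_drums backend code: the address names `/lalelu/left_wrist_y` and `/lalelu/trigger`, the host and the port in the usage note are assumptions made up for the example.

```python
import socket
import struct


def _osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    padding = (4 - len(data) % 4) % 4
    return data + b"\x00" * padding


def osc_message(address: str, *values: float) -> bytes:
    """Build an OSC 1.0 message whose arguments are all big-endian 32-bit floats."""
    type_tags = "," + "f" * len(values)
    payload = b"".join(struct.pack(">f", v) for v in values)
    return _osc_string(address) + _osc_string(type_tags) + payload


def send_osc(sock: socket.socket, host: str, port: int, address: str, *values: float) -> None:
    """Send one OSC message as a single UDP datagram."""
    sock.sendto(osc_message(address, *values), (host, port))
```

A hypothetical call would be `send_osc(sock, "192.168.0.20", 9000, "/lalelu/left_wrist_y", 0.42)` with `sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)`. On the puredata side, one common way to receive such datagrams is a `[netreceive -u -b]` object followed by `[oscparse]`; whether the patch shown here is built that way is not stated in this log.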

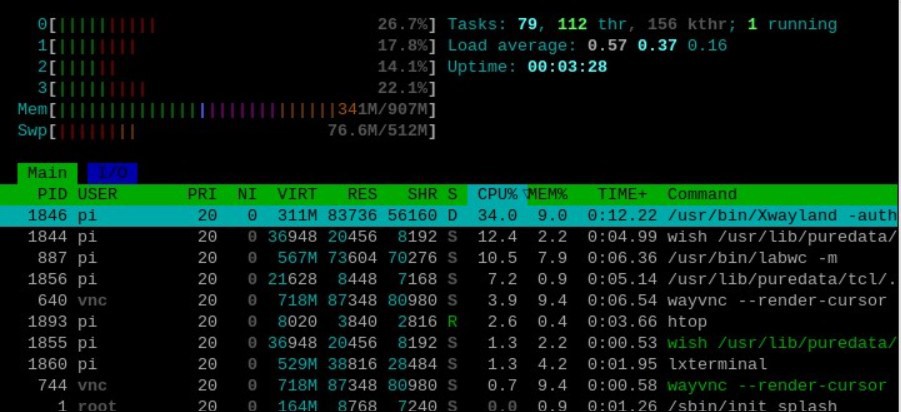

Here is the output of htop on the raspi4. It can be seen that there is significant load, but there is still some headroom.


Thanks go to Matthias Lang, who gave me the link to puredata. The melody in the video is taken from Borodin, Polovtsian Dances. The bass drum sound generation was taken from this video.

-

Log #14: Data driven drumming

01/07/2025 at 20:41 • 0 comments

The gesture detecting algorithms are central to the lalelu_drums project: depending on the movements of the player, they define the point in time at which a sound is triggered. My goal is to find one or multiple algorithms that give the player a natural feeling of drumming.

So far, the gesture detecting algorithms in lalelu_drums were all model based. This means that they apply a heuristic combination of rolling averages, vector algebra, derivatives, thresholds and hysteresis to the time series of estimated poses, eventually generating trigger signals that are then output e.g. to a MIDI sound generator.
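As an illustration of how a rolling average, a derivative, a threshold and hysteresis can be combined, here is a minimal sketch of a model based trigger. It is not one of the actual lalelu_drums algorithms: the class name, the threshold values and the use of a single y-coordinate are assumptions chosen for the example.

```python
from collections import deque


class HysteresisTrigger:
    """Fire once when the smoothed downward speed exceeds `high`,
    then re-arm only after the speed has dropped below `low`."""

    def __init__(self, high=1.5, low=0.5, window=3, fps=100.0):
        self.high = high                     # trigger threshold (units per second, assumed)
        self.low = low                       # re-arm threshold, lower than `high`
        self.fps = fps                       # frame rate of the pose estimation
        self.speeds = deque(maxlen=window)   # most recent speed samples
        self.prev_y = None
        self.armed = True

    def update(self, y):
        """Feed one wrist y-coordinate (image coordinates, y grows downward).
        Returns True on the frame where a trigger fires."""
        if self.prev_y is None:
            self.prev_y = y
            return False
        self.speeds.append((y - self.prev_y) * self.fps)  # finite-difference derivative
        self.prev_y = y
        speed = sum(self.speeds) / len(self.speeds)       # rolling average
        if self.armed and speed > self.high:
            self.armed = False
            return True
        if not self.armed and speed < self.low:
            self.armed = True                             # hysteresis: ready for the next hit
        return False
```

The two thresholds prevent a single downward stroke from firing repeatedly: after a trigger, the hand has to slow down clearly before the next one is accepted.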

In this log entry, for the first time, I present a data driven gesture detecting algorithm. It is based on training data generated in the following way.

A short repetitive rhythmic pattern is played to the user, who performs gestures in time with the sounds of the pattern. The movements of the user are recorded, which yields a dataset that contains both the time series of estimated poses and the ground-truth trigger times.
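One possible way to turn such a recording into training samples is sketched below. This is an assumption about the data preparation, not a description of the actual lalelu_drums pipeline: the function names, the tolerance and the window length are hypothetical.

```python
def label_frames(frame_times, trigger_times, tolerance=0.01):
    """Return one 0/1 label per frame: 1 for the frame closest to each
    ground-truth trigger time, provided it lies within `tolerance` seconds."""
    labels = [0] * len(frame_times)
    if not frame_times:
        return labels
    for t in trigger_times:
        nearest = min(range(len(frame_times)), key=lambda i: abs(frame_times[i] - t))
        if abs(frame_times[nearest] - t) <= tolerance:
            labels[nearest] = 1
    return labels


def make_windows(features, labels, window):
    """Pair each frame's label with the `window` most recent feature rows,
    so that a model only sees past and present frames (causal input)."""
    samples = []
    for end in range(window, len(features) + 1):
        samples.append((features[end - window:end], labels[end - 1]))
    return samples
```

Each sample then consists of a short history of keypoint coordinates and the label of its last frame, which matches the realtime situation in which future frames are not available.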

From these datasets, a 1D-convolutional network is trained that outputs a trigger signal based on the realtime pose estimation data. In the following example, the network takes the coordinates of the left wrist and the left elbow as input. A second network of the same structure is used to process the coordinates of the right wrist and the right elbow.

The video shows the performance of the networks. In addition to the two networks, a classic position threshold is used to choose between two different sounds for each wrist.

In the example, each network has 219k parameters. The inference (PyTorch) is done on the CPU since the GPU is occupied by the pose estimation network (TensorFlow). The inference time for both networks together is below 2 ms.

-

Log #13: Related work (3)

12/29/2024 at 21:38 • 0 comments

In the last weeks I found the following three interesting topics:

First, there is DrumPy by Maurice Van Wassenhove. It is a result of his master's thesis and shows a gesture controlled drumkit, so it addresses the same idea as lalelu_drums does. There is a demo video showing a basic drum pattern.

Second, there is Odd Ball. It is an elastic ball that features sensors and a wireless connection to a mobile device. It can be used to trigger sounds based on the movements of the ball, so it allows performances similar to those that I envision for lalelu_drums.

Third, I was pointed to the 3D drum dress of Lizzy Scharnofske. It is a wearable device featuring buttons that Lizzy uses on stage to trigger sounds. So again, the idea of the performance is similar to the motivation of lalelu_drums.

-

Log #12: Sternlein in concert

11/17/2024 at 19:19 • 0 comments

On October 30th I had the chance to present lalelu_drums to an audience of children aged 5 to 12. I chose the German lullaby "Weißt Du wie viel Sternlein stehen?" in the arrangement I posted earlier. The song is about stars and clouds, and the gestures are meant to resemble pointing at the stars or showing the moving clouds. The video shows that, even though only the movements of the player are tracked, the connection of the gestures to sounds motivates the children to join in the movements.

-

Log #11: docker

11/02/2024 at 08:22 • 0 comments

In this log entry I document a refresh of my backend's operating system installation that I needed to do recently. For the first time, I used docker to set up the CUDA/tensorflow/tensorrt stack and it turned out to work very well for me.

The reason for the refresh was that some days ago, the operating system installation in my backend system turned into a state in which I could not run the lalelu application any more. A central observation was that nvidia-smi showed an error message saying that the nvidia driver is missing. I am not sure about the root cause, I suspect an automatic ubuntu update. I tried to fix the installation by running various apt and pip install or uninstall commands, but eventually, the system was so broken that I could not even connect to it via ssh (probably due to a kernel update that messed up the ethernet driver).

So, I had to go through a complete OS setup from scratch. My first approach was to install the cuda/tensorflow/tensorrt stack manually into a fresh ubuntu-22.04-server installation, similar to how I did it for the previous installation one year ago. However, this time it failed. I could not find a way to get tensorrt running and it was unclear to me which CUDA toolkit version and tensorflow installation method I should combine.

Description of the system setup

In the second approach, I started all over again with a fresh ubuntu-22.04-server installation. I installed the nvidia driver by

    sudo ubuntu-drivers install

The same command with the --gpgpu flag, which is recommended for computing servers, did not provide me with a working setup; at least nvidia-smi did not suggest a usable state.

I installed the serial port driver to the host, as described in Log #02: MIDI out.

I installed the docker engine, following its apt installation method and the nvidia container toolkit, also following its apt installation method.

I created the following dockerfile.txt

    FROM nvcr.io/nvidia/tensorflow:24.10-tf2-py3
    RUN DEBIAN_FRONTEND=noninteractive apt-get install -y net-tools python3-tables python3-opencv python3-gst-1.0 gstreamer1.0-plugins-good gstreamer1.0-tools libgstreamer1.0-dev v4l-utils python3-matplotlib

and built my image with the following command

    sudo docker build -t lalelu-docker --file dockerfile.txt .

The image has a size of 17.1 GB. To start it, I use

    sudo docker run --gpus all -it --rm --network host -v /home/pi:/home_host --privileged -e PYTHONPATH=/home_host/lalelu -p 57000:57000 -p 57001:57001 lalelu-docker

In the running container, the following is possible:

- perform a tensorrt conversion as described in Log #01: Inference speed

- run the lalelu application, including the following aspects:

  - run GPU accelerated tensorflow inference

  - access the USB camera

  - interface with the frontend via sockets on custom ports

  - drive the specialized serial port with a custom (MIDI) baudrate
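To give an idea of what travels over the serial port in the last item, here is a small sketch of a MIDI note-on message. The baudrate of 31250 bit/s and the three-byte message layout come from the MIDI 1.0 specification; the helper names are hypothetical, and how the lalelu application actually opens and writes to the port (described in Log #02) is not shown here.

```python
MIDI_BAUDRATE = 31250  # bits per second, fixed by the MIDI 1.0 specification


def note_on(channel: int, note: int, velocity: int) -> bytes:
    """Build a 3-byte MIDI note-on message (channel 0-15, note and velocity 0-127)."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be in 0..15")
    if not (0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("note and velocity must be in 0..127")
    return bytes([0x90 | channel, note, velocity])


def transmission_time_ms(message: bytes) -> float:
    """Time on the wire: each byte is framed as 1 start + 8 data + 1 stop bit."""
    return len(message) * 10 / MIDI_BAUDRATE * 1000
```

For example, `note_on(9, 36, 100)` addresses the tenth MIDI channel, and `transmission_time_ms` shows that one such message occupies the line for roughly one millisecond, which adds to the overall latency between gesture and sound.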

Characterization of the system setup

I could record the following startup times

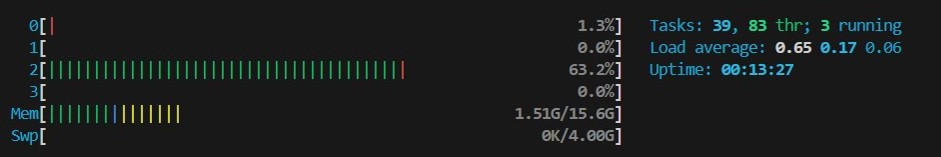

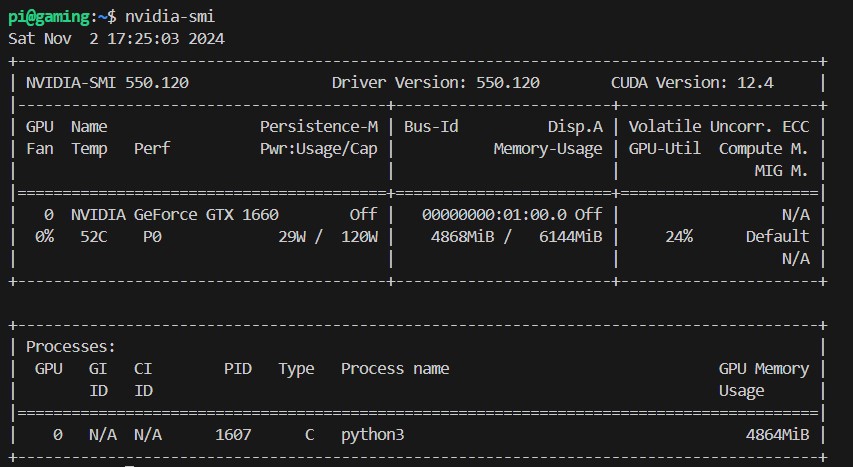

| Step | Duration |
| --- | --- |
| boot host operating system | 30 s |
| startup docker container | < 1 s |
| startup lalelu application | 11 s |

When the lalelu application is running, htop and nvidia-smi display the following:


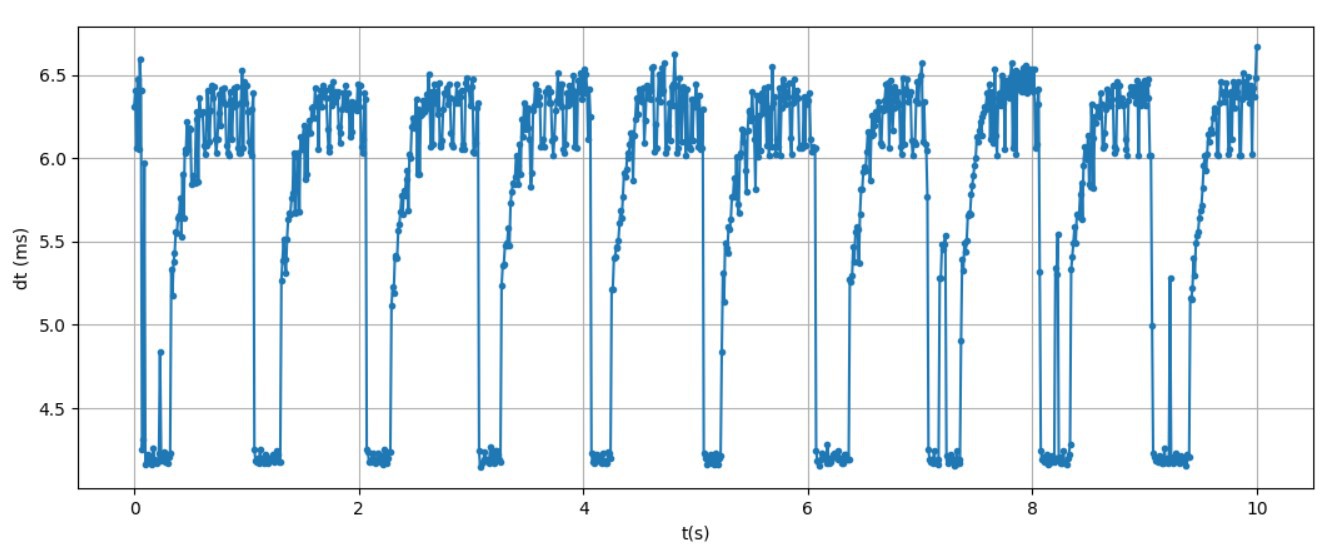

Finally, I recorded inference times in the running lalelu application. The result for 1000 consecutive inferences is shown in the following graph.


Since the inference time is well below 10 ms, which is the frame period at 100 fps, the lalelu application can run at 100 fps without frame drops.
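The relation between frame rate and time budget can be written down in a few lines. This is only a sketch of the arithmetic, with hypothetical function names; it considers the inference time alone and ignores other per-frame work such as camera capture.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available per frame in milliseconds."""
    return 1000.0 / fps


def count_overruns(inference_times_ms, fps: float) -> int:
    """Number of inferences that took longer than one frame period."""
    budget = frame_budget_ms(fps)
    return sum(1 for t in inference_times_ms if t > budget)
```

Applied to a list of recorded inference times, `count_overruns(times, 100)` returning 0 means that no inference exceeded the 10 ms frame period.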

-

Log #10: Related work (2)

10/06/2024 at 19:59 • 0 comments

Recently I got to know the project SONIC MOVES, which is strongly related to lalelu drums. They present an iOS app that can be used to control music from 3D body tracking on an iPhone XR or newer.

The developer Marc-André Weibezahn has a similar app called Affine Tuning that seems to be a predecessor of SONIC MOVES. He announced the foundation of SONIC MOVES eight months ago on LinkedIn.

From the demo videos it appears that the player does not trigger individual notes, but rather controls a path through what they call a 'song blueprint'. The song blueprint consists of MIDI tracks and effect definitions and the player's movements control how the MIDI tracks are played back and set parameters of the effects. Accordingly, the description of Affine Tuning states that it is not meant to be a musical instrument (that you would have to practise), but rather aims to motivate physical exercise.

I like the idea of an intuitive application that does not require any training to use. I think it can be great fun and very motivating to explore various song blueprints by experimenting with body movements. However, I wonder if the frame rate and latency that can be achieved on a mobile device allow the music output to be controlled precisely enough to play along with other musicians, as shown for example in Rapper's delight.

-

Log #09: Pattern player

10/02/2024 at 19:57 • 0 comments

As promised in my last blog entry, I want to provide some more details about the new pattern player I added to the project. The pattern player provides the following two features:

- from a roughly equidistant sequence of trigger events as an input from the player, continuously estimate the tempo and the beat count

- output a predefined musical pattern, following the estimated tempo and beat count
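The first feature can be sketched with an exponential moving average over the intervals between triggers. This is one simple way to follow a changing tempo, not necessarily the estimator used in lalelu_drums; the class name, the smoothing factor and the assumption of four beats per bar are made up for the example.

```python
class TempoTracker:
    """Estimate beat period and beat count from roughly equidistant triggers."""

    def __init__(self, smoothing=0.3, beats_per_bar=4):
        self.smoothing = smoothing          # weight of the newest interval (assumed value)
        self.beats_per_bar = beats_per_bar
        self.period = None                  # estimated seconds per beat
        self.last_time = None
        self.beat_count = 0                 # number of triggers seen so far

    def on_trigger(self, t):
        """Feed one trigger timestamp in seconds; returns the current BPM or None."""
        if self.last_time is not None:
            interval = t - self.last_time
            if self.period is None:
                self.period = interval
            else:
                # exponential moving average follows gradual tempo changes
                self.period += self.smoothing * (interval - self.period)
        self.last_time = t
        self.beat_count += 1
        return self.bpm

    @property
    def bpm(self):
        return None if self.period is None else 60.0 / self.period

    @property
    def beat_in_bar(self):
        """Position of the latest trigger within the bar, starting at 0."""
        return (self.beat_count - 1) % self.beats_per_bar

    def next_beat_time(self):
        """Predicted time of the next beat, usable for scheduling pattern notes."""
        if self.period is None:
            return None
        return self.last_time + self.period
```

A pattern player built on top of this would schedule the notes of the predefined pattern relative to `next_beat_time()` and the current `period`, so that the playback speeds up or slows down together with the player.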

The input trigger sequence could be created in any way, for example:

- hitting a button on a keyboard

- playing notes on a piano

- recording a person's heart beat

- trigger events created from human pose estimation (shown in the following)

Here is a first demo video. Note that while all triggers from the player are used to estimate tempo and beat count, only the clapping action will start the playback of the pattern. The video also shows that different patterns can be played, using the same estimate of tempo and beat count.

The second video shows the adaptive tempo estimate. This feature makes the pattern player different from a classic looper device. It enables the player to play along with other musicians without imposing a specific tempo on them.

An interesting application I could envision is a tool for a conductor. The movements of the conductor could be used to control a predefined playback that will then follow the conducting. This arrangement could be helpful in the situation of a rehearsal where a specific musician is absent and is replaced with a playback.

-

Log #08: Rapper's delight

09/29/2024 at 18:54 • 0 comments

On September 14th, I had the chance to present a lalelu drums performance together with the percussionist duo BeatBop at their concert 'Hands On!' in Friedenskapelle Kaiserslautern (Germany). They had a diverse program featuring many different percussion instruments played directly with the hands (no drumsticks) and together we prepared the piece 'Rapper's delight' with body percussion and lalelu drums adding a marimba bass line. I am very grateful for this opportunity and I really enjoyed the preparation and the concert together with Jonas and Timo!

Here is a video of the rehearsal. At first I introduce lalelu drums by playing individual marimba notes. Then, I play the bass line using a combination of individual notes and predefined patterns. Jonas and Timo join in, clapping and slowly marching to the stage. They have a solo after which I join in again.

Note that the first and second part (before and after the solo) feature a different tempo, which is possible because the predefined patterns are played at the tempo defined by the preceding individual notes. Also, the tempo is continuously updated from the player's movements. I will make a blog post soon, giving more details about these features.

-

Log #07: Combinations

06/18/2024 at 17:27 • 0 comments

In this log entry, I use a virtual bass guitar as an example to show how multiple keypoints in combination can be used to control a single instrument.

The movements of the RIGHT_WRIST keypoint control the trigger of a bass guitar sound, while the position of the LEFT_WRIST keypoint defines which note is played. Additionally, as an extension of the way a real bass guitar is played, the position of the RIGHT_WRIST keypoint can override the note defined by LEFT_WRIST, so that the fundamental note of the scale is easily accessible.
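The note selection could look like the following sketch, which is called whenever the right wrist produces a trigger. All specifics are assumptions for illustration: the scale, the normalized coordinate range of 0 to 1, the override region and the function name are not taken from the lalelu_drums code.

```python
A_MINOR_PENTATONIC = [45, 48, 50, 52, 55]  # MIDI notes A2 C3 D3 E3 G3 (assumed scale)


def select_note(left_wrist_y, right_wrist_x, scale=A_MINOR_PENTATONIC, override_x=0.8):
    """Pick the MIDI note to play when the right wrist triggers.

    Coordinates are assumed to be normalized to 0..1, with y growing downward."""
    if right_wrist_x > override_x:
        # right wrist in the (hypothetical) override region: play the fundamental
        return scale[0]
    clamped = min(max(left_wrist_y, 0.0), 1.0)
    # a raised left hand (small y) selects a higher note of the scale
    index = min(int((1.0 - clamped) * len(scale)), len(scale) - 1)
    return scale[index]
```

With this mapping, the left hand chooses among the scale notes by height, while moving the right hand into the override region always yields the fundamental, regardless of the left hand's position.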

lalelu_drums

Gesture controlled percussion based on markerless human-pose estimation with an AI network from a live video.