Overview

One of our goals has been to use this project as a learning platform. So far, it has let us explore software and hardware we hadn't worked with before, including new technologies, techniques, libraries, and platforms. It has also forced us to brush up on our linear algebra.

Technologies

Some of the technologies in use so far are:

- Apache ActiveMQ (multi-protocol messaging middleware)

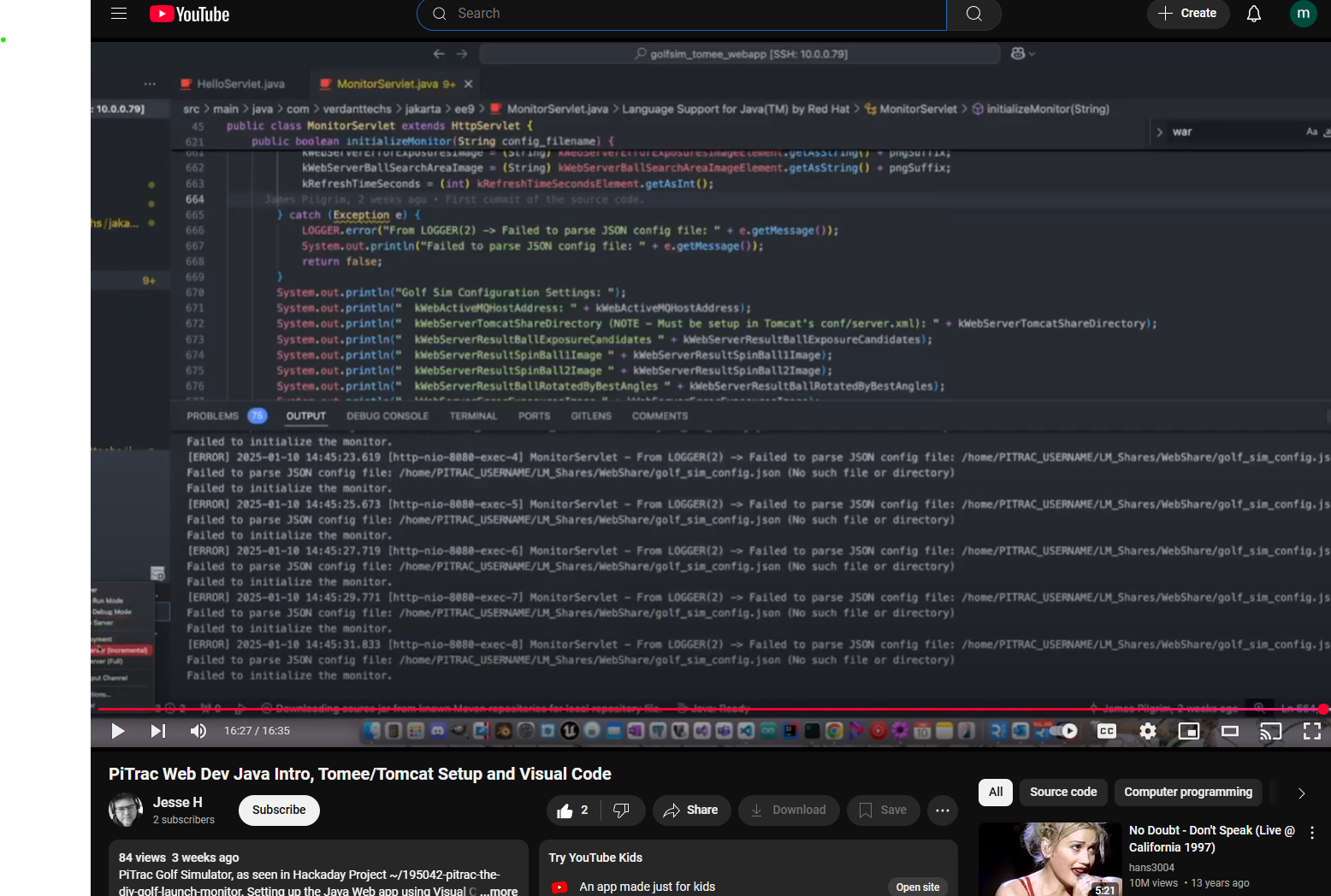

- Jakarta Server Pages (JSP) (serves the web-based default system GUI)

- Boost (various foundational libraries for logging, multi-threading, thread-safe data structures, command line processing, etc.)

- Debian (Raspbian) Linux (OS)

- FreeCAD (parametric 3D CAD/CAM software)

- JSON (serialization)

- JMS (Java Message Service messaging API)

- KiCad (schematic capture and PCB layout)

- libcamera and rpicam-apps (open-source camera stack and applications)

- Maven (Java build automation)

- Meson & Ninja (build tools)

- MessagePack/msgpack (efficient, architecture-independent serialization)

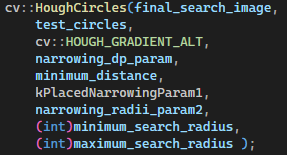

- OpenCV (image processing & filtering, matrix math)

- libgpiod (GPIO and SPI library)

- Prusa MK4S and PrusaSlicer (3D printer and slicer)

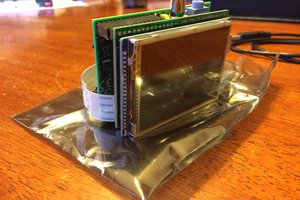

- Raspberry Pi Single-Board Computers

- Apache TomEE (hosts the web-based interface)
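MessagePack is "architecture-independent" because its wire format fixes the byte order: every multi-byte number is stored big-endian, whatever the CPU's native order. The sketch below is a hand-rolled encoder for unsigned integers only, written to the MessagePack format specification to illustrate this. The function name `pack_uint` is hypothetical. This is not the msgpack-c API or PiTrac's actual serialization code.

```cpp
#include <cstdint>
#include <vector>

// Hypothetical helper: append an unsigned integer to `out` using the
// smallest MessagePack unsigned-int format. Multi-byte payloads are
// written big-endian, so the bytes are identical on any host CPU.
void pack_uint(std::vector<std::uint8_t>& out, std::uint64_t v) {
    // Emit the low `n` bytes of v, most significant byte first.
    auto put_be = [&out, v](int n) {
        for (int i = n - 1; i >= 0; --i) {
            out.push_back(static_cast<std::uint8_t>(v >> (8 * i)));
        }
    };

    if (v <= 0x7f) {                  // positive fixint: value lives in the type byte
        out.push_back(static_cast<std::uint8_t>(v));
    } else if (v <= 0xff) {           // uint 8
        out.push_back(0xcc);
        put_be(1);
    } else if (v <= 0xffff) {         // uint 16
        out.push_back(0xcd);
        put_be(2);
    } else if (v <= 0xffffffffULL) {  // uint 32
        out.push_back(0xce);
        put_be(4);
    } else {                          // uint 64
        out.push_back(0xcf);
        put_be(8);
    }
}
```

For example, `pack_uint(buf, 300)` appends `cd 01 2c`. The `0xcd` type byte marks a 16-bit unsigned integer, followed by 300 (`0x012c`) with its most significant byte first.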

The software is written almost entirely in C++, with the goal of bringing the codebase close to commercial standards for testability, architecture, and documentation. A few utilities, such as those for camera and lens calibration, were easier to implement in Python or as Unix shell scripts.

NOTE: Raspberry Pi is a trademark of Raspberry Pi Ltd. The PiTrac project is not endorsed by, sponsored by, or associated with Raspberry Pi or Raspberry Pi products or services.

Cost

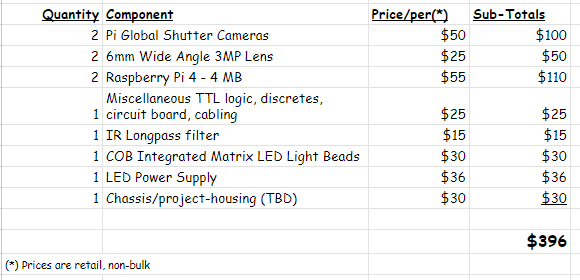

We'd like the entire system to cost less than US$300. We're currently over budget by about a hundred dollars, but we have plans that should bring the price down. For example, the new Raspberry Pi 5 should be able to support both system cameras, which could cut about $50 by eliminating the second Pi.
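This worked estimate uses the rough figures above at face value: a US$300 target, about US$100 over today, and about US$50 saved by dropping the second Pi. It shows how much of the overage the Pi 5 change alone would close:

$$
C_{\text{now}} \approx 300 + 100 = 400, \qquad
C_{\text{Pi 5}} \approx 400 - 50 = 350, \qquad
C_{\text{Pi 5}} - 300 \approx 50
$$

On these approximate numbers, moving to a single Pi 5 would remove roughly half of the current overage. The other half would still need to come from other changes.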

The high-level parts list at this time:

Additional Project Videos (see the full set on our YouTube channel):
