-

Restructuring the instruction bundles

12/07/2024 at 04:01 • 0 commentsI was very satisfied with my instruction-to-pipeline mapping, with one opcode for each globule followed by a control instruction. And today my ideas wandered somewhere else : what about the predicated instructions ?

Due to the still high cost of dealing with branches, it would be awesome to be able to simply "selectively skip" a (small) number of instructions. And this creates a whole lot of new issues, but this is required to reduce the overhead of small branches, due to the still small number of branch target slots.

First, how many instructions is it possible to skip ?

I'd say : not more than a cache line. That's 8 instructions, or 3 cycles at full bandwidth.

This can create some atomicity issues, particularly during decoding. And this also reshuffles the instruction bundle with the predicate in the first/leading position instead of the last.

The point of predication is to conditionally "abort" instructions by preventing the result(s) from being written back to the register set. Otherwise, we're entering the "shadow register hell" and blow up the core's complexity. This also means that predicated instructions can not reuse earlier results from the same predicate. This also implies that control instructions can't be predicated, and maybe no more than 4 ALU operations shadowed (2 cycles get us to the ALU result, it's time to make sure the result is written back, or not).

OTOH this gives just enough time for the control circuits to fetch the relevant condition and propagate the decision.

.

.

BTW the predicated sequences work in a way similar to the "critical section" instruction so there is something to explore deeper here.

.

.

.

-

Data Stack protections

11/19/2024 at 03:34 • 0 commentsYGREC32 has several features that I described in recent articles, in particular is can "shield" the bottom of the stack from read and/or write. This means that a caller can prevent callees from extracting or altering its own state.

There is a series of articles that cover the matter:

Les « tourments de la monopile », ou le « Single-Stack Syndrome »

Une histoire des piles et de leur protection

Une (autre) pile matérielle pour le modèle bipilaire

Au-delà de la fonction : libérez tout le potentiel de la pile de contrôle !

Recently I was thinking about what happens when a function returns but its data remain "on the stack" : the next call can scan these data and extract information.

The usual approach is to flush the data on exit. This is wasteful and new functions usually start by initialising/flushing their stack frame anyway. So this would be required only for "secure" functions, but this is another slippery slope, since "what needs to be secured ?"

So here is the proposal. YGREC32 already has the read_shield and write_shield ancillary registers, that trap when reading or writing below said addresses. Their values can be updated during call and return, thanks to the protected control stack. So why not make something similar but for the addresses above the stack frame ?

The behaviour is different though :

- reading above the given address will return 0 (or some canary value)

- writing will work and replace the old value in the cache. This will also invalidate the corresponding cache line, except for the written word.

This way :

- writing to the top of the stack will not trigger a cache read even

- reading above the stack top does not leak information from previous calls.

- all function calls are safe and fast.

Of course this requires cooperation with the data cache...

-

Globule symmetry

10/27/2024 at 21:35 • 0 commentsIt seems I'll have to change, adjust, adapt my model of the globules because of the memory access patterns.

The "symmetrical globule" idea is great because it's simple, efficient, easy. The functions can also be merged during one cycle by issuing an instruction pair to perform operations that are more complex than what a single instruction could do alone : 2R2W or 3R1W instructions such as

- addition with carries,

- multiplication,

- division,

- barrel shift/rot,

- bit insert/extract,

- MAC maybe...

Each globule is dumb and designed for speed, which limits their functionalities a lot, but new features naturally appear when they are coupled. And this breaks the symmetry, in particular for scheduling and the instruction decoder/dispatcher.

The original idea was very simple : instructions are grouped in 3 consecutive words, or less, that follow the following pattern G1 G2 C or any substring, so overall we can have the following sequences:

G1

G2

G1 G2

G1 G2 C

G1 C

G2 C

C(that's a total of 2³-1=7 combinations, and not 8 because the empty set does not exist).

But the pairing breaks this nice little clean system. A pair would be a special case of G1 G2 where the first opcode signals a pair, which constrains the second opcode in the pair. Each globule provides their operands and then both can receive a result, often after another cycle (or more depending on the operation).

But due to requirements in orthogonality, you may want your operands and results to go almost anywhere, right ? So you may end up with these sequences:

P1 Q2

P1 Q2 C

P2 Q1

P2 Q1 Cwhere P is the first opcode of a pair, and Q its "qompanion". And you see that the order of the globules can now be swapped! You still have a requirement to have certain operands and results in separate globules but inhibiting P2 Q1 would add too much stress on the register allocator, I think.

This also increases the number of opcode types : we have G, C, P and Q now. So the opcode requires 2 bits.

Also the operands of the Q opcodes are implicitly complementary to the leading P opcode, so there is no need to distinguish Q1 and Q2, it's possible to save a couple of bits but I doubt it's worth the effort. Let's keep both for now.

....

The system starts to break down when confronted to the constraints of accessing memory through the A/D register pairs. It's not a new system since the CDC6600 (and family) uses dedicated address and data registers as well, however 3 address registers are used for writing and 5 are for reading so the semantic is clear:

- Write to a write register, and any write to the corresponding data register will trigger a memory write cycle.

- Write to a read register and the read cycle is immediately triggered.

On the YASEP/Y8/Y32, the A/D pairs are not committed, which allows read-modify-write sequences. It's all fine with the YASEP (16-32) and Y8 because the memory is tightly coupled and usually has one level. Triggering a read cycle upon register write is not an issue. However Y32 has more latency, in fact it's meant to deal with several cycles, so if you only want to write to memory, updating the A register could trigger a cache miss and a long, painful fetch sequence...

.

.

.

.

... tbc ...

-

How fast can it run ?

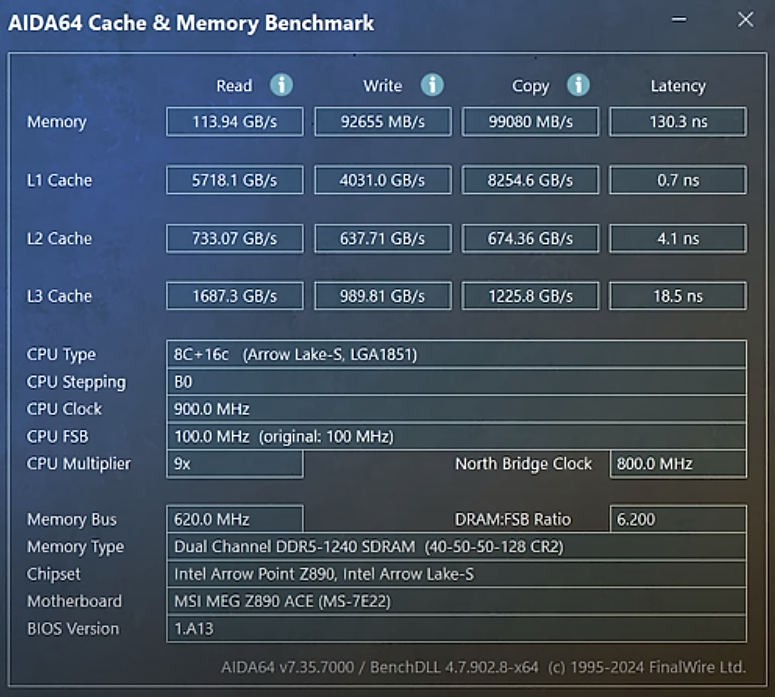

10/27/2024 at 17:46 • 0 commentsI just came across this very recent measurement on the latest Intel 2xx series chiplet-based CPU. It's interesting because it gives a modern view of the best performances and latencies the bleeding edge can achieve.

![]()

See the source there:

The main memory is still slow, hovering around 100ns, nothing new here. The quoted 130ns is from a misconfigured motherboard and an update brings it to a less sluggish 83ns, which has immediate effects on the performance. Further architectural enhancements can only come from the usual methods here : larger buses, more channels, more banks, tighter controller integration...

The interesting parts here are the latency ratios of the cache levels. L2=5.8×L1, L3=L2*5.6

Oh and that system can clock to 5.7GHz, so one L1 hit takes 4 clock cycles, and God knows how many instructions per cycle one such core can execute in this time.

An interesting outlier is this datapoint : L1 copy is faster than read or write. Some mechanism is at work here, probably "locality" or "streaming", with a large bundle/buffer that groups words and aligns them or something.

Note that 5.7GB/s at 5.7GHz amounts to one byte per cycle. That's decent but not much.

.......

The YGREC32 is not designed to work with the fastest silicon processes and most expensive foundries but the above numbers are rough estimates for the ratios that will be encountered in a "good enough" implementation. The point is that the architecture must be scalable and requires only little adjustment during shrinking.

This first thing we can conclude is that without L3 yet still following the "6× per level" rule-of-thumb, the CPU can't run much faster than one GHz or so. More or less, it's the L3 that enables the latest CPU to reach and keep running abot (say) 1.5GHz.

The other lesson is that memory latency is really, really important. An in-order CPU spends a lot of time waiting for data, leading to ever-increasing cache sizes and levels, as well as very deep out-of-order cores. Increasing the number of cores is another aspect, and Simultaneous MultiThreading helps with keeping the cores busy.

The worst problems come from OOO and the "solution" for this, explored in Y32, is to explicitly prefetch. The data are already playing the prefetch game since the D/A register pairs implicitly work like "reservation stations". Y32 has 16 such stations for data and 8 for code. This is comfortable maybe up to 500MHz or so. Faster clocks will require SMT, which is easy with Y32 but more than 2 threads will significantly bloat the core's area, so more cores become necessary.

OK, let's have two cores with 2× SMT for a decent speed. Now we hit two major walls: the FSB bandwidth wall gets hammered and there is probably not enough parellelism in the workload to occupy the cores. In interactive workloads, the separate cores act as local caches for the threads, since there is less space to move around, load to and spill from the caches. There is an incentive to keep as much data as possible onchip and swap only infrequently used data offchip. Speaking about swapping, this means that data blocks are more or less treated at the page level, or somesuch coarse granularity to increase efficiency, but this also increases latency by preventing multiplexing.

So overall, the clock range for Y32 would be from about 100 to 500MHz without extra architectural features. Out of the box, it could handle up to 16+8+2 concurrent memory transactions (data, instructions and stack) per thread. That's good but can only be exploited by good code, made by good compilers that can think beyond the old load-store paradigm.

The limiting factor will be the number of "reservation stations" (D/A registers and eBTB entries). FC1 will double them but that might not be sufficient, yet there is only so much space in an instruction word. "Register windows" and remapping of addressable resources will become necessary to push beyond the gigahertz hill.

-

Indirect branch

09/20/2024 at 05:17 • 0 commentsThe current ISA draft is very incomplete... it is characterised by a very tight and strict (almost crippling) branch system focused on speed and safety. But this is insufficient in practice and a better compromise is required and "indirect" jumps/branches are required (or else coding can become miserable).

An indirect branch can branch to one in a multitude of addresses that are stored in an array/table, to implement switch/case or other structures.

To prevent arbitrary jump target injections, instruction addresses can only be located in instruction memory, which is considered "safe" (to a certain degree) because it is constant and immutable. The compiler is responsible for the validity of the contents of this space.

Today's progress is the allocation of an opcode range, next to INV=0xFFFFFFFF, to hold indirect branch addresses. Instruction addresses are 24 bit long so the instruction prefix is 0xFE followed by the address. This prefix still decodes as INV though because it is NOT an instruction but a pointer so executing it must trap.

Also this is NOT a JMP instruction because the pointer should only be handled by a dedicated "indirect branch" opcode. However, JMP could be allocated as a neighbour 0xFD opcode to merge some logic/function. So the opcode value works like a sort of "indirect branch target validation tag" or similarly found in recent ARM architectures.

There are still a number of issues to resolve though :

- Range validation: how do you ensure that the address array is correctly indexed, with no out-of-range access ? I guess that the prefetch instruction must include a maximum index somehow, probably limited to 256 entries ?

- Multicycle operation: the prefetch must first fetch the instruction memory for the pointer then do another fetch cycle to get the actual instruction. That's not RISCy...

Food for thought...

-

more details.

08/17/2024 at 01:44 • 0 commentsThe Y32 architecture is mostly divided in four essential blocks, each with the dedicated memory buffers:

- Glob1 (with dCache1)

- Glob2 (with dCache2)

- eBTB (with iCache)

- control stack (with stack cache)

A 5th block (with no memory access or array) processes data for multi-cycle and/or more than 2R1W operations (Mul/Div/Barrel shifter/...)

Yet the YGREC32 is a 3-issue superscalar shallow-pipeline processor. This directly dictates the format of the instructions:

- MSB=0 for "operations", that is: opcodes delivered to the globules to process data. Note: opcode 0x00000000 is NOP. The following bit addresses the globule, so there are 30 bits per instruction.

- MSB=1 is the prefix of the "control" opcodes, such as pf/jmp/call/ret/IPC/spill/aspill/... This affects the control stack as well as the eBTB (sometimes simultaneously). Note: 0xFFFFFFFF is INV.

.

The globules are very similar to the YGREC8 : a simple ALU (with full features, boolean, even umin/umax/smin/smax), 16 registers with 8 for memory access, and a 32-bit byte shuffle unit (aka Insert/Extract unit, for alignment and byteswap: see the #YASEP Yet Another Small Embedded Processor's architecture).

It must be small, fast : Add/sub, boolean and I/E must take only one cycle.Complex operations are performed by external units (in the 5th, optional/auxiliary block) that get read and write port access with simultaneous reading of both register sets to perform 2R2W without bloating the main datapath: multiply, divide, barrel shift/rotate... The pair of opcodes get "fused" and can become atomic, but could also be executed separately.

Fused operations require an appropriate signaling in the opcode encoding.

For context save/restore, it would be good to have another, complementary behaviour, where one opcode is sent to both globs (broadcast).

It is good that control operations can be developed independently from the processing operations, though sharing the fields (operands and destinations) is still desired.

.

Both globs are identical, symmetrical, so there is only one to design, then mirror&stitch together.

Each glob communicates with the other through the extra read port (the register sets are actually 3R1W), and are fed instructions from the eBTB. The only connection with the control stack is for SPILL and UNSPILL, using the existing read & write ports. Each glob provides a set of status flags, which are copies of the MSB and LSBs of the registers (plus a zero flag). Hence some of the possible/available conditions are

- LSB0

- LSB1

- LSB2

- MSB

- Zero

The multiple LSB conditions help with dynamical languages that encode type and other ancillary values in the pointers (including #Aligned Strings format ). MSB can be a proxy for carry.

Oh and yes, add with carry is a "fused"/broadcast operation that writes the carry in the secondary destination.

-

a first basic module.

07/03/2024 at 10:32 • 0 commentsHere is a first attempt at writing a module that just prints "Hello world!".

It shows the basic structure of a module as well as the IPC/IPE/IPR instructions that allow modules to call each others.

; HelloWorld.asm Section Trampoline ; init: Entry 0 IPE ; nothing to initialise IPR ; run: Entry 1 IPE ; no check of who's calling. jmp main Section Module main: ; get the number/ID of the module that writes ; on the console, by calling service "ModuleLookup" ; from module #1 set 1 R1 set mod_str R2 IPC ModuleLookup R1 ; result: module ID in R1 ; write a string of characters on the console ; by calling the service "WriteString" ; in the module designated by par R1 set mesg_str R2 IPC WriteString R1 IPR ; end of program. Section PublicConstants mod_str: as8 "console" mesg_str: as8 "Hello world!"I'm a bit concerned that the IPC instruction has a rather long latency but... Prefetching would make it even more complicated, I suppose.

-

eBTB v2.0

07/03/2024 at 01:13 • 15 commentsThe last log introduced a modified, explicit Branch Target Buffer. It has evolved again since then and is taking a new life of its own...

As already explained, it totally changes the ISA, the compiler strategy, the coding practices, almost everything, but it is able to scale up in operating frequency, or with CPU/Memory mismatch, provided the external memory works with pipelined transactions. The new ISA exposes some of this and lets the compiler handle parts of the overlapping of prefetches.

So the jump system works with two phases :

- The program emits a prefetch operation: this associates a jump address to a fixed ID. The visible ID is 3 bits, there can be up to 8 simultaneously ongoing prefetch operations. For each ID, there is one 8-instruction cache line that is stored close to the instruction decoder, for immediate access. It usually takes several cycles to fetch the desired line and store in in the BTB.

- The actual "jump" instruction selects one of these 8 lines (with the 3-bit ID in the instruction) and checks the (possibly complex) condition. If the condition is verified, the selected line is chosen as source of instructions for the next cycle, and the PC starts to prefetch the next line(s) in its double buffer.

You can see this system as exposing a register set dedicated to instructions addresses. Each register is a target, so let's call them T0 to T7. You can only perform an overwrite of these registers with a prefetch instruction, or a "loopentry" instruction for example : there is no fancy way to derail the CPU's operation.

Each line has one of 4 states:

- absent/invalid

- fetching/checking

- failure/error/fault

- valid

This system mirrors the already studied mechanism for accessing data memory, but the BTB is much more constrained : Each data fetch address is explicitly computed and stored into a dedicated "Address register" (in Y32: A0 to A7), which triggers the TLB and cache checks, such that "a number of cycles later" the corresponding data is available in a coupled/associated "data" register (in Y32: D0 to D7). The #YASEP already uses this split scheme for 2 decades now.

And now, a similar decoupling scheme is also provided in the Y32.

So we get 8 visible registers : they must be saved and restored through all kinds of situations such as function calls, IPC or traps... yet there is no way to read them back to the general register file. The only way to get them out and back in is with the control stack, which can save 2 entries in one cycle.

So the pair T0-T1 can be saved and restored in one cycle, for example : it seems easier to pair them as neighbours. A whole set of targets can be saved in 4 instructions, 4 cycles. It still consumes a half-line of cache...

All the pairs of targets can be freely allocated by the compiler, with some hints and heuristics: the short-lived and immediate targets would be in the high numbers (T4-T7) while targets for later would be in the lower addresses (T0-T3). This makes it easier to save the context across the functions, as the buffer would be defragmented and there are fewer chances to save a register that doesn't need backup.

...

Another thing I didn't mention enough is that the control stack (its neighbour) also has hidden entries in the eBTB. Let's say 8 more lines (or even pairs for the double-buffering) which are allocated on a rolling basis. The stack manages the allocation and prefetch itself. Usually, these hidden lines are a copy of the current instruction buffer and its double/prefetch buffer, so there is only need of prefetch when the stack is emptying.

...

But the v2.0 goes beyond that: it manages the call/return and others, without the need for explicit saving/restoring by discrete instructions. These spill/unspill instructions are still possible but they take precious space (hence time).

What call and IPC now do is accept a 2-bit immediate (on top of the 3-bit target, the condition flags, the base address...) to say how many pairs of targets to push/pop automatically on the control stack.

But each pop or push takes a whole cycle, right ? Call already takes some time to push the PC and the context/base address.

The solution (which might sound familiar to Duane for example) is with a register window. In our case of 8 targets, 16 actual lines are actually implemented and addressed in a circular buffer. This means that a "base index" is added to the instruction's 3-bit Target ID to get the actual number of the physical entry.

What call/return do is simply add their 2-bit amount to the base index (shifted by 1) to make new entries available, while the old entries (now out of reach) can get saved in the background on the control stack, during the following cycles.

The granularity of 2 entries per cycle simplifies a few things, such as the computation of the effective index of the target/line, since the LSB is not changed. The instruction provides 3 bits but only 2 must be added to the current index, which is 3 bits as well but the LSB is implicit. That's a matter of a few XOR at most, so not a critical performance hog, unlike with a more classical register set in SPARC for example.

- Addressing a line from an instruction's target ID field is relatively simple: of the 3 bits, the LSB is preserved (pass-though) and the remaining 2 MSB (0-extended) are added to the 3-bit Target Based Index. Carry out is not calculated, it's a simple wrap-around.

- call instruction : 0-extended 2-bit field is added to the 3-bit TBI.

- ret only pops the destination, then also re-executes the call instruction to see how many levels must be restored, as well as which base register to restore.

Initially I was thinking that the 3-bit Target Based Index should be saved in the PC's MSB but it is not necessary if the stack is correctly emptied/spilled on the control stack. This TBI could be any value as long as the data is appropriate on the lines. It's all relative so who cares now ?

The control stack has a more straight-forward, direct addressing scheme, with 3 bits in the PC's MSB, next to the type field.

A typical function call would look like :

; main function: pf.t7 subfunction ; (a 24-bit immediate and 3 bits for TID) ; .. fill with some useful work if available ; while target #7 is being prefetched call t7, condition, a6, 2 ; t7 : target line 7 ; condition : the call can be "always" or check some flags ; a6 : the context/base pointer for the current function, to be saved. ; 2 : save 2 pairs of target lines, for reuse later. ; ... the rest of the function subfunction: ; ... do something ; all 8 of the target lines are available ; and can be overwritten/spilled/unspilled if needed. ret ; (eventual condition)

That's ... quite short, right ? Of course I didn't show the passing of parameters, which are moved/prepared in the sequence just after the prefetch instruction.

The function may choose to spill 0, 2, 4 or 6 targets across the call. During traps and/or IPC, it should be possible to also spill all the 8 current targets.

The depth of 16 entries is just enough to make the system work OK. 32 entries would use a lot more room for a smaller speedup.

The other very cool thing about this "target window" system : you can repeatedly call a function and the intermediate targets will remain in cache, in the window, speeding things up even more. There is a small stacked context, not just on the control stack but also the eBTB. This increases cache hit rates, frees some memory bandwidth in repetitive cases without programming efforts...

-

Second sketch

06/26/2024 at 14:49 • 0 commentsI have the drawing somewhere but it's not very different from the first one. There are two significant changes though.

The first change is the "instruction buffer", ex-Instruction L0, now called "explicit branch target buffer" (eBTB). It's a big change that also affects the instructions so I'll cover it later in detail.

The second change is the instruction decoder that is now meant to decode 3 instructions per cycle, instead of two. It uses an additional third slot in parallel with the 2 other pipelines (each inside one glob) to process the control stack, the jumps, the calls, the returns, and other instructions of the sort.

I couldn't... I just couldn't resolve myself to make a choice of which pipeline had to be sacrificed or modified to handle the jumps. And the nature of the operation is so different that it makes no sense to mix the control flow logic with the computations.

Ideally, the instructions are fed in this order : Glob1-Glob2-Stack. Any "slot" can be absent, so the realignment is going to be a bit tricky, and it increases the necessary bandwidth for the memories (FSB and caches). But the ILP can reach 3 in some cases, and is better than if I mixed the stack decoder with the Glob2 decoder, as this would create slot allocation contentions.

So the overall effect is a reasonable increase in ILP, the glob decoders remain simple, a special dedicated datapath can be carved out for the stack's operation and the "control" slot only communicates with the globs through status bits.

...

The other change, as alluded before, is with the instruction buffers that cache the L1, which are now explicitly addressed (at least at a first level). Most jumps work with a prefetch instruction that targets one of the lines, then a "switch" instruction that selects one of the lines for execution (and may stall if absent yet).

I have allocated 3 bits for the line ID. That makes 8 lines for prefetching upcoming instructions for a branch target. More lines (4?) are also dedicated-linked to the control stack.

Another line is the PC, which is actually implemented as a pair of line for double-buffering.

A direct Jump instruction acts as a direct prefetch to the double buffer, but it will stall the pipelines.

The targets for function calls, loops, switch/case, IF, and others are easy to statically schedule a few cycles in advance most of the time, leading to an efficient parallelism of all the units. Then a variety of branch instructions will conditionally select a different eBTB line ID, which gets virtually copied into Line #0.

So the eBTB has 2+8+4 lines working as L0 with specific ties to other units. More are possible using indexed/relative addressing of the eBTB.

This scheme mimics what is already happening with the data accesses : the core is decoupled from the memory system and communicates through registers. Instead, here, there is no register even though the lines virtually count as 8 address registers. But you can't read them back or alter them.

- A given target line can only be set with a prefetch instruction.

- A target line's address can be saved on the control stack (SPILL) and restored (UNSPILL), under program's control.

- Target lines are invalidated/hidden/forbidden across IPC/IPE/IPR because the change of the module makes the address irrelevant. However the addresses remain in cache and the stack keeps some extra metadata to keep the return smooth.

So the Y32 behaves as a 3-pipeline core, with one register set per pipeline, where the 2 short data pipelines (read-decode / ALU / writeback) can communicate with each other and memory, but the control/jump pipeline can't readback or move outside of its unit, to preserve safety/security/speed. The only way to steer execution of the control unit is:

- with explicit instructions containing immediate data,

- or by reading flags and status bits from the other pipelines.

The recent reduction of the PC width (now 24 bits) changes a lot of things compared to a classical/canonical RISC CPU and this enables certains instructions and combinations, while also reducing the computation's critical datapath.

Compared to YASEP32, the core is larger but has higher ILP and can reach higher operating frequencies due to finer pipelining, Y32 is also more capable of keeping a high throughput because it is designed to better handle cache memories from the ground up.

-

First sketch (and discussion)

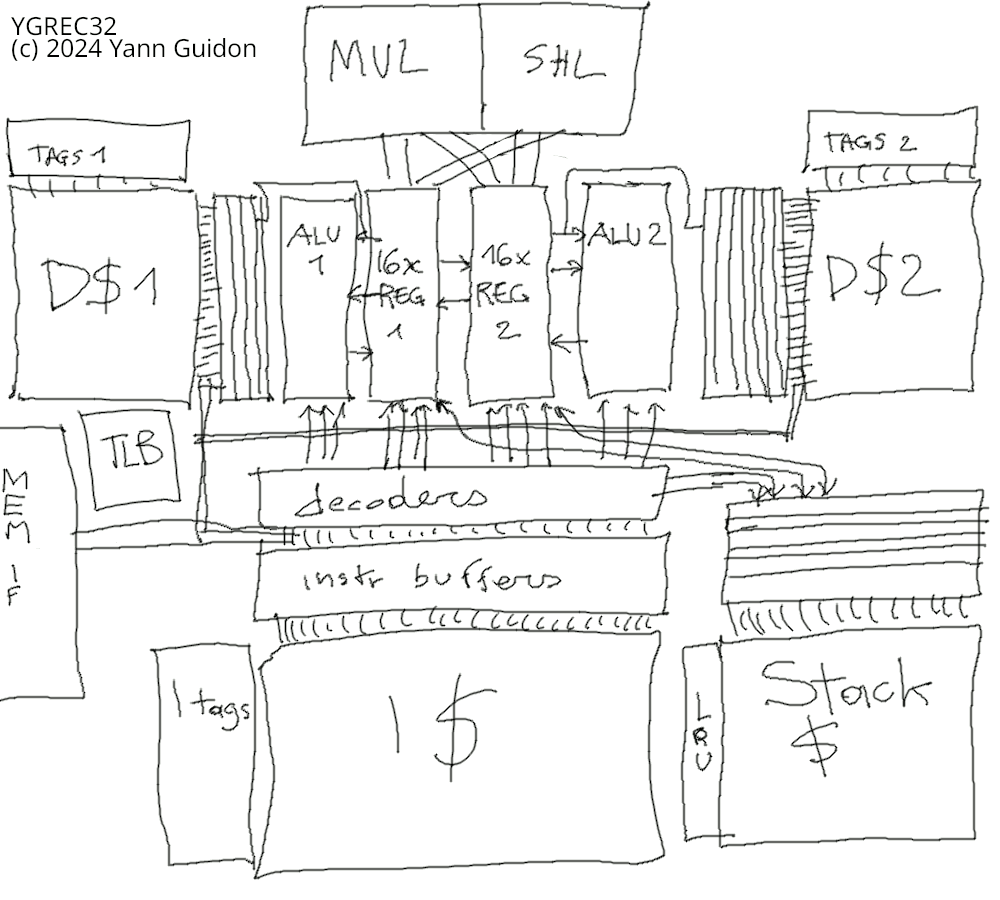

05/10/2024 at 02:34 • 0 commentsThat's about it, you have the rough layout of the YGREC32:

![]()

Sorry for the quick&dirty look, I'll take time later to redraw it better.

It's certainly more complex than the YASEP32 and it looks like a simpler but fatter F-CPU FC0.

There are a number of differentiating features, some inherited from FC0 (don't fire a winning team) and some quite recent, in particular the "globule" approach : a globule is a partial register set associated with a tightly coupled ALU and a data cache block. Copy&paste and you get 32 registers that work fast in parallel, which FC0 couldn't do.

YGREC32 has 2 globules, FC1 will have 4, but a more classic and superpipelined version can be chosen instead of the 2-way superscalar.

Scheduling and hazards are not hard to detect and manage, much simpler than FC0, with a simpler scoreboard and no crossbar. Yay! Most instructions are single-cycle, the more complex ones are either decoupled from the globules (in a separate, multi-cycle unit), or they pair the globules.

A superpipelined version with a unified register set would simplify some aspects and make others harder. Why choose a superscalar configuration, and not a single-issue superpipeline ? In 2-way configuration, a sort of per-pipeline FIFO can decouple execution (for a few cycles) so one globule could continue working while another is held idle by a cache fault (as long as data dependencies are respected). This is much less intrusive to implement than SMT though the advantages are moderate as well. But hey, it's a low hanging fruit so why waste it? And doing this with a single-issue pipeline would almost turn into OOO.

Each globule has a 3R1W register set that is small, fast and coupled to the ALU. More complex operations with more than 1 write operation per cycle (such as barrel shifting, multiplies and divisions) are implemented by coupling both globules.

2 read ports of each register set go directly to the ALU and the memory buffers (L0) that cache the respective data cache blocks. The 3rd read port is used for exchange between the twin globules, and/or accessing the stack.

The pipeline is meant to be short so no branch predictor is needed. Meanwhile, the hardware stack also works as a branch target buffer that locks the instruction cache, so the return addresses or loopback addresses (for example) are immediately available, no guessing or training.

I didn't draw it correctly but the tag fields of the instruction cache and data caches are directly linked to the stack unit, to lock certain lines that are expected to be accessed again soon.The native data format is int32. FP32 could be added later. Byte and half-word access is handled by a dedicated/duplicated I/E unit (insert-extract, not shown).

Aliasing of addresses between the 2 globules should be resolved at the cache line level and checking every write to an address register against other address registers of the other globule. I consider adding a 3rd data cache block with one port for each globule to reduce aliasing costs, for example to handle the data stack.

-o-O-0-O-o- I posted the announcement on Facebook:

Yes I haven't finished all the detailed design of Y8 but what's left is only technical implementation, not logical/conceptual design: I can already write ASM programs for Y8!

OTOH the real goal is to define and design a 64-bit 64-register superscalar processor in the spirit of F-CPU. But that's so ambitious...

F-CPU development switched to a different generation called FC1 which uses some of the techniques developed in the context of Y8 (which is a totally scaled down processor barely suitable for running Snake). Between the two, a huuuge architectural gap. But I need to define many high-level aspects of FC1.

So there, behold a bastardised FC1 with only 32 bits per registers, only 2 globules and 32 registers, but without all the bells and whistles that F-CPU has promised for more than a quarter of century!

Duane Sand shared interesting comments:

It looks like your intent is a dual-issue in-order RISC with a shallow pipeline. Using a design that could scale up to a 4-issue VLIW. The main register file is physically split up between ALU globs, a bit like how later DEC Alphas were implemented. You split up the data cache into 3 or 7 caches that run in parallel, rather than a unified dcache with 3 or 7 r/w ports.

What happens when two or more globs address the same cache line in memory? What happens when a glob writes into a shared cache line? I think programming the globs to use totally disjoint variables and proc frames and heap objects would be challenging and not scale to general purpose computing.

The stack cache would be very complicated, if its stacked values are ever the target of indirect loads and stores. Some stack machines simplify that problem by flushing all stack regs into memory while doing proc calls.

I didn't skim the FC1 notes enough to understand the functional split here between address regs and data-val regs. That part reminds me of CDC 6600 and of Motorola 68000.

I think your goal was for one glob pipeline to remain unblocked and continue computing ahead, when another glob pauses on an innermost-cache miss. How does the ifetch path support that and do the steering? Does the programmer and compiler see all this as a plain sequential processor?

In the FC1 notes, I totally didn't get how the machine helps your goal of capability-based secure computing.

This design reminds me of the Rational R1000 cpu which had two globs. One for executing the program's data calcs and branches. And the second glob for concurrently executing implicit datatype checks. Both globs were driven by a single wide microcode engine. Only the microcoders had to deal with the 2-glob physical nature of the machine.And here are my replies:

Thanks for your thoroughness 😃

The goal of splitting the data caches is many-fold, in particular increasing the number and speed of the individual blocs, since a larger bloc is slower and adding ports makes it worse. This also reduces thrashing (though I'm also working on a LRU system that also provides MRU policy). But also I want to seriously increase cache-reg bandwidth. This last part is partly provided by the L0 lines/buffers between the L1 blocs and the respective "globs".

Aliasing is an old inherent problem, particularly for FC1. the problem is less severe for Y32 there but it's still present. Part of the solution comes from the very mechanic of addressing: the address is not computed during a load/store instruction because it does not exist. The address is explicitly computed and written to an address register and at most 2 results are computed for each cycle, so it's easy to compare the tags of both cache blocks. Eventually, a unified cache tag array could do the magic here.

Another aspect is that the reference for memory coherency is the L0 buffer, not the cache, and these buffers (say 4 or 8 lines per cache block) can communicate faster than when addressing the large array.

I am still examining the split and I consider having a third L1 cache that is shared between the globs for use, indeed, with the data stack frame. So each glob could have 3 memory ports, 2 for the local bloc and 1 to the shared bloc.

There is already a protected control stack that is under active design right now and will be published. Yes it's a bit complicated BUT it resides in its own addressing space, out of reach of the user application so aliasing is absolutely not a concern, and it prevents many common flaws that still make headlines today (iconv flaw, ROP gadgets, stack smashing in general...)

There are no indirect load or stores on the protected stack, or at least not how you might imagine. The stack cache "plays along" with the other stack blocs to "freeze" cache lines associated with its entries, so it acts like a "branch target buffer", among other things. The complexity comes from other parts of the ABI/design though.

---------------------- Duane Sand said: "Some stack machines simplify that problem by flushing all stack regs into memory while doing proc calls."

yg: In this case, it's different : the stack cache is dedicated to certain features only and pushes only a few registers in a very limited number of cases, because the many data cache ports available to the globs make it faster to dump there.

(extra note: Y32 is not a stack machine and no "data" is processed or resides on it)

This is not a general purpose stack and the "frame" is located in the data L1, where the normal registers can access data. However the control of execution flow never leaves the decoding/stack complex and can't be hijacked, in part with techniques like "branch target identification": you can't return to an address that is not a call instruction.

As for FC1, the online notes are still quite vague but you can see some early implementation in the YASEP. Yes there is some CDC6600ness and I am aware of the 68k. My motivation here is that like the CDC machines, memory is slow. Cache is the exception when the worst case happens. You have to load a whole DRAM row in a buffer to start executing the code or use data. So the A/D registers are a sort of window to the buffers (L0). This is not very relevant for old/slower designs but the faster CPU internally split a load or store in two microops anyway. Here, llike the CDC6600 I give an opportunity to statically schedule a "prefetch" before accessing the data itself when the operation takes place.

---------------------- > "I think your goal was for one glob pipeline to remain unblocked and continue computing ahead,"

Yep, I entertain this idea of decoupling the "globs", maybe with a little FIFO that splits the instruction streams after they are demuxed and deemed independent.

A depth of 4 sounds reasonable but it's not a priority, it is an opportunity of enhancement for later. It's still far from the efficiency of a pure OOO core but the price to pay for this small adaptation (8×32 bits) is small and does not change the overall processor structure. This would become a bigger headache for FC1 with its 4 parallel globs, though. But let's focus on making it work before optimising it.

But yes the decoupling keeps a strict coherent instruction flow, so the same binary can work on a single-issue superpipelined core or whatever people want to play with. The only user-visible constraint is the separate control stack (you can't pop or push to the hardware protected stack and mess with it) and the registers are tied to one glob. There is no need to recompile if you update the CPU.Capability-based computing : it's still in its infancy for now, I'm slowly working on it, maybe I'll have a definitive answer in a year 😛

Rational R1000 : you made me discover it less than a week ago so I can't say much. I can say that I have programmed the Pentium to reach 98% of its peak efficiency through extensive manual optimisations, and I collected countless notes on how to do something and not to screw the programmer. I recently found a description of the MIPS R5000 that has some similar features as well. But like the Alpha, the 2 pipelines have a dedicated way for the FP units, limiting the throughput. Y32 and FC1 are more symmetrical and can execute most arithm/mem operation anywhere, except for the jumps and rets that would ideally be in the 2nd slot (for efficiency reasons).

---------------------- Duane Sand "shallow pipeline"

yg: that's spot on!

The BTB indirectly provided by the stack helps absorb some latencies, a one-cycle bubble is considered harmless so a branch predictor is not needed.

Performance is very affected by the memory latencies so there is a strong emphasis on prefetch, hinting, caching "right", splitting address generation and data use...

The philosophy, like in the original FC0, is to squeeze as much performance with a simple and smart design without going OOOe. FC0 used "out of order completion" (a bit like CDC6600) and it was already a warm mess. It is reasonable to consider an in-order core as much easier to implement and to "get working right" faster, then only can we optimise. Y32 is a step in this direction, not the destination---------------------- For a two-issue, two-ALU machine, I think it would be adequate to support just one write-to-dcache operation per cycle. And maybe just one read-or-write to data cache, per cycle. The 2 or more globs do not have to be functionally identical.

Your stack cache is exposing and leveraging the hidden hardware call/return stack that is implemented by branch predictor boxes in all high-perf cpu cores. Nice! Besides holding frame pointers and return branch addresses securely, it could hold the unexecuted portions of code cache lines of recent callers. Maybe giving zero-time returns.

Some secure machines use tag bits on every individual memory word to protect the critical words. Other machines segregate all those critical words into their own segments or pages, protected by segment- or page-level tag bits in the TLB. You are using protection at that segregated level, with separately addressed control frames and data frames. That could be enough. You don't need to have dedicated physical caching of that info too, in a minimal implementation. Sharing the L0 dcache structures would work, maybe augmented by a small buffer.

Most RISC machines use (reg)+immed as their primary addressing mode. This handles C stack frames well and global variables. But it adds an additional pipeline stage. AMD's forgotten AM29000 RISC arch of 1988 had reg-indirect as its only addressing mode. Just like in microengines. This required a separate add-immediate or load-immediate instruction for most loads and stores, but the pipeline was shorter. Another advantage is that the TLB conversion to physical addresses or the he tag probe could maybe be started sooner or done only once.

Controlling when DRAM rows get activated is a very hard problem now, with cpu cores cycling 350 times faster than DRAM. Controlling DRAM row actions from the instruction stream is hopeless. At best, the address registers can be hints to the cache mechanisms. With some kind of simple predictor monitoring successive DRAM cache line requests coming from the outermost cache. Or let the programmer hint this via I/O operations, for raster ops or float pt vectors.

yg:

only one port for cache, shared by the globs ? no way.

This plagued the Pentium for example. There is no benefit to limit the ports, unless you are severely constrained by the silicon area.

Think of saving/restoring the whole register set without a register window...Furthermore Y32 is not a load-store machine, but more like the CDC660.

except that the CDC reserved some registers for writes and other for reads.Y32 has relatively few registers, maybe 16 or 20 GPR, the rest are address and data registers, but they map directly to L0 and they can act as a "register window" if you modify the associated address register.

This is consistent with the purpose of the machine which is not destined for heavy duty computations.There is also a high chance that Y1 would be implemented in a silicon process that is not well exploited, where the bulk of the cache ram array is relatively slow.

The Y32 compromises the GPR space, where others (MIPS, ARM) will cache data from RAM, with a direct window to memory. Change the address of the window, tadah you have your data immediately as a register (unless you cast it or change the format, then the GPR must cache the result).Load/store bandwidth is a critical resource. And with FC1 it will be even more critical because I intend to do even crazier (but the method is not yet well formed enough to give authoritaive replies).

The register-mapped memory practically decouples the core(s) from the memory and can be designed less tightly.

..........

> "The 2 or more globs do not have to be functionally identical."

Of course it's not a hard requirement and there will be slight asymmetries so a pair of consecutive instructions will be able to perform 4R2W operations for example, with each half doing some of the work.However in practice, and for the sake of the compiler writers' sanity, orthogonality and symmetries are very, very much desired. Already the asymmetry due to the split caches creates a memory allocation constraint that is not usual and I don't want to increase the burden.

The principle of the globules is that they are identical, small, simple, tailored for speed, and copy-pastable as required. Each does the minimum, more complex operations are shared across the cluster keep the globules as fast as possible. And of course memory access is vital.

One globule would be able to compute the next address while a data item is also read from the data register. Since the starting address comes from a valid register, the new address with a small displacement only needs to be checked if it exceeds a page boundary, relieving the TLB.

.......

> "Your stack cache is exposing and leveraging the hidden hardware call/return stack that is implemented by branch predictor boxes in all high-perf cpu cores. Nice! Besides holding frame pointers and return branch addresses securely, it could hold the unexecuted portions of code cache lines of recent callers. Maybe giving zero-time returns."

That's exactly the point.

maybe not zero cycle, but significantly close to it.

Behind the curtain it's a bit more messy but I want to keep latency as low as possible, which is compatible with the "minimalist" theme of the group 🙂Branch predictions are hard. A Pentium-like branch predictor is nice but uses real estate, has some quirks, requires training... and the same effort (in penalties) is mostly the same for training and untraining.

I try to leverage other sources of context from the program's structure and the stack is perfect for this as it handles:

* if/switch

* loops

* call/ret

* try/throw

* syscalls

(ideally)Handling the first 3 items is already a significant gain, and I keep the usual conditional branch instructions for the cases where the "structured" version does not work but there will be a penalty.

> " Controlling DRAM row actions from the instruction stream is hopeless. "

yes, but it depends on the application and target.

Initially, the YASEP would run at maybe 10 or 100MHz and steer a SDRAM chip directly. It's a perfect match in this case. That's all that was required of that microcontroller.

In the extreme mismatch cases that you mention, latencies are absorbed by huge renaming files, hundreds of instructions remain in flight... and branch prediction is critical.

I'd rather use 2- or 4-way Simultaneous Multithreading, which is compatible with the Y32 since the globules have a very small number of registers. So extending each globule to 32 or 64 registers would not impede the frequency too much. It remains quite scalable. A monolithic register file would soon become too large and slow the pipeline down (this was an issue with FC0 that the blobules try to address).Realistically, at this moment, I think that I can handle a CPU that runs at about 200MHz FSB. I have matching pipelined SSRAM and the PCB design is still not too hard. 1MB or 2MB SSRAM at 250MHz can be found on eBay, the kinds of chip you can find loitering around an Alpha or R10K CPU. Maybe I can double-clock the core to 400Mhz eventually. This is compatible with a recent FPGA or a cheap fab run on 350nm, if I can't access the 180nm of GloFo or Skywater. All I have to do is make sure I have enough L2 onchip!

So for now I'm not too concerned about extreme RAM delays. There is more important for now, as I have not even defined the instruction set format.

YGREC32

because F-CPU, YASEP and YGREC8 are not enough and I want to get started with the FC1 but it's too ambitious yet.

Yann Guidon / YGDES

Yann Guidon / YGDES