-

WEEDINATOR Frontend Human-Machine Interface Explained

10/13/2018 at 08:35 • 0 comments

Rafael has come all the way over from Brazil to the Land of Dragons to visit! Progress on the web interface has been ongoing in the background, and it's great to get a guided tour from the man himself on how it works:

-

Control System: Computer or Microcontroller?

07/02/2018 at 13:27 • 0 comments

One of the debates that came out of the Liverpool Makefest 2018 was whether it would be better to use a computer, e.g. a Raspberry Pi, or a microcontroller such as an Arduino for the control system. I tried a Google search, but nowhere could I find a definitive answer.

In my mind, the Raspberry Pi, or 'RPi', is great for complex servers or for handling loads of complex data, such as AI-based object recognition, or for a complex robot with very many motors running at the same time. In contrast, the Arduino, or 'MCU', will handle simpler tasks with greater efficiency and reliability.

The RPi runs a huge operating system composed of a vast, almost indecipherable network of interdependent files, using a very large amount of precious silicon. The problem here is that computers are prone to crashing due to their sheer complexity, whilst an MCU, with only a few thousand lines of code, is at least one order of magnitude more reliable. The other question that was posed was: if the system, whatever it is, does crash, how long will it take to reboot?

As development of the machine continues, some of the tasks will be assigned to a small computer, the Nvidia TX2, which hosts an enormous graphics processor for AI-based object recognition. More critical tasks, such as navigation and detecting 'unexpected objects', will be done on MCUs. One of the major tasks is writing / finding code to get reliable communication between these devices. We might also want a simple 'watchdog' MCU to check that all the different systems are working properly. Maybe each system will constantly flash a 'heartbeat' LED (or equivalent) and the watchdog will monitor this. A small robotic arm would then move across to press the relevant reset button.
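The heartbeat idea could be sketched roughly as below. This is only an illustration, not code from the project: the `HeartbeatWatchdog` class, its method names and the timeout value are all made up for the example, and on real hardware the timestamps would come from something like Arduino's `millis()`.

```cpp
#include <stddef.h>
#include <stdint.h>

// Hypothetical heartbeat monitor (not from the WEEDINATOR repository).
// Each monitored subsystem reports a "beat"; the watchdog flags any
// subsystem that has been silent for longer than the timeout.
template <size_t N>
class HeartbeatWatchdog {
public:
    explicit HeartbeatWatchdog(uint32_t timeoutMs) : timeoutMs_(timeoutMs) {
        // Starting at 0 gives every subsystem one timeout period of grace
        // after power-up before it can be reported as stale.
        for (size_t i = 0; i < N; ++i) lastBeatMs_[i] = 0;
    }

    // Record a heartbeat from subsystem `id` at time `nowMs`.
    void beat(size_t id, uint32_t nowMs) {
        if (id < N) lastBeatMs_[id] = nowMs;
    }

    // True if subsystem `id` has been silent for longer than the timeout.
    // Unsigned subtraction keeps the result correct when the millisecond
    // counter rolls over.
    bool isStale(size_t id, uint32_t nowMs) const {
        if (id >= N) return false;
        return static_cast<uint32_t>(nowMs - lastBeatMs_[id]) > timeoutMs_;
    }

    // Index of the first stale subsystem, or -1 if all are alive.
    int firstStale(uint32_t nowMs) const {
        for (size_t i = 0; i < N; ++i) {
            if (isStale(i, nowMs)) return static_cast<int>(i);
        }
        return -1;
    }

private:
    uint32_t timeoutMs_;
    uint32_t lastBeatMs_[N];
};
```

A watchdog MCU might create `HeartbeatWatchdog<3> wd(500);`, call `wd.beat(id, millis())` whenever it sees a subsystem's LED change state, and check `wd.firstStale(millis())` in its main loop to decide which reset button needs pressing.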

-

WEEDINATOR navigating using Pixy2 line tracking camera

06/10/2018 at 13:47 • 0 comments

The WEEDINATOR uses advanced, super accurate GPS to navigate along farm tracks to the start of the beds of vegetables with an accuracy of about plus/minus 20 mm. Once on the bed, accuracy needs to be even greater - at least plus/minus 5 mm.

Here, we can use object recognition cameras such as the Pixy2 which can perform 'on chip' line recognition without taxing our lowly Arduinos etc.

This test was done in ideal, cloudy conditions and eventually the lighting would need to be 100% controlled by extending the glass fibre body of the machine over the camera's field of view and using LEDs to illuminate the rope. Other improvements include changing the rope colour to white and moving the camera slightly closer to the rope.

The Pixy2 is incredibly easy to code:

    #include <Pixy2.h>
    #include <PIDLoop.h>

    #define X_CENTER (pixy.frameWidth/2)

    // PIXY_PROCESSING, usePixy and DEBUG_PORT are not defined in this excerpt;
    // they are assumed to be defined elsewhere in the sketch.

    Pixy2 pixy;
    PIDLoop headingLoop(5000, 0, 0, false);
    int32_t panError;
    int32_t flagValue;

    ////////////////////////////////////////////////////////////////////////////
    void initPixy()
    {
      digitalWrite(PIXY_PROCESSING, HIGH);
      pixy.init();
      pixy.changeProg("line");
    }
    ////////////////////////////////////////////////////////////////////////////
    /*
     * LINE_MODE_MANUAL_SELECT_VECTOR
     *   Normally, the line tracking algorithm will choose what it thinks is
     *   the best Vector line automatically. Setting
     *   LINE_MODE_MANUAL_SELECT_VECTOR will prevent the line tracking
     *   algorithm from choosing the Vector automatically. Instead, your
     *   program will need to set the Vector by calling setVector().
     * uint8_t m_flags
     *   This variable contains various flags that might be useful.
     * uint8_t m_x0
     *   This variable contains the x location of the tail of the Vector or
     *   line. The value ranges between 0 and frameWidth (79).
     * int16_t m_angle
     *   This variable contains the angle in degrees of the line.
     */
    void pixyModule()
    {
      if (not usePixy) return;

      int8_t res;
      int left, right;
      char buf[96];

      // Get latest data from Pixy, including main vector, new intersections
      // and new barcodes.
      res = pixy.line.getMainFeatures();

      // We found the vector...
      if (res & LINE_VECTOR)
      {
        // Calculate heading error with respect to m_x1, which is the far-end
        // (head) of the vector, the part of the vector we're heading toward.
        panError = (int32_t)pixy.line.vectors->m_x1 - (int32_t)X_CENTER;
        flagValue = (int32_t)pixy.line.vectors->m_flags;
        DEBUG_PORT.print( F("Flag Value: ") );
        DEBUG_PORT.println(flagValue);

        // Can't send negative values, so add an offset.
        // A lower value makes the machine go anti-clockwise.
        panError = panError + 188;
        pixy.line.vectors->print();

        // Perform PID calcs on heading error.
        //headingLoop.update(panError);
      }
      //DEBUG_PORT.print( F("PAN POS: ") );DEBUG_PORT.println(panError);
    }  // pixyModule

-

Nvidia Jetson TX2 Installation HELL ....... AAAAAaaaaaaargh!!!

06/06/2018 at 21:38 • 0 comments

-

Upgrade to Pixy Camera

06/01/2018 at 11:30 • 0 comments

Pixy2 is out! And it does line vector recognition:

    #include <Pixy2.h>

    #define X_CENTER (pixy.frameWidth/2)

    Pixy2 pixy;
    int32_t pan;

    void setup()
    {
      Serial.begin(115200);
      Serial.print("Starting...\n");
      pixy.init();
      pixy.changeProg("line");  // switch to the line tracking program
      pixy.setLamp(1, 1);       // turn on the upper and lower lamps
    }

    void loop()
    {
      pixy.line.getAllFeatures();

      // Only read the vector if one was actually detected in this frame.
      if (pixy.line.numVectors > 0)
      {
        pan = (int32_t)pixy.line.vectors->m_x1 - (int32_t)X_CENTER;
        Serial.print("pan: ");
        Serial.println(pan);
      }
    }

Now I just need it to stop raining outside so that it can be tested on blue rope pinned down on vegetable beds.

-

Seamless Waypoint Satellite Navigation

05/20/2018 at 08:04 • 0 comments

Project milestone is reached!

-

Our Code is Easy to Understand!

05/14/2018 at 08:45 • 0 comments

When I write code myself, I end up with one long series of lines containing lots of different subsections for reading different sensors or getting data from different sources. Having somebody on the team who actually knows how to write code properly means it can be much more easily understood. What's the point of being open source if what you've created is a mess or very difficult to decipher?

The navigational hub - a Mega 2560 - has now been coded with all the main subsections split up into different headings as shown below:

Even I can now understand it! Check it out on GitHub: https://github.com/paddygoat/WEEDINATOR

-

Improved Navigation Code Tested on Machine

05/11/2018 at 10:34 • 0 comments

-

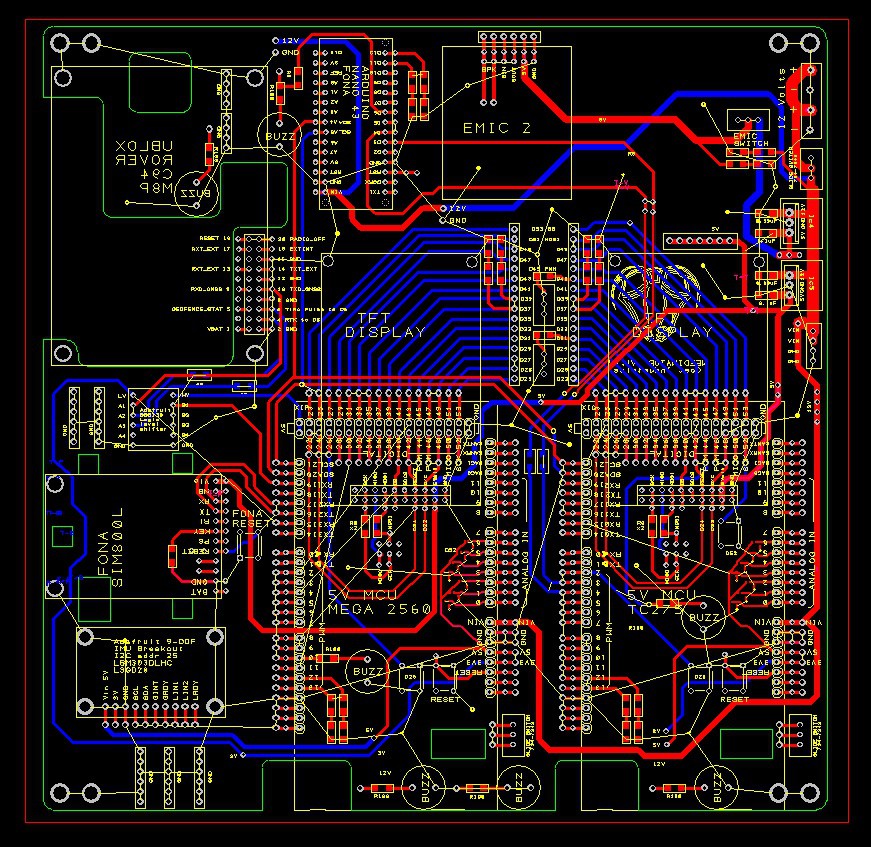

PCB Simplified

05/11/2018 at 07:37 • 0 comments

Following the data flow in the previous log, the complexity of the control system has been reduced: the Mega MCU is now in charge of all the navigation and works autonomously, just sending all its data to the TC275, which actuates the motors and displays / broadcasts the data and waypoint state. The PCB has been modified so that the FONA GPRS module is closer to the Mega for good serial comms:

Behind the scenes, SlashDev has been consolidating the code so that it works much more smoothly and data travels seamlessly between the modules. Check it out on GitHub!
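To give a flavour of what getting data reliably between modules can involve, here is a minimal sketch of wrapping a message in a small frame with a checksum so that the receiver can reject corrupted data. It is not the protocol used in the WEEDINATOR repository: the frame layout, the `0x7E` start marker, the XOR checksum and all the function names are assumptions made up for this example.

```cpp
#include <stddef.h>
#include <stdint.h>

// Hypothetical frame layout (an assumption, not the project's protocol):
//   [0] start marker, [1] payload length, [2..] payload, [last] checksum
const uint8_t FRAME_START = 0x7E;
const size_t  MAX_PAYLOAD = 16;

// XOR of all payload bytes: cheap on an 8-bit MCU and enough to catch
// a single corrupted byte.
uint8_t xorChecksum(const uint8_t *data, size_t len) {
    uint8_t sum = 0;
    for (size_t i = 0; i < len; ++i) sum ^= data[i];
    return sum;
}

// Build a frame into `out`, which must hold at least len + 3 bytes.
// Returns the number of bytes written, or 0 if the payload is too long.
size_t encodeFrame(const uint8_t *payload, size_t len, uint8_t *out) {
    if (len > MAX_PAYLOAD) return 0;
    out[0] = FRAME_START;
    out[1] = static_cast<uint8_t>(len);
    for (size_t i = 0; i < len; ++i) out[2 + i] = payload[i];
    out[2 + len] = xorChecksum(payload, len);
    return len + 3;
}

// Validate a received frame and copy its payload into `payloadOut`.
// Returns the payload length, or -1 if the frame is malformed or corrupted.
int decodeFrame(const uint8_t *frame, size_t frameLen, uint8_t *payloadOut) {
    if (frameLen < 3 || frame[0] != FRAME_START) return -1;
    size_t len = frame[1];
    if (len > MAX_PAYLOAD || frameLen != len + 3) return -1;
    if (xorChecksum(frame + 2, len) != frame[2 + len]) return -1;
    for (size_t i = 0; i < len; ++i) payloadOut[i] = frame[2 + i];
    return static_cast<int>(len);
}
```

On the sending MCU the bytes from `encodeFrame()` would be written to the serial port, and the receiver would only act on data for which `decodeFrame()` returns a non-negative length. A CRC would catch more kinds of error than a plain XOR, at the cost of a little more code.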

-

We now have torque sensing!

05/09/2018 at 16:26 • 0 comments

Managed to integrate this gadget into the control system to get control of the machine via torque rather than position:

Autonomous Agri-robot Control System

Controlling autonomous robots the size of a small tractor for planting, weeding and harvesting

Capt. Flatus O'Flaherty ☠
