A friend and I were discussing a pet door that would let only certain animals out. We had a few basic ideas (RFID, magnets, etc.), but a big issue with a dumb sensor is the wrong animal sneaking out while the door is open.

Maybe a camera would work? Sounds like a good use case for machine learning. This project distinguishes between three pets.

| Cody: Corgi/Monster mix | Malloc: Nimble hunter | Strcat: Loves strings, overflows buffers |

This is my first attempt at applying machine learning to a real problem beyond coursework and MNIST/CIFAR. If you stumble on this and can recommend better ways to do things, please reach out!

Collecting Data

I hooked a webcam to a Raspberry Pi and mounted it with a view of the back door. Every minute it takes a picture and uploads it to Amazon S3.
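The capture loop can be sketched roughly as follows. This is a minimal sketch under stated assumptions, not the script that runs on the Pi: `capture` and `upload` are hypothetical callables standing in for the webcam read and the S3 upload, and the timestamped key format is made up for illustration.

```python
import time
from datetime import datetime, timezone


def make_key(now):
    """Build a sortable object key from a timestamp (the format is an assumption)."""
    return now.strftime("raw/%Y-%m-%d/%H%M%S.jpg")


def capture_loop(capture, upload, shots, interval_s=60, sleep=time.sleep):
    """Take `shots` pictures, `interval_s` seconds apart, and upload each one.

    capture() -> bytes and upload(key, data) are hypothetical callables
    supplied by the caller; nothing here talks to a real camera or to S3.
    """
    keys = []
    for i in range(shots):
        data = capture()
        key = make_key(datetime.now(timezone.utc))
        upload(key, data)
        keys.append(key)
        if i < shots - 1:
            sleep(interval_s)  # wait before the next shot
    return keys
```

Passing the camera and the uploader in as arguments keeps the timing logic testable on a machine with neither a webcam nor AWS credentials.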

I wrote some tools to quickly classify training images by hand and output a CSV.
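The labeling tools themselves are not shown here, but the CSV side might look something like this. It is a sketch only: the label names, the two-column layout, and the helper names `write_labels` and `read_labels` are all assumptions.

```python
import csv
import io

# Hypothetical label set; the real tool's labels are not shown in the post.
LABELS = ("empty", "cody", "malloc", "strcat")


def write_labels(rows, fileobj):
    """Write (filename, label) pairs as CSV with a header row."""
    writer = csv.writer(fileobj, lineterminator="\n")
    writer.writerow(["filename", "label"])
    for filename, label in rows:
        if label not in LABELS:
            raise ValueError(f"unknown label: {label!r}")
        writer.writerow([filename, label])


def read_labels(fileobj):
    """Read the CSV back into a {filename: label} dict."""
    return {row["filename"]: row["label"] for row in csv.DictReader(fileobj)}


# Round trip through an in-memory buffer instead of a real file.
buf = io.StringIO()
write_labels([("0001.jpg", "empty"), ("0002.jpg", "malloc")], buf)
labels = read_labels(io.StringIO(buf.getvalue()))
```

Rejecting unknown labels at write time catches typos while labeling, before they turn into a mystery class during training.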

The Software

The software is written with TensorFlow (with TFLearn) and scikit-learn.

I was worried about ending up with a network that always predicts an empty scene. Because the scene is usually empty, a network that always answers "empty" would still score a fairly low cost. To mitigate this, I decided to break the problem into three parts:

- Determine Night/Day

- Determine if Anything is Happening

- Determine the Specific Animal

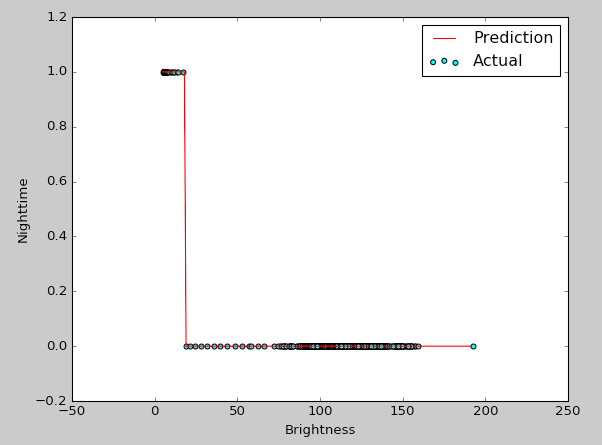
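The three parts chain together as a simple cascade, sketched below. The stage functions are hypothetical callables passed in by the caller, and handing the night/day result to the later stages is an assumption about how the pieces connect.

```python
def classify(image, is_night, has_activity, which_animal):
    """Run the three stages in order, stopping early on an empty scene.

    The stage arguments are hypothetical callables:
      is_night(image) -> bool
      has_activity(image, night) -> bool
      which_animal(image, night) -> str
    """
    night = is_night(image)
    if not has_activity(image, night):
        # Most frames end here, so the animal classifier never sees them.
        return {"night": night, "animal": None}
    return {"night": night, "animal": which_animal(image, night)}


# Stub stages, just to show the control flow.
empty_result = classify("frame-a", lambda im: False, lambda im, n: False, lambda im, n: "cody")
busy_result = classify("frame-b", lambda im: True, lambda im, n: True, lambda im, n: "malloc")
```

Because the animal classifier only ever sees frames that passed the activity check, it cannot win by always guessing "empty".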

Determining Night/Day

This uses logistic regression on the average brightness of the image.

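    import os

    import numpy as np
    from scipy import misc  # misc.imread needs SciPy < 1.2 (it was removed in 1.2)
    from sklearn import linear_model

    import constants  # project module that defines IMAGE_64_PATH

    # filenames: image file names; Y: hand-labeled answers (1 or 0), one per file
    Y = np.array(Y)

    brightness = []
    for filename in filenames:
        # load the image as grayscale and record its mean pixel value
        image = misc.imread(os.path.join(constants.IMAGE_64_PATH, filename), mode='L')
        brightness.append(image.mean())

    # scikit-learn expects a 2-D array: one row per image, one column per feature
    X = np.array(brightness).reshape(-1, 1)

    # a large C means very weak regularization
    clf = linear_model.LogisticRegression(C=1e5)
    clf.fit(X, Y)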
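With a single feature, the fitted model boils down to one weight, one bias, and a brightness threshold. The sketch below shows that with a hand-rolled gradient descent in plain Python instead of scikit-learn, so it is an illustration of the idea and not the code used in the project. The brightness values are made up, and treating 1 as day and 0 as night is an assumption.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic_1d(xs, ys, lr=1.0, epochs=5000):
    """Fit p(y=1 | x) = sigmoid(w*x + b) by batch gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(w * x + b) - y  # gradient of the log loss w.r.t. the logit
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


# Made-up mean brightness values (0-255), scaled to 0-1; 1 = day, 0 = night (assumed).
raw = [10, 20, 30, 40, 150, 180, 200, 220]
xs = [v / 255 for v in raw]
ys = [0, 0, 0, 0, 1, 1, 1, 1]

w, b = fit_logistic_1d(xs, ys)
p_dark = sigmoid(w * (25 / 255) + b)     # probability of "day" for a dark frame
p_bright = sigmoid(w * (200 / 255) + b)  # probability of "day" for a bright frame
threshold = -b / w * 255                 # brightness at which p crosses 0.5
```

The threshold lands somewhere in the gap between the darkest day frame and the brightest night frame, which is all this stage needs.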

Determining if Anything is Happening

The next step identifies if anyone is present in the image, or if it’s just an empty scene.

After a few attempts, I realized that this is a fixed-position camera looking at a pretty limited scene. The scene can change a lot between night and day, but animals will always be in the same places: table, windowsill, or floor. They won't just be floating in midair.

Given this, a fully connected neural network worked fine. It doesn't need to know what a cat looks like, just that a change at a given pixel may correspond to activity.

    import tflearn
    from tflearn.data_augmentation import ImageAugmentation
    from tflearn.data_preprocessing import ImagePreprocessing
    from tflearn.layers.core import fully_connected, input_data
    from tflearn.layers.estimator import regression

    # Normalize inputs: zero-center and scale using dataset-wide statistics
    img_prep = ImagePreprocessing()
    img_prep.add_featurewise_zero_center()
    img_prep.add_featurewise_stdnorm()

    # Augment the training data with random horizontal flips
    img_aug = ImageAugmentation()
    img_aug.add_random_flip_leftright()

    # Specify the shape of the data and attach the preprocessing steps
    network = input_data(shape=[None, 52, 64],
                         data_preprocessing=img_prep,
                         data_augmentation=img_aug)

    # The camera position is fixed and the images are fairly similar, so the
    # network can rely on pixel position. Use fully connected layers directly;
    # no convolution is needed.
    network = fully_connected(network, 2048, activation='relu')
    network = fully_connected(network, 2, activation='softmax')

    network = regression(network, optimizer='adam',
                         loss='categorical_crossentropy',
                         learning_rate=0.00003)

    model = tflearn.DNN(network, tensorboard_verbose=0)

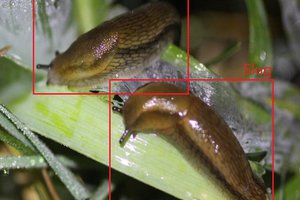
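To make concrete what the two fully connected layers compute, here is a dependency-free forward pass. It is a toy sketch: the sizes are shrunk (the real network takes a 52x64 image, which is 3,328 pixel values, into 2,048 hidden units), the weights are random and untrained, and the helper names are mine and not TFLearn's.

```python
import math
import random


def dense(x, weights, biases):
    """Fully connected layer: every output unit has its own weight per input pixel."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, biases)]


def relu(x):
    return [max(0.0, v) for v in x]


def softmax(x):
    m = max(x)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in x]
    total = sum(exps)
    return [e / total for e in exps]


def forward(pixels, params):
    hidden = relu(dense(pixels, params["w1"], params["b1"]))
    return softmax(dense(hidden, params["w2"], params["b2"]))


# Toy sizes and random, untrained weights, for illustration only.
rng = random.Random(0)
n_in, n_hidden, n_out = 4 * 4, 8, 2
params = {
    "w1": [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_hidden)],
    "b1": [0.0] * n_hidden,
    "w2": [[rng.uniform(-0.1, 0.1) for _ in range(n_hidden)] for _ in range(n_out)],
    "b2": [0.0] * n_out,
}
image = [rng.random() for _ in range(n_in)]  # a flattened 4x4 "image"
probs = forward(image, params)  # two class probabilities that sum to 1
```

Since each hidden unit has a separate weight for every pixel position, the network can learn that a bright patch on the table matters while the same patch elsewhere does not. A convolutional layer deliberately gives up that position sensitivity.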

To handle issues of lighting and shadows changing throughout the day, I created an average image, which is the average of all daytime images. I subtracted this image from all training images, like zeroing a scale before weighing something. It’s not perfect, but it brings out a little more contrast in the photos. Notice the cat on the table becomes more visible when the average (center) is subtracted.

| Original image with a cat | Average of all daytime... |
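The average-and-subtract step can be sketched in a few lines. This version uses plain nested lists so it runs without dependencies, and the frames are tiny made-up examples. Keeping the difference signed (not clipped to 0-255) is an assumption.

```python
def average_image(images):
    """Pixel-wise mean of equally sized grayscale images (lists of rows)."""
    n = len(images)
    height, width = len(images[0]), len(images[0][0])
    return [
        [sum(img[r][c] for img in images) / n for c in range(width)]
        for r in range(height)
    ]


def subtract(image, background):
    """Signed pixel-wise difference: image minus background."""
    return [
        [p - q for p, q in zip(img_row, bg_row)]
        for img_row, bg_row in zip(image, background)
    ]


# Three made-up 2x3 "daytime" frames; the last has a bright blob at row 1, column 2.
frames = [
    [[100, 100, 100], [50, 50, 50]],
    [[110, 110, 110], [60, 60, 60]],
    [[105, 105, 105], [55, 55, 205]],
]
background = average_image(frames)
diff = subtract(frames[2], background)
```

After subtraction, the static parts of the scene sit near zero and only the blob stands out, which is the "zeroing the scale" effect described above.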
