-

A chain is only as strong as ...

3 hours ago • 0 commentsFinally got some Cuda Demo code to link. Hopefully, it will also be running soon. Of course, there is also that fact that I have all of my own real hair, for the most part - that is even after much proverbial hair pulling and head bashing. Yeah, apparently a certain amount of masochism or else capacity for mental self-flagellation is pretty much a requirement for any would-be software engineer when it comes to certain things, such as project and linker settings in Visual Studio, of course! What else did you think that I might be talking about?

So, after spending a hopefully not too unreasonable amount of time searching other forums for "why won't my MFC project link against CUDA", and finding that the debug dumps are numerous, long, and generally incomprehensible - on pretty much every forum, where the advice is almost a universal "Good Luck with That!" - well you get the idea. So, I knew it was time to jump right in and swim with the other angry crocodiles, while contemplating how many other ways there might be to somehow end up mopping the floor with my own blood - hopefully, only in a virtual sense, of course.

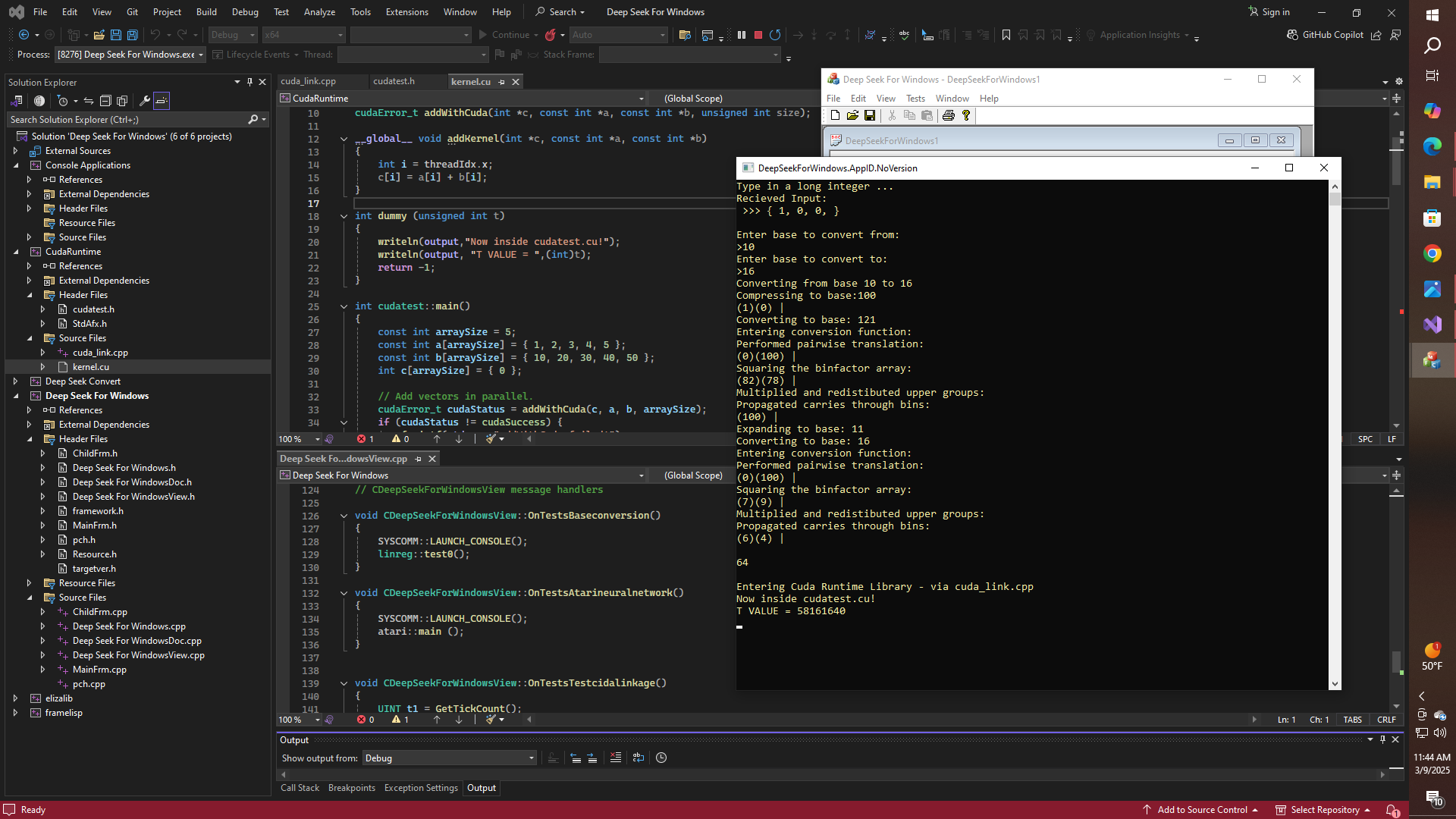

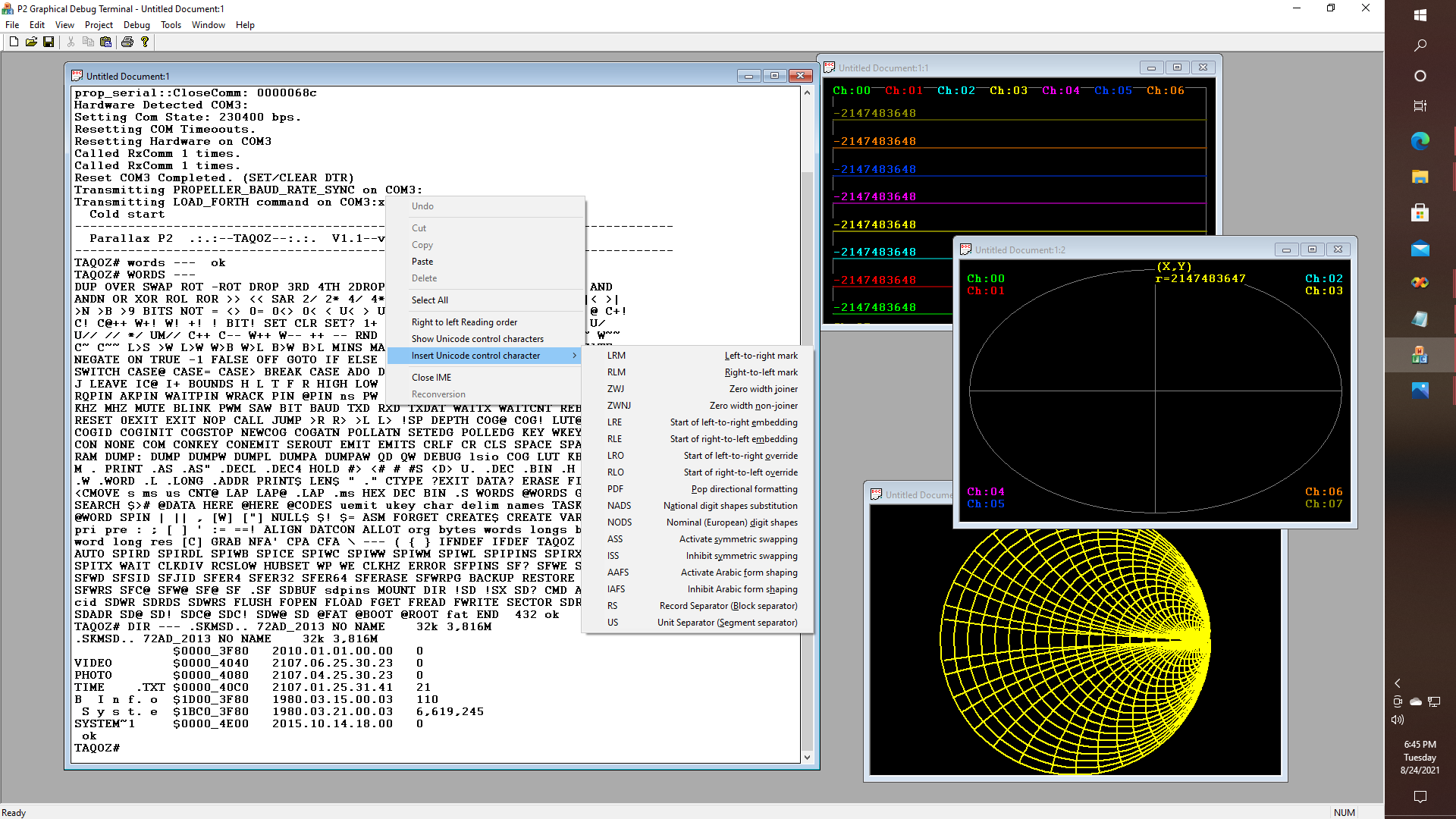

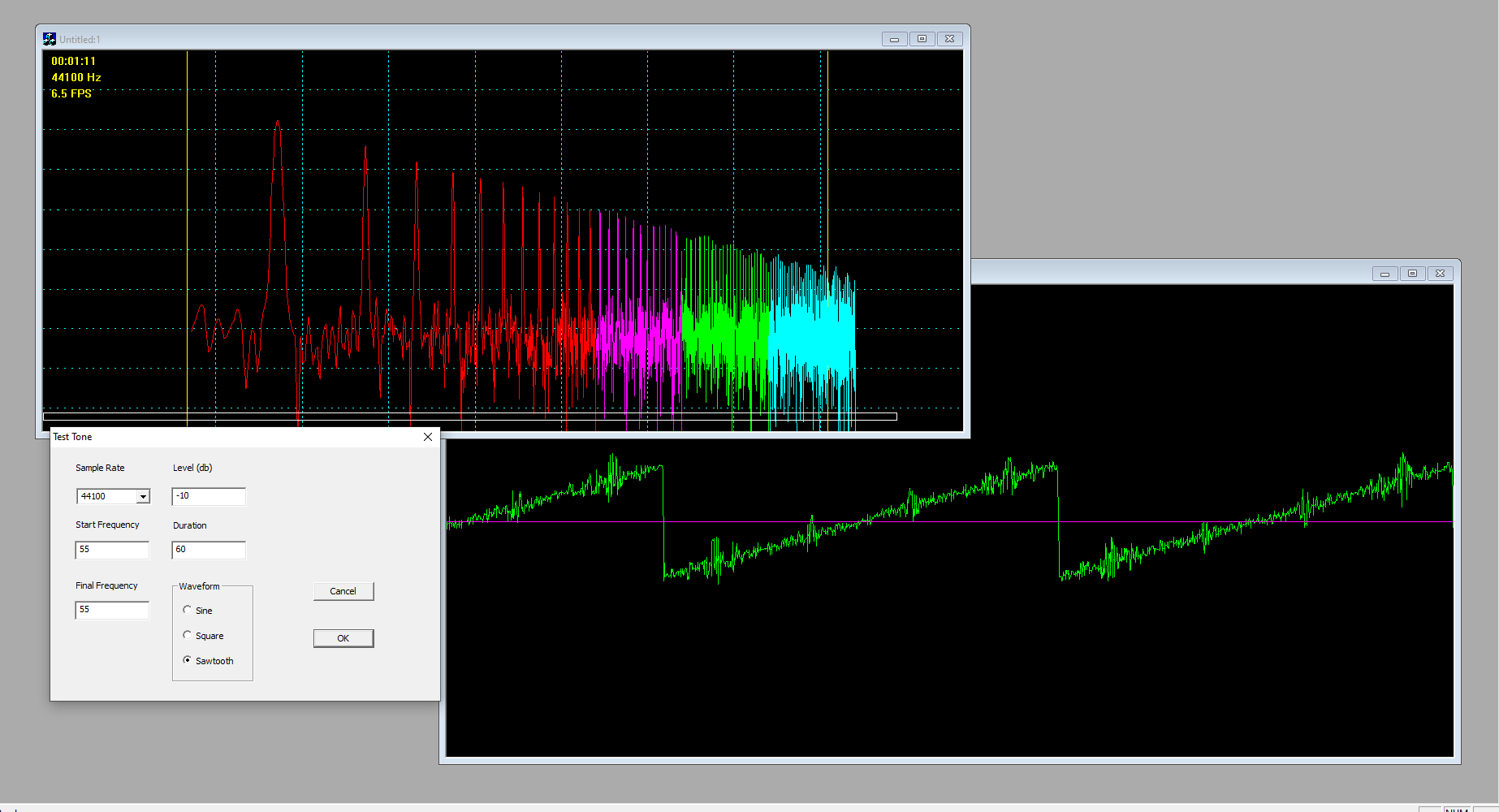

If you don't understand the need for such graphic language regarding this subject, then you haven't been doing this for very long. Speaking of graphics? Here is proof that I can make calls into legacy console applications, from MFC no less, return to MFC, and then run a test that lets me call some code in a cuda_kernel.cu file, which in turn makes callbacks into my very own favorite PASCAL-style IO routines. YES!

![]()

First, we run the MFC application, and from a Windows menu we launch a console and then run an interactive number base conversion routine which is actually in a "Console Applications" library then upon returning to MFC we make another menu-driven call into some stuff in the "Cuda Runtime" library which then makes calls back into VS2022 generated code in the Frame Lisp library - which is where the PASCAL-style IO intrinsics are located.

![]()

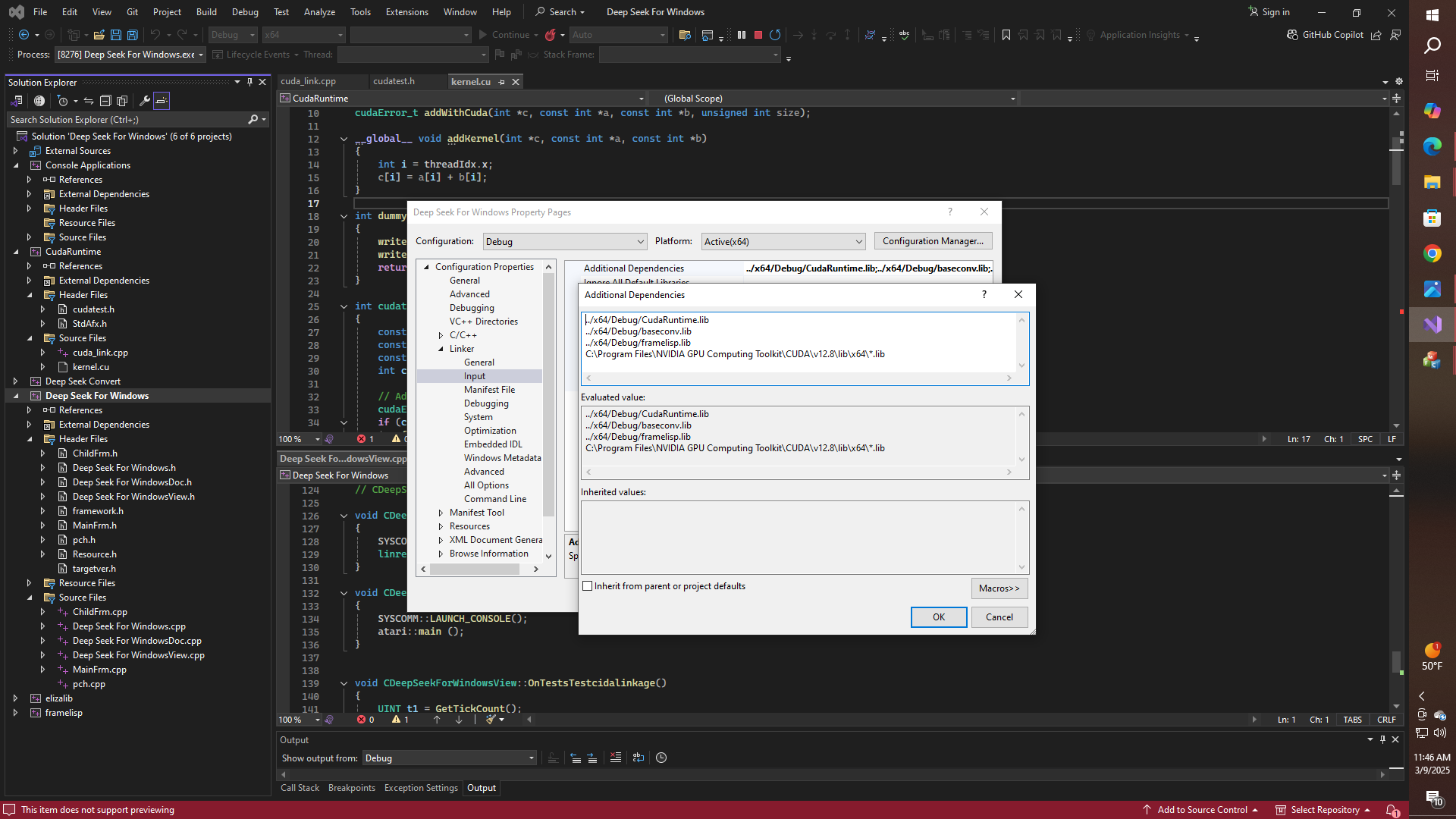

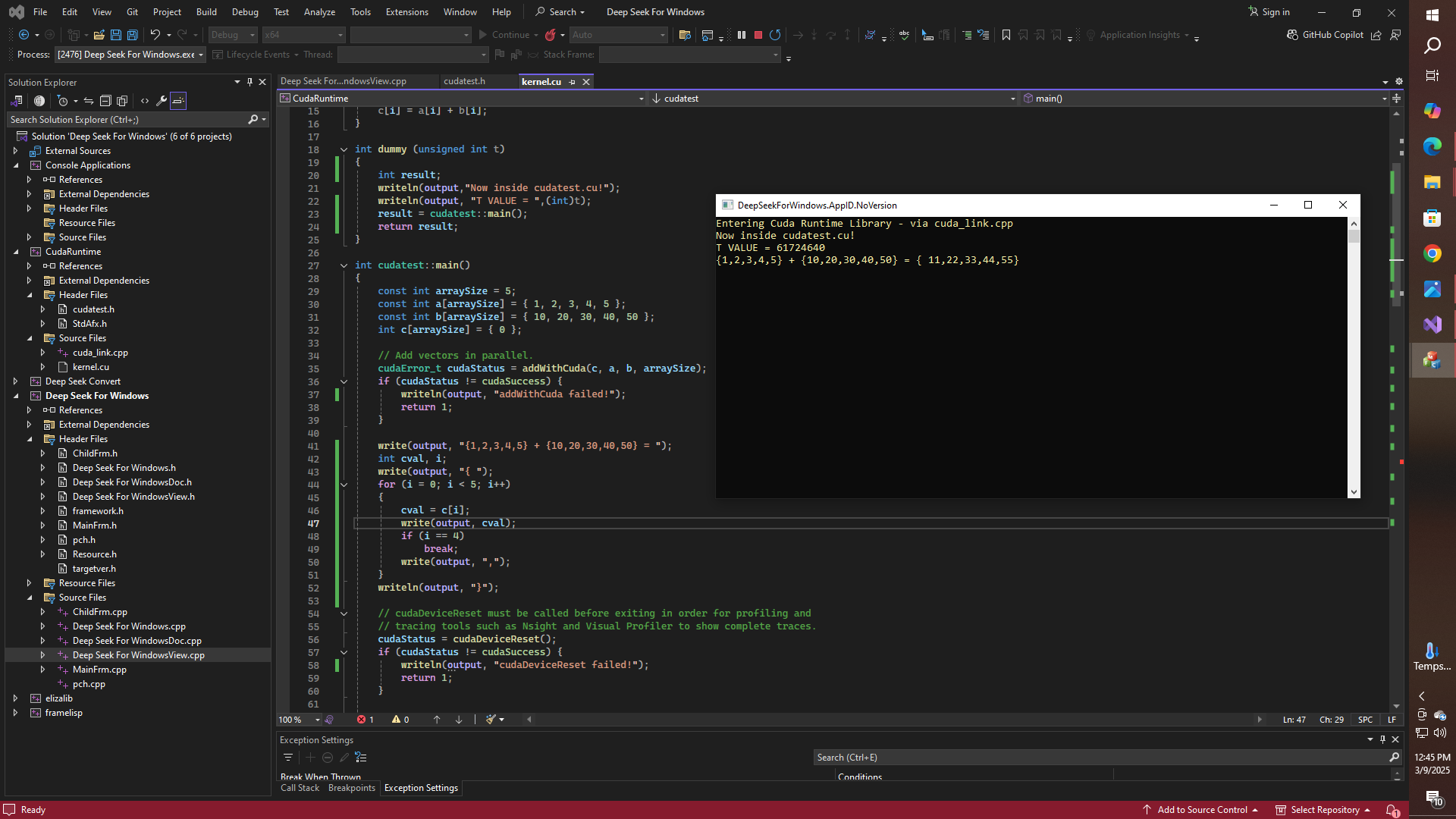

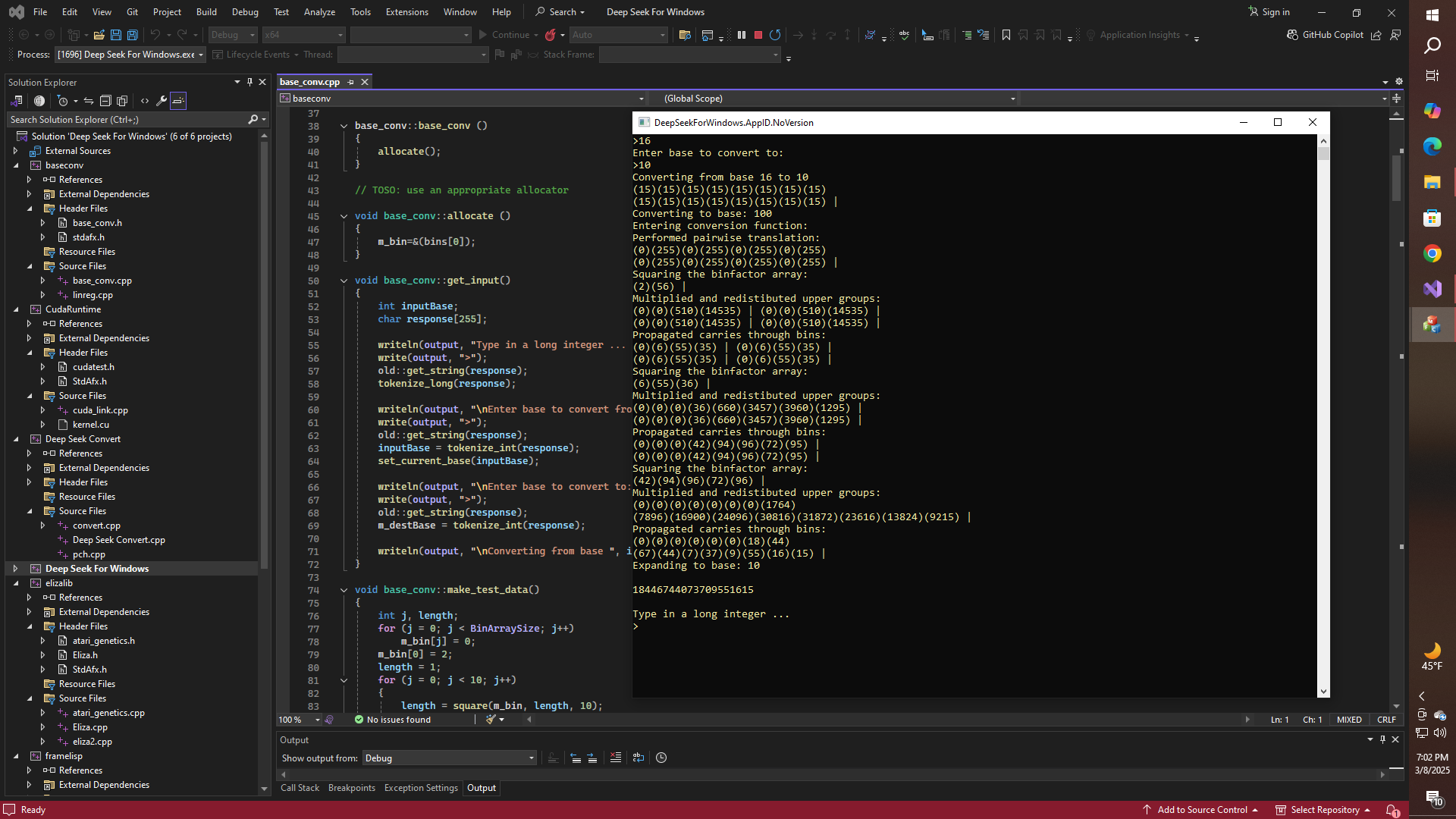

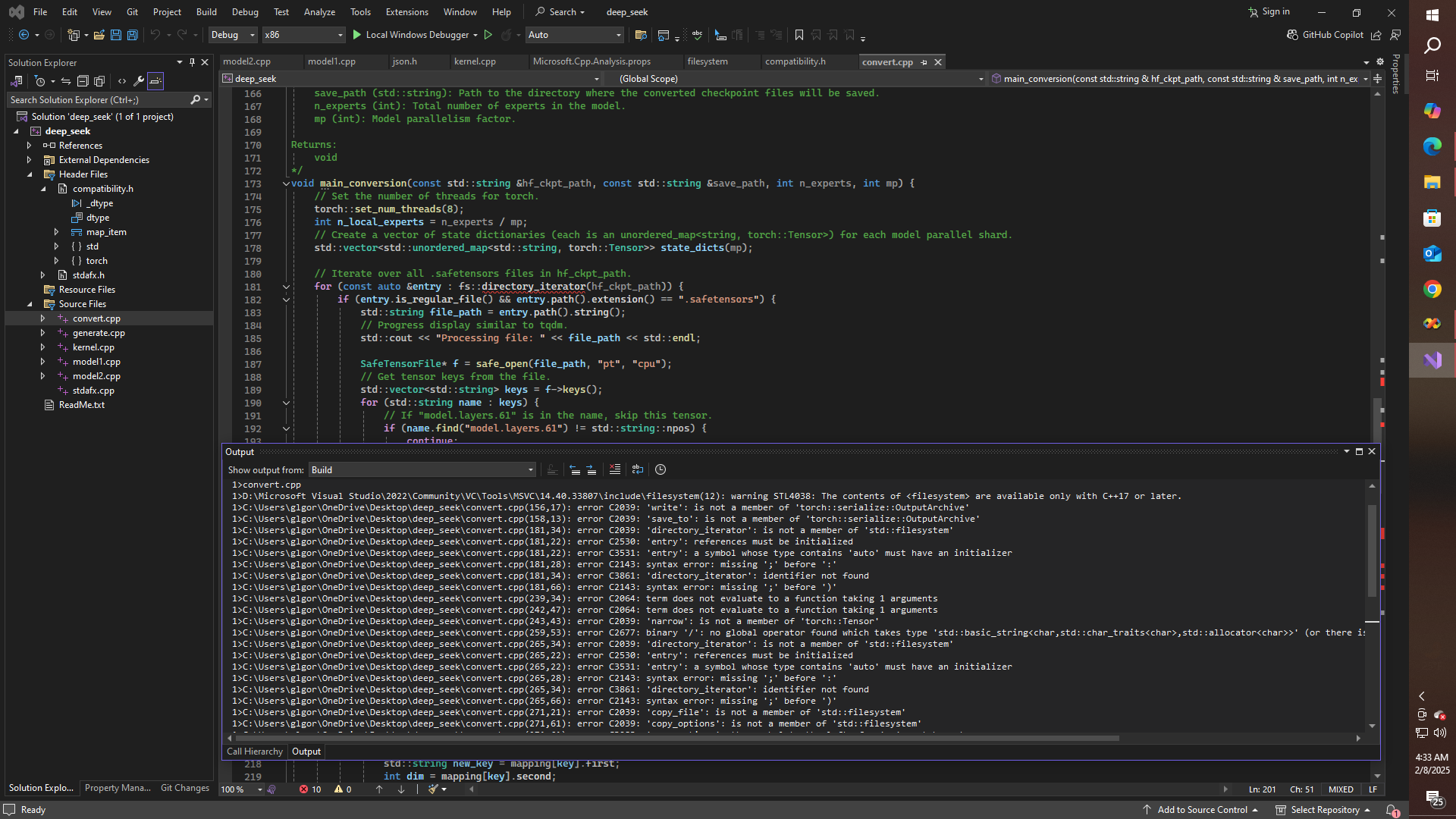

Getting CUDA compiler-generated code to link was not all that hard, once I got this mess to compile that is. Now it's time to try running some actual CUDA code of course, and then we can do some other long overdue stuff, like trying to figure out how Deep Seek works, and so on.

Of course, we can't be kept waiting. Now, can we?

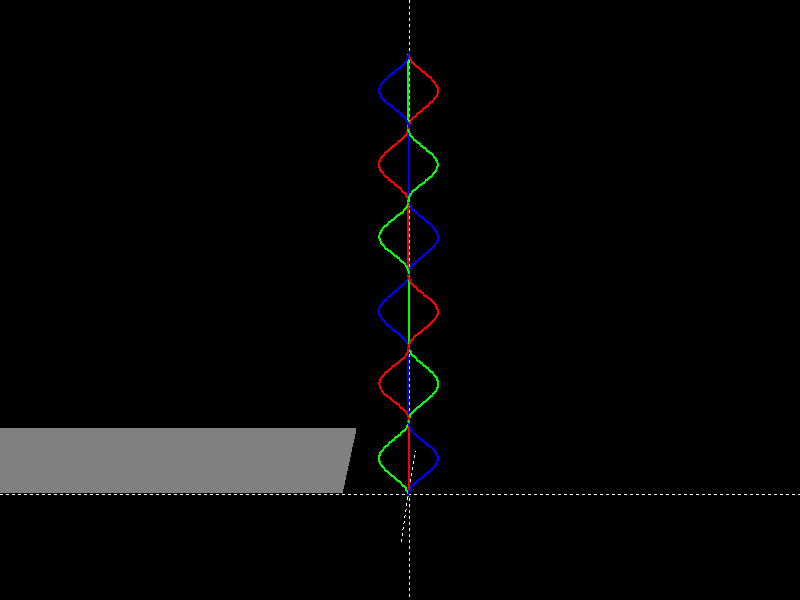

![]()

Now adding vectors in parallel CUDA style. Watch out Atari Genetics, and/or Number Base Conversion - hopefully you might be next!

-

Back into the Maze of Twisty Little Passages

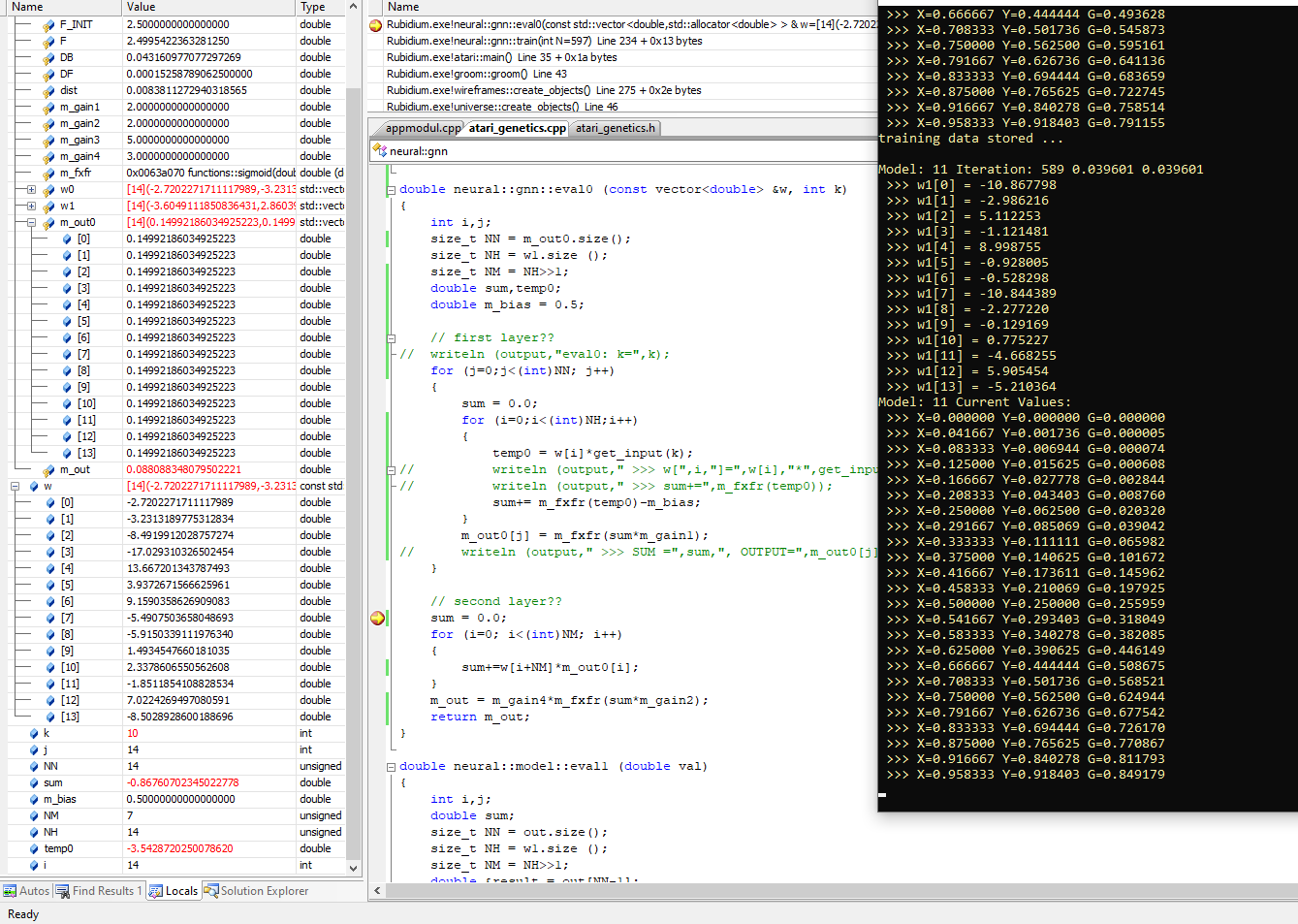

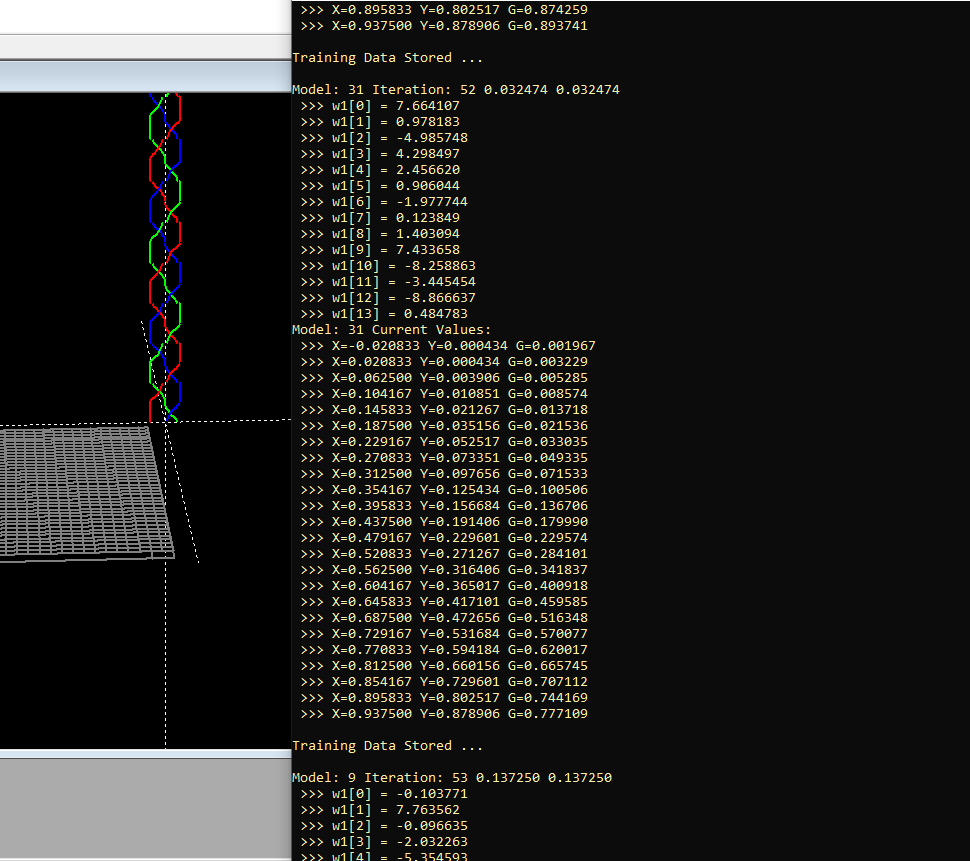

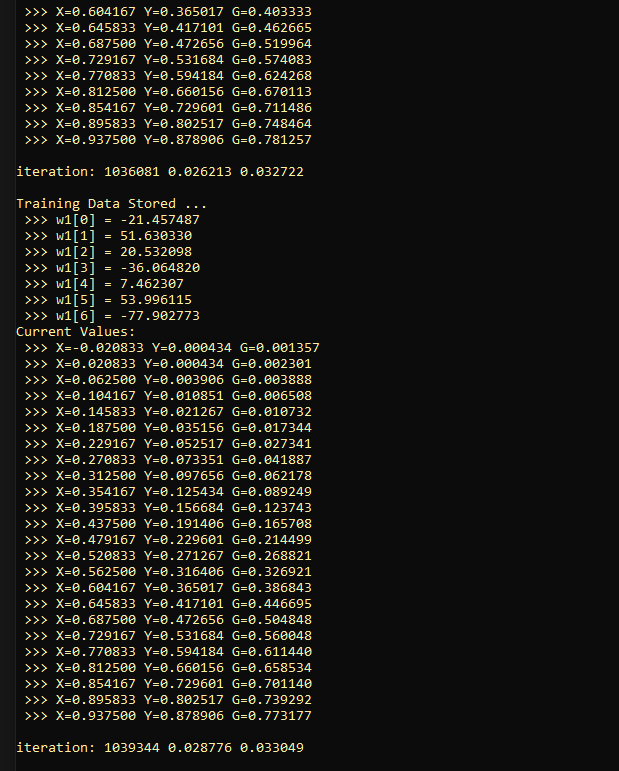

3 days ago • 0 commentsSo I am starting to figure out some of the issues with the original Atari Genetic algorithm code, and there are a bunch of issues, despite the fact that the original code does actually appear to work - sort of. For those that love to stare at debugging sessions, this will explain volumes. Maybe I could ask Deep Seek for an explanation of what is happening here?

![]()

First the good news. I completely refactored the C code that Deep Seek gave me, so as to have something that for now is in C++, and which I think I can eventually adapt to run on a Propeller, or on an Arduino, or an NVIDIA GPU, as discussed in the previous log entry, It is also quite easy to see that every element of the array m_out0 is getting filled with the same value, which I am pretty sure was also happening in the original Atari BASIC code. So clearly, some more work needs to be done on the topology of this network. Yet I have definitely had some substantial improvement in the performance of the algorithm by adding some gain and bias parameters.

Therefore, since I have a short, simple function that can easily be modified to work according to some different theory of operation, I can proceed therefore, with some of my ideas about running something like this in multiple threads, on the one hand, and/or adding some kind of evolutionary selection component, as previously discussed. In Windows of course, a simple call to a training function via AfxBeginThread is all that would be required to do the actual model training and instance evaluation, this is to say - in and of itself. Furthermore, all of that should work just fine, without needing a whole bunch of locks, semaphores, or event handles or whatever, that is to say - until we get to the reportage sections.

Obviously, we don't want multiple threads attempting to call WRITELN, at the same time. Yet if I add a critical section to the code where the reporting takes place, then this could result in blocking problems from time to time. Perhaps a simple mail slot system would be the way to go; so that a thread can create a report and then "submit it" to the IO queue as a completed text-object. That doesn't seem all that hard, given that most of what I would need to do that, already exists in my PASCAL style IO classes for WRITELN, etc., and where I could perhaps modify the WRITELN class to allow writing to a TextObject, maybe something like this:

#include "../Frame Lisp/intrinsics.h" #include "text_objects.h" ... TextObject debug_text; lisp::begin_message(debug_text); for (i=0; i<np; i++) { x = m_ds->x(i); y = m_ds->y(i); arg = double(i)/m_ds->size(); value = eval0(w1,i); writeln (debug_text," >>> X=",x," Y=",y, " G=",value); } lisp::end_message(debug_text); lisp::send_message(output,debug_text); ...That way I can put all of the memory management, as well as the synchronization objects in the LISP and/or PASCAL library text manipulation and/or IO handlers. Hopefully in such a way that there are no blocking calls that ever have to be made from within this otherwise critical loop.

And I still need to get back to reading the code to see how Deep Seek really works, as well is putting some of this to use with my braiding functions. Fortunately, there is quite a bit that is already done, at least as far as all of this other IO stuff is concerned,

Something to keep in mind therefore, is that there is already a project on Hackaday, as well as a source code repository on GitHub for a framework that I was working on, as hard as it is to believe right now, for bespoke application builder that I made in 2019 with the intent that it allow me to interact with Propeller and Arduino projects over a USB connection, among other things.

![]()

Therefore, a lot of the heavy lifting has already been done, that is - since that code base made heavy use of my Frame-Lisp library, among other things. So, yes - a lot of heavy lifting has already been done. Maybe I should go back and rewrite the messaging system from that project, in such a way as to be able to support the new APIs that I just suggested that I would like to have.

Or I could give the code to Deep Seek and see if it is up to the chore! Ha! Ha! Ha! Ha!

All, fun aside, it is easy to see how the idea of figuring out how BlueSky works on the one hand, with its AT = Authenticated Transfer Protocol, vs. whatever Deep Seek is doing, with its Mixture of Experts Model, and whatever that actually does.

![]()

Yet there is some other news of course, and that is that I finally did get back on track with trying to create a CUDA-aware version of some of the stuff that I have been working on., and that includes an update to a very weird method for doing number base conversion according to a convolutional algorithm, that is instead of the typical "schoolboy" method commonly referred to as serial division. Getting some of this stuff to just barely try to link in VS2022 took almost a whole day, yet finally, I have some progress in that direction. That means of course, that I am also on track with the idea of further modifying my PASCAL-style IO in C++ library to eventually be able to be used concurrently be usable from multiple threads, that is to say - without getting blocked or garbled, whether the code is running on NVIDIA hardware, or on an Arduino.

And that also means that there should hopefully be a Git repo coming out of this .... real soon now.

And so, it goes.

-

Genetics, Death and Taxes vs. Creation, Concurrency and Evolution

02/25/2025 at 19:53 • 0 commentsWell, for whatever it is worth, I have started work on parallelizing the genetic algorithm for approximating a mathematical function by using a neural network, and even though I haven't started yet on actually running it as a multi-threaded process, the results are nonetheless looking quite promising!

![]()

An interesting question should arise therefore; Is it better to train on a single genetic neural network, let's say for 2048 iterations, or is it better to try and run let's say 32 models in parallel, while iterating over each model, let's say 64 times. It appears that allowing multiple genetic models to compete against each other, as if according to an evolutionary metaphor is the better way to go, at least for the time being. Interestingly enough, since I have not yet started on running this on multiple threads, I think that if memory constraints permit, then this code just might be able to be back-ported to whatever legacy hardware might be of interest.

O.K., then - for now the code is starting to look like this, and soon I will most likely be putting some of this up on GitHub, just as soon as I iron out a few kinks.

int atari::main() { int t1,t2; int iter = ITERATIONS; int m,n; // first initialize the dataset vector<neural::dataset> datasets; datasets.resize (NUMBER_OF_MODELS); for (n=0;n<(int)datasets.size();n++) datasets[n].initialize (NP); // now construct the neural network vector<neural::ann> models; models.resize (NUMBER_OF_MODELS); for (n=0;n<(int)models.size();n++) { models[n].bind (&datasets[n]); models[n].init (n,iter); } t1 = GetTickCount(); bool result; models[0].report0(false); for (n=0;n<iter;n++) for (m=0;m<(int)models.size();m++) { result = models[m].train (n); if (result==true) models[m].store_solution(n); if (result==true) models[m].report(n); } t2 = GetTickCount(); writeln(output); writeln (output,"Training took ",(t2-t1)," msec."); models[0].report1(); models[0].report0(true); return 0; }It's really, not all that much more complicated than the code that Deep Seek generated. Mainly some heavy refactoring, and breaking things out into separate classes, etc. Yet, a whole bunch of other stuff comes to mind. Since it would almost be a one-liner at this point to try this on Cuda, on the one hand. Yet there is also the idea of getting under the hood of how Deep Seek works; since the idea also always comes to mind as to how one might train an actual DOOM expert or a hair expert for that matter, so as to be able to run in the Deep Seek environment.

Stay Tuned! Obviously, there should be some profound implications as to what might be accomplished next. Here are some ideas that I am thinking of right now:

- While evolving multiple models in parallel I could perhaps make some kind of "leader board" that looks like the current rank table for gainers or losers on the NASDAQ, or the current leaders in the Indy 500. Easy to do Linux style, if I make a view that looks like what you see when you do a SHOW PROCESS LIST in SQL or PS in Linux, for example.

- What if whenever a model shows an "improvement" over its previous best result, I not only store the results, but what if I also make a clone of that particular model, so that there would now be two copies of that particular improved model, evolving side by side?

- If there was a quota on the maximum number of fish that could be in the pond, so to speak, then some weaker models could be terminated - obviously, leading to a "survival of the fittest" metaphor.

- Likewise, what if I try combining multiple models, by taking two models that each have let's say 8 neurons, and combining them into a single network that might have 16 neurons for example or we could try deleting neurons from a model - so as to do some damage, and then let those mutations have a short period of time to adapt or die.

What seems surprising nonetheless, is how much that can be accomplished with so little code, since I think that what I have just described should work on any of a number of common micro-controllers, in addition to being able to be run on some the more massive parallelized and distributed super-computing environments that we usually think of when we think about AI.

-

Navigating the Twisted Convoluted Maze of Dark Passages

02/24/2025 at 01:59 • 0 commentsOr some other snooty remark. Like I said earlier. So, I have started on a C++ class based on the article that I saw about doing neural networks, with genetic algorithms, no less - on an Atari 800XL

class ann { protected: int NH,NN,NP; int NM, NG; double DB_INIT, F_INIT; double F, DB, DF; double DIST; double (*m_fptr)(double); vector<double> x0, y0; vector<double> U, wb, out; public: ann (); void init (int i); bool bind (double(*fptr)(double)); void randomize (); void train(int iter); void store_solution (int N); double test (double val); };Obviously there is a lot more of this, but what I really think that I should be doing is to actually put some of the graphics capabilities of my framework to good use. That would be kind of fun to watch in action, of course, whether on a PC, or on a classic 8-bit system. C++ is of course convenient, but maybe I could eventually try this in Apple II Pascal, or using Flex-C on the Parallax Propeller P2, just as soon as I figure out what it is that I am actually trying to do with it. Then of course there is also the task of figuring out how this relates to the land of all things LLM related. Maybe I should mention a conversation that I had with a chatbot a couple of years ago, about the Yang-Mills conjecture, since some of that might turn out to actually be relevant, finally - after all of this time.

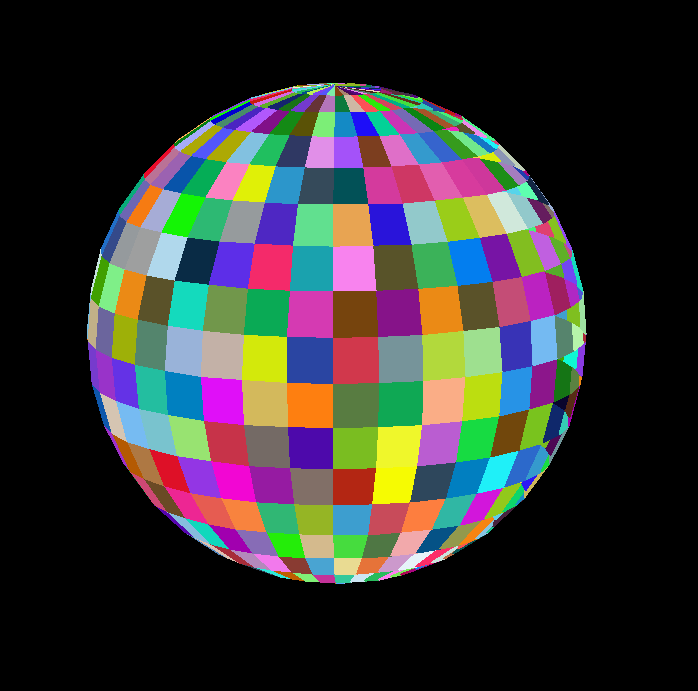

Maybe I am thinking about the idea that I had about pasting oscilloscope data on the surface of a cylinder, or warping the same data onto a sphere, and then how I realized how easy it is for that to get into either special or general relativity, so that sort of thing could both be a use case for an LLM, insofar as working out the theory, but also a use case for neural network based equation solvers.

![]()

So obviously there is more to this game than just regular tessellation, OpenGL, Cuda or whatever. Yet I am thinking about something else, of course, and that is also the problem of the icosahedron, which tessellates so much more nicely than the sphere, like if we take the icosahedron and subdivide each face into four triangles so that we get an 80 sided polytope, which can, of course, be further subdivided get a surface with, let's say 320 faces, which is pretty close the number of dimples on most golf balls. Interestingly enough.

Thus, in the meantime, I can definitely see some serious use cases for using neural networks of whatever type, for some kind of vertex nudging, for example. Yet, as suggested, it could get very interesting when we consider the possible role of LLM's as stated also, either in actual code or in developing the design concept.

Even better if Deep Seek or something similar should turn out to be useful for generating useful models that can not only be run on the desktop, but which can be trained locally.

In the meantime, however, we can have some more fun with our C++ version of the original genetic algorithm, by making some things a bit more generic. I am surprised for example that the original BASIC code simply fills an array in a loop by squaring the training values directly. Why not use the DEFINE FN idiom that most BASICS have, to define a callable function that takes an argument and then returns a value, just like we are accustomed to in a higher-level language such as C, or even Pascal or Fortran for that matter? Maybe it is just too slow to do in BASIC. Well, that doesn't mean that we can't do this is C++, however.

Let's take a look at two functions, bind and square:

bool ann::bind (double(*arg)(double)) { m_fptr=arg; if (m_fptr!=NULL) return true; else return false; } double square (double arg) { double result; result = arg*arg; return result; }Now for those who are unfamiliar with the arcane syntax of C/C++ function pointers, I have introduced a variable to the ann class, named m_fpt, which is of course, a member variable in which we can store a pointer to a function, i.e. the address of the function that we want to train on.

int atari::main() { int t1,t2; int iter = 32768; int n; ann test; t1 = GetTickCount(); test.bind (square); test.init (iter); for (n=0;n<iter;n++) { test.train (n); } t2 = GetTickCount(); writeln (output,"Training took ",(t2-t1)," msec."); return 0; }Notice that line of code where it says "test.bind(squre)"? That is how I tell the neural network the name of the function that I want to train on, and in this case, square is simply a function that takes a double as an argument, and which in turn returns a double. Likewise, I have started the process of breaking out the training loop, so that it could perhaps even be broken out into a separate thread, which could be made callable in the simple, usual way, while at the same time, "under the hood" a message might be passed instead to a waiting thread which would run each iteration, that is to say - whenever it receives a message to do so.

Even if I don't even know if the code is working the way that is it supposed to, at least I know that last time I checked function pointers to work in Flex-C on the Parallax Propeller P2, and hopefully they also work in GCC for Arduino.

Now that I think about it, of course, maybe that MoE class, I.e., the mixture of experts class from Deep Seek shouldn't look so intimidating. Or put differently, if we try to train bigger genetic models, lets say, by using Cuda, then it is going to be essential that we embrace some of the inherent parallelism in this sort of algorithm, as we shall see shortly!

Now I also need to try using some graphics capabilities, so as to be able to watch the whole process while it happens. In this case, changing the number of training points from 3 to 8. and the number of neurons to 16 enabled me to run 32768 iterations in 12250 msec using a single thread on a Pentium i7 in running compiled C++ in debug mode. I added some code to go ahead and dump the neuronal weights each time an iteration results in an improvement over the previously stored best result. Obviously, if improvements in performance are rare enough, especially as the number of iterations increases, then it should be easy to see how this algorithm could benefit from parallelization!

Then I decided to try to use a proper sigmoid function, and that actually seemed to hurt performance. So, I had to introduce some gain parameters, to deal with the overall fussy nature of the whole thing. But it is getting a bit more interesting, that is even without trying to parallelize things yet. Right now I trying to run it with 8 neurons but with 16 training points. Obviously, when trying to get a neural network to imitate a simple function like x^2, it should ideally be testable with arbitrary data. Yet clearly while it is actually quite fussy, it is also quite easy to think of lots of ways to optimize the technique, that is without completely breaking the ability to get something that can still run on a smaller system.

![]()

In any case - here is the latest mess, which is actually starting to look like something.

-

Let's adapt some Atari BASIC code that does a genetic algorithm?

02/23/2025 at 19:05 • 0 commentsElsewhere on Hackaday right now there is an article that describes another person's efforts to train a neural network to model a mathematical function, such as a simple function like y=x^2. Perhaps this approach might be useful to help with the alignment issues in my braiding algorithm. Might as well try it, right? Even though I have an idea in the works that I could just as well export a list of vector-drawn control points for the braid, and then use a Fourier transform-based low pass filter to smooth out some of the irregularities, as previously described. So, I tried giving the BASIC code from that project to Deep Seek, and I asked Deep Seek to convert it to C for me! Much to my surprise, the code ran the first time.

Here is the Hackaday Article about using Atari BASIC to implement a genetic algorithm.

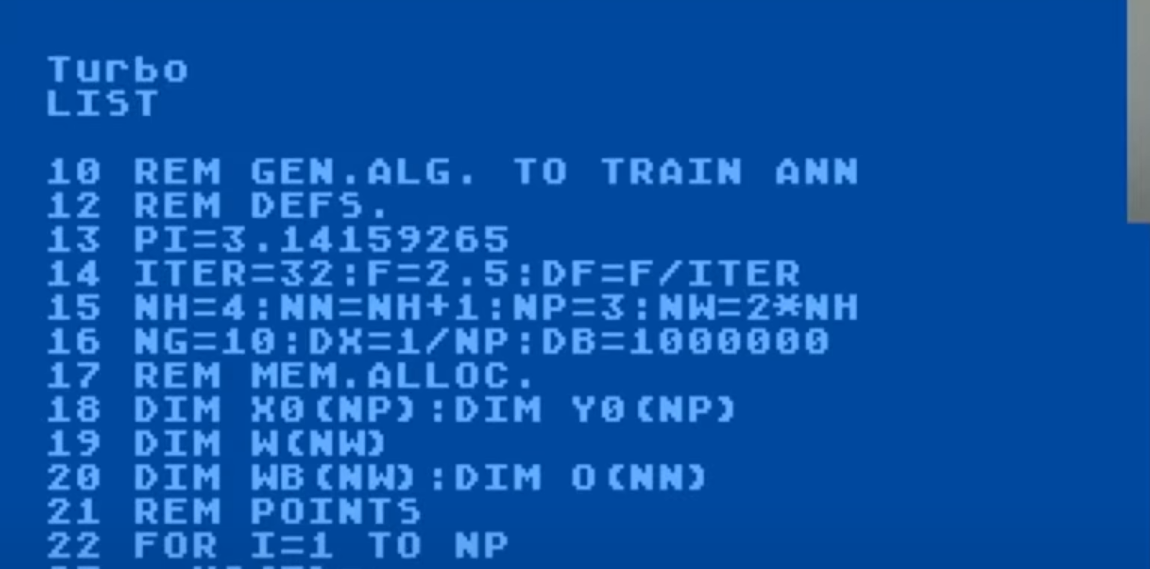

And here is a cropped screen shot from the author's YouTube video:

![]()

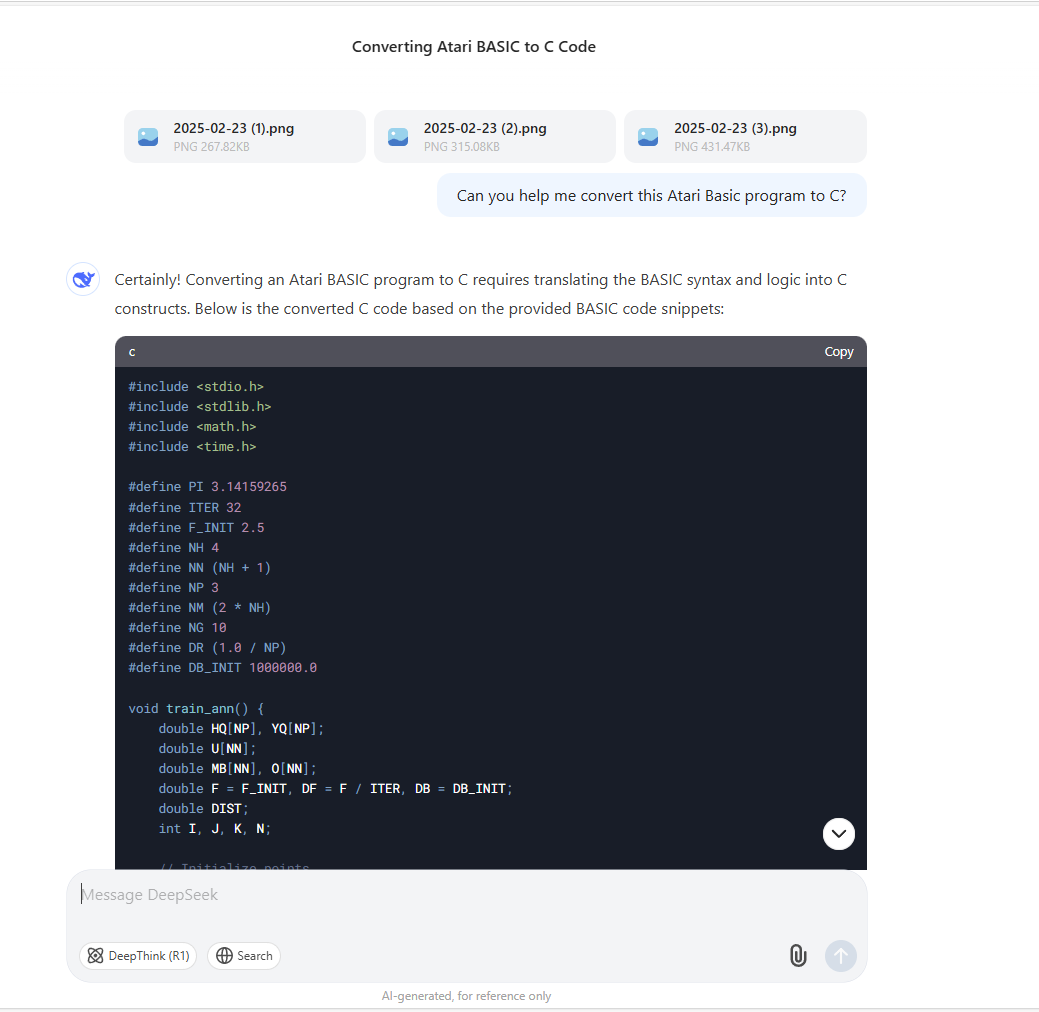

So naturally, I had to try and see if Deep Seek could handle the otherwise fairly straight forward translation problem. Obviously, using a sledgehammer to crush ants, maybe, but worth a try.

![]()

Deep Seek was even nice enough to provide an explanation as to how the code works!

1. **Initialization**: The constants and variables are defined at the beginning. Arrays are used to store the values of `HQ`, `YQ`, `U`, `MB`, and `O`.

2. **Random Initialization**: The `MB` array is initialized with random values using `srand` and `rand`.

3. **Training Loop**: The main training loop iterates `ITER` times. In each iteration, the `U` array is updated with new values based on `MB` and a random factor.

4. **Distance Calculation**: The distance `DIST` is calculated based on the difference between `YQ` and the output `O`.

5. **Update**: If the calculated distance is less than `DB`, `DB` is updated, and the `MB` array is updated with the values from `U`. The factor `F` is also decreased by `DF`.

6. **Output**: The current iteration number and distance are printed.

This C code should replicate the functionality of the original Atari BASIC program. Note that some parts of the BASIC code were incomplete or unclear, so assumptions were made to fill in the gaps.OK - very nice. We can grab screenshots of source code from YouTube, and translate the code to other languages! So now let's look at the generated code which I made some vary minor changes to in order to adapt it to my framework.

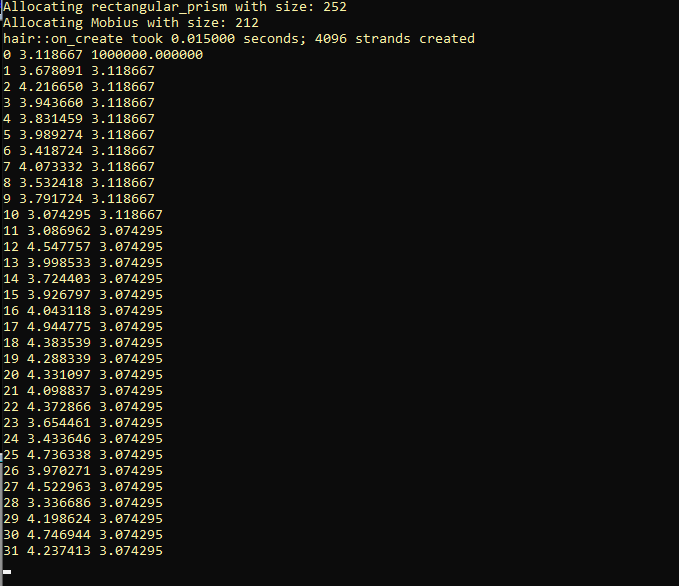

#include "stdafx.h" #include "../Frame Lisp/intrinsics.h" #include "eliza.h" #include <math.h> #include <time.h> #define PI 3.14159265 #define ITER 32 #define F_INIT 2.5 #define NH 4 #define NN (NH + 1) #define NP 3 #define NM (2 * NH) #define NG 10 #define DR (1.0 / NP) #define DB_INIT 1000000.0 void train_ann() { double HQ[NP], YQ[NP]; double U[NN]; double MB[NN], O[NN]; double F = F_INIT, DF = F / ITER, DB = DB_INIT; double DIST; int I, J, K, N; // Initialize points for (I = 0; I < NP; I++) { HQ[I] = (I - 0.5) * DR; YQ[I] = HQ[I] * HQ[I]; } // Initialize MB with random values srand(time(NULL)); for (I = 0; I < NM; I++) { MB[I] = (double)rand() / RAND_MAX; } // Training loop for (N = 0; N < ITER; N++) { DIST = 0.0; for (I = 0; I < NM; I++) { U[I] = MB[I] + F * ((double)rand() / RAND_MAX); } for (K = 0; K < NP; K++) { for (J = 0; J < NN - 1; J++) { double SUM = 0.0; for (I = 0; I < NH; I++) { SUM += U[I] * HQ[K]; } O[J] = sin(SUM * PI); } O[NN - 1] = 0.0; for (I = 0; I < NH; I++) { O[NN - 1] += U[I + NH] * O[I]; } O[NN - 1] = sin(O[NN - 1] * PI); DIST += pow(YQ[K] - O[NN - 1], 2); } DIST = sqrt(DIST / NP); writeln (output, N, " ",DIST, " ", DB); if (DB > DIST) { DB = DIST; for (I = 0; I < NH; I++) { MB[I] = U[I]; } F -= DF; } } } int atari::main() { train_ann(); return 0; }Now we simply have to try running it. So let's sneak a test case into my hair drawing code, so that it will dump some debugging info at startup.

![]()

Now I just need to go back and watch the original YouTube video and see if these results seem meaningful. Obviously, when an AI is developed that can learn whatever it can possibly learn from actually watching every video ever uploaded to YouTube, then that is going to have some profound implications, i.e., when the AI becomes able to do this sort of thing on its own. Wow! I should make a note that Visual Studio objects to this code with a stack corruption warning when the main function is trying to exit, but other than that, it does seem to do something. What now? Propeller, Arduino?

-

Transformers, Transformers - Oh, Where art Thou?

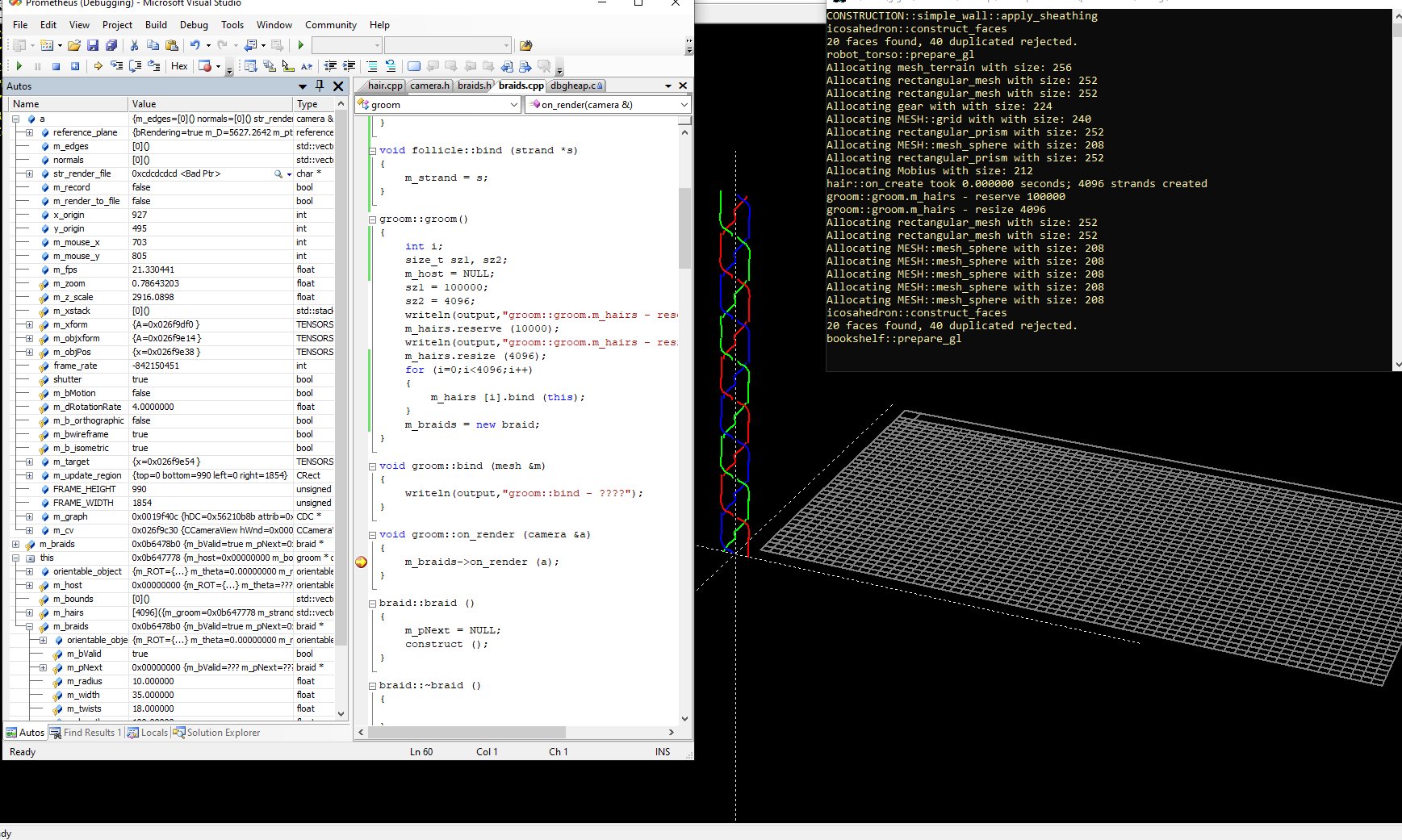

02/20/2025 at 14:38 • 0 commentsI don't know why I never thought about this until now, or maybe I did - but I just wasn't far enough along in my development efforts for it to matter. Yet, like isn't this all of a sudden - I have an idea. Something that maybe I should follow up on, because I think it is going to turn out to be very useful in transformer land. First, let's take a look at something that I am looking at right now in a debugging session.

![]()

Here you can see what some of the values are of some of the member variables associated with the camera class that I use when working with GDI-based stuff in 3D, and one of the things that this let's me do is write code that looks like this:

void twist::draw_strand1 (camera &a, COLORREF c, int i) { SETCOLOR _(a,c); MATH_TYPE theta= 0.0; MATH_TYPE w = -m_width; MATH_TYPE m_offset; MATH_TYPE step_size; if (i%2==1) m_offset = -m_width*0.25; else m_offset = m_width*0.25; _vector pos = xyz (theta,i); pos[0]+=m_offset; pos[2]+=(m_length*i*0.5); a.move_to_ex (pos); step_size = STEP_SIZE; for (;theta<=360.0;theta+=step_size) { pos = xyz (theta,i); pos[0]+=m_offset; pos[2]+=(m_length*i*0.5); a.line_to_ex (pos); } }So the most important part here, I suppose is the ease with which I can simply create objects by drawing in 3D, while doing a bunch of Open GL-like stuff like pushing and popping the necessary object transformations, while in effect generating vertex lists, as well as face and object data in some cases, which then in-turn allows for the creating of obj file like intermediate representations, which can then be played back at will, or saved to a file, or even 3-d printed in some cases. Yet for some reason, until now - even though my MFC application is Multi-Document and Mult-View capable, I have until now only made use of one camera at a time, i.e. when rendering a particular scene - so that in effect I have a CameraView class in MFC that derives from CView, and that in turn allows whichever window it is that is having its OnDraw method called by the framework to translate the 3D view into the final GDI calls for onscreen rendering. Or else, the more general conversion of primitive objects into fully GL-compatible objects is still a work in progress.

Yet what would happen if I for a sufficiently complex object, and for such a complex scene that might have doors, windows, mirrors, etc., I then create one camera for each point of view within the scene, or perhaps better still I try binding multiple instances of the camera class to some of the more complex objects themselves. Or, in a so-called "attentional" system, I try to find a meaningful way to bind multiple camera-like objects to the so-called transformers within a generative AI. Maybe this kind of recapitulates the notions of the earliest ideas of the programming language Smalltalk, that is in the sense that not only should you be able to tell an object to "draw itself" - yet obviously,, if I wanted to have multiple regions of braided hair, and each braid or bundle could take "ownership" or at least "possession" of a thread, let's say out of a thread pool, not just for concurrency reasons, but also for code readability and overall efficiency reasons, that is to say - just so long as RAM is cheap.

So, if a "groom" object might be associated with 100,000 hairs, for example, then how does sizeof(camera) affect performance if we bind the camera object to the object instead of to the view?

Testing this code snippet, I find no obvious effects on the program's behavior, other than to learn that the current size of the camera class object is just 504 bytes.

void groom::on_render (camera &a) { camera b = a; size_t sz = sizeof(camera); m_braids->on_render (b); }Now obviously, I don't want to incur the cost of copying the current rendering context every time I want to draw a polyline, but remember, there is a huge advantage to doing this, and it is not just because of parallelism. For example, if an object can do its own occlusion culling within a private rendering context, then that greatly simplifies the management of the BSP- related stuff. Yet in a transformer based AI which employs a multi-headed attentional system, this might be a critical step, i.e., by optionally binding rendering-contexts, as well as the data that is managed within then, not only to views or realms, but so also to objects, or perhaps transformers? Maybe it is that hard after all?

Naturally, I had to ask Deep Seek what it thinks of this - right!

![]()

Well, for whatever it is worth, it doesn't seem to have any insights about anything that I don't feel as if I don't already know. Yet it is pretty good at summarizing some of the content of this article, on the one hand, and maybe viewing the proposals herein in an alternate form might have some value, as in "Help me write a white paper that might seem of interest to venture capitalists", even if that isn't the question that I asked at all. Still - it's insight about AI implications seems more on target with what I was hoping to see, i.e., "In a transformer-based AI, multiple cameras could act as "attention heads" each focusing on a specific region or object. This could enhance the AI's ability to generate or manipulate 3D scenes with fine-grained control."

At least it is polite.

-

This May Be or May Knot Be Such a Good Idea?

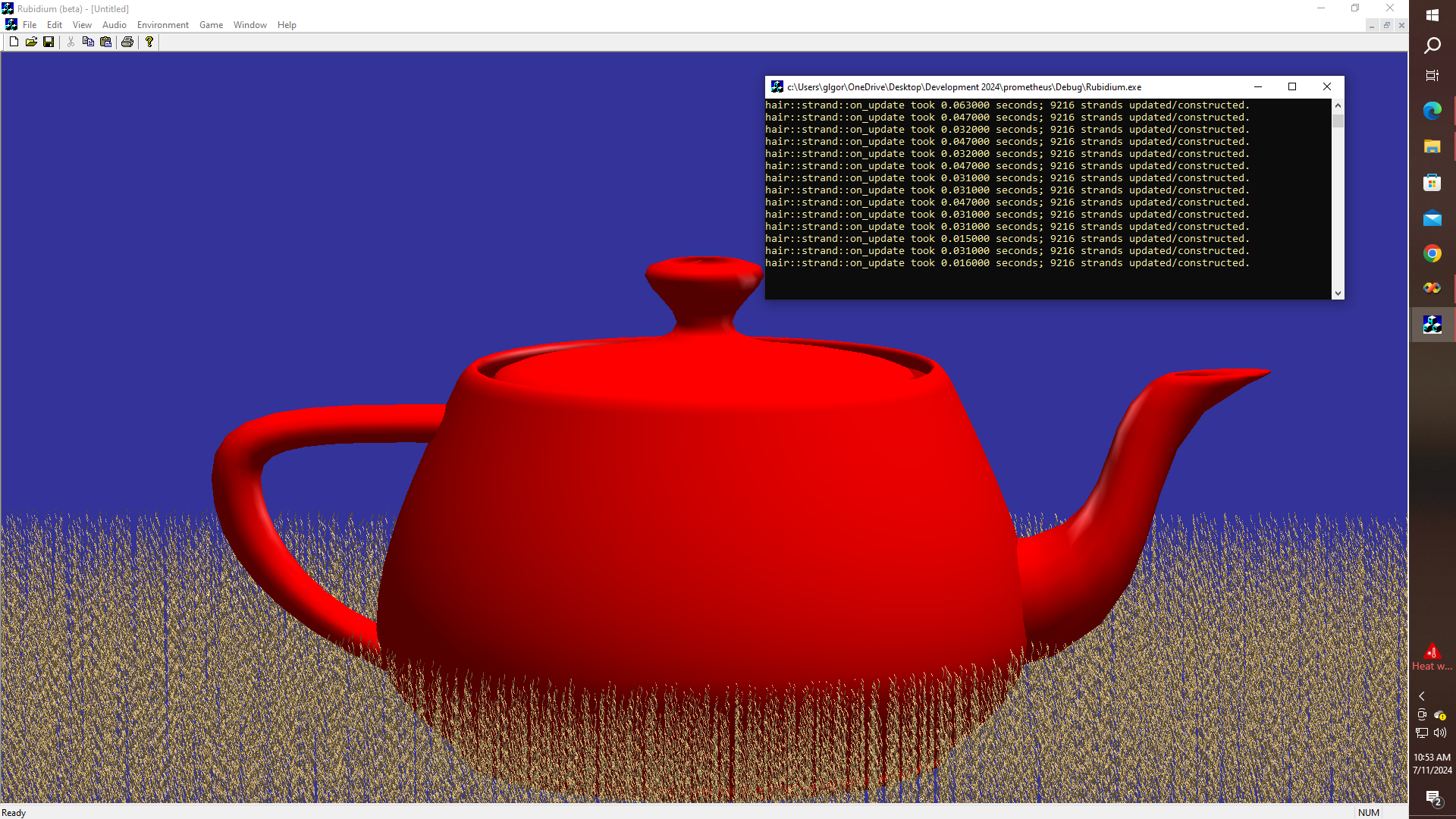

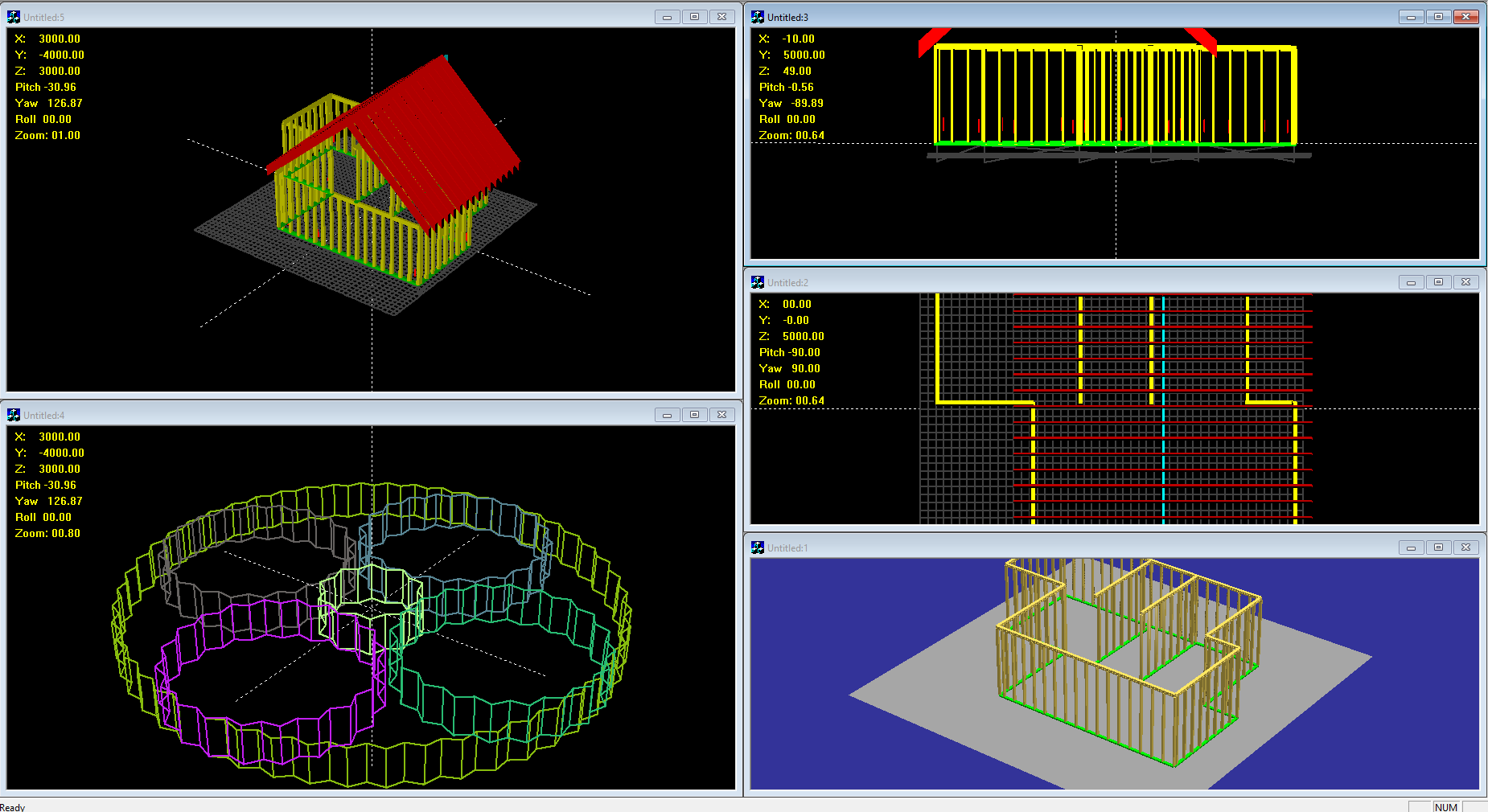

02/19/2025 at 18:02 • 0 commentsFirst. let's take a look back at something that I was working on last summer, and that is my very happy "Teapot in the Garden" Rendering.

![]()

How nice! Yes. how very nice indeed! Because I just saw something in the news the other day that NVIDIA has just announced their own Tensor Flow AI-based hair drawing routines, that supposedly allow for something like 240FPS rendering, and stuff like that, i.e., by requiring a 97% reduction in CPU/GPU utilization. O.K., now that THEY are finally getting their act together, I must contemplate therefore: can we do better? Well, for now, I am happy with my shader - even if it still mostly running on CPU + Open GL. Yet here might be another possible use for Deep Seek, maybe? I mean to say that is if I can get Deep Seek running, with or without Py-Torch in my own framework. So let's do something else, therefore, like revisiting the whole theory of braids, that is - since the shader is pretty much ready for use in production code.

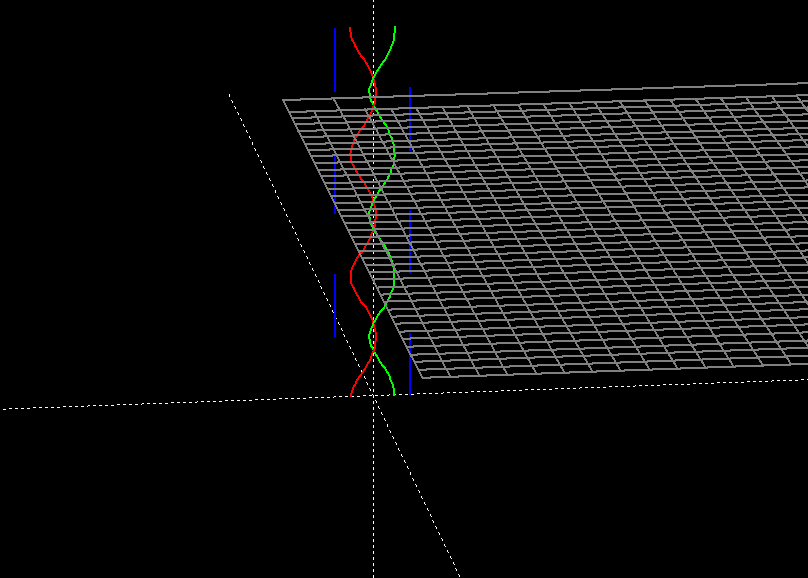

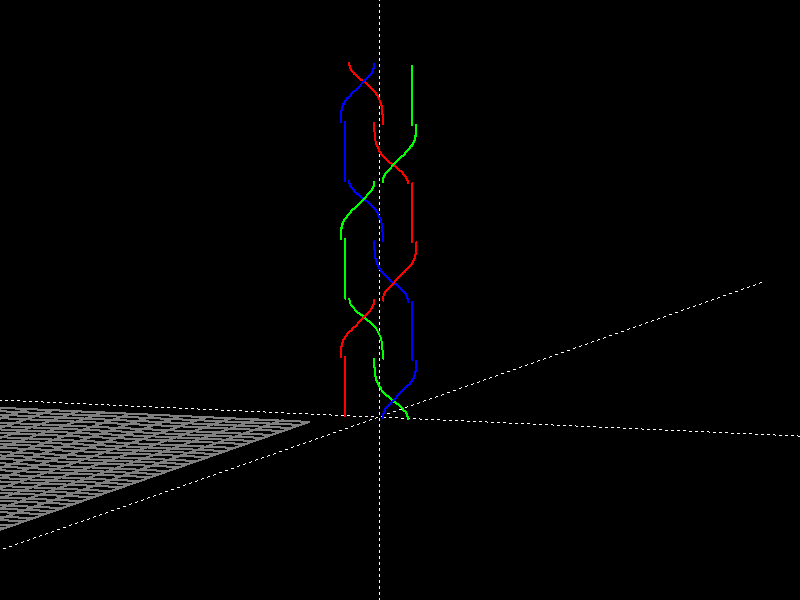

So yesterday I went on a coding binge - and started writing some code to do some simple GDI-based drawing of some braids, and for starters - I came up with a simple twist drawing routine.

![]()

After playing with the code, I finally came up with this mess - which might not be as terrible as it looks, since at least am at the point where the overall topology is mostly correct, even though there are obviously some alignment issues with how the different segments are being assembled, and this is something that I want to discuss further.

![]()

But first, let's have another view along the x-axis, i.e. so that we are looking at a projection, mostly of the YZ plane.

![]()

Now to understand what is happening here, take at look at the code for the "xyz" function:

_vector twist::xyz (MATH_TYPE l, int i) { MATH_TYPE theta,x1,y1,dz; theta = l*(TWO_PI/180.0); x1 = m_width*(-cos(theta*0.5)); if (i%2==0) y1 = m_radius*(1-cos(theta)); else y1 = -m_radius*(1-cos(theta)); dz = l*m_length*(1/360.0); _vector result (x1,y1,dz); return result; } void braid::set_colors(int i) { const COLORREF r = (int)COLOR::red; const COLORREF g = (int)COLOR::green; const COLORREF b = (int)COLOR::blue; struct ctab { COLORREF c[3]; }; ctab colors[] = { {r,g,b}, {b,g,r}, {g,b,r}, {r,b,g}, {b,r,g}, {g,r,b}, }; c1 = colors[i].c[0]; c2 = colors[i].c[1]; c3 = colors[i].c[2]; }Elsewhere, of course, I am calling the "xyz" function to find the values of some points along my control lines for my braid, while also calling another function in the same set of loops in order to decide what color to draw each segment with. The segment coloring function, is of course magic, since it relies on various permutations which I derived empirically, and put into a table lookup. Yet. for efficiency reasons, this might be a better way, I hope than trying to deduce some other magic formula that looks at the layer index and strand id and which then tries to use division and or modular arithmetic to determine the type of strand to draw, whether it goes over or under, and how to color it. So I'll live with it - for now. Yet clearly something needs to be done with that xyz function - and how it is being invoked.

Maybe I could treat the position of my control lines as complex numbers in the xy plane, and then treat the z-axis as time, and then compute the Fourier transform of the functions that define the position of each strand. Then a little low-pass filtering might yield a smoother function. With a smoother function, then - why not take the low-pass filtered data, and generate some coefficients of some low-ordered polynomials, so that the xyz function might be computable for each strand according to some overlapped piecewise cubic interpolation? Maybe this is a good place to mention that I hate Beziers!

This is only going to get more complicated when I get around to drawing bundles - instead of control lines, since then it will become necessary to take into account the collision and frictional properties of the bundle vs. bundle interactions - which right now, at least for the moment, seems to suggest that the collision mechanics might benefit computationally in terms of managing not just the topological domain aspect of each bundle, but how the collision domains interact between the associated realms - to use a more traditional vocabulary.

Perhaps transformers could be used; for example - to condition a network in such a way, as one might want to derive meshes from scalar data, via a traditional approach, such as "marching cubes", while at the same time, providing mesh vs. mesh interactions which would necessitate providing feedback - let's say - all the way back to how we might compute the FFT coefficients for our control vectors. Which seems to look a lot more like a kind of transformer-based generative adversarial network - whether it generates the meshes and vector fields directly, or whether it runs alongside and controls the algorithms, i.e., by fine-tuning the procedural mesh generation according to the necessary constraints.

-

Time to jump right in and swim with the big fish?

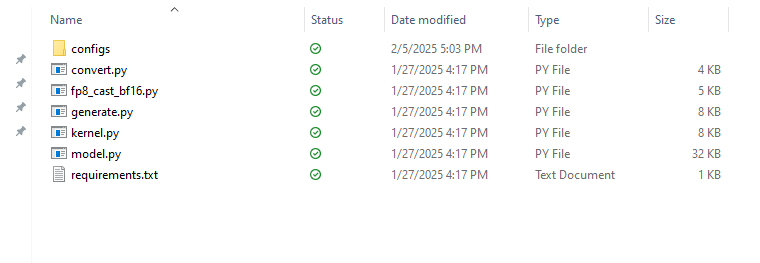

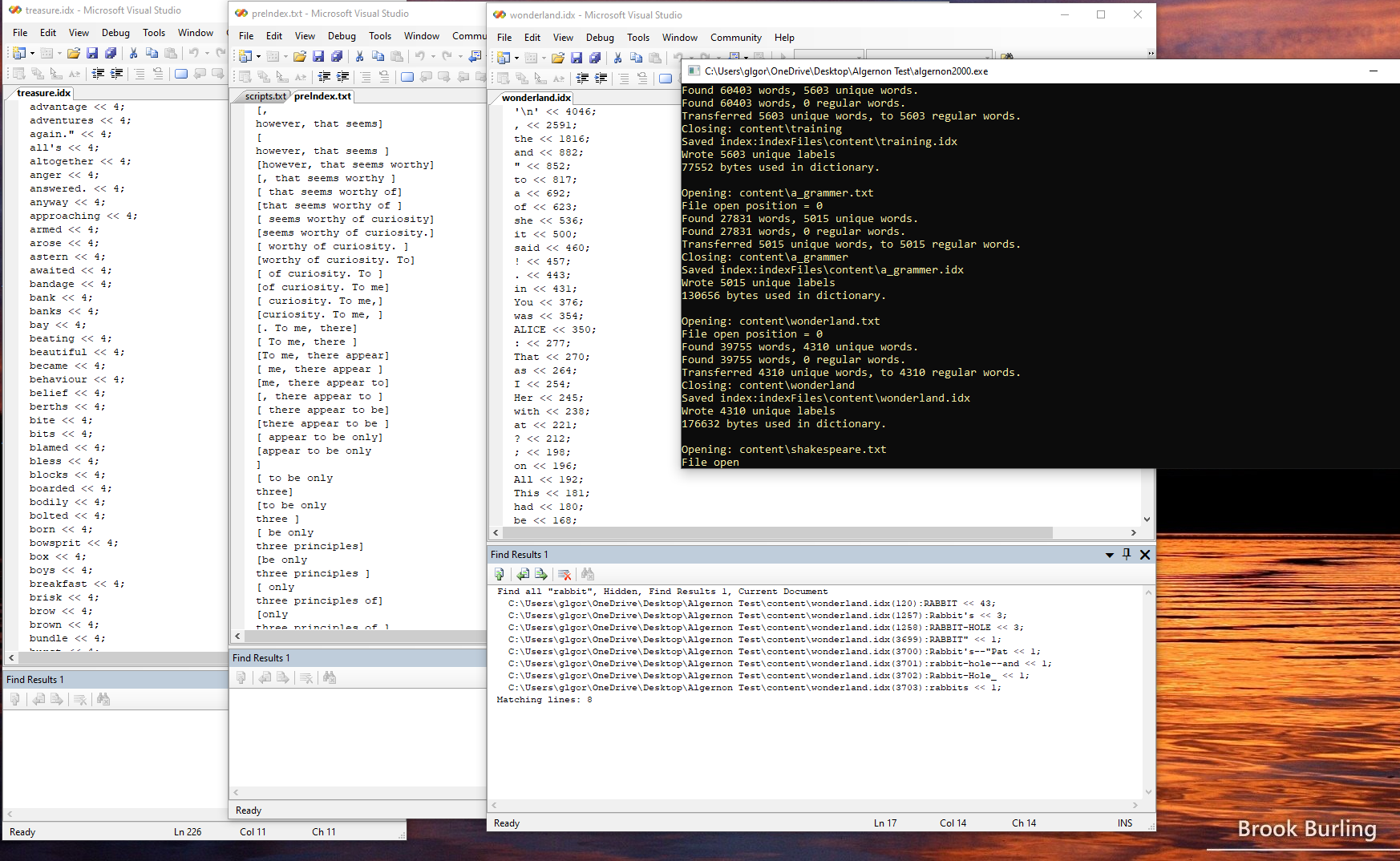

02/06/2025 at 01:42 • 0 commentsAlright, here goes. In an earlier series of projects, I was chatting with classic AI bots like ELIZA, Mega-Hal, and my own home-grown Algernon. Hopefully, I am on the right track, that is to say, if I am not missing out on something really mean in these shark-infested waters, and here is why I think that that is. Take a look at what you see here:

![]()

This is what I got as of January 27 when I downloaded the source files from GitHub for Deep-Seek. Other than some config files, it looks like all of the Python source fits quite nicely in 58K or less. Of course that doesn't include the dependencies on things like torch, and whatever else it might need. But model.py is just 805 lines. I checked. Now let's look at something different.

![]()

This is of course a screen-shot of a debugging session where I was doing things like counting the number of times that the word rabbit occurs in Alice in Wonderland, and so on. Maybe one approach to having a Mixture of Experts would require that we have some kind of framework. It is as if Porshe or Ferrari were to start giving away free engines to anyone, just for the asking, except that you have to bring your existing Porshe or Ferrari in to the dealer for installation, which would of course be "not free", unless you could convince the dealership that you have your own mechanics, and your own garage, etc., and don't really need help with all that much of anything - just give the stupid engine!

Well, in the software biz - what I am saying therefore, is that I have built my own framework, and that it is just a matter of getting to the point where I can, more or less, drop in a different engine. Assuming that I can capture and display images, tokenize text files., etc., all based on some sort of "make system" whether it is a Windows based bespoke application, like what you see here, or whether it is based on things like Bash and make under Linux, obviously. Thus, what seems to be lacking in the worlds of LLAMA as well as Deep Seek - is a content management system. Something that can handle text and graphics, like this:

![]()

Yet, we also need to be able to do stuff like handle wavelet data, when processing speech or music, or when experimenting with spectral properties of different kinds of noise for example:

![]()

Of course, if you have ever tried writing your own DOOM wad editor from scratch, you might be on the right track to creating your own AI ... which can either learn to play DOOM, or else it just might be able to create an infinite number of DOOM like worlds. Of course, we all want so much more, don't we?

Alright then, first let's take a peek at some of the source code for Deep-Seek and see for ourselves if we can figure out just what exactly it is doing, just in case we want a noise expert, or a gear expert, or something else altogether!

Are you ready - silly rabbit?

class MoE(nn.Module): """ Mixture-of-Experts (MoE) module. Attributes: dim (int): Dimensionality of input features. n_routed_experts (int): Total number of experts in the model. n_local_experts (int): Number of experts handled locally in distributed systems. n_activated_experts (int): Number of experts activated for each input. gate (nn.Module): Gating mechanism to route inputs to experts. experts (nn.ModuleList): List of expert modules. shared_experts (nn.Module): Shared experts applied to all inputs. """ def __init__(self, args: ModelArgs): """ Initializes the MoE module. Args: args (ModelArgs): Model arguments containing MoE parameters. """ super().__init__() self.dim = args.dim assert args.n_routed_experts % world_size == 0 self.n_routed_experts = args.n_routed_experts self.n_local_experts = args.n_routed_experts // world_size self.n_activated_experts = args.n_activated_experts self.experts_start_idx = rank * self.n_local_experts self.experts_end_idx = self.experts_start_idx + self.n_local_experts self.gate = Gate(args) self.experts = nn.ModuleList([Expert(args.dim, args.moe_inter_dim) if self.experts_start_idx <= i < self.experts_end_idx else None for i in range(self.n_routed_experts)]) self.shared_experts = MLP(args.dim, args.n_shared_experts * args.moe_inter_dim) def forward(self, x: torch.Tensor) -> torch.Tensor: """ Forward pass for the MoE module. Args: x (torch.Tensor): Input tensor. Returns: torch.Tensor: Output tensor after expert routing and computation. """ shape = x.size() x = x.view(-1, self.dim) weights, indices = self.gate(x) y = torch.zeros_like(x) counts = torch.bincount(indices.flatten(), minlength=self.n_routed_experts).tolist() for i in range(self.experts_start_idx, self.experts_end_idx): if counts[i] == 0: continue expert = self.experts[i] idx, top = torch.where(indices == i) y[idx] += expert(x[idx]) * weights[idx, top, None] z = self.shared_experts(x) if world_size > 1: dist.all_reduce(y) return (y + z).view(shape)Well for whatever it is worth, I must say that it certainly appears to make at least some sense. Like OK I sort of get the idea. What now? Install the latest Python on my Windows machine, and see how I can feed this thing some noise to train on? Or how about maybe some meta-data from an Audio-in sheet music out application from years ago?

Of course, personally, I don't particularly like Python - but maybe that's OK, because I decided to try a free online demo of a Python to C++ converter at some place that calls itself codeconvert.com. So, while I don't know how good this C++ code is, at least it looks like C++. Now if I can also find a torch/torch.h library - wouldn't that be nice - that is if such a thing already exists?

#include <vector> #include <cassert> #include <torch/torch.h> class MoE : public torch::nn::Module { public: MoE(const ModelArgs& args) { dim = args.dim; assert(args.n_routed_experts % world_size == 0); n_routed_experts = args.n_routed_experts; n_local_experts = args.n_routed_experts / world_size; n_activated_experts = args.n_activated_experts; experts_start_idx = rank * n_local_experts; experts_end_idx = experts_start_idx + n_local_experts; gate = register_module("gate", Gate(args)); for (int i = 0; i < n_routed_experts; ++i) { if (experts_start_idx <= i && i < experts_end_idx) { experts.push_back(register_module("expert" + std::to_string(i), Expert(args.dim, args.moe_inter_dim))); } else { experts.push_back(nullptr); } } shared_experts = register_module("shared_experts", MLP(args.dim, args.n_shared_experts * args.moe_inter_dim)); } torch::Tensor forward(torch::Tensor x) { auto shape = x.sizes(); x = x.view({-1, dim}); auto [weights, indices] = gate->forward(x); auto y = torch::zeros_like(x); auto counts = torch::bincount(indices.flatten(), n_routed_experts); for (int i = experts_start_idx; i < experts_end_idx; ++i) { if (counts[i].item<int>() == 0) { continue; } auto expert = experts[i]; auto [idx, top] = torch::where(indices == i); y.index_add_(0, idx, expert->forward(x.index_select(0, idx)) * weights.index_select(0, idx).index_select(0, top).view({-1, 1})); } auto z = shared_experts->forward(x); if (world_size > 1) { torch::distributed::all_reduce(y); } return (y + z).view(shape); } private: int dim; int n_routed_experts; int n_local_experts; int n_activated_experts; int experts_start_idx; int experts_end_idx; torch::nn::Module gate; std::vector<torch::nn::Module> experts; torch::nn::Module shared_experts; };So now we just need to convert more code, and see if it will work on bare metal? The mind boggles at the possibility however if we can so easily convert Python to C++, then why not go back to raw C, so that we can possibly identify parts that might readily be adaptable to synthesizable Verilog? Like I said, earlier - this is an ambitious project.

![]()

Alright then, decided to try an experiment, and thus I am working on two different code branches at the same time. In Visual Studio: 2022 I have started a Deep Seek Win32 library file based on the converted Python code, even though I haven't downloaded, installed, and configured the C++ version of PyTorch yet. So I have started on a file called "compatibility.h" which for now is just a placeholder for some torch stuff, that I am trying to figure out as I go So, yeah - this is very messy - but why not?

#include <string> using namespace std; #define _dtype enum { undef,kFloat, kFloat32,} typedef _dtype dtype; namespace torch { _dtype; class Tensor { protected: int m_id; public: size_t size; }; class TensorOptions { public: dtype dtype (dtype typ) { return typ; }; }; void set_num_threads(size_t); Tensor ones(size_t sz); Tensor ones(size_t sz, dtype typ); Tensor ones(int sz1, int sz2, dtype typ); Tensor empty (size_t sz); Tensor empty (dtype); namespace nn { class Module { public: }; }; namespace serialize { class InputArchive { public: void load_from(std::string filename); }; class OutputArchive { public: }; }; }; #ifdef ARDUINO_ETC struct map_item { char *key1, *key2; int dim; // map_item(std::string str1, std::string str2, int n); map_item(const char *str1, const char *str2, int n) { key1 = (char*)(str1); key2 = (char*)(str2); dim = n; } }; #endif namespace std { #ifdef ARDUINO_ETC template <class X, class Y> class unordered_map { public: map_item *m_map; // unordered_map (); ~unordered_map (); void *unordered_map<X,Y>::operator new (size_t,void*); unordered_map<X,Y> *find_node (X &arg); char *get_data () { char *result = NULL; return result; } unordered_map<X,Y> *add_node (X &arg); }; #endif namespace filesystem { void create_directories(std::string); void path(std::string); }; };Yes, this is a very messy way of doing things. but I am not aware of any tool that will autogenerate namespaces, class names, and function prototypes for the missing declarations. It is way too early of course to think that this will actually run anytime soon. But then again, why not? Especially if we also want to be able to run it on a propeller or a pi! Maybe it can be trained to play checkers, or ping pong, for that matter. Well, you get the idea. Figuring out how to get a working algorithm that doesn't need all of the modern sugar coating would be really nice to have - especially if can be taken even further back, like to C99, or System C, of course - so that we can also contemplate the implications of implementing it in synthesizable Verilog - naturally!!

Well, in any case - here it is 7:40 PM, PST -that is, and I could hardly believe that the Chiefs got smoked out by the Eagles in the last few hours, that is to say in Super-Bowl 59. I really need a new chat-bot to have some conversation about this with, or else I will have to start rambling about Shakespeare. Or maybe I have something else in mind? Getting back on topic then, take a look at this:

![]()

Alright - so I am now able to create an otherwise presently useless .obj file for the Deep Seek component convert.py, which I have of course converted to C++, and managed to get it to compile, but not yet link against anything. So, I think that the next logical step should be to try to create multiple projects within the Deep Seek workspace and make a "convert" project, that will eventually become a standalone executable. In the meantime, since I don't think that this project is quite ready for GitHub, what I think that I will do, is go ahead and post the current versions of "compatibilitiy.h" and "convert.cpp" to the files section of this project, i.e.., right here on Hackaday, of course. Just in case anyone wants to have a look. Enjoy!

Deep Sneak: For Lack of a Better Name

The mixture-of-experts and multi-model meme is in play, so lets let the reindeer games begin, even if we missed Christmas with Deep-Seek.

glgorman

glgorman