Licenses: Software: GPLv3; Hardware: Creative Commons BY-SA.

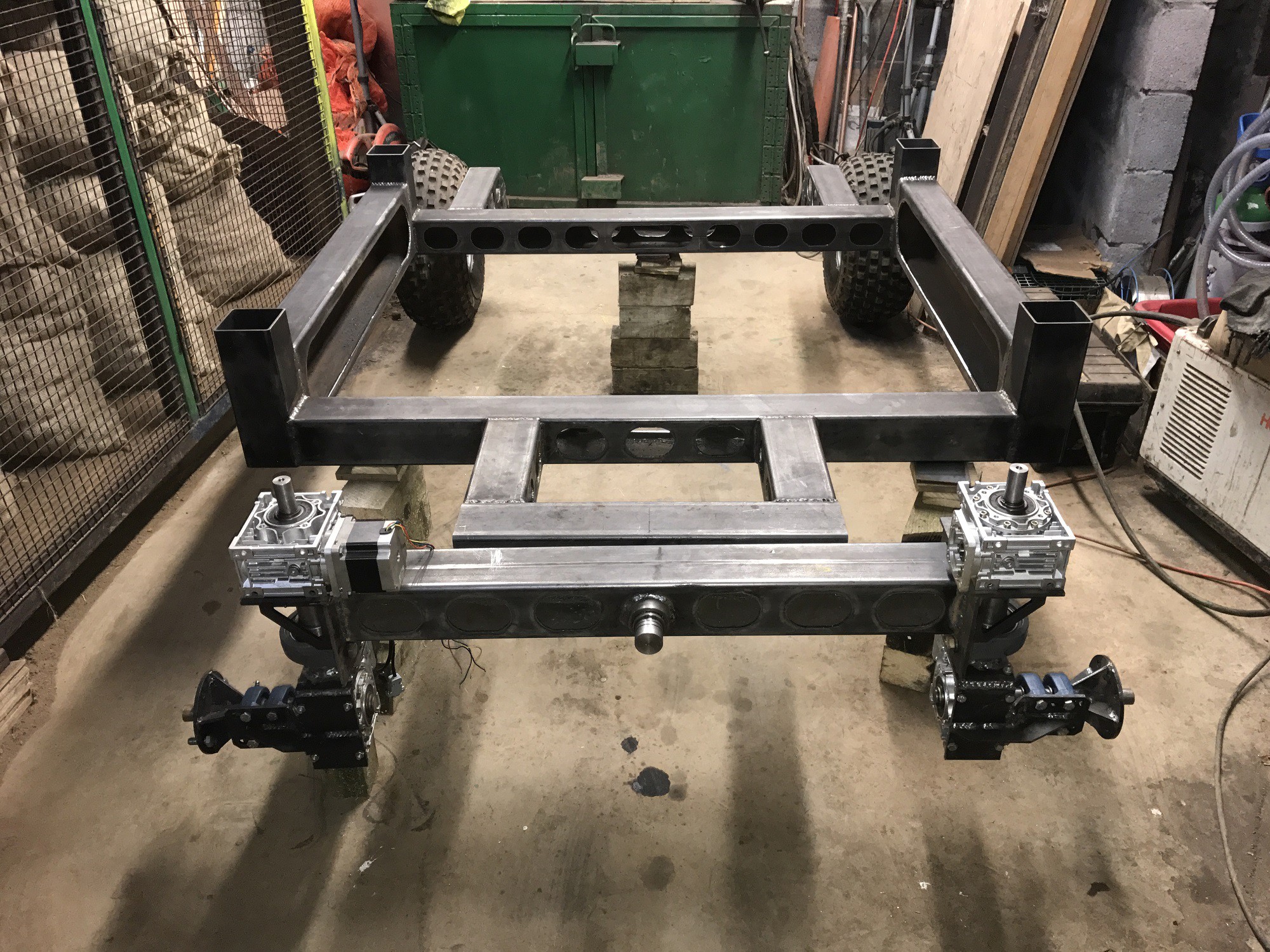

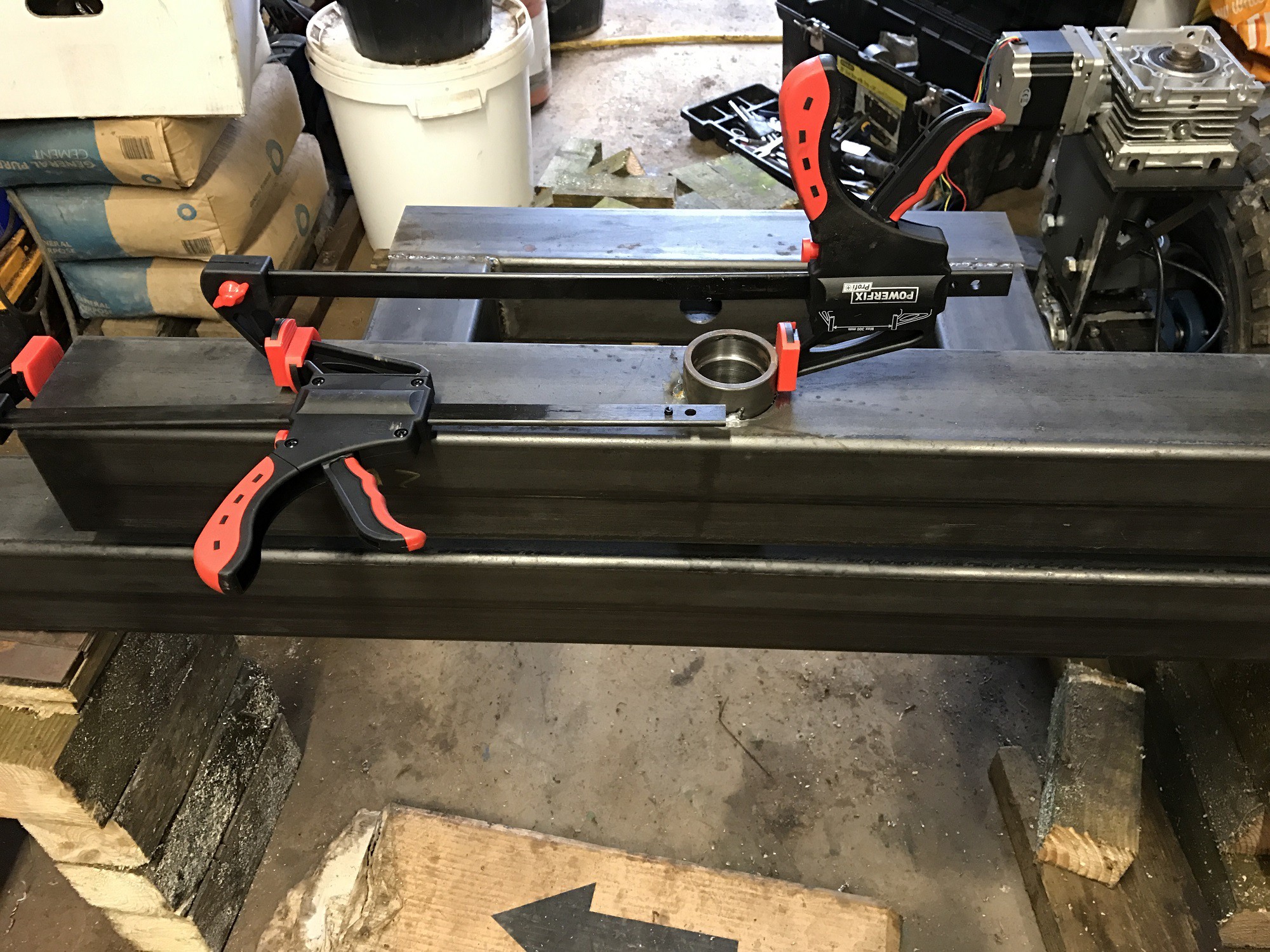

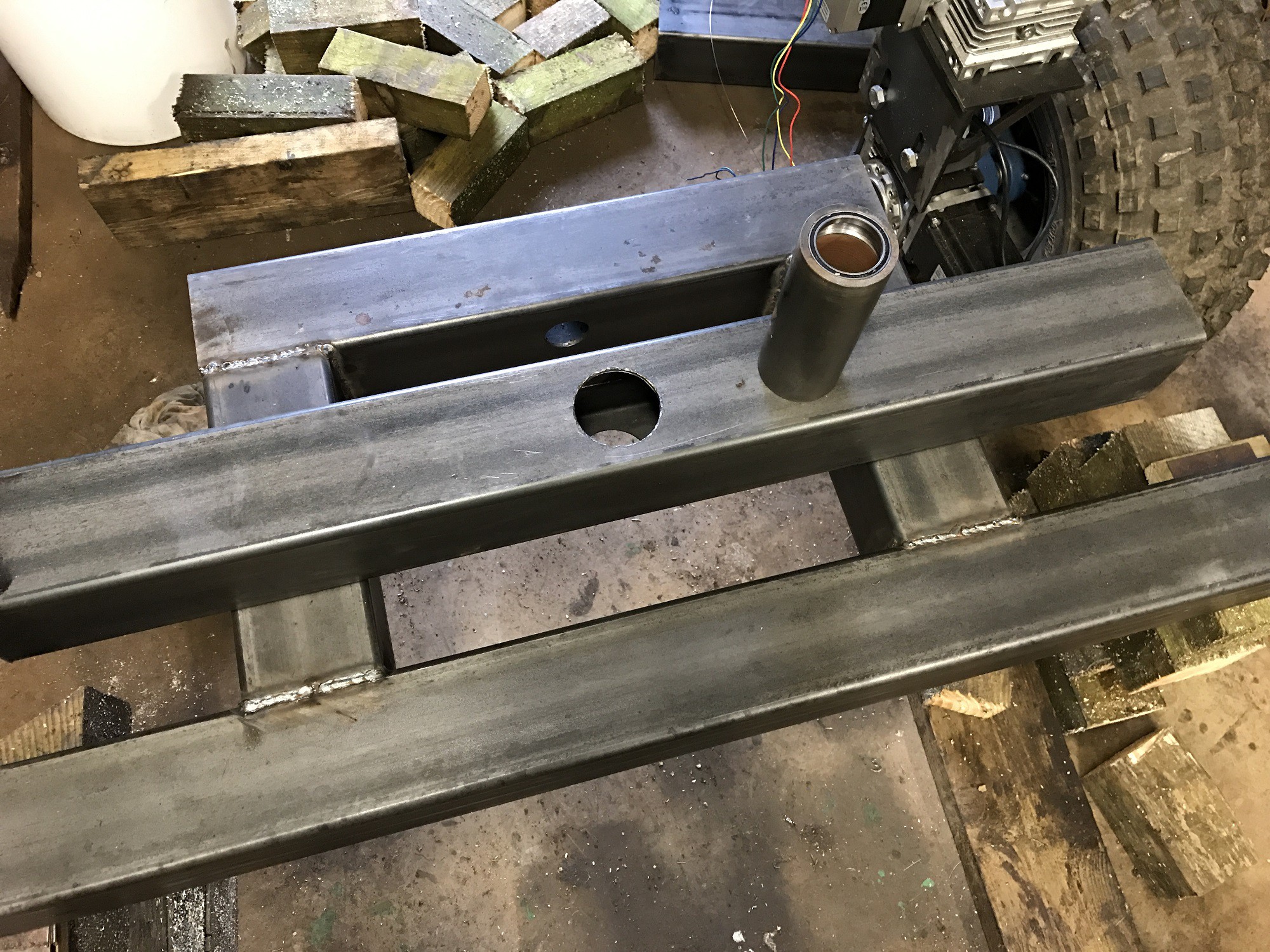

In 2018 the project moves forward with the addition of a side project, managed by Jonno, which uses a skid-steer system and higher-powered drive motors. It will use the same control system as the WEEDINATOR.

This year we've also got more people on the team, including Tristan Lea, a successful open source entrepreneur who, apart from having superb technical skills, has actual open source business experience.

Also, the WEEDINATOR will be exhibited around the UK, including at the Liverpool MakeFest on 30 June 2018 (https://lpoolmakefest.org/), Electromagnetic Field from 31 August to 2 September 2018 and (hopefully) FarmHack UK 2018 from 4 to 7 October 2018.

Project challenges:

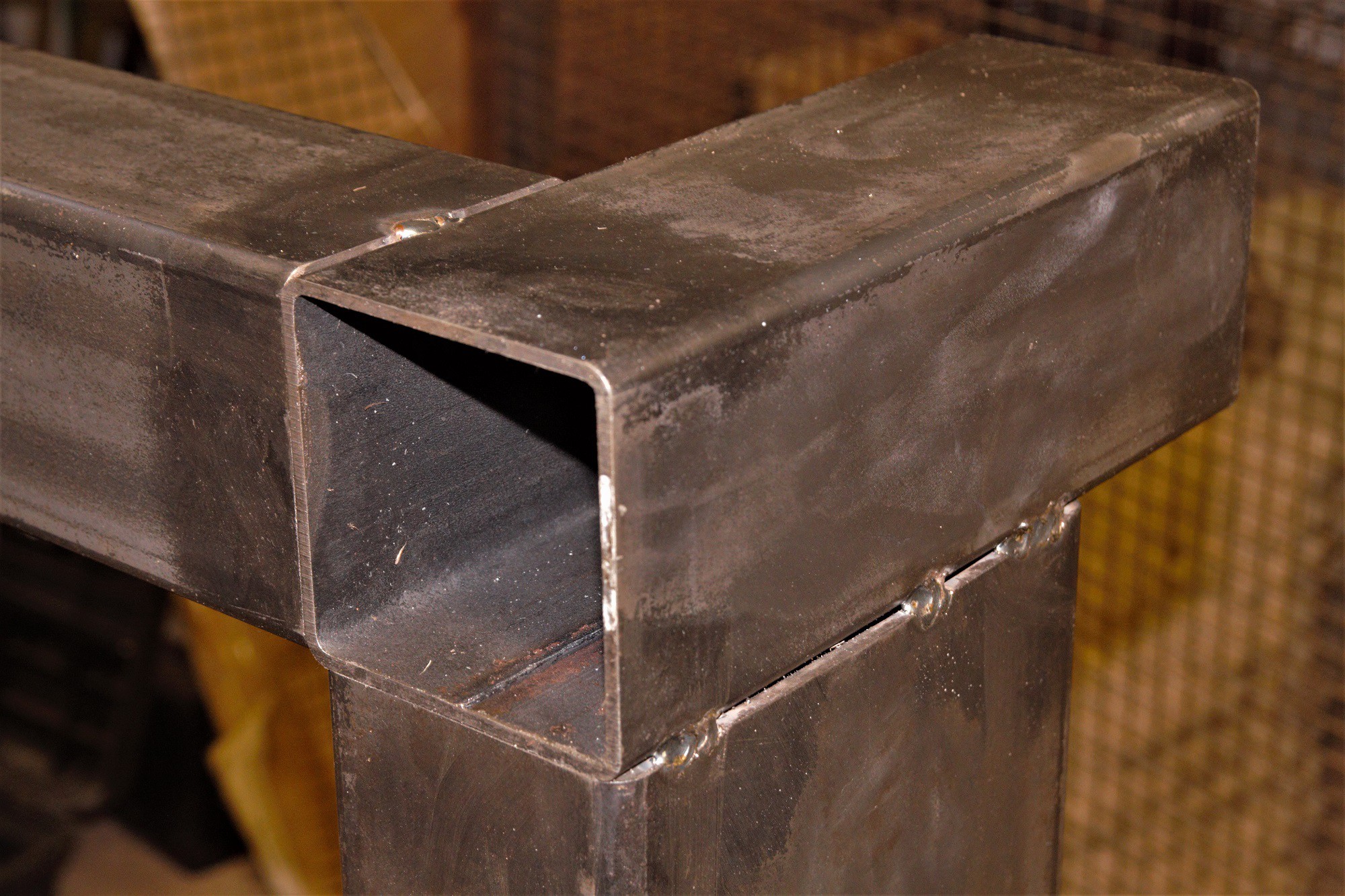

- Designing steering geometry that does not impinge on the planted crop - I did not want to use skid steer, so a more complicated steering system is required, with full 'differential' control in which the speeds of the steering and drive motors change individually according to the steering and drive commands, e.g. forwards, backwards, clockwise etc.

- Selecting suitable motors and gearboxes - Cost is a major factor, and the minimum requirement was that the motors should have optical encoders for monitoring 'steps' and speed. Other, similar skid-steer designs use 24 V truck windscreen wiper motors, but these were thought to be too basic.
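
As a rough illustration of the 'differential' idea in the steering challenge above, here is a minimal sketch of how the angle and speed of two steered, driven front wheels might be derived for a given turn. It assumes simple Ackermann-style geometry; the function name, the sign conventions and the track and wheelbase figures are hypothetical and are not taken from the WEEDINATOR itself.

```python
import math

# Hypothetical dimensions, for illustration only (not the WEEDINATOR's real ones).
TRACK_M = 1.5      # distance between the left and right front wheels
WHEELBASE_M = 1.2  # distance from the rear axle to the front axle


def wheel_commands(turn_radius_m, speed_m_s, track_m=TRACK_M, wheelbase_m=WHEELBASE_M):
    """Return ((left_angle, left_speed), (right_angle, right_speed)).

    turn_radius_m is the signed distance from the rear-axle centre to the
    turn centre: positive turns left, negative turns right, math.inf means
    straight ahead. Angles are in radians, positive to the left.
    speed_m_s is the speed of the rear-axle centre (negative for reverse).
    """
    if math.isinf(turn_radius_m):
        return (0.0, speed_m_s), (0.0, speed_m_s)
    if abs(turn_radius_m) <= track_m / 2:
        raise ValueError("turn centre must lie outside the wheel track")

    commands = []
    for wheel_y in (track_m / 2, -track_m / 2):  # left wheel, then right wheel
        lateral = turn_radius_m - wheel_y         # sideways offset to the turn centre
        angle = math.atan(wheelbase_m / lateral)  # wheel points along its own arc
        arc_radius = math.hypot(wheelbase_m, lateral)
        speed = speed_m_s * arc_radius / abs(turn_radius_m)
        commands.append((angle, speed))
    return commands[0], commands[1]


if __name__ == "__main__":
    left, right = wheel_commands(turn_radius_m=3.0, speed_m_s=0.5)
    print("left  wheel: angle %.3f rad, speed %.3f m/s" % left)
    print("right wheel: angle %.3f rad, speed %.3f m/s" % right)
```

On a left turn the inner (left) wheel steers more sharply and runs more slowly than the outer wheel, which is the behaviour the steering and drive motors would need to reproduce. The sketch only covers driving along an arc; turning on the spot would need separate handling.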

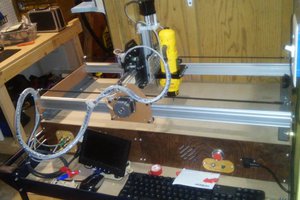

- Preventing abrasion and jamming of the CNC mechanism due to soil and dust - gaiters, rubber boots, wipers, Delrin bearings ... the list of solutions goes on!

- Selecting a suitable power supply for the motors - The obvious solution is batteries, but lightweight lithium batteries are extremely expensive and are only good for a limited number of recharge cycles.

- Autonomous navigation - the nav system needs to be accurate to within ±25 mm to get accurate positioning on the crop beds. Think 'error correction'!
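
One way to picture the 'error correction' mentioned above is as a cross-track error: how far the machine sits to one side of the intended line along the bed. The sketch below assumes positions are already available as local (x, y) coordinates in metres; the function names, the gain and the steering limit are hypothetical, and only the ±25 mm figure comes from the text.

```python
import math

TOLERANCE_M = 0.025  # the ±25 mm target from the text


def cross_track_error(position, start, end):
    """Signed perpendicular distance (m) from position to the line start -> end.

    Positive means the machine is to the left of the direction of travel.
    """
    (px, py), (ax, ay), (bx, by) = position, start, end
    length = math.hypot(bx - ax, by - ay)
    if length == 0:
        raise ValueError("start and end must differ")
    return ((bx - ax) * (py - ay) - (by - ay) * (px - ax)) / length


def steering_correction(error_m, gain=2.0, limit_rad=0.3):
    """Proportional correction that steers back towards the line.

    gain and limit_rad are made-up values; positive output means steer left.
    """
    return max(-limit_rad, min(limit_rad, -gain * error_m))


if __name__ == "__main__":
    error = cross_track_error((5.0, 0.02), (0.0, 0.0), (10.0, 0.0))
    print("error: %.3f m, within tolerance: %s" % (error, abs(error) <= TOLERANCE_M))
    print("steering correction: %.3f rad" % steering_correction(error))
```

A real controller would probably need more than a proportional term, but the sign convention and the tolerance check are the essential parts.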

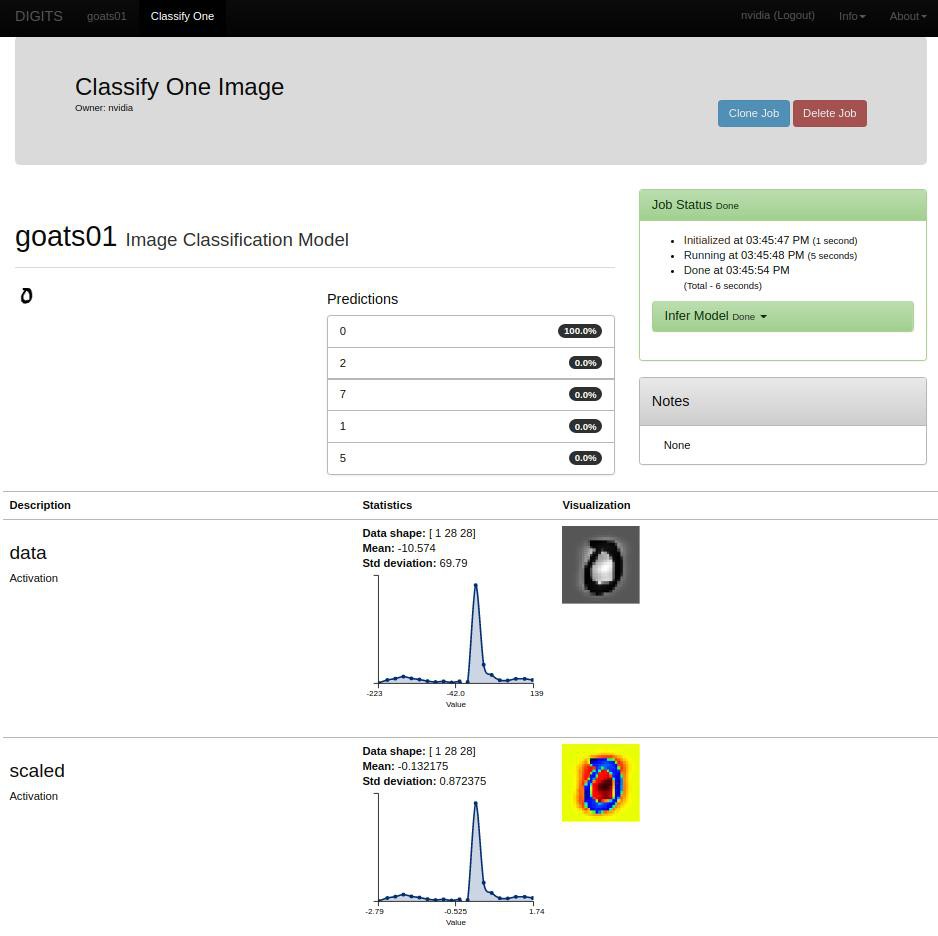

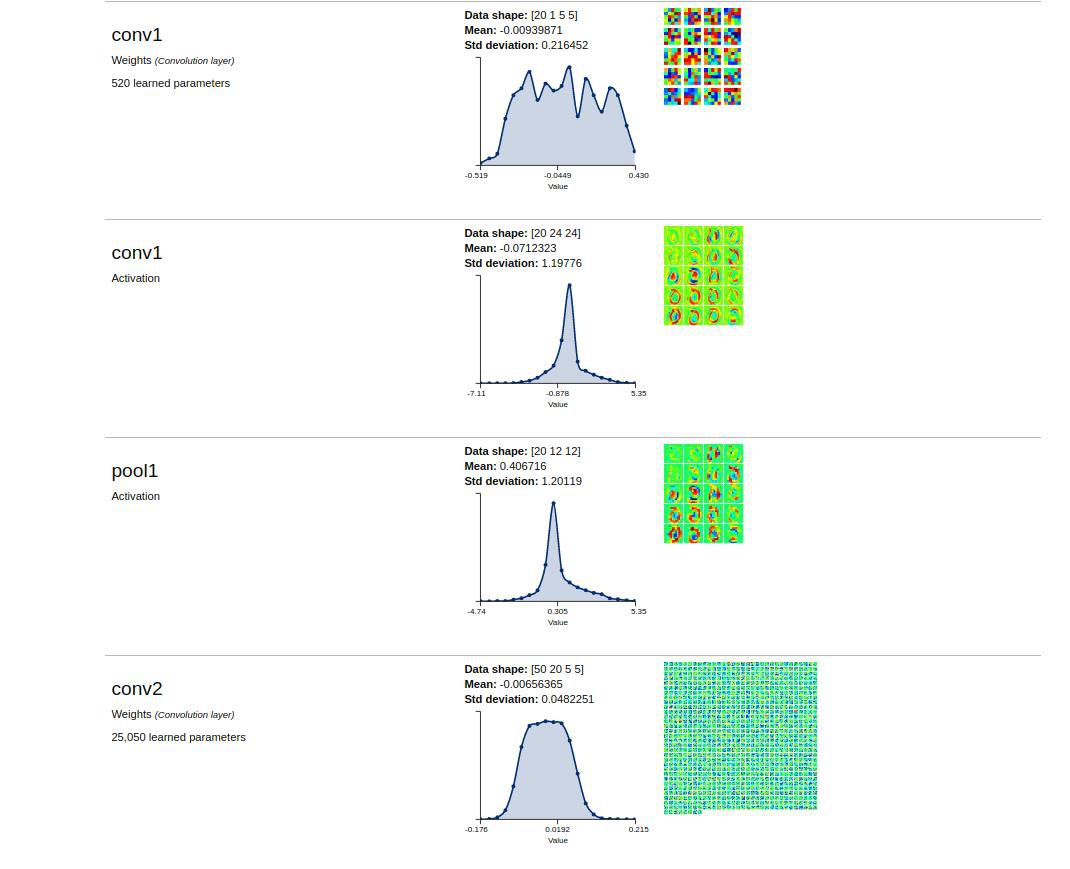

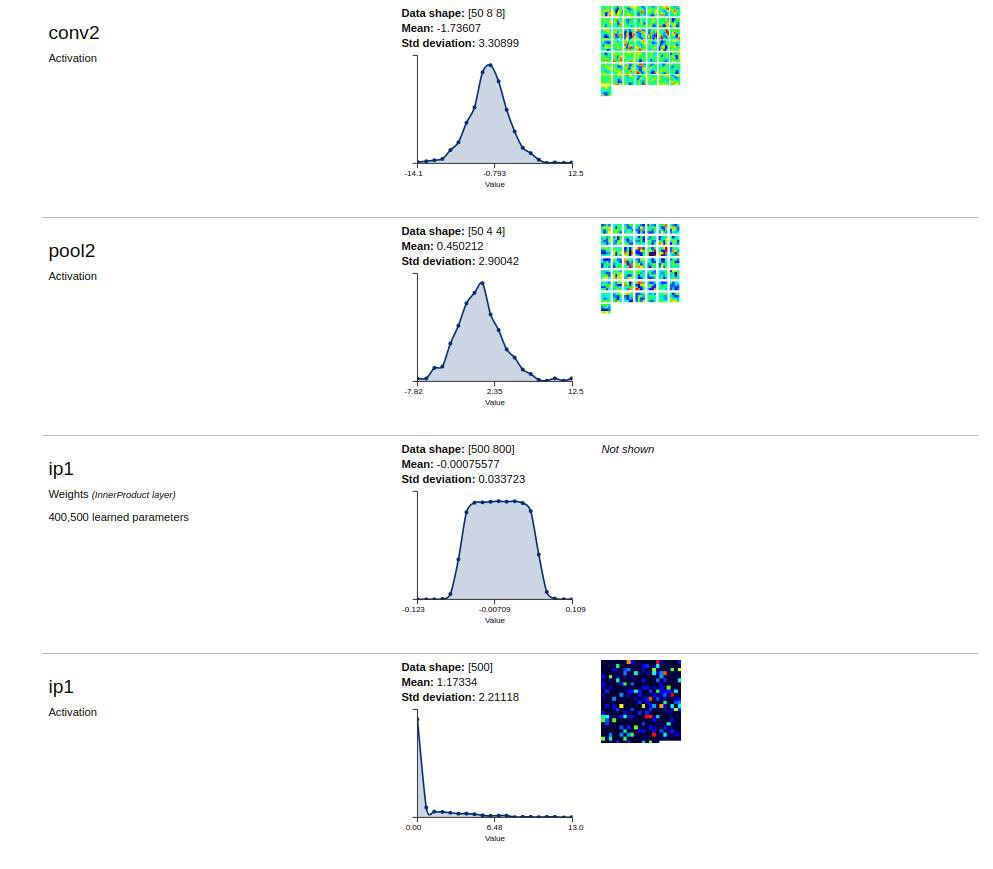

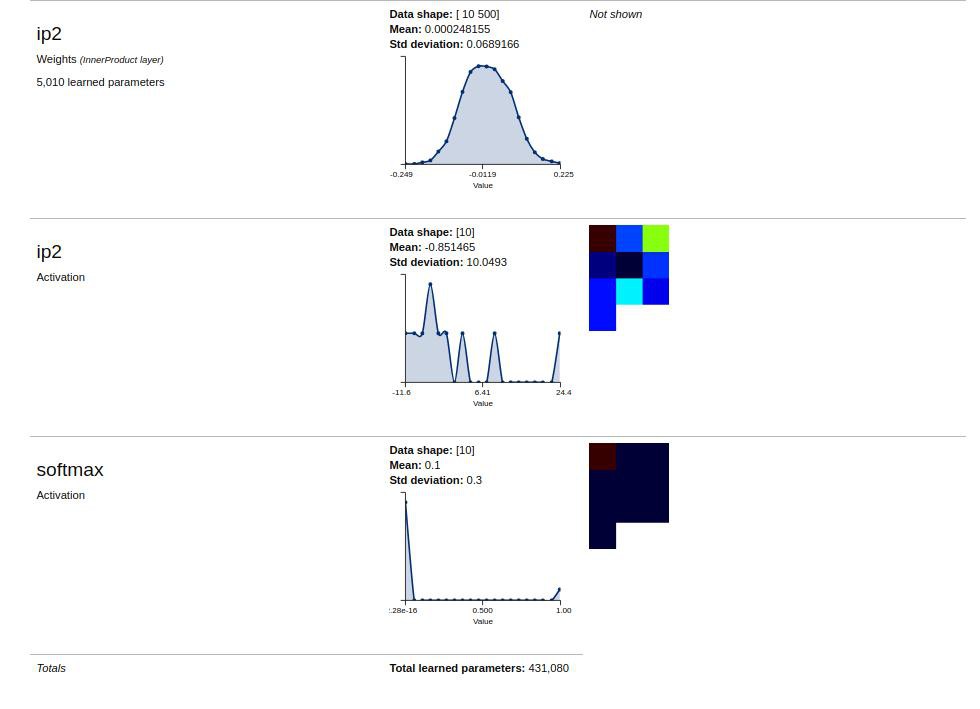

- Object recognition - The machine needs at least some basic object recognition (OR). The weeding process is preventative, so there's no need to distinguish weeds from crop. It's more about telling the difference between brown soil and green plants, so the cameras are more likely to see green blobs on a brown background. Objects can also be placed on the soil to aid navigation, enhancing the accuracy. But what about bright glaring sunshine?

- Cost - The machine needs to be built within a sensible budget so that it stands a chance of being commercially viable. The mechanical design needs to be as simple as possible, with appropriate compromises on functionality. How close to the crop can the drive gearboxes be? How big is the crop going to be? Most weeding needs to be done in the early stages, when the crop is most vulnerable. How 'ideal' does the steering need to be? The steering bearing does not necessarily have to be in the middle of the wheel - it can be offset to one side, and changing the relative speeds of the drive wheels can aid the steering motors.

- Multi-purposing CNC - How can the machine be designed so that one implement can be swapped for another in less than 5 minutes? For example, the weeding apparatus should be a single bolt-on assembly rather than a set of individually bolted-on components.

- Collision avoidance - Many new cars on the road (2018) have collision avoidance modules which prevent people being run over and the car hitting other obstacles. Can such systems be easily created or bought cheaply?
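
To make the 'green blobs on brown background' idea from the object recognition item more concrete, here is a minimal sketch that uses an excess-green index on normalised RGB values. This is a common technique for separating plants from soil, not necessarily what the WEEDINATOR uses; the function names and the threshold are hypothetical, and the image is just a nested list of (r, g, b) tuples so that the example needs no extra libraries.

```python
def excess_green(r, g, b):
    """Excess-green index on brightness-normalised RGB: (2g - r - b) / (r + g + b)."""
    total = r + g + b
    if total == 0:
        return 0.0  # pure black carries no colour information
    return (2 * g - r - b) / total


def green_mask(image, threshold=0.1):
    """Return a same-shaped grid of booleans, True where a pixel looks like plant.

    threshold is a made-up starting value that would need tuning on real images.
    """
    return [[excess_green(*pixel) > threshold for pixel in row] for row in image]


def centroid(mask):
    """Return the (x, y) centre of all True pixels, or None if there are none."""
    points = [(x, y) for y, row in enumerate(mask) for x, on in enumerate(row) if on]
    if not points:
        return None
    n = len(points)
    return (sum(x for x, _ in points) / n, sum(y for _, y in points) / n)


if __name__ == "__main__":
    soil, plant = (120, 80, 50), (60, 160, 50)
    image = [
        [soil, plant, soil],
        [soil, plant, soil],
    ]
    mask = green_mask(image)
    print(mask)
    print(centroid(mask))
```

Dividing by the total brightness makes the index less sensitive to overall light level, which may help a little with strong sunshine, although fully washed-out pixels still carry no colour information.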

GOAT INDUSTRIES

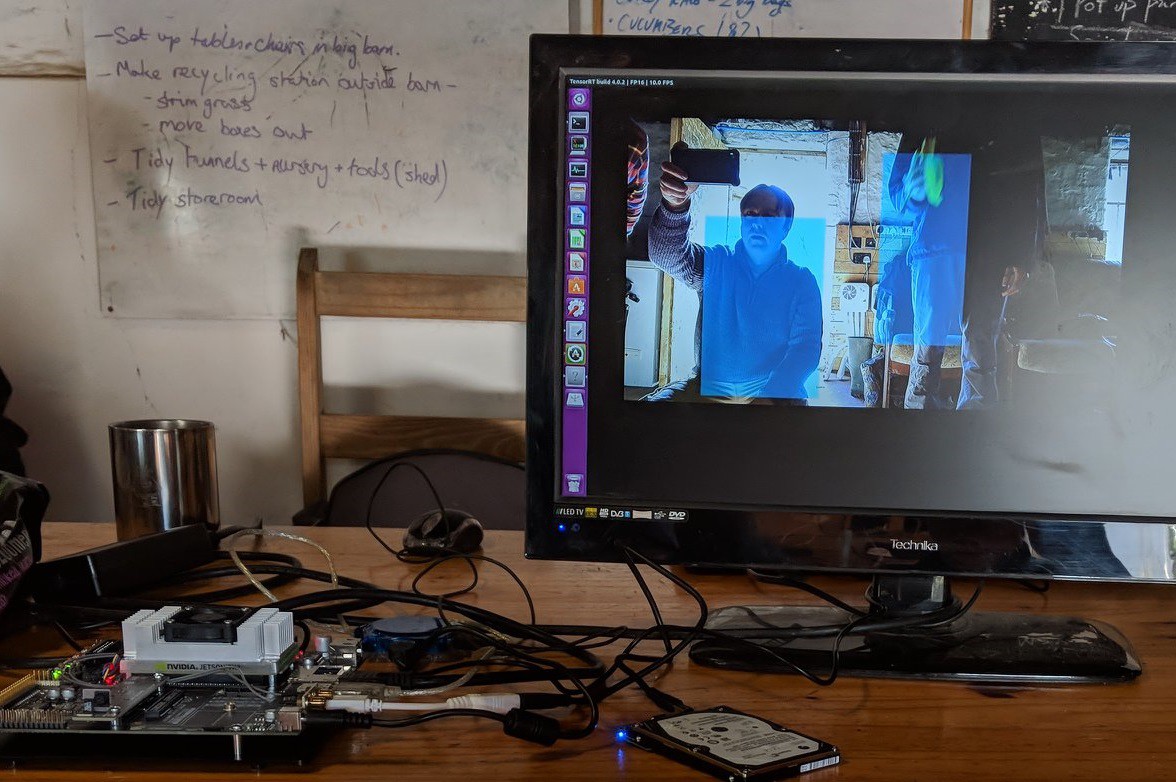

I had only got this feature working the day before the event and, even then, it was not working properly. Fortunately, whilst setting up, I noticed a tiny plastic tab near the camera lens, and it turned out that there was a rather opaque lens cap over the lens! After taking it off, the camera performed very much better and the system proved to be quite impressive.

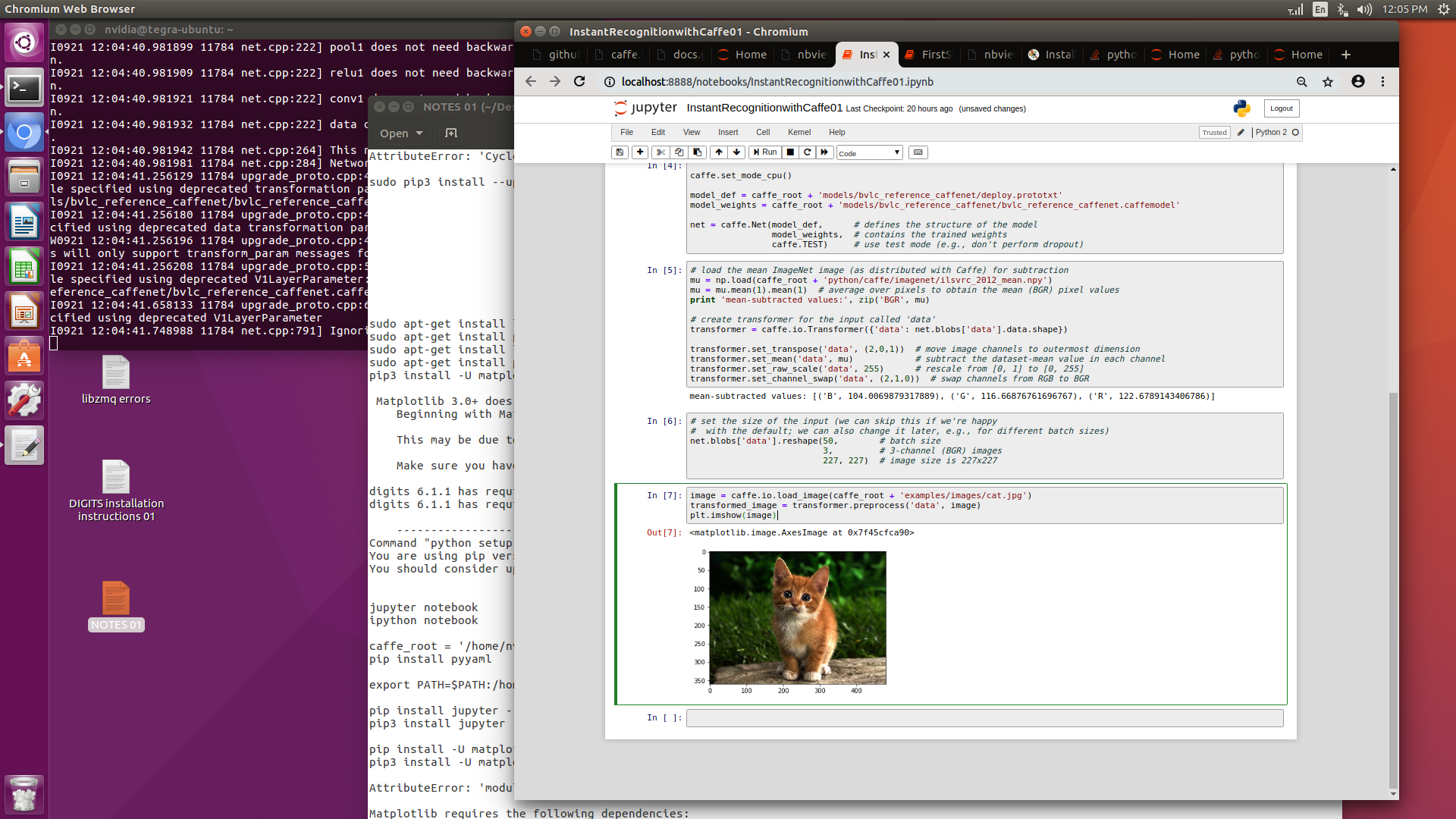

The installation uses two computers running Ubuntu and the tricks that I used are:

Jordan

Joseph Eoff

Very interested in the future weed detection and destruction system.

For accurate positioning, you could consider something like this (about €300): https://www.thingiverse.com/thing:5182231/

It uses a DIY open-source RTK base (also about €300) via the free Centipede network.